Primers • Transformers

- Background: Representation Learning for Natural Language Processing

- Enter the Transformer

- Transformers vs. Recurrent and Convolutional Architectures: An Overview

- Breaking Down the Transformer

- Background

- One-Hot Encoding

- Dot product

- Matrix Multiplication as a Series of Dot Products

- First-Order Sequence Model

- Second-Order Sequence Model

- Second-Order Sequence Model with Skips

- Masking Features

- From Feature Vectors to Transformers

- Attention as Matrix Multiplication

- Second-Order Sequence Model as Matrix Multiplications

- Sampling a Sequence of Output Words

- Transformer Core

- Embeddings

- Positional Encoding

- Decoding Output Words / De-Embeddings / Un-Embeddings

- Attention

- Dimensional Restrictions on Queries, Keys, and Values

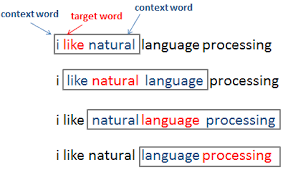

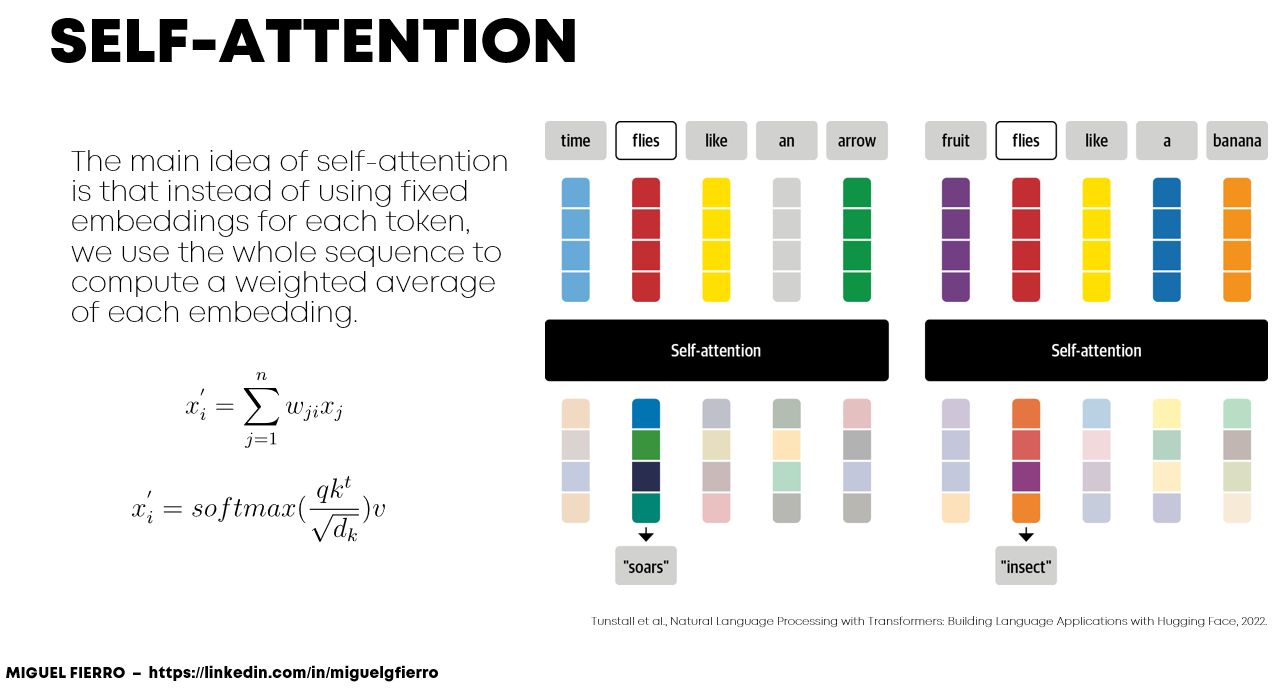

- Why attention? Contextualized Word Embeddings

- Types of Attention: Additive, Multiplicative (Dot-product), and Scaled

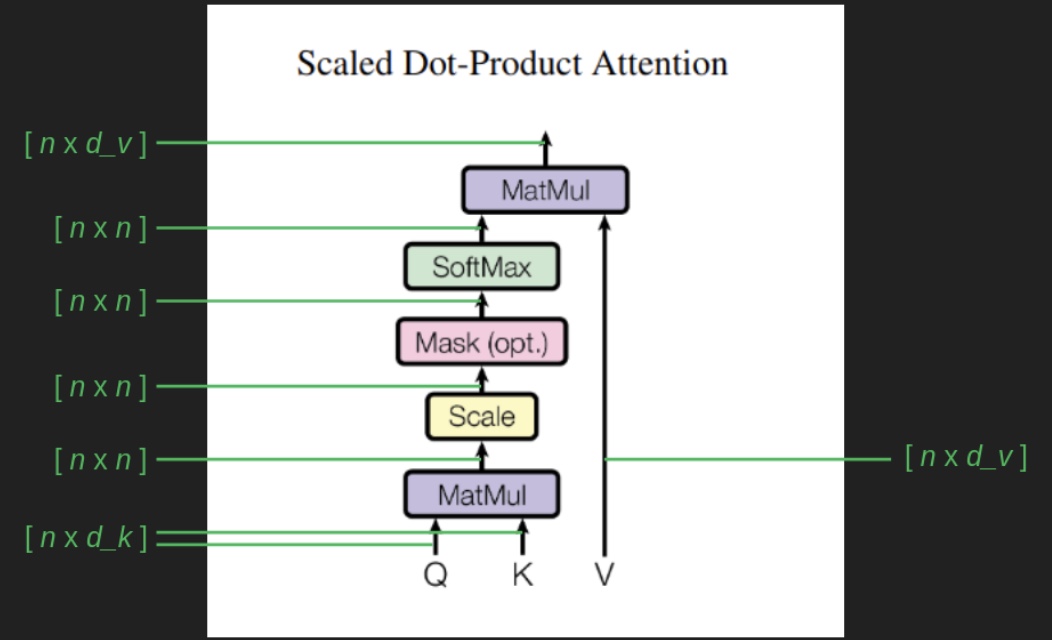

- Attention calculation

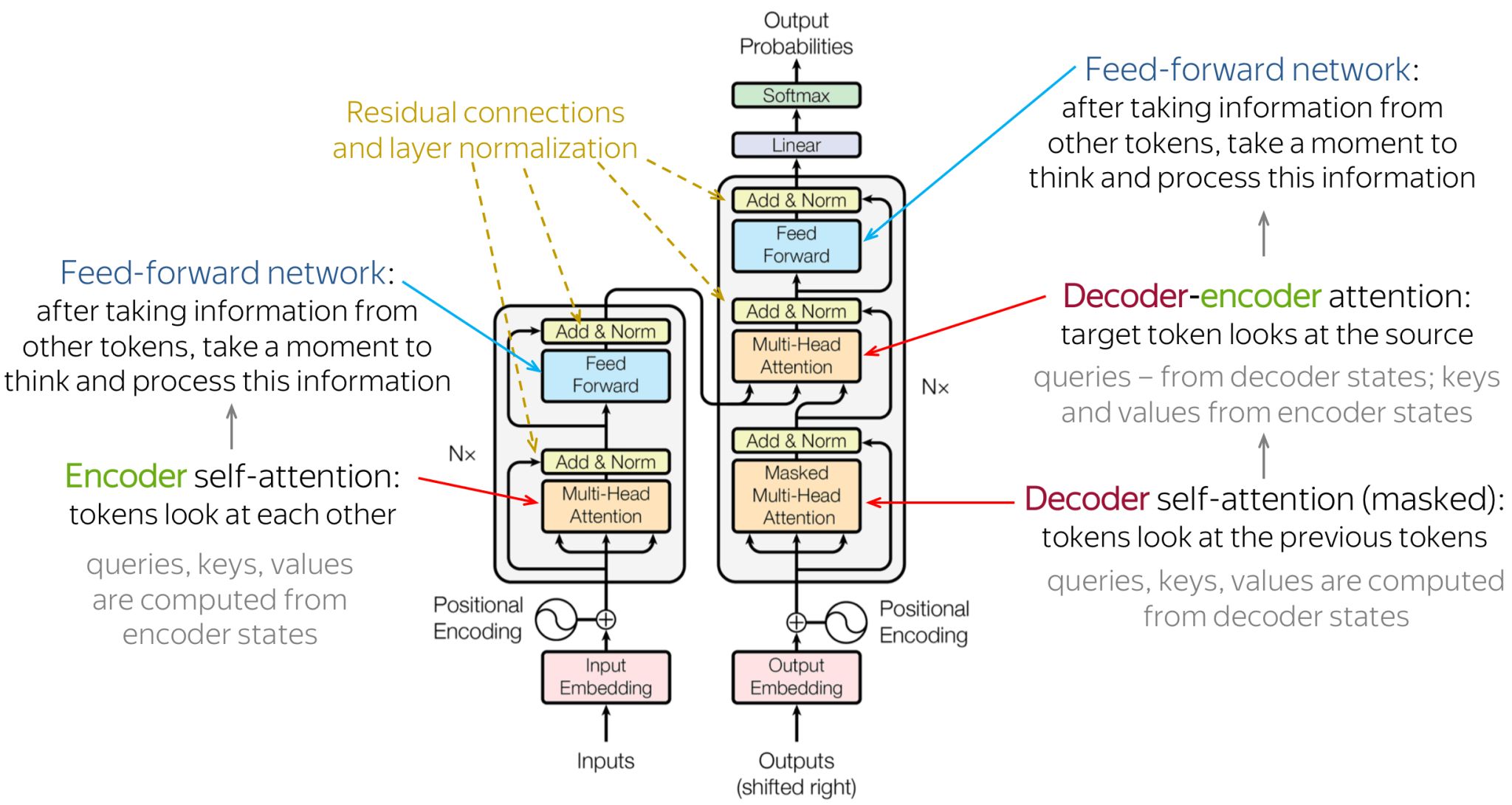

- Self-Attention

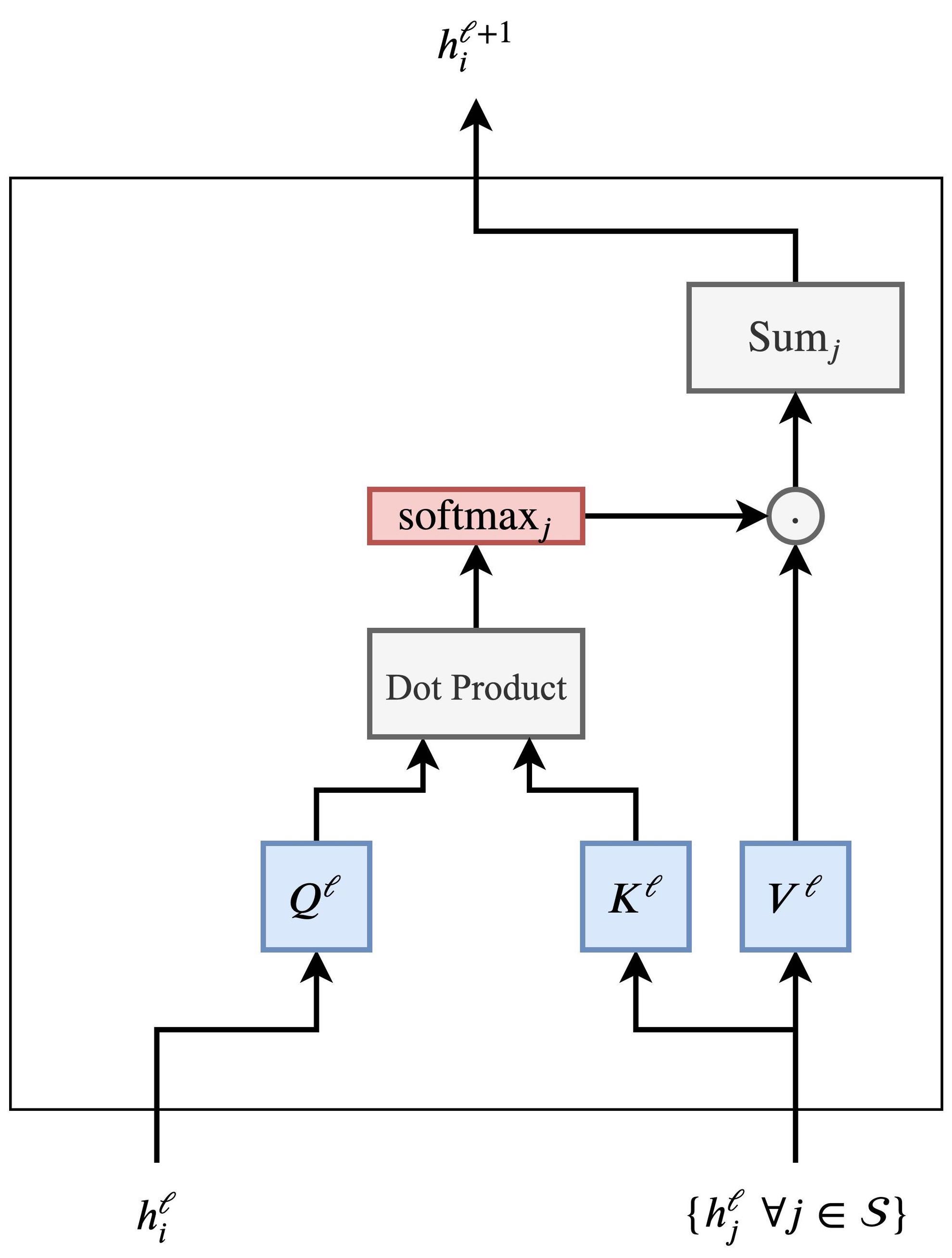

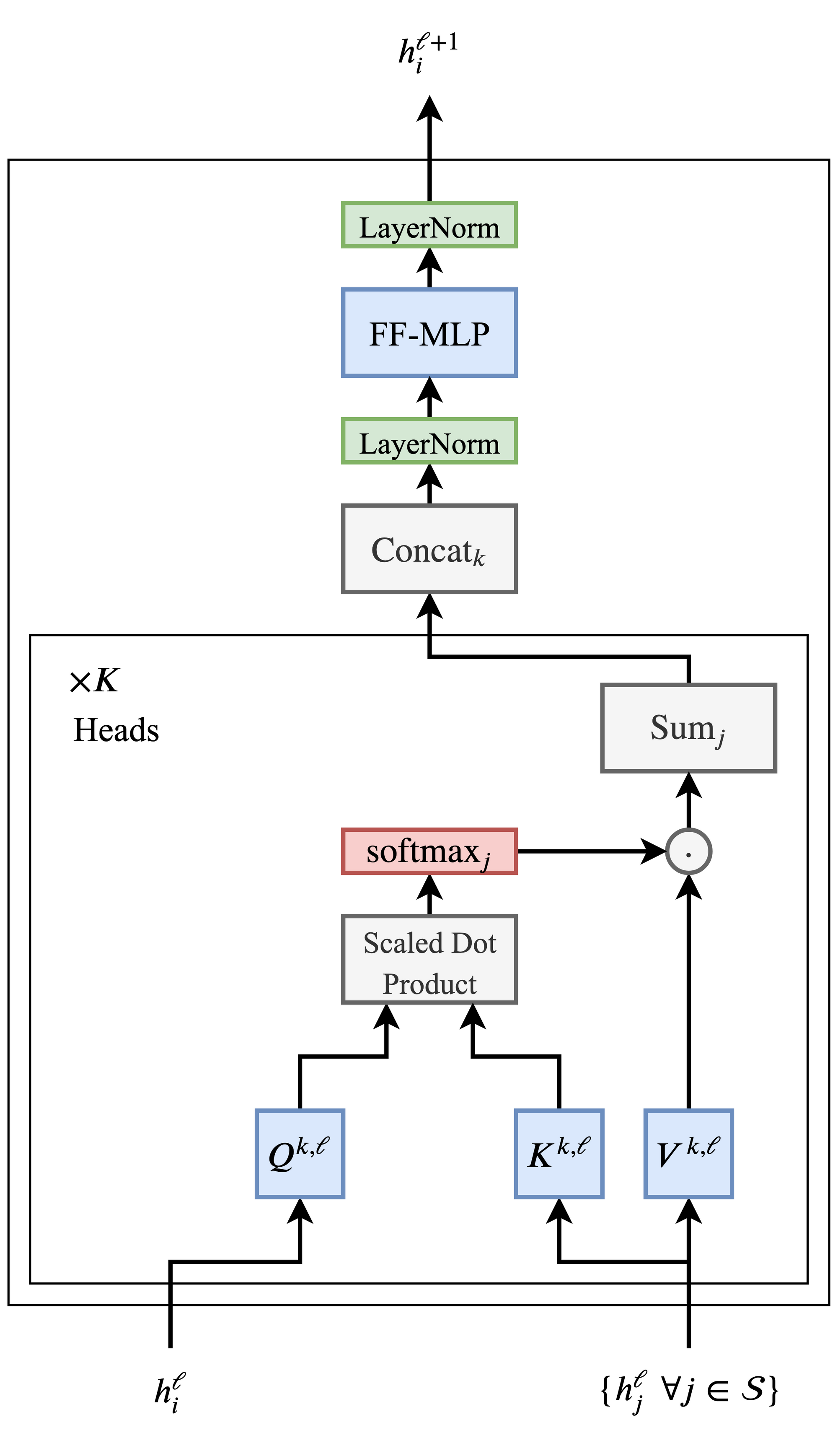

- Single Head Attention Revisited

- Why is the product of the \(Q\) and \(K\) matrix in Self-Attention normalized?

- Coding up self-attention

- Averaging is equivalent to uniform attention

- Activation Functions

- Attention in Transformers: What is new and what is not?

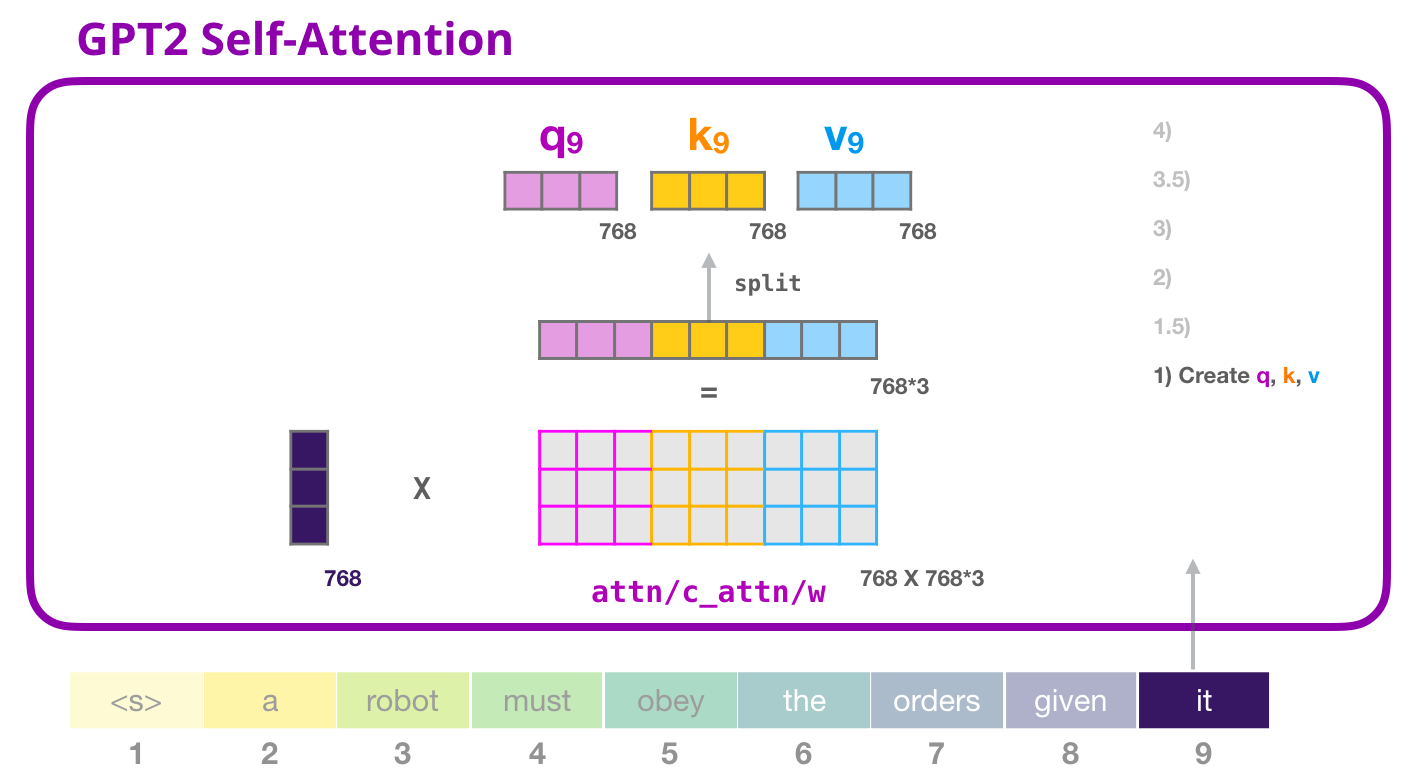

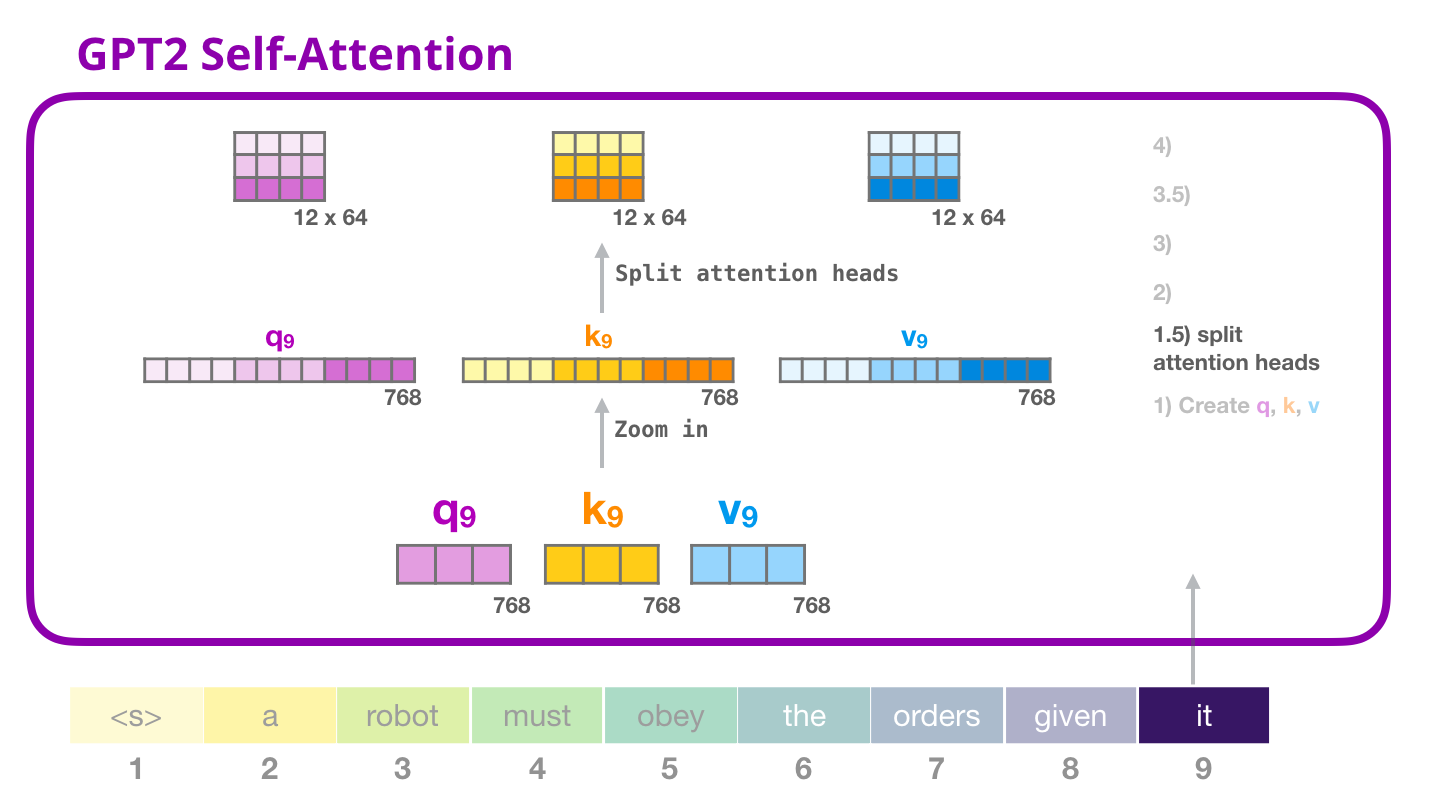

- Calculating \(Q\), \(K\), and \(V\) matrices in the Transformer architecture

- Calculating \(Q\), \(K\), and \(V\) matrices in the Transformer architecture

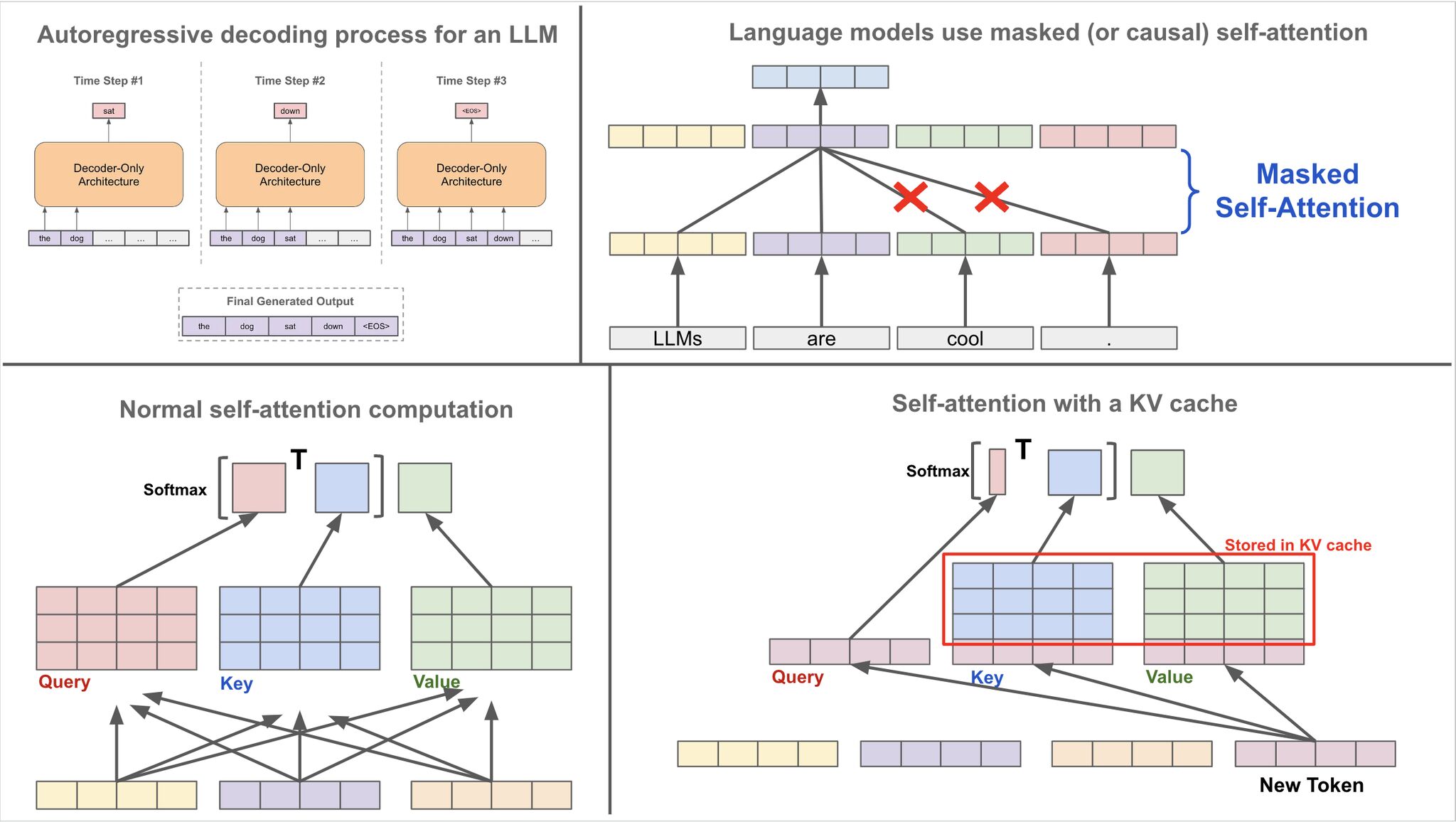

- Optimizing Performance with the KV Cache

- Applications of Attention in Transformers

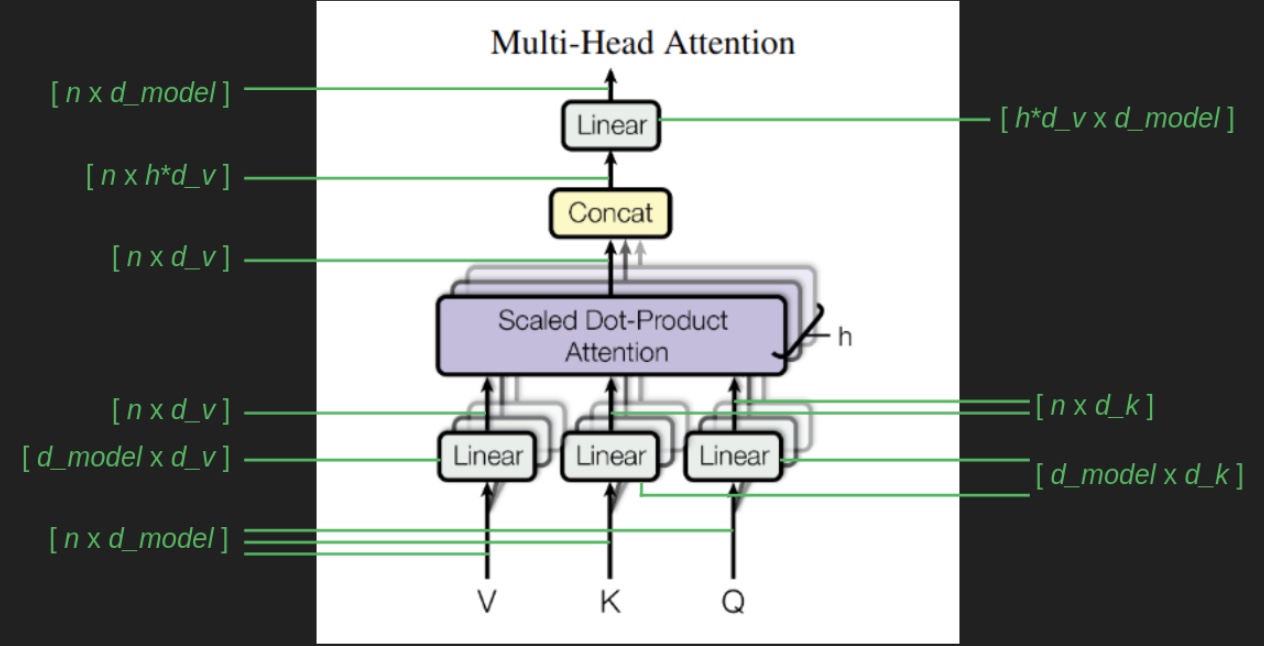

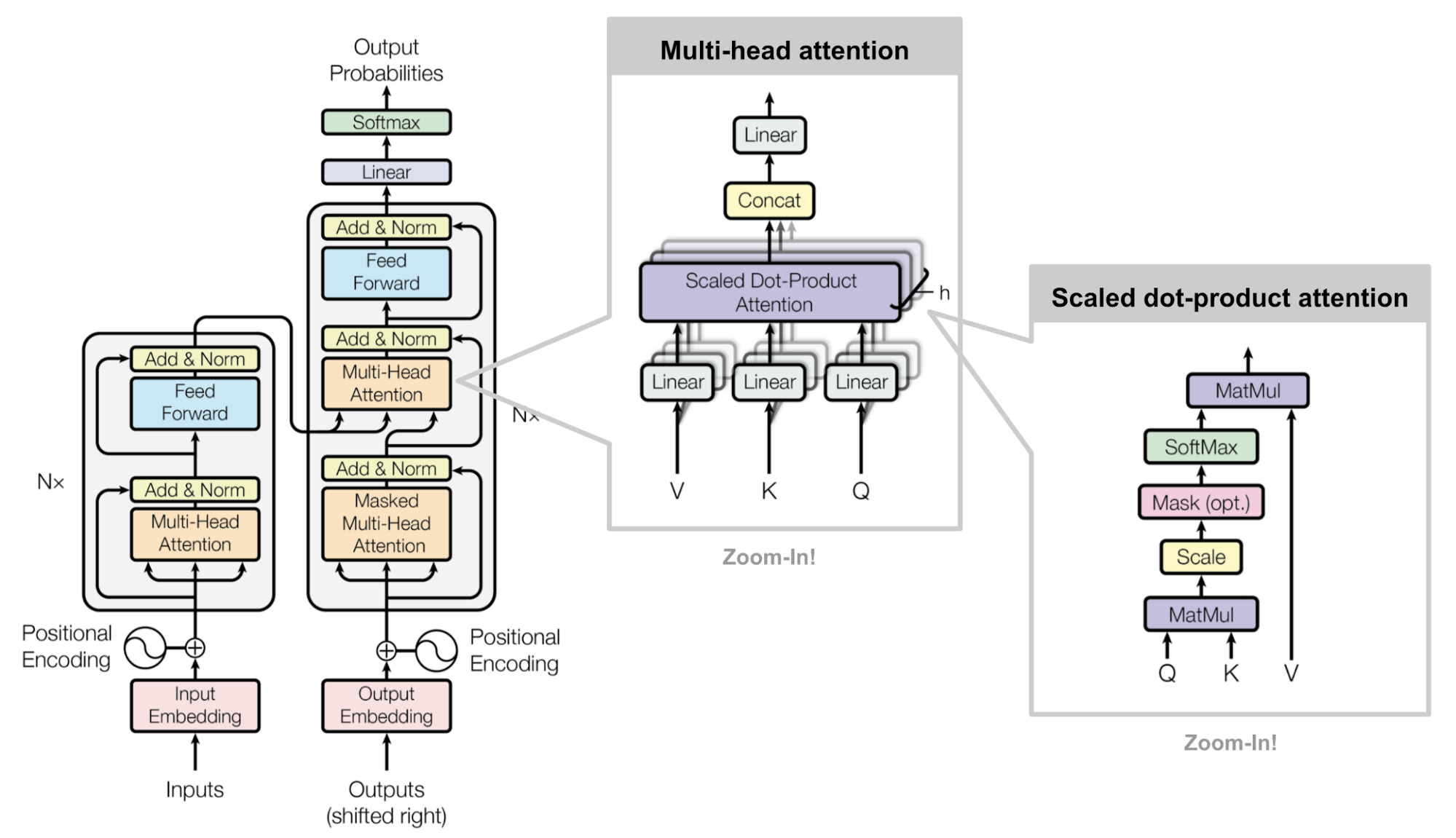

- Multi-Head Attention

- Cross-Attention

- Dropout

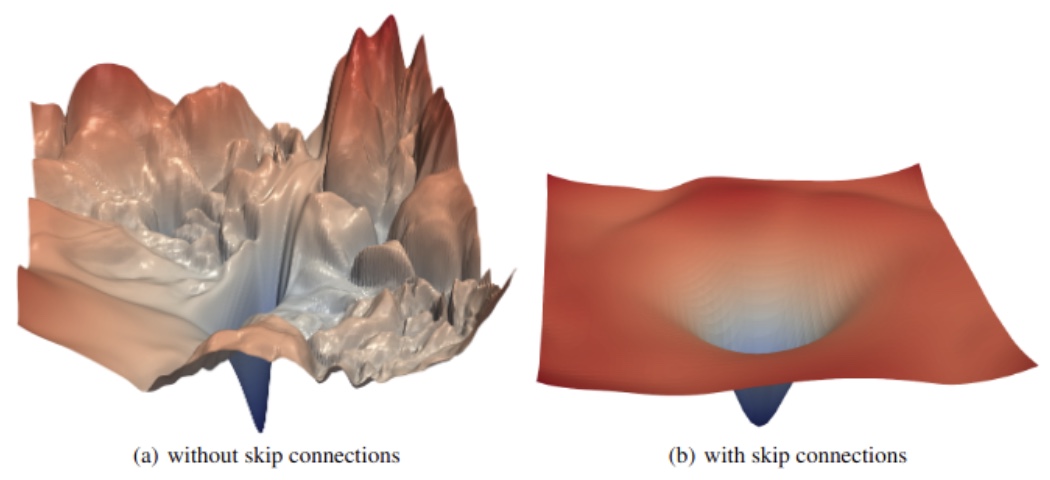

- Skip connections

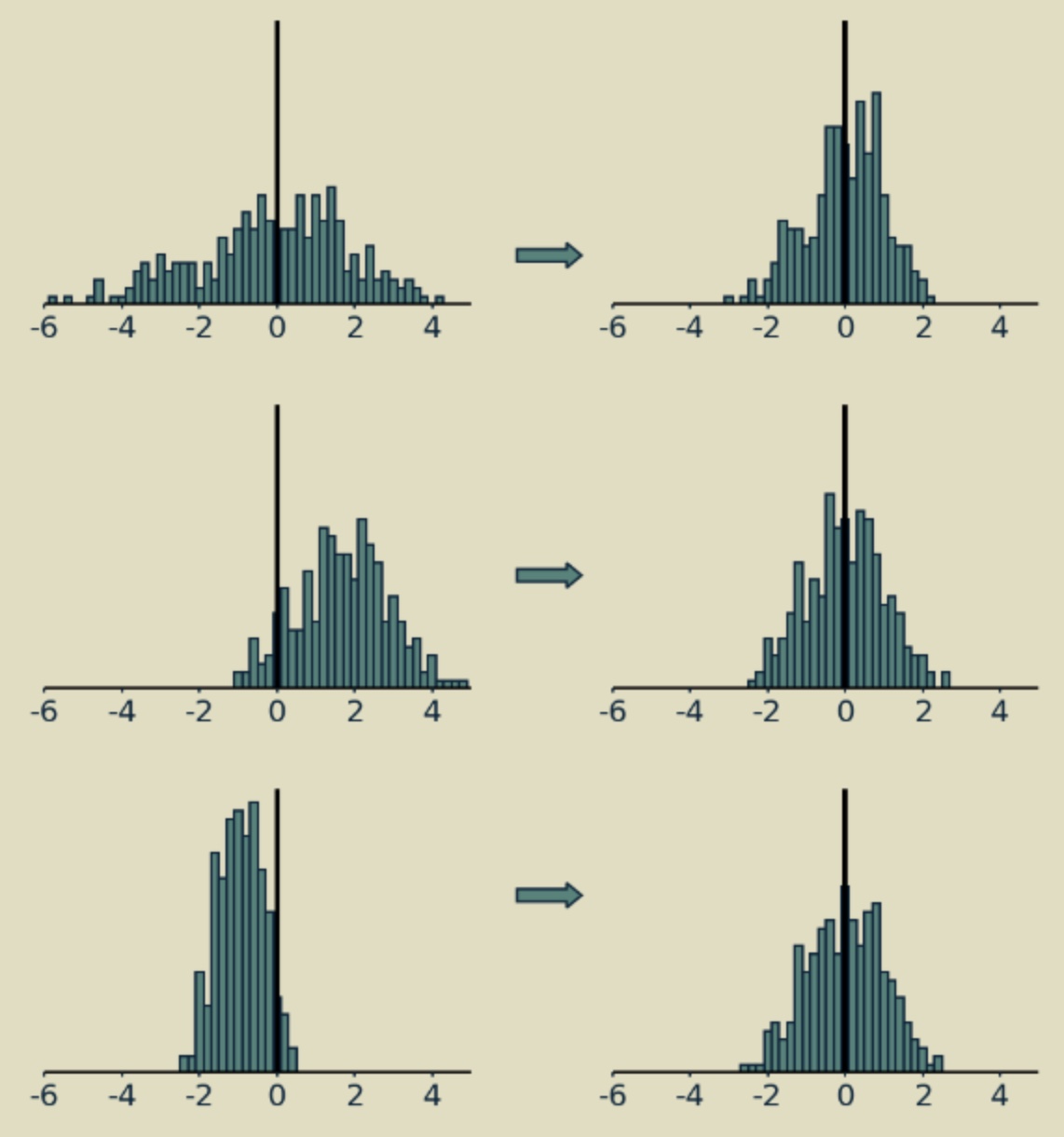

- Layer Normalization

- Comparison of Normalization Techniques

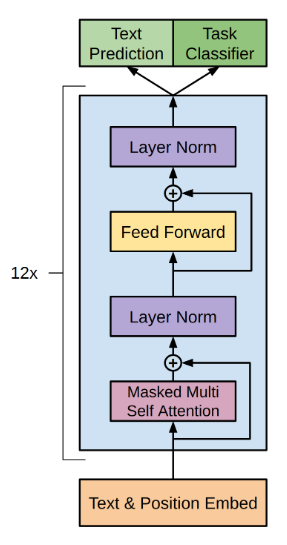

- Pre-Norm vs. Post-Norm Transformer Architectures

- Why Transformer Architectures Use Layer Normalization

- How Layer Normalization Works Compared to Batch Normalization

- Why Transformers Use Layer Normalization vs. Other Forms

- Related: Modern Normalization Alternatives for Transformers (such as RMSNorm, ScaleNorm, and AdaNorm)

- Softmax

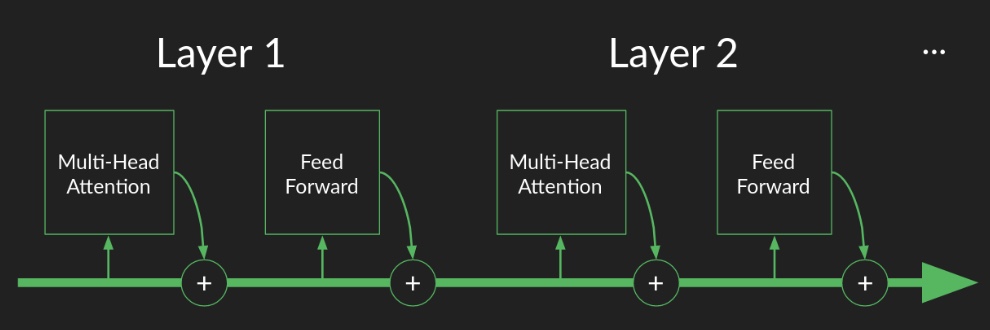

- Stacking Transformer Layers

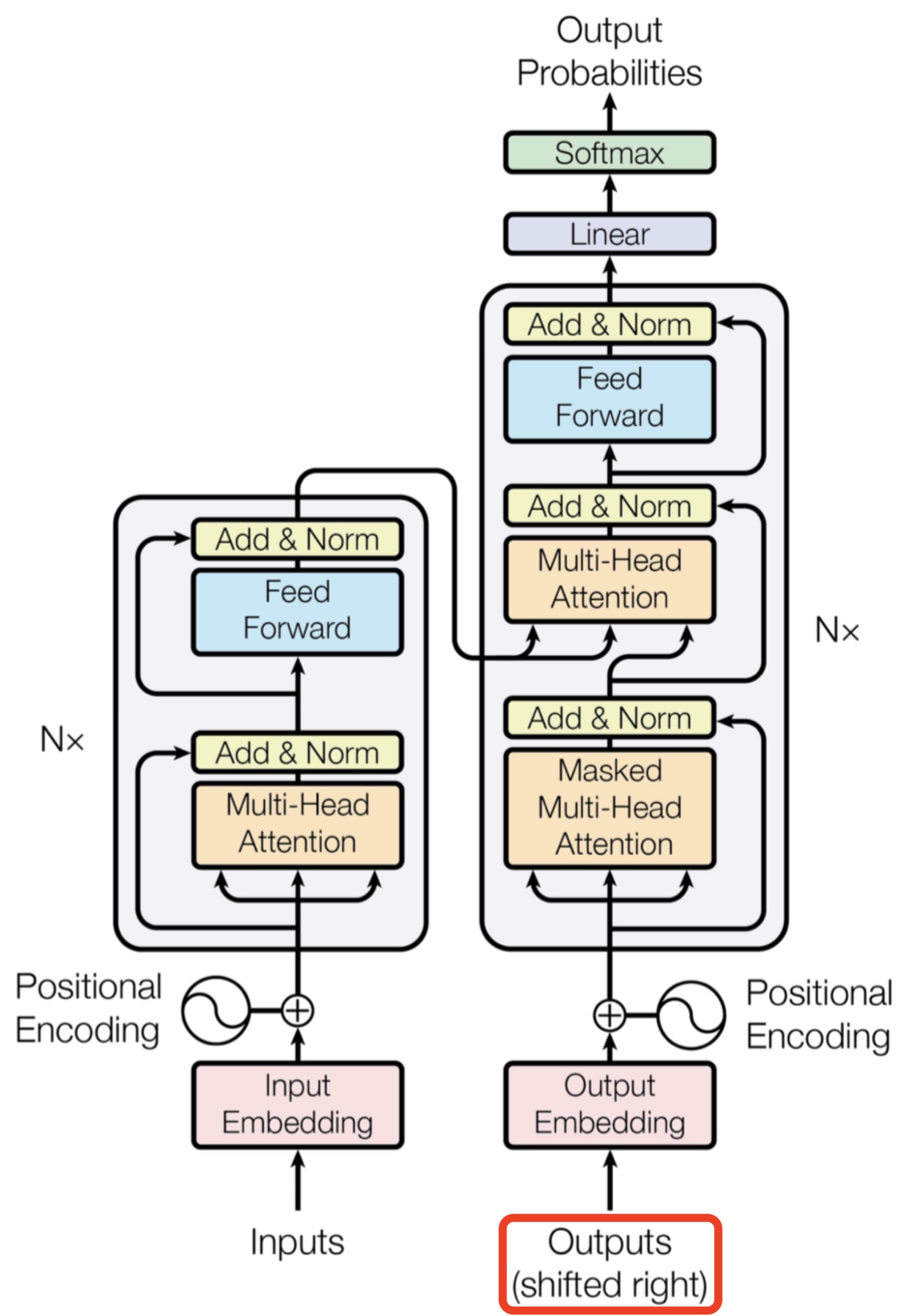

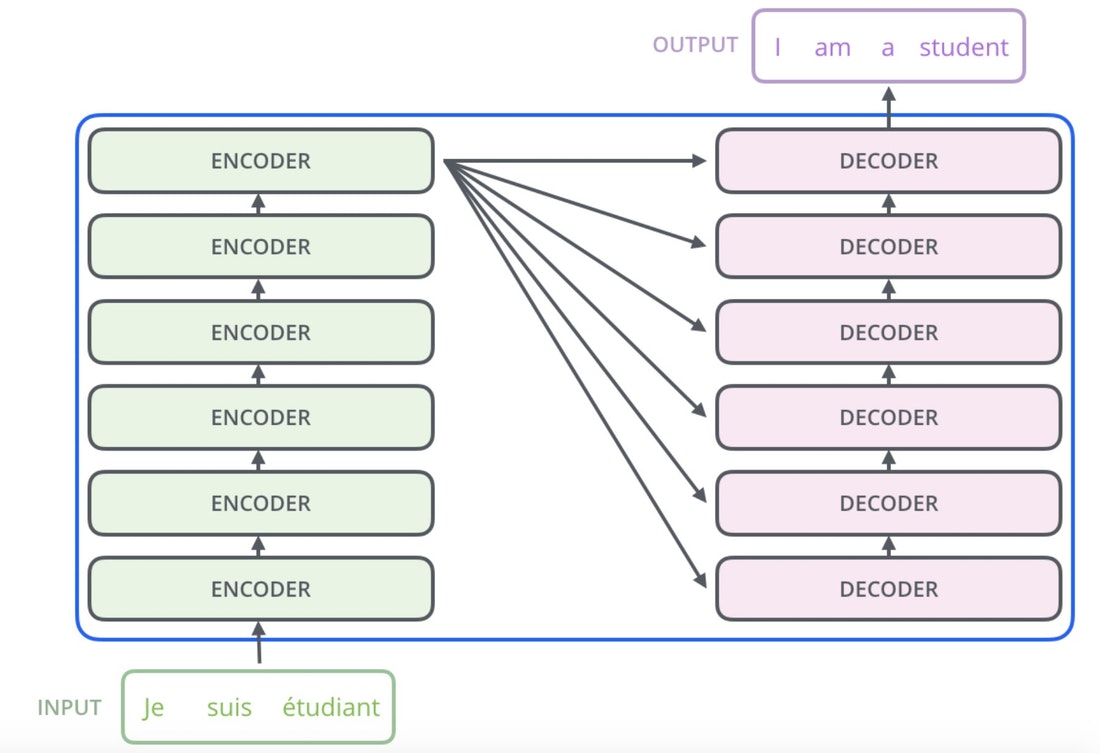

- Transformer Encoder and Decoder

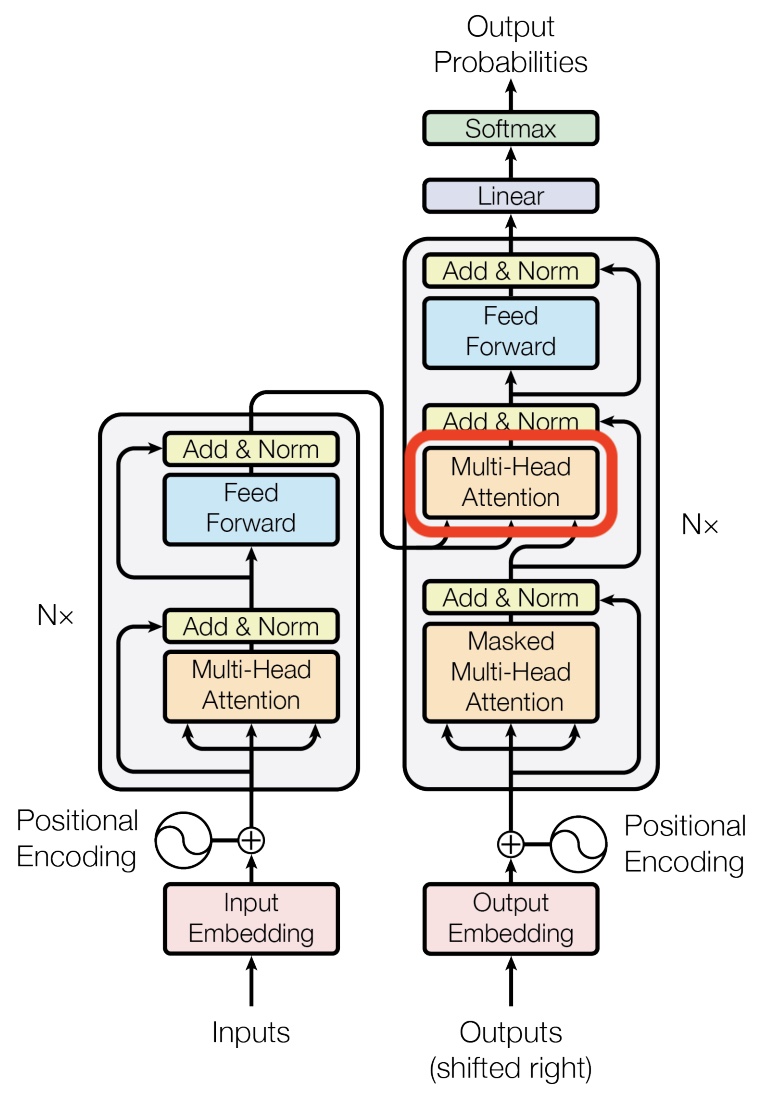

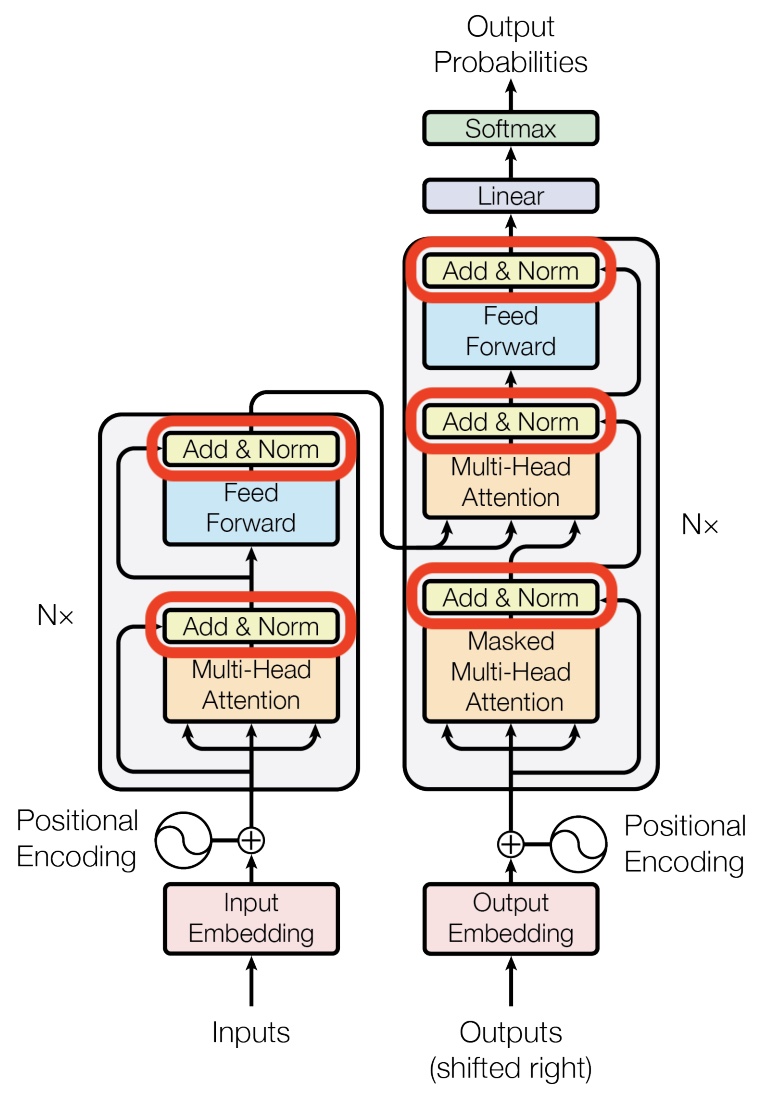

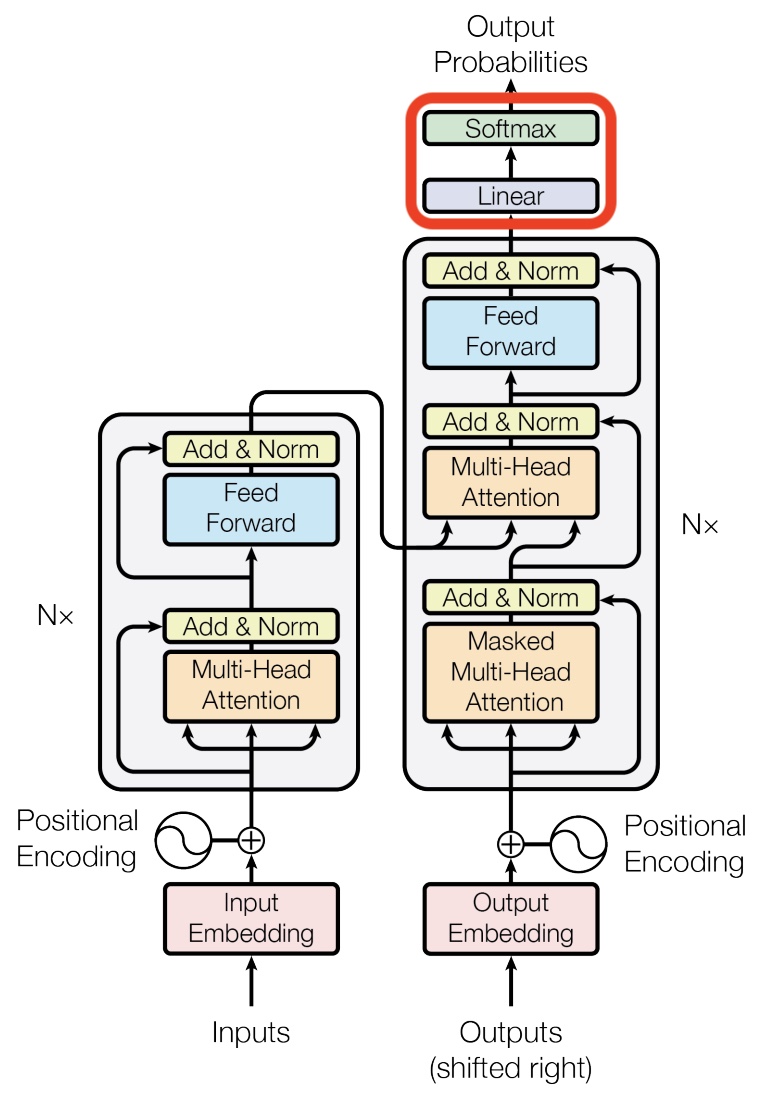

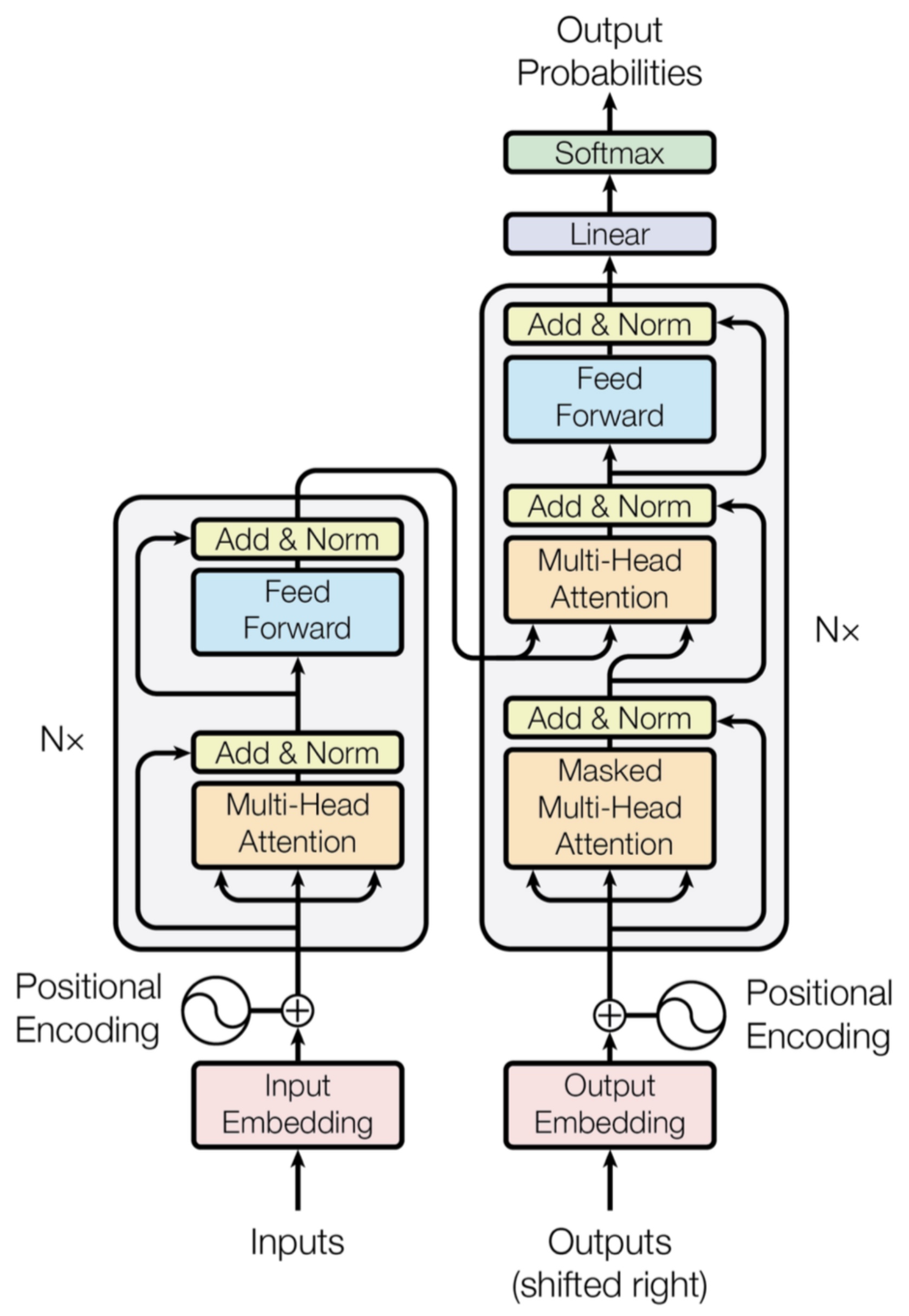

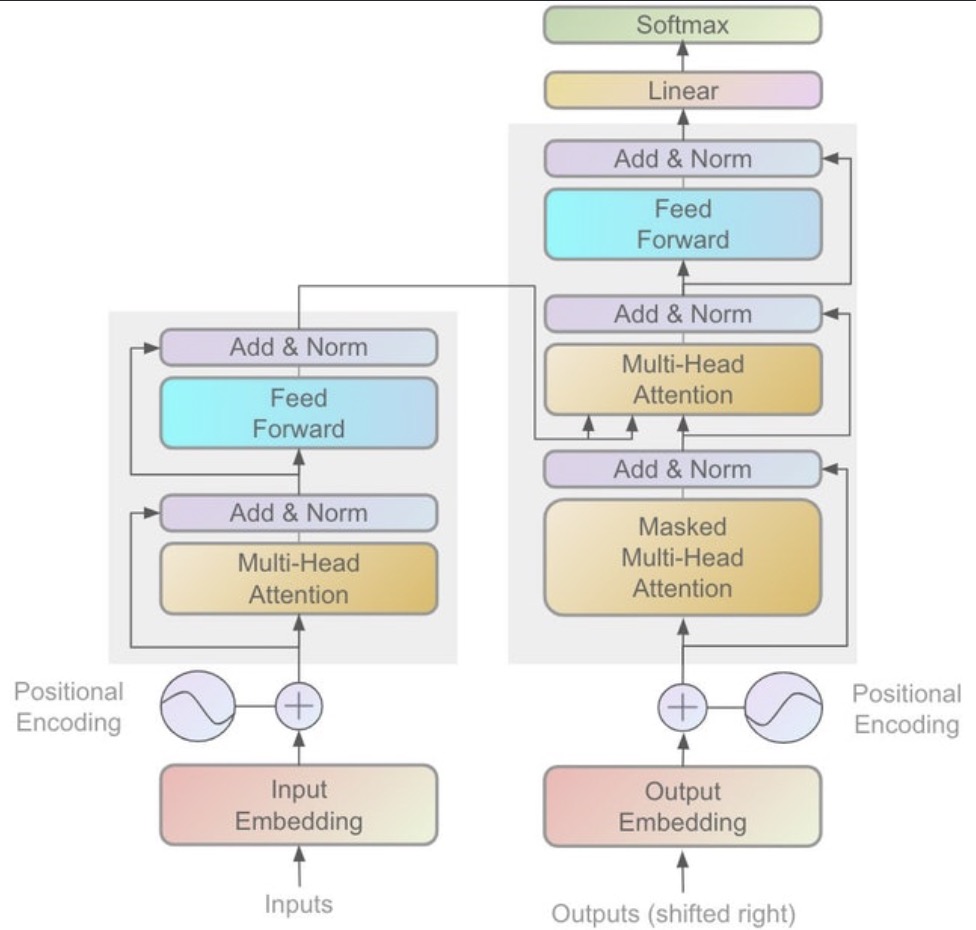

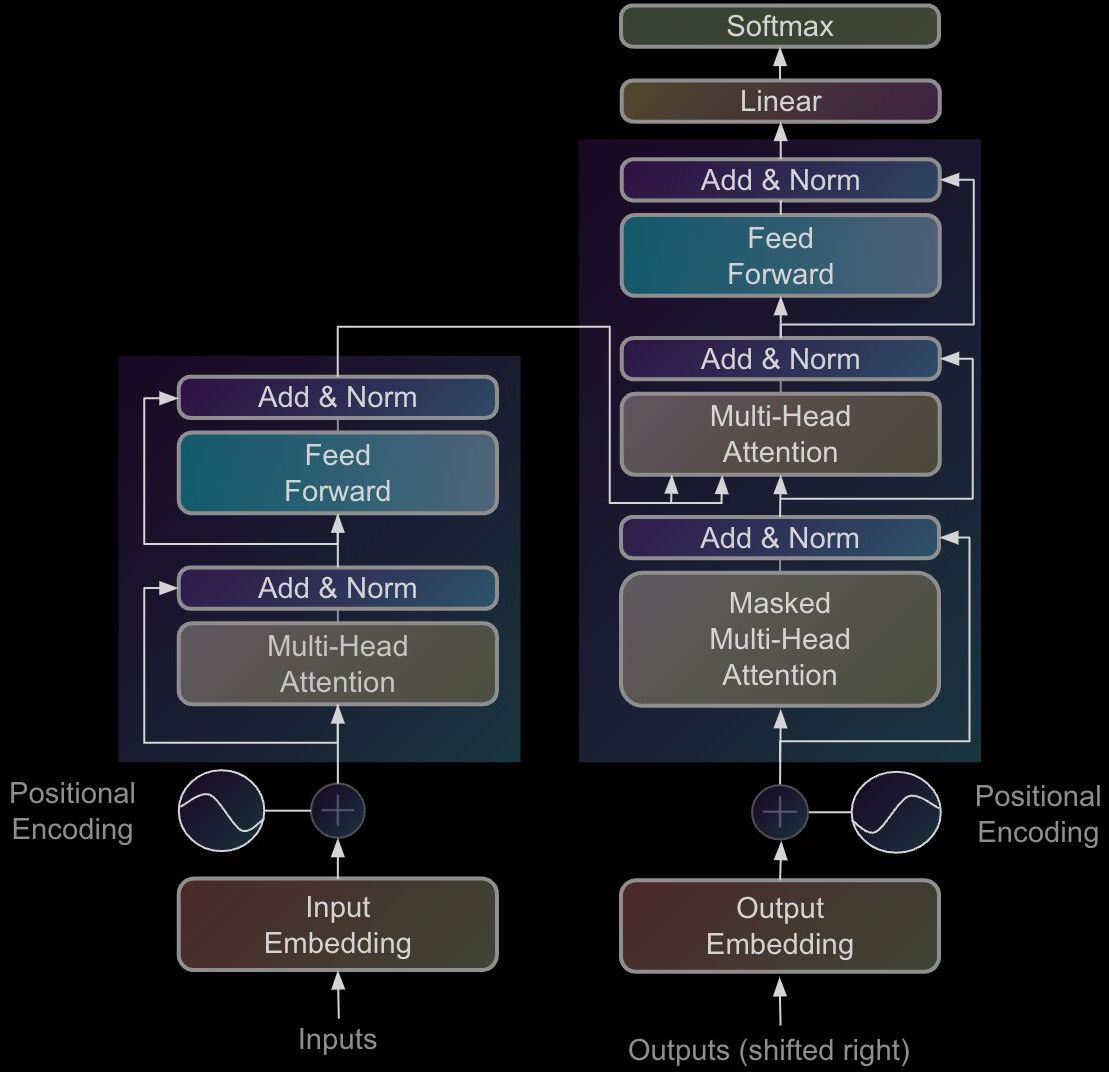

- Putting it all together: The Transformer Architecture

- Loss Function

- Background

- Implementation details

- Tokenization

- Byte Pair Encoding (BPE)

- Teacher Forcing

- Shift Right (Off-by-One Label Shift) in Decoder Inputs

- Scheduled Sampling

- Label Smoothing as a Regularizer

- Scaling Issues

- Adding a New Token to the Tokenizer’s Vocabulary and Model’s Embedding Table

- Extending the Tokenizer to New Languages

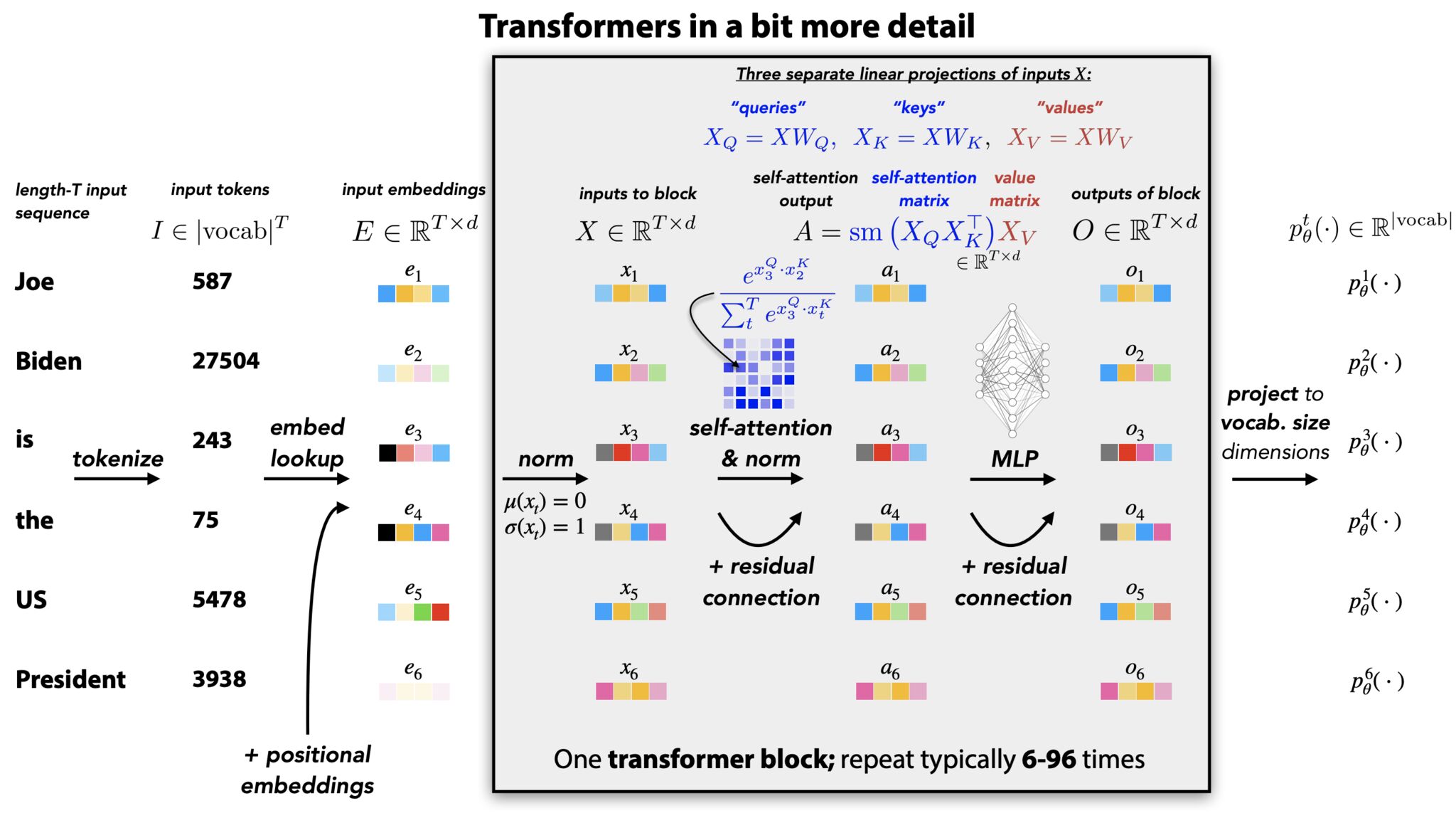

- End-to-end flow: from input embeddings to next-token prediction

- Step 1: Tokenization \(\rightarrow\) token IDs

- Step 2: Token embeddings and positional encoding

- Step 3: Stack of \(L\) Transformer decoder layers

- Step 4: Select the final token representation

- Step 5: Projection to vocabulary logits

- Step 6: Softmax and token selection

- Step 7: Autoregressive generation loop

- Step 8: Key–value (KV) caching for efficient inference

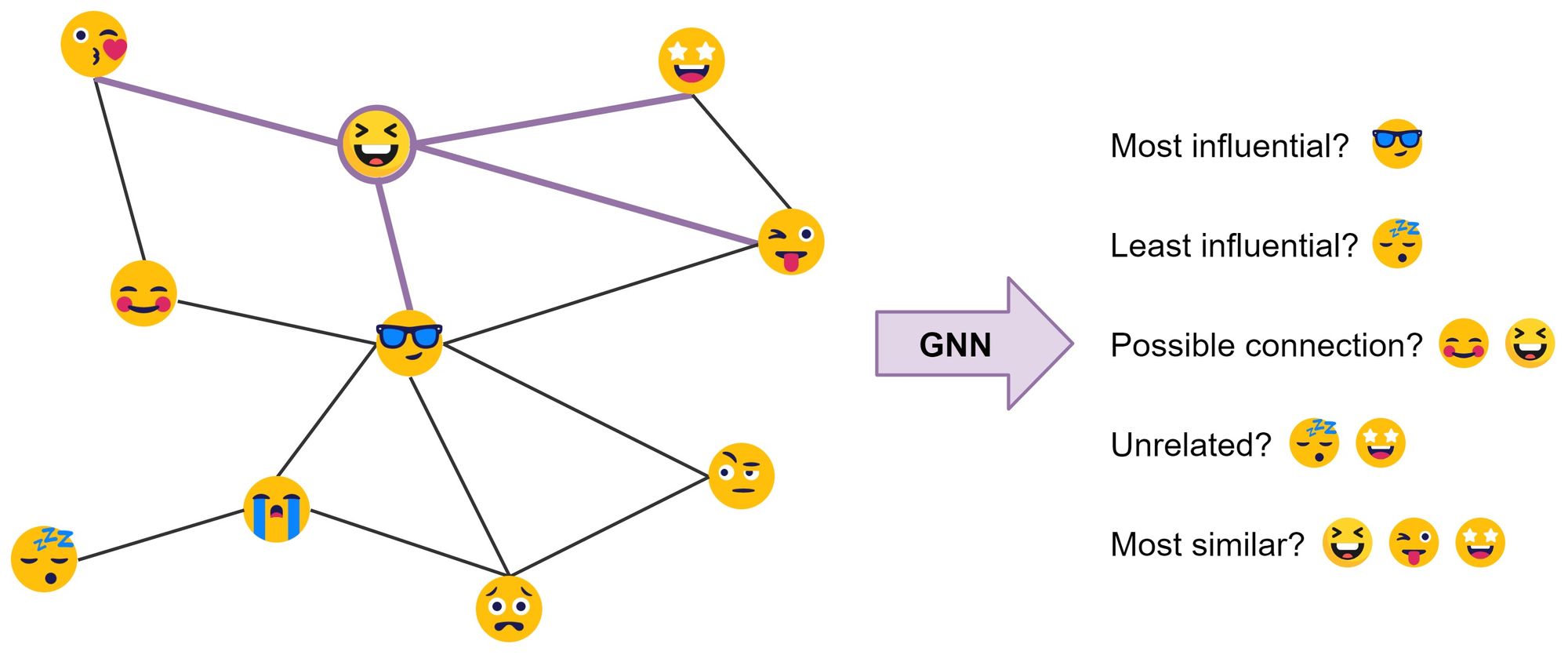

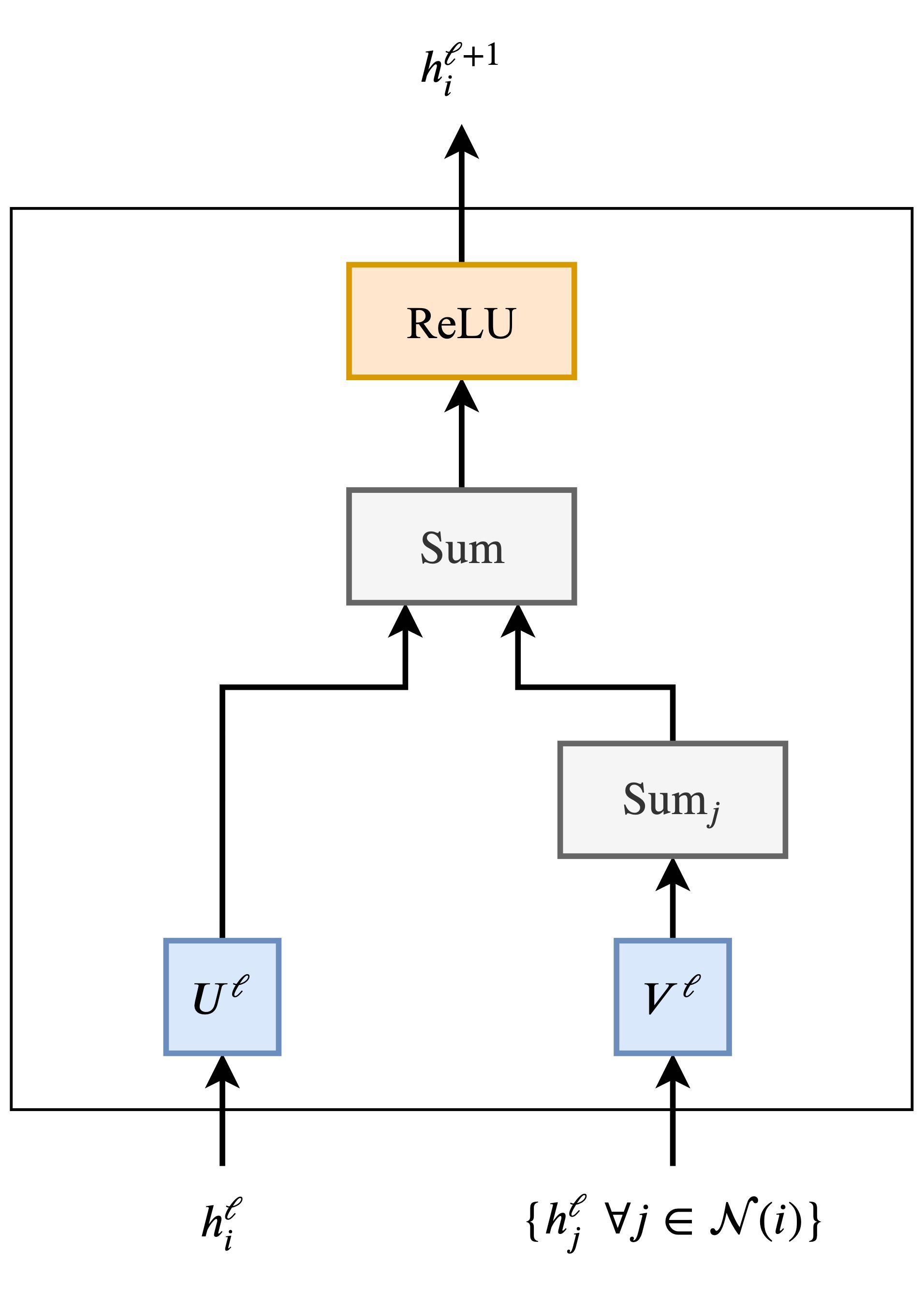

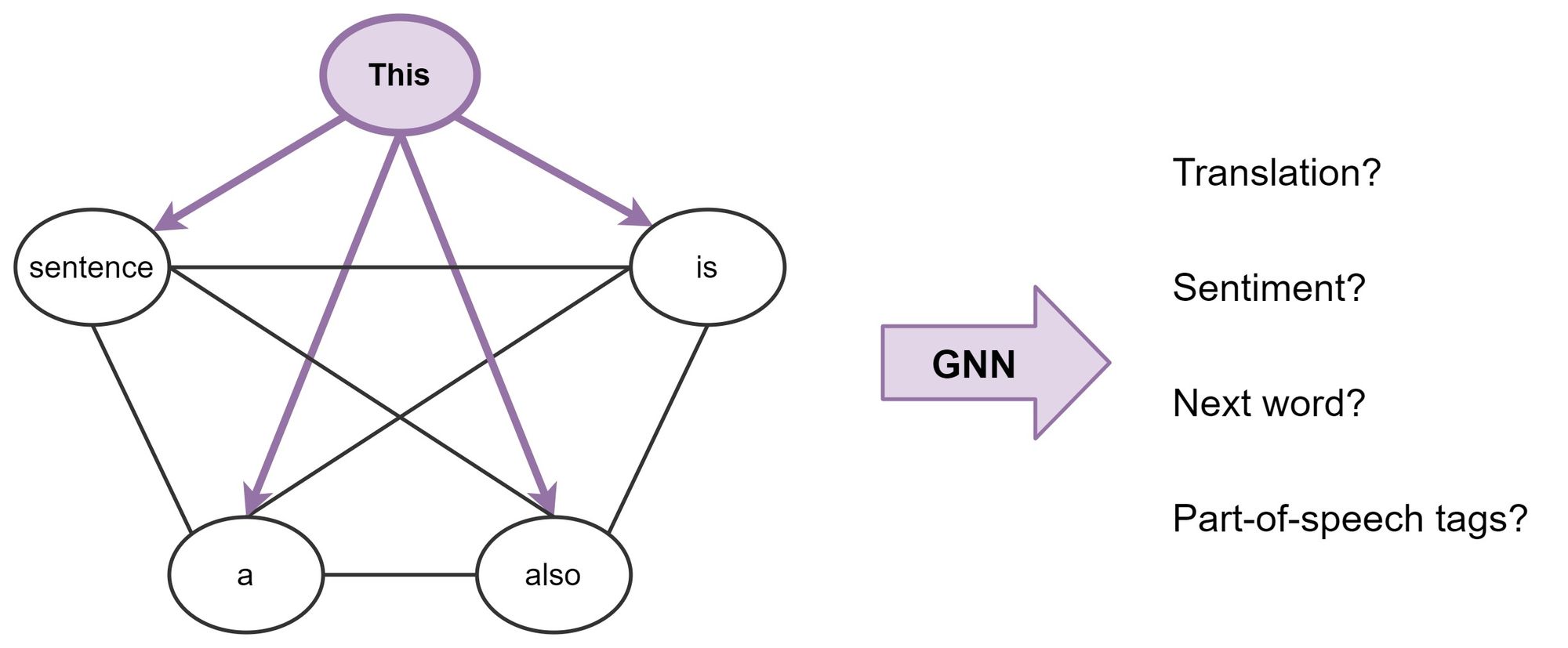

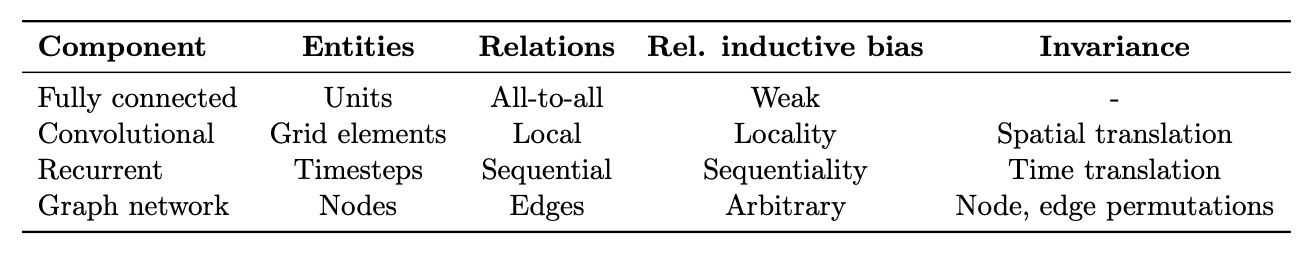

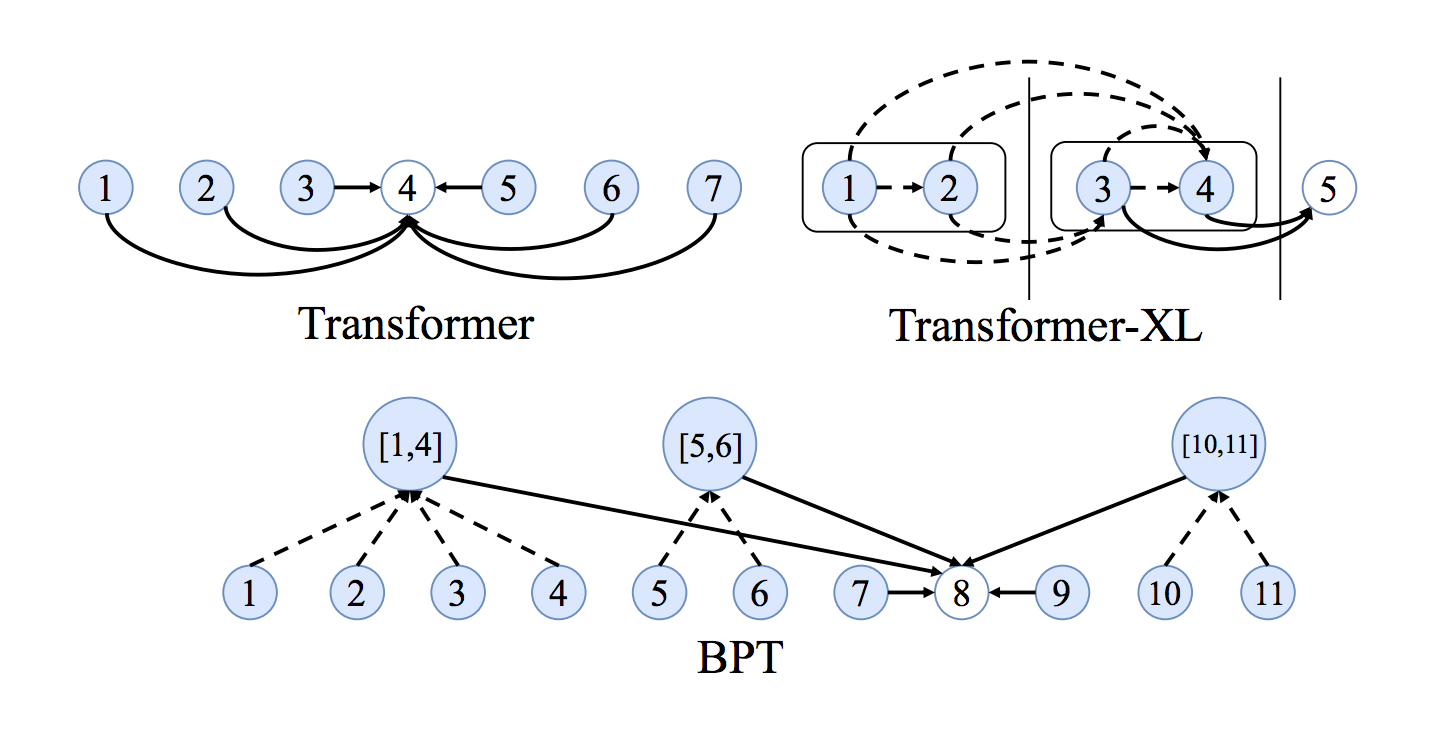

- The relation between transformers and Graph Neural Networks

- Time complexity: RNNs vs. Transformers

- Lessons Learned

- Transformers: merging the worlds of linguistic theory and statistical NLP using fully connected graphs

- Long term dependencies

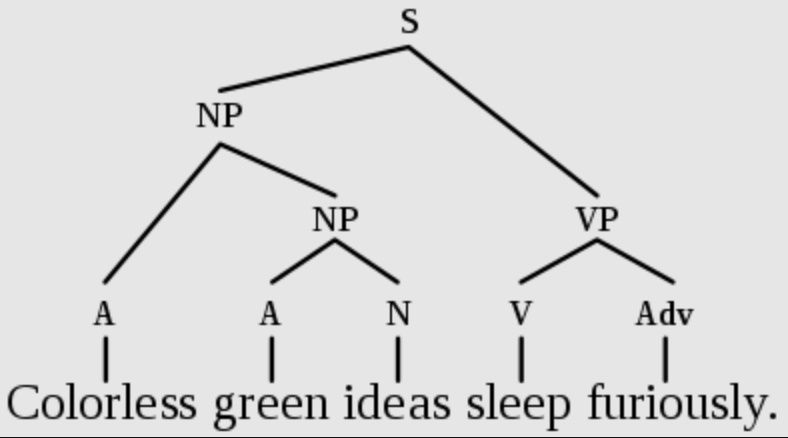

- Are Transformers learning neural syntax?

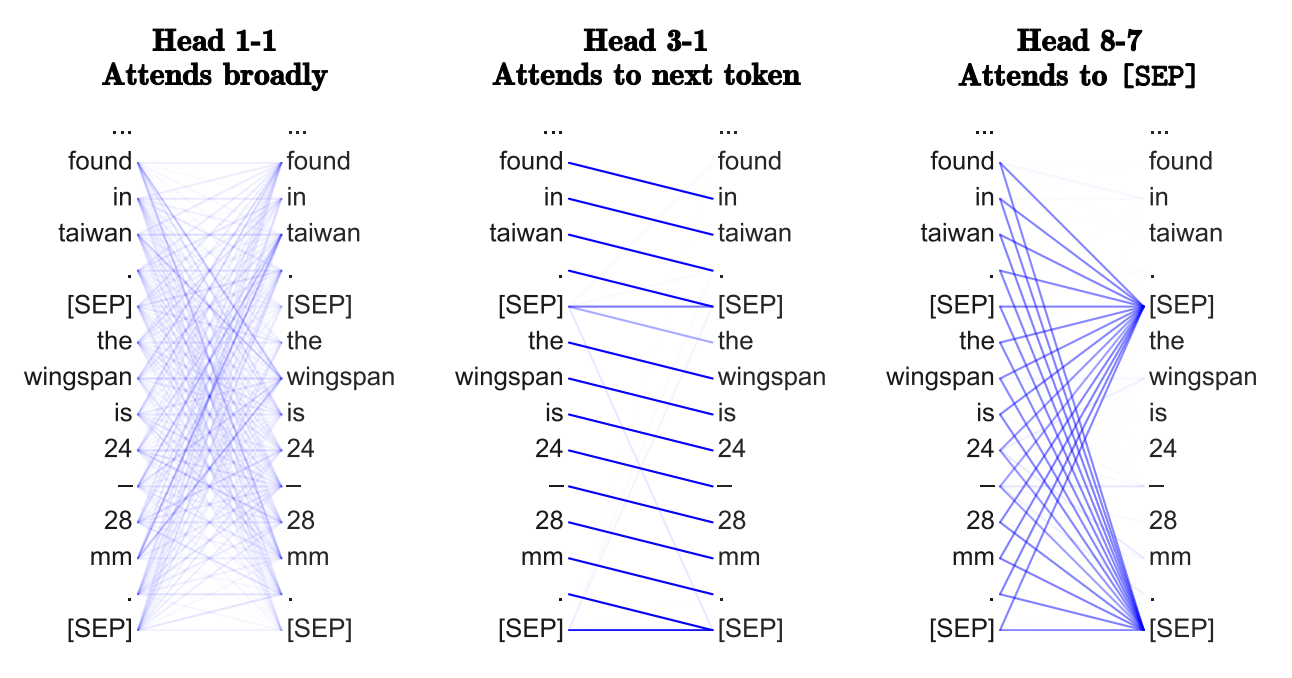

- Why multiple heads of attention? Why attention?

- Benefits of Transformers compared to RNNs/GRUs/LSTMs

- What would we like to fix about the transformer? / Drawbacks of Transformers

- Why is training Transformers so hard?

- Transformers: Extrapolation engines in high-dimensional space

- The road ahead for Transformers

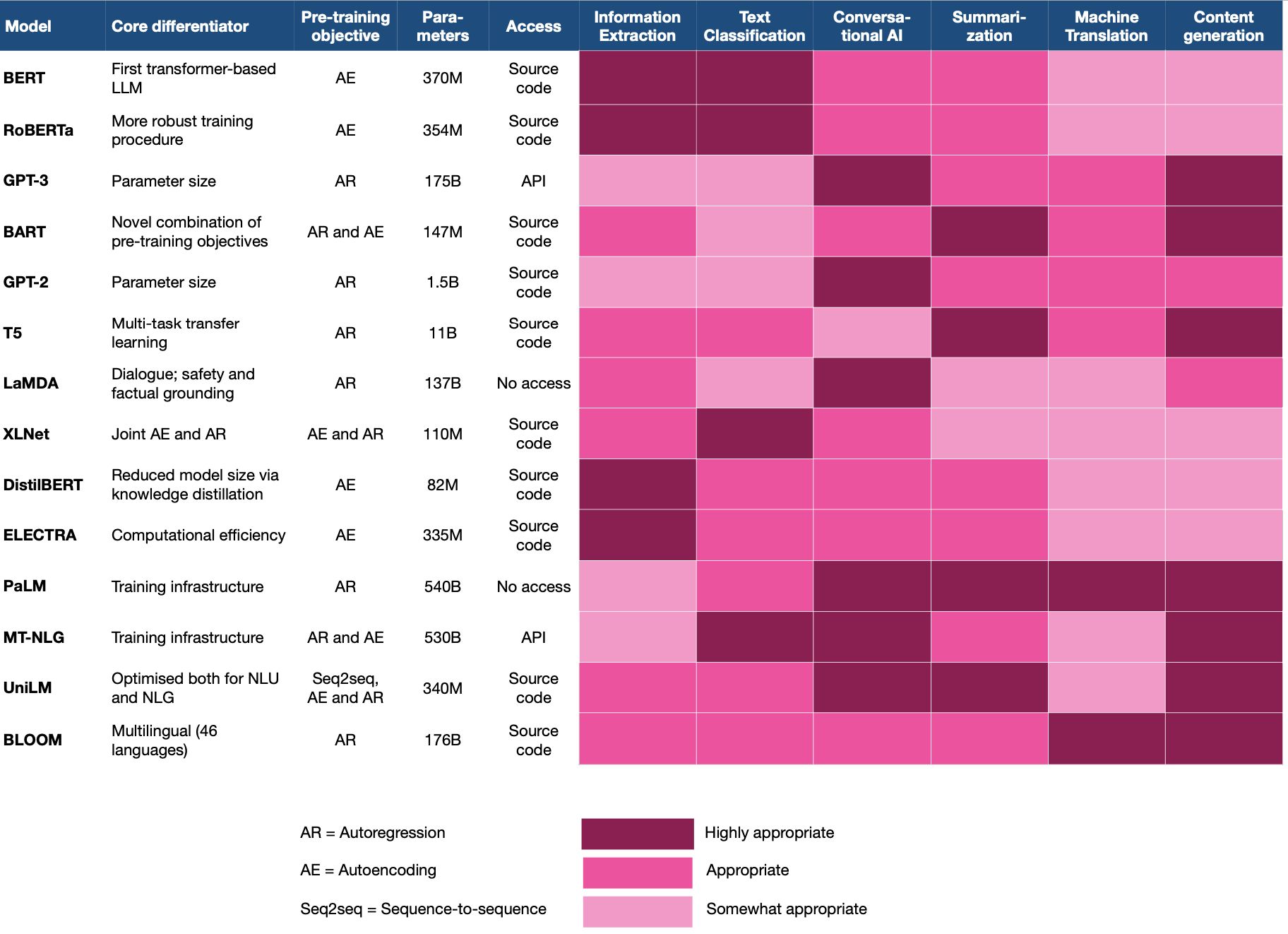

- Choosing the right language model for your NLP use-case: key takeaways

- Transformers Learning Recipe

- Transformers From Scratch

- The Illustrated Transformer

- Lilian Weng’s The Transformer Family

- The Annotated Transformer

- Attention Is All You Need

- HuggingFace Encoder-Decoder Models

- Transformers library by HuggingFace

- Inference Arithmetic

- Transformer Taxonomy

- GPT in 60 Lines of NumPy

- x-transformers

- Speeding up the GPT - KV cache

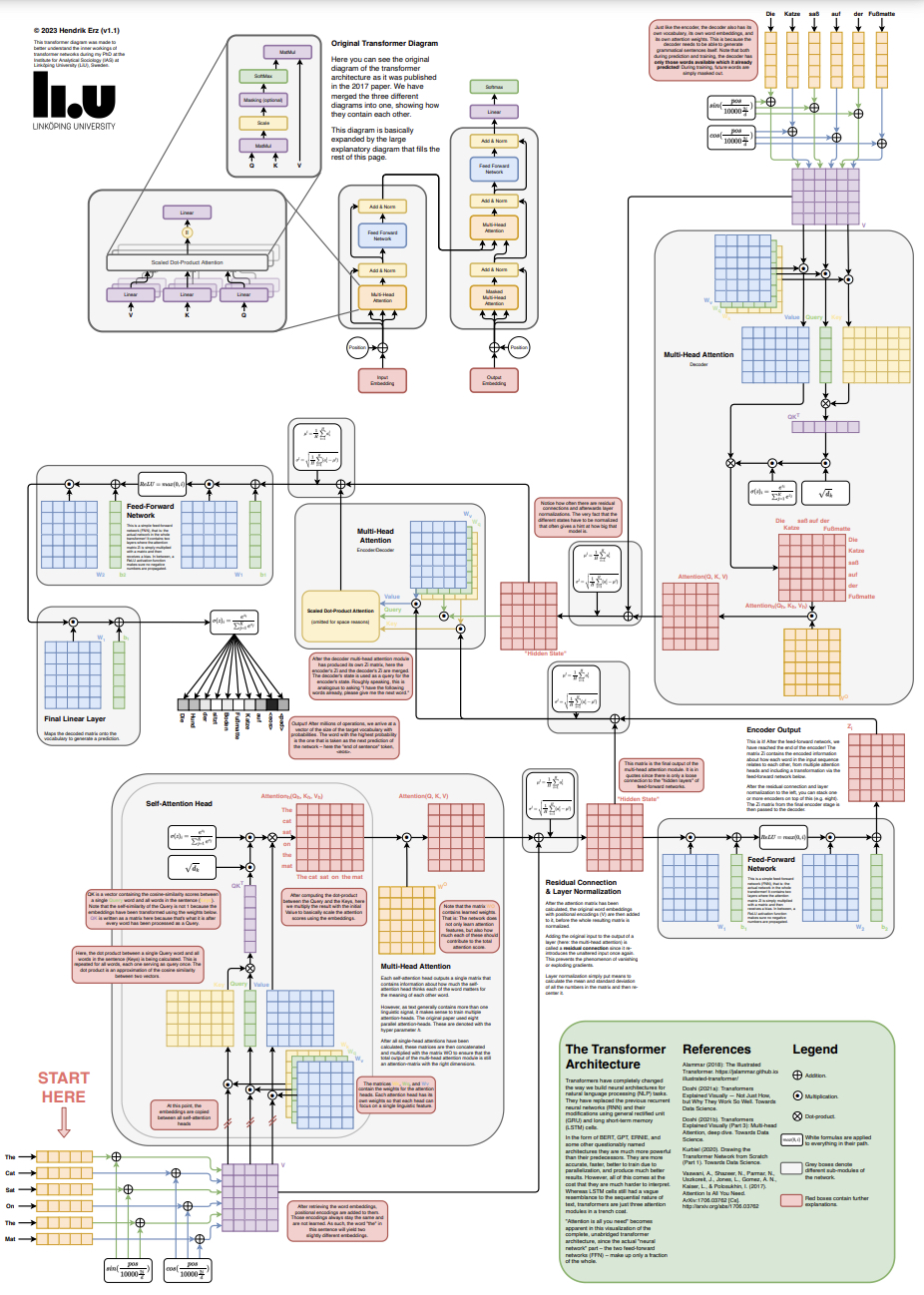

- Transformer Poster

- FAQs

- In Transformers, how does categorical cross entropy loss enable next token prediction?

- In the transformer’s language modeling head, after the softmax function, argmax is performed which is non-differentiable. How does backprop work in this case during training?

- Explain attention scores vs. attention weights? How are attention weights derived from attention scores?

- Did the original Transformer use absolute or relative positional encoding?

- How does the choice of positional encoding method can influence the number of parameters added to the model? Consider absolute, relative, and rotary positional encoding mechanisms.

- In Transformer-based models, how does RoPE enable context length extension?

- Why is the Transformer Architecture not as susceptible to vanishing gradients compared to RNNs?

- What is the fraction of attention weights relative to feed-forward weights in common LLMs?

- In BERT, how do we go from \(Q\), \(K\), and \(V\) at the final transformer block’s output to contextualized embeddings?

- What gets passed on from the output of the previous transformer block to the next in the encoder/decoder?

- In the vanilla transformer, what gets passed on from the output of the encoder to the decoder?

- How does attention mask differ for encode vs. decoder models? How is loss masking enforced?

- Further Reading

- References

- Citation

Background: Representation Learning for Natural Language Processing

- At a high level, all neural network architectures build representations of input data as vectors/embeddings, which encode useful syntactic and semantic information about the data. These latent or hidden representations can then be used for performing something useful, such as classifying an image or translating a sentence. The neural network learns to build better-and-better representations by receiving feedback, usually via error/loss functions.

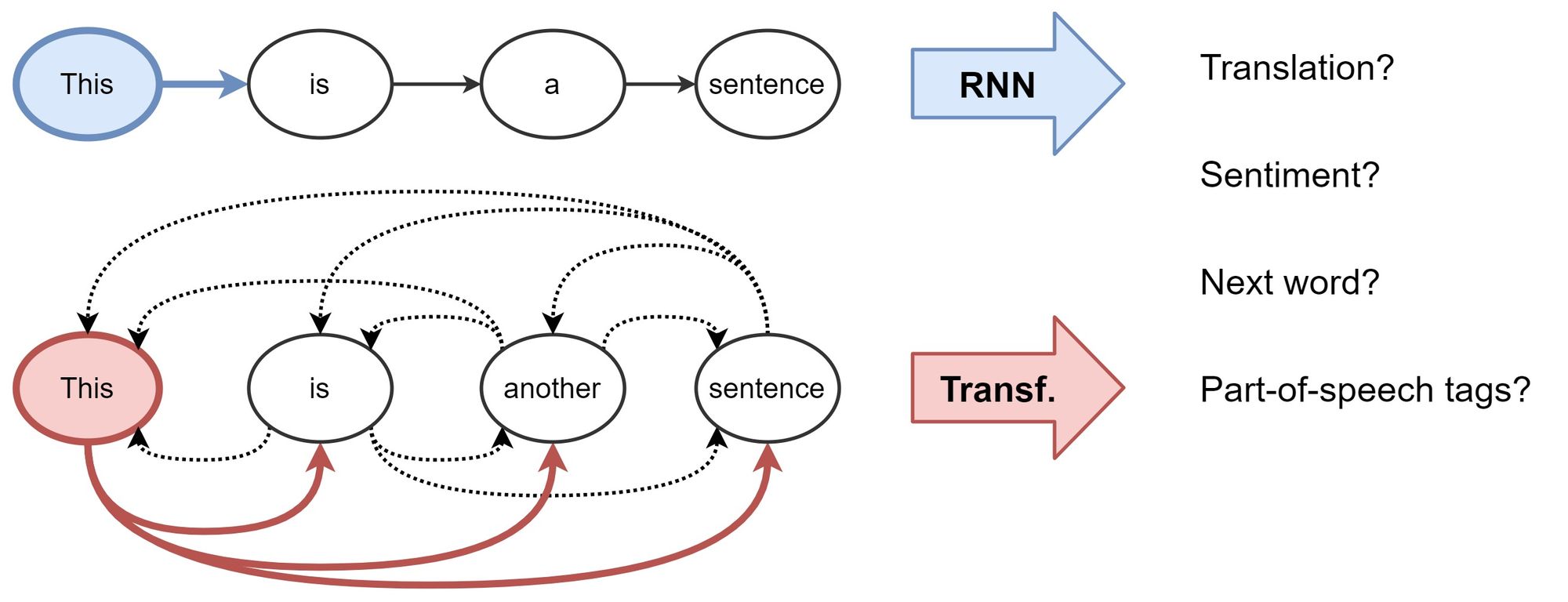

- For Natural Language Processing (NLP), conventionally, Recurrent Neural Networks (RNNs) build representations of each word in a sentence in a sequential manner, i.e., one word at a time. Intuitively, we can imagine an RNN layer as a conveyor belt (as shown in the figure below; source), with the words being processed on it autoregressively from left to right. In the end, we get a hidden feature for each word in the sentence, which we pass to the next RNN layer or use for our NLP tasks of choice. Chris Olah’s legendary blog for recaps on LSTMs and representation learning for NLP is highly recommend to develop a background in this area

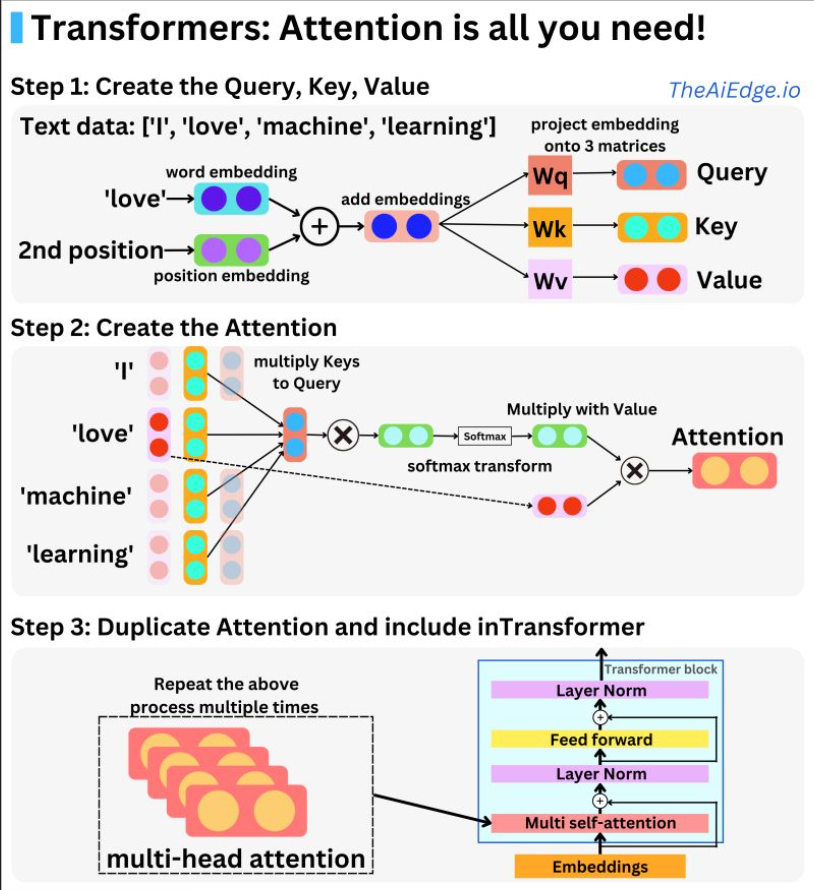

- Initially introduced for machine translation, Transformers have gradually replaced RNNs in mainstream NLP. The architecture takes a fresh approach to representation learning: Doing away with recurrence entirely, Transformers build features of each word using an attention mechanism (which had also been experimented in the world of RNNs as “Augmented RNNs”) to figure out how important all the other words in the sentence are w.r.t. to the aforementioned word. Knowing this, the word’s updated features are simply the sum of linear transformations of the features of all the words, weighted by their importance (as shown in the figure below; source). Back in 2017, this idea sounded very radical, because the NLP community was so used to the sequential–one-word-at-a-time–style of processing text with RNNs. As recommended reading, Lilian Weng’s Attention? Attention! offers a great overview on various attention types and their pros/cons.

Enter the Transformer

- History:

- LSTMs, GRUs and other flavors of RNNs were the essential building blocks of NLP models for two decades since 1990s.

- CNNs were the essential building blocks of vision (and some NLP) models for three decades since the 1980s.

- In 2017, Transformers (proposed in the “Attention Is All You Need” paper) demonstrated that recurrence and/or convolutions are not essential for building high-performance natural language models.

- In 2020, Vision Transformer (ViT) (An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale) demonstrated that convolutions are not essential for building high-performance vision models.

- The most advanced architectures in use before Transformers gained a foothold in the field were RNNs with LSTMs/GRUs. These architectures, however, suffered from the following drawbacks:

- They struggle with really long sequences (despite using LSTM and GRU units).

- They are fairly slow, as their sequential nature doesn’t allow any kind of parallel computing.

- At the time, LSTM-based recurrent models were the de-facto choice for language modeling. Here’s a timeline of some relevant events:

- ELMo (LSTM-based): 2018

- ULMFiT (LSTM-based): 2018

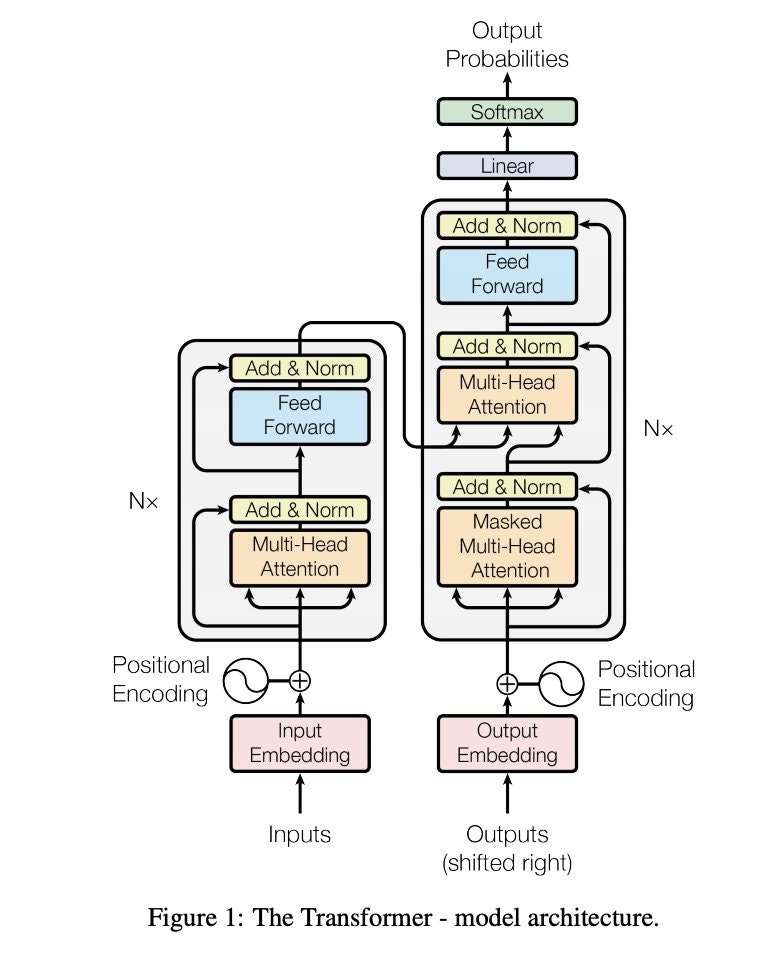

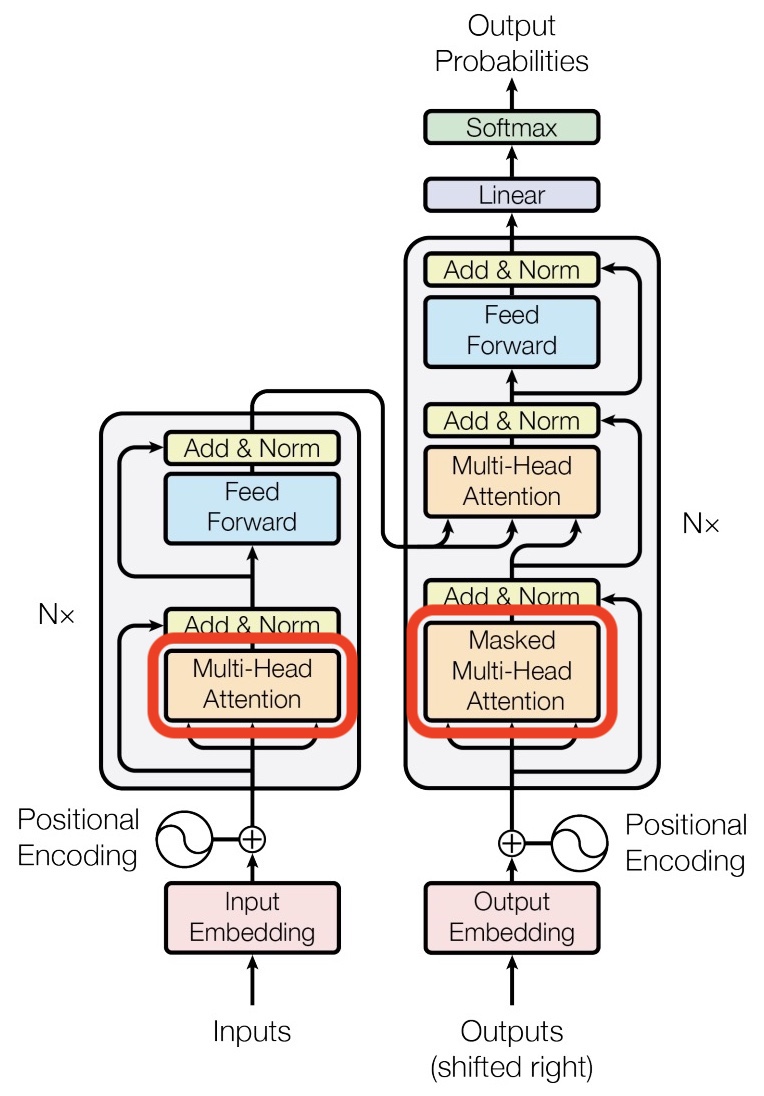

- Initially introduced for machine translation by Vaswani et al. (2017), the vanilla Transformer model utilizes an encoder-decoder architecture, which is able to perform sequence transduction with a sophisticated attention mechanism. As such, compared to prior recurrent architectures, Transformers possess fundamental differences in terms of how they work:

- They work on the entire sequence calculating attention across all word-pairs, which let them learn long-range dependencies.

- Some parts of the architecture can be processed in parallel, making training much faster.

- Owing to their unique self-attention mechanism, transformer models offer a great deal of representational capacity/expressive power.

- These performance and parallelization benefits led to Transformers gradually replacing RNNs in mainstream NLP. The architecture takes a fresh approach to representation learning: Doing away with recurrence entirely, Transformers build features of each word using an attention mechanism to figure out how important all the other words in the sentence are w.r.t. the aforementioned word. As such, the word’s updated features are simply the sum of linear transformations of the features of all the words, weighted by their importance.

- Back in 2017, this idea sounded very radical, because the NLP community was so used to the sequential – one-word-at-a-time – style of processing text with RNNs. The title of the paper probably added fuel to the fire! For a recap, Yannic Kilcher made an excellent video overview.

- However, Transformers did not become a overnight success until GPT and BERT immensely popularized them. Here’s a timeline of some relevant events:

- Attention is all you need: 2017

- Transformers revolutionizing the world of NLP, Speech, and Vision: 2018 onwards

- GPT (Transformer-based): 2018

- BERT (Transformer-based): 2018

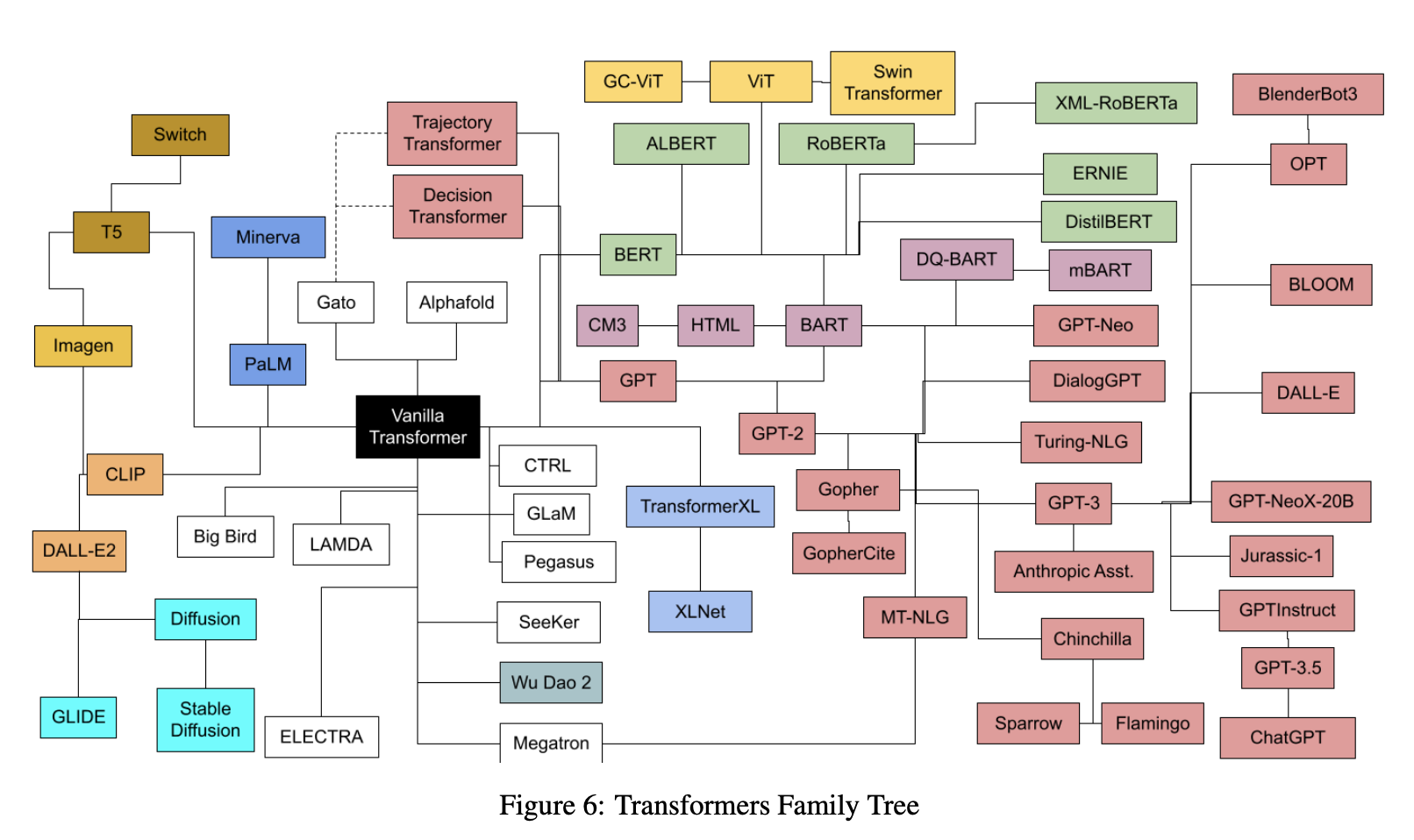

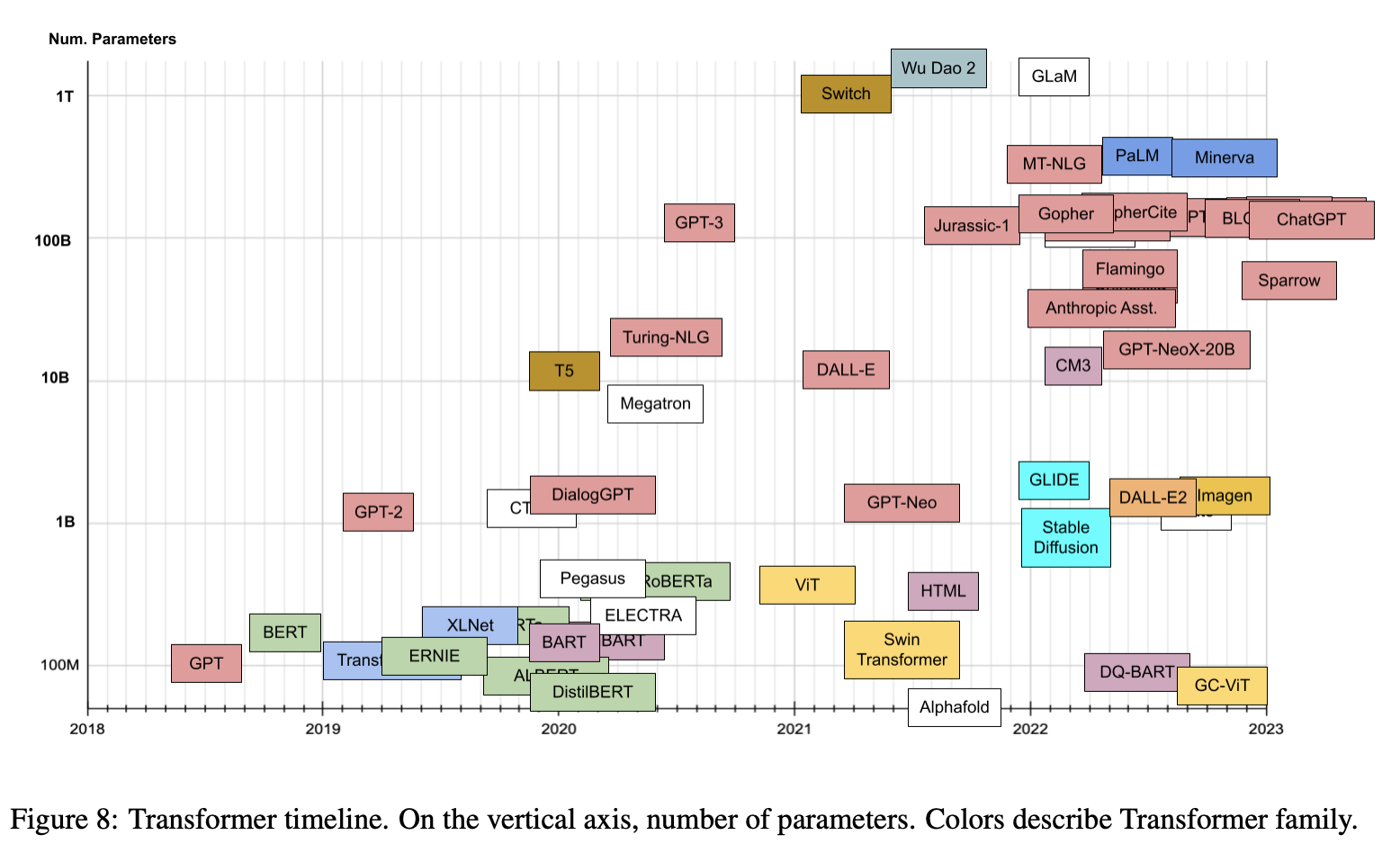

- Today, transformers are not just limited to language tasks but are used in vision, speech, and so much more. The following plot (source) shows the transformers family tree with prevalent models:

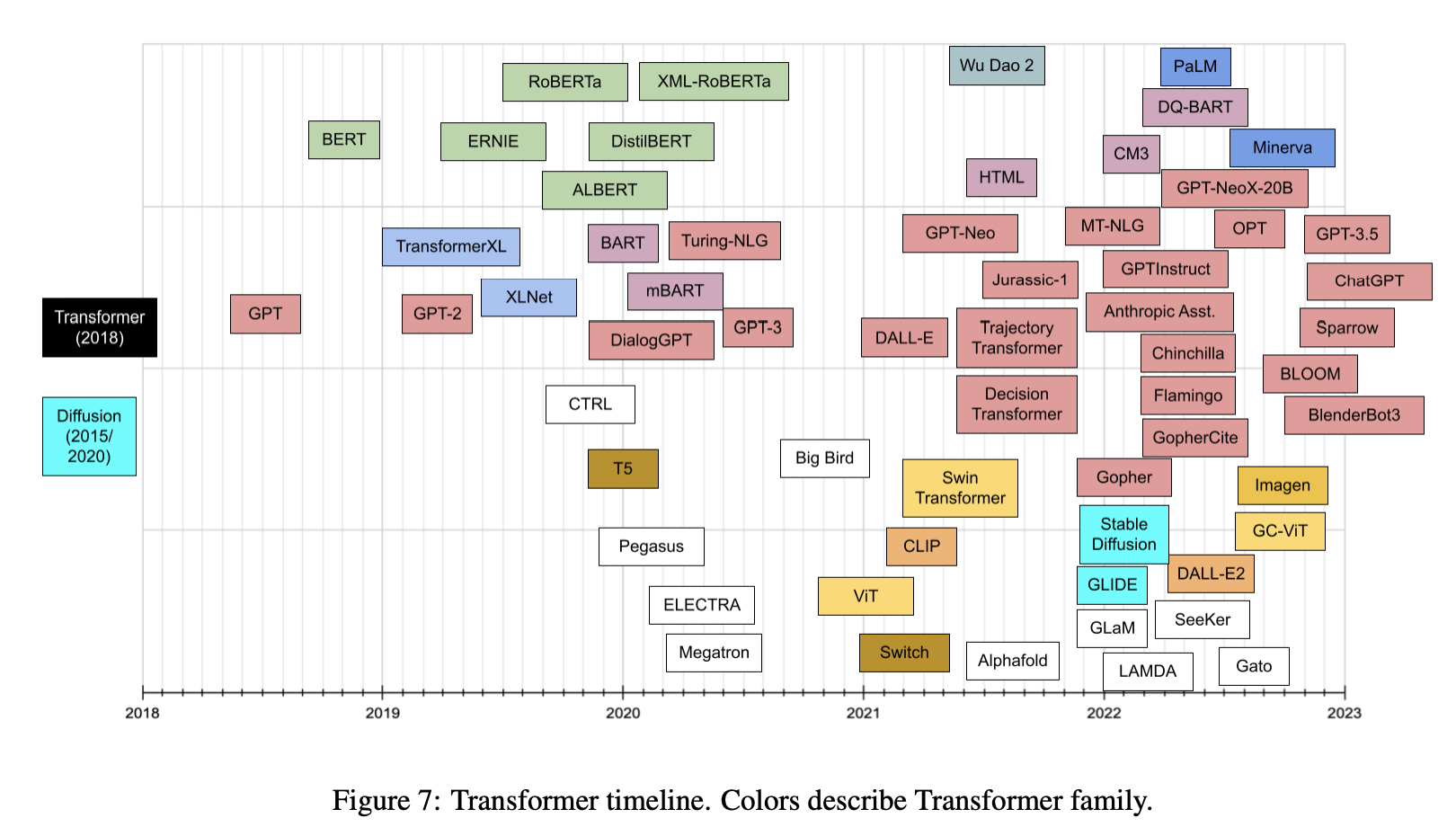

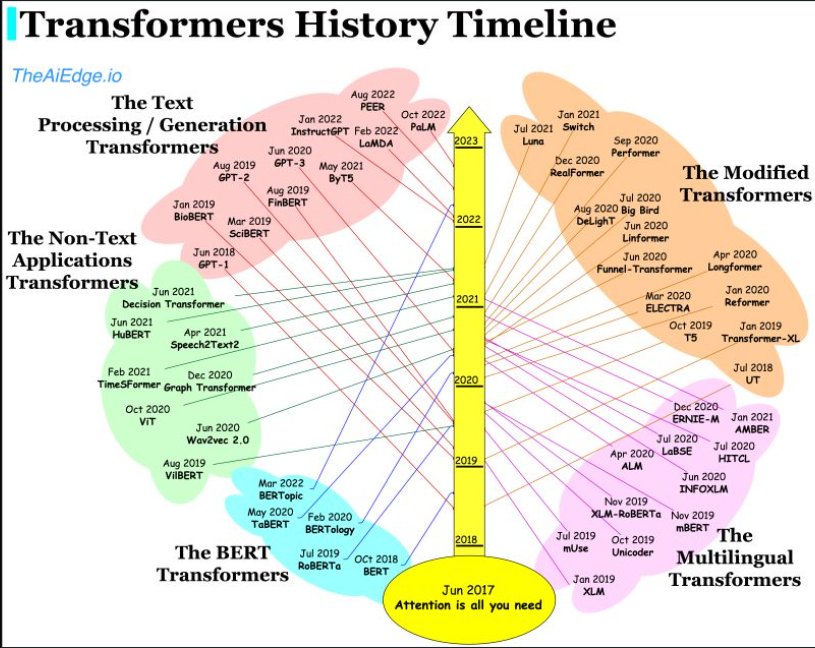

- And, the plots below (first plot source); (second plot source) show the timeline for prevalent transformer models:

- Lastly, the plot below (source) shows the timeline vs. number of parameters for prevalent transformer models:

Transformers vs. Recurrent and Convolutional Architectures: An Overview

Language

- In a vanilla language model, for example, nearby words would first get grouped together. The transformer, by contrast, runs processes so that every element in the input data connects, or pays attention, to every other element. This is referred to as “self-attention.” This means that as soon as it starts training, the transformer can see traces of the entire data set.

- Before transformers came along, progress on AI language tasks largely lagged behind developments in other areas. Infact, in this deep learning revolution that happened in the past 10 years or so, natural language processing was a latecomer and NLP was, in a sense, behind computer vision, per the computer scientist Anna Rumshisky of the University of Massachusetts, Lowell.

- However, with the arrival of Transformers, the field of NLP has received a much-needed push and has churned model after model that have beat the state-of-the-art in various NLP tasks.

- As an example, to understand the difference between vanilla language models (based on say, a recurrent architecture such as RNNs, LSTMs or GRUs) vs. transformers, consider these sentences: “The owl spied a squirrel. It tried to grab it with its talons but only got the end of its tail.” The structure of the second sentence is confusing: What do those “it”s refer to? A vanilla language model that focuses only on the words immediately around the “it”s would struggle, but a transformer connecting every word to every other word could discern that the owl did the grabbing, and the squirrel lost part of its tail.

Vision

In CNNs, you start off being very local and slowly get a global perspective. A CNN recognizes an image pixel by pixel, identifying features like edges, corners, or lines by building its way up from the local to the global. But in transformers, owing to self-attention, even the very first attention layer models global contextual information, making connections between distant image locations (just as with language). If we model a CNN’s approach as starting at a single pixel and zooming out, a transformer slowly brings the whole fuzzy image into focus.

- CNNs work by repeatedly applying filters on local patches of the input data, generating local feature representations (or “feature maps”) and incrementally increase their receptive field and build up to global feature representations. It is because of convolutions that photo apps can organize your library by faces or tell an avocado apart from a cloud. Prior to the transformer architecture, CNNs were thus considered indispensable to vision tasks.

- With the Vision Transformer (ViT), the architecture of the model is nearly identical to that of the first transformer proposed in 2017, with only minor changes allowing it to analyze images instead of words. Since language tends to be discrete, a lot of adaptations were to discretize the input image to make transformers work with visual input. Exactly mimicing the language approach and performing self-attention on every pixel would be prohibitively expensive in computing time. Instead, ViT divides the larger image into square units, or patches (akin to tokens in NLP). The size is arbitrary, as the tokens could be made larger or smaller depending on the resolution of the original image (the default is 16x16 pixels). But by processing pixels in groups, and applying self-attention to each, the ViT could quickly churn through enormous training data sets, spitting out increasingly accurate classifications.

- In Do Vision Transformers See Like Convolutional Neural Networks?, Raghu et al. sought to understand how self-attention powers transformers in vision-based tasks.

Multimodal Tasks

- As discussed in the Enter the Transformer section, other architectures are “one trick ponies” while multimodal learning requires handling of modalities with different patterns within a streamlined architecture with a reasonably high relational inductive bias to even remotely reach human-like intelligence. In other words, we needs a single versatile architecture that seamlessly transitions between senses like reading/seeing, speaking, and listening.

- The potential to offer a universal architecture that can be adopted for multimodal tasks (that requires simultaneously handling multiple types of data, such as raw images, video and language) is something that makes the transformer architecture unique and popular.

- Because of the siloed approach with earlier architectures where each type of data had its own specialized model, this was a difficult task to accomplish. However, transformers offer an easy way to combine multiple input sources. For example, multimodal networks might power a system that reads a person’s lips in addition to listening to their voice using rich representations of both language and image information.

- By using cross-attention, where the query vector originates from one source and the key and value vectors come from another, transformers become highly effective for multimodal learning.

- The transformer thus offers be a big step toward achieving a kind of “convergence” for neural net architectures, resulting in a universal approach to processing data from multiple modalities.

Breaking Down the Transformer

- Prior to delving into the internal mechanisms of the Transformer architecture by examining each of its constituent components in detail, it is essential to first establish a foundational understanding of several underlying mathematical and conceptual constructs. These include, but are not limited to, one-hot vectors, the dot product, matrix multiplication, embedding generation, and the attention mechanism.

Background

One-Hot Encoding

Overview

-

Digital computers are inherently designed to process numerical data. However, in most real-world scenarios, the input data encountered is not naturally numerical. For instance, images are represented by pixel intensity values, and speech signals are modeled as oscillograms or spectrograms. Therefore, the initial step in preparing such data for computational models, especially machine learning algorithms, is to convert non-numeric inputs—such as text—into a numerical format that can be subjected to mathematical operations.

-

One-hot encoding is a method that transforms categorical variables into a format suitable for machine learning algorithms to enhance their predictive performance. Specifically, it converts categorical data into a binary matrix that enables the model to interpret each category as a distinct and independent feature.

Conceptual Intuition

- As one begins to work with machine learning models, the term “one-hot encoding” frequently arises. For example, in the scikit-learn documentation, one-hot encoding is described as a technique to “encode categorical integer features using a one-hot aka one-of-K scheme.” To elucidate this concept, let us consider a concrete example.

Example: Basic Dataset

- Consider the following illustrative dataset:

| CompanyName | CategoricalValue | Price |

|---|---|---|

| VW | 1 | 20000 |

| Acura | 2 | 10011 |

| Honda | 3 | 50000 |

| Honda | 3 | 10000 |

-

In this example, the column CategoricalValue represents a numerical label associated with each unique categorical entry (i.e., company names). If an additional company were to be included, it would be assigned the next incremental value, such as 4. Thus, as the number of distinct entries increases, so too does the range of the categorical labels.

-

It is important to note that the above table is a simplified representation. In practice, categorical values are typically indexed from 0 to \(N - 1\), where \(N\) is the number of distinct categories.

-

The assignment of categorical labels can be efficiently performed using the

LabelEncoderprovided by thesklearnlibrary. -

Returning to one-hot encoding: by adhering to the procedures outlined in the

sklearndocumentation and conducting minor data preprocessing, we can transform the previous dataset into the following format, wherein a value of1denotes presence and0denotes absence:

| VW | Acura | Honda | Price |

|---|---|---|---|

| 1 | 0 | 0 | 20000 |

| 0 | 1 | 0 | 10011 |

| 0 | 0 | 1 | 50000 |

| 0 | 0 | 1 | 10000 |

-

At this point, it is worth contemplating why mere label encoding might be insufficient when training machine learning models. Why is one-hot encoding preferred?

-

The limitation of label encoding lies in its implicit assumption of ordinal relationships among categories. For example, it inadvertently introduces a false hierarchy by implying

VW > Acura > Hondadue to their numeric encodings. If the model internally computes an average or distance metric over such values, the result could be misleading. Consider:(1 + 3)/2 = 2, which incorrectly suggests that the average of VW and Honda is Acura. Such outcomes undermine the model’s predictive accuracy and can lead to erroneous inferences. -

Therefore, one-hot encoding is employed to mitigate this issue. It effectively “binarizes” the categorical variable, enabling each category to be treated as an independent and mutually exclusive feature.

-

As a further example, suppose there exists a categorical feature named

flower, which can take the valuesdaffodil,lily, androse. One-hot encoding transforms this feature into three distinct binary features:is_daffodil,is_lily, andis_rose.

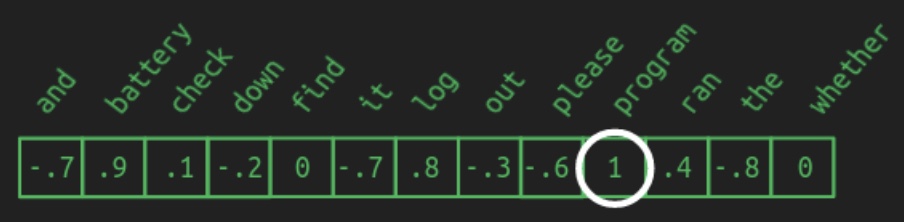

Example: Natural Language Processing

-

Drawing inspiration from Brandon Rohrer’s “Transformers From Scratch”, let us consider another illustrative scenario within the domain of natural language processing. Imagine we are designing a machine translation system that converts textual commands from one language to another. Such a model would receive a sequence of sounds and produce a corresponding sequence of words.

-

The first step involves defining the vocabulary—the set of all symbols that may appear in any input or output sequence. For this task, we would require two separate vocabularies: one representing input sounds and the other for output words.

-

Assuming we are working in English, the vocabulary could easily span tens of thousands of words, with additional entries to capture domain-specific jargon. This would result in a vocabulary size approaching one hundred thousand.

-

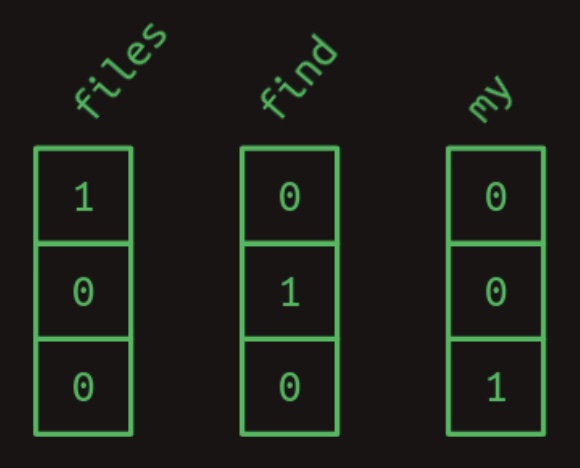

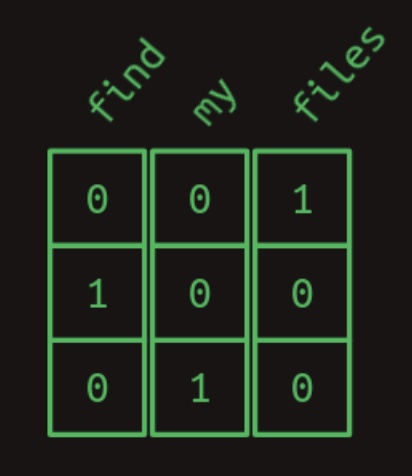

One straightforward method to convert words to numbers is to assign each word a unique integer ID. For instance, if our vocabulary consists of only three words—

files,find, andmy—we might map them as follows:files = 1,find = 2, andmy = 3. The phrase “Find my files” then becomes the sequence[2, 3, 1]. -

While this method is valid, an alternative representation that is more computationally favorable is one-hot encoding. In this approach, each word is encoded as a binary vector of length equal to the vocabulary size, where all elements are

0except for a single1at the index corresponding to the word. -

In other words, each word is still assigned a unique number, but now this number serves as an index in a binary vector. Using our earlier vocabulary, the phrase “find my files” can be encoded as follows:

- Thus, the sentence becomes a sequence of one-dimensional arrays (i.e., vectors), which, when concatenated, forms a two-dimensional matrix:

- It is pertinent to note that in this primer and many other contexts, the terms “one-dimensional array” and “vector” are used interchangeably. Likewise, “two-dimensional array” and “matrix” may be treated synonymously.

Dot product

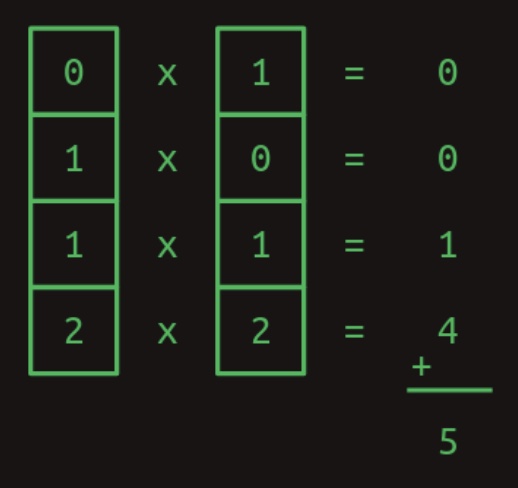

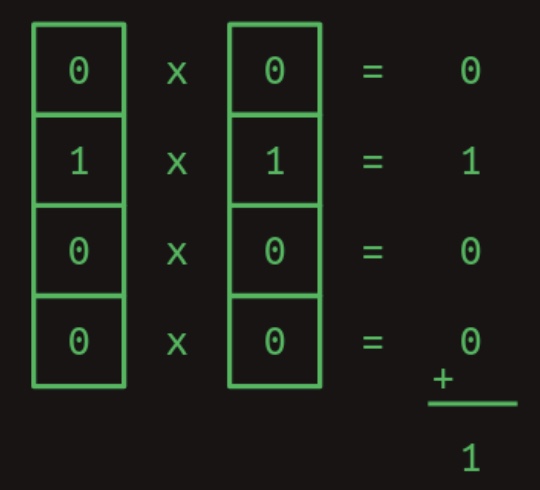

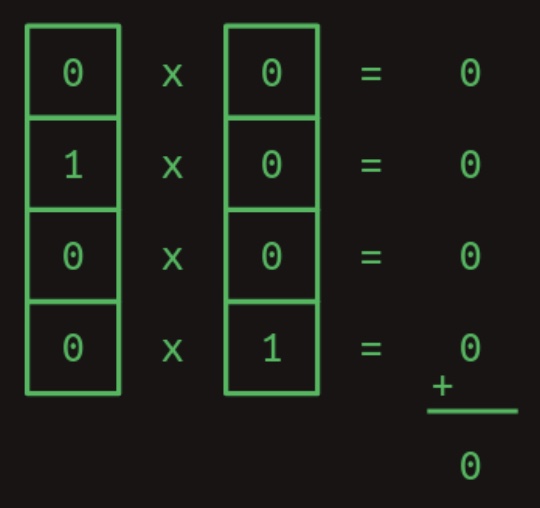

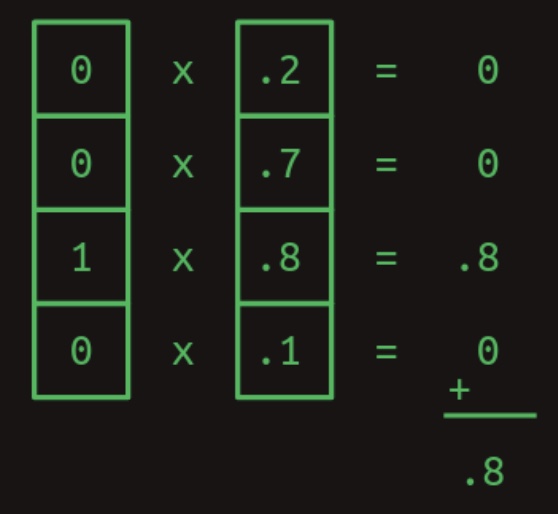

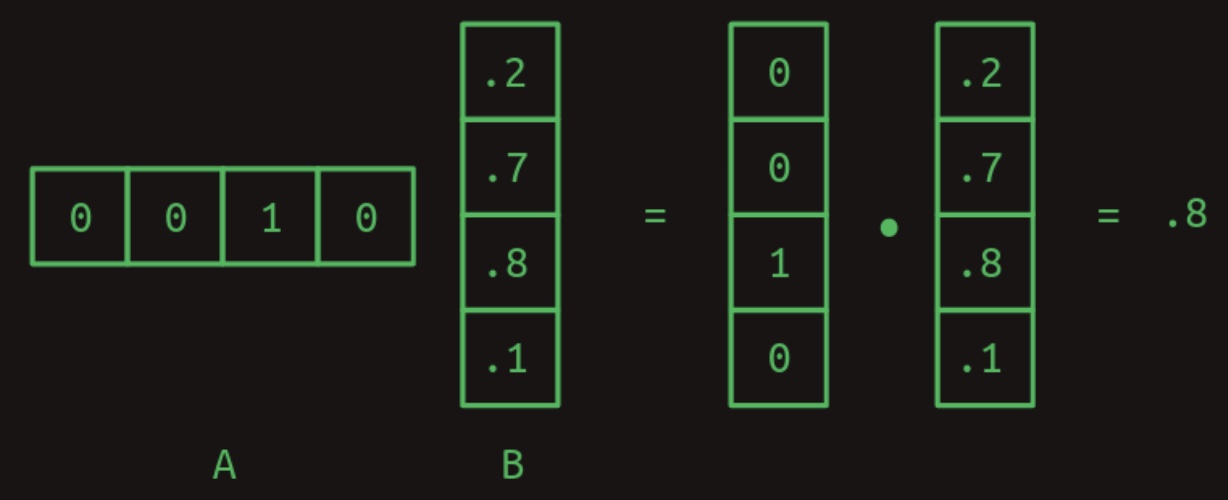

- One really useful thing about the one-hot representation is that it lets us compute dot product (also referred to as the inner product, scalar product or cosine similarity).

Algebraic Definition

-

The dot product of two vectors \(\mathbf{a}=\left[a_{1}, a_{2}, \ldots, a_{n}\right]\) and \(\mathbf{b}=\left[b_{1}, b_{2}, \ldots, b_{n}\right]\) is defined as:

\[\mathbf{a} \cdot \mathbf{b}=\sum_{i=1}^{n} a_{i} b_{i}=a_{1} b_{1}+a_{2} b_{2}+\cdots+a_{n} b_{n}\]- where \(\Sigma\) denotes summation and \(n\) is the dimension of the vector space.

-

For instance, in three-dimensional space, the dot product of vectors \([1, 3, -5]\) and \([4,-2,-1]\) is:

\[\begin{aligned} {[1,3,-5] \cdot[4,-2,-1] } &=(1 \times 4)+(3 \times-2)+(-5 \times-1) \\ &=4-6+5 \\ &=3 \end{aligned}\] -

The dot product can also be written as a product of two vectors, as below.

\[\mathbf{a} \cdot \mathbf{b}=\mathbf{a b}^{\top}\]- where \(\mathbf{b}^{\top}\) denotes the transpose of \(\mathbf{b}\).

-

Expressing the above example in this way, a \(1 \times 3\) matrix (row vector) is multiplied by a \(3 \times 1\) matrix (column vector) to get a \(1 \times 1\) matrix that is identified with its unique entry:

\[\left[\begin{array}{lll} 1 & 3 & -5 \end{array}\right]\left[\begin{array}{c} 4 \\ -2 \\ -1 \end{array}\right]=3\] -

Key takeaway:

- In summary, to get the dot product of two vectors, multiply their corresponding elements, then add the results. For a visual example of calculating the dot product for two vectors, check out the figure below.

Geometric Definition

-

In Euclidean space, a Euclidean vector is a geometric object that possesses both a magnitude and a direction. A vector can be pictured as an arrow. Its magnitude is its length, and its direction is the direction to which the arrow points. The magnitude of a vector a is denoted by \(\mid \mid a \mid \mid\). The dot product of two Euclidean vectors \(\mathbf{a}\) and \(\mathbf{b}\) is defined by,

\[\mathbf{a} \cdot \mathbf{b}=\mid \mathbf{a}\mid \mid \mathbf{b}\mid \cos \theta\]- where \(\theta\) is the angle between \(\mathbf{a}\) and \(\mathbf{b}\).

-

The above equation establishes the relation between dot product and cosine similarity.

Properties of the dot product

-

Dot products are especially useful when we’re working with our one-hot word representations owing to it’s properties, some of which are highlighted below.

-

The dot product of any one-hot vector with itself is one.

- The dot product of any one-hot vector with another one-hot vector is zero.

- The previous two examples show how dot products can be used to measure similarity. As another example, consider a vector of values that represents a combination of words with varying weights. A one-hot encoded word can be compared against it with the dot product to show how strongly that word is represented. The following figure shows how a similarity score between two vectors is calculated by way of calculating the dot product.

Matrix Multiplication as a Series of Dot Products

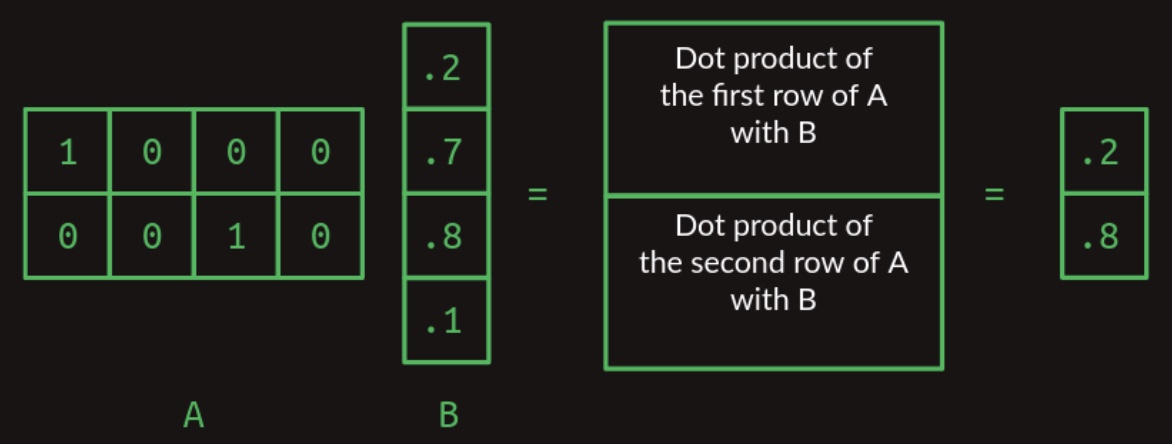

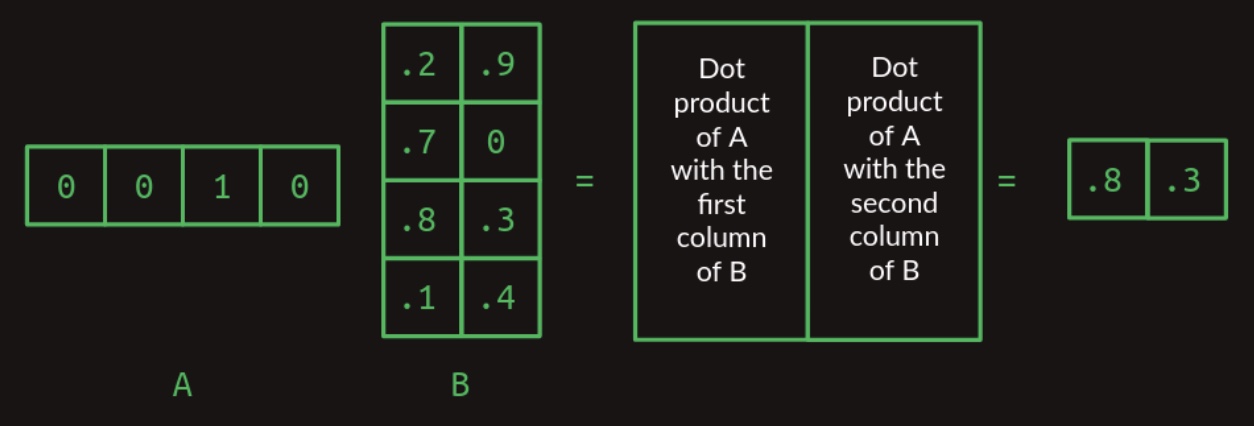

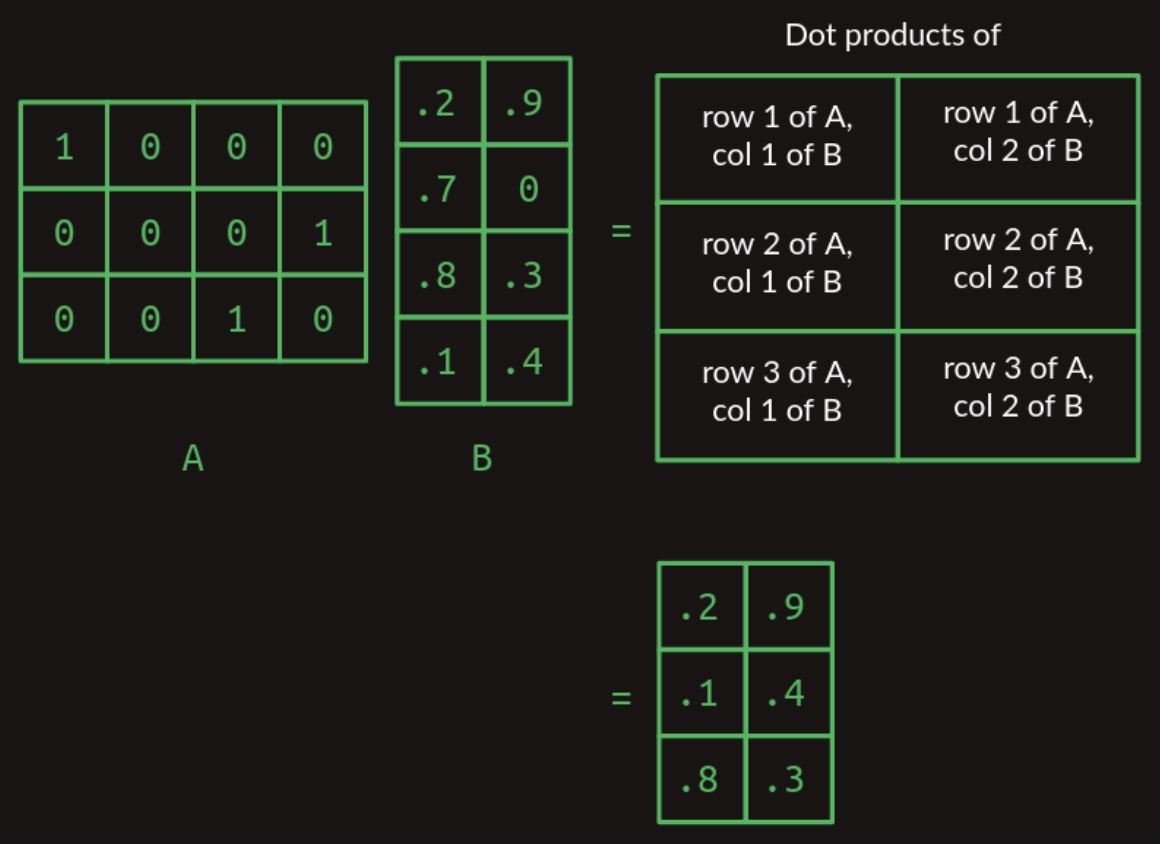

- The dot product constitutes the fundamental operation underlying matrix multiplication, which is a highly structured and well-defined procedure for combining two two-dimensional arrays (matrices). Let us denote the first matrix by \(A\) and the second by \(B\). In the most elementary scenario, where \(A\) consists of a single row and \(B\) consists of a single column, the matrix multiplication reduces to the dot product of these two vectors. This is illustrated in the figure below:

-

Observe that for this operation to be well-defined, the number of columns in matrix \(A\) must be equal to the number of rows in matrix \(B\). This dimensional compatibility is a prerequisite for the dot product to be computable.

-

As the dimensions of matrices \(A\) and \(B\) increase, the computational complexity of matrix multiplication grows accordingly—specifically, in a quadratic manner with respect to the matrix dimensions. When matrix \(A\) contains multiple rows, the multiplication proceeds by computing the dot product between each row of \(A\) and the entire matrix \(B\). Each such operation produces a single scalar value, and the collection of these values forms a resulting matrix with the same number of rows as \(A\). This process is depicted in the following figure, which shows the multiplication of a two-row matrix and a single-column matrix:

- If matrix \(B\) possesses more than one column, the operation is generalized by taking the dot product of each row in \(A\) with each column in \(B\). The outcome of each row-column dot product populates the corresponding cell in the resultant matrix. The figure below demonstrates the multiplication of a one-row matrix with a two-column matrix:

- Building on these principles, we can now define the general case of matrix multiplication for two arbitrary matrices, provided that the number of columns in matrix \(A\) equals the number of rows in matrix \(B\). The resultant matrix will have a shape defined by the number of rows in \(A\) and the number of columns in \(B\). This general case is visualized in the figure below, which illustrates the multiplication of a one-by-three matrix with a two-column matrix:

Matrix Multiplication as a Table Lookup

-

In the preceding section, we examined how matrix multiplication can function as a form of table lookup.

-

Consider a matrix \(A\) composed of a stack of one-hot encoded vectors. For the sake of illustration, suppose these vectors have non-zero entries (i.e., ones) located in the first column, fourth column, and third column, respectively. During matrix multiplication with another matrix \(B\), these one-hot vectors act as selection mechanisms that extract the corresponding rows—specifically, the first, fourth, and third rows—from matrix \(B\), in that order.

-

This method of employing a one-hot vector to selectively retrieve a specific row from a matrix lies at the conceptual foundation of the Transformer architecture. It enables discrete, deterministic access to embedding representations or other learned vector structures by treating the multiplication as a row-indexing operation.

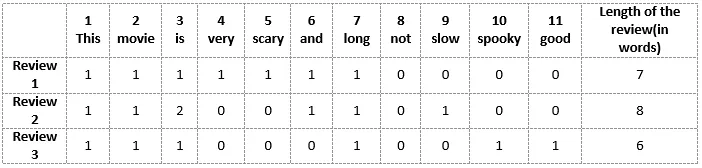

First-Order Sequence Model

-

Let us momentarily set aside matrices and return our focus to sequences of words, which are the primary objects of interest in natural language processing.

-

Suppose we are developing a rudimentary natural language interface for a computer system, and initially, we aim to accommodate only three predefined command phrases:

Show me my directories please.

Show me my files please.

Show me my photos please.

- Given these sample utterances, our working vocabulary consists of the following seven distinct words:

{directories, files, me, my, photos, please, show}

-

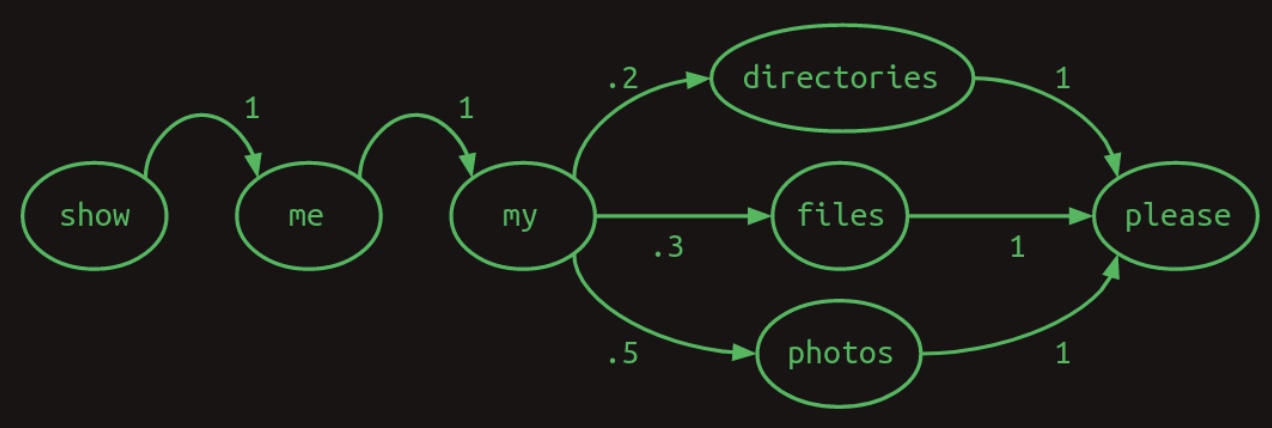

One effective way to represent such sequences is through the use of a transition model, which encapsulates the probabilistic dependencies between successive words. For each word in the vocabulary, the model estimates the likelihood of possible subsequent words. For instance, if users refer to photos 50% of the time, files 30% of the time, and directories 20% of the time following the word “my”, these probabilities define a distribution over transitions from “my”.

-

Importantly, the transition probabilities originating from any given word must collectively sum to one, reflecting a complete probability distribution over the vocabulary. The following diagram illustrates this concept in the form of a Markov chain:

-

This specific type of transition model is referred to as a Markov chain, as it satisfies the Markov property: the probability of transitioning to the next word depends only on a limited number of prior states. More precisely, this is a first-order Markov model, meaning that the next word is conditioned only on the immediately preceding word. If the model instead considered the two most recent words, it would be categorized as a second-order Markov model.

-

We now return to matrices, which offer a convenient and compact representation of such probabilistic transition systems. The Markov chain can be encoded as a transition matrix, where each row and column corresponds to a unique word in the vocabulary, indexed identically to their respective positions in the one-hot encoding.

-

The transition matrix can thus be interpreted as a lookup table. Each row represents a starting word, and the values in that row’s columns indicate the probabilities of each word in the vocabulary occurring next. Because these values represent probabilities, they all lie in the interval \([0, 1]\), and the entries in each row collectively sum to 1.

-

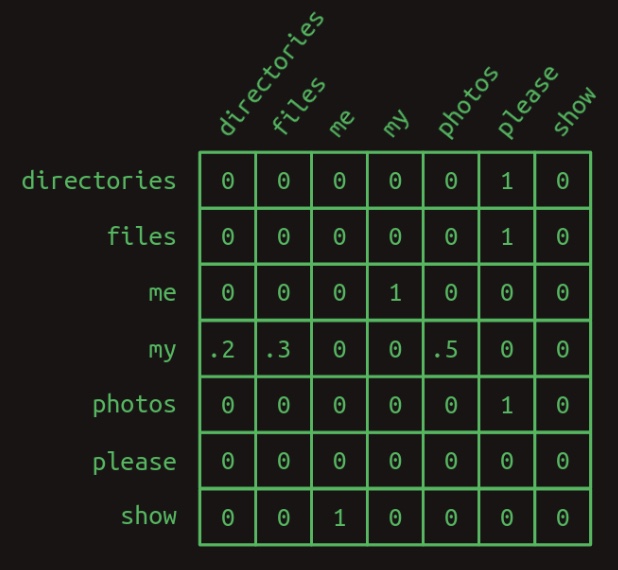

The diagram below, adapted from Brandon Rohrer’s “Transformers From Scratch”, illustrates such a transition matrix:

-

Within this matrix, the structure of the three example sentences is clearly discernible. The vast majority of the transition probabilities are binary (i.e., either 0 or 1), indicating deterministic transitions. The only point of stochasticity arises after the word “my,” where the model branches probabilistically to either “directories,” “files,” or “photos.” Outside of this branching, the sequence progression is entirely deterministic, and this is reflected by the predominance of ones and zeros in the matrix.

-

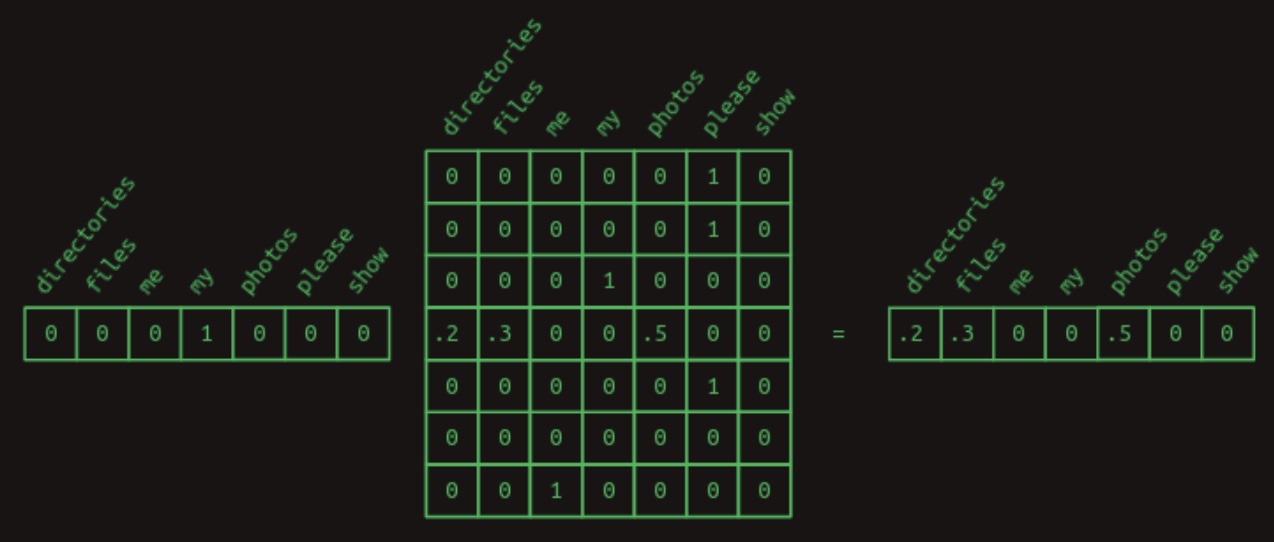

We now revisit the earlier technique of matrix-vector multiplication for efficient retrieval. Specifically, we can multiply a one-hot vector—representing a given word—with the transition matrix to extract the associated row, which contains the conditional probability distribution for the next word. For example, to determine the distribution over words that follow “my,” we construct a one-hot vector for “my” and multiply it with the transition matrix. This operation retrieves the relevant row and thus reveals the desired transition probabilities.

-

The following figure, also from Brandon Rohrer’s “Transformers From Scratch”, visualizes this operation:

Second-Order Sequence Model

-

Predicting the next word in a sequence based solely on the current word is inherently limited. It is akin to attempting to predict the remainder of a musical composition after hearing only the initial note. The likelihood of accurate prediction improves significantly when at least two preceding words are taken into account.

-

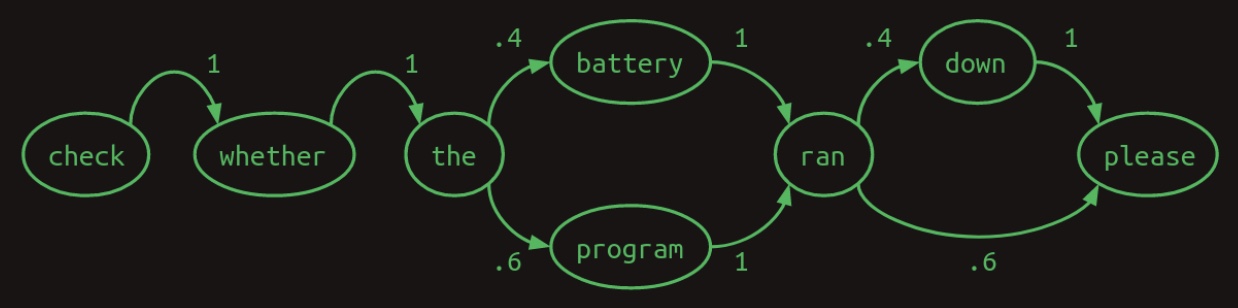

This improvement is demonstrated using a simplified language model tailored for basic computer commands. Suppose the model is trained to recognize only the following two sentences, occurring in a \(\frac{40}{60}\) ratio, respectively:

Check whether the battery ran down please.

Check whether the program ran please.

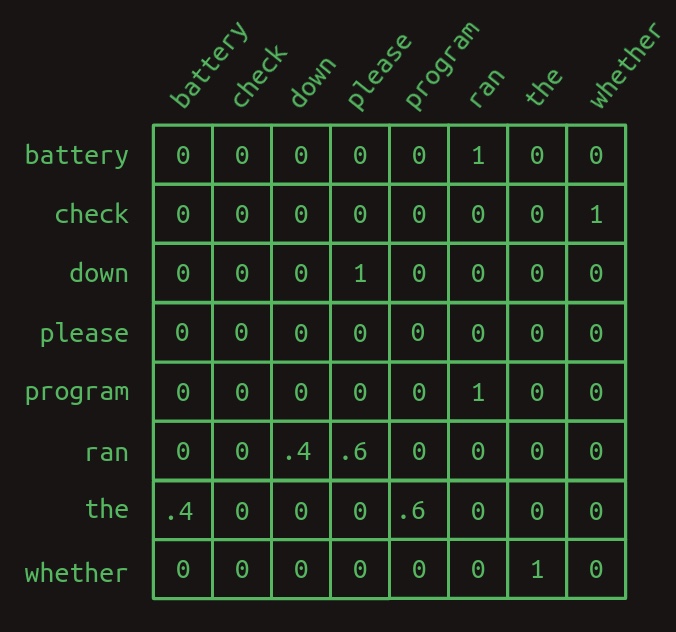

- A first-order Markov chain—where the next word depends only on the immediately preceding word—can model this system. The diagram below, sourced from Brandon Rohrer’s Transformers From Scratch, illustrates the first-order transition structure:

-

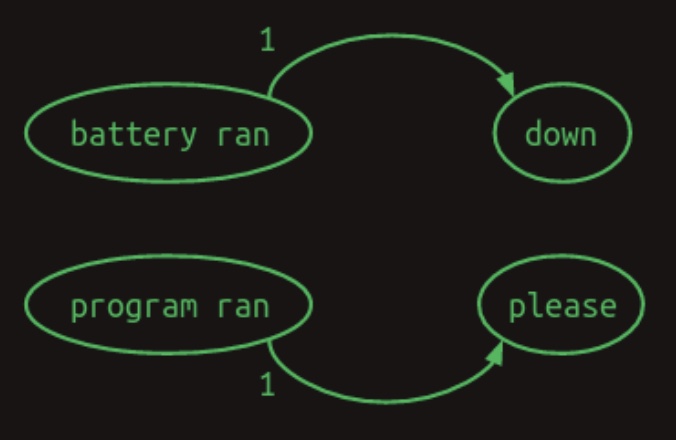

However, this model exhibits limitations. If the model considers not just one but the two most recent words, its predictive accuracy improves. For instance, when it encounters the phrase

battery ran, it can confidently predict that the next word isdown. Conversely,program ranleads unambiguously toplease. Incorporating the second-most-recent word eliminates branching ambiguity, reduces uncertainty, and enhances model confidence. -

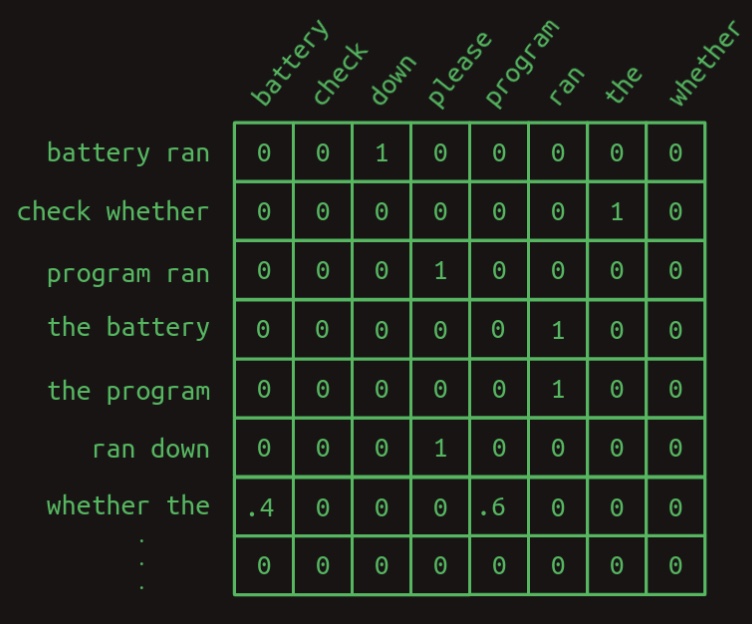

Such a system is known as a second-order Markov model, as it uses two previous states (words) to predict the next. While second-order chains are more difficult to visualize, the underlying connections offer greater predictive power. The diagram below, again from Brandon Rohrer’s Transformers From Scratch, illustrates this structure:

-

To emphasize the contrast, consider the following two transition matrices:

- First-order transition matrix:

- Second-order transition matrix:

-

In the second-order matrix, each row corresponds to a unique combination of two words, representing context for predicting the next word. Consequently, with a vocabulary size of \(N\), the matrix will contain \(N^2\) rows.

-

The advantage of this structure is increased certainty. The second-order matrix contains more entries with a value of 1 and fewer fractional probabilities, indicating a more deterministic model. Only a single row contains fractional values—highlighting the only point of uncertainty in the model. Intuitively, incorporating two words rather than one provides additional context, thereby enhancing the reliability of next-word predictions.

Second-Order Sequence Model with Skips

- A second-order model is effective when the word immediately following depends primarily on the two most recent words. However, complications arise when longer-range dependencies are necessary. Consider the following pair of equally likely sentences:

Check the program log and find out whether it ran please.

Check the battery log and find out whether it ran down please.

-

In this case, to accurately predict the word following

ran, one would need to reference context extending up to eight words into the past. One potential solution is to adopt a higher-order Markov model, such as a third-, fourth-, or even eighth-order model. However, this approach becomes computationally intractable: a naive implementation of an eighth-order model would necessitate a transition matrix with \(N^8\) rows, which is prohibitively large for realistic vocabulary sizes. -

An alternative strategy is to preserve a second-order model while allowing for non-contiguous dependencies. Specifically, the model considers the combination of the most recent word with any previously seen word in the sequence. Although each prediction still relies on just two words, the approach enables the model to capture long-range dependencies.

-

This technique, often termed second-order with skips, differs from full higher-order models in that it disregards much of the sequential ordering and only retains select pairwise interactions. Nevertheless, it remains effective for sequence modeling in many practical cases.

-

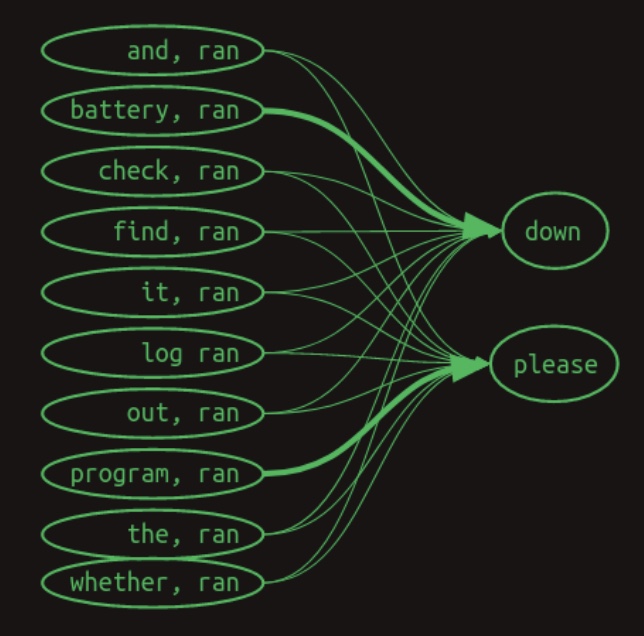

At this point, classical Markov chains are no longer applicable. Instead, the model tracks associative links between earlier words and subsequent words, regardless of strict temporal adjacency. The diagram below from Brandon Rohrer’s Transformers From Scratch visualizes these interactions using directional arrows. Numeric weights are omitted; instead, line thickness indicates the strength of association:

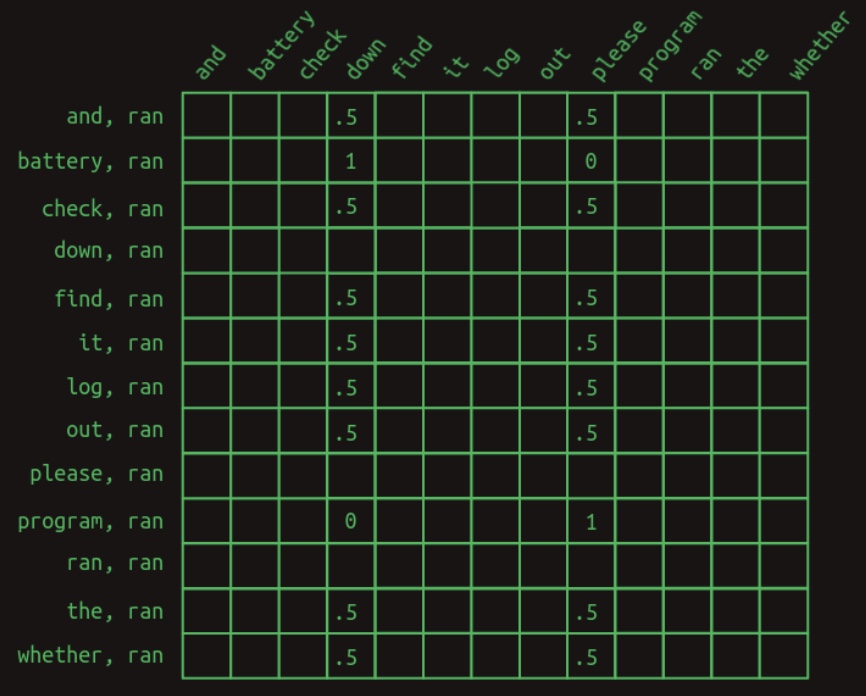

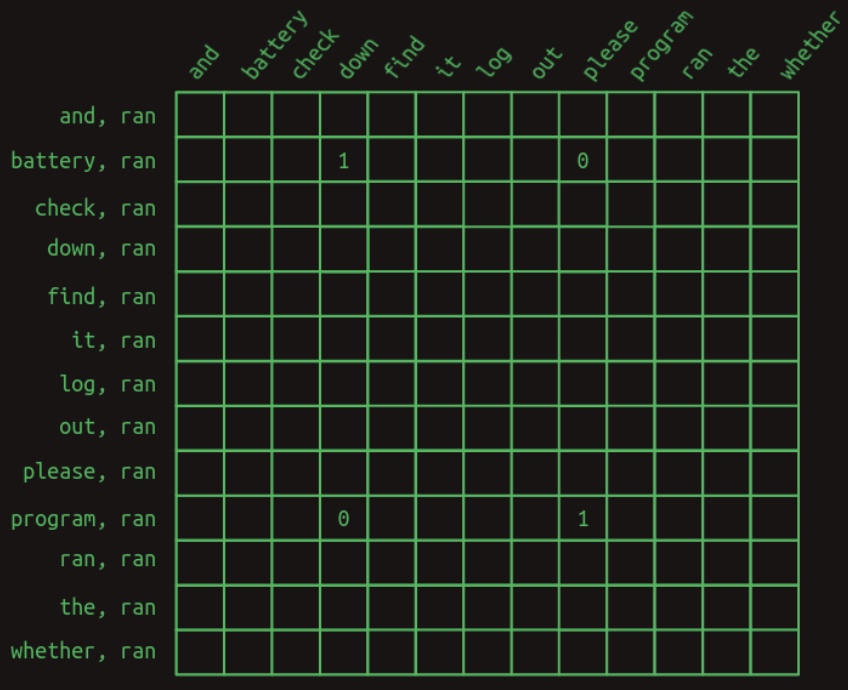

- The corresponding transition matrix for this second-order-with-skips model is shown below:

-

This matrix view is restricted to the rows pertinent to predicting the word that follows

ran. Each row corresponds to a pair consisting ofranand another word in the vocabulary. Only non-zero entries are shown; cells not displayed are implicitly zero. -

The first key insight is that, in this model, prediction is based not on a single row but on a collection of rows—each representing a feature defined by a specific word pair. Consequently, we move beyond traditional Markov chains. Rows no longer represent the complete state of a sequence, but instead denote individual contextual features active at a specific moment.

-

As a result of this shift, each value in the matrix is no longer interpreted as a probability, but rather as a vote. When predicting the next word, votes from all active features are aggregated, and the word receiving the highest cumulative score is selected.

-

The second key observation is that most features have little discriminatory power. Since the majority of words appear in both sentences, their presence does not help disambiguate what comes after

ran. These features contribute uniformly with a value of 0.5, offering no directional influence. -

The only features with predictive utility in this example are

battery, ranandprogram, ran. The featurebattery, ranimplies thatranis the most recent word andbatteryoccurred earlier. This feature assigns a vote of 1 todownand 0 toplease. Conversely,program, ranassigns the inverse: a vote of 1 topleaseand 0 todown. -

To generate a next-word prediction, the model sums all applicable feature values column-wise. For instance:

- In the sequence

Check the program log and find out whether it ran, the cumulative votes are 0 for most words, 4 fordown, and 5 forplease. - In the sequence

Check the battery log and find out whether it ran, the votes are reversed: 5 fordownand 4 forplease.

- In the sequence

-

By selecting the word with the highest vote total, the model makes the correct next-word prediction—even when the relevant information is located eight words earlier. This highlights the utility and efficiency of feature-based second-order-with-skips models in capturing long-range dependencies without incurring the exponential complexity of full higher-order Markov models.

Masking Features

-

Upon closer examination, the predictive difference between vote totals of 4 and 5 is relatively minor. Such a narrow margin indicates that the model lacks strong confidence in its prediction. In larger and more naturalistic language models, these subtle distinctions are likely to be obscured by statistical noise, potentially leading to inaccurate or unstable predictions.

-

One effective strategy to sharpen predictions is to eliminate the influence of uninformative features. In the given example, only two features—

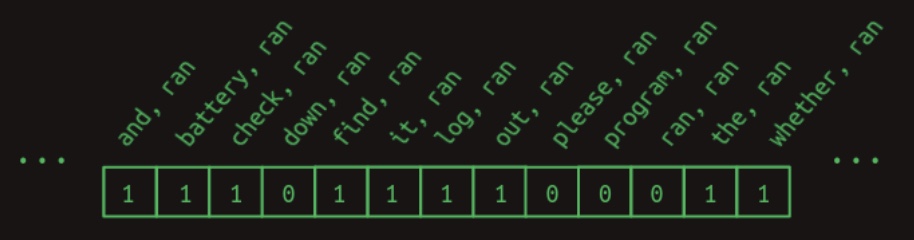

battery, ranandprogram, ran—meaningfully contribute to next-word prediction. It is instructive at this point to recall that relevant rows are extracted from the transition matrix via a dot product between the matrix and a feature activity vector, which encodes the features currently active. For this scenario, the implicitly used feature vector is visualized in the following diagram from Brandon Rohrer’s Transformers From Scratch:

-

This vector includes an entry with the value 1 for each feature formed by pairing

ranwith each preceding word in the sentence. Notably, words that occur afterranare excluded, as in the next-word prediction task these words remain unseen at prediction time and therefore must not influence the outcome. Moreover, combinations that do not arise in the example context are safely assumed to yield zero values and can be ignored without loss of generality. -

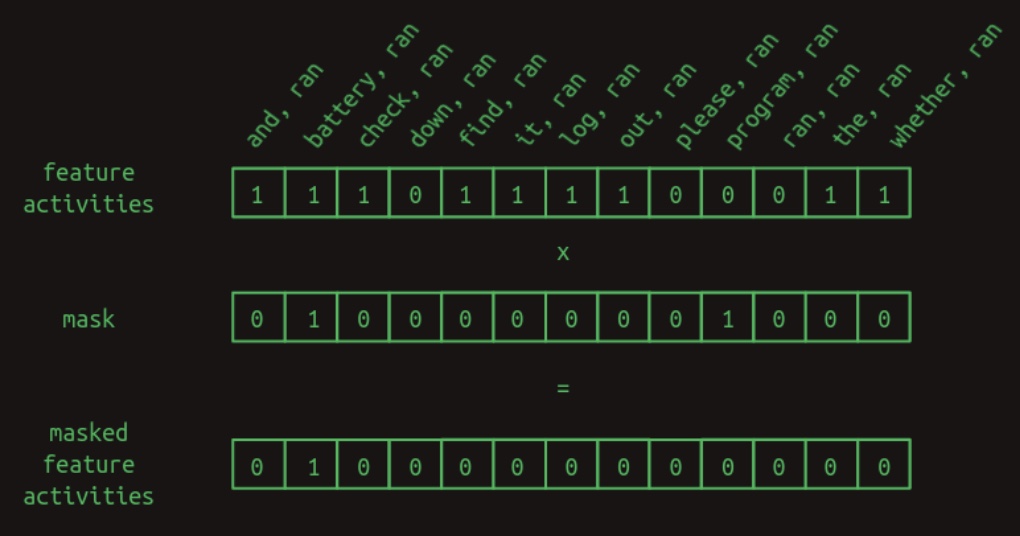

To enhance model precision further, we can introduce a masking mechanism that explicitly nullifies unhelpful features. A mask is defined as a binary vector, populated with ones at positions corresponding to features we wish to retain, and zeros at positions to be suppressed or ignored. In this case, we wish to retain only

battery, ranandprogram, ran, the features that empirically prove to be informative. The masked feature vector is illustrated in the diagram below, also from Brandon Rohrer’s Transformers From Scratch:

-

The mask is applied to the original feature activity vector via element-wise multiplication. For any feature retained by the mask (i.e., mask value of 1), its corresponding activity remains unchanged. Conversely, features masked out (i.e., mask value of 0) are forcibly zeroed out, regardless of their original value.

-

The practical effect of the mask is that large portions of the transition matrix are suppressed. All feature combinations of

ranwith any word other thanbatteryorprogramare effectively removed from consideration. The resultant masked transition matrix is shown below:

-

Once uninformative features are masked out, the model’s predictive power becomes significantly stronger. For instance, when the word

batteryappears earlier in the sequence, the model now assigns a probability weight of 1 todownand 0 topleasefor the next word followingran. What was previously a 25% difference in weighting has now become an unambiguous selection, or informally, an “infinite percent” improvement in certainty. A similar confidence gain is observed when the wordprogramappears earlier, resulting in a decisive preference forplease. -

This process of selective masking is a core conceptual component of the attention mechanism, as referenced in the title of the original Transformer paper. While the simplified mechanism described here provides an intuitive foundation, the actual implementation of attention in Transformers is more sophisticated. For a comprehensive treatment, refer to the original paper.

Generally speaking, an attention function determines the relative importance (or “weight”) of different input elements in producing an output representation. In the specific case of scaled dot-product attention, which the Transformer architecture employs, the mechanism adopts the query-key-value paradigm from information retrieval. An attention function performs a mapping from a query and a set of key-value pairs to a single output. This output is computed as a weighted sum of the values, where each weight is derived from a compatibility function—also known as an alignment function, as introduced in Bahdanau et al. (2014)—which measures the similarity between the query and each key.

- This overview introduces the fundamental principles of attention. The specific computational details and extensions, including multi-head attention and positional encoding, are addressed in the dedicated section on Attention.

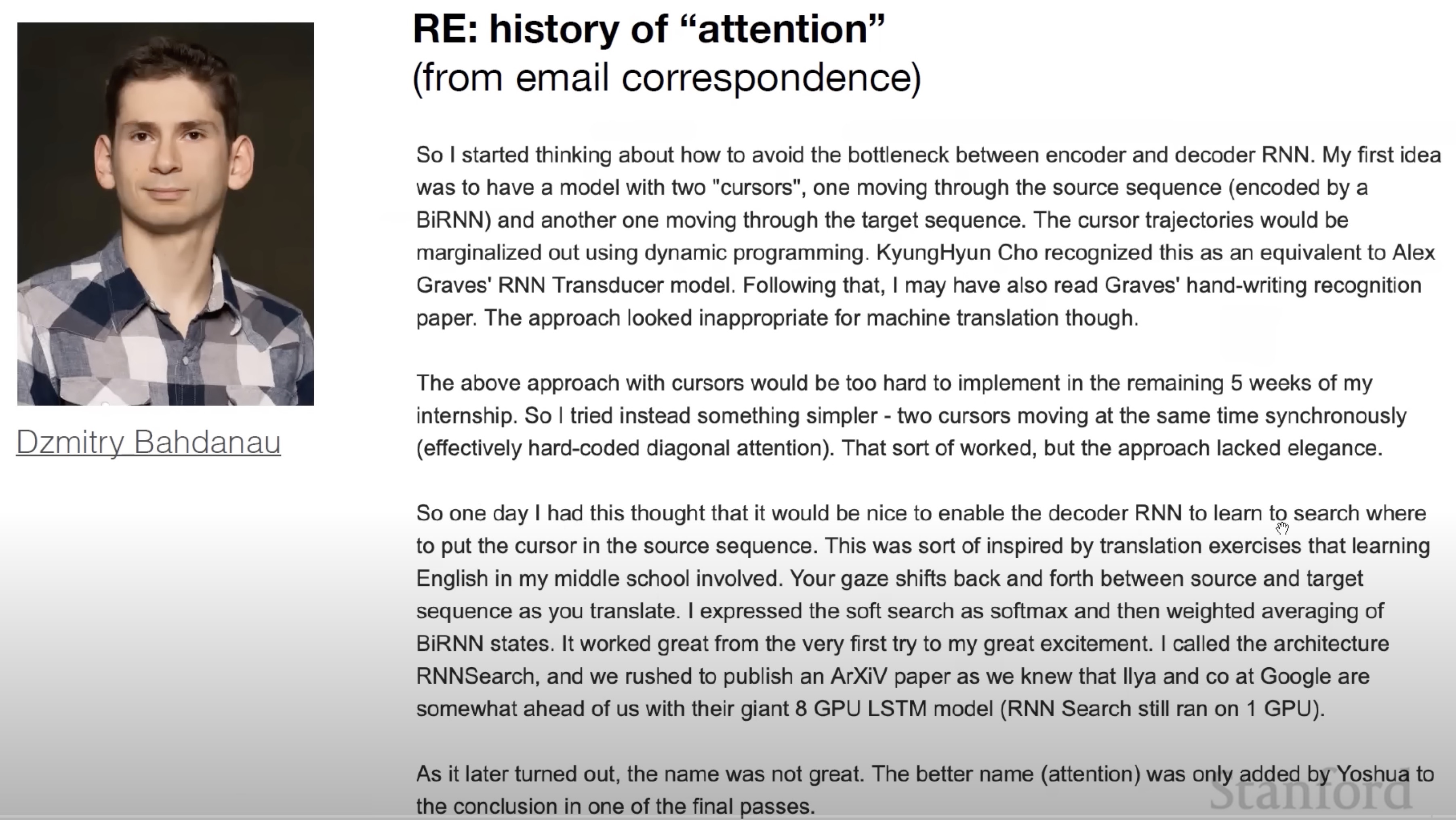

Origins of attention

- As mentioned above, the attention mechanism originally introduced in Bahdanau et al. (2015) served as a foundation upon which the self-attention mechanism in the Transformer paper was based on.

- The following slide from Stanford’s CS25 course shows how the attention mechanism was conceived and is a perfect illustration of why AI/ML is an empirical field, built on intuition.

From Feature Vectors to Transformers

-

The selective-second-order-with-skips model provides a valuable conceptual framework for understanding the operations of Transformer-based architectures, particularly on the decoder side. It serves as a reasonable first-order approximation of the underlying mechanics in generative language models such as OpenAI’s GPT-3. Although it does not fully encompass the complexity of Transformer models, it encapsulates the core intuition that drives them.

-

The subsequent sections aim to bridge the gap between this high-level conceptualization and the actual computational implementations of Transformers. The evolution from intuition to implementation is primarily shaped by three key practical considerations:

-

Computational efficiency of matrix multiplications Modern computers are exceptionally optimized for performing matrix multiplications. In fact, an entire industry has emerged around designing hardware tailored for this specific operation. Central Processing Units (CPUs) handle matrix multiplications effectively due to their ability to leverage multi-threading. Graphics Processing Units (GPUs), however, are even more efficient, as they contain hundreds or thousands of dedicated cores optimized for highly parallelized computations. Consequently, any algorithm or computation that can be reformulated as a matrix multiplication can be executed with remarkable speed and efficiency. This efficiency has led to the analogy: matrix multiplication is like a bullet train—if your data (or “baggage”) can be expressed in its format, it will reach its destination extremely quickly.

-

Differentiability of every computational step Thus far, our examples have involved manually defined transition probabilities and masking patterns—effectively, manually specified model parameters. In practical settings, however, these parameters must be learned from data using the process of backpropagation. For backpropagation to function, each computational operation in the network must be differentiable. This means that any infinitesimal change in a parameter must yield a corresponding, computable change in the model’s loss function—the measure of error between predictions and target outputs.

-

Gradient smoothness and conditioning The loss gradient, which comprises the set of all partial derivatives with respect to the model’s parameters, must exhibit smoothness and favorable conditioning to ensure effective optimization. A smooth gradient implies that small parameter updates result in proportionally small and consistent changes in loss—facilitating stable convergence. A well-conditioned gradient further ensures that no direction in the parameter space dominates excessively over others. To illustrate: if the loss surface were analogous to a geographic landscape, then a well-conditioned loss would resemble gently rolling hills (as in the classic Windows screensaver), whereas a poorly conditioned loss would resemble the steep, asymmetrical cliffs of the Grand Canyon. In the latter case, optimization algorithms would struggle to find a consistent update direction due to varying gradients depending on orientation.

-

-

If we consider the science of neural network architecture to be about designing differentiable building blocks, then the art lies in composing these blocks such that the gradient is smooth and approximately uniform in all directions—ensuring robust training dynamics.

Attention as Matrix Multiplication

-

While it is relatively straightforward to assign feature weights by counting co-occurrences of word pairs and subsequent words during training, attention masks are not as trivially derived. Until now, mask vectors have been assumed or manually specified. However, within the Transformer architecture, the process of discovering relevant masks must be both automated and differentiable.

-

Although it might seem intuitive to use a lookup table for this purpose, the design imperative in Transformers is to express all major operations as matrix multiplications, for the reasons discussed above.

-

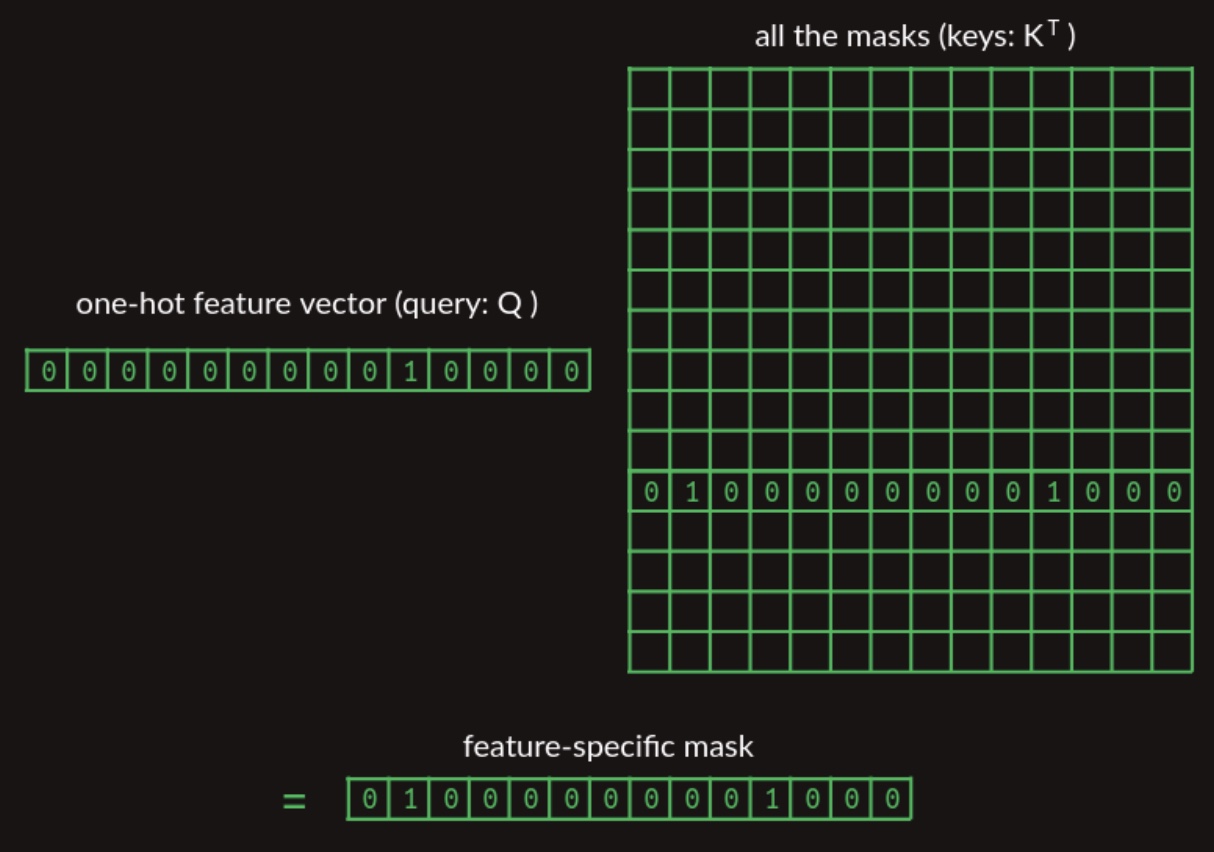

We can adapt the earlier lookup mechanism by aggregating all possible mask vectors into a matrix, and using the one-hot representation of the current word to extract the appropriate mask vector. This procedure is depicted in the diagram below:

-

For visual clarity, the diagram illustrates only the specific mask vector being accessed, though the full matrix contains one mask vector for each vocabulary entry.

-

This leads us into alignment with the formal Transformer architecture as described in the original paper. The mechanism for retrieving a relevant mask via matrix operations corresponds to the \(QK^T\) term in the attention equation, which is introduced in more detail in the section on Single Head Attention Revisited:

-

In this formulation:

- The matrix \(Q\) (queries) encodes the features we are currently focusing on.

- The matrix \(K\) (keys) stores the collection of masking vectors (or more broadly, content to be attended to).

- Since the keys are stored in columns, but queries are row vectors, the keys must be transposed (denoted by the \(T\) operator) to enable appropriate dot-product alignment.

-

The resulting dot product between the query and each key vector yields a compatibility score. This score is then scaled by \(\sqrt{d_k}\) (to stabilize gradients during training), and passed through a softmax function to convert it into a probability distribution over the values. Finally, this distribution is used to compute a weighted sum of the value vectors in \(V\).

-

While we will revisit and refine this formulation in upcoming sections, this abstraction already demonstrates the core idea: attention as differentiable lookup, implemented entirely through matrix operations.

-

Additional elaboration on this mechanism can be found in the section on Attention below.

Second-Order Sequence Model as Matrix Multiplications

-

One aspect we have thus far treated somewhat informally is the construction of transition matrices. While the logical structure and function of these matrices have been discussed, we have not yet fully articulated how to implement them using matrix multiplication, which is central to efficient neural network computation.

-

Once the attention step is complete, it produces a vector that represents the most recent word along with a small subset of previously encountered words. This attention output provides the raw material necessary for feature construction, but it does not directly generate the multi-word (word-pair) features required for downstream processing. To construct these features—combinations of the most recent word with one or more earlier words—we can employ a single-layer fully connected neural network.

-

To illustrate how such a neural network layer can perform this construction, we will design a hand-crafted example. While this example is intentionally stylized and its weight values do not reflect real-world training outcomes, it serves to demonstrate that a neural network possesses the expressive capacity required to form word-pair features. For clarity and conciseness, we will restrict the vocabulary to just three attended words:

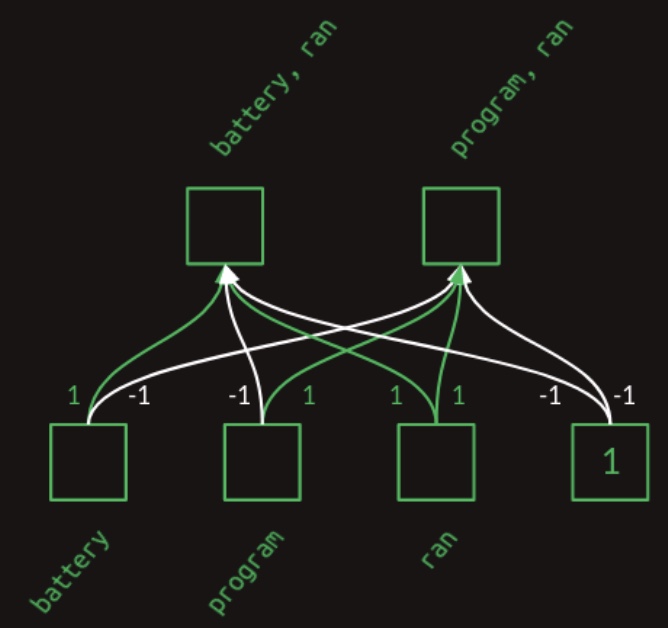

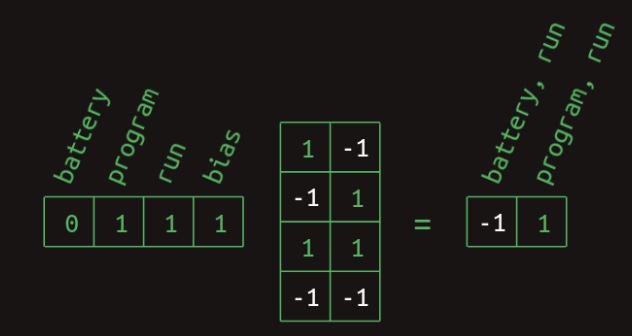

battery,program, andran. The following diagram from Brandon Rohrer’s Transformers From Scratch shows a neural network layer designed to generate multi-word features:

- The diagram illustrates how learned weights in the network can combine presence (indicated by a

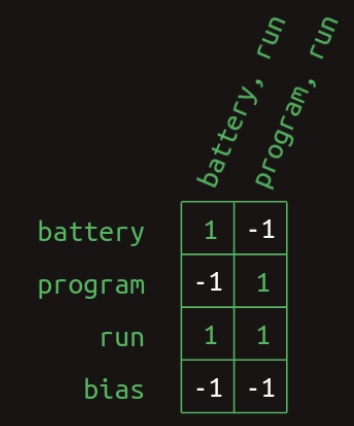

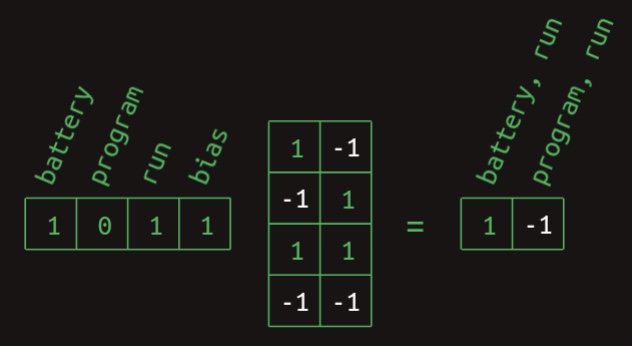

1) and absence (indicated by a0) of words to produce a set of feature activations. This same transformation can also be expressed in matrix form. The following image depicts the weight matrix corresponding to this feature generation layer:

- Feature activations are computed by multiplying this weight matrix by a vector representing the current word context—that is, the presence or absence of each relevant word seen so far. The next diagram, also from Rohrer’s primer, illustrates this computation for the feature

battery, ran:

- In this instance, the vector has ones in the positions corresponding to

batteryandran, a zero forprogram, and a bias input fixed at one (a standard element in neural networks to allow shifting the activation). The result of the matrix multiplication yields a1for thebattery, ranfeature and-1forprogram, ran. This demonstrates how specific combinations of input activations result in distinct feature detections. The computation forprogram, ranproceeds analogously, as shown here:

-

The final step in constructing these features involves applying a Rectified Linear Unit (ReLU) non-linearity. The ReLU function replaces any negative values with zero, effectively acting as a thresholding mechanism that retains only positive activations. This ensures that features are expressed in binary form—indicating presence with a

1and absence with a0. -

With these steps complete, we now have a matrix-multiplication-based procedure for generating multi-word features. Although we initially described these as consisting solely of the most recent word and one preceding word, a closer examination reveals that this method is more general. When the feature generation matrix is learned (rather than hard-coded), the model is capable of representing more complex structures, including:

- Three-word combinations, such as

battery, program, ran, if they occur frequently enough during training. - Co-occurrence patterns that ignore the most recent word, such as

battery, program.

- Three-word combinations, such as

-

Such capabilities reveal that the model is not strictly limited to a selective-second-order-with-skips formulation, as previously implied. Rather, the actual representational capacity of Transformers extends beyond this simplification, capturing more nuanced and flexible feature structures. This additional complexity illustrates that our earlier model was a useful abstraction, but not a complete one—and that abstraction will continue to evolve as we explore further layers of the architecture.

-

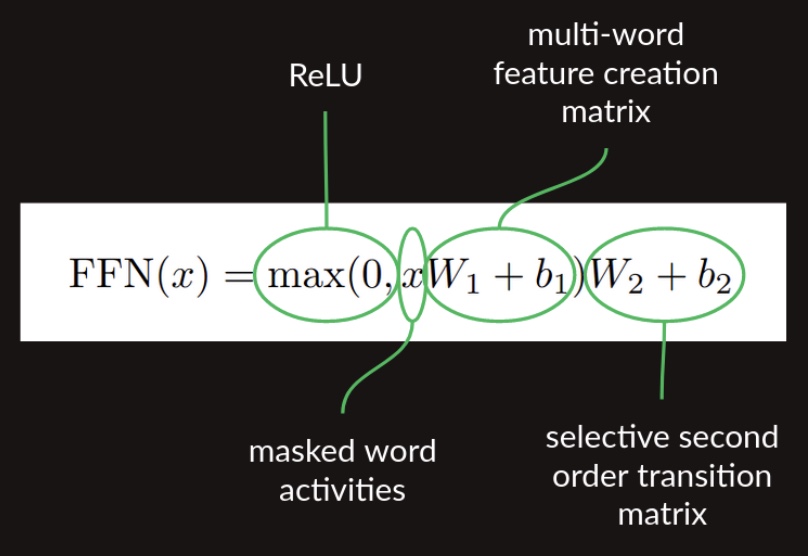

Once generated, the multi-word feature matrix is ready to undergo one final matrix multiplication: the application of the second-order sequence model with skips, as introduced earlier. Altogether, the following sequence of feed-forward operations is applied after the attention mechanism:

- Feature creation via matrix multiplication

- Application of ReLU non-linearity

- Transition matrix multiplication

-

These operations correspond to the feed forward block in the Transformer architecture. The following equation from the original paper expresses this process concisely in mathematical terms:

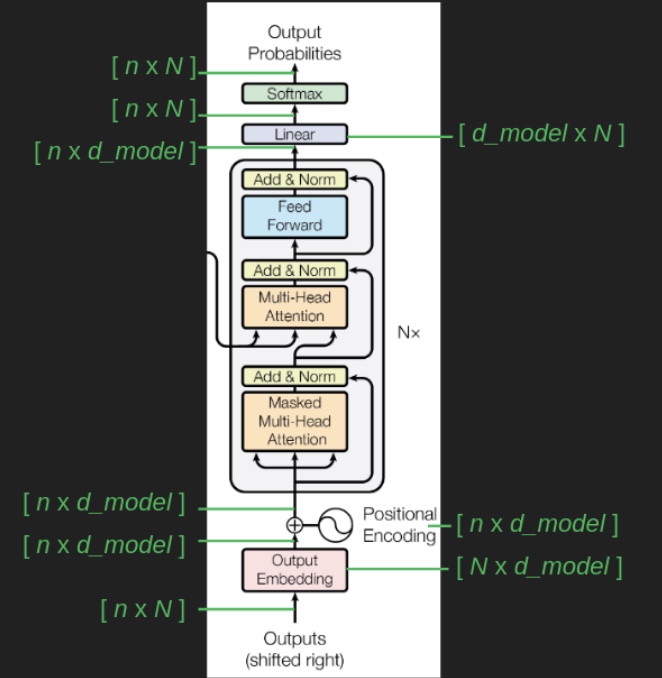

- In the architectural diagram below, also from the Transformer paper, these operations are grouped together under the label feed forward:

Sampling a Sequence of Output Words

Generating Words as a Probability Distribution over the Vocabulary

-

Up to this point, our discussion has focused primarily on the task of next-word prediction. To extend this into the generation of entire sequences, such as complete sentences or paragraphs, several additional components must be introduced. One critical element is the prompt—a segment of initial text that provides the Transformer with contextual information and a starting point for further generation. This prompt serves as an input to the decoder, which corresponds to the right-hand side of the model architecture (as labeled “Outputs (shifted right)” in conventional visualizations).

-

The selection and design of a prompt that elicits meaningful or interesting responses from the model is a specialized practice known as prompt engineering. This emerging field exemplifies a broader trend in artificial intelligence where human users adapt their inputs to support algorithmic behavior, rather than expecting models to adapt to arbitrary human instructions.

-

During sequence generation, the decoder is typically initialized with a special token such as

<START>, which acts as a signal to commence decoding. This token enables the decoder to begin leveraging the compressed representation of the source input, as derived from the encoder (explored further in the section on Cross-Attention). The following animation from Jay Alammar’s The Illustrated Transformer illustrates two key processes:- Parallel ingestion of tokens by the encoder, culminating in the construction of key and value matrices.

- The decoder generating its first output token (although the

<START>token itself is not shown in this particular animation).

-

Once the decoder receives an initial input—either a prompt or a start token—it performs a forward pass. The output of this pass is a sequence of predicted probability distributions, with one distribution corresponding to each token position in the output sequence.

-

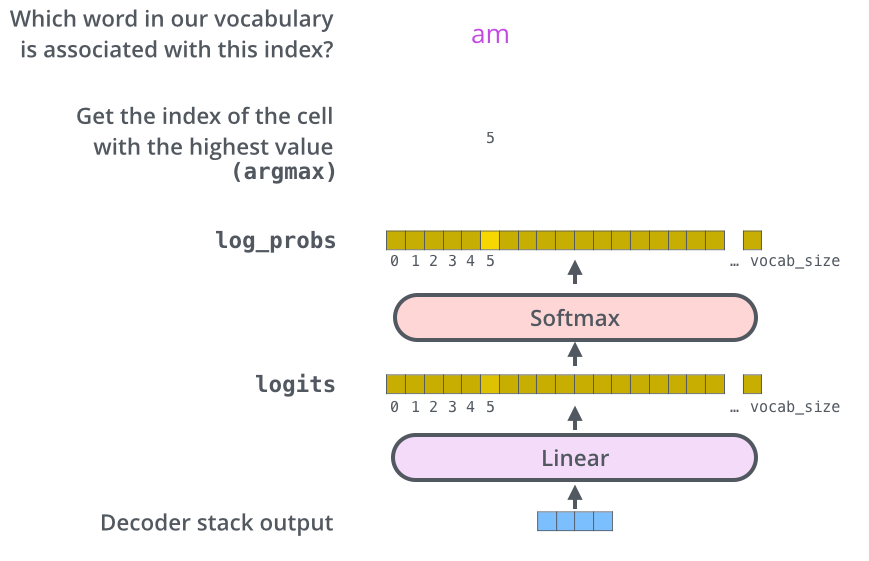

The process of translating internal model representations into discrete words involves several steps:

- The output vector from the decoder is passed through a linear transformation (a fully connected layer).

- The result is a high-dimensional vector of logits—unnormalized scores representing each word in the vocabulary.

- A softmax function converts these scores into a probability distribution.

- A final word is selected from this distribution (e.g., by choosing the most probable word).

-

This de-embedding pipeline is depicted in the following visualization from Jay Alammar’s The Illustrated Transformer:

Role of the Final Linear and Softmax Layers

-

The linear layer is a standard fully connected neural layer that projects the decoder’s output vector into a logits vector—a vector whose dimensionality equals the size of the model’s output vocabulary.

-

For context, a typical NLP model may recognize approximately 40,000 distinct English words. Consequently, the logits vector would be 40,000-dimensional, with each element representing the unnormalized score of a corresponding word in the vocabulary.

-

These raw scores are then processed by the softmax layer, which transforms them into a probability distribution over the vocabulary. This transformation enforces two key constraints:

- All output values are in the interval \([0, 1]\).

- The values collectively sum to 1.0, satisfying the conditions of a probability distribution.

-

At each decoding step, the probability distribution specifies the model’s predictions for all possible next words. However, we are primarily interested in the distribution’s output at the final position of the current sequence, since earlier tokens are already known and fixed.

-

The word corresponding to the highest probability in the distribution is selected as the next token (further elaborated in the section on Greedy Decoding).

Greedy Decoding

-

Several strategies exist for selecting the next word from the predicted probability distribution. The most straightforward among them is greedy decoding, which involves choosing the word with the maximum probability at each step.

-

After selecting this word, it is appended to the input sequence and the updated sequence is re-fed into the decoder. This process repeats auto-regressively, generating one token at a time until a stopping criterion is met—typically, the generation of an

<EOS>(end-of-sequence) token or the production of a predefined number of tokens. -

The animation below from Jay Alammar’s The Illustrated Transformer demonstrates how the decoder recursively generates output tokens by ingesting previously generated tokens:

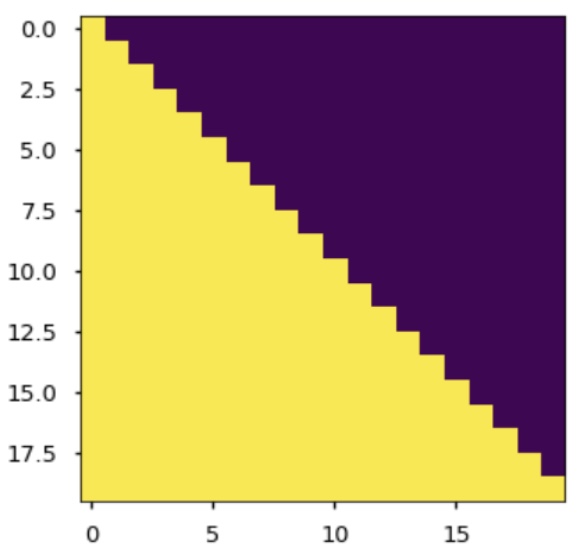

- One additional mechanism relevant to decoding—but not yet detailed—is the use of a specialized masking strategy to ensure that the model only attends to past tokens and not future ones. This constraint enforces causality in the generation process and is implemented via masked multi-head attention. The specifics of this masking mechanism are addressed later in the section on Single Head Attention Revisited.

Transformer Core

Embeddings

-

As described thus far, a naïve representation of the Transformer architecture quickly becomes computationally intractable. For example, with a vocabulary size \(N = 50{,}000\), a transition matrix encoding probabilities between all possible input word pairs and their corresponding next words would require a matrix with 50,000 columns and \(50{,}000^2 = 2.5 \times 10^9\) rows—amounting to over 100 trillion parameters. Such a configuration is impractically large, even given the capabilities of modern hardware accelerators.

-

The computational burden is not solely due to the matrix size. Constructing a stable and robust transition-based language model would necessitate a training corpus that illustrates every conceivable word sequence multiple times. This requirement would far exceed the size and diversity of even the most extensive language datasets.

-

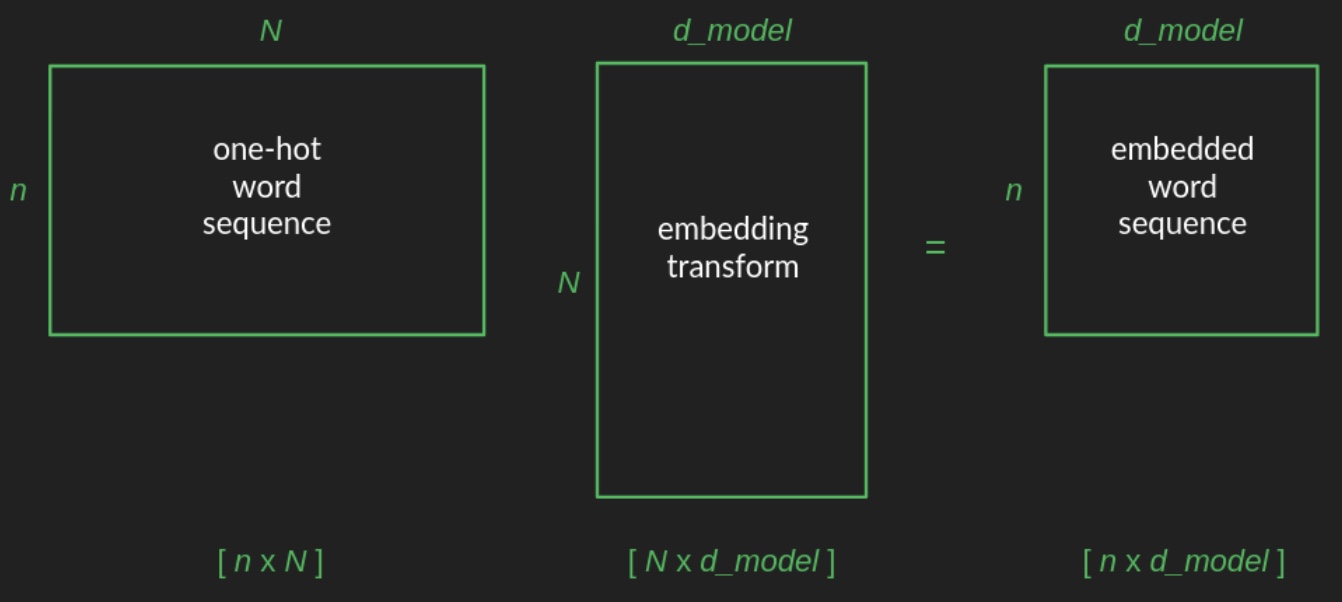

Fortunately, these challenges are addressed through the use of embeddings.

-

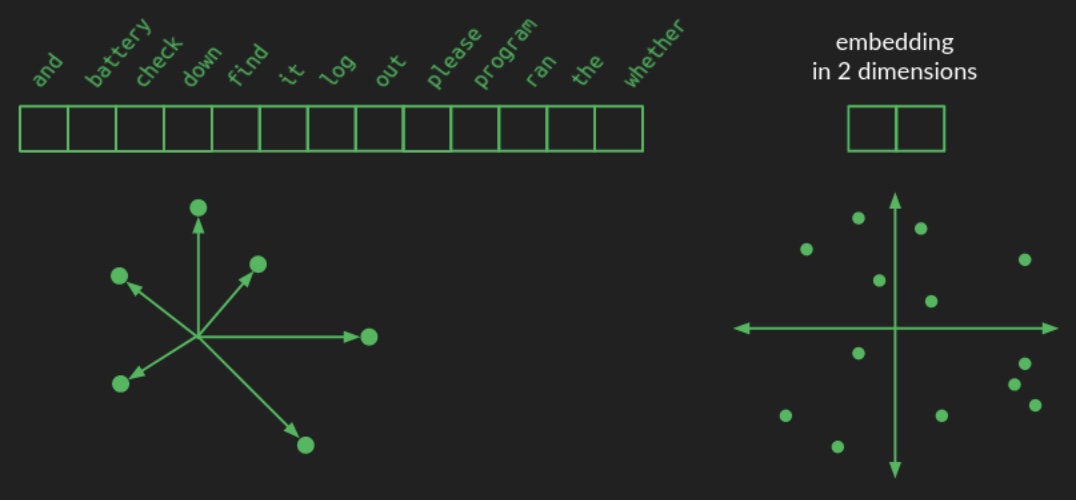

In a one-hot encoding scheme, each word in the vocabulary is represented as a vector of length \(N\), with all elements set to zero except for a single

1in the position corresponding to the word. Consequently, this representation lies in an \(N\)-dimensional space, where each word occupies a unique position one unit away from the origin along one axis. A simplified visualization of such a high-dimensional structure is provided below:

- By contrast, an embedding maps each word from this high-dimensional space into a lower-dimensional continuous space. In the language of linear algebra, this operation is known as projection. The image above illustrates how words might be projected into a two-dimensional space for illustrative purposes. Instead of needing \(N\) elements to represent each word, only two numbers—\((x, y)\) coordinates—are needed. A hypothetical 2D embedding for a small vocabulary is shown below, along with coordinates for some sample words:

-

A well-constructed embedding clusters semantically or functionally similar words near one another in this reduced space. Consequently, models trained in the embedding space learn generalized patterns that can be applied across groups of related words. For instance, if the model learns a transformation applicable to one word, that knowledge implicitly extends to all neighboring words in the embedded space. This property not only reduces the total number of parameters required but also significantly decreases the amount of training data needed to achieve generalization.

-

The illustration highlights how meaningful groupings may emerge: domain-specific nouns such as

battery,log, andprogrammay cluster in one region; prepositions likedownandoutin another; and verbs such ascheck,find, andranmay lie closer to the center. Although actual embeddings are generally more abstract and less visually interpretable, the core principle holds: semantic similarity corresponds to spatial proximity in the embedding space. -

Embeddings enable a drastic reduction in the number of trainable parameters. However, reducing dimensionality comes with a trade-off: semantic fidelity may be lost if too few dimensions are used. Rich linguistic structures and nuanced relationships require adequate space for distinct concepts to remain non-overlapping. Thus, the choice of embedding dimensionality reflects a compromise between computational efficiency and model expressiveness.

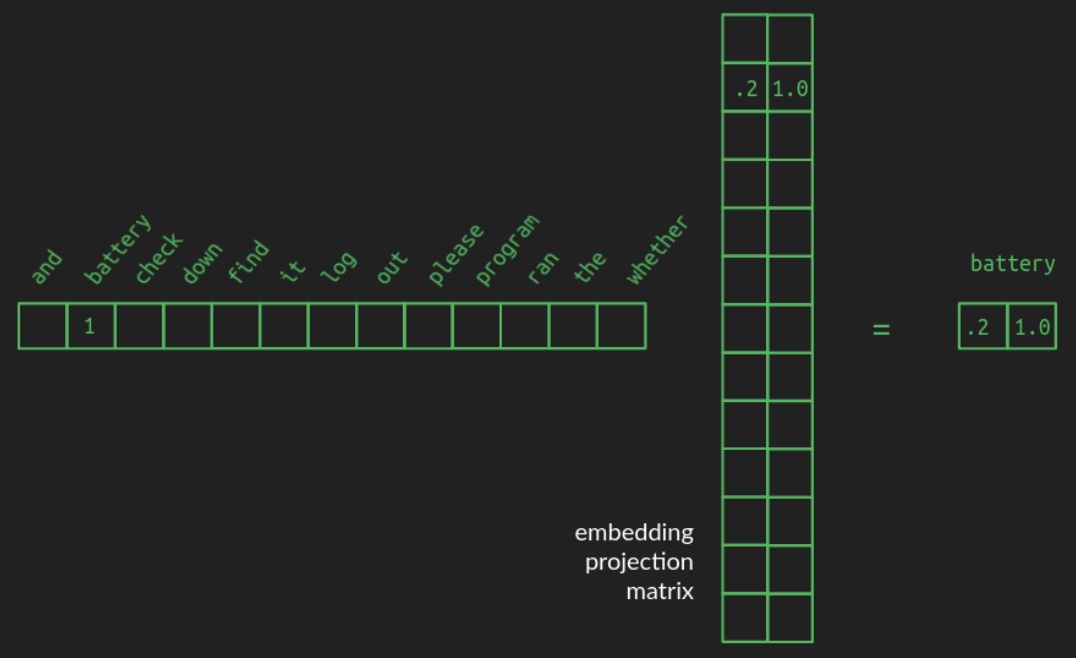

-

The transformation from a one-hot vector to its corresponding position in the embedded space is implemented as a matrix multiplication—a foundational operation in linear algebra and neural network design. Specifically, starting from a one-hot vector of shape \(1 \times N\), the word is projected into a space of dimension \(d\) (e.g., \(d = 2\)) using a projection matrix of shape \(N \times d\). The following diagram from Brandon Rohrer’s Transformers From Scratch illustrates such a projection matrix:

-

In the example, a one-hot vector representing the word

batteryselects the corresponding row in the projection matrix. This row contains the coordinates ofbatteryin the lower-dimensional space. For clarity, all other zeros in the one-hot vector and unrelated rows of the projection matrix are omitted in the diagram. In practice, however, the projection matrix is dense, with each row encoding a learned vector representation for its associated vocabulary word. -

Projection matrices can transform the original collection of one-hot vectors into arbitrary configurations in any target dimensionality. The core challenge lies in learning a useful projection—one that clusters related words and separates unrelated ones sufficiently. High-quality pre-trained embeddings (e.g., Word2Vec, GloVe) are available for many common languages. Nevertheless, in Transformer models, these embeddings are typically learned jointly during training, allowing them to adapt dynamically to the task at hand.

-

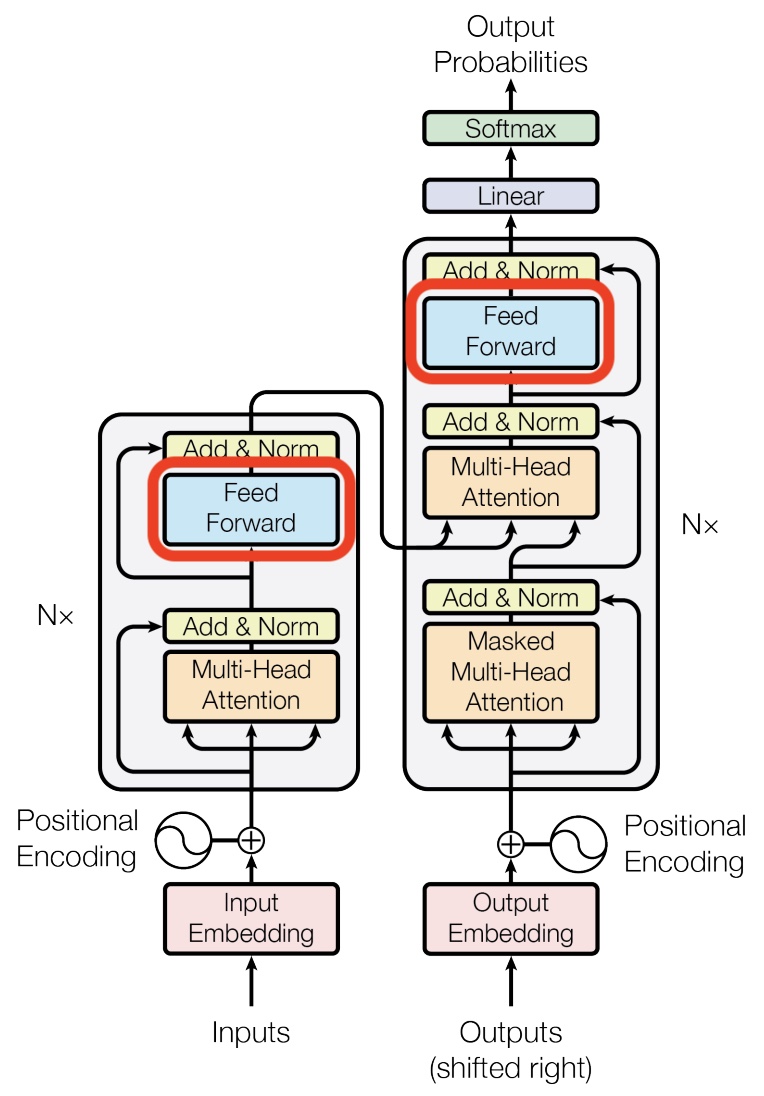

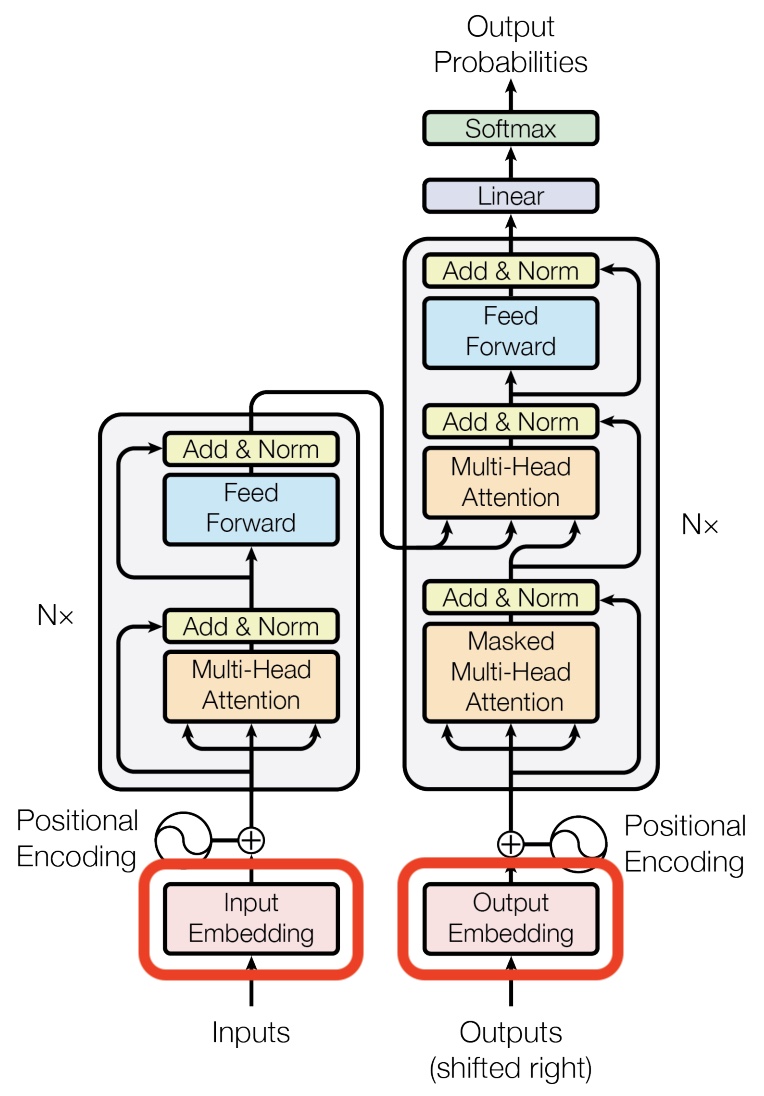

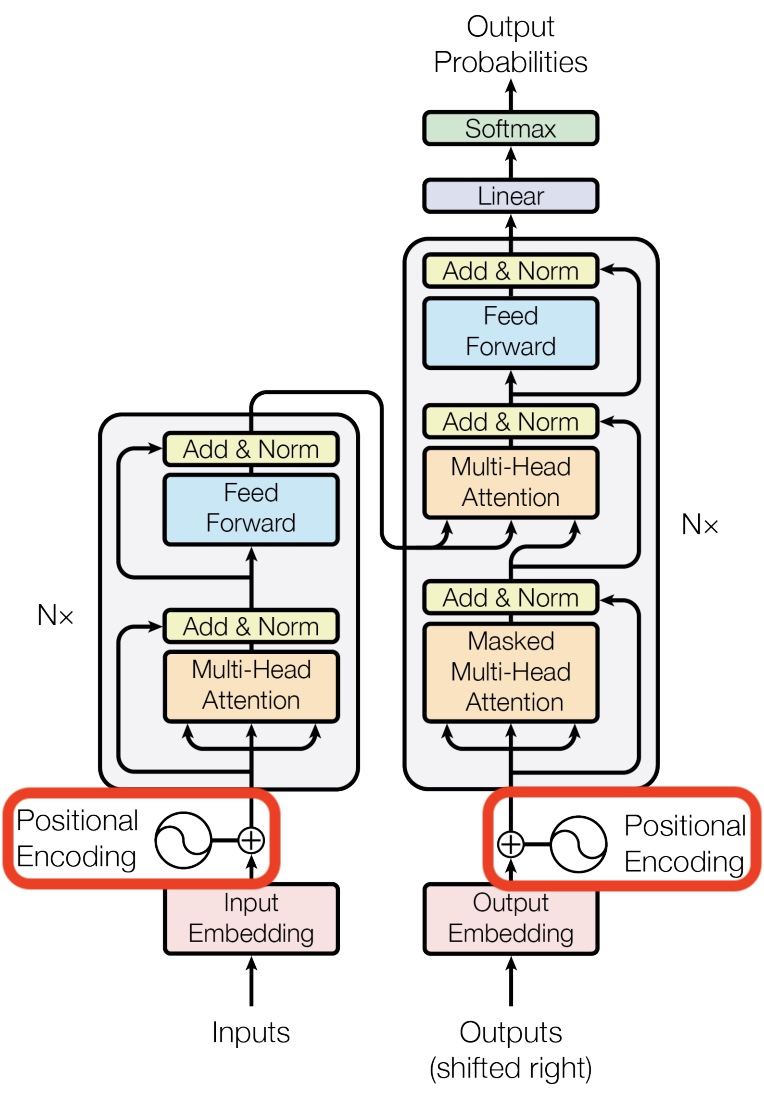

The placement of the embedding layer within the Transformer architecture is shown in the following diagram from the original Transformer paper:

Positional Encoding

In contrast to recurrent and convolutional neural networks, the Transformer architecture does not explicitly model relative or absolute position information in its structure.

- Up to this point, positional information for words has been largely overlooked, particularly for any words preceding the most recent one. Positional encodings (also known as positional embeddings) address this limitation by embedding spatial information into the transformer, allowing the model to comprehend the order of tokens in a sequence.

- Positional encodings are a crucial component of transformer models, enabling them to understand the order of tokens in a sequence. Absolute positional encodings, while straightforward, are limited in their ability to generalize to different sequence lengths. Relative positional encodings address some of these issues but at the cost of increased complexity. Rotary Positional Encodings offer a promising middle ground, capturing relative positions efficiently and enabling the processing of very long sequences in modern LLMs. Each method has its strengths and weaknesses, and the choice of which to use depends on the specific requirements of the task and the model architecture.

Absolute Positional Encoding

- Definition and Purpose:

- Absolute positional encoding, proposed in the original Transformer paper Attention Is All You Need (2017) by Vaswani et al., is a method used in transformer models to incorporate positional information into the input sequences. Since transformers lack an inherent sense of order, positional encodings are essential for providing this sequential information. The most common method, introduced in the original transformer model by Vaswani et al. (2017), is to add a circular wiggle to the embedded representation of words using sinusoidal positional encodings.

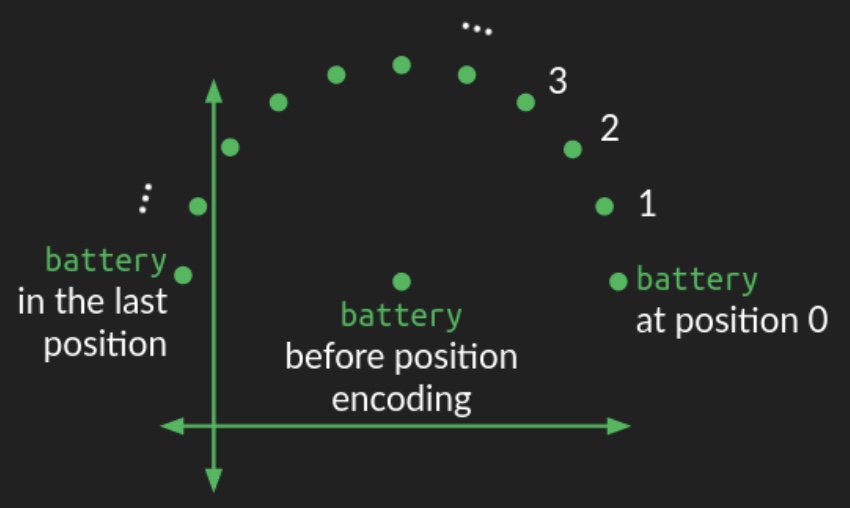

- The position of a word in the embedding space acts as the center of a circle. A perturbation is added based on the word’s position in the sequence, causing a circular pattern as you move through the sequence. Words that are close to each other in the sequence have similar perturbations, while words that are far apart are perturbed in different directions.

- Circular Wiggle:

- The following diagram from Brandon Rohrer’s Transformers From Scratch illustrates how positional encoding introduces this circular wiggle:

- Since a circle is a two-dimensional figure, representing this circular wiggle requires modifying two dimensions of the embedding space. In higher-dimensional spaces (as is typical), the circular wiggle is repeated across all other pairs of dimensions, each with different angular frequencies. In some dimensions, the wiggle completes many rotations, while in others, it may only complete a fraction of a rotation. This combination of circular wiggles of different frequencies provides a robust representation of the absolute position of a word within the sequence.

- Formula: For a position \(pos\) and embedding dimension \(i\), the embedding vector can be defined as:

\(PE_{(pos, 2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right)\)

\(PE_{(pos, 2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)\)

- where \(d_{model}\) is the dimensionality of the model.

- Architecture Diagram: The architecture diagram from the original Transformer paper highlights how positional encoding is generated and added to the embedded words:

Why sinusoidal positional embeddings work?

- Absolute/sinusoidal positional embeddings add position information into the mix in a way that doesn’t disrupt the learned relationships between words and attention. For a deeper dive into the math and implications, Amirhossein Kazemnejad’s positional encoding tutorial is recommended.

Limitations of Absolute Positional Encoding

- Lack of Flexibility: While absolute positional encodings encode each position with a unique vector, they are limited in that they do not naturally generalize to unseen positions or sequences longer than those encountered during training. This poses a challenge when processing sequences of varying lengths or very long sequences, as the embeddings for out-of-range positions are not learned.

- Example: Consider a transformer trained on sentences with a maximum length of 100 tokens. If the model encounters a sentence with 150 tokens during inference, the positional encodings for positions 101 to 150 would not be well-represented, potentially degrading the model’s performance on longer sequences.

Relative Positional Encoding

- Definition and Purpose:

- Relative positional encoding, proposed in Self-Attention with Relative Position Representations (2018) by Shaw et al., addresses the limitations of absolute positional encoding by encoding the relative positions between tokens rather than their absolute positions. In this approach, the focus is on the distance between tokens, allowing the model to handle sequences of varying lengths more effectively.

- Relative positional encodings can be integrated into the attention mechanism of transformers. Instead of adding a positional encoding to each token, the model learns embeddings for the relative distances between tokens and incorporates these into the attention scores.

-

Relative Positional Encoding for a Sequence of Length N: For a sequence of length \(N\), the relative positions between any two tokens range from \(-N+1\) to \(N-1\). This is because the relative position between the first token and the last token in the sequence is \(-(N-1)\), and the relative position between the last token and the first token is \(N-1\). Therefore, we need \(2N-1\) unique relative positional encoding vectors to cover all possible relative distances between tokens.

- Example: If \(N = 5\), the possible relative positions range from \(-4\) (last token relative to the first) to \(+4\) (first token relative to the last). Thus, we need 9 relative positional encodings corresponding to the relative positions: \(-4, -3, -2, -1, 0, +1, +2, +3, +4\).

Limitations of Relative Positional Encoding

- Complexity and Scalability: While relative positional encodings offer more flexibility than absolute embeddings, they introduce additional complexity. The attention mechanism needs to account for relative positions, which can increase computational overhead, particularly for long sequences.

- Example: In scenarios where sequences are extremely long (e.g., hundreds or thousands of tokens), the number of relative positional encodings required (\(2N-1\)) can become very large, potentially leading to increased memory usage and computation time. This can make the model slower and more resource-intensive to train and infer.

Rotary Positional Embeddings (RoPE)

- Definition and Purpose:

- Rotary Positional Embeddings (RoPE), proposed in RoFormer: Enhanced Transformer with Rotary Position Embedding (2021) by Su et al., are a more recent advancement in positional encoding, designed to capture the benefits of both absolute and relative positional embeddings while being parameter-efficient. RoPE encodes absolute positional information using a rotation matrix, which naturally incorporates explicit relative position dependency in the self-attention formulation.

- RoPE applies a rotation matrix to the token embeddings based on their positions, enabling the model to infer relative positions directly from the embeddings. The very ability of RoPE to capture relative positions while being parameter-efficient has been key in the development of very long-context LLMs, like GPT-4, which can handle sequences of thousands of tokens.

- Mathematical Formulation: Given a token embedding \(x\) and its position \(pos\), the RoPE mechanism applies a rotation matrix \(R(pos)\) to the embedding:

-

The rotation matrix \(R(pos)\) is constructed using sinusoidal functions, ensuring that the rotation angle increases with the position index.

-

Capturing Relative Positions: The key advantage of RoPE is that the inner product of two embeddings rotated by their respective positions encodes their relative position. This means that the model can infer the relative distance between tokens from their embeddings, allowing it to effectively process long sequences.

-

Example: Imagine a sequence with tokens A, B, and C at positions 1, 2, and 3, respectively. RoPE would rotate the embeddings of A, B, and C based on their positions. The model can then determine the relative positions between these tokens by examining the inner products of their rotated embeddings. This ability to capture relative positions while maintaining parameter efficiency has been crucial in the development of very long-context LLMs like GPT-4, which can handle sequences of thousands of tokens.

-

Further Reading: For a deeper dive into the mathematical details of RoPE, Rotary Embeddings: A Relative Revolution by Eleuther AI offers a comprehensive explanation.

Limitations of Rotary Positional Embeddings

- Specificity of the Mechanism: While RoPE is powerful and efficient, it is specifically designed for certain architectures and may not generalize as well to all transformer variants or other types of models. Moreover, its mathematical complexity might make it harder to implement and optimize compared to more straightforward positional encoding methods.

- Example: In practice, RoPE might be less effective in transformer models that are designed with very different architectures or in tasks where positional information is not as crucial. For instance, in some vision transformers where spatial positional encoding is more complex, RoPE might not offer the same advantages as in text-based transformers.

Decoding Output Words / De-Embeddings / Un-Embeddings

-

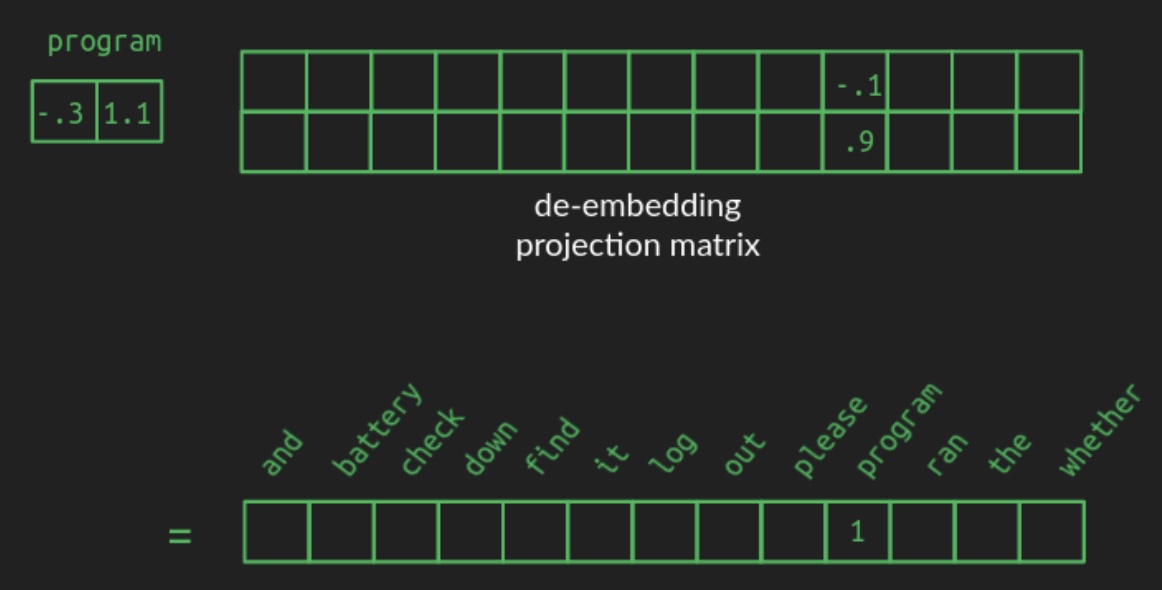

While embedding words into a lower-dimensional continuous space significantly improves computational efficiency, at some point—particularly during inference or output generation—the model must convert these representations back into discrete tokens from the original vocabulary. This process, known as de-embedding (also commonly called un-embedding), occurs after the transformer has finished computing contextualized representations and is conceptually and operationally analogous to embedding: it involves a learned projection from one vector space to another, implemented via matrix multiplication.

-

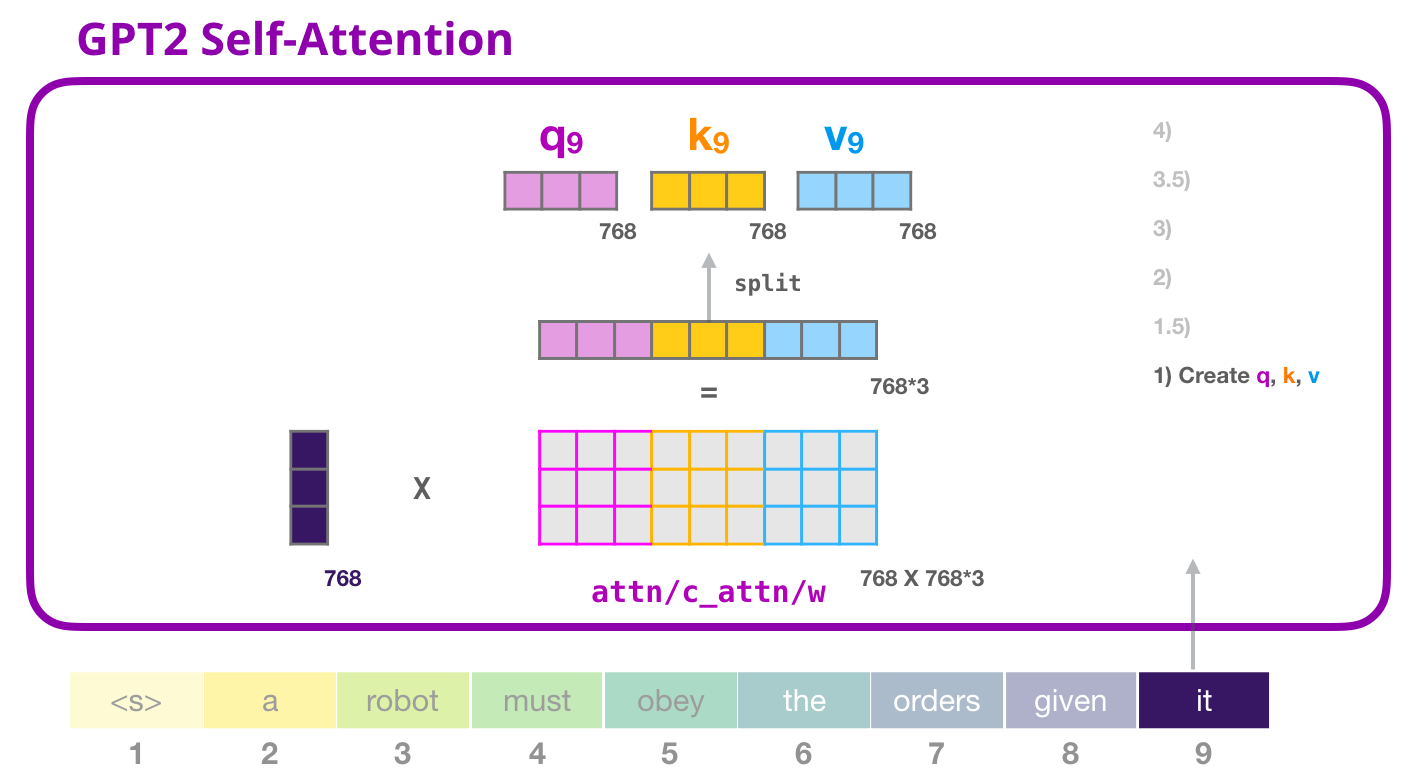

In an autoregressive transformer, the specific vector that is de-embedded to predict the next token is the final hidden state at the last sequence position/index, i.e., the output representation corresponding to the last token generated so far. This hidden state is produced by passing the token’s initial embedding through a stack of transformer blocks (also known as transformer layers), where each block (layer) applies masked self-attention and feed-forward transformations that incorporate information from all preceding tokens—each of these operations are referred to as a sublayer when a transformer block is described as a layer. Concretely, at each attention layer, the last token’s current representation is linearly projected into its own query, key, and value (Q, K, V) vectors; its query is then compared against (i.e., attends over) the keys of all previous tokens to compute attention weights, which are used to form a weighted sum of the corresponding value vectors. The resulting attention output is combined with the token’s existing representation (via residual connections) and further transformed by a feed-forward network, yielding an updated representation for the last token that becomes its hidden state for the next layer.

-