Recommendation Systems • Candidate Generation

- Overview

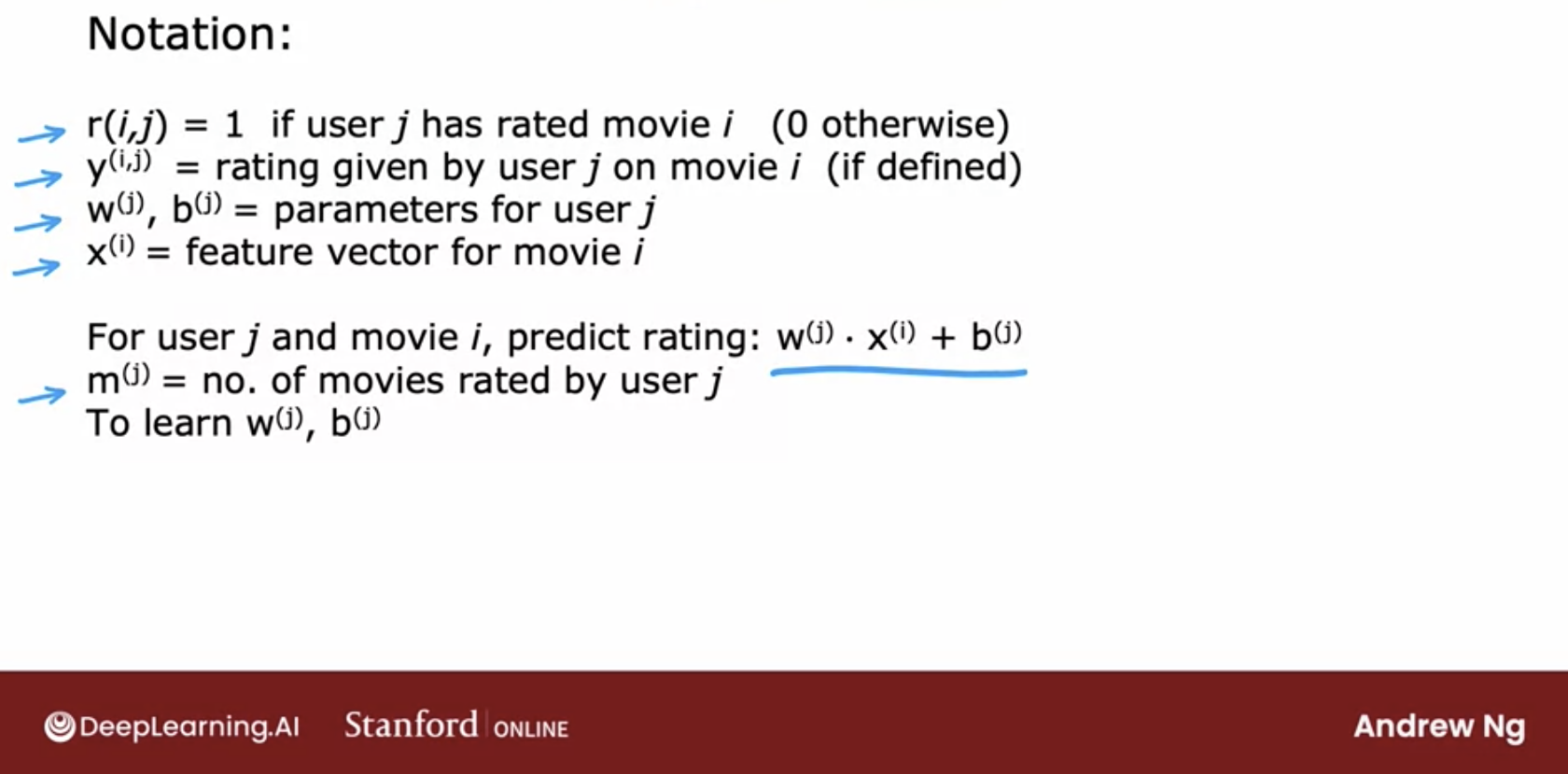

- Notation

- Input Features

- Content-Based Filtering

- Collaborative Filtering

- Mechanism of Collaborative Filtering

- Example of Collaborative Filtering

- Objective of Collaborative Filtering

- Advantages of Collaborative Filtering

- Disadvantages of Collaborative Filtering

- Types of Collaborative Filtering

- User-based Collaborative Filtering

- Item-based Collaborative Filtering

- Data Collection

- Calculate Similarity Between Items

- Build the Item-Item Similarity Matrix

- Identify Nearest Neighbors

- Generate Predictions

- Recommend Items

- Implementation Considerations

- Optimization Strategies

- Advantages of Item-Based collaborative filtering

- Disadvantages of Item-Based collaborative filtering

- Example Workflow

- A Movie Recommendation Case-study

- Matrix Factorization (MF)

- Training Matrix Factorization

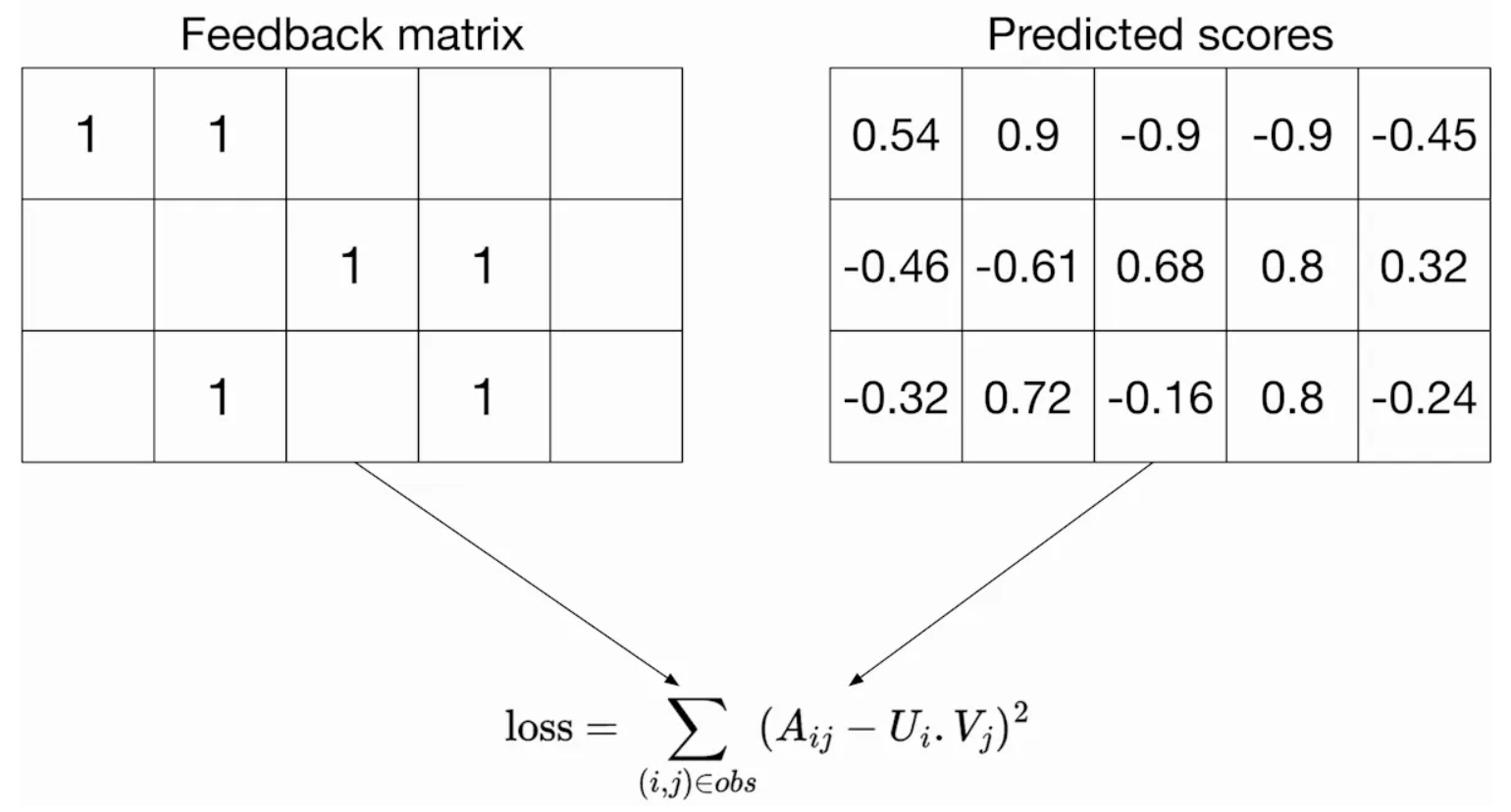

- Squared Distance over Observed User-Item Pairs

- The Concept of Fold-In

- Loss Function Options and Issues with Unobserved Pairs

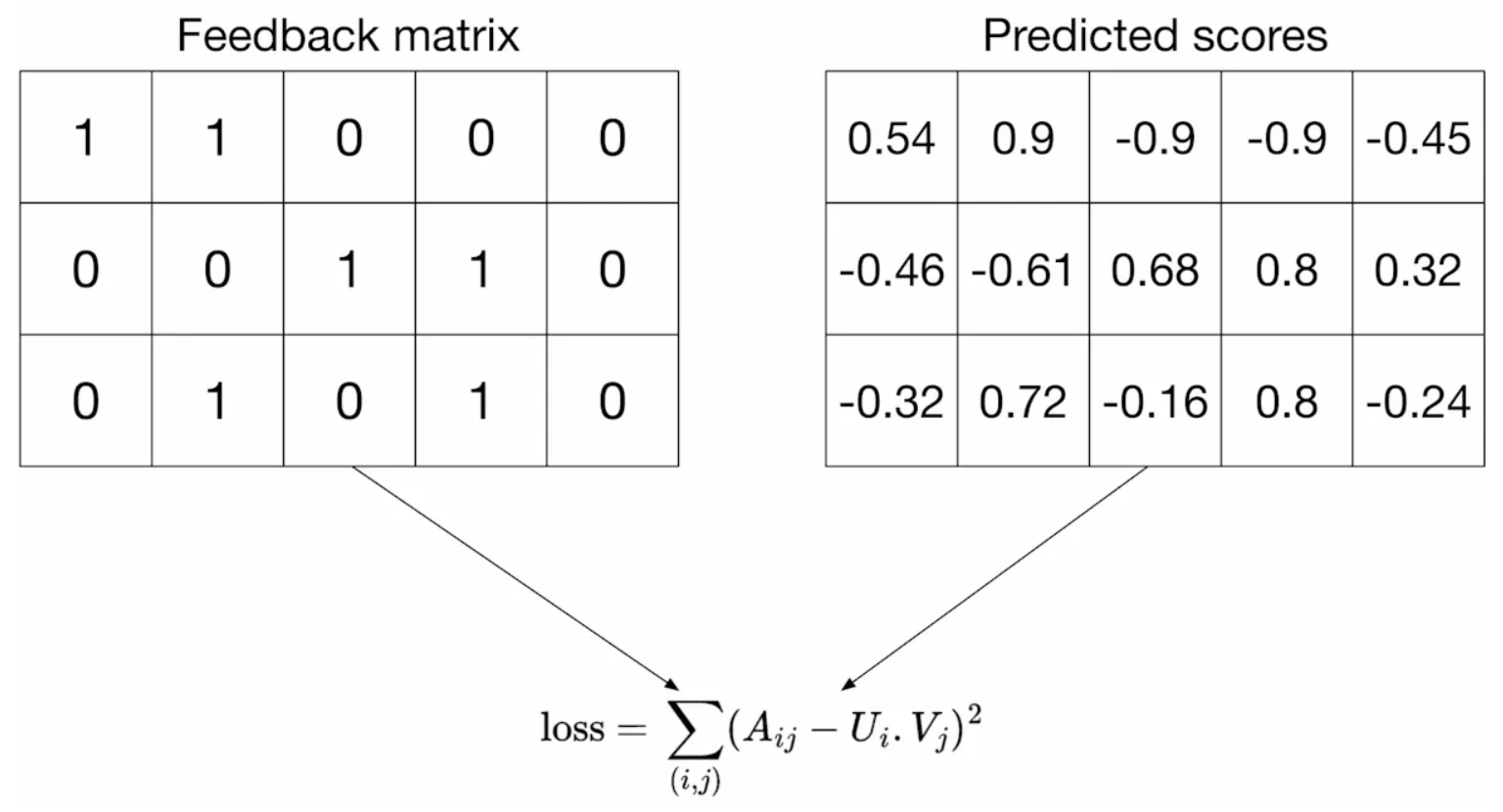

- Squared Distance over Both Observed and Unobserved Pairs

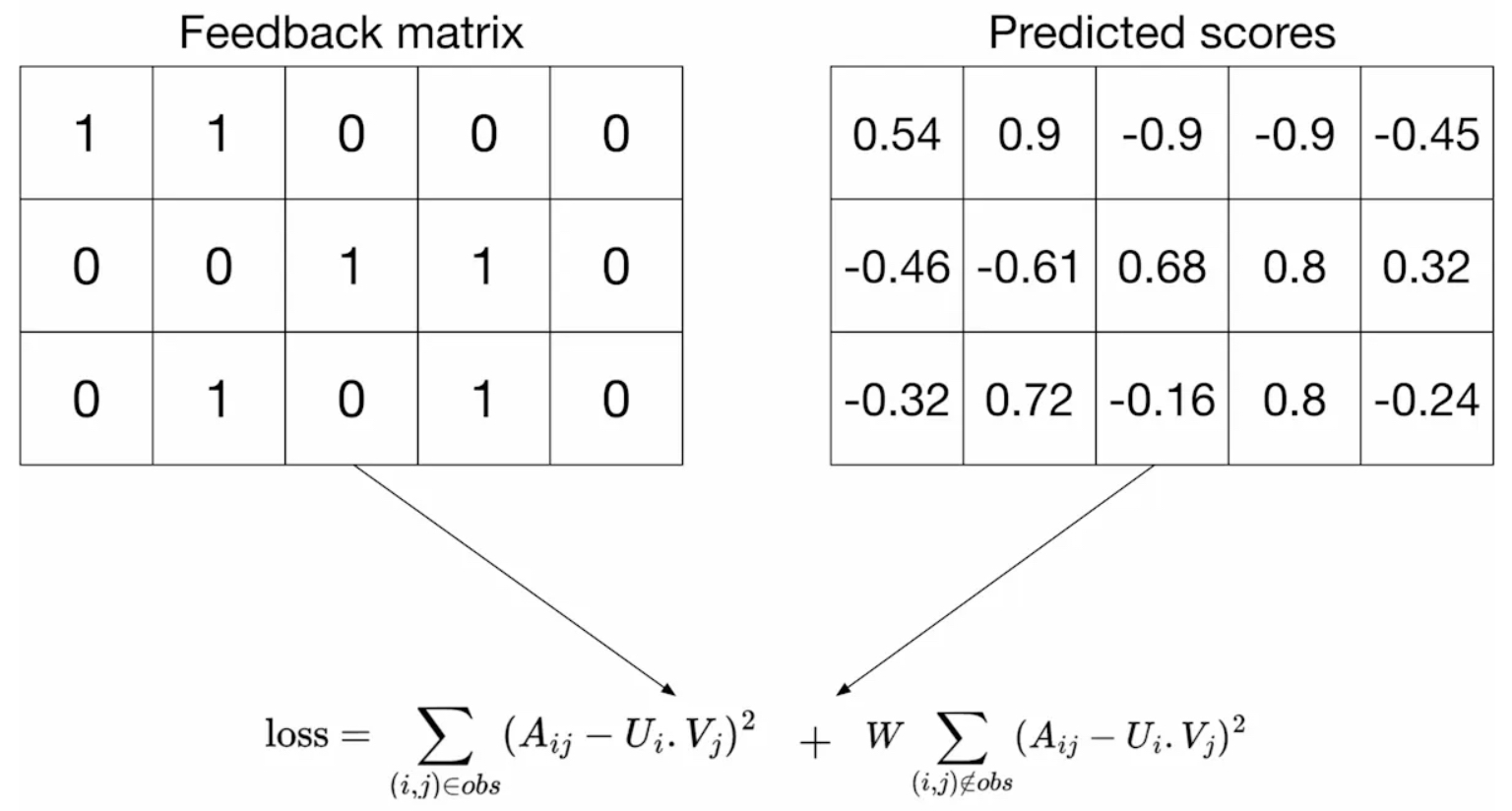

- A Weighted Combination of Losses for Observed and Unobserved Pairs

- Practical Considerations for Weighting Observed Pairs

- Minimizing the Objective Function

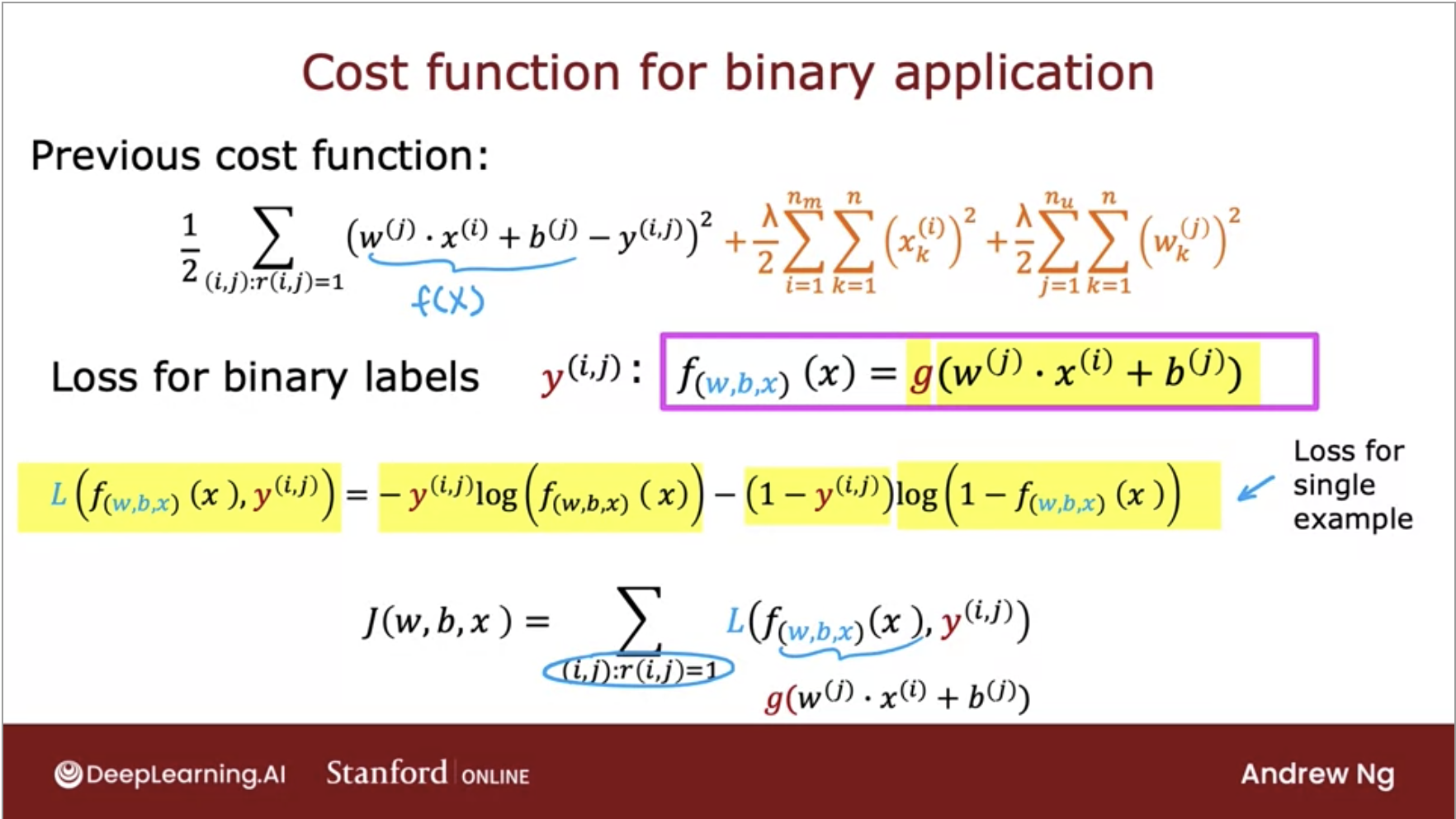

- Cost Function for Binary Labels (Regression to Classification)

- Example: Learning Process for Embedding Vectors

- Non-Negative Matrix Factorization (NMF)

- Asymmetric Matrix Factorization (AMF)

- SVD++

- Comparative Analysis: Standard MF, Non-Negative MF, Asymmetric MF, and SVD++

- FAQs

- For a large dimensional utility matrix, can we use WALS in a distributed manner (“Distributed WALS”) to carry out matrix factorization?

- Does matrix factorization handle sparse interactions well?

- Compared to matrix factorization or linear models, do neural networks handle sparse data well?

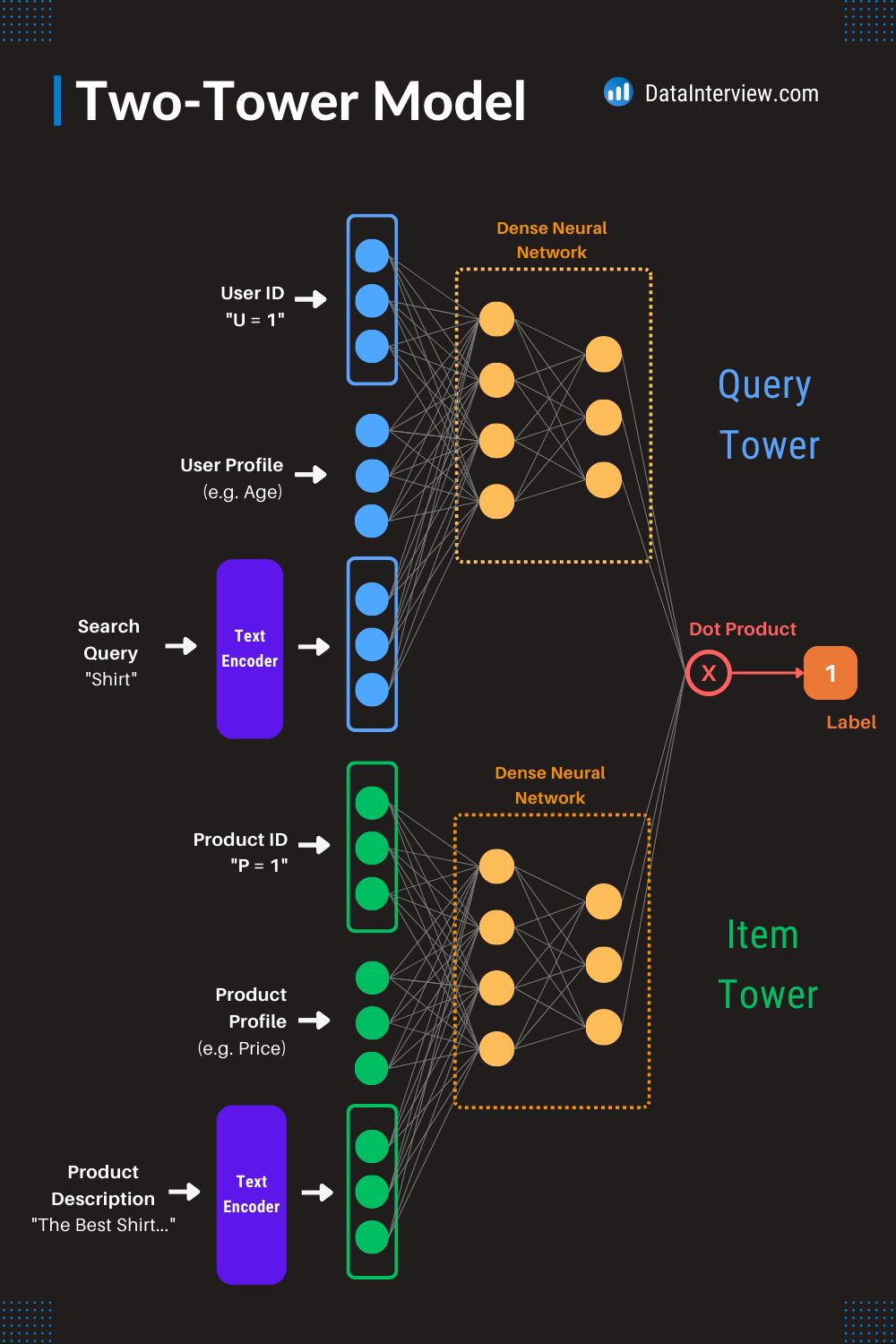

- Are two-tower architectures in recommender systems typically used for retrieval or ranking?

- Are two-tower architectures typically used for collaborative or content-based filtering?

- Code deep-dive

- Content-based vs. Collaborative Filtering

- Deep Neural Network based Recommendations

- Candidate Retrieval

- Negative Sampling

- Evaluation

- FAQs

- In the context of recommender systems, in which scenarios does delayed feedback occur?

- Purchase-Based Recommendations (E-commerce)

- Content Consumption (Streaming Platforms)

- Rating and Review Systems

- Click-Through Delays (Online Advertising)

- Complex Decision-Making (Travel and Real Estate)

- Subscription-Based Services

- Event-Driven Recommendations

- Delayed Behavioral Signals in Long-Term Engagement

- User Conversion Funnels

- Conclusion

- What is the network effect in recommender systems?

- In recommender systems, do ranking models process items in batches or individually?

- What are some ways to improve latency in recommender systems?

- In the context of recommender systems, in which scenarios does delayed feedback occur?

- Serving Optimizations

- Further Reading

- References

Overview

- Candidate generation is the first stage of recommendations and is typically achieved by finding features for users that relate to features of the items. Example, if the user searches for food, the search engine will look at the users location to determine possible candidates. It would filter out searches that were not in New York and look at latent representation of the user and find items that have latent representations that are close using approximate nearest neighbor algorithms.

- Note: Candidate generation is for recommender systems like a search engine where the candidates are not particularly based on time (For instance, searching for how to tie a tie should roughly return the same results in every instance) whereas candidate retrieval is for news-feed based systems where time is important, such as Instagram or Facebook, to make sure you don’t keep seeing the same content again.

- However, in order to achieve this, we would have to iterate over every item which could be computationally expensive.

- Let’s dive a little deeper into candidate generation, aka the first step in a general recommendation architecture.

- In recommender systems, candidate generation is the process of selecting a set of items that are likely to be recommended to a user. This process is typically performed after user preferences have been collected, and it involves filtering a large set of potential recommendations down to a more manageable number. The goal of candidate generation is to reduce the number of items that must be evaluated by the system, while still ensuring that the most relevant recommendations are included.

- Given a query (user information), the model generates a set of relevant candidates (videos, movies).

- There are two common candidate generation approaches:

- Content-based filtering: Uses similarity between content to recommend new content.

- For example, if user watches corgi videos, the model will recommend more corgi videos.

- Collaborative filtering: Uses similarity between queries (2 or more users) and items (videos, movies) to provide recommendations.

- For example, if user \(A\) watches corgi videos and user \(A\) is similar to user \(B\) (in demographics and other areas), then the model can recommend corgi videos to user \(B\) even if user \(B\) has never watched a corgi video before.

- Content-based filtering: Uses similarity between content to recommend new content.

Notation

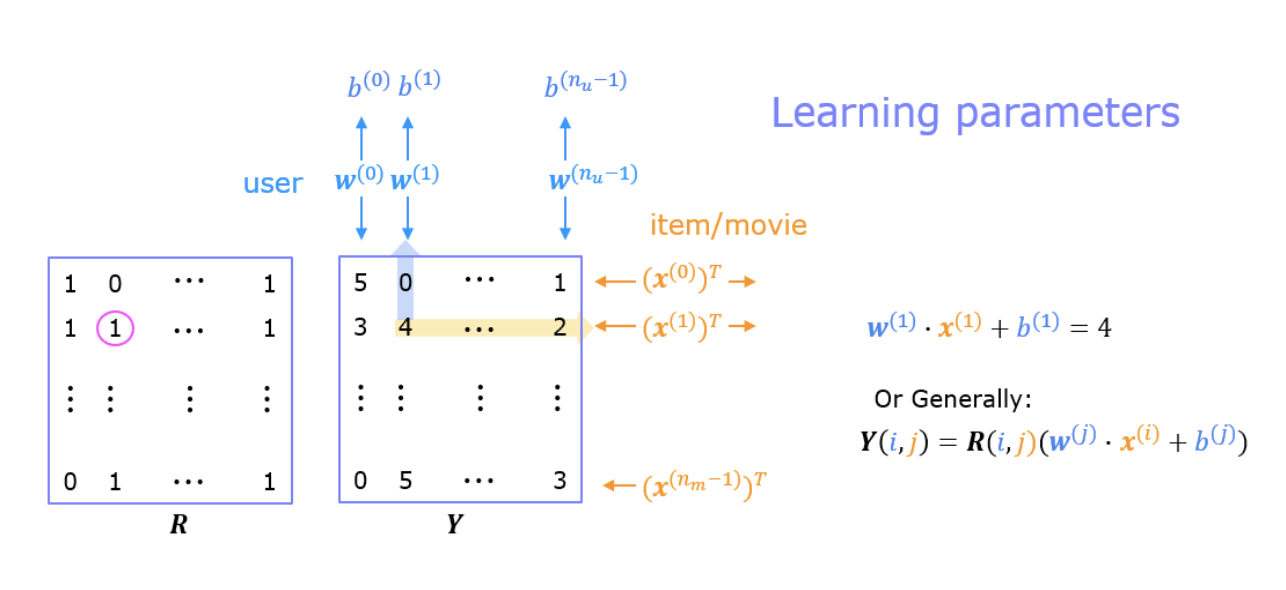

- A quick note on notation you’ll see in the notes moving forward: we will represent \(r\) to be ratings, specifically if user \(i\) has rated item \(j\), \(n\) to hold a number value of users or items, and \(y\) to hold the rating given by a user to a particular item. We can see the notation in the image below (source).

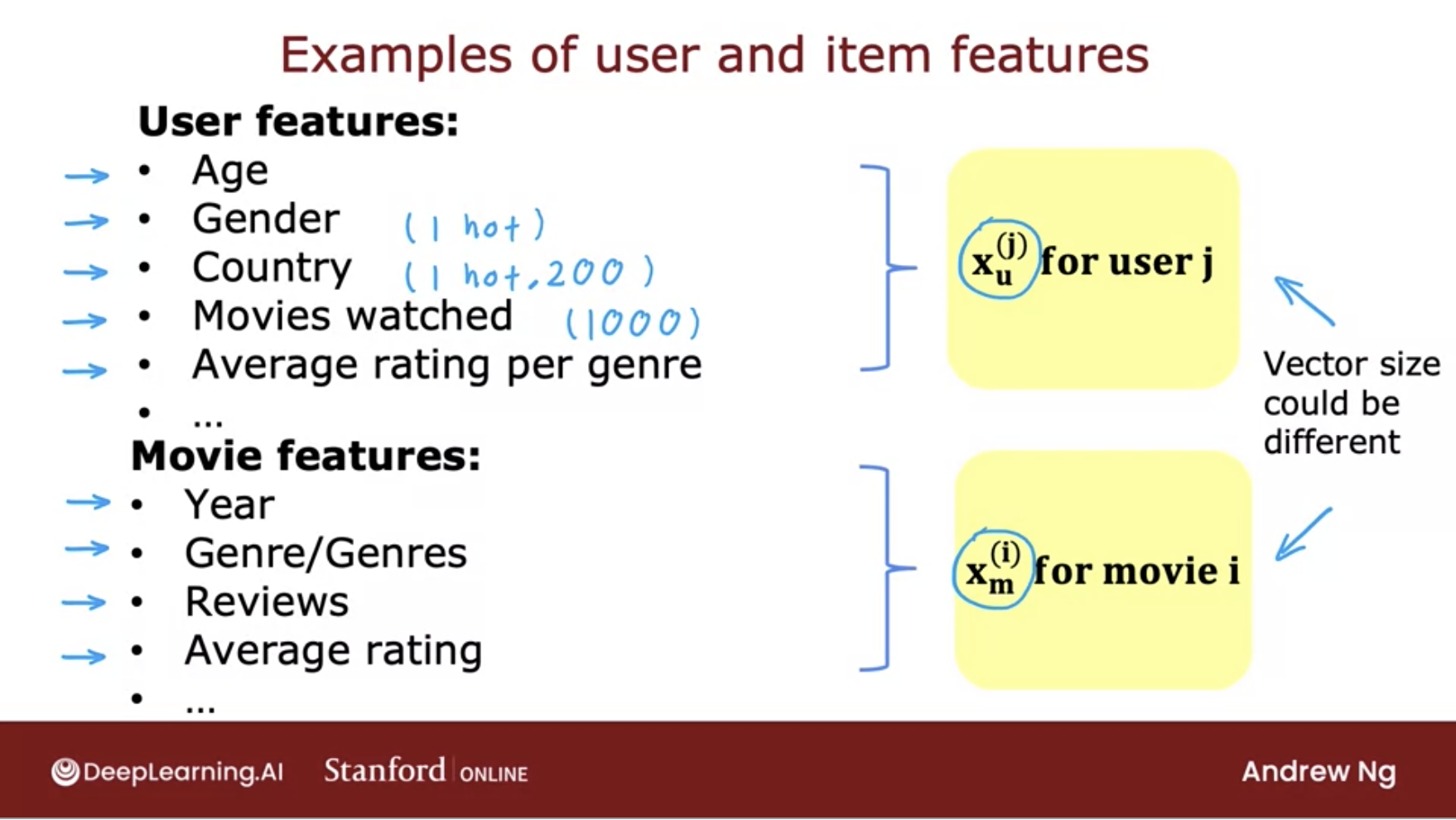

Input Features

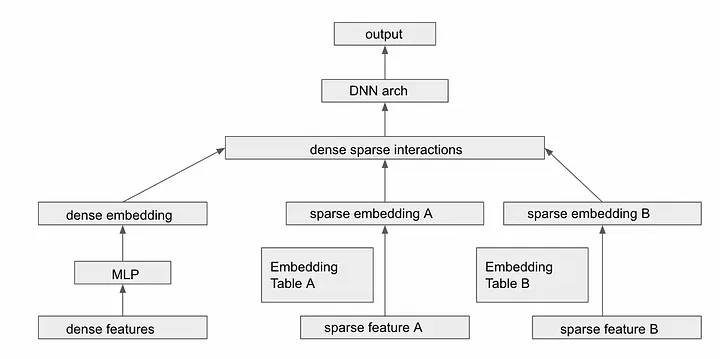

- In recommender systems, input features play a crucial role in capturing user and item characteristics. These features are broadly categorized into dense (continuous/numerical) and sparse (categorical) types. Dense features, such as numerical ratings or timestamps, are directly input into models, while sparse features, such as categories or tags, require encoding methods like embeddings to handle their high dimensionality efficiently. By combining these feature types, models can effectively learn patterns and relationships to generate accurate predictions and recommendations.

- Sparse features can further be classified into univalent (single-value) and multivalent (multi-value) types. Univalent features represent attributes with a single value, such as a user’s primary language, while multivalent features capture sets of attributes, such as the genres of a movie or tags on a product. Appropriately encoding and embedding these feature types enables models to capture complex interactions and improve the quality of predictions and recommendations.

Sparse and Dense Features

- Recommender systems typically deal with two kinds of features: dense and sparse. Dense features are continuous real values, such as movie ratings or release years. Sparse features, on the other hand, are categorical and can vary in cardinality, like movie genres or the list of actors in a film.

- The architectural transformation of these features in RecSys models can be broadly divided into two parts:

- Dense Features (Continuous / real / numerical values):

- Movie Ratings: This feature represents the continuous real values indicating the ratings given by users to movies. For example, a rating of 4.5 out of 5 would be a dense feature value.

- Movie Release Year: This feature represents the continuous real values indicating the year in which the movie was released. For example, the release year 2000 would be a dense feature value.

- Sparse Features (Categorical with low or high cardinality):

- Movie Genre: This feature represents the categorical information about the genre(s) of a movie, such as “Action,” “Comedy,” or “Drama.” These categorical values have low cardinality, meaning there are a limited number of distinct genres.

- Movie Actors: This feature represents the categorical information about the actors who starred in a movie. These categorical values can have high cardinality, as there could be numerous distinct actors in the dataset.

- Dense Features (Continuous / real / numerical values):

- In the model architecture, the dense features like movie ratings and release year can be directly fed into a feed-forward dense neural network. The dense network performs transformations and computations on the continuous real values of these features.

- On the other hand, the sparse features like movie genre and actors require a different approach. Such features are often encoded as one-hot vectors, e.g.,

[0,1,0]; however, this often leads to excessively high-dimensional feature spaces for large vocabularies. This is especially true in the case of web-scale recommender systems such as CTR prediction, the inputs are mostly categorical features, e.g.,country = usa. Instead of directly using the raw categorical values, an embedding network is employed to reduce the dimensionality. Each of the sparse, high-dimensional categorical features are first converted into a low-dimensional, dense real-valued vector, often referred to as an embedding vector. The dimensionality of the embeddings are usually on the order of O(10) to O(100). The embedding vectors are initialized randomly and then the values are trained to minimize the final loss function during model training. The embedding network maps each sparse feature value (e.g., genre or actor) to a low-dimensional dense vector representation called an embedding. These embeddings capture the semantic relationships and similarities between different categories. The embedding lookup tables contain pre-computed embeddings for each sparse feature value, allowing for efficient retrieval during the model’s inference. - By combining the outputs of the dense neural network and the embedding lookup tables, the model can capture the interactions between dense and sparse features, leading to better recommendations based on both continuous and categorical information.

- The figure below (source) illustrates a deep neural network (DNN) architecture for processing both dense and sparse features: dense features are processed through an MLP (multi-layer perceptron) to create dense embeddings, while sparse features are converted to sparse embeddings via separate embedding tables (A and B). These embeddings are then combined to facilitate dense-sparse interactions before being fed into the DNN architecture to produce the output.

Univalent and Multivalent Features

- In recommender systems, input features often fall into two distinct categories: univalent features and multivalent features, which define the nature of the data associated with an entity (such as a user, item, or event) and how it is represented in the model. These features are essential for capturing intricate patterns in categorical data and enabling models to make powerful predictions. By appropriately encoding and embedding these features, machine learning systems can effectively leverage their information to enhance performance and deliver accurate, personalized outcomes across a wide range of applications. Understanding their usage is critical to designing robust and efficient models.

Univalent Features

- Definition:

- Univalent features are those that have a single value for each entity. These values typically represent a unique attribute or characteristic of the entity.

- Examples:

- A person’s gender.

- The primary language of a user.

- The category or type of a product.

- The current status of a user (e.g., active or inactive).

- Representation:

- Univalent features are usually represented using one-hot encoding:

- If a feature can take on \(n\) possible categorical values, it is encoded as a vector of size \(n\), where only one position (corresponding to the feature’s value) is set to “1” while the rest are “0”.

- For instance, if there are 5 possible product categories (Electronics, Furniture, Books, Clothing, Food), and a product belongs to the “Books” category, its one-hot vector would look like: \([0, 0, 1, 0, 0]\).

- This encoding is sparse but effective for capturing the uniqueness of a single value.

- Univalent features are usually represented using one-hot encoding:

Multivalent Features

- Definition:

- Multivalent features are those that have multiple values for each entity. These values represent a set of attributes or properties associated with the entity, rather than a single attribute.

- Examples:

- The categories associated with a book (e.g., “Fiction”, “Mystery”, “Bestseller”).

- The list of movies watched by a user in the past week.

- The set of tags assigned to a blog post.

- A user’s skills in a professional profile.

- Representation:

- Multivalent features are typically represented using multi-hot encoding:

- If a feature can take on \(n\) possible categorical values, it is encoded as a vector of size \(n\), where multiple positions corresponding to the feature’s values are set to “1” while the rest remain “0”.

- For example, if a user has watched movies from the genres “Action”, “Comedy”, and “Sci-Fi” out of 5 possible genres, the multi-hot vector might look like:

\([1, 1, 0, 0, 1]\).

- Like one-hot encoding, this approach results in a sparse representation but can handle sets of attributes effectively.

- Multivalent features are typically represented using multi-hot encoding:

How These Features Are Used

- Embedding Categorical Features:

- Both univalent (one-hot) and multivalent (multi-hot) features are often embedded into dense numerical vectors in a shared vector space. This process reduces the sparsity and dimensionality of the input while capturing meaningful relationships between categories.

- For instance, embeddings can learn that two languages (e.g., English and Spanish) are more closely related than others, or that certain movie genres tend to co-occur in user preferences.

- Integration with Continuous Features:

- Once embedded, these categorical features are typically combined with normalized continuous features (e.g., time since the last event, the number of interactions, or numerical ratings) to form a comprehensive input representation for the machine learning model.

Applications in Machine Learning

-

Univalent and multivalent features are widely used across domains to model complex relationships:

- Recommendation Systems:

- Univalent: The primary language of a user.

- Multivalent: A user’s previously interacted items.

- Natural Language Processing:

- Univalent: The dominant language of a document.

- Multivalent: Keywords or topics associated with a document.

- E-Commerce:

- Univalent: The category of a product.

- Multivalent: Tags or attributes describing the product (e.g., color, material, style).

- Healthcare:

- Univalent: The primary diagnosis of a patient.

- Multivalent: A set of symptoms or previous treatments.

- Recommendation Systems:

Benefits of Encoding and Representation

- One-Hot Encoding for Univalent Features:

- Simplifies feature representation for single-value attributes.

- Ensures that each possible category is uniquely represented.

- Multi-Hot Encoding for Multivalent Features:

- Allows effective modeling of sets or groups of attributes without collapsing them into a single value.

- Preserves the full scope of relevant information (e.g., all categories a user interacts with).

Challenges and Considerations

- High Dimensionality:

- Both one-hot and multi-hot encodings can result in high-dimensional sparse vectors, especially when the number of categories is large.

- Embedding Training:

- The quality of embeddings learned from categorical data can significantly impact model performance.

- Interpretability:

- While sparse representations (one-hot and multi-hot) are easy to interpret, embeddings are dense and less transparent, requiring additional techniques to understand their semantics.

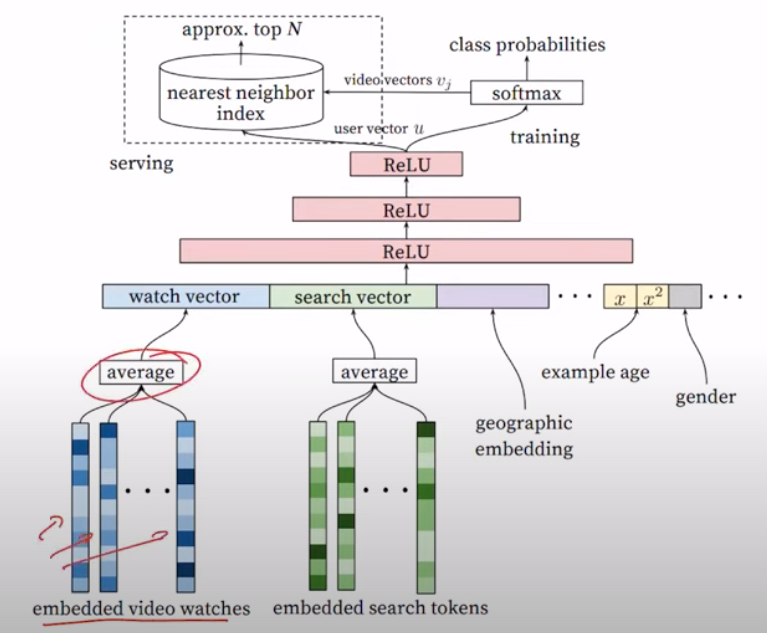

Transforming Variable-Sized Sparse IDs into Fixed-Width Vectors

-

At the input stage of the recommendation model, one key challenge is the variability in size of sparse feature inputs (e.g., a list of watched videos in a video recommendation system or search tokens in an information retrieval system). These features are multivalent, meaning they contain multiple values (e.g., a user may have watched 10 videos or searched for 5 different topics). To process these multivalent sparse features efficiently in the deep learning architecture, the following steps are employed:

- Embedding Sparse Features:

- Sparse feature values, which are categorical in nature, are first mapped to dense, low-dimensional embedding vectors using embedding lookup tables. Each categorical ID (e.g., a video ID or a search term) is assigned a fixed-width embedding vector. For instance, if the embedding dimension is 128, each sparse ID is represented as a 128-dimensional dense vector.

- Averaging Embeddings:

- Since sparse feature inputs are variable-sized (e.g., a user may watch different numbers of videos), embeddings for all IDs in a single feature (e.g., all watched videos) are averaged together. This process transforms the variable-sized “bag” of sparse embeddings into a single fixed-width vector representation.

- For example, if a user has watched 5 videos, their corresponding 5 embedding vectors (each of size 128) are averaged element-wise to produce a single embedding vector of size 128. Similarly, if a user has searched for 3 topics, the embeddings for these topics are averaged to form another fixed-width vector.

- Concatenation with Other Features:

- The averaged embeddings are then concatenated with other feature types, such as dense features (e.g., user age, gender) or other sparse features (that may or may not have undergone similar transformations). This concatenated representation forms a unified, fixed-width input vector that is suitable for feeding into the neural network’s hidden layers.

- Benefits of Averaging:

- Dimensionality Reduction: Averaging condenses variable-sized inputs into a consistent format, reducing computational complexity.

- Noise Mitigation: By averaging multiple embeddings, the model can smooth out noise and capture the overall trend or preference represented by the set of sparse IDs.

- Scalability: This approach allows the model to handle a wide range of input sizes without increasing the number of parameters or computational requirements.

- Embedding Sparse Features:

-

By averaging embeddings for variable-sized sparse features, the recommendation system ensures a consistent, efficient, and scalable input representation, enabling it to handle the diverse and large-scale datasets characteristic of recommender systems.

Content-Based Filtering

- Content-based filtering is a sophisticated recommendation system that suggests items to users by analyzing the similarity between item features and the user’s known preferences. This approach recommends items that resemble those a user has previously liked or interacted with.

Mechanism of Content-Based Filtering

- Content-based filtering operates by examining the attributes or features of items, such as textual descriptions, images, or tags, and constructing a profile of the user’s preferences based on the attributes of items they have engaged with. The system then recommends items similar to those the user has previously interacted with, drawing on the similarity between the item attributes and the user’s profile.

- For example, consider a content-based filtering system designed to recommend movies. The system would analyze various attributes of the movies, such as genre, actors, directors, and plot summaries, and build a user profile based on the movies they have liked in the past. If a user has shown a preference for action movies featuring Bruce Willis, the system might recommend other action movies that also star Bruce Willis or share similar attributes.

A major difference between content-based filtering and collaborative filtering is that content-based filtering only relies on video features (while collaborative filtering relies exclusively upon users’ historical interactions to make recommendations). This enables content-based filtering to tackle the item cold-start problem effectively.

Advantages of Content-Based Filtering

-

Content-based filtering offers several advantages over alternative recommendation approaches:

- Effective in Data-Limited Environments: This method is particularly useful in situations where there is limited or no data on user behavior, such as in new or niche domains with a small user base.

- Personalized Recommendations: Since content-based filtering relies on an individual user’s preferences rather than the collective behavior of other users, it can provide highly personalized recommendations.

- Scalability: The model does not require data from other users, focusing solely on the current user’s information, which makes it easier to scale.

- Recommendation of Niche Items: It can recommend niche items tailored to each user’s unique preferences, items that might not be of interest to a broader audience.

- Ability to Recommend New Items: The method can recommend newly introduced items without waiting for user interaction data, as the recommendations are based on the item’s inherent features.

- Capturing Unique User Interests: Content-based filtering excels at capturing and catering to the distinct interests of users by recommending items based on their previous engagements.

Limitations of Content-Based Filtering

-

Despite its advantages, content-based filtering has several limitations:

- Need for Domain Knowledge: The method requires extensive domain knowledge, as features must often be manually engineered. Consequently, the effectiveness of the model is closely tied to the quality of these hand-engineered features.

- Limited Exploration of New Interests: The model tends to recommend items within the user’s existing preferences, limiting its ability to introduce new or diverse interests.

- Difficulty in Discovering New Interests: The method may struggle to identify and suggest items that do not align with the user’s current interests, making it challenging to expand the user’s horizons.

- Dependence on Feature Availability: The method may be ineffective in situations where there is a lack of detailed item information or where items possess limited attributes or features.

Types of Content-Based Filtering

-

Item-Based Filtering: In this approach, the recommender system suggests new items based on their similarity to items previously selected by the user, which are treated as implicit feedback. This method can be visualized in the diagram provided.

-

User-Based Filtering: This approach involves collecting user preferences through explicit feedback mechanisms, such as questionnaires. The gathered information is then used to recommend items with features similar to those of items the user has previously liked. An example is illustrated in the diagram provided.

Example

-

Consider the following example, which begins by examining the features illustrated in the image below (source):

-

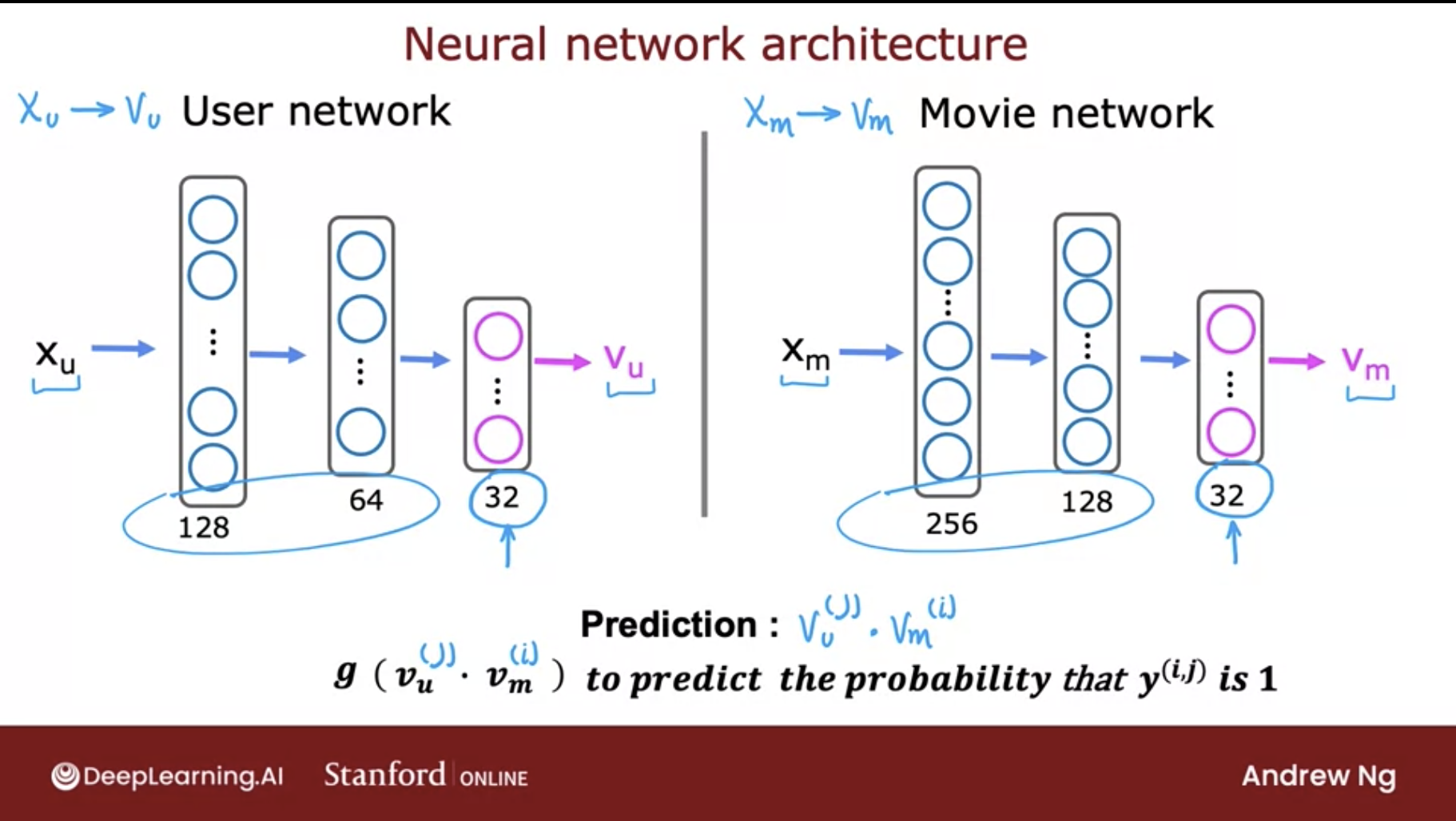

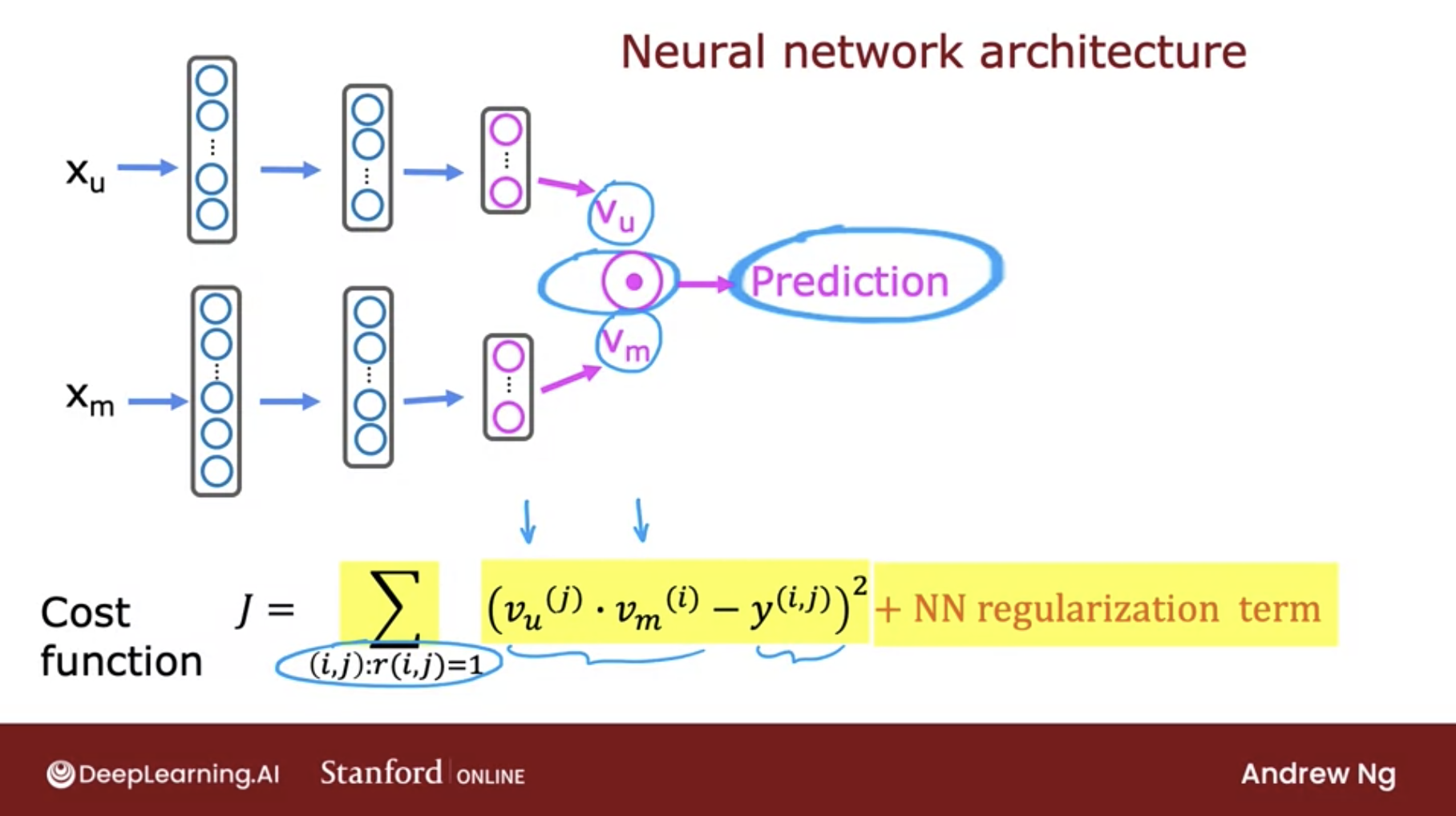

We aim to develop an algorithm that utilizes deep learning to effectively match users with items.

-

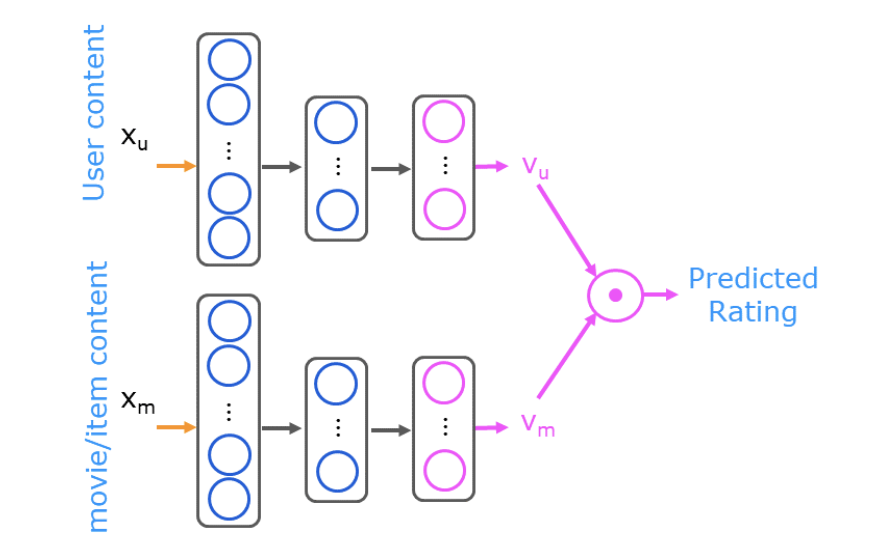

The architecture of our proposed model is depicted in the images below (source):

-

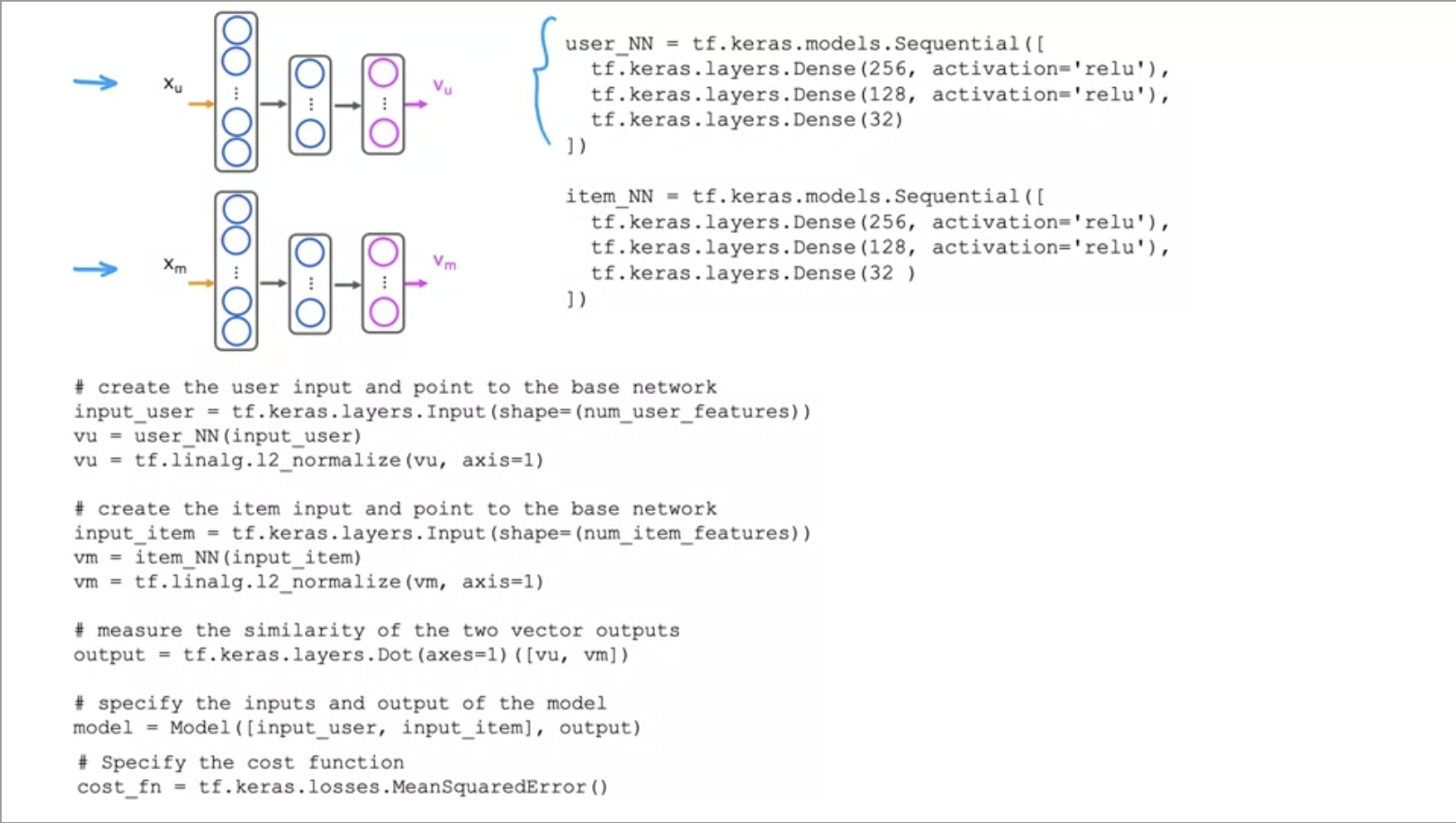

Next, we will examine a sequential model in TensorFlow that implements a content-based filtering approach (source):

-

This model consists of two dense hidden layers, and the final layer produces 32 output values. All layers employ the ReLU activation function.

Code deep-dive

- Now, lets build a content-based filtering system using neural networks to recommend movies. The architecture is listed below (source).

- Lets import our packages and load our dataset:

import numpy as np

import numpy.ma as ma

from numpy import genfromtxt

from collections import defaultdict

import pandas as pd

import tensorflow as tf

from tensorflow import keras

from sklearn.preprocessing import StandardScaler, MinMaxScaler

from sklearn.model_selection import train_test_split

import tabulate

from recsysNN_utils import *

pd.set_option("display.precision", 1)

# Load Data, set configuration variables

item_train, user_train, y_train, item_features, user_features, item_vecs, movie_dict, user_to_genre = load_data()

num_user_features = user_train.shape[1] - 3 # remove userid, rating count and ave rating during training

num_item_features = item_train.shape[1] - 1 # remove movie id at train time

uvs = 3 # user genre vector start

ivs = 3 # item genre vector start

u_s = 3 # start of columns to use in training, user

i_s = 1 # start of columns to use in training, items

scaledata = True # applies the standard scalar to data if true

print(f"Number of training vectors: {len(item_train)}")

- Let’s take a quick look at our feature vectors and data:

pprint_train(user_train, user_features, uvs, u_s, maxcount=5)

[user id] [rating count] [rating ave] Act ion Adve nture Anim ation Chil dren Com edy Crime Docum entary Drama Fan tasy Hor ror Mys tery Rom ance Sci -Fi Thri ller

2 16 4.1 3.9 5.0 0.0 0.0 4.0 4.2 4.0 4.0 0.0 3.0 4.0 0.0 4.2 3.9

2 16 4.1 3.9 5.0 0.0 0.0 4.0 4.2 4.0 4.0 0.0 3.0 4.0 0.0 4.2 3.9

2 16 4.1 3.9 5.0 0.0 0.0 4.0 4.2 4.0 4.0 0.0 3.0 4.0 0.0 4.2 3.9

2 16 4.1 3.9 5.0 0.0 0.0 4.0 4.2 4.0 4.0 0.0 3.0 4.0 0.0 4.2 3.9

2 16 4.1 3.9 5.0 0.0 0.0 4.0 4.2 4.0 4.0 0.0 3.0 4.0 0.0 4.2 3.9

- Let’s prepare the data by doing a bit of preprocessing:

# scale training data

if scaledata:

item_train_save = item_train

user_train_save = user_train

scalerItem = StandardScaler()

scalerItem.fit(item_train)

item_train = scalerItem.transform(item_train)

scalerUser = StandardScaler()

scalerUser.fit(user_train)

user_train = scalerUser.transform(user_train)

print(np.allclose(item_train_save, scalerItem.inverse_transform(item_train)))

print(np.allclose(user_train_save, scalerUser.inverse_transform(user_train)))

- And now split it into test and train:

item_train, item_test = train_test_split(item_train, train_size=0.80, shuffle=True, random_state=1)

user_train, user_test = train_test_split(user_train, train_size=0.80, shuffle=True, random_state=1)

y_train, y_test = train_test_split(y_train, train_size=0.80, shuffle=True, random_state=1)

print(f"movie/item training data shape: {item_train.shape}")

print(f"movie/item test data shape: {item_test.shape}")

movie/item training data shape: (46549, 17)

movie/item test data shape: (11638, 17)

- Now, let’s construct our neural network as the architecture displayed above. It will have two networks that are combined by a dot product.

# GRADED_CELL

# UNQ_C1

num_outputs = 32

tf.random.set_seed(1)

user_NN = tf.keras.models.Sequential([

tf.keras.layers.Dense(256, activation='relu'),

tf.keras.layers.Dense(128, activation='relu'),

tf.keras.layers.Dense(num_outputs),

])

item_NN = tf.keras.models.Sequential([

tf.keras.layers.Dense(256, activation='relu'),

tf.keras.layers.Dense(128, activation='relu'),

tf.keras.layers.Dense(num_outputs),

])

# create the user input and point to the base network

input_user = tf.keras.layers.Input(shape=(num_user_features))

vu = user_NN(input_user)

vu = tf.linalg.l2_normalize(vu, axis=1)

# create the item input and point to the base network

input_item = tf.keras.layers.Input(shape=(num_item_features))

vm = item_NN(input_item)

vm = tf.linalg.l2_normalize(vm, axis=1)

# compute the dot product of the two vectors vu and vm

output = tf.keras.layers.Dot(axes=1)([vu, vm])

# specify the inputs and output of the model

model = Model([input_user, input_item], output)

model.summary()

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 14)] 0

__________________________________________________________________________________________________

input_2 (InputLayer) [(None, 16)] 0

__________________________________________________________________________________________________

sequential (Sequential) (None, 32) 40864 input_1[0][0]

__________________________________________________________________________________________________

sequential_1 (Sequential) (None, 32) 41376 input_2[0][0]

__________________________________________________________________________________________________

tf_op_layer_l2_normalize/Square [(None, 32)] 0 sequential[0][0]

__________________________________________________________________________________________________

tf_op_layer_l2_normalize_1/Squa [(None, 32)] 0 sequential_1[0][0]

__________________________________________________________________________________________________

tf_op_layer_l2_normalize/Sum (T [(None, 1)] 0 tf_op_layer_l2_normalize/Square[0

__________________________________________________________________________________________________

tf_op_layer_l2_normalize_1/Sum [(None, 1)] 0 tf_op_layer_l2_normalize_1/Square

__________________________________________________________________________________________________

tf_op_layer_l2_normalize/Maximu [(None, 1)] 0 tf_op_layer_l2_normalize/Sum[0][0

__________________________________________________________________________________________________

tf_op_layer_l2_normalize_1/Maxi [(None, 1)] 0 tf_op_layer_l2_normalize_1/Sum[0]

__________________________________________________________________________________________________

tf_op_layer_l2_normalize/Rsqrt [(None, 1)] 0 tf_op_layer_l2_normalize/Maximum[

__________________________________________________________________________________________________

tf_op_layer_l2_normalize_1/Rsqr [(None, 1)] 0 tf_op_layer_l2_normalize_1/Maximu

__________________________________________________________________________________________________

tf_op_layer_l2_normalize (Tenso [(None, 32)] 0 sequential[0][0]

tf_op_layer_l2_normalize/Rsqrt[0]

__________________________________________________________________________________________________

tf_op_layer_l2_normalize_1 (Ten [(None, 32)] 0 sequential_1[0][0]

tf_op_layer_l2_normalize_1/Rsqrt[

__________________________________________________________________________________________________

dot (Dot) (None, 1) 0 tf_op_layer_l2_normalize[0][0]

tf_op_layer_l2_normalize_1[0][0]

==================================================================================================

Total params: 82,240

Trainable params: 82,240

Non-trainable params: 0

__________________________________________________________________________________________________

- Finally, it’s time to use a mean squared error loss and an Adam optimizer

tf.random.set_seed(1)

cost_fn = tf.keras.losses.MeanSquaredError()

opt = keras.optimizers.Adam(learning_rate=0.01)

model.compile(optimizer=opt,

loss=cost_fn)

- Let’s get to training!

tf.random.set_seed(1)

model.fit([user_train[:, u_s:], item_train[:, i_s:]], ynorm_train, epochs=30)

Train on 46549 samples

Epoch 1/30

46549/46549 [==============================] - 6s 122us/sample - loss: 0.1254

Epoch 2/30

46549/46549 [==============================] - 5s 113us/sample - loss: 0.1187

Epoch 3/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.1169

Epoch 4/30

46549/46549 [==============================] - 5s 118us/sample - loss: 0.1154

Epoch 5/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.1142

Epoch 6/30

46549/46549 [==============================] - 5s 114us/sample - loss: 0.1130

Epoch 7/30

46549/46549 [==============================] - 5s 114us/sample - loss: 0.1119

Epoch 8/30

46549/46549 [==============================] - 5s 114us/sample - loss: 0.1110

Epoch 9/30

46549/46549 [==============================] - 5s 114us/sample - loss: 0.1095

Epoch 10/30

46549/46549 [==============================] - 5s 113us/sample - loss: 0.1083

Epoch 11/30

46549/46549 [==============================] - 5s 113us/sample - loss: 0.1073

Epoch 12/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.1066

Epoch 13/30

46549/46549 [==============================] - 5s 113us/sample - loss: 0.1059

Epoch 14/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.1054

Epoch 15/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.1047

Epoch 16/30

46549/46549 [==============================] - 5s 114us/sample - loss: 0.1041

Epoch 17/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.1036

Epoch 18/30

46549/46549 [==============================] - 5s 113us/sample - loss: 0.1030

Epoch 19/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.1027

Epoch 20/30

46549/46549 [==============================] - 5s 113us/sample - loss: 0.1021

Epoch 21/30

46549/46549 [==============================] - 5s 114us/sample - loss: 0.1018

Epoch 22/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.1014

Epoch 23/30

46549/46549 [==============================] - 5s 113us/sample - loss: 0.1010

Epoch 24/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.1006

Epoch 25/30

46549/46549 [==============================] - 5s 116us/sample - loss: 0.1003

Epoch 26/30

46549/46549 [==============================] - 5s 114us/sample - loss: 0.0999

Epoch 27/30

46549/46549 [==============================] - 5s 115us/sample - loss: 0.0997

Epoch 28/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.0991

Epoch 29/30

46549/46549 [==============================] - 5s 113us/sample - loss: 0.0989

Epoch 30/30

46549/46549 [==============================] - 5s 112us/sample - loss: 0.0985

<tensorflow.python.keras.callbacks.History at 0x7fab691f12d0>

- Evaluate the model to determine the loss on the test data.

model.evaluate([user_test[:, u_s:], item_test[:, i_s:]], ynorm_test)

11638/11638 [==============================] - 0s 33us/sample - loss: 0.1045

0.10449595100221243

- Making predictions is the next step, first lets start off by predicting for a new user:

new_user_id = 5000

new_rating_ave = 1.0

new_action = 1.0

new_adventure = 1

new_animation = 1

new_childrens = 1

new_comedy = 5

new_crime = 1

new_documentary = 1

new_drama = 1

new_fantasy = 1

new_horror = 1

new_mystery = 1

new_romance = 5

new_scifi = 5

new_thriller = 1

new_rating_count = 3

user_vec = np.array([[new_user_id, new_rating_count, new_rating_ave,

new_action, new_adventure, new_animation, new_childrens,

new_comedy, new_crime, new_documentary,

new_drama, new_fantasy, new_horror, new_mystery,

new_romance, new_scifi, new_thriller]])

- Let’s look at the top-rated movies for the new user:

# generate and replicate the user vector to match the number movies in the data set.

user_vecs = gen_user_vecs(user_vec,len(item_vecs))

# scale the vectors and make predictions for all movies. Return results sorted by rating.

sorted_index, sorted_ypu, sorted_items, sorted_user = predict_uservec(user_vecs, item_vecs, model, u_s, i_s,

scaler, scalerUser, scalerItem, scaledata=scaledata)

print_pred_movies(sorted_ypu, sorted_user, sorted_items, movie_dict, maxcount = 10)

y_p movie id rating ave title genres

4.86762 64969 3.61765 Yes Man (2008) Comedy

4.86692 69122 3.63158 Hangover, The (2009) Comedy|Crime

4.86477 63131 3.625 Role Models (2008) Comedy

4.85853 60756 3.55357 Step Brothers (2008) Comedy

4.85785 68135 3.55 17 Again (2009) Comedy|Drama

4.85178 78209 3.55 Get Him to the Greek (2010) Comedy

4.85138 8622 3.48649 Fahrenheit 9/11 (2004) Documentary

4.8505 67087 3.52941 I Love You, Man (2009) Comedy

4.85043 69784 3.65 Brüno (Bruno) (2009) Comedy

4.84934 89864 3.63158 50/50 (2011) Comedy|Drama

- Now let’s make predictions for an existing user:

uid = 36

# form a set of user vectors. This is the same vector, transformed and repeated.

user_vecs, y_vecs = get_user_vecs(uid, scalerUser.inverse_transform(user_train), item_vecs, user_to_genre)

# scale the vectors and make predictions for all movies. Return results sorted by rating.

sorted_index, sorted_ypu, sorted_items, sorted_user = predict_uservec(user_vecs, item_vecs, model, u_s, i_s, scaler,

scalerUser, scalerItem, scaledata=scaledata)

sorted_y = y_vecs[sorted_index]

#print sorted predictions

print_existing_user(sorted_ypu, sorted_y.reshape(-1,1), sorted_user, sorted_items, item_features, ivs, uvs, movie_dict, maxcount = 10)

y_p y user user genre ave movie rating ave title genres

3.1 3.0 36 3.00 2.86 Time Machine, The (2002) Adventure

3.0 3.0 36 3.00 2.86 Time Machine, The (2002) Action

2.8 3.0 36 3.00 2.86 Time Machine, The (2002) Sci-Fi

2.3 1.0 36 1.00 4.00 Beautiful Mind, A (2001) Romance

2.2 1.0 36 1.50 4.00 Beautiful Mind, A (2001) Drama

1.6 1.5 36 1.75 3.52 Road to Perdition (2002) Crime

1.6 2.0 36 1.75 3.52 Gangs of New York (2002) Crime

1.5 1.5 36 1.50 3.52 Road to Perdition (2002) Drama

1.5 2.0 36 1.50 3.52 Gangs of New York (2002) Drama

Collaborative Filtering

- Collaborative filtering is a recommendation system technique used to suggest items to users based on the behaviors and preferences of a broader user group. It operates on the principle that users with similar tastes are likely to enjoy similar items.

A major difference between content-based filtering and collaborative filtering is that collaborative filtering does not use item features and relies exclusively upon users’ historical interactions to make recommendations.

- Collaborative filtering enhances recommendation systems by utilizing similarities between both users and items, enabling cross-user recommendations. This approach allows the system to recommend an item to one user based on the interests of another user with similar preferences. Unlike content-based filtering, collaborative filtering automatically learns embeddings without relying on manually engineered features.

Mechanism of Collaborative Filtering

- Collaborative filtering functions by analyzing user behavior, such as ratings, purchases, or clicks. The system identifies users with similar preferences and recommends items to an individual user based on what others with similar tastes have liked or engaged with.

Example of Collaborative Filtering

- In a movie recommendation system, collaborative filtering would analyze the ratings or reviews provided by users. If a user consistently rates certain movies highly, and those movies have also been highly rated by users with similar preferences, the system would recommend other movies that the user has not yet seen but have been positively rated by those similar users.

Objective of Collaborative Filtering

- The primary goal of a collaborative filtering system is to generate two vectors:

- User Parameter Vector: Captures the user’s tastes.

- Item Feature Vector: Represents certain characteristics or descriptions of the item.

- The system aims to predict how a user might rate an item by calculating the dot product of these two vectors, often with an added bias term. This prediction is then used to generate personalized recommendations.

Advantages of Collaborative Filtering

- Effectiveness in Sparse Data Environments: Collaborative filtering is particularly effective when there is limited information about item attributes, as it relies on user behavior rather than item features.

- Scalability: It performs well in environments with a large and diverse user base, making it suitable for systems with extensive datasets.

- Serendipitous Recommendations: The system can provide unexpected yet relevant recommendations, helping users discover items they might not have found independently.

- No Domain Knowledge Required: It does not require specific knowledge of item features, eliminating the need for domain-specific expertise in engineering these features.

- Efficiency: Collaborative filtering models are generally faster and less computationally intensive than content-based filtering, as they do not require analysis of item-specific attributes.

Disadvantages of Collaborative Filtering

- Data Scarcity: Collaborative filtering can struggle in situations where there is insufficient data on user behavior, limiting its effectiveness.

- Unique Preferences: The system may have difficulty making accurate recommendations for users with highly unique or niche preferences, as it relies on similarities between users.

- Cold Start Problem: New items or users with limited data pose challenges for generating accurate recommendations due to the lack of historical interaction data.

- Difficulty Handling Niche Interests: The approach may struggle to cater to users with highly specialized or niche interests, as it depends on finding comparable users with similar preferences.

Types of Collaborative Filtering

- User-Based Collaborative Filtering: This method focuses on identifying users with similar preferences or behaviors. The system recommends items to a user by considering the items liked or interacted with by other users who share similar tastes. It is highly personalized but may face scalability challenges as the user base grows.

- Item-Based Collaborative Filtering: This approach emphasizes the relationships between items rather than users. It recommends items to a user based on the similarity of items that the user has previously interacted with. Item-based filtering is generally more scalable and effective when dealing with large user bases, though it may be less personalized than user-based filtering.

User-based Collaborative Filtering

- User-based Collaborative Filtering is a recommendation technique that suggests items to a user based on the preferences of similar users, operating on the principle that if users have agreed on items in the past, they are likely to agree on other items in the future. This technique is powerful for generating personalized recommendations, but its effectiveness can be hampered by challenges such as scalability, sparsity, and the cold start problem. To address these issues, various optimizations and hybrid models, which combine user-based and item-based collaborative filtering, can be employed, leading to more efficient and accurate recommendation systems.

- Here’s a detailed explanation of how user-based collaborative filtering works:

Data Collection

- User-Item Interaction Matrix: The fundamental data structure in user-based collaborative filtering is the user-item interaction matrix. In this matrix:

- Rows represent users.

- Columns represent items.

- The entries in the matrix represent a user’s interaction with an item, such as a rating, purchase, click, or like.

- Example:

| User | Item A | Item B | Item C | Item D |

|---|---|---|---|---|

| $$U_1$$ | 5 | 3 | 4 | ? |

| $$U_2$$ | 4 | 1 | 5 | 2 |

| $$U_3$$ | ? | 5 | 4 | 1 |

| $$U_4$$ | 2 | 4 | ? | 5 |

- In this matrix, the question mark (

?) indicates unknown or missing ratings that the system needs to predict.

Calculate Similarity Between Users

- Purpose: To find users who have similar tastes or preferences as the active user (the user for whom we want to make recommendations).

- Methods: Common similarity measures include:

- Cosine Similarity: Measures the cosine of the angle between two user vectors (rows in the matrix).

- Pearson Correlation Coefficient: Measures the linear correlation between the users’ ratings, considering the tendency of users to rate items higher or lower overall.

- Jaccard Similarity: Measures the similarity based on the intersection over the union of the sets of items rated by both users.

- Example Calculation (Cosine Similarity):

- For two users \(U_1\) and \(U_2\), their similarity is calculated as: \(\text{Similarity}(U_1, U_2) = \frac{\sum_{i} r_{U_1,i} \times r_{U_2,i}}{\sqrt{\sum_{i} r_{U_1,i}^2} \times \sqrt{\sum_{i} r_{U_2,i}^2}}\)

- Here, \(r_{U_1,i}\) is the rating given by user \(U_1\) to item \(i\), and similarly for \(U_2\).

Identify Nearest Neighbors

- Nearest Neighbors: Once similarities are calculated, the system identifies the “nearest neighbors” to the active user. These are the users who have the highest similarity scores with the active user.

- Selecting K-Nearest Neighbors: Often, the top \(K\) users with the highest similarity scores are selected. This value of \(K\) is a parameter that can be tuned based on the performance of the recommendation system.

Generate Predictions

- Weighted Average: To predict the rating for an item that the active user has not yet rated, a weighted average of the ratings given by the nearest neighbors (“similar users”) to that item is computed. The weights are the similarity scores between the active user and the similar users/neighbors.

- Formula: \(\text{Predicted Rating for User } U \text{ on Item } I = \frac{\sum_{\text{neighbors}} \text{Similarity}(U, \text{Neighbor}) \times \text{Rating}(\text{Neighbor}, I)}{\sum_{\text{neighbors}} \text{Similarity}(U, \text{Neighbor})}\)

- Example:

- If we want to predict \(U_1\)’s rating for item \(D\), and we know \(U_2\) and \(U_3\) are \(U_1\)’s nearest neighbors, we calculate the predicted rating as a weighted sum of \(U_2\) and \(U_3\)’s ratings for item \(D\), using the similarity scores as weights.

Recommend Items

- Top-\(N\) Recommendations: After predicting the ratings for all items the active user has not interacted with, the system can recommend the top \(N\) items with the highest predicted ratings.

- These are the items that the system predicts the user will like the most based on the preferences of similar users.

Implementation Considerations

- Sparsity: The user-item matrix is often sparse, meaning most users have interacted with only a small fraction of the available items. This sparsity can make it challenging to find meaningful similarities between users.

- Cold Start Problem: New users with little interaction history can be difficult to recommend items to, as there isn’t enough data to find similar users.

- Scalability: User-based collaborative filtering can be computationally expensive as it requires calculating similarities between all pairs of users. As the number of users grows, this can become infeasible without optimizations.

Optimization Strategies

- Dimensionality Reduction: Techniques like Singular Value Decomposition (SVD) can reduce the dimensionality of the user-item matrix, making similarity calculations more efficient.

- Clustering: Users can be clustered based on their preferences, and similarities can be calculated within clusters rather than across all users.

- Incremental Updates: Instead of recalculating similarities from scratch whenever new data comes in, the system can incrementally update the similarity scores.

Advantages of User-Based collaborative filtering

- Simplicity: The approach is conceptually simple and easy to implement.

- Intuitive: Recommendations are based on actual user behavior, making the system’s choices easy to explain.

Disadvantages of User-Based collaborative filtering

- Scalability Issues: As the number of users grows, the computational cost of finding similar users increases significantly.

- Cold Start Problem: New users without much interaction history are hard to recommend items to.

- Limited Diversity: If the user base is homogenous, recommendations may lack diversity, as they are based on what similar users liked.

Example Workflow

- Suppose user \(U_1\) has watched and liked several movies. The system identifies users \(U_2\) and \(U_3\) who have similar tastes (based on their movie ratings). \(U_1\) hasn’t rated movie \(D\) yet, but \(U_2\) and \(U_3\) have rated it highly. The system predicts that \(U_1\) will also like movie \(D\) and recommends it to \(U_1\).

Item-based Collaborative Filtering

- Item-based Collaborative Filtering is a robust recommendation technique that focuses on the relationships between items rather than users. The central idea is to recommend items that are similar to the ones a user has liked or interacted with in the past, based on the assumption that if a user liked one item, they are likely to enjoy similar items. This method works by finding items that are often rated or interacted with similarly by users, making it particularly effective in scenarios where item interactions are stable and the system needs to scale to a large number of users. While it shares challenges with user-based collaborative filtering, such as sparsity and cold start problems, item-based collaborative filtering often provides more stable and scalable recommendations, especially in environments with a large user base.

- Here’s a detailed explanation of how item-based collaborative filtering works:

Data Collection

- User-Item Interaction Matrix: The starting point is the user-item interaction matrix, where:

- Rows represent users.

- Columns represent items.

- Entries in the matrix represent the interaction between users and items, such as ratings, purchases, or clicks.

- Example:

| User | Item A | Item B | Item C | Item D |

|---|---|---|---|---|

| $$U_1$$ | 5 | 3 | 4 | ? |

| $$U_2$$ | 4 | 1 | 5 | 2 |

| $$U_3$$ | 2 | 4 | 1 | 5 |

| $$U_4$$ | 3 | 5 | ? | 4 |

- In this matrix, the question mark (

?) indicates unknown or missing ratings that the system may need to predict.

Calculate Similarity Between Items

- Purpose: To identify items that are similar based on user interactions. The assumption is that items that are rated similarly by users are likely to be similar in content or appeal.

- Methods: Common similarity measures include:

- Cosine Similarity: Measures the cosine of the angle between two item vectors (columns in the matrix), which effectively compares the direction of the vectors.

- Pearson Correlation: Measures the linear correlation between two item vectors, considering the users’ rating tendencies (e.g., some users rate items more generously than others).

- Jaccard Similarity: Measures the similarity between two sets of users who have rated or interacted with both items, based on the intersection over the union of these sets.

- Example Calculation (Cosine Similarity):

- For two items \(A\) and \(B\), their similarity is calculated as: \(\text{Similarity}(A, B) = \frac{\sum_{i} r_{i,A} \times r_{i,B}}{\sqrt{\sum_{i} r_{i,A}^2} \times \sqrt{\sum_{i} r_{i,B}^2}}\)

- Here, \(r_{i,A}\) is the rating given by user \(i\) to item \(A\), and similarly for \(B\).

Build the Item-Item Similarity Matrix

- Similarity Matrix: After calculating the similarity scores for all pairs of items, an item-item similarity matrix is constructed. This matrix helps the system understand which items are similar to each other.

- Structure: In this matrix:

- Rows and columns represent items.

- The entries represent the similarity score between pairs of items.

- Example:

| Item A | Item B | Item C | Item D | |

|---|---|---|---|---|

| Item A | 1.00 | 0.92 | 0.35 | 0.45 |

| Item B | 0.92 | 1.00 | 0.20 | 0.30 |

| Item C | 0.35 | 0.20 | 1.00 | 0.85 |

| Item D | 0.45 | 0.30 | 0.85 | 1.00 |

- Here, the similarity between Item \(A\) and Item \(B\) is 0.92, indicating that they are very similar based on user ratings.

Identify Nearest Neighbors

- Rather than building an item-item similarity matrix, a more scalable implementation is to use Approximate Nearest Neighbors (ANN) to quickly identify the most similar items. ANN methods like Locality-Sensitive Hashing (LSH) or Hierarchical Navigable Small World Graphs (HNSW) can significantly reduce the computational cost while maintaining high accuracy.

- Nearest Neighbors: Once similarities are calculated, the system identifies the “nearest neighbors” to the target item. These are the items that have the highest similarity scores with the active item.

- Selecting K-Nearest Neighbors: The top \(K\) items with the highest similarity scores are selected. This value of \(K\) is a parameter that can be tuned based on the performance of the recommendation system.

Generate Predictions

- Prediction Process: To predict a user’s rating or interest in an item they haven’t interacted with yet, the system uses the user’s ratings of similar items. The idea is to give more weight to items that are more similar to the target item.

- Weighted Average: The predicted rating for an item is calculated as a weighted average of the user’s ratings for similar items, with the weights being the similarity scores.

- Formula: \(\text{Predicted Rating for User } U \text{ on Item } I = \frac{\sum_{\text{similar items } J} \text{Similarity}(I, J) \times \text{Rating}(U, J)}{\sum_{\text{similar items } J} \text{Similarity}(I, J)}\)

- Example:

- Suppose we want to predict \(U_1\)’s rating for Item \(D\). If Items \(A\) and \(C\) are similar to Item \(D\) and \(U_1\) has already rated them, the predicted rating for Item \(D\) would be a weighted average of \(U_1\)’s ratings for Items \(A\) and \(C\), weighted by their similarity to Item \(D\).

Recommend Items

- Top-\(N\) Recommendations: After predicting the ratings for all items that the user hasn’t interacted with, the system can recommend the top \(N\) items with the highest predicted ratings.

- Filtering: The system typically filters out items that the user has already rated or interacted with to ensure that only new items are recommended.

Implementation Considerations

- Sparsity: Similar to user-based collaborative filtering, the user-item matrix in item-based collaborative filtering is often sparse. However, since item similarities are typically more stable and less sensitive to new data than user similarities, item-based collaborative filtering can handle sparsity better.

- Scalability: Item-based collaborative filtering is generally more scalable than user-based collaborative filtering because the number of items is often smaller than the number of users, making the item-item similarity matrix easier to compute and store.

- Cold Start Problem: New items with little interaction data may not be effectively recommended because their similarities with other items cannot be accurately calculated.

Optimization Strategies

- Precomputing Similarities: Item-item similarities can be precomputed and stored, allowing for fast retrieval during recommendation generation.

- Clustering: Items can be clustered based on their similarities, and recommendations can be made within clusters, reducing the computational load.

- Dimensionality Reduction: Techniques like Singular Value Decomposition (SVD) can reduce the dimensionality of the item space, making similarity computations more efficient.

Advantages of Item-Based collaborative filtering

- Scalability: More scalable than user-based collaborative filtering, especially in environments with a large number of users.

- Stability: Item similarities are generally more stable over time than user similarities, leading to more consistent recommendations.

- Better Performance with Sparse Data: Performs better than user-based collaborative filtering when the user-item matrix is sparse.

Disadvantages of Item-Based collaborative filtering

- Cold Start Problem: Less effective for recommending new items that have few interactions.

- Limited Discovery: May limit users to items similar to what they have already interacted with, reducing the diversity of recommendations.

Example Workflow

- Suppose \(U_1\) has rated items \(A\) and \(B\) highly. The system identifies items \(C\) and \(D\) as similar to \(A\) and \(B\) based on other users’ ratings. It then predicts \(U_1\)’s potential ratings for items \(C\) and \(D\), and if the predicted rating for item \(D\) is high, it recommends item \(D\) to \(U_1\).

A Movie Recommendation Case-study

-

Consider a movie recommendation system, as described in Google’s course on Recommendation Systems, where the training data comprises a feedback matrix structured as follows:

- Each row corresponds to a user.

- Each column corresponds to an item, in this case, a movie.

-

The feedback regarding movies is categorized into two types:

- Explicit Feedback: Users explicitly rate a movie by assigning it a numerical value, indicating how much they liked it.

- Implicit Feedback: The system infers a user’s interest in a movie based on their viewing behavior.

- For the sake of simplicity, we will assume that the feedback matrix is binary, where a value of 1 denotes interest in the movie.

-

When a user accesses the homepage, the system should recommend movies based on the following criteria:

- The similarity of the recommended movies to those the user has previously enjoyed.

- Movies that have been favored by users with similar preferences.

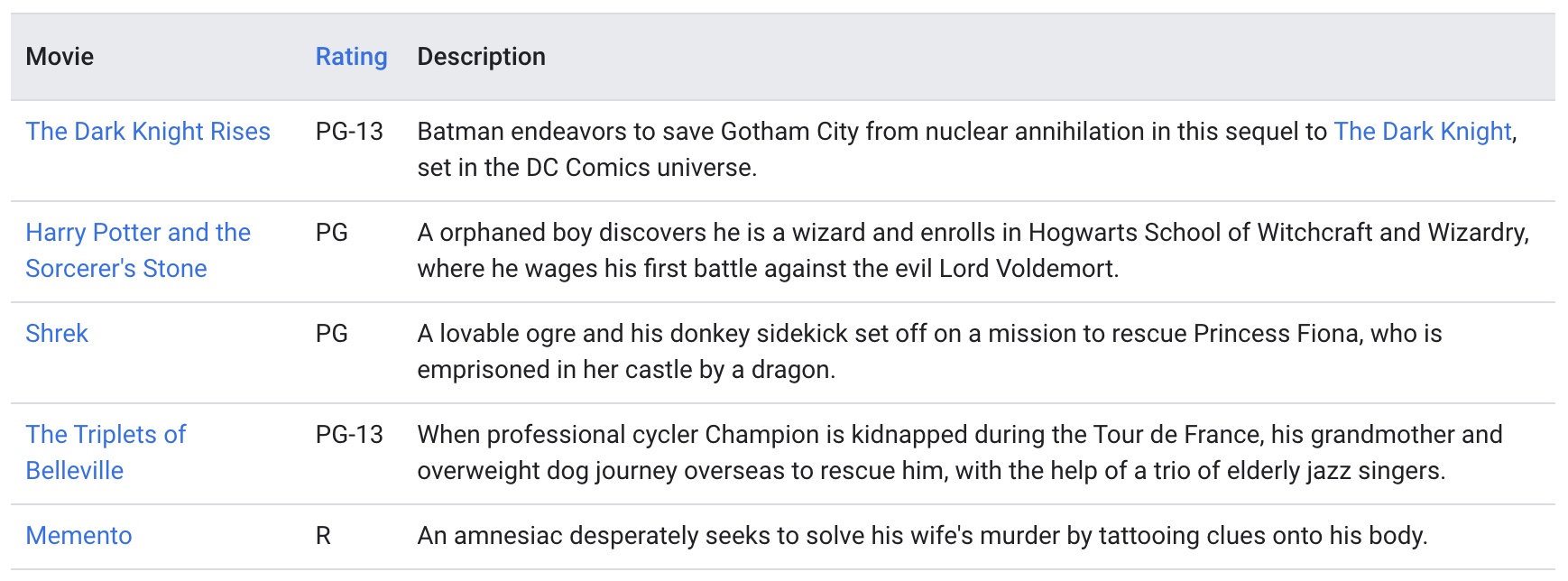

- For illustrative purposes, we will manually engineer some features for the movies described in the table (source) below:

1D Embedding

- Suppose we assign to each movie a scalar in

[-1, 1]that describes whether the movie is for children (negative values) or adults (positive values). Suppose we also assign a scalar to each user in[-1, 1]that describes the user’s interest in children’s movies (closer to -1) or adult movies (closer to +1). The product of the movie embedding and the user embedding should be higher (closer to 1) for movies that we expect the user to like. (source for image below)

- In the diagram below (source), each checkmark identifies a movie that a particular user watched. The third and fourth users have preferences that are well explained by this feature—the third user prefers movies for children and the fourth user prefers movies for adults. However, the first and second users’ preferences are not well explained by this single feature.

2D Embedding

- One feature was not enough to explain the preferences of all users. To overcome this problem, let’s add a second feature: the degree to which each movie is a blockbuster or an art-house movie. With a second feature, we can now represent each movie with the following two-dimensional embedding (image source).

- We again place our users in the same embedding space to best explain the feedback matrix: for each \((user, item)\) pair, we would like the dot product of the user embedding and the item embedding to be close to 1 when the user watched the movie, and to 0 otherwise.

-

Note: We represented both items and users in the same embedding space. This may seem surprising. After all, users and items are two different entities. However, you can think of the embedding space as an abstract representation common to both items and users, in which we can measure similarity or relevance using a similarity metric. In this example, we hand-engineered the embeddings. In practice, the embeddings can be learned automatically, which is the power of collaborative filtering models. In the next two sections, we will discuss different models to learn these embeddings, and how to train them.

-

The collaborative nature of this approach is apparent when the model learns the embeddings. Suppose the embedding vectors for the movies are fixed. Then, the model can learn an embedding vector for the users to best explain their preferences. Consequently, embeddings of users with similar preferences will be close together. Similarly, if the embeddings for the users are fixed, then we can learn movie embeddings to best explain the feedback matrix. As a result, embeddings of movies liked by similar users will be close in the embedding space.

Examples

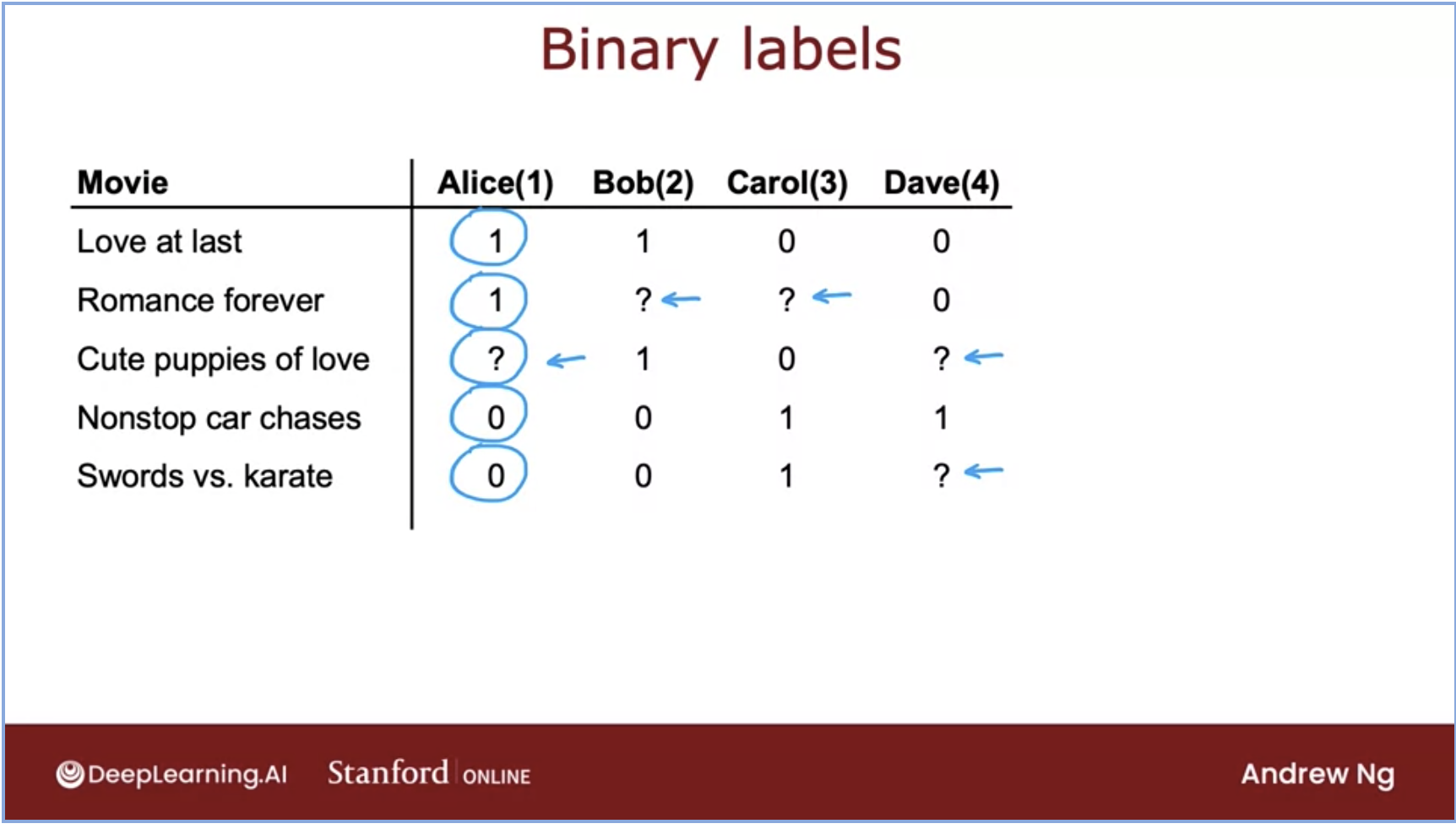

- As a real-world example, let’s look at an example (source) with binary labels signifying engagement (using favorites, likes, and clicks):

- Let’s talk about the meaning of each rating in the above dataset. We can choose what each of our labels mean so here, we have chosen the following:

1: user was engaged after being shown the item.0: user did not engage after being shown the item.?: the item is not yet shown to the user.

Matrix Factorization (MF)

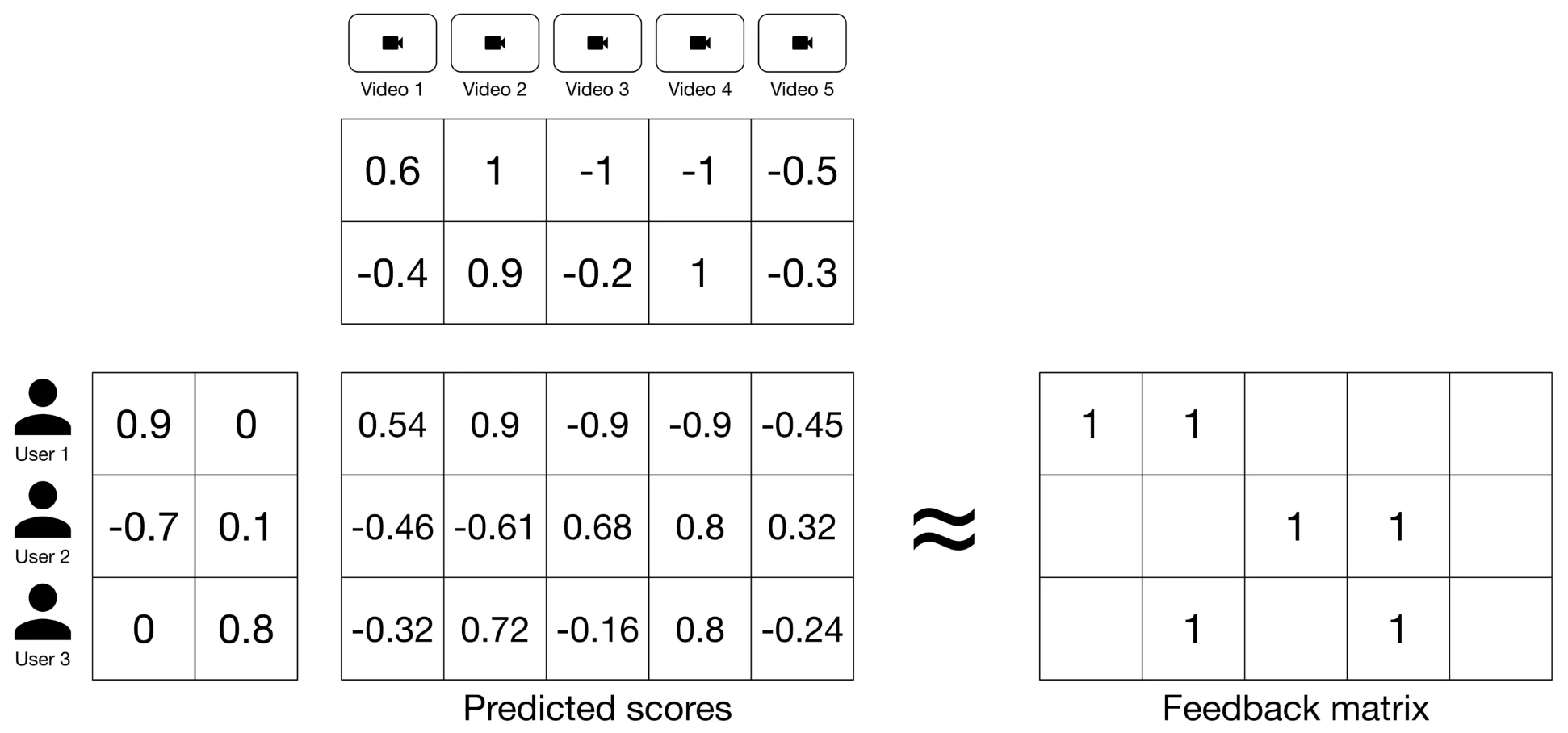

- Matrix Factorization (MF) is a simple embedding model. The algorithm performs a decomposition of the (sparse) user-item feedback matrix into the product of two (dense) lower-dimensional matrices. One matrix represents the user embeddings, while the other represents the item embeddings. In essence, the model learns to map each user to an embedding vector and similarly maps each item to an embedding vector, such that the distance between these vectors reflects their relevance.

- Formally, given a feedback matrix \(\mathrm{A} \in R^{m \times n}\), where \(m\) is the number of users (or queries) and \(n\) is the number of items, the model learns:

- A user embedding matrix \(U \in \mathbb{R}^{m \times d}\), where row \(i\) is the embedding for user \(i\).

- An item embedding matrix \(V \in \mathbb{R}^{n \times d}\), where row \(j\) is the embedding for item \(j\). The image below depicts this (source):

- The embeddings are learned such that the product \(U V^{T}\) is a good approximation of the feedback matrix. Observe that the \((i, j)\) entry of \(U \cdot V^{T}\) is simply the dot product \(\left\langle U_{i}, V_{j}\right\rangle\) of the embeddings of user \(i\) and item \(j\), which you want to be close to \(A_{i, j}\).

Training Matrix Factorization

- As part of training, we aim to produce user and item embedding matrices so that their product is a good approximation of the feedback matrix. The figure below (source) illustrates this concept:

-

To achieve this, MF first randomly initializes the user and item embedding matrices, then iteratively optimizes the embeddings to decrease the loss between the Predicted Scores Matrix and the Feedback Matrix.

-

The key to successful training lies in choosing the right loss function. During training, the algorithm minimizes the difference between the predicted scores (i.e., the dot product of the user and item embeddings) and the actual feedback values. Let’s explore a few loss function options:

Squared Distance over Observed User-Item Pairs

- One intuitive loss function is to minimize the squared distance over the observed \(\langle \text{user}, \text{item} \rangle\) pairs. This loss function measures the sum of the squared distances between the predicted and actual feedback values over all pairs of observed (non-zero values) entries in the feedback matrix. This approach focuses solely on the known interactions between users and items, which are the non-zero entries in the feedback matrix.

- This concept is depicted in the figure below (source):

-

The loss function is defined as:

\[\min _{U \in \mathbb{R}^{m \times d}, V \in \mathbb{R}^{n \times d}} \sum_{(i, j) \in \mathrm{obs}}\left(A_{i j}-\left\langle U_{i}, V_{j}\right\rangle\right)^{2}\]- where, \(A_{ij}\) refers to the entry at row \(i\) and column \(j\) in the feedback matrix (representing the interaction between user \(i\) and item \(j\)), \(U_i\) is the embedding of user \(i\), and \(V_j\) is the embedding of item \(j\). The summation is performed only over the observed pairs. This method ensures that the model optimizes the predictions for pairs that we have data for, rather than attempting to account for unobserved pairs, which might represent irrelevant content or interactions that never occurred.

The Concept of Fold-In

-

A unique aspect of MF is the concept of fold-in, which is employed during inference when the system encounters new users or items that were not part of the training data.

- What is Fold-In?

- Fold-in is the process of incorporating a new user or item into the factorization framework without retraining the entire model. Instead of learning all embeddings from scratch, the existing item embeddings \(V\) (which are precomputed during training) are held fixed, and only the embedding for the new user (or item) is optimized.

- How Does Fold-In Work?

- For a new user, the algorithm initializes their embedding vector randomly and optimizes it iteratively to minimize the loss between their known interactions (with items) and the predictions made by the model using the fixed item embeddings. Similarly, for a new item, the user embeddings are fixed, and the new item’s embedding is adjusted.

- This enables the system to adapt to new data in a computationally efficient manner, making fold-in a critical feature for scalable recommendation systems.

Loss Function Options and Issues with Unobserved Pairs

- However, as discussed earlier, only summing over observed pairs leads to poor embeddings because the loss function doesn’t penalize the model for bad predictions on unobserved pairs. For instance, a matrix of all ones would have zero loss on the training data, but those embeddings would perform poorly on unseen \(\langle \text{user}, \text{item} \rangle\) pairs. This is because a model that doesn’t account for unobserved interactions may struggle to generalize effectively beyond the training data.

- Additionally, unobserved pairs can often act as “soft negatives” rather than strict negative data points. These soft negatives arise when a user doesn’t interact with a particular item. This lack of interaction doesn’t necessarily mean that the user dislikes the content—it could simply indicate that they haven’t encountered it yet. Therefore, treating all unobserved pairs as strictly negative can distort the model’s predictions.

Squared Distance over Both Observed and Unobserved Pairs

-

One solution is to use a loss function that considers both observed and unobserved pairs. For example, by treating unobserved pairs as negative data points and assigning them a zero value in the feedback matrix, we can minimize the squared Frobenius distance between the actual feedback matrix and the predicted matrix, which is the product of the embeddings:

\[\min _{U \in \mathbb{R}^{m \times d}, V \in \mathbb{R}^{n \times d}}\left\|A-U V^{T}\right\|_{F}^{2}\] -

In the above formula, the squared Frobenius norm measures the overall difference between the actual feedback matrix \(A\) and its approximation \(U V^{T}\) across all entries, whether observed or unobserved. The figure below (source) shows this loss function, which computes the sum of squared distances over all entries, both observed and unobserved.

- While this addresses the problem of unobserved pairs by penalizing bad predictions, it introduces a new challenge. Because the feedback matrix is usually sparse, unobserved pairs dominate the observed ones, leading to predictions that are mostly close to zero and, thus, poor generalization performance.

A Weighted Combination of Losses for Observed and Unobserved Pairs

-

A more balanced approach is to use a weighted combination of squared distances for both observed and unobserved pairs. This method combines the advantages of both approaches. The first summation calculates the loss for observed pairs, while the second summation accounts for unobserved pairs, treating them as soft negatives. As shown in the figure below (source), the loss function is weighted by a hyperparameter \(w_0\) that ensures one term doesn’t dominate the other:

\[\min _{U \in \mathbb{R}^{m \times d}, V \in \mathbb{R}^{n \times d}} \sum_{(i, j) \in \mathrm{obs}}\left(A_{i j}-\left\langle U_{i}, V_{j}\right\rangle\right)^{2}+w_{0} \sum_{(i, j) \notin \mathrm{obs}}\left\langle U_{i}, V_{j}\right\rangle^{2}\]

- The first term in the equation represents the loss over observed pairs, where \(A_{ij}\) is the actual feedback for the pair \(\langle \text{user} i, \text{item} j \rangle\), and \(\left\langle U_{i}, V_{j}\right\rangle\) is the predicted interaction score based on the embeddings. The second term applies to unobserved pairs, which are treated as soft negatives. Here, \(w_0\) is the hyperparameter that controls the weight of the unobserved pairs’ contribution to the loss function. By carefully tuning \(w_0\), this loss function effectively balances the contributions of observed and unobserved pairs, leading to better generalization performance on unseen \(\langle \text{user}, \text{item} \rangle\) pairs.

-

This approach has proven successful in practice for recommendation systems, ensuring that both types of pairs—observed and unobserved—are taken into account during training without one set dominating the other.

Practical Considerations for Weighting Observed Pairs

-

In practical applications, you also need to weight the observed pairs carefully. For example, frequent items (such as extremely popular YouTube items) or frequent queries (from heavy users) may dominate the objective function. To correct for this effect, training examples can be weighted to account for item frequency. This modifies the objective function as follows:

\[\sum_{(i, j) \in \mathrm{obs}} w_{i, j}\left(A_{i, j}-\left\langle U_{i}, V_{j}\right\rangle\right)^{2}+w_{0} \sum_{(i, j) \notin \mathrm{obs}}\left\langle U_{i}, V_{j}\right\rangle^{2}\]- In this equation, \(w_{i, j}\) represents the weight assigned to the observed pair \(\langle i, j \rangle\), which could be a function of the frequency of query \(i\) and item \(j\). By weighting frequent items or users differently, the model can avoid being overly influenced by these frequent interactions, ensuring better generalization across all users and items.

Minimizing the Objective Function

- Common methods to minimize this objective function include Stochastic Gradient Descent (SGD) and Weighted Alternating Least Squares (WALS).

Stochastic Gradient Descent (SGD)

-

Stochastic Gradient Descent (SGD) is a general-purpose optimization algorithm widely used to minimize loss functions in machine learning and deep learning. It operates by iteratively updating the model parameters in the opposite direction of the gradient of the loss function with respect to the parameters, thereby carrying out gradient descent. Unlike traditional gradient descent, which computes gradients using the entire dataset, SGD approximates this by using a randomly selected subset (often a single sample or a small batch) at each iteration. This approximation allows SGD to converge faster, especially for large-scale datasets, although it introduces some variance in the updates.

- Steps in SGD:

- Compute Gradient: For each iteration, SGD calculates the gradient of the loss function with respect to the parameters based on a randomly chosen subset of the data.

- Parameter Update: Parameters are updated by moving in the direction opposite to the gradient by a small step size, also known as the learning rate.

- Repeat: This process continues until convergence or for a predefined number of iterations.

- Advantages of SGD:

- Efficiency on Large Datasets: Since it operates on a small subset of data per iteration, SGD is well-suited for large datasets.

- Fast Convergence: With appropriate learning rate tuning, SGD often converges more quickly than traditional gradient descent.

- Generalization: The noise introduced by updating based on a subset of data can help prevent overfitting, potentially improving model generalization.

Weighted Alternating Least Squares (WALS)

- Weighted Alternating Least Squares (WALS) is an efficient algorithm that is particularly well-suited for MF, since it effectively deals with large, sparse matrices common in recommendation systems. WALS can be distributed across multiple nodes, making it efficient for large-scale data.

-

In WALS, the loss function is quadratic in each of the two embedding user and item matrices, i.e., \(U\) and \(V\). WALS performs MF in an alternating fashion: it iteratively fixes one of the two embedding matrices (such as user or item embeddings) and solves a least squares optimization problem for the other. This alternating approach is repeated until convergence, enabling WALS to handle weighted MF objectives.

- Optimization Process in WALS:

- Fix One Matrix: Start by fixing one matrix, say the user matrix, and then optimize the other matrix (e.g., the item matrix). Put simply, WALS works by alternating between fixing one matrix and optimizing the other:

- Fix \(U\) and solve (i.e., optimize) for \(V\).

- Fix \(V\) and solve (i.e., optimize) for \(U\).

- Alternate: After solving for the item matrix, fix it and solve for the user matrix.

- Iterate Until Convergence: Continue alternating between the two matrices until the objective function converges. Each step can be solved exactly by solving a linear system, leading to efficient convergence.

- Fix One Matrix: Start by fixing one matrix, say the user matrix, and then optimize the other matrix (e.g., the item matrix). Put simply, WALS works by alternating between fixing one matrix and optimizing the other:

- Benefits of WALS:

- Weighted Regularization: WALS is designed to handle weighted data, allowing it to apply different weights to observed and unobserved entries, which is crucial for balancing frequent and infrequent interactions in recommendation systems.

- Scalability: The algorithm can be distributed across multiple nodes, making it suitable for large-scale data.

- Tunable Hyperparameter: The weight for unobserved pairs, often denoted by the hyperparameter \(w_0\), is adjustable in WALS, providing flexibility in fine-tuning model performance based on specific dataset characteristics.

SGD vs. WALS

- SGD and WALS have advantages and disadvantages. Review the information below to see how they compare:

SGD

- (+) Very flexible—can use other loss functions.

- (+) Can be parallelized.

- (-) Slower—does not converge as quickly.

- (-) Harder to handle the unobserved entries (need to use negative sampling or gravity).

WALS

- (-) Reliant on Loss Squares only.

- (+) Can be parallelized.

- (+) Converges faster than SGD.

- (+) Easier to handle unobserved entries.

Cost Function for Binary Labels (Regression to Classification)

-

The MF algorithm can be generalized to handle binary labels, where the task is to predict whether a user will interact with a particular item (e.g., click or not click). In this case, the problem transitions from a regression task to a binary classification task. The following image represents the cost function for binary applications (source):

-

By adapting the linear regression-like collaborative filtering algorithm to predict binary labels, we move from regression-based recommendations to handling classification tasks, making the approach suitable for binary interaction prediction.

Example: Learning Process for Embedding Vectors

- The process of learning vectors in MF elegantly combines user preferences and item characteristics into a shared embedding space. By optimizing the embeddings to minimize prediction errors for observed interactions, the system generalizes to predict unseen interactions effectively. This approach forms the backbone of many modern recommendation systems.

- The learning process for embedding vectors in MF is illustrated in the diagram below, which provides a structured view of how embeddings are trained and utilized in a collaborative filtering framework (source).

Components of the System

- Feedback Matrix (\(Y\)):

- Represents user-item interactions, where entries range from 0.5 to 5 (inclusive, in 0.5 increments) for rated items.

- Unrated items are represented with a value of 0.

- Mask Matrix (\(R\)):

- A binary matrix with a value of 1 at positions corresponding to rated items in the feedback matrix (\(Y\)).

- Unrated items are represented by a value of 0, distinguishing observed entries from missing data.

- Embedding Vectors and Biases:

- User Parameters (\(w_{user}\) and bias): Each user is associated with an embedding vector \(w_{user}\) and a bias term that reflect their preferences.

- Item Features (\(x_{movie}\)): Each item (e.g., a movie) is represented by a feature vector \(x_{movie}\), capturing its characteristics.

Training Process

-