Natural Language Processing • Word Vectors/Embeddings

- Overview

- Motivation

- Word Embeddings

- Conceptual Framework of Word Embeddings

- Related: WordNet

- Background: Synonymy, Antonymy, and Polysemy (Multi-Sense)

- Word Embedding Techniques

- Semantic Similarity and its Geometric Interpretation

- Bag of Words (BoW)

- Term Frequency-Inverse Document Frequency (TF-IDF)

- Best Match 25 (BM25)

- Key Components of BM25

- Example

- BM25: Evolution of TF-IDF

- Limitations of BM25

- Parameter Sensitivity

- Non-Handling of Semantic Similarities

- Ineffectiveness with Short Queries or Documents

- Length Normalization Challenges

- Query Term Independence

- Difficulty with Rare Terms

- Performance in Specialized Domains

- Ignoring Document Quality

- Vulnerability to Keyword Stuffing

- Incompatibility with Complex Queries

- Word2Vec

- Motivation

- Theoretical Foundation: Distributional Hypothesis

- Representational Power and Semantic Arithmetic

- Probabilistic Interpretation

- Motivation behind Word2Vec: The Need for Context-based Semantic Understanding

- Core Idea

- Word2Vec Architectures

- Training and Optimization

- Embedding and Semantic Relationships

- Distinction from Traditional Models

- Semantic Nature of Word2Vec Embeddings

- Limitations and Advances

- Additional Resources

- Global Vectors for Word Representation (GloVe)

- fastText

- BERT Embeddings

- Handling Polysemous Words – Key Limitation of BoW, TF-IDF, BM25, Word2Vec, GloVe, and fastText

- Example: BoW, TF-IDF, BM25, Word2Vec, GloVe, fastText, and BERT Embeddings

- Summary: Types of Embeddings

- Comparative Analysis of BoW, TF-IDF, BM25, Word2Vec, GloVe, fastText, and BERT Embeddings

- FAQs

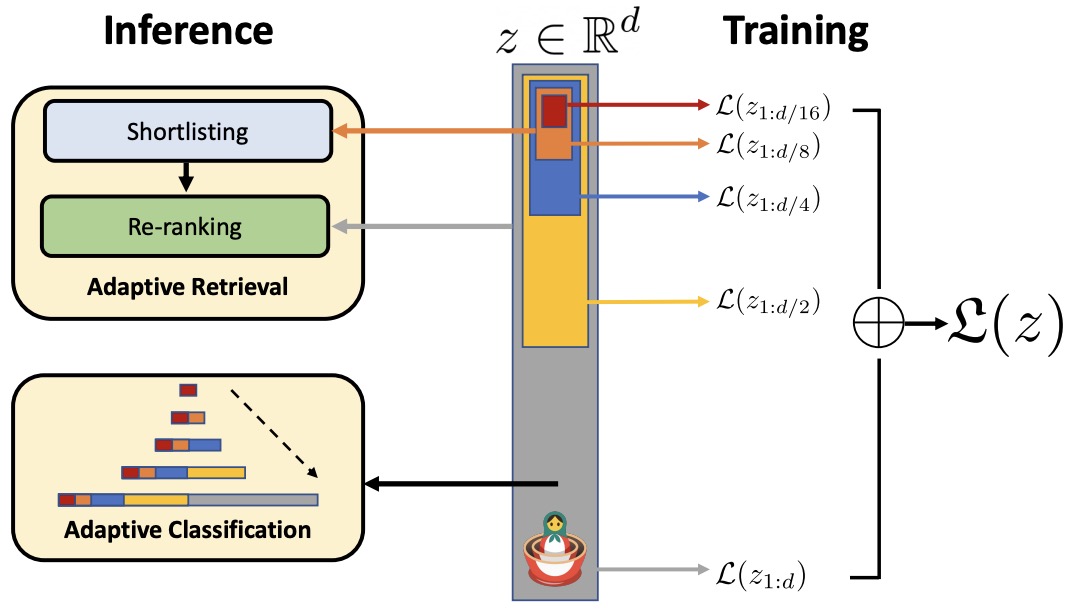

- Related: Matryoshka Representation Learning

- Further Reading

- References

- Citation

Overview

- Word embeddings are a fascinating aspect of modern computational linguistics, particularly in the domain of Natural Language Processing (NLP). These embeddings serve as the foundation for interpreting and processing human language in a format that computers can understand and utilize. Here, we delve into an overview of word embeddings, focusing on their conceptual framework and practical applications.

Motivation

- J.R. Firth’s Insight and Distributional Semantics: The principle of distributional semantics is encapsulated in J.R. Firth’s famous quote (below), which highlights the significance of contextual information in determining word meaning and captures the importance of contextual information in defining word meanings. This principle is a cornerstone in the development of word embeddings.

“You shall know a word by the company it keeps.”

- Role in AI and NLP: Situated at the heart of AI, NLP aims to bridge the gap between human language and machine understanding. The primary motivation for developing word embeddings within NLP is to create a system where computers can not only recognize but also understand and interpret the subtleties and complexities of human language, thus enabling more natural and effective human-computer interactions.

- Advancements in NLP: The evolution of NLP, especially with the integration of deep learning methods, has led to significant enhancements in various language-related tasks, underscoring the importance of continuous innovation in this field.

- Historical Context and Evolution: With over 50 years of development, originating from linguistics, NLP has grown to embrace sophisticated models that generate word embeddings. The motivation for this evolution stems from the desire to more accurately capture and represent the nuances and complexities of human language in digital form.

- Word embeddings as a lens for nuanced language interpretation: Word embeddings, underpinned by the concept of distributional semantics, represent word meanings through vectors of real numbers. While not perfect, this method provides a remarkably effective means of interpreting and processing language in computational systems. The ongoing developments in this field continue to enhance our ability to model and understand natural language in a digital context.

Word Embeddings

- Word embeddings, also known as word vectors, provide a dense, continuous, and compact representation of words, encapsulating their semantic and syntactic attributes. They are essentially real-valued vectors, and the proximity of these vectors in a multidimensional space is indicative of the linguistic relationships between words.

An embedding is a point in an \(N\)-dimensional space, where \(N\) represents the number of dimensions of the embedding.

-

This concept is rooted in the Distributional Hypothesis, which posits that words appearing in similar contexts are likely to bear similar meanings. Consequently, in a high-dimensional vector space, vectors representing semantically related words (e.g., ‘apple’ and ‘orange’, both fruits) are positioned closer to each other compared to those representing semantically distant words (e.g., ‘apple’ and ‘dog’).

-

Word embeddings are constructed by forming dense vectors for each word, chosen in such a way that they resemble vectors of contextually similar words. This process effectively embeds words in a high-dimensional vector space, with each dimension contributing to the representation of a word’s meaning. For example, the concept of ‘banking’ is distributed across all dimensions of its vector, with its entire semantic essence embedded within this multidimensional space.

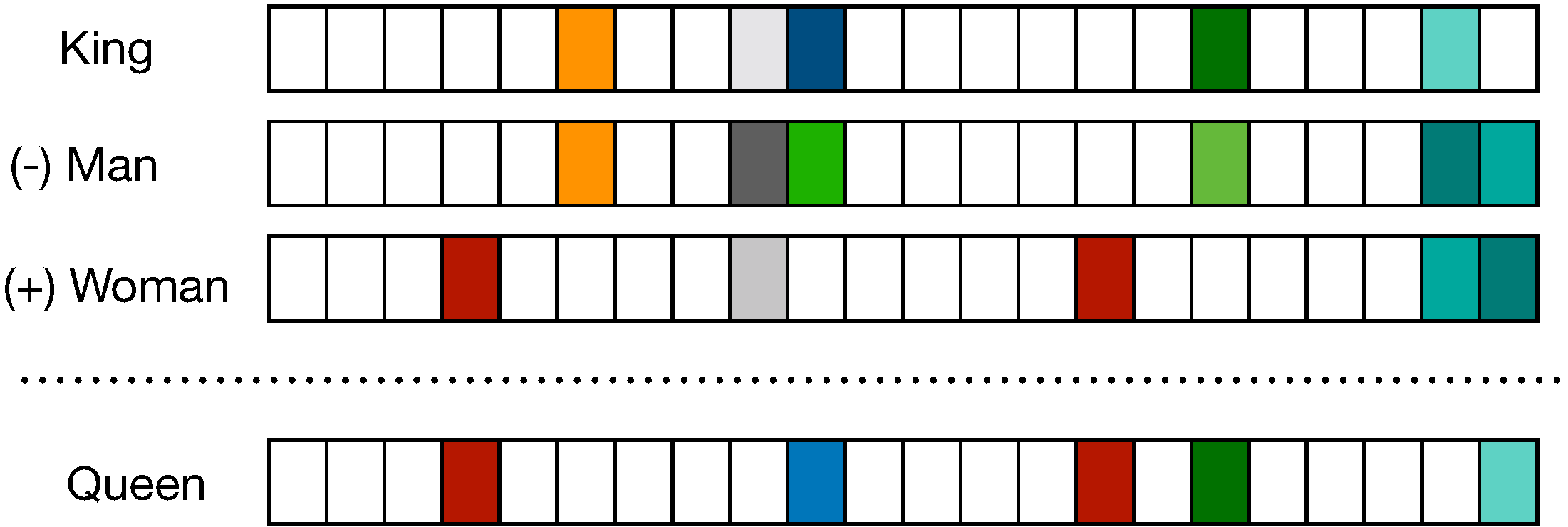

- The term ‘embedding’ in this context refers to the transformation of discrete words into continuous vectors, achieved through word embedding algorithms. These algorithms are designed to convert words into vectors that encapsulate a significant portion of their semantic content. A classic example of the effectiveness of these embeddings is the vector arithmetic that yields meaningful analogies, such as

'king' - 'man' + 'woman' ≈ 'queen'. The figure below (source) shows distributional vectors represented by a \(D\)-dimensional vector where \(D<<V\), where \(V\) is size of the vocabulary.

-

The term ‘embedding’ in this context refers to the transformation of discrete words into continuous vectors, achieved through word embedding algorithms. These algorithms are designed to convert words into vectors that encapsulate a significant portion of their semantic content. A classic example of the effectiveness of these embeddings is the vector arithmetic that yields meaningful analogies, such as

'king' - 'man' + 'woman' ≈ 'queen'. -

Word embeddings are typically pre-trained on large, unlabeled text corpora. This training often involves optimizing auxiliary objectives, like predicting a word based on its contextual neighbors, as demonstrated in Word2Vec by Mikolov et al. (2013). Through this process, the resultant word vectors encapsulate both syntactic and semantic properties of words.

-

The effectiveness of word embeddings lies in their ability to capture similarities between words, making them invaluable in NLP tasks. This is typically done by using similarity measures such as cosine similarity to quantify how close or distant the meanings of different words are in a vector space.

-

Over the years, the creation of word embeddings has generally relied on shallow neural networks rather than deep ones. However, these embeddings have become a fundamental layer in deep learning-based NLP models. This use of embeddings is a key difference between traditional word count models and modern deep learning approaches, contributing to state-of-the-art performance across a variety of NLP tasks (Bengio and Usunier, 2011; Socher et al., 2011; Turney and Pantel, 2010; Cambria et al., 2017).

-

In summary, word embeddings not only efficiently encapsulate the semantic and syntactic nuances of language but also play a pivotal role in enhancing the computational efficiency of numerous NLP tasks.

Conceptual Framework of Word Embeddings

- Continuous Knowledge Representation:

- Nature of LLM Embeddings:

- LLM embeddings are essentially dense, continuous, real-valued vectors situated within a high-dimensional space. For instance, in the case of BERT, these vectors are 768-dimensional. This concept can be analogized to geographical coordinates on a map. Just as longitude and latitude offer specific locational references on a two-dimensional plane, embeddings provide approximations of positions within a multi-dimensional semantic space. This space is constructed from the interconnections among words across vast internet resources.

- Characteristics of Embedding Vectors:

- Since these vectors are continuous, they permit an infinite range of values within specified intervals. This continuity results in a certain ‘fuzziness’ in the embeddings’ coordinates, allowing for nuanced and context-sensitive interpretation of word meanings.

- Example of LLM Embedding Functionality:

- Consider the LLM embedding for a phrase like ‘Jennifer Aniston’. This embedding would be a multi-dimensional vector leading to a specific location in a vast ‘word-space’, comprising several billion parameters. Adding another concept, such as ‘TV series’, to this vector could shift its position towards the vector representing ‘Friends’, illustrating the dynamic and context-aware nature of these embeddings. However, this sophisticated mechanism is not without its challenges, as it can sometimes lead to unpredictable or ‘hallucinatory’ outputs.

Related: WordNet

- One of the initial attempts to digitally encapsulate a word’s meaning was through the development of WordNet. WordNet functioned as an extensive thesaurus, encompassing a compilation of synonym sets and hypernyms, the latter representing a type of hierarchical relationship among words.

- Despite its innovative approach, WordNet encountered several limitations:

- Inefficacy in capturing the full scope of word meanings.

- Inadequacy in reflecting the subtle nuances associated with words.

- An inability to incorporate evolving meanings of words over time.

- Challenges in maintaining its currency and relevance in an ever-changing linguistic landscape.

- Moreover, WordNet employed the principles of distributional semantics, which posits that a word’s meaning is largely determined by the words that frequently appear in close proximity to it.

- Subsequently, the field of NLP witnessed a paradigm shift with the advent of word embeddings. These embeddings marked a significant departure from the constraints of traditional lexical databases like WordNet. Unlike its predecessors, word embeddings provided a more dynamic and contextually sensitive approach to understanding language. By representing words as vectors in a continuous vector space, these embeddings could capture a broader array of linguistic relationships, including semantic similarity and syntactic patterns.

- Today, word embeddings continue to be a cornerstone technology in NLP, powering a wide array of applications and tasks. Their ability to efficiently encode word meanings into a dense vector space has not only enhanced the performance of various NLP tasks but also has laid the groundwork for more advanced language processing and understanding technologies.

Background: Synonymy, Antonymy, and Polysemy (Multi-Sense)

- Synonymy deals with words that share similar meanings, antonymy concerns words with opposite meanings, and polysemy refers to a single word carrying multiple related meanings. Together, these three relationships form a core part of lexical semantics — the study of meaning in words and their interrelations. They define how language encodes similarity, contrast, and multiplicity of sense, shaping both communication and interpretation.

Synonymy

- Synonymy refers to the linguistic phenomenon where two or more words have the same or very similar meanings. Synonyms are words that can often be used interchangeably in many contexts, although subtle nuances, connotations, or stylistic preferences might make one more appropriate than another in specific situations.

- Synonymy is a vital aspect of language as it provides speakers with a choice of words, adding richness, variety, and flexibility to expression.

Characteristics of Synonymy

-

Complete Synonymy: This is when two words mean exactly the same thing in all contexts, with no differences in usage or connotation. However, true cases of complete synonymy are extremely rare.

- Example: car and automobile.

-

Partial Synonymy: In most cases, synonyms share similar meanings but might differ slightly in terms of usage, formality, or context.

- Example: big and large are generally synonymous but might be preferred in different contexts (e.g., “big mistake” vs. “large building”).

-

Different Nuances: Even if two words are synonyms, one might carry different emotional or stylistic undertones.

- Example: childish vs. childlike. Both relate to behaving like a child, but childish often has a negative connotation, while childlike tends to be more positive.

-

Dialects and Variations: Synonyms can vary between regions or dialects.

- Example: elevator (American English) and lift (British English).

Antonymy

- Antonymy describes the semantic relationship between words that express opposite or contrasting meanings. It is as fundamental to linguistic structure as synonymy because it defines conceptual boundaries and helps organize meaning across dimensions such as quantity, quality, direction, and emotion. Antonyms play an essential role in how humans perceive, categorize, and describe the world, often occurring as natural pairs that emphasize contrast and polarity.

Types of Antonymy

-

Gradable Antonyms: Words that occupy opposite ends of a continuous scale or spectrum.

- Example: hot and cold, happy and sad, tall and short.

- These antonyms allow intermediate degrees (warm, lukewarm, neutral) and can be intensified or diminished using degree modifiers (very cold, quite happy). Importantly, negating one term doesn’t imply the other (not hot ≠ cold).

-

Complementary Antonyms: Pairs where the existence of one necessarily implies the absence of the other — there is no middle ground.

- Example: alive vs. dead, present vs. absent, true vs. false.

- These opposites are binary and mutually exclusive within their conceptual domain.

-

Relational (Conversive) Antonyms: Words that express reciprocal relationships, where one implies the other from a different perspective.

- Example: buy vs. sell, parent vs. child, teacher vs. student.

- The contrast here arises from role reversal rather than direct negation.

-

Directional Antonyms: Words expressing movement or orientation in opposite directions.

- Example: up vs. down, enter vs. exit, rise vs. fall.

Linguistic and Cognitive Role

- Antonymy defines semantic contrast, allowing speakers to express differentiation and opposition, which are crucial to reasoning and classification.

- It contributes to cognitive structuring, framing concepts along continua of meaning and helping categorize experience (e.g., light–dark, good–bad, success–failure).

- In computational linguistics and NLP, antonymy presents a notable challenge: while antonyms are semantically related, they exhibit negative correlation in meaning. Embedding models, which rely on co-occurrence statistics, often mistakenly place antonyms near each other in vector space because they appear in similar syntactic contexts (e.g., hot and cold co-occur with temperature).

- Therefore, distinguishing opposition from similarity remains a crucial goal in developing semantically aware models.

Polysemy (Multi-Sense)

- Polysemy occurs when a single word or expression has multiple related meanings or senses that share a conceptual or historical connection. Unlike homonymy, where words share the same spelling or pronunciation but have entirely unrelated meanings (e.g., bat — the flying mammal vs. bat — the sports implement), polysemy captures how one lexical form can extend its meaning through metaphor, metonymy, or functional association.

Characteristics of Polysemy

-

Multiple Related Meanings: A polysemous word carries several senses that stem from a shared semantic root or conceptual metaphor.

-

Example: Bank can refer to:

- a financial institution (I deposited money in the bank),

- the side of a river (We walked along the river bank).

-

Despite differing domains, both meanings involve the concept of accumulation — of money or land — showing a conceptual link rather than arbitrary coincidence.

-

-

Semantic Extension: New meanings of polysemous words often evolve through metaphorical or functional extensions of an existing sense.

-

Example: Head:

- Literal: part of the human body (She nodded her head),

- Metaphorical: leader of a group (the head of the company),

- Functional: the front or top of something (the head of the line).

-

Each new sense maintains a logical or spatial connection to the core concept of top or control.

-

-

Context-Dependent Interpretation: The correct sense of a polysemous word depends on the context in which it appears.

-

Example: Run:

- Movement (She runs every morning),

- Operation (The engine runs smoothly),

- Management (He runs the business).

-

The surrounding words and syntax determine which sense is activated.

-

-

Cognitive Efficiency: Polysemy demonstrates the economy of language, where existing lexical forms are reused for conceptually related meanings rather than inventing new words for every nuance. This flexibility enhances communication while minimizing vocabulary load.

Linguistic and Computational Relevance

- In linguistics, polysemy illustrates how meaning evolves through metaphor, metonymy, and conceptual mapping, making it central to studies of semantic change.

- In computational linguistics and NLP, polysemy introduces word sense ambiguity — a major challenge in embedding and translation models. Traditional word embeddings like Word2Vec assign one vector per word, merging multiple senses (e.g., bank as finance and geography), while contextual models like BERT dynamically adjust meaning based on context, successfully distinguishing different senses of the same word.

Key Differences Between Synonymy, Antonymy, and Polysemy

-

Synonymy involves different words that have similar or identical meanings.

- Example: happy and joyful both convey the sense of positive emotion, differing mainly in tone or intensity.

- Synonyms often cluster around the same semantic field, allowing subtle variation in expression without changing the fundamental meaning.

-

Antonymy involves different words that express opposite or contrasting meanings.

- Example: increase vs. decrease, love vs. hate.

- Unlike synonyms, antonyms establish a semantic axis of contrast, defining boundaries of meaning (e.g., hot–cold, true–false). This contrast helps structure conceptual domains in a way that allows gradation and polarity.

-

Polysemy involves a single word that carries multiple related meanings.

- Example: bright can mean intelligent or full of light.

- Polysemy reflects the dynamic evolution of meaning, showing how words adapt across contexts while maintaining a conceptual link between senses.

Comparative Analysis

| Aspect | Synonymy | Antonymy | Polysemy |

|---|---|---|---|

| Number of Words | Two or more different words | Two or more different words | One word with multiple meanings |

| Meaning Relationship | Similar or identical | Opposite or contrasting | Related but distinct |

| Example | begin / start | light / dark | paper (material / essay) |

| Function in Language | Adds expressiveness and variety | Defines contrast and logical opposition | Enables flexibility and metaphorical extension |

| Challenge in NLP | Identifying subtle contextual preference | Detecting oppositional relations despite similar contexts | Distinguishing multiple senses in one vector representation |

- In summary, synonymy enriches language through variation, antonymy structures meaning through opposition, and polysemy fuels adaptability and semantic evolution. Together, they define the intricate web of relationships that make natural language both expressive and conceptually organized.

Why Are Synonymy, Antonymy, and Polysemy Important?

- Synonymy enriches language by giving speakers multiple ways to express the same concept, allowing for stylistic variation, precision, and emotional nuance. It underpins paraphrasing, synonym substitution, and diversity in expression.

- Antonymy provides the structural backbone of contrast in meaning, enabling logical reasoning, polarity, and comparison. It helps define categorical boundaries (e.g., good vs. bad, success vs. failure) and sharpens conceptual distinctions in discourse.

-

Polysemy reflects the evolutionary adaptability of language. Words develop multiple meanings over time through metaphorical, cultural, or functional extensions, allowing speakers to describe new ideas without constantly coining new terms.

- Together, these three relationships create the semantic topology of language — the intricate network through which meaning is differentiated, extended, and interconnected.

Challenges

-

Ambiguity: Synonymy, antonymy, and polysemy all introduce potential ambiguity in communication.

- For instance, polysemy can obscure meaning (She banked by the river — financial or geographic sense?), while antonymy can lead to subtle contextual inversions (not happy doesn’t necessarily mean sad).

-

Disambiguation in Language Processing: In linguistics and natural language processing (NLP), determining whether two words are similar (synonyms), opposite (antonyms), or multi-sense (polysemous) remains a central challenge.

- Word embeddings like Word2Vec often capture relatedness rather than true semantic opposition, leading antonyms (hot, cold) to appear close in vector space.

- Contextual models such as BERT address polysemy by dynamically adjusting word meaning based on surrounding text, but fine-grained semantic disambiguation — distinguishing similarity from contrast — remains an open area of research in computational semantics.

Word Embedding Techniques

- Accurately representing the meaning of words is a crucial aspect of NLP. This task has evolved significantly over time, with various techniques being developed to capture the nuances of word semantics.

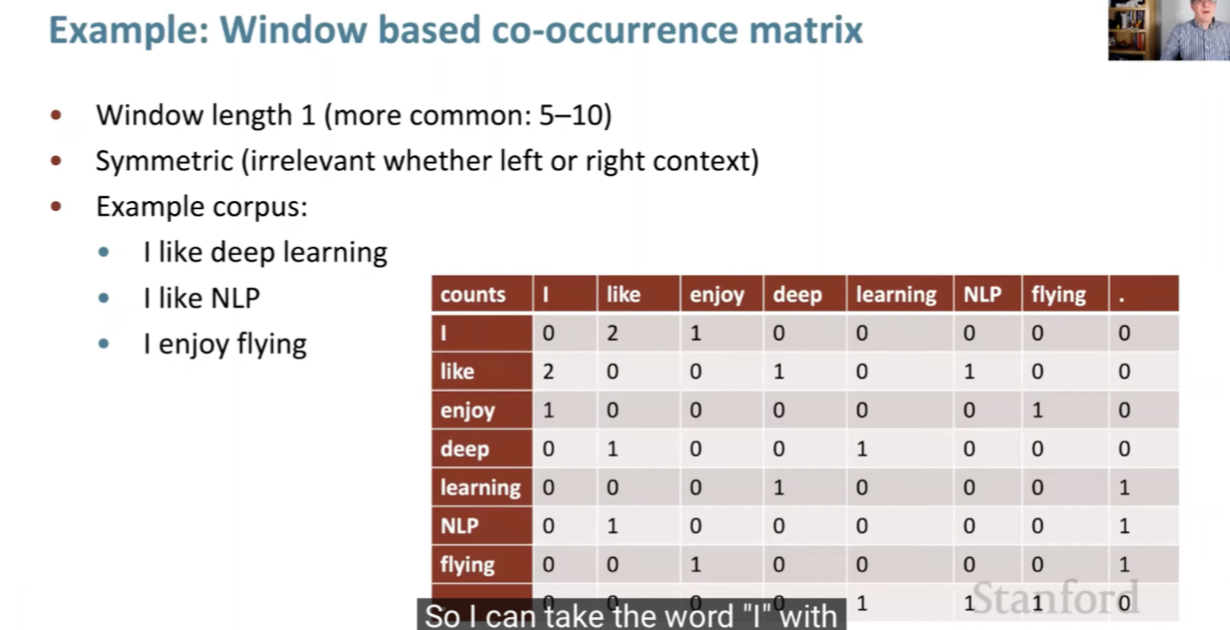

- Count-based methods like TF-IDF and BM25 focus on word frequency and document uniqueness, offering basic information retrieval capabilities.

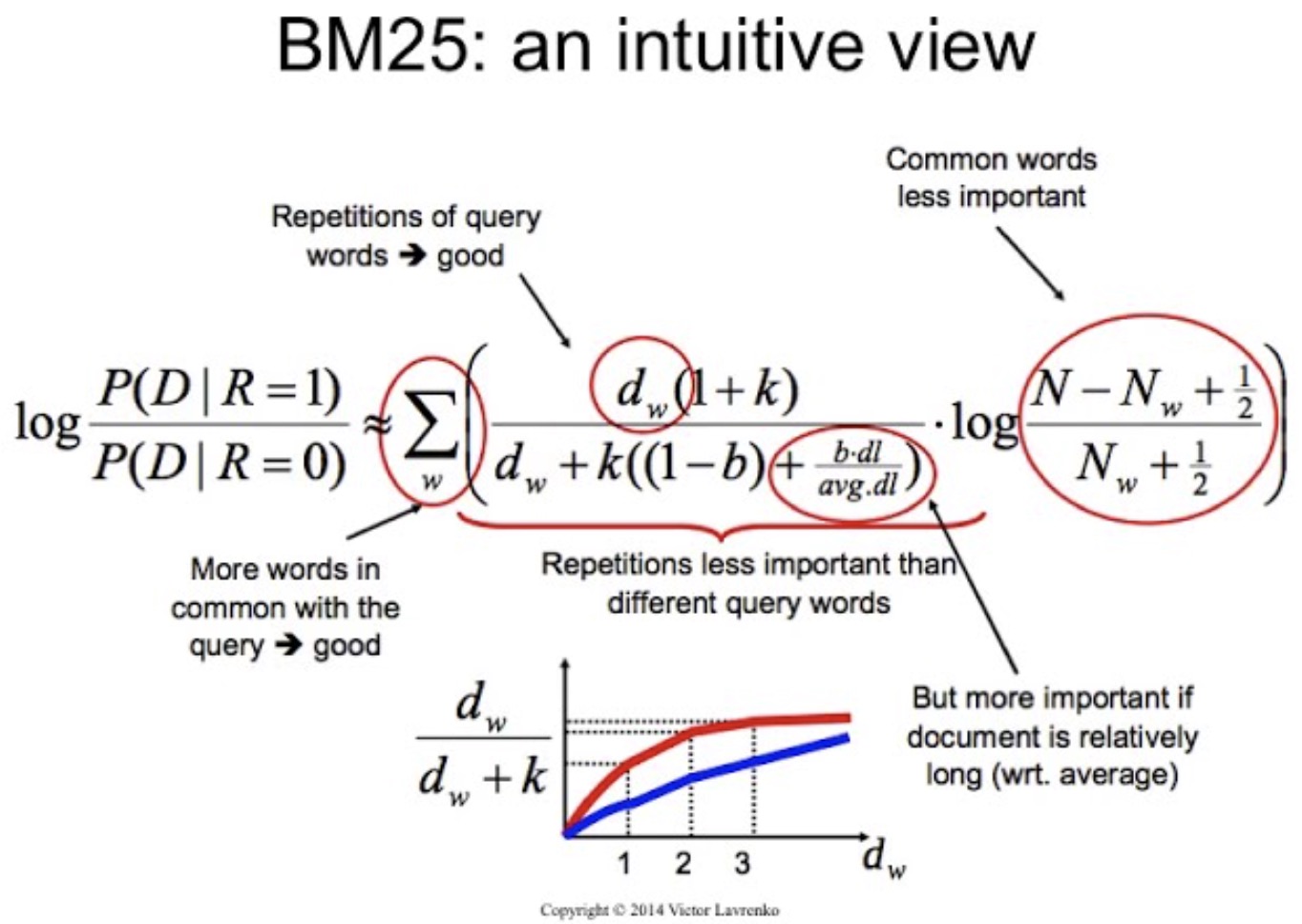

- Co-occurrence-based techniques such as Word2Vec, GloVe, and fastText analyze word contexts in large corpora, capturing semantic relationships and morphological details.

- Contextualized models like BERT and ELMo provide dynamic, context-sensitive embeddings, significantly enhancing language understanding by generating varied representations for words based on their usage in sentences.

-

The details of this taxonomy are as follows:

- Count-Based Techniques (TF-IDF and BM25):

- These methods, originating in information retrieval, rely on counting word occurrences within and across documents.

- TF-IDF identifies words that are frequent in a document but rare in the corpus, emphasizing their discriminative power.

- BM25 improves upon TF-IDF through probabilistic modeling, incorporating document length normalization and term saturation.

- While effective for keyword-based retrieval, these approaches are not semantic—they treat words as independent symbols without capturing meaning or contextual relationships.

- Co-occurrence Based / Static Embedding Techniques (Word2Vec, GloVe, fastText):

- These models mark the transition from frequency-based to predictive and semantic approaches.

- Word2Vec learns embeddings by predicting context words from a target (Skip-gram) or vice versa (CBOW), yielding dense vector representations that reflect semantic similarity.

- GloVe (Global Vectors for Word Representation) combines global co-occurrence statistics with local context learning, encoding both syntactic and semantic regularities.

- fastText extends Word2Vec by incorporating subword information, enabling the model to represent morphological variations and unseen words.

- These embeddings are static—each word has a single vector—but they are inherently semantic, as vector distances correspond to meaning-based similarity.

-

Contextualized / Dynamic Representation Techniques (ELMo, BERT):

- ELMo (Embeddings from Language Models) generates context-dependent embeddings by processing text bidirectionally using deep recurrent neural networks, allowing the same word to have different representations depending on its sentence context.

- BERT (Bidirectional Encoder Representations from Transformers) advances this idea using Transformer architecture, encoding bidirectional dependencies and enabling fine-grained understanding of syntax, semantics, and ambiguity.

- These models produce dynamic semantic embeddings—vectors that adapt to context, capturing multiple senses (polysemy) and resolving ambiguity more effectively than static models.

- Count-Based Techniques (TF-IDF and BM25):

Semantic Similarity and its Geometric Interpretation

-

In the process of learning representations, the models that produce semantic embeddings—namely Word2Vec, GloVe, fastText, BERT, and ELMo—map words into a geometric space such that semantic similarity corresponds to geometric (spatial) proximity in the embedding space. Words that occur in similar linguistic environments cluster together, allowing computational models to reason about meaning quantitatively and perform tasks such as analogy, clustering, and semantic search with human-like sensitivity to contextual meaning. Put simply, in these vector spaces, words with similar meanings or functions occupy nearby regions, and the degree of similarity is measured using geometric metrics such as cosine similarity, dot product, or Euclidean distance.

-

This geometric perspective enables embeddings to encode linguistic relationships as spatial relationships:

- Synonyms (e.g., king, monarch) lie close to each other because they occur in similar contexts.

- Analogical relationships (e.g., man : woman :: king : queen) manifest through vector arithmetic, where semantic relations correspond to geometric offsets.

- Antonyms (e.g., hot, cold) may also appear close due to shared contextual environments, highlighting a limitation of distributional methods that capture relatedness rather than true opposition.

Bag of Words (BoW)

Concept

- Bag of Words (BoW) is a simple and widely used technique for text representation in NLP. It represents text data (documents) as vectors of word counts, disregarding grammar and word order but keeping multiplicity. Each unique word in the corpus is a feature, and the value of each feature is the count of occurrences of the word in the document.

Steps to Create BoW Embeddings

- Tokenization:

- Split the text into words (tokens).

- Vocabulary Building:

- Create a vocabulary list of all unique words in the corpus.

- Vector Representation:

- For each document, create a vector where each element corresponds to a word in the vocabulary. The value is the count of occurrences of that word in the document.

Example

- Consider a corpus with the following two documents:

- “The cat sat on the mat.”

- “The dog sat on the log.”

-

Steps:

- Tokenization:

- Document 1:

["the", "cat", "sat", "on", "the", "mat"] - Document 2:

["the", "dog", "sat", "on", "the", "log"]

- Document 1:

- Vocabulary Building:

- Vocabulary:

["the", "cat", "sat", "on", "mat", "dog", "log"]

- Vocabulary:

- Vector Representation:

- Document 1:

[2, 1, 1, 1, 1, 0, 0] - Document 2:

[2, 0, 1, 1, 0, 1, 1]

- Document 1:

- The resulting BoW vectors are:

- Document 1:

[2, 1, 1, 1, 1, 0, 0] - Document 2:

[2, 0, 1, 1, 0, 1, 1]

- Document 1:

- Tokenization:

Limitations of BoW

- Bag of Words (BoW) embeddings, despite their simplicity and effectiveness in some applications, have several significant limitations. These limitations can impact the performance and applicability of BoW in more complex NLP tasks. Here’s a detailed explanation of these limitations:

Lack of Contextual Information

- Word Order Ignored:

- BoW embeddings do not take into account the order of words in a document. This means that “cat sat on the mat” and “mat sat on the cat” will have the same BoW representation, despite having different meanings.

- Loss of Syntax and Semantics:

- The embedding does not capture syntactic and semantic relationships between words. For instance, “bank” in the context of a financial institution and “bank” in the context of a riverbank will have the same representation.

High Dimensionality

- Large Vocabulary Size:

- The dimensionality of BoW vectors is equal to the number of unique words in the corpus, which can be extremely large. This leads to very high-dimensional vectors, resulting in increased computational cost and memory usage.

- Sparsity:

- Most documents use only a small fraction of the total vocabulary, resulting in sparse vectors with many zero values. This sparsity can make storage and computation inefficient.

Lack of Handling of Polysemy and Synonymy

- Polysemy:

- Polysemous words (same word with multiple meanings) are treated as a single feature, failing to capture their different senses based on context. Traditional word embedding algorithms assign a distinct vector to each word, which makes them unable to account for polysemy. For instance, the English word “bank” translates to two different words in French—”banque” (financial institution) and “banc” (riverbank)—capturing its distinct meanings.

- Synonymy:

- Synonyms (different words with similar meaning) are treated as completely unrelated features. For example, “happy” and “joyful” will have different vector representations even though they have similar meanings.

Fixed Vocabulary

- OOV (Out-of-Vocabulary) Words: BoW cannot handle words that were not present in the training corpus. Any new word encountered will be ignored or misrepresented, leading to potential loss of information.

Feature Independence Assumption

- No Inter-Feature Relationships: BoW assumes that the presence or absence of a word in a document is independent of other words. This independence assumption ignores any potential relationships or dependencies between words, which can be crucial for understanding context and meaning.

Scalability Issues

- Computational Inefficiency: As the size of the corpus increases, the vocabulary size also increases, leading to scalability issues. High-dimensional vectors require more computational resources for processing, storing, and analyzing the data.

No Weighting Mechanism

- Equal Importance: In its simplest form, BoW treats all words with equal importance, which is not always appropriate. Common but less informative words (e.g., “the”, “is”) are treated the same as more informative words (e.g., “cat”, “bank”).

Lack of Generalization

- Poor Performance on Short Texts: BoW can be particularly ineffective for short texts or documents with limited content, where the lack of context and the sparse nature of the vector representation can lead to poor performance.

Examples of Limitations

- Example of Lack of Contextual Information:

- Consider two sentences: “Apple is looking at buying a U.K. startup.” and “Startup is looking at buying an Apple.” Both would have similar BoW representations but convey different meanings.

- Example of High Dimensionality and Sparsity:

- A corpus with 100,000 unique words results in BoW vectors of dimension 100,000, most of which would be zeros for any given document.

Summary

- While BoW embeddings provide a straightforward and intuitive way to represent text data, their limitations make them less suitable for complex NLP tasks that require understanding context, handling large vocabularies efficiently, or dealing with semantic and syntactic nuances. More advanced techniques like TF-IDF, word embeddings (e.g., Word2Vec, GloVe, fastText), and contextual embeddings (e.g., ELMo, BERT) address many of these limitations by incorporating context, reducing dimensionality, and capturing richer semantic information.

Term Frequency-Inverse Document Frequency (TF-IDF)

- Term Frequency-Inverse Document Frequency (TF-IDF) is a statistical measure used to evaluate the importance of a word to a document in a collection or corpus. It is a fundamental technique in text processing that ranks the relevance of documents to a specific query, commonly applied in tasks such as document classification, search engine ranking, information retrieval, and text mining.

- The TF-IDF value increases proportionally with the number of times a word appears in the document, but this is offset by the frequency of the word in the corpus, which helps to control for the fact that some words (e.g., “the”, “is”, “and”) are generally more common than others.

Term Frequency (TF)

- Term Frequency measures how frequently a term occurs in a document. Since every document is different in length, it is possible that a term would appear much more times in long documents than shorter ones. Thus, the term frequency is often divided by the document length (the total number of terms in the document) as a way of normalization:

Inverse Document Frequency (IDF)

- Inverse Document Frequency measures how important a term is. While computing TF, all terms are considered equally important. However, certain terms, like “is”, “of”, and “that”, may appear a lot of times but have little importance. Thus, we need to weigh down the frequent terms while scaling up the rare ones, by computing the following:

Example

Steps to Calculate TF-IDF

- Step 1: TF (Term Frequency): Number of times a word appears in a document divided by the total number of words in that document.

- Step 2: IDF (Inverse Document Frequency): Calculated as

log(N / df), where:Nis the total number of documents in the collection.dfis the number of documents containing the word.

- Step 3: TF-IDF: The product of TF and IDF.

Document Collection

- Doc 1: “The sky is blue.”

- Doc 2: “The sun is bright.”

- Total documents (

N): 2

Calculate Term Frequency (TF)

| Word | TF in Doc 1 ("The sky is blue") | TF in Doc 2 ("The sun is bright") |

|---|---|---|

| the | 1/4 | 1/5 |

| sky | 1/4 | 0/5 |

| is | 1/4 | 1/5 |

| blue | 1/4 | 0/5 |

| sun | 0/4 | 1/5 |

| bright | 0/4 | 1/5 |

Calculate Document Frequency (DF) and Inverse Document Frequency (IDF)

| Word | DF (in how many docs) | IDF (log(N/DF)) |

|---|---|---|

| the | 2 | log(2/2) = 0 |

| sky | 1 | log(2/1) ≈ 0.693 |

| is | 2 | log(2/2) = 0 |

| blue | 1 | log(2/1) ≈ 0.693 |

| sun | 1 | log(2/1) ≈ 0.693 |

| bright | 1 | log(2/1) ≈ 0.693 |

Calculate TF-IDF for Each Word

| Word | TF in Doc 1 | IDF | TF-IDF in Doc 1 | TF in Doc 2 | IDF | TF-IDF in Doc 2 |

|---|---|---|---|---|---|---|

| the | 1/4 | 0 | 0 | 1/5 | 0 | 0 |

| sky | 1/4 | log(2) ≈ 0.693 | (1/4) * 0.693 ≈ 0.173 | 0/5 | log(2) ≈ 0.693 | 0 |

| is | 1/4 | 0 | 0 | 1/5 | 0 | 0 |

| blue | 1/4 | log(2) ≈ 0.693 | (1/4) * 0.693 ≈ 0.173 | 0/5 | log(2) ≈ 0.693 | 0 |

| sun | 0/4 | log(2) ≈ 0.693 | 0 | 1/5 | log(2) ≈ 0.693 | (1/5) * 0.693 ≈ 0.139 |

| bright | 0/4 | log(2) ≈ 0.693 | 0 | 1/5 | log(2) ≈ 0.693 | (1/5) * 0.693 ≈ 0.139 |

Explanation of Table

- The TF column shows the term frequency for each word in each document.

- The IDF column shows the inverse document frequency for each word.

- The TF-IDF columns for Doc 1 and Doc 2 show the final TF-IDF score for each word, calculated as

TF * IDF.

Key Observations

- Words like “the” and “is” have an IDF of 0 because they appear in both documents, making them less distinctive.

- Words like “blue,” “sun,” and “bright” have higher TF-IDF values because they appear in only one document, making them more distinctive for that document.

- The TF-IDF score for “blue” in Doc 1 is thus a measure of its importance in that document, within the context of the given document collection. This score would be different in a different document or a different collection, reflecting the term’s varying importance.

Limitations of TF-IDF

- While TF-IDF is a powerful tool for certain applications, the limitations highlighted below make it less suitable for tasks that require deep understanding of language, such as semantic search, word sense disambiguation, or processing of very short or dynamically changing texts. This has led to the development and adoption of more advanced techniques like word embeddings and neural network-based models in NLP.

Lack of Context and Word Order

- TF-IDF treats each word in a document independently and does not consider the context in which a word appears. This means it cannot capture the meaning of words based on their surrounding words or the overall semantic structure of the text. The word order is also ignored, which can be crucial in understanding the meaning of a sentence.

Does Not Account for Polysemy

- Words with multiple meanings (polysemy) are treated the same regardless of their context. For example, the word “bank” would have the same representation in “river bank” and “savings bank”, even though it has different meanings in these contexts.

Lack of Semantic Understanding

- TF-IDF relies purely on the statistical occurrence of words in documents, which means it lacks any understanding of the semantics of the words. It cannot capture synonyms or related terms unless they appear in similar documents within the corpus.

Bias Towards Rare Terms

- While the IDF component of TF-IDF aims to balance the frequency of terms, it can sometimes overly emphasize rare terms. This might lead to overvaluing words that appear infrequently but are not necessarily more relevant or important in the context of the document.

Vocabulary Limitation

- The TF-IDF model is limited to the vocabulary of the corpus it was trained on. It cannot handle new words that were not in the training corpus, making it less effective for dynamic content or languages that evolve rapidly.

Normalization Issues

- The normalization process in TF-IDF (e.g., dividing by the total number of words in a document) may not always be effective in balancing document lengths and word frequencies, potentially leading to skewed results.

Requires a Large and Representative Corpus

- For the IDF part of TF-IDF to be effective, it needs a large and representative corpus. If the corpus is not representative of the language or the domain of interest, the IDF scores may not accurately reflect the importance of the words.

No Distinction Between Different Types of Documents

- TF-IDF treats all documents in the corpus equally, without considering the type or quality of the documents. This means that all sources are considered equally authoritative, which may not be the case.

Poor Performance with Short Texts

- In very short documents, like tweets or SMS messages, the TF-IDF scores can be less meaningful because of the limited word occurrence and context.

Best Match 25 (BM25)

- BM25 is a ranking function used in information retrieval systems, particularly in search engines, to rank documents based on their relevance to a given search query. It’s a part of the family of probabilistic information retrieval models and is an extension of the TF-IDF (Term Frequency-Inverse Document Frequency) approach, though it introduces several improvements and modifications.

Key Components of BM25

-

Term Frequency (TF): BM25 modifies the term frequency component of TF-IDF to address the issue of term saturation. In TF-IDF, the more frequently a term appears in a document, the more it is considered relevant. However, this can lead to a problem where beyond a certain point, additional occurrences of a term don’t really indicate more relevance. BM25 addresses this by using a logarithmic scale for term frequency, which allows for a point of diminishing returns, preventing a term’s frequency from having an unbounded impact on the document’s relevance.

-

Inverse Document Frequency (IDF): Like TF-IDF, BM25 includes an IDF component, which helps to weight a term’s importance based on how rare or common it is across all documents. The idea is that terms that appear in many documents are less informative than those that appear in fewer documents.

-

Document Length Normalization: BM25 introduces a sophisticated way of handling document length. Unlike TF-IDF, which may unfairly penalize longer documents, BM25 normalizes for length in a more balanced manner, reducing the impact of document length on the calculation of relevance.

-

Tunable Parameters: BM25 includes parameters like \(k1\) and \(b\), which can be adjusted to optimize performance for specific datasets and needs. \(k1\) controls how quickly an increase in term frequency leads to term saturation, and \(b\) controls the degree of length normalization.

Example

-

Imagine you have a collection of documents and a user searches for “solar energy advantages”.

- Document A is 300 words long and mentions “solar energy” 4 times and “advantages” 3 times.

- Document B is 1000 words long and mentions “solar energy” 10 times and “advantages” 1 time.

- Using BM25:

- Term Frequency: The term “solar energy” appears more times in Document B, but due to term saturation, the additional occurrences don’t contribute as much to its relevance score as the first few mentions.

- Inverse Document Frequency: If “solar energy” and “advantages” are relatively rare in the overall document set, their appearances in these documents increase the relevance score more significantly.

- Document Length Normalization: Although Document B is longer, BM25’s length normalization ensures that it’s not unduly penalized simply for having more words. The relevance of the terms is balanced against the length of the document.

- So, despite Document B having more mentions of “solar energy”, BM25 will calculate the relevance of both documents in a way that balances term frequency, term rarity, and document length, potentially ranking them differently based on how these factors interplay. The final relevance scores would then determine their ranking in the search results for the query “solar energy advantages”.

BM25: Evolution of TF-IDF

- BM25 is a ranking function used by search engines to estimate the relevance of documents to a given search query. It’s part of the probabilistic information retrieval model and is considered an evolution of the TF-IDF (Term Frequency-Inverse Document Frequency) model. Both are used to rank documents based on their relevance to a query, but they differ in how they calculate this relevance.

BM25

- Term Frequency Component: Like TF-IDF, BM25 considers the frequency of the query term in a document. However, it adds a saturation point to prevent a term’s frequency from disproportionately influencing the document’s relevance.

- Length Normalization: BM25 adjusts for the length of the document, penalizing longer documents less harshly than TF-IDF.

- Tuning Parameters: It includes two parameters, \(k1\) and \(b\), which control term saturation and length normalization, respectively. These can be tuned to suit specific types of documents or queries.

TF-IDF

- Term Frequency: TF-IDF measures the frequency of a term in a document. The more times the term appears, the higher the score.

- Inverse Document Frequency: This component reduces the weight of terms that appear in many documents across the corpus, assuming they are less informative.

- Simpler Model: TF-IDF is generally simpler than BM25 and doesn’t involve parameters like \(k1\) or \(b\).

Example

-

Imagine a search query “chocolate cake recipe” and two documents:

- Document A: 100 words, “chocolate cake recipe” appears 10 times.

- Document B: 1000 words, “chocolate cake recipe” appears 15 times.

Using TF-IDF:

- The term frequency for “chocolate cake recipe” would be higher in Document A.

- Document B, being longer, might get a lower relevance score due to less frequency of the term.

Using BM25:

- The term frequency component would reach a saturation point, meaning after a certain frequency, additional occurrences of “chocolate cake recipe” contribute less to the score.

- Length normalization in BM25 would not penalize Document B as heavily as TF-IDF, considering its length.

- The tuning parameters \(k1\) and \(b\) could be adjusted to optimize the balance between term frequency and document length.

-

In essence, while both models aim to determine the relevance of documents to a query, BM25 offers a more nuanced and adjustable approach, especially beneficial in handling longer documents and ensuring that term frequency doesn’t disproportionately affect relevance.

Limitations of BM25

- Understanding the limitations below is crucial when implementing BM25 in a search engine or information retrieval system, as it helps in identifying cases where BM25 might need to be supplemented with other techniques or algorithms for better performance.

Parameter Sensitivity

- BM25 includes parameters like \(k1\) and \(b\), which need to be fine-tuned for optimal performance. This tuning process can be complex and is highly dependent on the specific nature of the document collection and queries. Inappropriate parameter settings can lead to suboptimal results.

Non-Handling of Semantic Similarities

- BM25 primarily relies on exact keyword matching. It does not account for the semantic relationships between words. For instance, it would not recognize “automobile” and “car” as related terms unless explicitly programmed to do so. This limitation makes BM25 less effective in understanding the context or capturing the nuances of language.

Ineffectiveness with Short Queries or Documents

- BM25’s effectiveness can decrease with very short queries or documents, as there are fewer words to analyze, making it harder to distinguish relevant documents from irrelevant ones.

Length Normalization Challenges

- While BM25’s length normalization aims to prevent longer documents from being unfairly penalized, it can sometimes lead to the opposite problem, where shorter documents are unduly favored. The balance is not always perfect, and the effectiveness of the normalization can vary based on the dataset.

Query Term Independence

- BM25 assumes independence between query terms. It doesn’t consider the possibility that the presence of certain terms together might change the relevance of a document compared to the presence of those terms individually.

Difficulty with Rare Terms

- Like TF-IDF, BM25 can struggle with very rare terms. If a term appears in very few documents, its IDF (Inverse Document Frequency) component can become disproportionately high, skewing results.

Performance in Specialized Domains

- In specialized domains with unique linguistic features (like legal, medical, or technical fields), BM25 might require significant customization to perform well. This is because standard parameter settings and term-weighting mechanisms may not align well with the unique characteristics of these specialized texts.

Ignoring Document Quality

- BM25 focuses on term frequency and document length but doesn’t consider other aspects that might indicate document quality, such as authoritativeness, readability, or the freshness of information.

Vulnerability to Keyword Stuffing

- Like many other keyword-based algorithms, BM25 can be susceptible to keyword stuffing, where documents are artificially loaded with keywords to boost relevance.

Incompatibility with Complex Queries

- BM25 is less effective for complex queries, such as those involving natural language questions or multi-faceted information needs. It is designed for keyword-based queries and may not perform well with queries that require understanding of context or intent.

Word2Vec

- Proposed in Efficient Estimation of Word Representations in Vector Space by Mikolov et al. (2013), the Word2Vec algorithm marked a significant advancement in the field of NLP as a notable example of a word embedding technique.

- Word2Vec is renowned for its effectiveness in learning word vectors, which are then used to decode the semantic relationships between words. It utilizes a vector space model to encapsulate words in a manner that captures both semantic and syntactic relationships. This method enables the algorithm to discern similarities and differences between words, as well as to identify analogous relationships, such as the parallel between “Stockholm” and “Sweden” and “Cairo” and “Egypt.”

- Word2Vec’s methodology of representing words as vectors in a semantic and syntactic space has profoundly impacted the field of NLP, offering a robust framework for capturing the intricacies of language and its usage.

Motivation

- Word2Vec introduced a fundamental shift in NLP by allowing efficient learning of distributed word representations that capture both semantic and syntactic relationships.

- These embeddings support a wide range of downstream tasks, such as text classification, translation, and recommendation systems, due to their ability to encode meaning in vector space.

- Key advantages include:

- The ability to capture semantic similarity — words appearing in similar contexts have similar vector representations.

- Support for vector arithmetic to reveal analogical relationships (for example, “king - man + woman ≈ queen”).

- Computational efficiency due to simplified training strategies such as negative sampling and hierarchical softmax (covered in detail later).

- A shallow network design, allowing rapid training even on large corpora.

- Generalization across linguistic tasks by representing words in a continuous vector space rather than as discrete symbols.

Theoretical Foundation: Distributional Hypothesis

-

At the heart of Word2Vec lies the distributional hypothesis in linguistics, which states that “words that occur in similar contexts tend to have similar meanings.” Formally, this implies that the meaning of a word \(w\) can be inferred from the statistical distribution of other words that co-occur with it in text.

-

If \(C(w)\) denotes the set of context words appearing around \(w\) within a fixed window, Word2Vec seeks to learn an embedding function \(f: w \mapsto v_w \in \mathbb{R}^N\) that maximizes the likelihood of observing those context words.

-

Thus, for every word \(w_t\) in the corpus, the training objective is to maximize:

\[\frac{1}{T} \sum_{t=1}^{T} \sum_{-c \le j \le c, j \ne 0} \log p(w_{t+j} | w_t)\]- where \(c\) is the context window size and \(T\) is the corpus length.

Representational Power and Semantic Arithmetic

- One of the key insights from Word2Vec is that semantic relationships between words can be captured through linear relationships in vector space. This means that algebraic operations on word vectors can reveal linguistic regularities, such as:

- These relationships emerge naturally because Word2Vec embeds words in such a way that cosine similarity corresponds to semantic relatedness:

- This property allows for analogical reasoning, clustering, and downstream use in a wide range of NLP tasks.

Probabilistic Interpretation

-

From a probabilistic standpoint, Word2Vec models the conditional distribution of context words given a target word (Skip-gram) or a target word given its context (CBOW). The softmax function formalizes this as:

\[p(w_o | w_i) = \frac{\exp(u_{w_o}^T v_{w_i})}{\sum_{w' \in V} \exp(u_{w'}^T v_{w_i})}\]-

where

- \(v_{w_i}\) is the input vector (representing the center or target word),

- \(u_{w_o}\) is the output vector (representing the context word), and

- \(V\) is the vocabulary.

-

-

This formulation defines a differentiable objective that allows embeddings to be learned through backpropagation and stochastic gradient descent.

-

The following figure shows a simplified visualization of the training process using context prediction.

Motivation behind Word2Vec: The Need for Context-based Semantic Understanding

- Traditional approaches to textual representation—such as TF-IDF and BM25—treat words as independent entities and rely on counting-based statistics rather than semantic relationships. While these methods are effective for ranking documents or identifying keyword importance, they fail to represent the contextual and relational meaning that underpins natural language.

- The motivation for Word2Vec arises from the limitations of count-based models that fail to capture semantics. By introducing a predictive, context-driven learning mechanism, Word2Vec constructs a semantic embedding space where contextual relationships between words are preserved. This makes it a foundational technique for subsequent deep learning models such as GloVe by Pennington et al. (2014), fastText by Bojanowski et al. (2017), ELMo by Peters et al. (2018), and BERT by Devlin et al. (2018), which further refine contextual understanding at the sentence and discourse level.

Background: Limitations of Frequency-based Representations

-

TF-IDF (Term Frequency–Inverse Document Frequency):

-

This method assigns weights to terms based on how frequently they appear in a document and how rare they are across a corpus.

-

Mathematically, the weight for a term \(t\) in a document \(d\) is given by:

\[\text{TF-IDF}(t, d) = \text{TF}(t, d) \times \log\frac{N}{\text{DF}(t)}\]- where \(\text{TF}(t, d)\) is the frequency of term \(t\) in document \(d\), \(\text{DF}(t)\) is the number of documents containing \(t\), and \(N\) is the total number of documents.

-

While TF-IDF captures word importance, it ignores semantic similarity—two words like “doctor” and “physician” are treated as entirely distinct, even though they share similar meanings.

-

-

BM25 (Best Matching 25):

-

BM25 is a probabilistic ranking function often used in information retrieval, first described by Robertson & Walker (1994). It improves upon TF-IDF by introducing parameters to handle term saturation and document length normalization:

\[\text{BM25}(t, d) = \log\left(\frac{N - \text{DF}(t) + 0.5}{\text{DF}(t) + 0.5}\right) \cdot \frac{(k_1 + 1) \text{TF}(t, d)}{k_1 \left[(1 - b) + b\frac{|d|}{\text{avgdl}}\right] + \text{TF}(t, d)}\]- where \(k_1\) and \(b\) are tunable parameters, \(\mid d \mid\) is the document length, and \(\text{avgdl}\) is the average document length across the corpus.

-

BM25 effectively balances term relevance and document length normalization, but it remains a lexical rather than semantic measure. It doesn’t model relationships such as synonymy, antonymy, or analogy.

-

The image below provides an intuitive visualization of the BM25 mechanism. It shows how the formula rewards documents containing more query word repetitions (up to a saturation point) while discounting overly common terms. The right-hand logarithmic component reduces the weight of frequent words, while the normalization term adjusts for document length. The graph below the equation illustrates how term frequency contributions flatten as occurrences increase, reflecting diminishing returns for repeated terms.

-

Motivation for Contextual Representations

-

Human language is inherently contextual: the meaning of a word depends on the words surrounding it. For example, the word bank in “river bank” differs from bank in “bank loan.”

- Frequency-based methods cannot distinguish these meanings because they represent bank as a single static token.

- What is required is a context-aware model that learns word meaning from its usage patterns in sentences—capturing semantics not just by frequency, but by co-occurrence structure and distributional behavior.

Word2Vec as a Contextual Solution

- Word2Vec resolves these shortcomings by learning dense, low-dimensional embeddings that encode semantic similarity through co-occurrence patterns.

- Instead of treating each word as an independent unit, Word2Vec models conditional probabilities such as:

- These probabilities are parameterized by neural network weights that correspond to word embeddings.

- Through training, the model positions semantically similar words near each other in the embedding space.

Semantic Vector Space: A Conceptual Leap

- In Word2Vec, each word is represented as a continuous vector \(v_w \in \mathbb{R}^N\), where semantic similarity corresponds to geometric proximity.

-

This vector representation allows the model to capture linguistic phenomena that statistical models cannot:

- Synonymy: Words like “car” and “automobile” appear near each other.

- Antonymy: Words like “hot” and “cold” occupy positions with structured contrastive relations.

- Analogies: Relationships such as \(v_{\text{Paris}} - v_{\text{France}} + v_{\text{Italy}} \approx v_{\text{Rome}}\) demonstrate how linear vector operations encode relational meaning.

Comparison with Traditional Models

| Aspect | TF-IDF | BM25 | Word2Vec |

|---|---|---|---|

| Representation | Sparse, count-based | Sparse, probabilistic | Dense, continuous |

| Captures context | No | No | Yes |

| Semantic similarity | Not modeled | Not modeled | Explicitly modeled |

| Handles polysemy | No | No | Partially (through contextual learning but not fully since it assigns a single vector per word) |

| Learning mechanism | Frequency-based | Probabilistic ranking | Neural prediction |

Why Context Matters: Intuitive Illustration

- Imagine reading the sentence: “The bat flew across the cave.”, and then another: “He swung the bat at the ball.”

- In traditional models, the token “bat” is identical in both contexts.

- However, Word2Vec distinguishes them by how “bat” co-occurs with words like flew, cave, swung, and ball. The embeddings for these contexts push the representation of bat toward two distinct regions of the semantic space—one near animals, the other near sports equipment.

Core Idea

- Word2Vec represents a transformative shift in natural language understanding by learning word meanings through prediction tasks rather than through counting word co-occurrences.

- At its core, the algorithm employs a shallow neural network trained on a large corpus to predict contextual relationships between words, producing dense, meaningful vector representations that encode both syntactic and semantic regularities.

- The core idea behind Word2Vec is to transform linguistic co-occurrence information into a geometric form that captures word meaning through spatial relationships. It does this not by memorizing frequencies, but by predicting contexts, allowing the embedding space to inherently encode semantic similarity, analogy, and syntactic relationships in a mathematically continuous manner.

Predictive Nature of Word2Vec

-

Unlike earlier statistical methods that rely on co-occurrence counts (e.g., Latent Semantic Analysis by Deerwester et al. (1990)), Word2Vec learns embeddings by solving a prediction problem:

- Given a target word, predict its context words (Skip-gram).

- Given a set of context words, predict the target word (CBOW).

-

This approach stems from the distributional hypothesis, operationalized via probabilistic modeling.

-

Formally, for a corpus with words \(w_1, w_2, \dots, w_T\), and context window size \(c\), the model maximizes the following average log probability:

- This objective encourages the model to learn embeddings \(v_{w_t}\) and \(u_{w_{t+j}}\) such that similar words (those that appear in similar contexts) have similar vector representations.

Word Vectors and Semantic Encoding

-

Each word \(w\) in the vocabulary is associated with two vectors:

- Input vector \(v_w\): representing the word when it is the center (target) word.

- Output vector \(u_w\): representing the word when it appears in the context.

-

These vectors are columns in the weight matrices:

- \(W \in \mathbb{R}^{V \times N}\) (input-to-hidden layer)

- \(W' \in \mathbb{R}^{N \times V}\) (hidden-to-output layer)

-

Thus, the total parameters of the model are \(\theta = {W, W'}\), and for any word \(w_i\) and context word \(c\):

\[p(w_i | c) = \frac{\exp(u_{w_i}^T v_c)}{\sum_{w'=1}^{V} \exp(u_{w'}^T v_c)}\] -

This softmax-based conditional probability is the foundation for learning embeddings that maximize the likelihood of true word–context pairs.

Vector Arithmetic and Semantic Regularities

- One of Word2Vec’s most striking properties is its ability to encode linguistic regularities as linear relationships in vector space.

- For example:

- Such arithmetic operations are possible because the training objective aligns words based on shared contextual usage, as demonstrated in Mikolov et al. (2013).

- Consequently, the cosine similarity between two word vectors reflects their semantic closeness:

Network Architecture and Operation

-

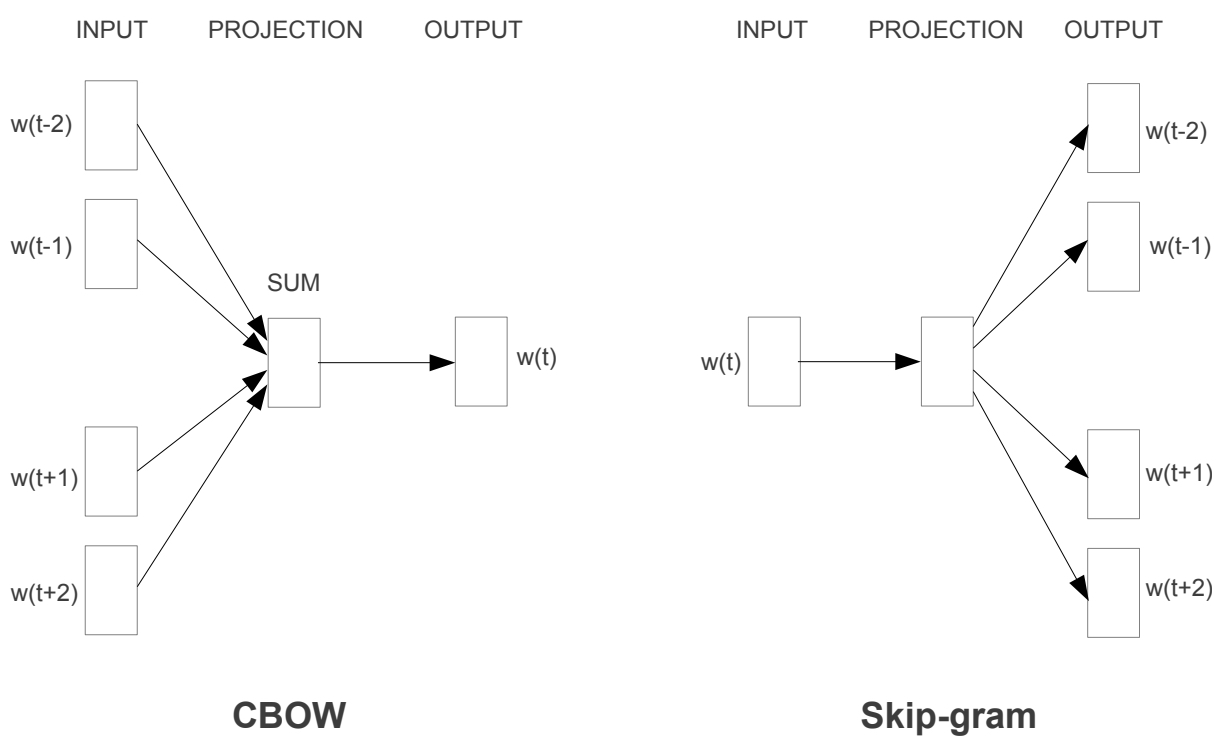

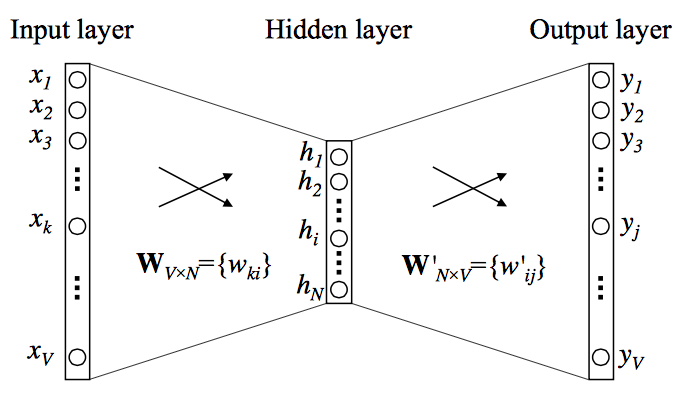

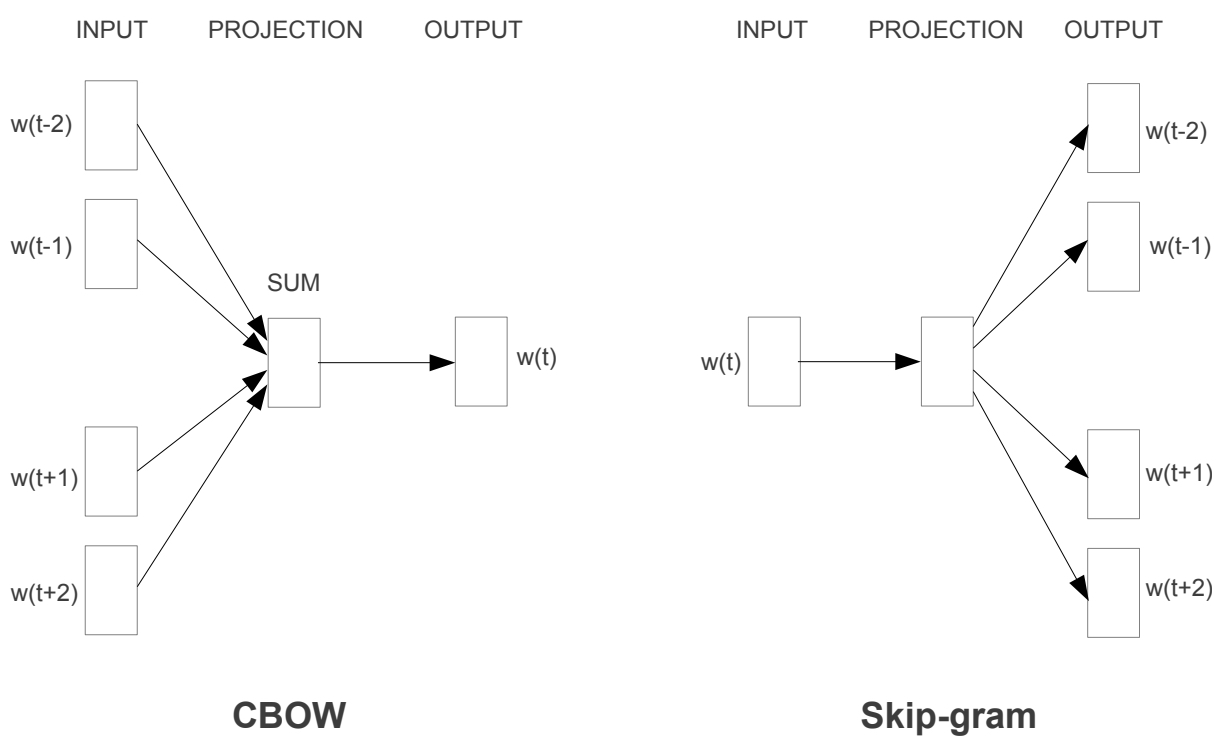

The figure below illustrates the internal neural network structure of Word2Vec, showing both Continuous Bag of Words (CBOW) and Skip-gram architectures.

- Input layer: each word in the context window (for CBOW) or the target word (for Skip-gram) is represented as a one-hot encoded vector, where only one element corresponding to the word’s index in the vocabulary is 1 and the rest are 0.

- Projection (hidden) layer: a shared weight matrix transforms these sparse one-hot inputs into dense N-dimensional embeddings. In CBOW, the embeddings of context words are averaged (or summed); in Skip-gram, the target word’s embedding is used directly.

- Output layer: applies a softmax to predict either the target word (CBOW) or the surrounding context words (Skip-gram) across the vocabulary.

-

After training, the weight matrix of the projection layer contains the learned word embeddings, capturing semantic relationships through co-occurrence patterns.

-

The following figure shows shows a visualization of this architecture and the two modeling directions: CBOW and Skip-gram. In CBOW (left side of the figure), multiple context words such as \(w(t-2), w(t-1), w(t+1),\) and \(w(t+2)\) are aggregated to predict the central target word \(w(t)\). In Skip-gram (right side of the figure), the target/central word \(w(t)\) is fed in as input and the surrounding context words within a given window are predicted as output — both using the same embedding space to learn distributed representations of words.

Interpretability of the Embedding Space

-

Through iterative training across billions of word pairs, the model learns embeddings such that:

- Words that appear in similar contexts have similar directions in the vector space.

- Analogous relationships are captured through vector offsets.

- Syntactic categories (e.g., plurals, verb tenses) and semantic groupings (e.g., cities, countries) naturally emerge as clusters.

-

For instance, after training, the vectors for [“Paris”, “London”, “Berlin”] form a subspace distinct from [“France”, “UK”, “Germany”], yet maintain parallel structure, enabling analogical reasoning such as:

Word2Vec Architectures

-

Word2Vec offers two distinct neural architectures for learning word embeddings, as introduced by Mikolov et al. (2013) and further detailed in their follow-up paper, Distributed Representations of Words and Phrases and their Compositionality:

- Continuous Bag-of-Words (CBOW)

- Continuous Skip-gram (Skip-gram)

- Both are trained on the same corpus using similar mechanisms but differ in the direction of prediction — that is, whether the model predicts the center word from its context words or the context words from the center word. Both CBOW and Skip-gram learn embeddings that reflect word meaning through context prediction.

- CBOW excels in efficiency and stability for frequent words, while Skip-gram provides richer embeddings for rare words. Together, they form the foundation of Word2Vec’s success — enabling scalable and semantically powerful word representations.

Continuous Bag-of-Words (CBOW)

-

Concept:

- The CBOW model predicts the target (center) word based on the words surrounding it.

- Given a window of context words \(C_t = {w_{t-c}, \ldots, w_{t-1}, w_{t+1}, \ldots, w_{t+c}}\), the goal is to maximize:

- This makes CBOW a context-to-word model — the inverse of Skip-gram.

-

Architecture:

- The following figure shows the CBOW model, where multiple context word one-hot vectors are fed into a shared embedding matrix, averaged, and used to predict the central target word.

- Mathematically, the average of the context word vectors is computed as:

- The probability of predicting the target word \(w_t\) given this averaged context is then defined using the softmax function:

-

Learning Objective:

- The model’s training objective is to maximize the log-likelihood of all observed target words given their contexts over the entire corpus:

-

where:

- \(T\): total number of words (training instances) in the corpus.

- \(t\): index of the current target word position in the sequence.

- \(w_t\): the target (center) word being predicted.

- \(C_t\): the set of surrounding context words within the window size \(c\), i.e., \({w_{t-c}, \ldots, w_{t-1}, w_{t+1}, \ldots, w_{t+c}}\).

- \(p(w_t \mid C_t)\): the conditional probability of predicting the target word \(w_t\) given its context, computed via the softmax function.

- \(\log p(w_t \mid C_t)\): the log-likelihood term, used to penalize incorrect predictions smoothly.

- \(\mathcal{L}_{CBOW}\): the overall average log-likelihood objective that the model seeks to maximize during training.

-

Intuitively, this means CBOW learns embeddings that make the correct target word highly probable given its neighboring context — effectively maximizing the model’s ability to predict real word–context co-occurrences.

-

Gradients are propagated to update both input (\(v_w\)) and output (\(u_w\)) embeddings via stochastic gradient descent.

-

Parameterization:

-

For a given word index \(k\) in vocabulary \(V\), the referenced word is represented as:

- Input vector \(v_w = W_{(k, .)}\)

- Output vector \(u_w = W'_{(., k)}\)

-

The hidden layer has \(N\) neurons, and the model learns weight matrices \(W \in \mathbb{R}^{V \times N}\) and \(W' \in \mathbb{R}^{N \times V}\).

-

-

Overall Formula:

\[p(w_i | c) = y_i = \frac{e^{u_i}}{\sum_{i=1}^V e^{u_i}}, \quad \text{where } u_i = u_{w_i}^T v_c\]

Continuous Skip-gram (SG)

-

Concept:

- The Skip-gram model reverses CBOW’s direction: instead of predicting the target from the context, it predicts context words from the center word.

-

Architecture:

- The following figure shows the structure of both Word2Vec architectures side-by-side: CBOW, which predicts the current word based on its context, and skip-gram, which predicts the surrounding words given the current word.

-

Softmax Prediction:

-

Recall that each word in Word2Vec has two representations: an input vector \(v_w\) for when it serves as the target (center) word, and an output vector \(u_w\) for when it appears as a context word.

-

Each target–context pair \((w_t, w_{t+j})\) is modeled as:

\[p(w_{t+j} | w_t) = \frac{\exp(u_{w_{t+j}}^T v_{w_t})} {\sum_{w' \in V} \exp(u_{w'}^T v_{w_t})}\] -

where:

- \(w_t\): the target (center) word.

- \(w_{t+j}\): a context word within a window of size \(c\) around \(w_t\).

- \(v_{w_t}\): the input embedding of the target word \(w_t\).

- \(u_{w_{t+j}}\): the output embedding of the context word \(w_{t+j}\).

- \(V\): the entire vocabulary.

- \(\exp(u_{w_{t+j}}^T v_{w_t})\): measures the similarity (via dot product) between the target and context embeddings in the exponentiated space.

- \(\sum_{w' \in V} \exp(u_{w'}^T v_{w_t})\): the normalization term ensuring the probabilities sum to 1 over all possible context words.

-

-

Learning Objective:

-

The Skip-gram objective is to maximize the log-likelihood of context words given the center word \(w_t\) over the entire corpus:

\[\mathcal{L}_{SG} = \frac{1}{T} \sum_{t=1}^{T} \sum_{-c \le j \le c, j \ne 0} \log p(w_{t+j} | w_t)\]- where:

- \(T\): total number of words (training samples) in the corpus.

- \(c\): context window size, determining how many words on each side of \(w_t\) are considered as context.

- \(w_t\): the center (target) word at position \(t\).

- \(w_{t+j}\): a context word at an offset \(j\) from the center word.

- \(p(w_{t+j} \mid w_t)\): the probability of observing the context word \(w_{t+j}\) given the center word \(w_t\), modeled using a softmax over the vocabulary.

- \(\log\): the natural logarithm, used to convert the product of probabilities into a sum for numerical stability and optimization.

- where:

-

Here, every occurrence of a word generates multiple (target \(\rightarrow\) context) prediction pairs, which makes training computationally heavier but more expressive — particularly for rare words.

-

Comparison: CBOW vs. Skip-gram

| Aspect | CBOW | Skip-gram |

|---|---|---|

| Prediction Direction | Context \(\rightarrow\) Target | Target \(\rightarrow\) Context |

| Input | Multiple context words | Single target word |

| Output | One target word | Multiple context words |

| Training Speed | Faster | Slower |

| Works Best For | Frequent words; large datasets/corpora | Rare words; small datasets/corpora |

| Robustness | Smoother embeddings | More detailed embeddings |

| Objective Function | \(\log p(w_t \mid C_t)\) | \(\sum_{-c \le j \le c, j \ne 0} \log p(w_{t+j} \mid w_t)\) |

Why Skip-gram Handles Rare Words and Small Datasets Better

-

Skip-gram updates the embeddings of the center (target) word for each of its context words, meaning that every occurrence of a word — even a rare one — produces multiple training examples. Each (target, context) pair contributes a separate gradient update, allowing the model to refine the embedding of infrequent words through repeated exposure to their surrounding context.

-

By contrast, CBOW treats rare words as targets to be predicted from their neighboring words. Because uncommon words appear less frequently as targets, they are updated fewer times and often with noisy or insufficient context, leading to less accurate representations.

-

In small datasets or corpora, this distinction becomes even more critical. With limited training data, rare words might occur only a few times. Skip-gram’s multiple updates per occurrence help compensate for data scarcity by amplifying each word’s learning signal. CBOW, however, struggles in such settings since it relies on aggregating context signals to predict sparse targets — an approach that benefits more from large, diverse corpora.

-

Example:

- In the sentence “The iguana basked on the rock,” the rare word iguana generates multiple Skip-gram training pairs — (iguana \(\rightarrow\) the), (iguana \(\rightarrow\) basked), (iguana \(\rightarrow\) on), (iguana \(\rightarrow\) rock) — each producing an update to its embedding.

- Under CBOW, iguana would be predicted only once from the context {the, basked, on, rock}, resulting in fewer gradient updates and weaker representation learning, especially in a small corpus.

Which Model to Use When

-

Use CBOW when:

- The dataset is large and contains many frequent words.

- You require fast training and smoother embeddings.

- The task emphasizes semantic similarity among common words (e.g., topic clustering, document similarity).

- Example: Training on Wikipedia or Common Crawl for general-purpose embeddings.

-

Use Skip-gram when:

- The dataset is smaller or contains many rare and domain-specific words.

- Skip-gram performs better in such cases because it creates multiple training pairs for each occurrence of a rare word, giving it more opportunities to learn meaningful relationships from limited data.

- You want to capture fine-grained syntactic or semantic nuances.

- The focus is on representation quality rather than speed.

- Example: Training embeddings for biomedical text, legal documents, or historical corpora.

-

Hybrid Strategy:

- Some implementations begin with CBOW pretraining and fine-tune with Skip-gram for precision.

- For multilingual or low-resource settings, Skip-gram tends to outperform due to its capacity to learn richer embeddings from detailed contextual cues using fewer examples.

Training and Optimization

-

The training of Word2Vec centers on optimizing word embeddings so that they accurately predict contextual relationships between words. Each word in the vocabulary is assigned two learnable vectors: one for when it acts as a target (input) word and another for when it acts as a context (output) word. These vectors are iteratively updated during training to maximize the probability of observed word–context pairs (equivalently, to maximize the log-likelihood of correct predictions). In implementation, this is often expressed as minimizing the negative log-likelihood loss, which is the inverse of the same objective.

-

However, training Word2Vec models efficiently is challenging, especially with large vocabulary sizes. Mikolov et al. (2013) introduced key approximation strategies — most notably negative sampling and hierarchical softmax — that make large-scale training computationally feasible while maintaining embedding quality. These techniques drastically reduce the cost of computing softmax over all vocabulary words by focusing updates on a small subset of informative examples.

Objective Function

-

The central goal of Word2Vec is to maximize the likelihood of predicting correct word–context pairs.

- In the Skip-gram model, the network predicts surrounding context words given a target word.

- In the CBOW model, it predicts the target word given its surrounding context.

-

Each word \(w\) in the vocabulary is represented by two embeddings:

- \(v_w\): the input vector, used when the word serves as the target (center) word.

- \(u_w\): the output vector, used when the word serves as a context word to be predicted.

-

The Skip-gram objective maximizes the average log-probability of observing context words around each target word:

\[\mathcal{L}_{SG} = \frac{1}{T} \sum_{t=1}^{T} \sum_{-c \le j \le c, j \ne 0} \log p(w_{t+j} | w_t)\] -

The CBOW objective instead maximizes the log-probability of the target word given its surrounding context:

\[\mathcal{L}_{CBOW} = \frac{1}{T} \sum_{t=1}^{T} \log p(w_t | C_t)\] -

In both models, the conditional probability is computed using the softmax function:

\[p(w_o | w_i) = \frac{\exp(u_{w_o}^T v_{w_i})} {\sum_{w' \in V} \exp(u_{w'}^T v_{w_i})}\]-

where:

- \(v_{w_i}\): input (target) word vector.

- \(u_{w_o}\): output (context) word vector.

- \(T\): total number of words in the training corpus.

- \(c\): context window size, defining how many words to the left and right are considered.

- \(w_t\): the target (center) word at position \(t\).

- \(w_{t+j}\): a context word located \(j\) positions away from the target.

- \(C_t\): the set of context words surrounding \(w_t\).

- \(V\): the vocabulary containing all unique words.

- \(p(w_o \mid w_i)\): the model’s predicted probability of observing context word \(w_o\) given target word \(w_i\).

-

-

The model thus maximizes the log-likelihood that true word–context pairs occur more frequently than random combinations, effectively shaping the embedding space to reflect semantic relationships.

Why the Full Softmax Is Computationally Expensive

- The denominator of the naive softmax function used in CBOW and Skip-gram requires computing the normalization term over all words in the vocabulary (\(V\)): \(\sum_{w' \in V} \exp(u_{w'}^T v_{w_I})\).

- For a large vocabulary (where \(\mid V \mid\) can exceed millions, say \(10^5\) to \(10^7\)), this becomes computationally intractable because the denominator must be recalculated for every training pair.

- To address this, Mikolov et al. (2013) introduced two key approximation methods: Hierarchical Softmax and Negative Sampling.

Hierarchical Softmax

- Hierarchical Softmax, introduced by Morin and Bengio (2005) and later applied by Mikolov et al. (2013), is an efficient alternative to the standard softmax layer used in language models.

Concept

-