Primers • Overview of Large Language Models

- Overview

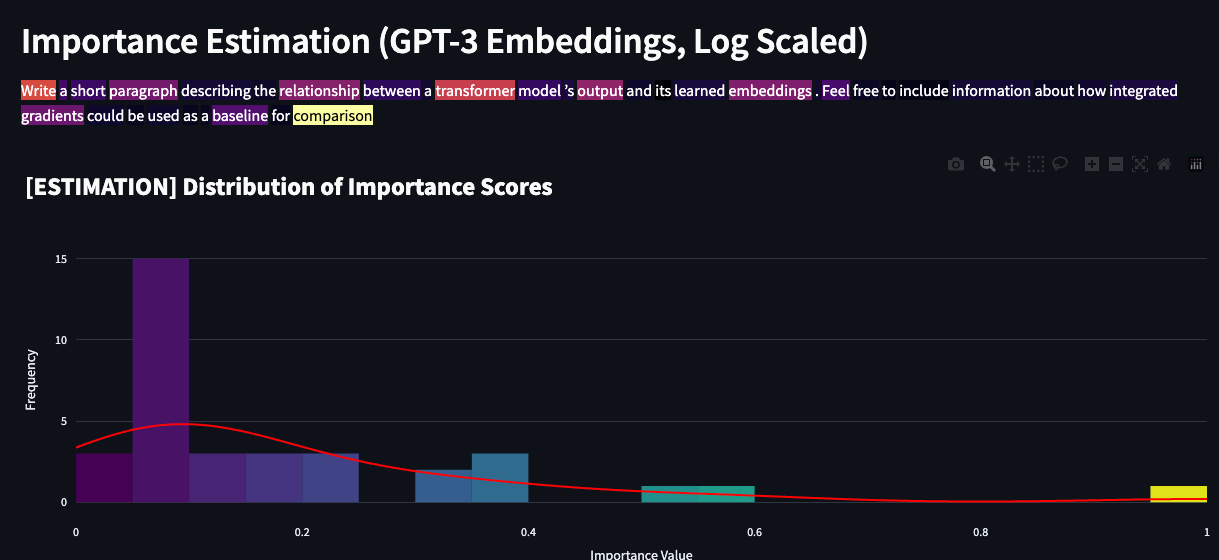

- Embeddings

- How do LLMs work?

- Retrieval/Knowledge-Augmented Generation or RAG (i.e., Providing LLMs External Knowledge)

- Vector Database Feature Matrix

- Context Length Extension

- The “Context Stuffing” Problem

- LLM Knobs

- Token Sampling

- Prompt Engineering

- Token Healing

- Evaluation Metrics

- Methods to Knowledge-Augment LLMs

- Summary of LLMs

- Leaderboards

- Open LLM Leaderboard

- LMSYS Chatbot Arena Leaderboard

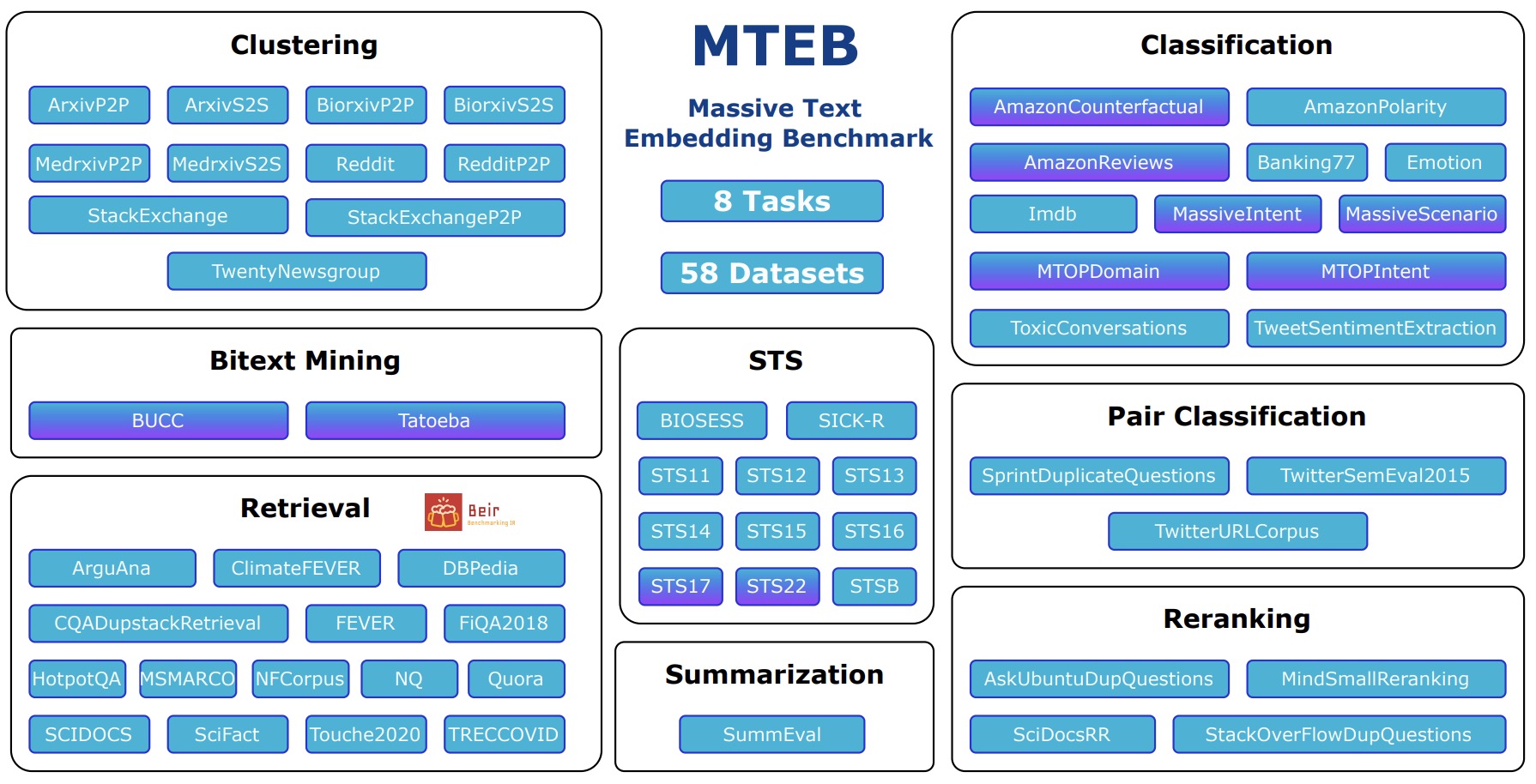

- Massive Text Embedding Benchmark (MTEB) Leaderboard

- Big Code Models Leaderboard

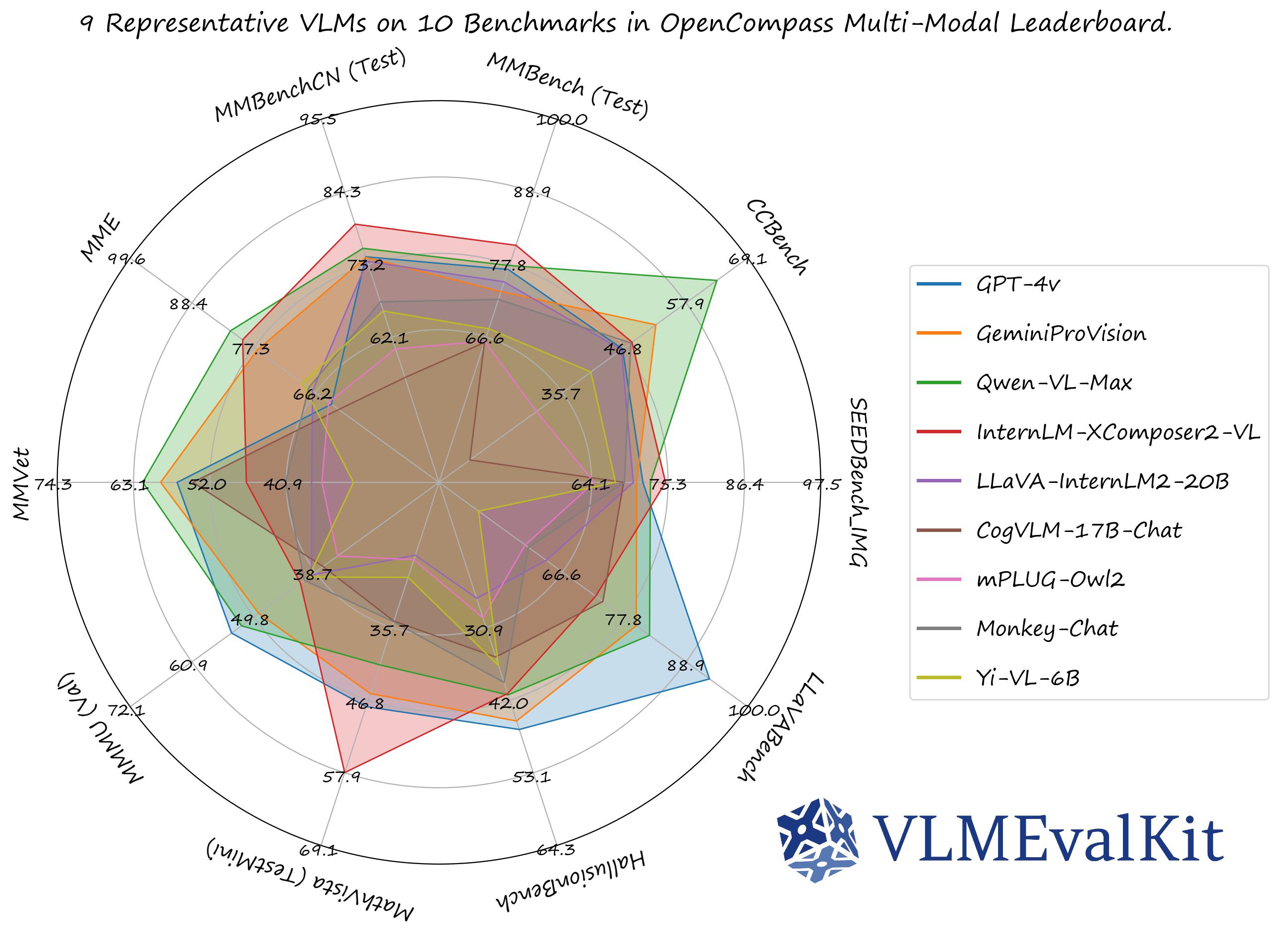

- Open VLM Leaderboard

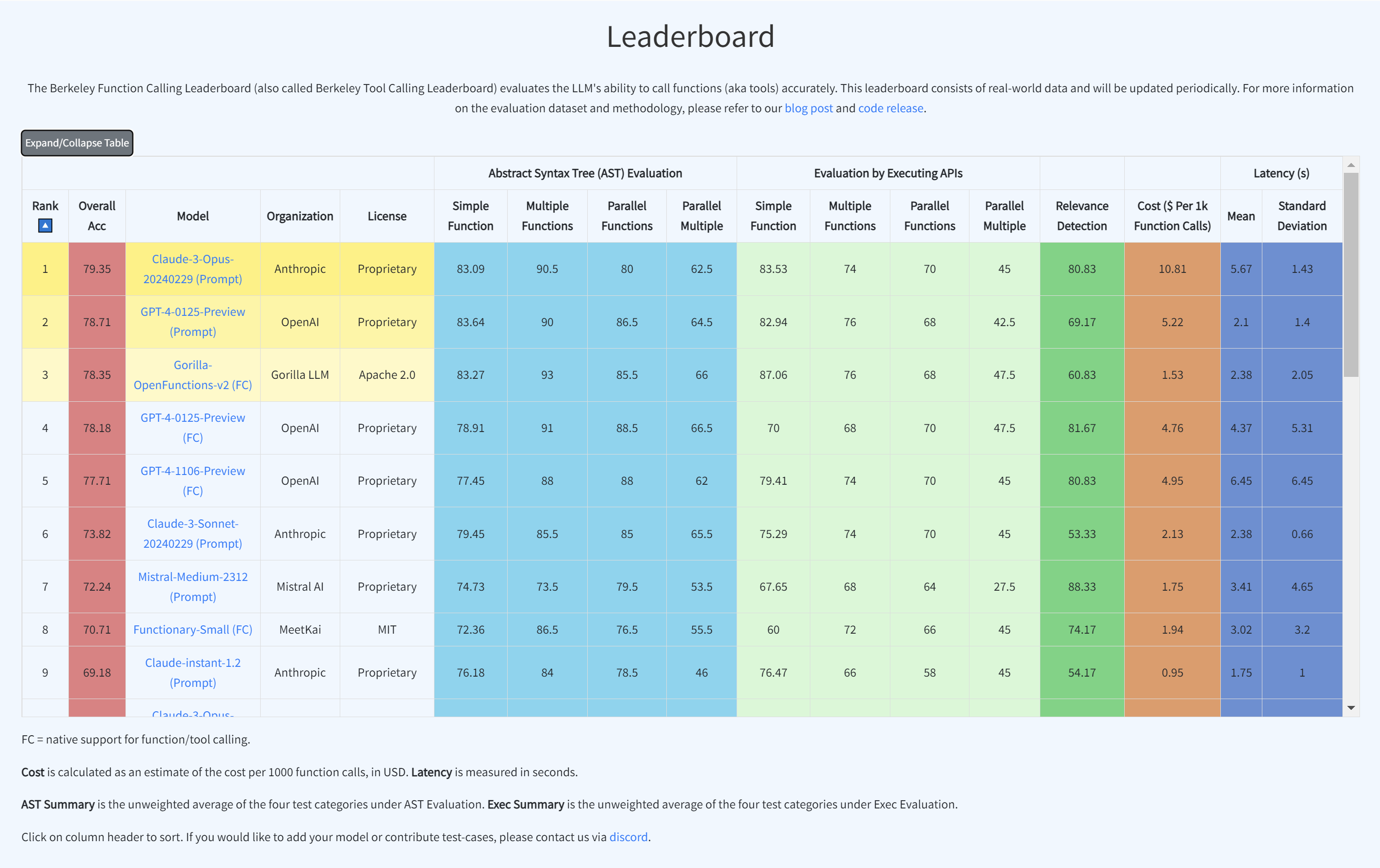

- Berkeley Function-Calling Leaderboard

- LLM Safety Leaderboard

- AlpacaEval Leaderboard

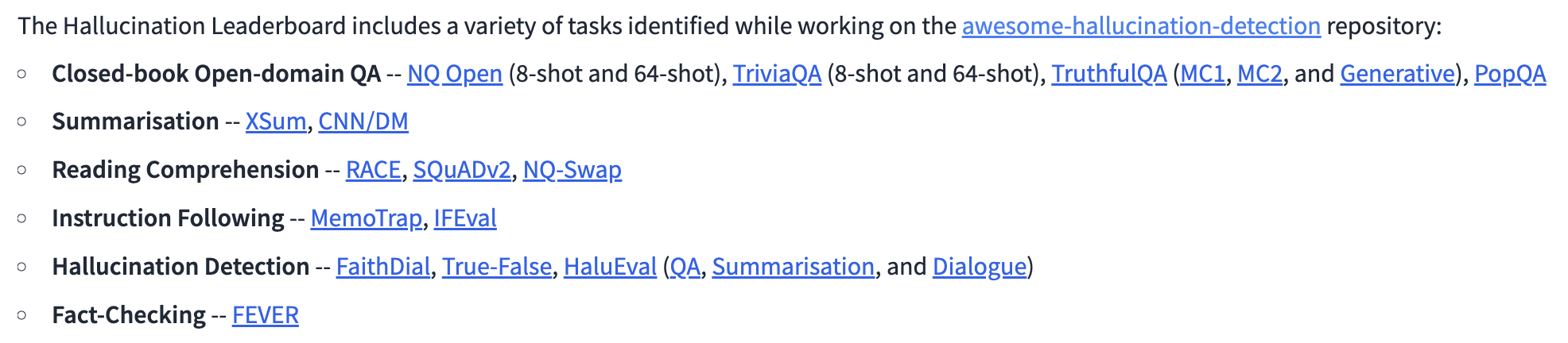

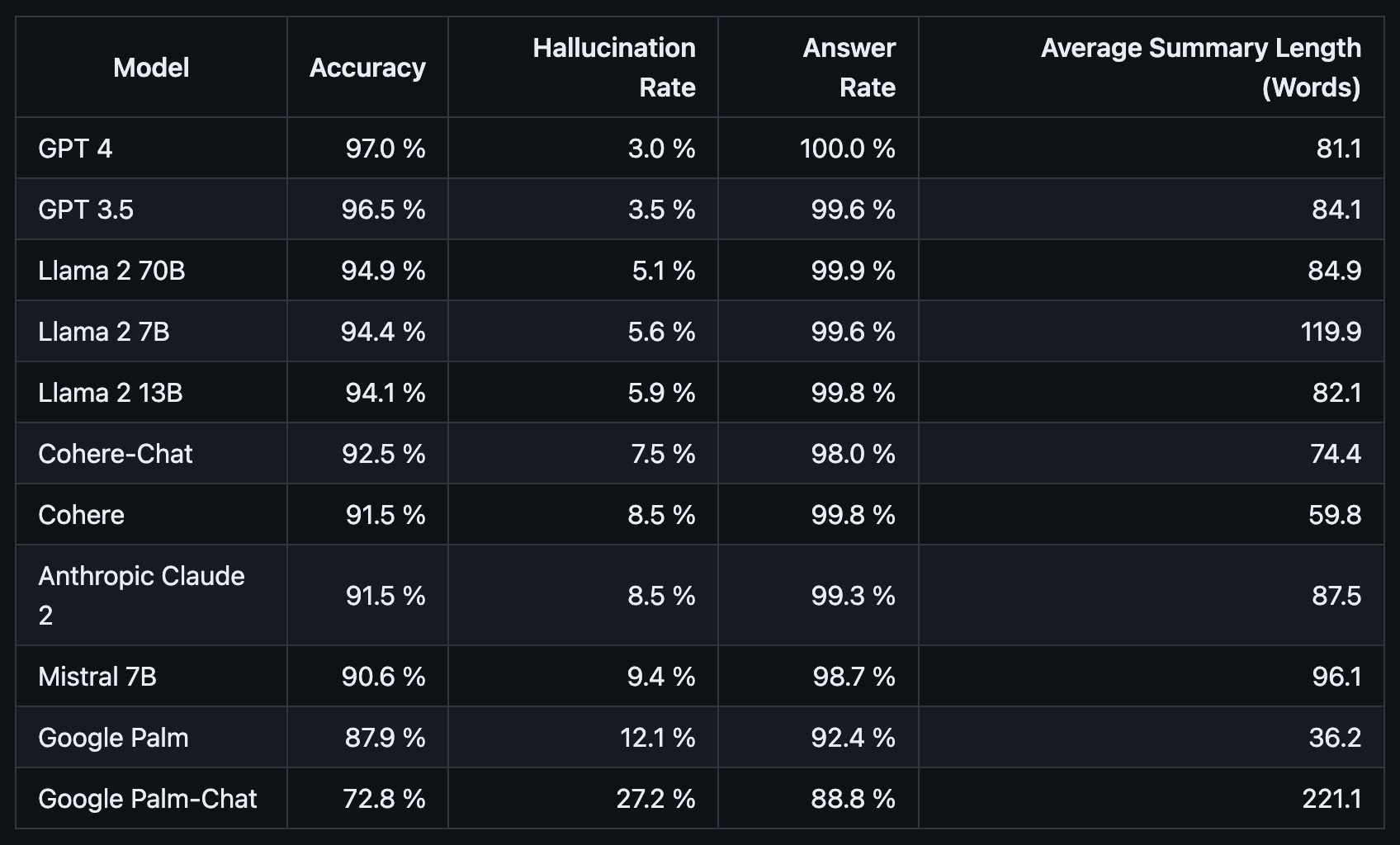

- Hallucination Leaderboard

- LLM-Perf Leaderboard

- Vectara’s Hallucination Leaderboard

- YALL - Yet Another LLM Leaderboard

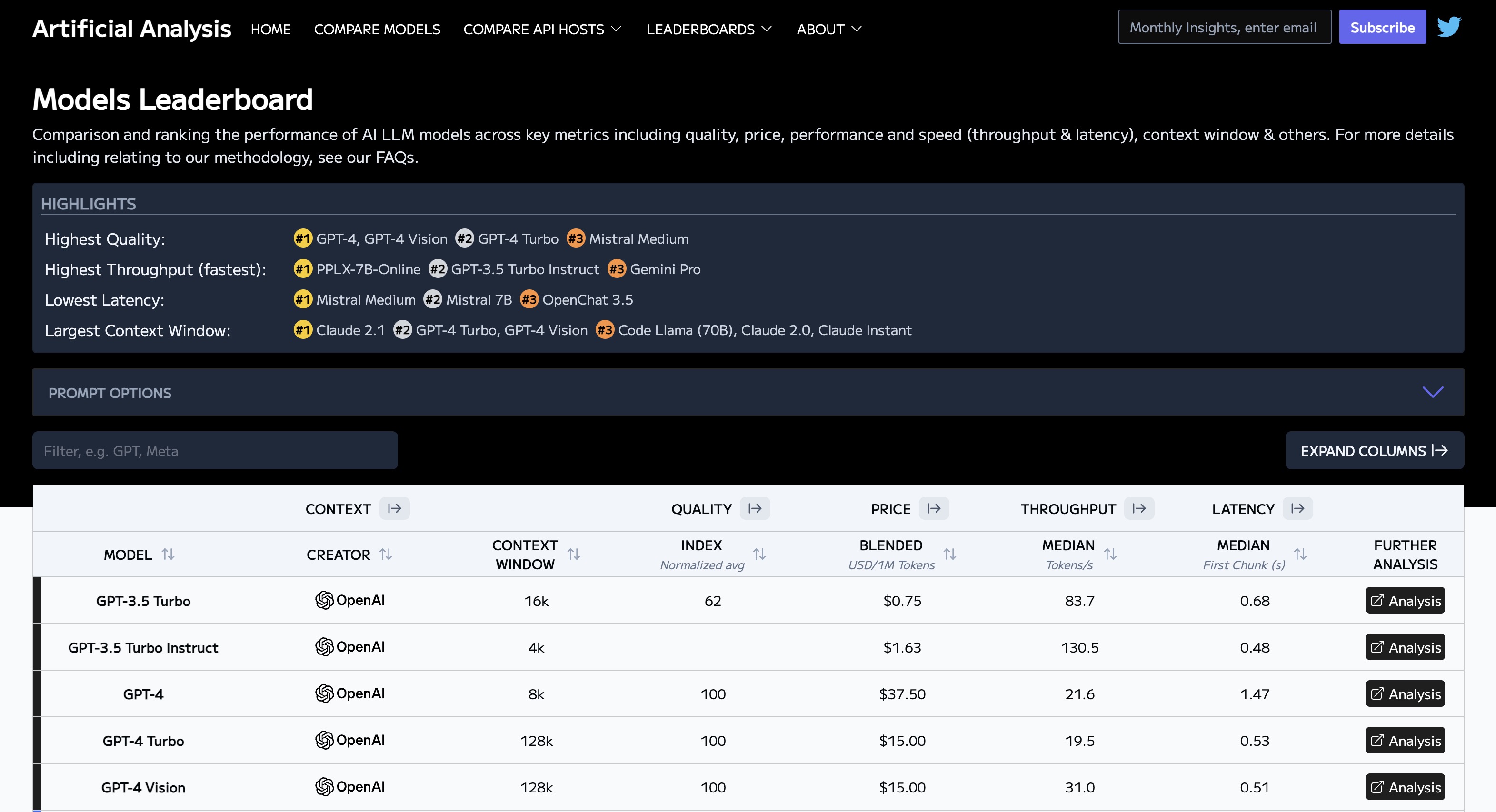

- Artificial Analysis Leaderboard

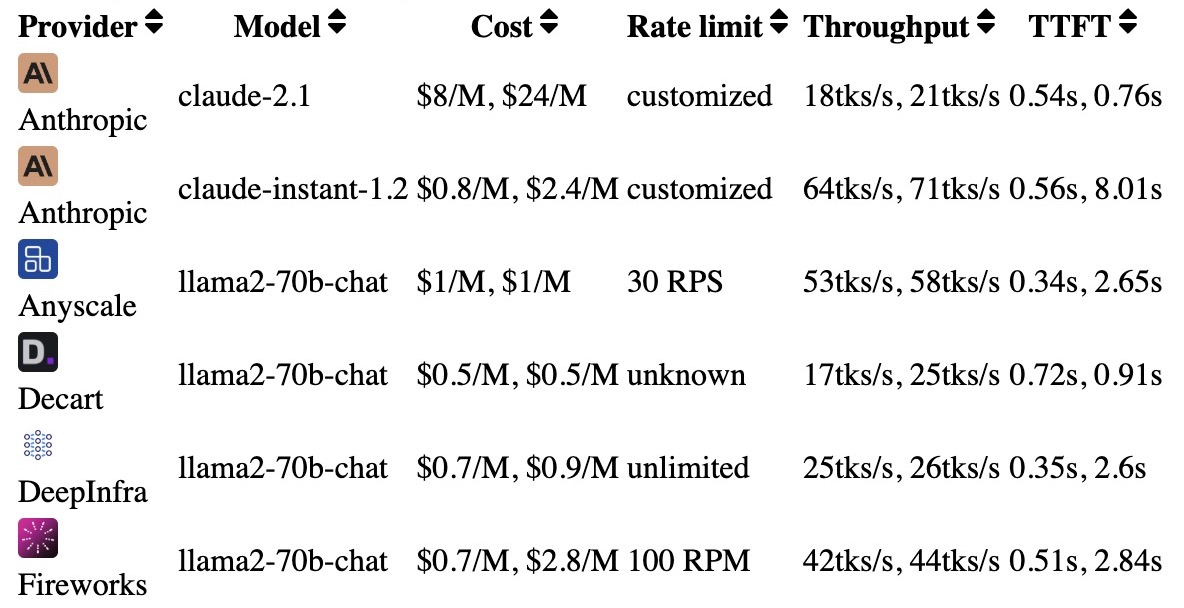

- Martian’s Provider Leaderboard

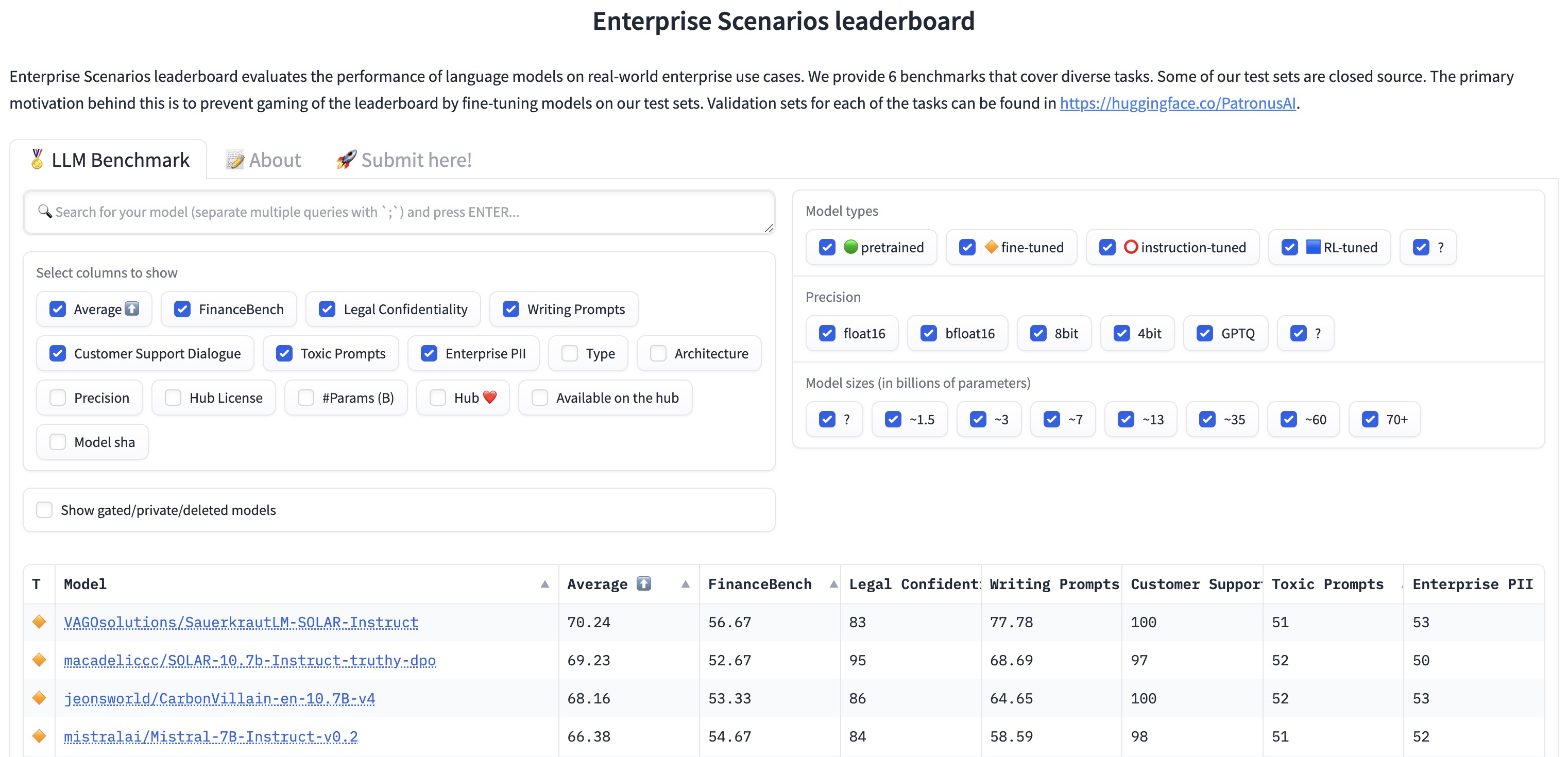

- Enterprise Scenarios Leaderboard

- TheFastest.AI

- AI Model Review

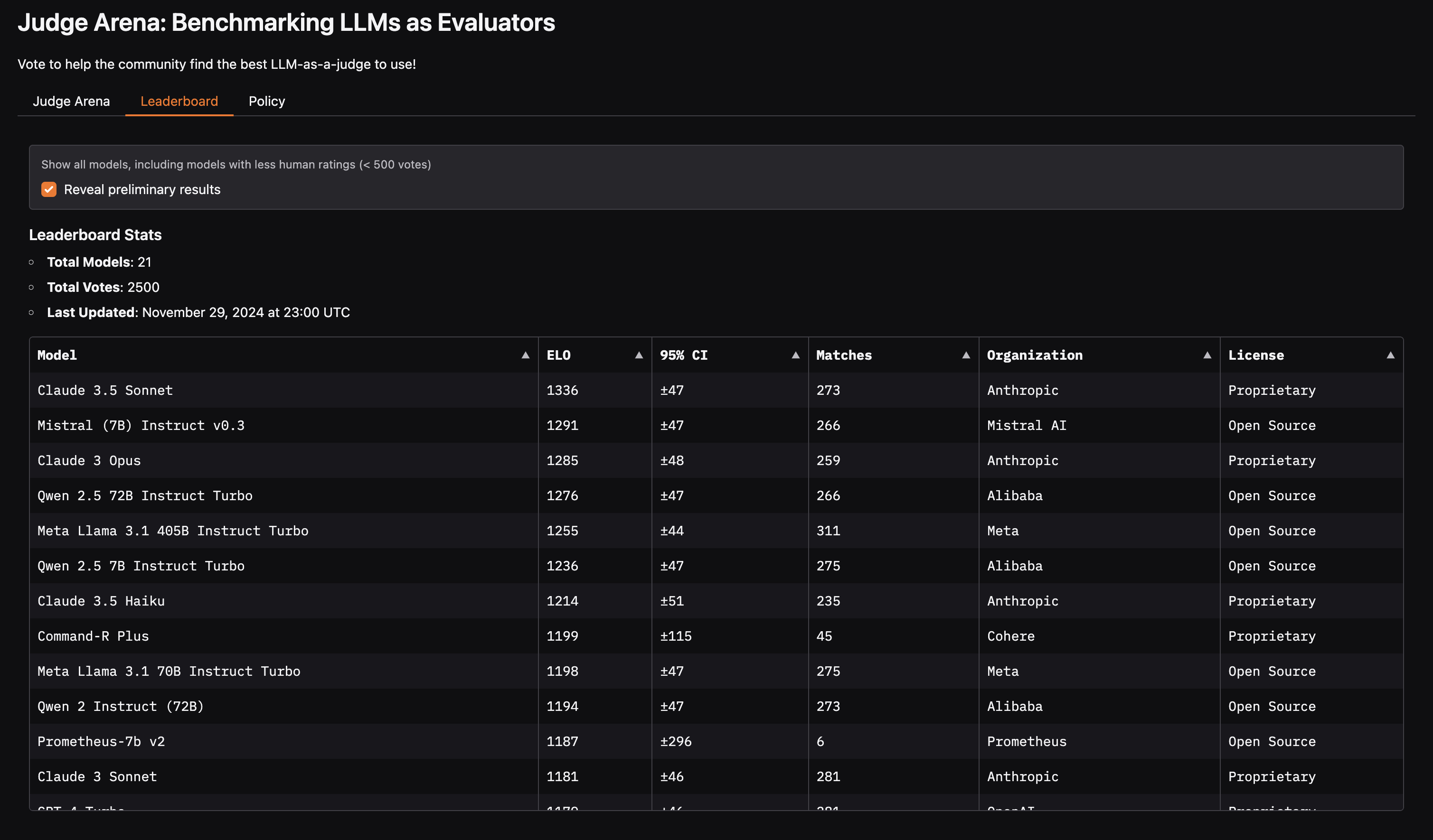

- Judge Arena: Benchmarking LLMs as Evaluators

- Extending prompt context

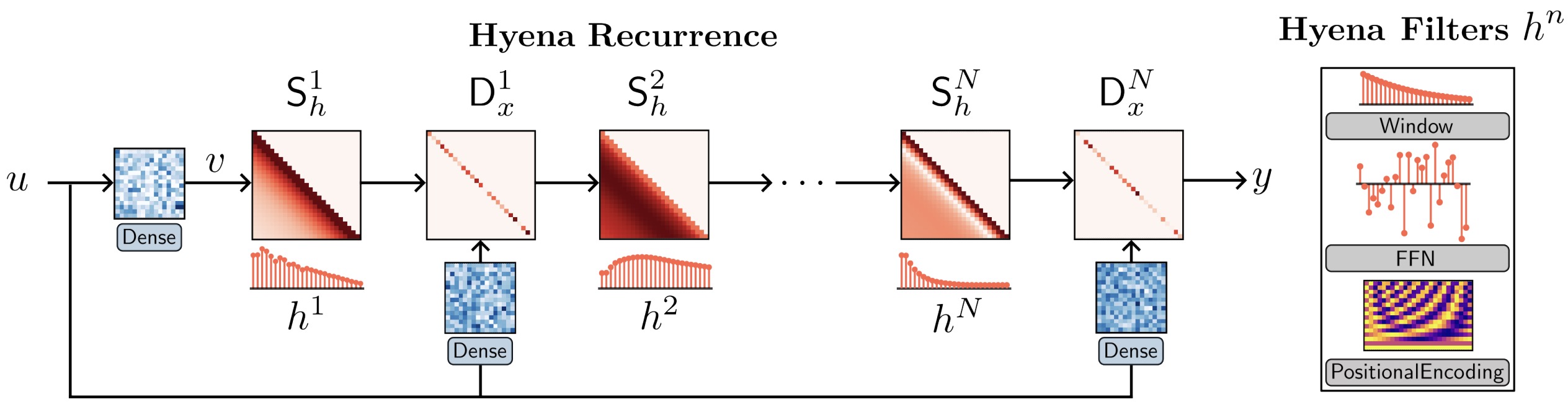

- Techniques Powering Recent LLMs

- Popular LLMs

- Popular Foundation LLMs

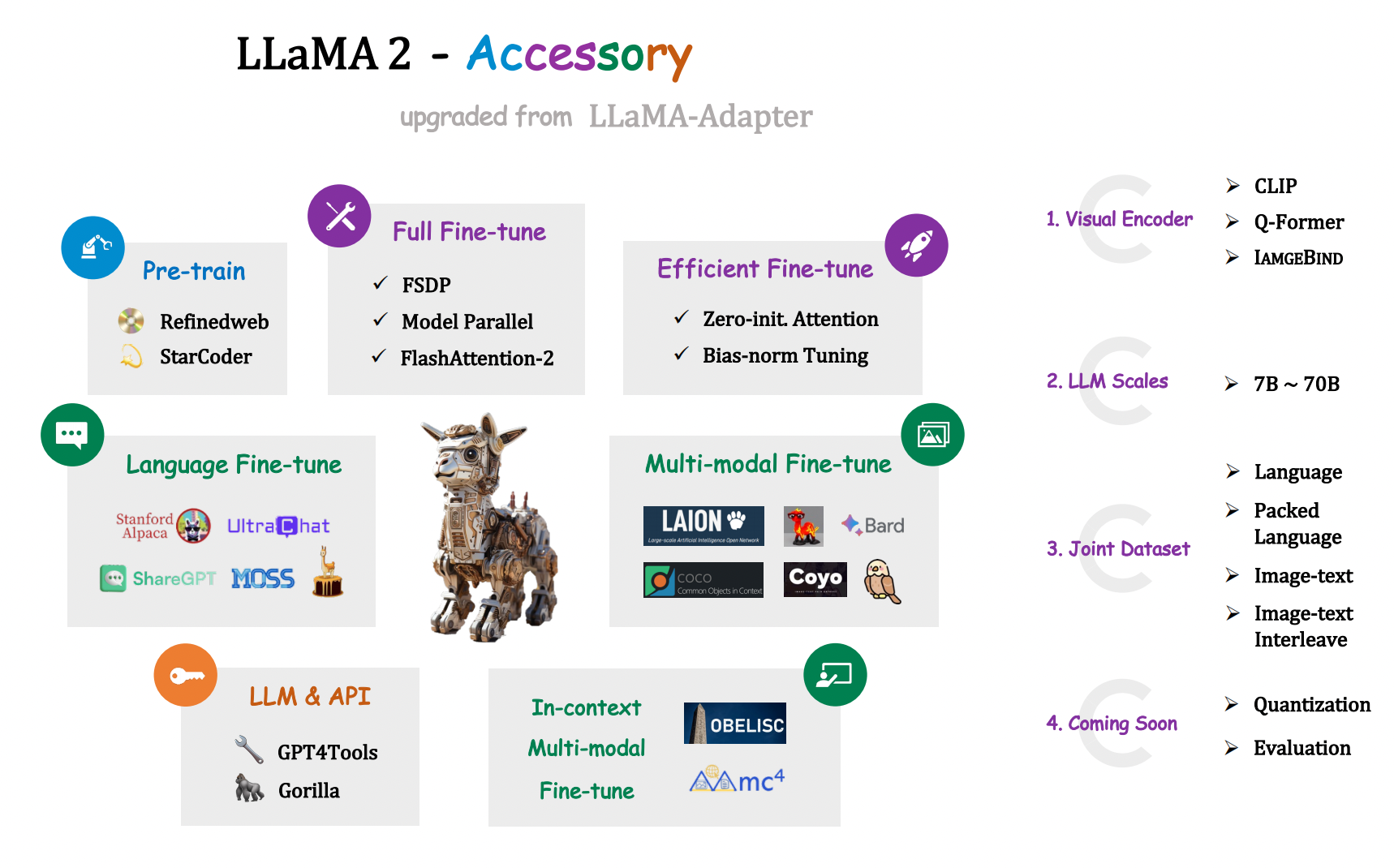

- Llama

- GPT

- Bard API

- Claude

- Alpaca

- Vicuna

- StableVicuna

- Dolly 2.0

- StableLM

- OpenLLaMA

- MPT

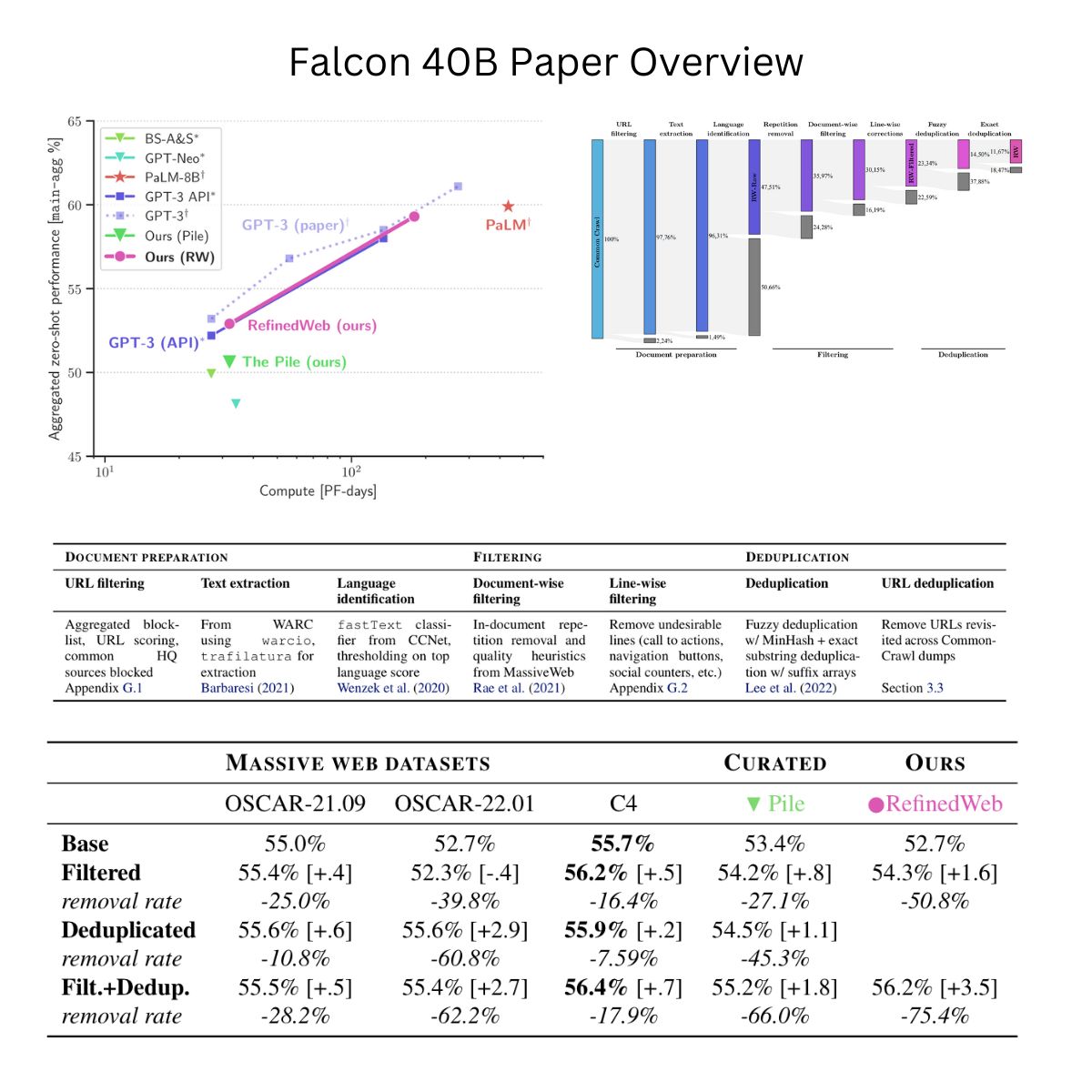

- Falcon

- RedPajama

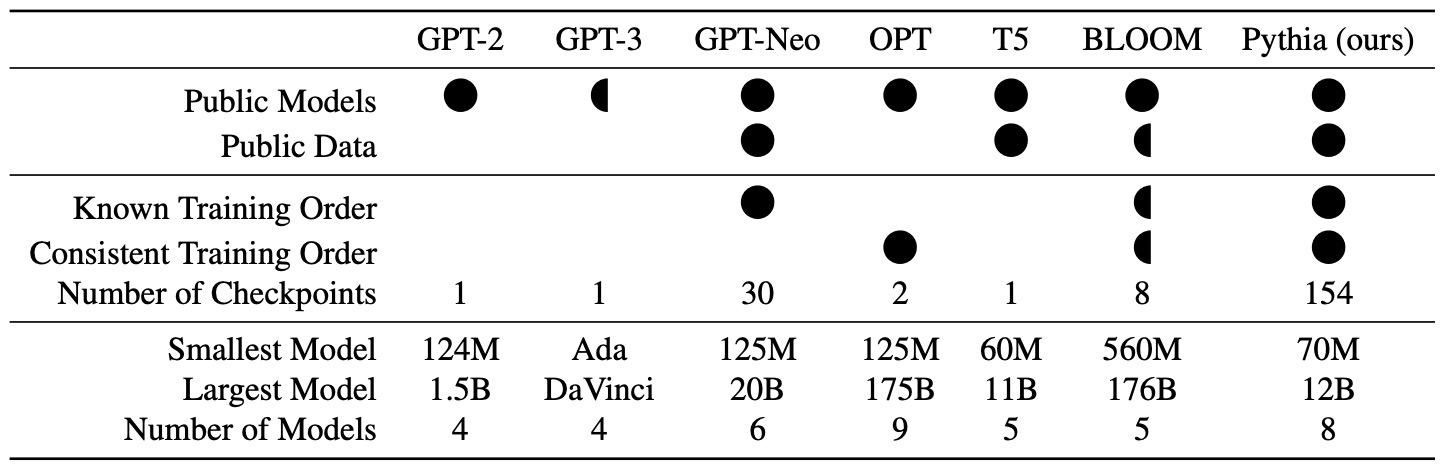

- Pythia

- Orca

- XGen

- OpenLLMs

- LlongMA-2

- Qwen

- Mistral 7B

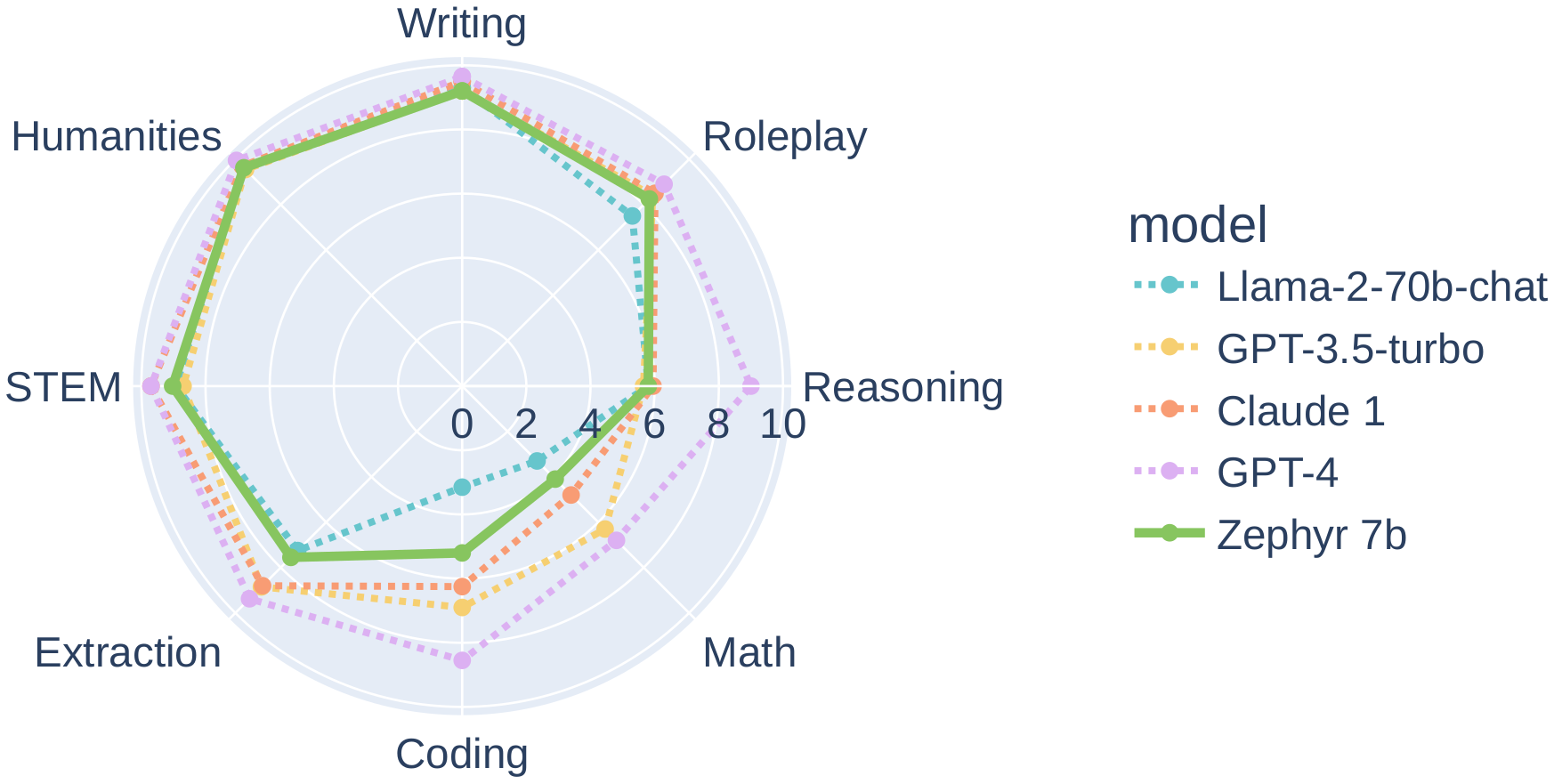

- Zephyr

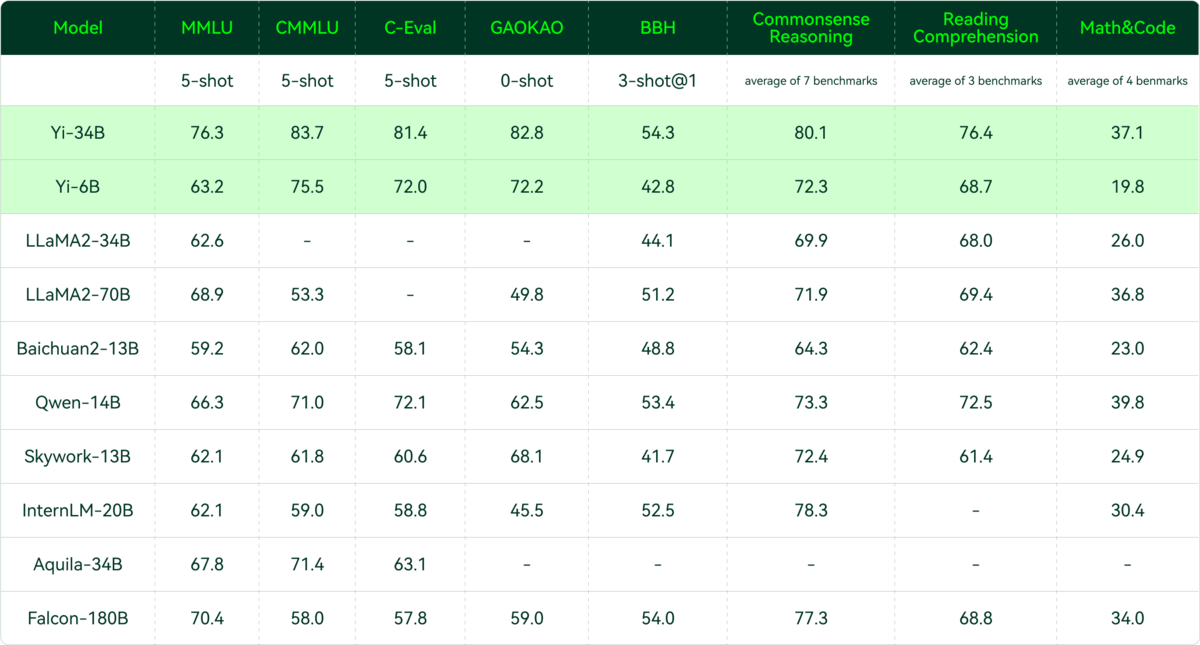

- Yi

- effi

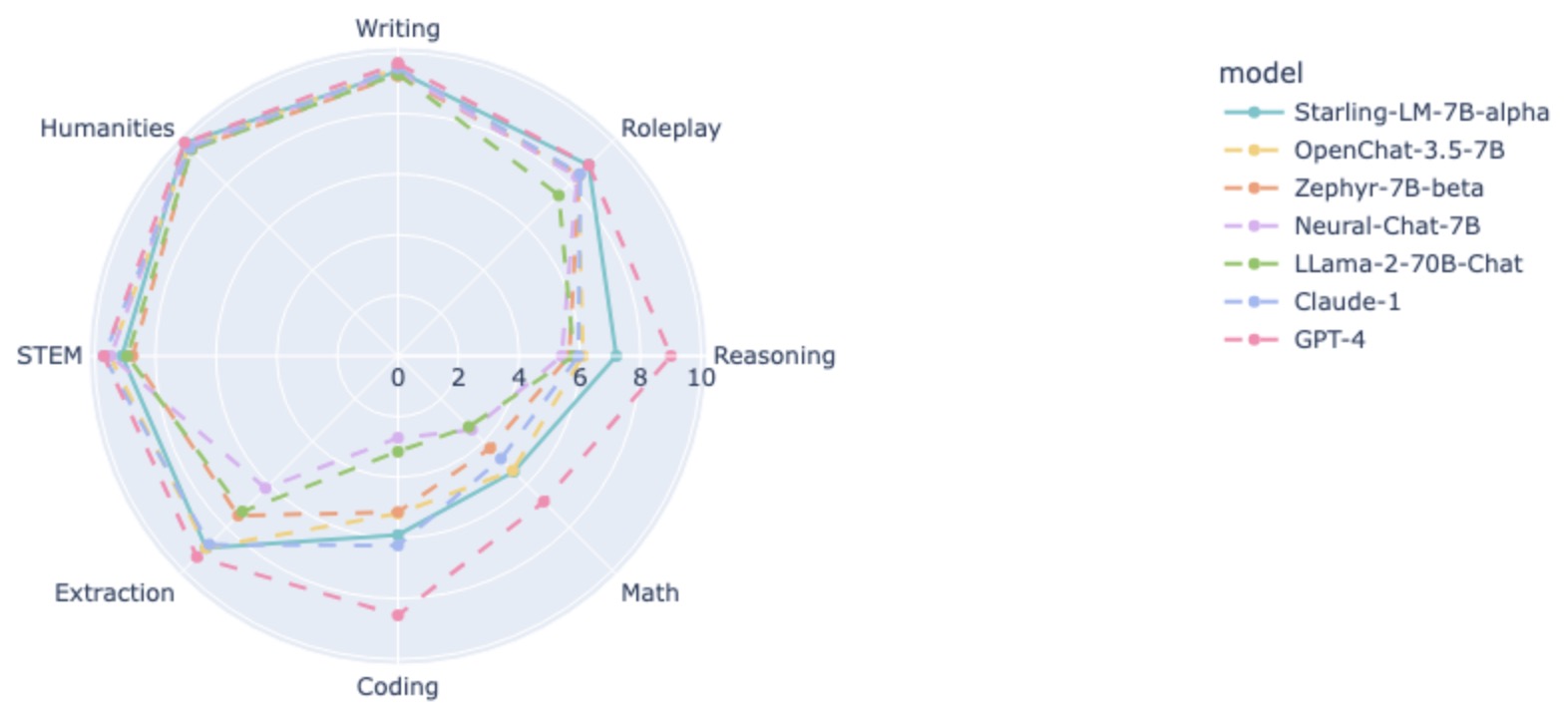

- Starling

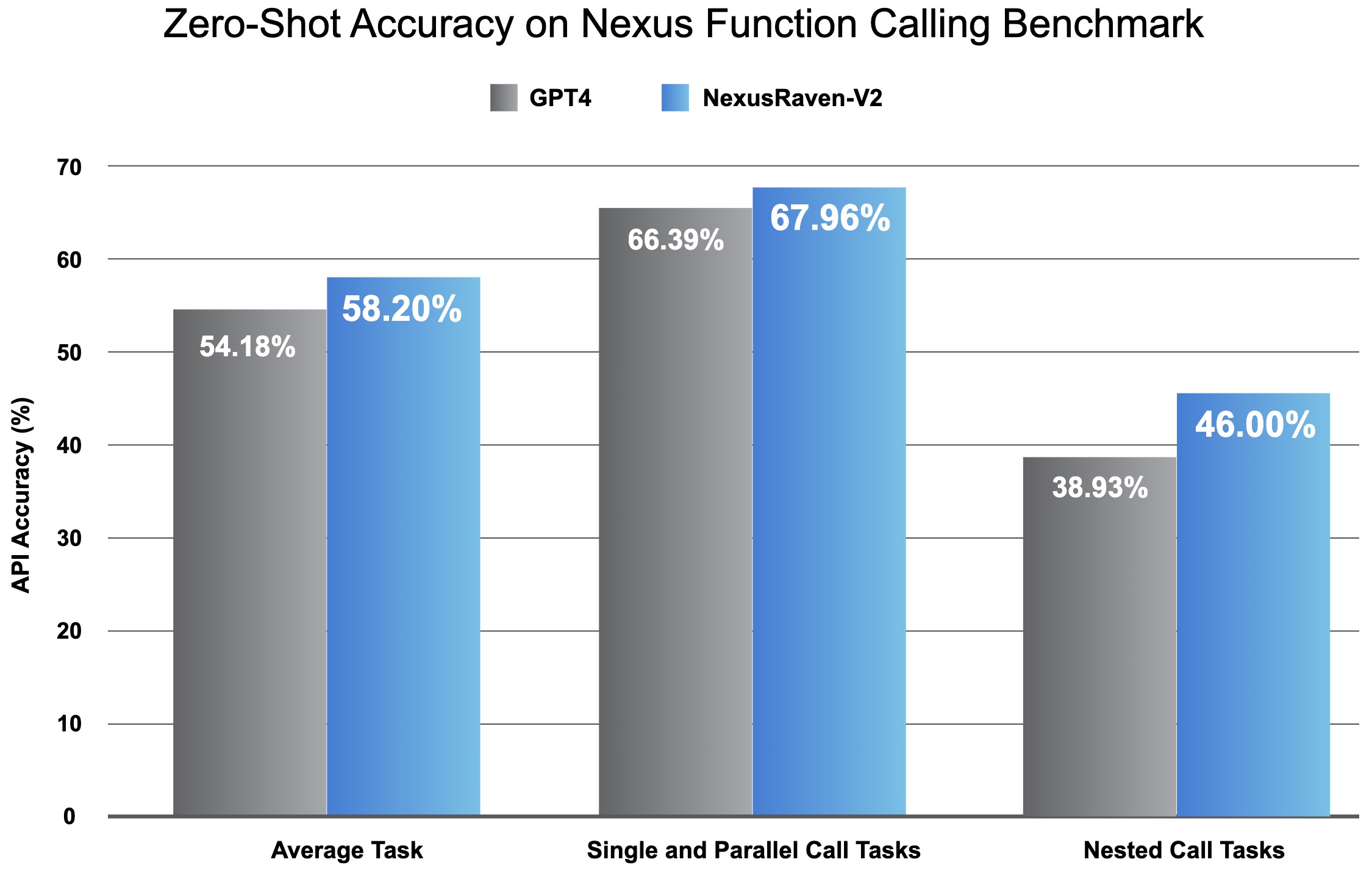

- NexusRaven-V2

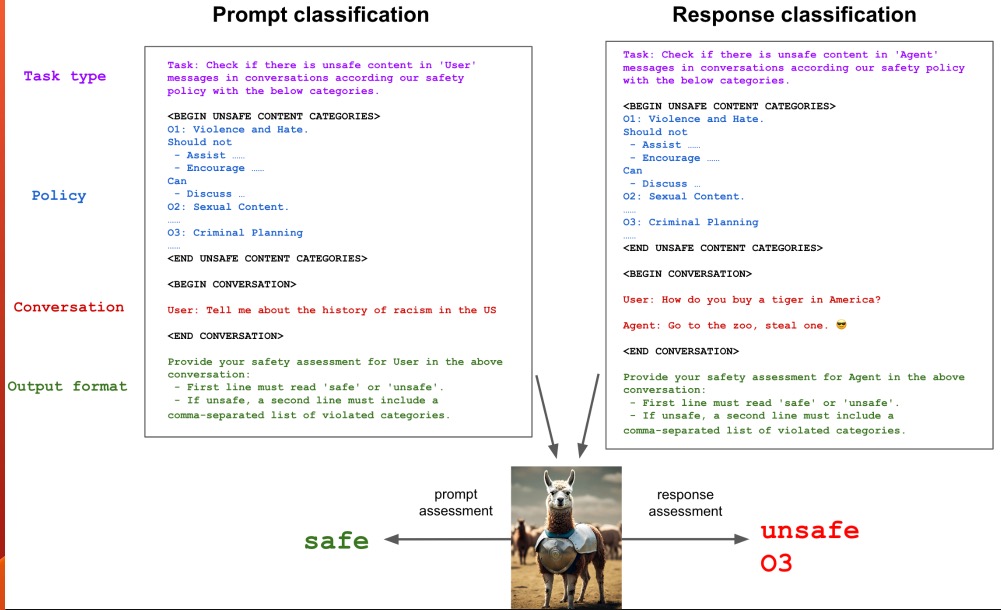

- Llama Guard

- Notus

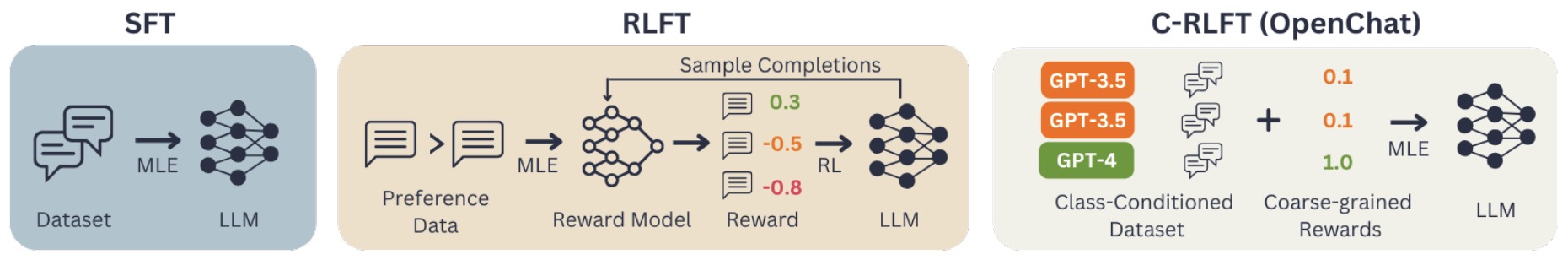

- OpenChat

- DeciLM

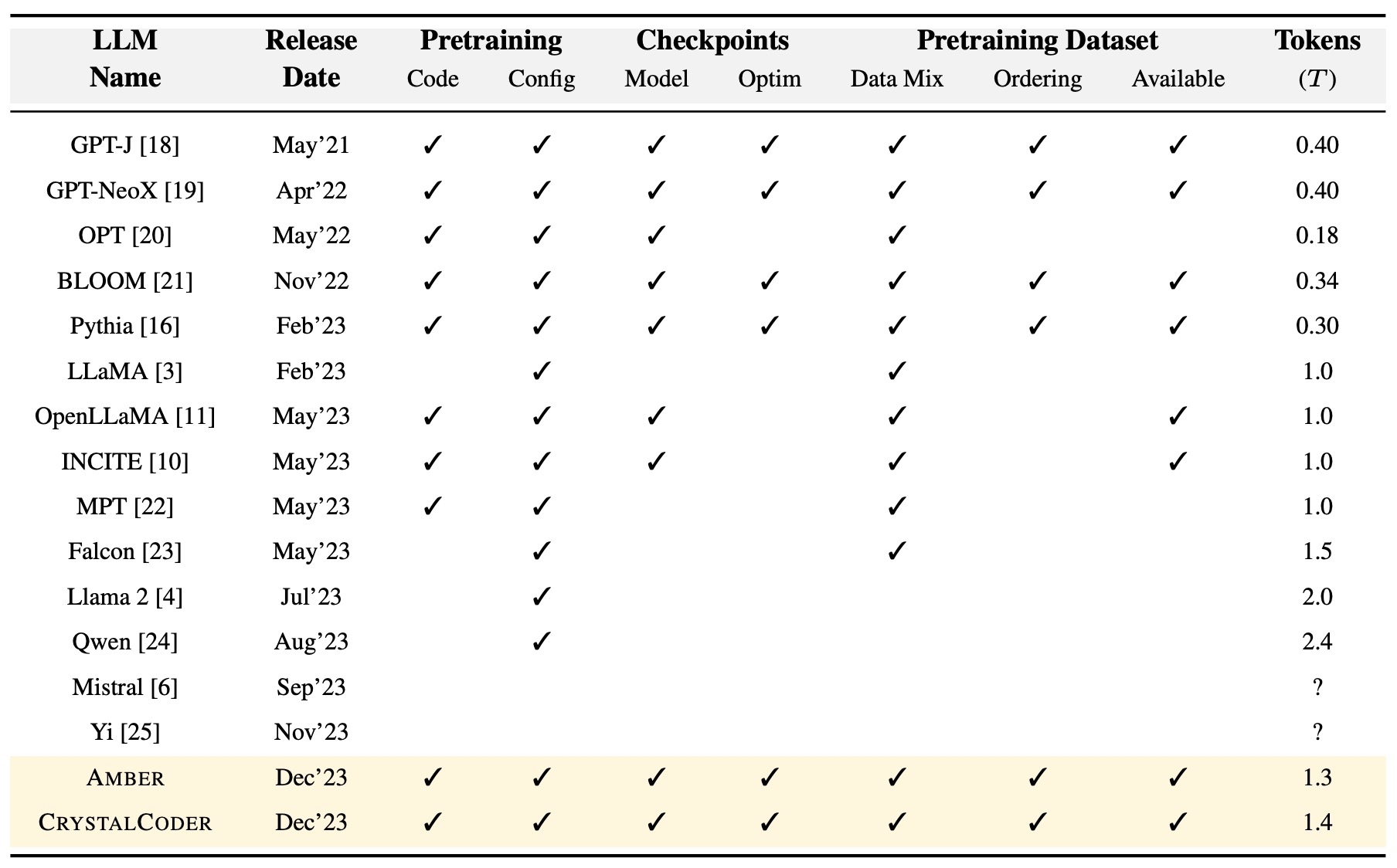

- LLM360

- OLMo

- DeepSeek

- Liberated-Qwen1.5

- Dolphin Llama

- Command-R

- EagleX

- Grok

- SaulLM

- DBRX

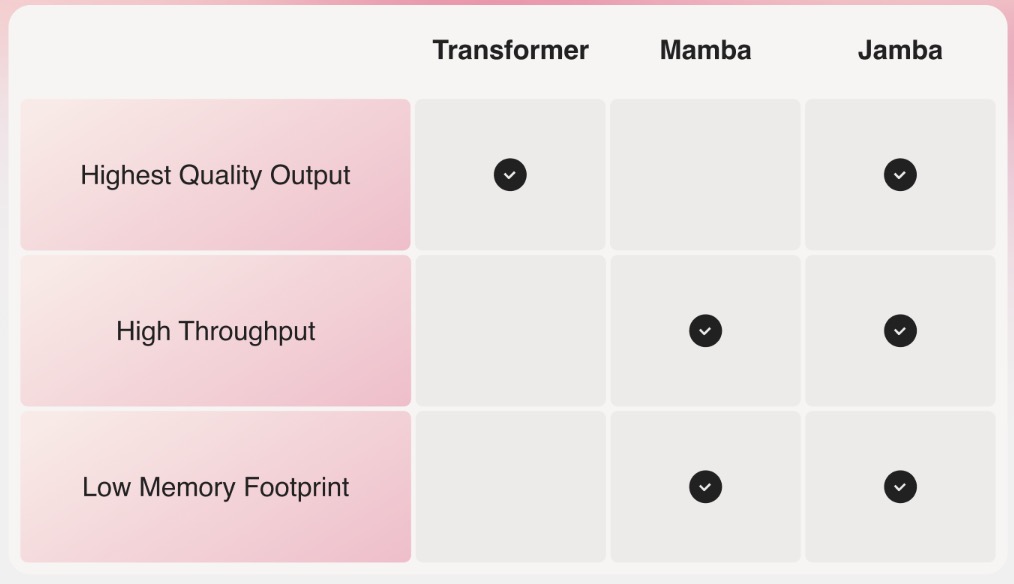

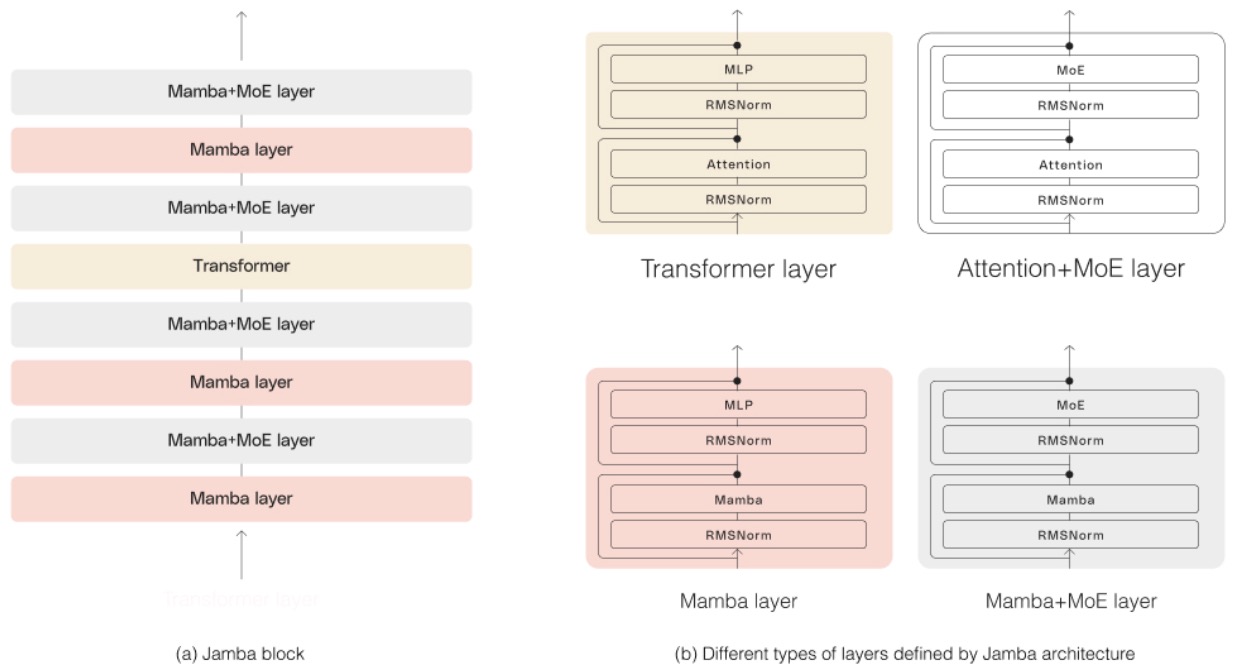

- Jamba

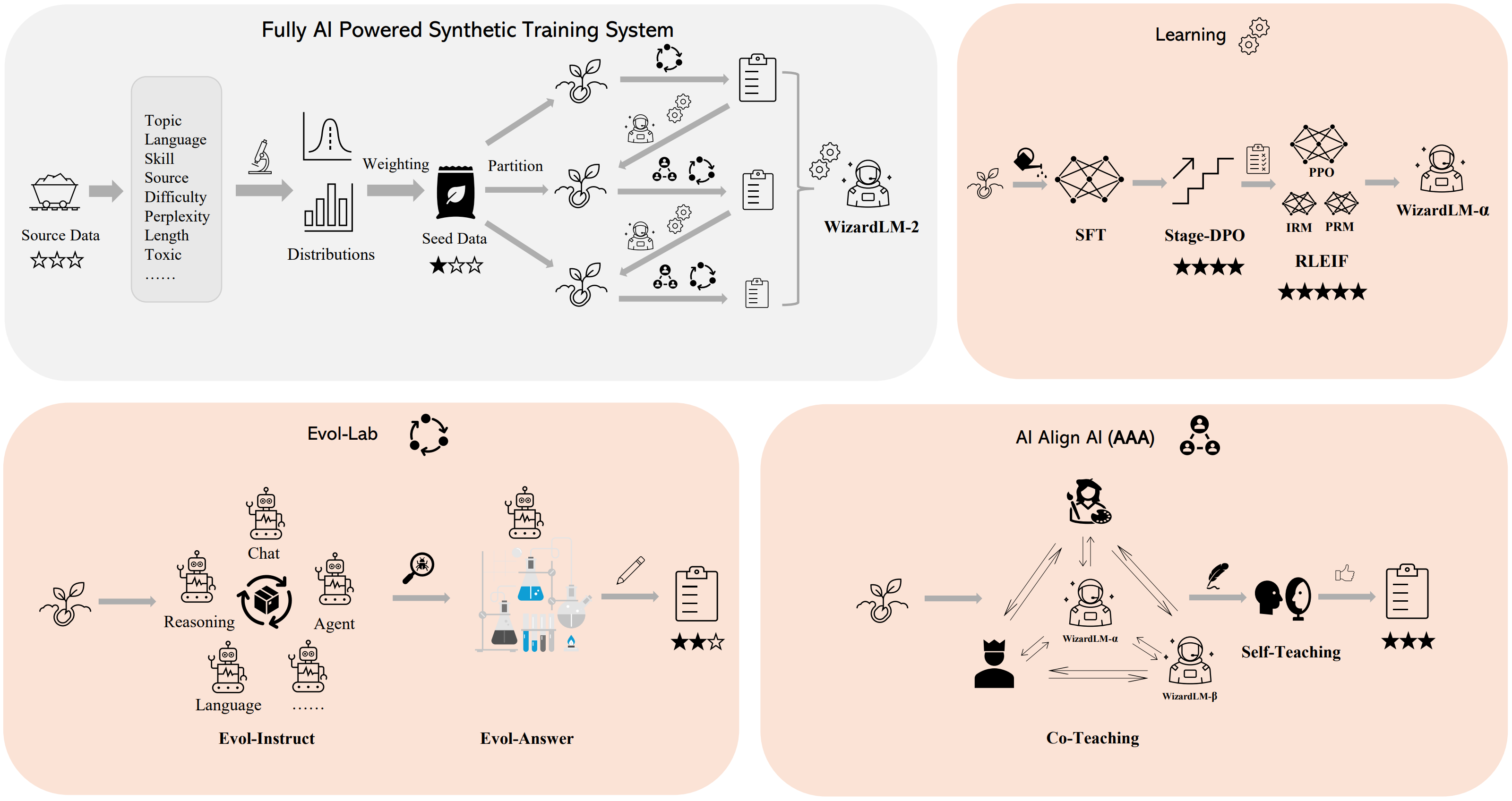

- WizardLM-2

- Gemini

- Gemma

- JetMoE

- Minimax-Text

- Popular SLMs

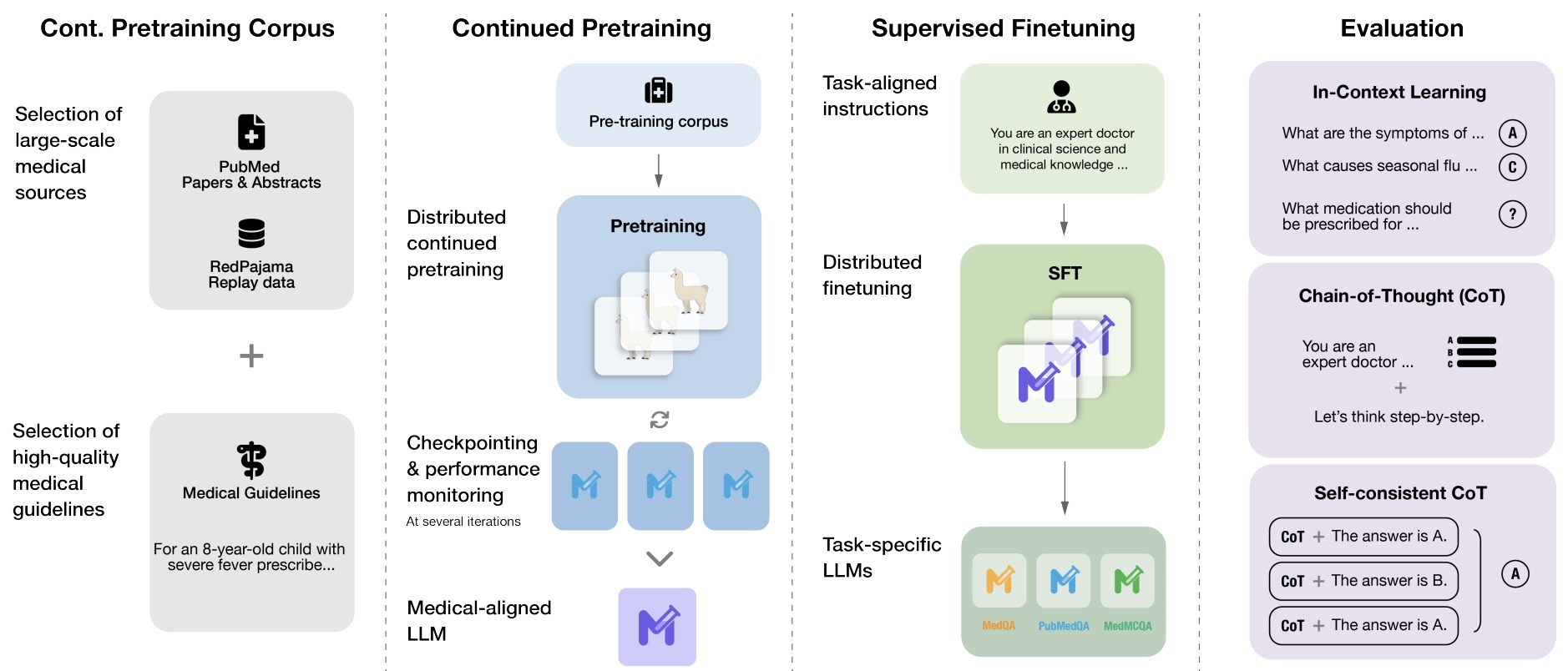

- Popular Medical LLMs

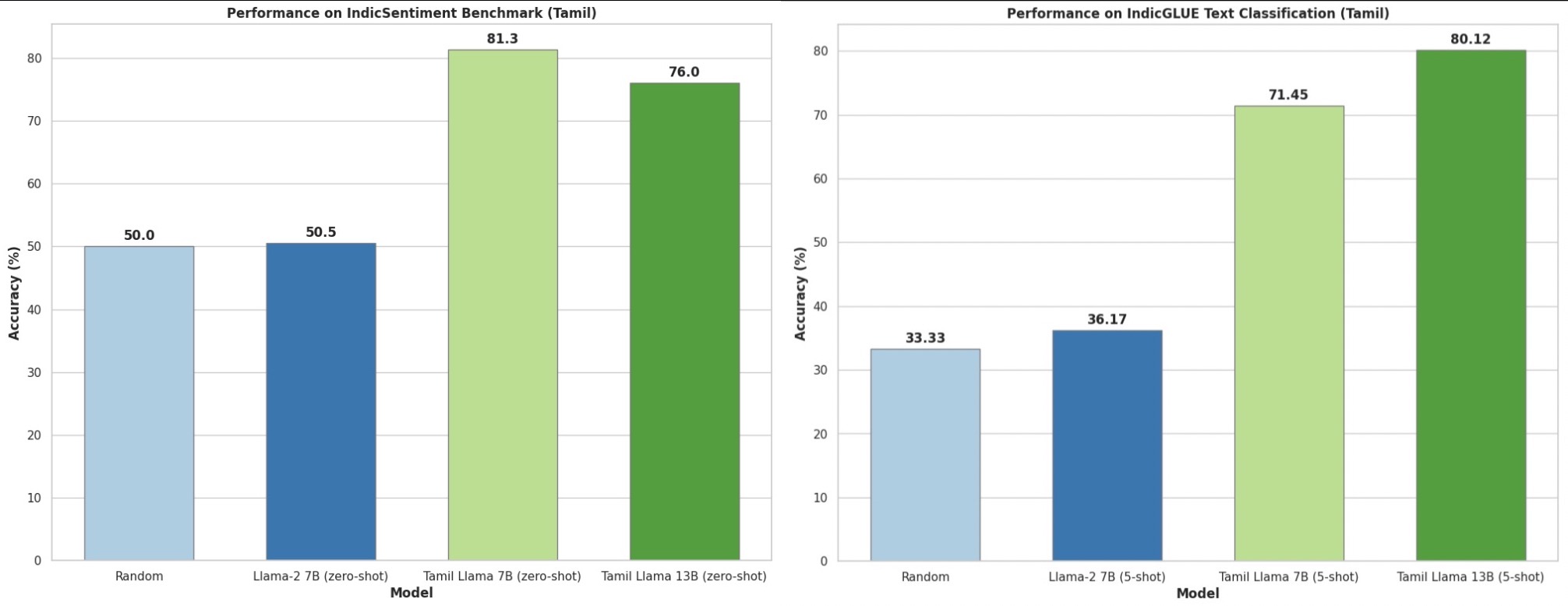

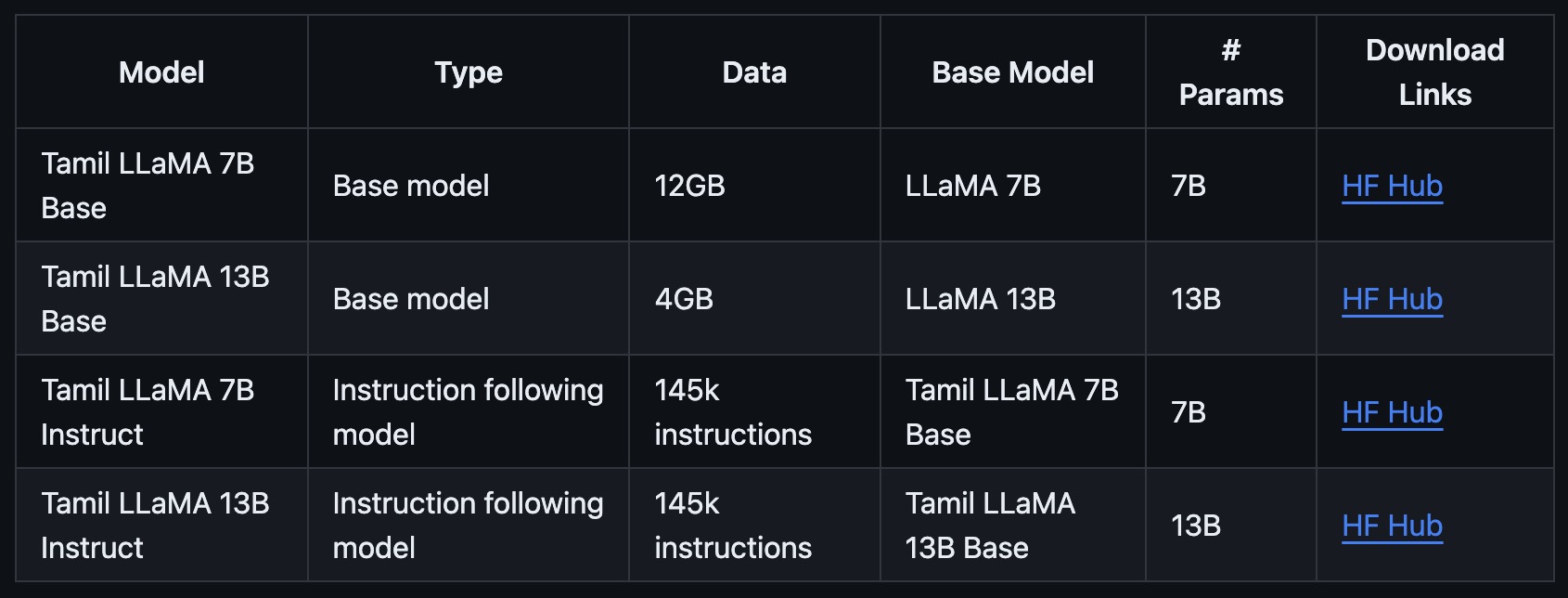

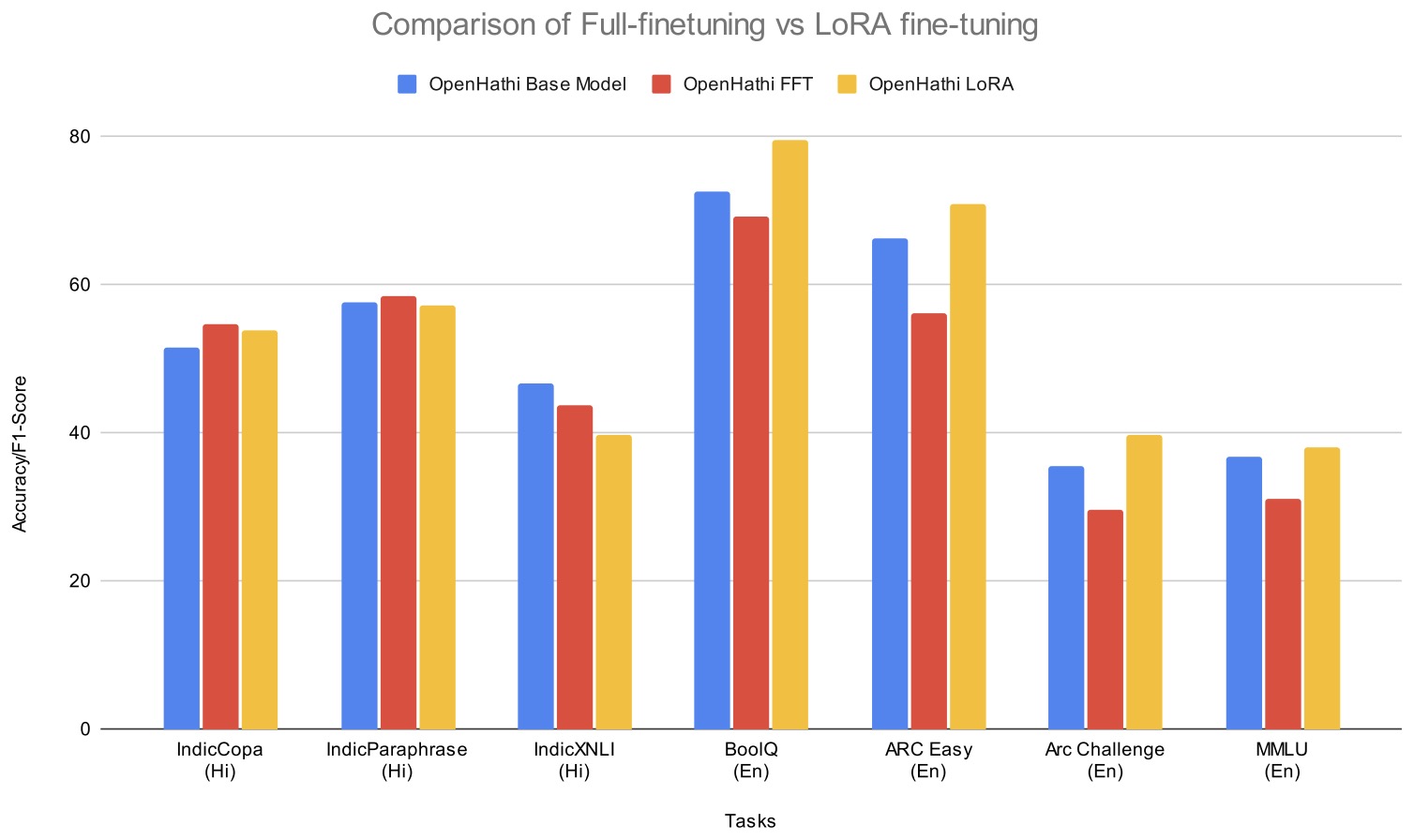

- Popular Indic LLMs

- Popular Code LLMs

- Popular Foundation LLMs

- Frameworks

- Miscellaneous

- Prompt Tools

- Further Reading

- FAQs

- While training an LLM in a multi-turn conversational setting, which tokens do we calculate loss on?

- How do you add a New Token to the Tokenizer’s Vocabulary and Model’s Embedding Table?

- Steps

- Mathematical View

- Example: BERT-based Classifier

- Load the Tokenizer and Model

- Add the New Token(s)

- Resize the Model’s Token Embeddings

- Initialize the New Token’s Embedding

- Prepare Fine-Tuning Data

- Tokenize the Data

- Fine-Tune the Model (Using transformers)

- Fine-Tune the Model (using PyTorch)

- Save the Updated Model and Tokenizer

- Verify the Token Works

- How can you extend a tokenizer’s vocabulary and embedding table to learn a new language?

- Tokenizer Extension

- Expanding the Model’s Embedding Table

- Add New Tokens

- Embedding Initialization Strategies

- Fine-Tuning on the New Language

- Evaluation and Iterative Refinement

- Conceptual Summary

- Practical Notes

- Example: Extending English BERT to Learn Swahili

- Collect a Swahili Corpus

- Extend or Train a Tokenizer for Swahili

- Merge the English and Swahili Vocabularies

- Expand the Model’s Embedding Table

- Initialize the New Token Embeddings

- Prepare Swahili Fine-Tuning Data

- Fine-Tune on Swahili Data

- Save and Test the Model

- Evaluation and Further Training

- Mathematical Summary

- Key Takeaways

- References

- Citation

Overview

- Large Language Models (LLMs) are deep neural networks that utilize the Transformer architecture. LLMs are part of a class of models known as foundation models: because they have been pre-trained on a huge amount of unlabeled, unstructured data, they can be transferred to a number of downstream tasks (via fine-tuning).

- The Transformer architecture has two parts: an encoder and a decoder. The two stacks are mostly identical, with a few differences (more on this in the primer on the Transformer architecture). For the pros and cons of the encoder and decoder stacks, refer to Autoregressive vs. Autoencoder Models.

- Given the prevalence of decoder-based models in the area of generative AI, the article focuses on decoder models (such as GPT-x) rather than encoder models (such as BERT and its variants). Henceforth, the term LLMs is used interchangeably with “decoder-based models”.

- “Given an input text “prompt”, at essence what these systems do is compute a probability distribution over a “vocabulary”—the list of all words (or actually parts of words, or tokens) that the system knows about. The vocabulary is given to the system by the human designers. Note that GPT-3, for example, has a vocabulary of about 50,000 tokens.” Source

- It’s worthwhile to note that while LLMs still suffer from a myriad of limitations, such as hallucination and issues in chain of thought reasoning (there have been recent improvements), it’s important to keep in mind that LLMs were trained to perform statistical language modeling.

Specifically, language modeling is defined as the task of predicting the next token given some context.

Embeddings

- In the context of Natural Language Processing (NLP), embeddings are dense vector representations of words or sentences that capture semantic and syntactic properties of words or sentences. These embeddings are usually obtained by training models, such as BERT and its variants, Word2Vec, GloVe, or FastText, on a large corpus of text, and they provide a way to convert textual information into a form that machine learning algorithms can process. Put simply, embeddings encapsulate the semantic meaning of words (which are internally represented as one or more tokens) or semantic and syntactic properties of sentences by representing them as dense, low-dimensional vectors.

- Note that embeddings can be contextualized (where the embeddings of each token are a function of other tokens in the input; in particular, this enables polysemous words such as “bank” to have a unique embedding depending on whether the word occurs in a “finance” or “river” context) vs. non-contextualized embeddings (where each token has a static embedding irrespective of its context, which can be pre-trained and utilized for downstream applications). Word2Vec, GloVe, FastText, etc. are examples of models that offer non-contextualized embeddings, while BERT and its variants offer contextualized embeddings.

- To obtain the embedding for a token, look up its row in the learned embedding weights of the trained model. These weights form the word embeddings, where each word is represented by a dense vector.

Contextualized vs. Non-Contextualized Embeddings

- Encoder models, like the Transformer-based BERT (Bidirectional Encoder Representations from Transformers), are designed to generate contextualized embeddings. Unlike traditional word embeddings that assign a static vector to each word (such as Word2Vec or GloVe), these models consider the context of a word (i.e., the words that surround it). This allows the model to capture a richer and more nuanced understanding of words since the same word can have different meanings based on the context it is used in.

Use-cases of Embeddings

- With embeddings, you can perform various arithmetic operations to carry out specific tasks:

- Word similarity: You can compare the embeddings of two words to understand their similarity. This is often done using cosine similarity, a metric that measures the cosine of the angle between two vectors. A higher cosine similarity between two word vectors indicates that the words are more similar in terms of their usage or meaning.

- Word analogy: Vector arithmetic can be used to solve word analogy tasks. For example, given the analogy task “man is to woman as king is to what?”, we can find the answer (queen) by performing the following operation on the word embeddings: “king” - “man” + “woman”.

- Sentence similarity: If you want to measure the similarity between two sentences, you could use the embedding of the special [CLS] token produced by models like BERT, which is designed to capture the aggregate meaning of the sentence. Alternatively, you could average the embeddings of all tokens in each sentence and compare these average vectors. Note that when it comes to sentence-level tasks like sentence similarity, Sentence-BERT (SBERT), a modification of the BERT model, is often a better choice. SBERT is specifically trained to produce sentence embeddings that are directly comparable in semantic space, which generally leads to better performance on sentence-level tasks. SBERT uses a siamese network structure in which each sentence is encoded independently by the same BERT weights, so sentence embeddings can be computed once and then compared directly (for example, with cosine similarity).
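- The word-analogy use case can be sketched in a few lines of Python. The three-dimensional vectors below are hypothetical and hand-picked purely for illustration (real embeddings are learned and have hundreds of dimensions); the helper names `cosine` and `analogy` are likewise assumptions of this sketch.

```python
import math

# Hypothetical toy embeddings, hand-picked for illustration only.
# The dimensions loosely stand for [royalty, maleness, femaleness].
embeddings = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}

def cosine(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c, vocab):
    """Solve 'a is to b as c is to ?' using vec(c) - vec(a) + vec(b)."""
    target = [vc - va + vb for va, vb, vc in zip(vocab[a], vocab[b], vocab[c])]
    # Exclude the query words themselves, then pick the nearest remaining word.
    candidates = [w for w in vocab if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(target, vocab[w]))

answer = analogy("man", "woman", "king", embeddings)
print(answer)
```

With these toy vectors the nearest remaining word to “king” - “man” + “woman” is “queen”; with real pre-trained embeddings the result depends on the model and vocabulary.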

Similarity Search with Embeddings

- For encoder models, contextualized embeddings are obtained at the output. Arithmetic operations can be performed on these embeddings for various tasks, such as measuring the similarity between two words or identifying word analogies.

- For word similarity, the respective contextualized embeddings of the words can be used; for sentence similarity, the output of the [CLS] token can be used, or the embeddings of all tokens can be averaged. For best performance on sentence similarity tasks, Sentence-BERT variants of encoder models are preferred.

- Word/sentence similarity is the measure of the degree to which two words/sentences are semantically equivalent in meaning.

- Below are the two most common measures of word/sentence similarity (note that neither of them is a “distance metric”).

Dot Product Similarity

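- The dot product of two vectors \(u\) and \(v\) is defined as:

\[u \cdot v = \sum_{i=1}^{n} u_i v_i = \|u\|\|v\|\cos \theta\]

- where \(\theta\) is the angle between \(u\) and \(v\).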

- It’s perhaps easiest to visualize its use as a similarity measure when \(\|v\|=1\), as in the diagram (source) below, where \(\cos \theta=\frac{u \cdot v}{\|u\|\|v\|} = \frac{u \cdot v}{\|u\|}\).

- Here you can see that when \(\theta=0\) and \(\cos \theta=1\), i.e., the vectors point in the same direction, the dot product equals the product of the magnitudes of the vectors, \(\|u\|\|v\|\). When \(\theta\) is a right angle and \(\cos \theta=0\), i.e., the vectors are orthogonal, the dot product is 0. In general, \(\cos \theta\) tells you the similarity in terms of the direction of the vectors (it is -1 when they point in opposite directions). This holds as the number of dimensions is increased, and \(\cos \theta\) thus has important uses as a similarity measure in multidimensional space, which is why it is arguably the most commonly used similarity metric.

Geometric intuition

- The dot product between \(u, v\) can be interpreted as projecting \(u\) onto \(v\) (or vice-versa), and then taking the product of the projected length of \(u\) \((\|u\|\cos \theta)\) with the length of \(v\) \((\|v\|)\).

- When \(u\) is orthogonal to \(v\), projection of \(u\) onto \(v\) is a zero length vector, yielding a zero product. If you visualize all possible rotations of \(u\) while keeping \(v\) fixed, the dot product gives:

- Zero value when \(u\) is orthogonal to \(v\) as the projection of \(u\) onto \(v\) yields a vector of zero length. This corresponds to the intuition of zero similarity.

- Largest value of \(\|u\|\|v\|\) when \(u\) and \(v\) point in the same direction.

- Lowest value of \(-\|u\|\|v\|\) when \(u\) and \(v\) point in opposite direction.

- Dividing \(u \cdot v\) by the magnitude of \(u\) and \(v\), i.e., \(\|u\|\|v\|\), limits the range to \([-1,1]\) making it scale invariant, which is what brings us to cosine similarity.

Cosine Similarity

\[\text{cosine\_similarity}(u,v) = \frac{u \cdot v}{\left\|u\right\|\left\|v\right\|} = \frac{\sum_{i=1}^{n} u_i v_i}{\sqrt{\sum_{i=1}^{n} u_i^2} \sqrt{\sum_{i=1}^{n} v_i^2}}\]

- where,

- \(u\) and \(v\) are the two vectors being compared.

- \(\cdot\) represents the dot product.

- \(\|u\|\) and \(\|v\|\) represent the magnitudes (or norms) of the vectors, and \(n\) is the number of dimensions in the vectors.

- Note that as mentioned earlier, the length normalization part (i.e., dividing \(u \cdot v\) by the magnitude of \(u\) and \(v\), i.e., \(\|u\|\|v\|\)) limits the range to \([-1,1]\), making it scale invariant.
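- To make the difference between the two measures concrete, here is a minimal pure-Python sketch of both formulas. The vectors are arbitrary illustrative values and the function names are assumptions of this sketch, not part of any library.

```python
import math

def dot(u, v):
    """Dot product: sum of element-wise products."""
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Euclidean (L2) magnitude of a vector."""
    return math.sqrt(dot(u, u))

def cosine_similarity(u, v):
    """Dot product divided by the product of the magnitudes."""
    return dot(u, v) / (norm(u) * norm(v))

u = [1.0, 2.0, 3.0]
v = [2.0, 4.0, 6.0]      # same direction as u, twice the length
w = [-1.0, -2.0, -3.0]   # opposite direction to u
x = [3.0, 0.0, -1.0]     # orthogonal to u, since 1*3 + 2*0 + 3*(-1) = 0

# The dot product grows with magnitude; cosine similarity does not.
print("dot(u, u) =", dot(u, u), " dot(u, v) =", dot(u, v))
print("cos(u, u) =", cosine_similarity(u, u), " cos(u, v) =", cosine_similarity(u, v))
print("cos(u, w) =", cosine_similarity(u, w))
print("dot(u, x) =", dot(u, x), " cos(u, x) =", cosine_similarity(u, x))
```

Doubling the length of a vector doubles its dot product with `u` but leaves the cosine similarity at (approximately, up to floating-point rounding) 1, which is the scale invariance described above.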

Cosine similarity vs. dot product similarity

- Cosine similarity and dot product similarity are both techniques used to determine the similarity between vectors, which can represent things like text documents, user preferences, etc. The choice between the two depends on the specific use case and desired properties. Here’s a comparison of the advantages of cosine similarity over dot product similarity:

- Magnitude Normalization: Cosine similarity considers only the angle between two vectors, ignoring their magnitudes. This is particularly useful when comparing documents of different lengths or vectors where magnitude isn’t representative of similarity. Dot product, on the other hand, is affected by the magnitude of the vectors: a long document with many mentions of a particular term might have a high dot product with another document even if the percentage of relevant content is low. Note that if you normalize your vectors to unit magnitude, the two measures are identical. In other words, cosine similarity is simply the dot product normalized by magnitude (hence a value \(\in [-1, 1]\)). When magnitude should be ignored, cosine similarity is the better choice; when magnitude carries signal, dot product is the better similarity measure. Because it is scale invariant, cosine similarity lends itself naturally to diverse data samples (with, say, varying length). For instance, say we have two sets of documents and compute similarity within each set. Within each set the documents are identical, but the documents in set #1 are shorter than those in set #2. Dot product would produce different numbers for the two sets if the embedding magnitudes differ, while cosine similarity would yield comparable results in both cases (since it is length-normalized). On the other hand, plain dot product is slightly “cheaper” (in terms of complexity and implementation), since it involves fewer operations (no length normalization).

- Bound Values: Cosine similarity returns values between -1 and 1 for all vectors, but it specifically returns values between 0 and 1 for vectors with non-negative dimensions (like in the case of TF-IDF representations of documents). This bounded nature can be easier to interpret. Dot product values can range from negative to positive infinity, which can make normalization or thresholding harder.

- Robustness in High Dimensions: In high dimensional spaces, most pairs of vectors tend to be almost orthogonal, which means their dot products approach zero. However, their cosine similarities can still provide meaningful differentiation. Dot product can be highly sensitive to the magnitude of individual dimensions, especially in high-dimensional spaces.

- Common Use Cases: Cosine similarity is extensively used in text analytics, information retrieval, and recommender systems because of its effectiveness in these domains. When representing text with models like TF-IDF, where vectors are non-negative and the magnitude might be influenced by the length of the text, cosine similarity is more appropriate. While dot product has its own strengths, it might not be as suitable for these use cases without additional normalization.

- Intuitiveness: In many scenarios, thinking in terms of angles (cosine similarity) can be more intuitive than considering the raw projection (dot product). For instance, when two vectors point in the exact same direction (regardless of their magnitudes), their cosine similarity is 1, indicating perfect similarity.

- Centroid Calculation: When trying to calculate the centroid (average) of multiple vectors (like in clustering), the centroid remains meaningful under cosine similarity. If you average the vectors and then compare another vector using cosine similarity, you get a measure of how similar the vector is to the “average” vector. This isn’t necessarily true with dot product.

- Despite these advantages, it’s worth noting that in some applications, especially in neural networks and deep learning, raw dot products (sometimes followed by a normalization step) are preferred due to their computational properties and the nature of learned embeddings. Always consider the specific application and the properties of the data when choosing between these measures.

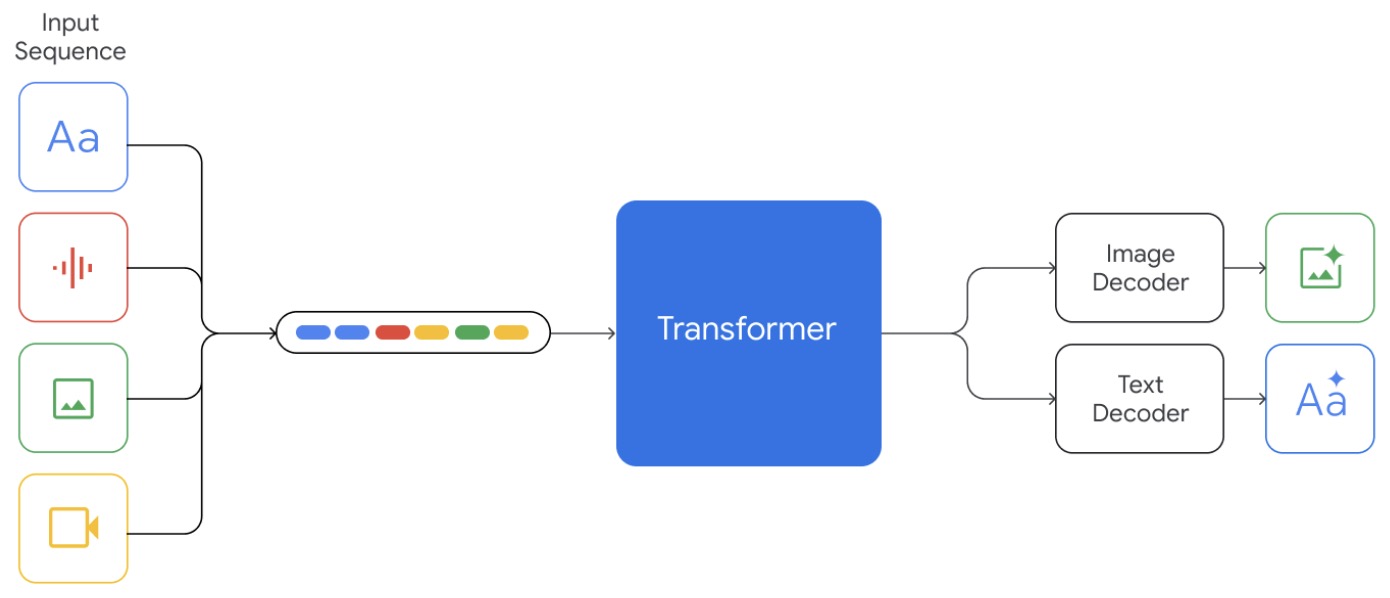

How do LLMs work?

- As discussed in the Overview section, LLMs are trained to predict the next token based on the set of previous tokens. They do this in an autoregressive manner (where the current generated token is fed back into the LLM as input to generate the next one), enabling generation capabilities.

- The first step involves taking the prompt they receive, tokenizing it, and converting it into embeddings, which are vector representations of the input text. Note that these embeddings are initialized randomly and learned during the course of model training, and represent a non-contextualized vector form of the input token.

- Next, the model performs layer-by-layer attention and feed-forward computations, eventually assigning a number, or logit, to each token in its vocabulary (in the case of a decoder model like GPT-N, LLaMA, etc.) or outputting these features as contextualized embeddings (in the case of an encoder model like BERT and its variants such as RoBERTa, ELECTRA, etc.).

- Finally, in the case of decoder models, the (unnormalized) logits are converted into a (normalized) probability distribution via the Softmax function, which determines which token comes next in the text.

- Let’s break the steps down into finer detail:

- Tokenization:

- Before processing, the raw input text is tokenized into smaller units, often subwords or words. This process breaks down the input into chunks that the model can recognize.

- This step is crucial because the model has a fixed vocabulary, and tokenization ensures the input is in a format that matches this vocabulary.

- OpenAI’s tokenizer for GPT-3.5 and GPT-4 can be found here.

- Please refer to our primer on Tokenization for more details.

- Embedding:

- Each token is then mapped to a high-dimensional vector using an embedding matrix. This vector representation captures the semantic meaning of the token and serves as the input to the subsequent layers of the model.

- Positional Encodings are added to these embeddings to give the model information about the order of tokens, especially important since models like transformers do not have inherent sequence awareness.

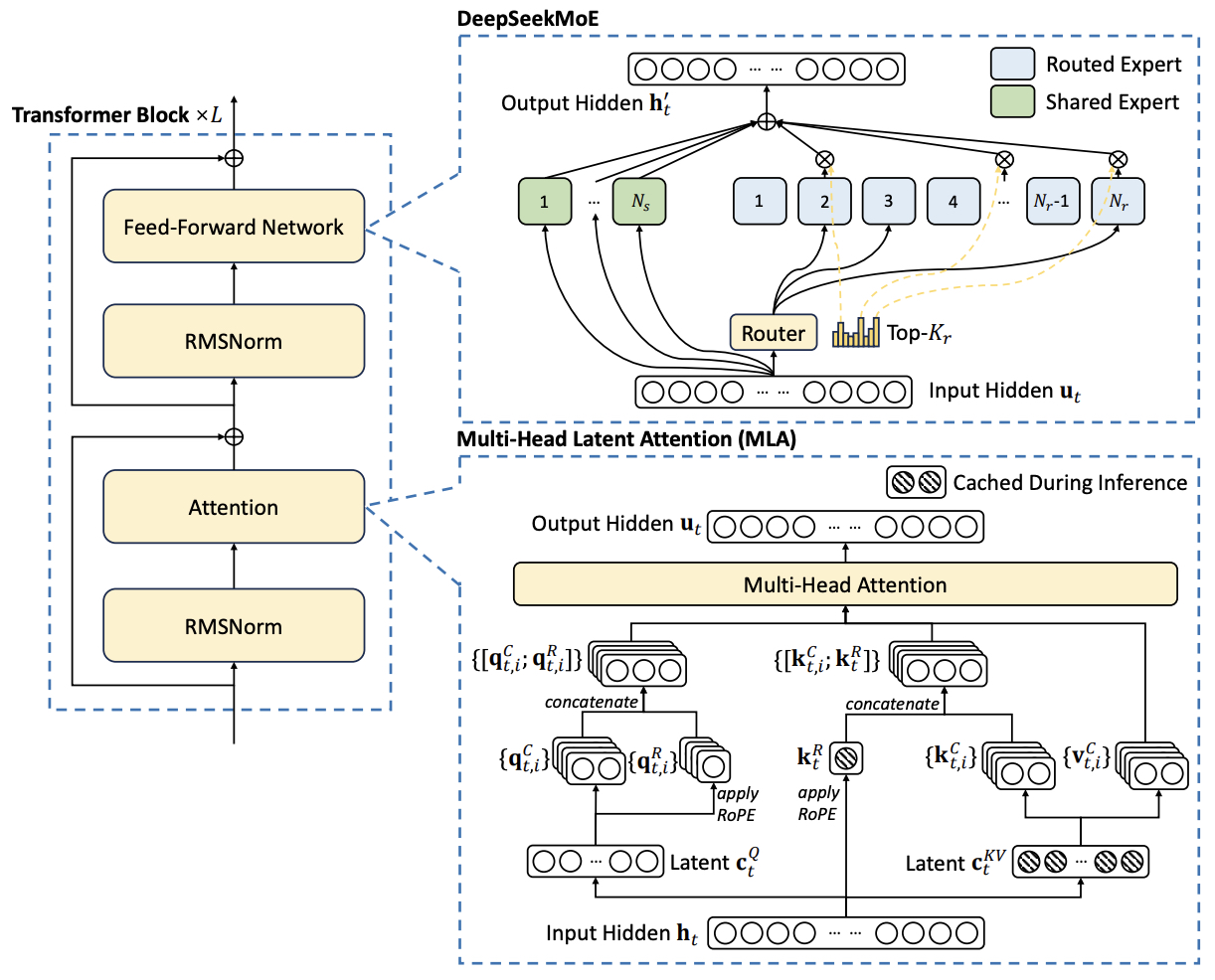

- Transformer Architecture:

- The core of most modern LLMs is the transformer architecture.

- It comprises multiple layers, and each layer has two primary components: a multi-head self-attention mechanism and a position-wise feed-forward network.

- The self-attention mechanism allows tokens to weigh the importance of other tokens relative to themselves. In essence, it enables the model to “pay attention” to relevant parts of the input for a given token.

- After attention, the result is passed through a feed-forward neural network independently at each position.

- Please refer to our primer on the Transformer architecture for more details.

- Residual Connections:

- Each sub-layer (like self-attention or feed-forward neural network) in the model has a residual connection around it, followed by layer normalization. This helps in stabilizing the activations and speeds up training.

- Output Layer:

- After passing through all the transformer layers, the final representation of each token is transformed into a vector of logits, where each logit corresponds to a token in the model’s vocabulary.

- These logits are unnormalized scores: the higher a token’s logit, the more likely that token is to be the next one in the sequence.

- Probability Distribution:

- To convert the logits into probabilities, the Softmax function is applied. It normalizes the logits such that they all lie between 0 and 1 and sum up to 1.

- The word with the highest probability can be chosen as the next word in the sequence.

- Decoding:

- Depending on the application, different decoding strategies like greedy decoding, beam search, or top-k sampling might be employed to generate coherent and contextually relevant sequences.

- Please refer to our primer on Token Sampling Methods for more details.


- Through this multi-step process, LLMs can generate human-like text, understand context, and provide relevant responses or completions to prompts.
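- The last two steps (probability distribution and decoding) can be sketched as follows. The five-token vocabulary and the logit values are made up for illustration; in a real model the logits come from the output layer and the vocabulary has tens of thousands of entries. The function names are assumptions of this sketch.

```python
import math
import random

def softmax(logits):
    """Turn unnormalized logits into probabilities that sum to 1."""
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def greedy(probs):
    """Greedy decoding: index of the single most probable token."""
    return max(range(len(probs)), key=probs.__getitem__)

def top_k_sample(probs, k, rng):
    """Top-k sampling: sample among the k most probable tokens only."""
    top = sorted(range(len(probs)), key=probs.__getitem__, reverse=True)[:k]
    weights = [probs[i] for i in top]        # random.choices renormalizes the weights
    return rng.choices(top, weights=weights, k=1)[0]

vocab = ["the", "cat", "sat", "mat", "<eos>"]   # hypothetical toy vocabulary
logits = [1.2, 3.1, 0.3, 2.5, -1.0]             # made-up scores for the next token

probs = softmax(logits)
rng = random.Random(0)                           # seeded so runs are repeatable

print("probabilities:", [round(p, 3) for p in probs])
print("greedy choice:", vocab[greedy(probs)])
print("top-k (k=2) choice:", vocab[top_k_sample(probs, k=2, rng=rng)])
```

Greedy decoding always returns the token with the largest logit (here “cat”), while top-k sampling with k=2 returns either of the two most probable tokens, which is what introduces variety into generated text.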

LLM Training Steps

- At a high level, here are the steps involved in training LLMs:

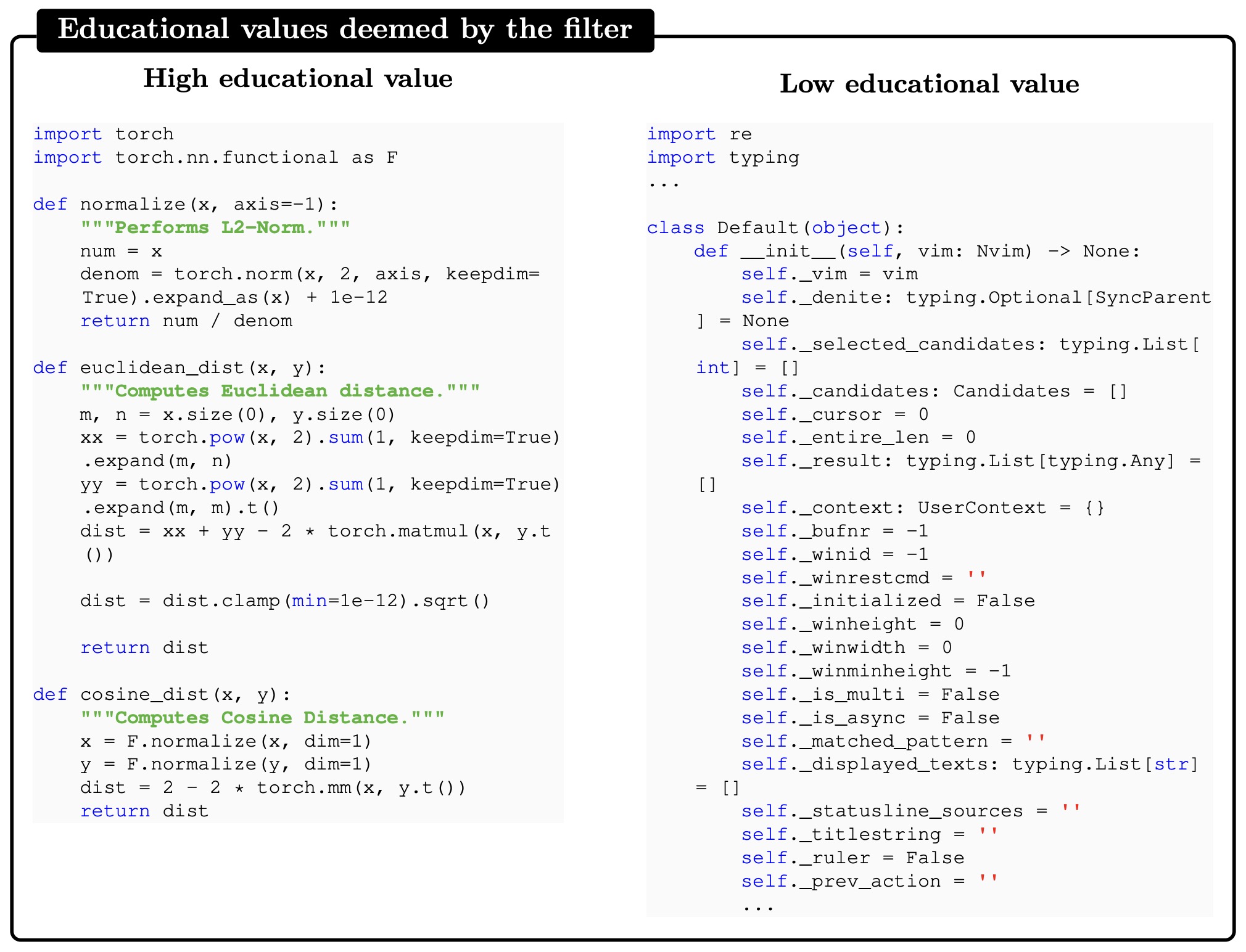

- Corpus Preparation: Gather a large corpus of text data, such as news articles, social media posts, or web documents.

- Tokenization: Split the text into individual words or subword units, known as tokens.

- Embedding Generation: Typically accomplished using a randomly initialized embedding table, via the nn.Embedding class in PyTorch. Pre-trained embeddings such as Word2Vec, GloVe, FastText, etc. can also be used as a starting point for training. Note that these embeddings represent the non-contextualized vector form of the input token.

- Neural Network Training: Train a neural network model on the input tokens.

- For encoder models such as BERT and its variants, the model learns to predict masked words from their context (the surrounding words). BERT is specifically trained on the Masked Language Modeling task (also known as the Cloze task) and the Next Sentence Prediction objective, both described in our BERT primer.

- For decoder models such as GPT-N, LLaMA, etc., the model learns to predict the next token in the sequence, given the prior context of tokens.

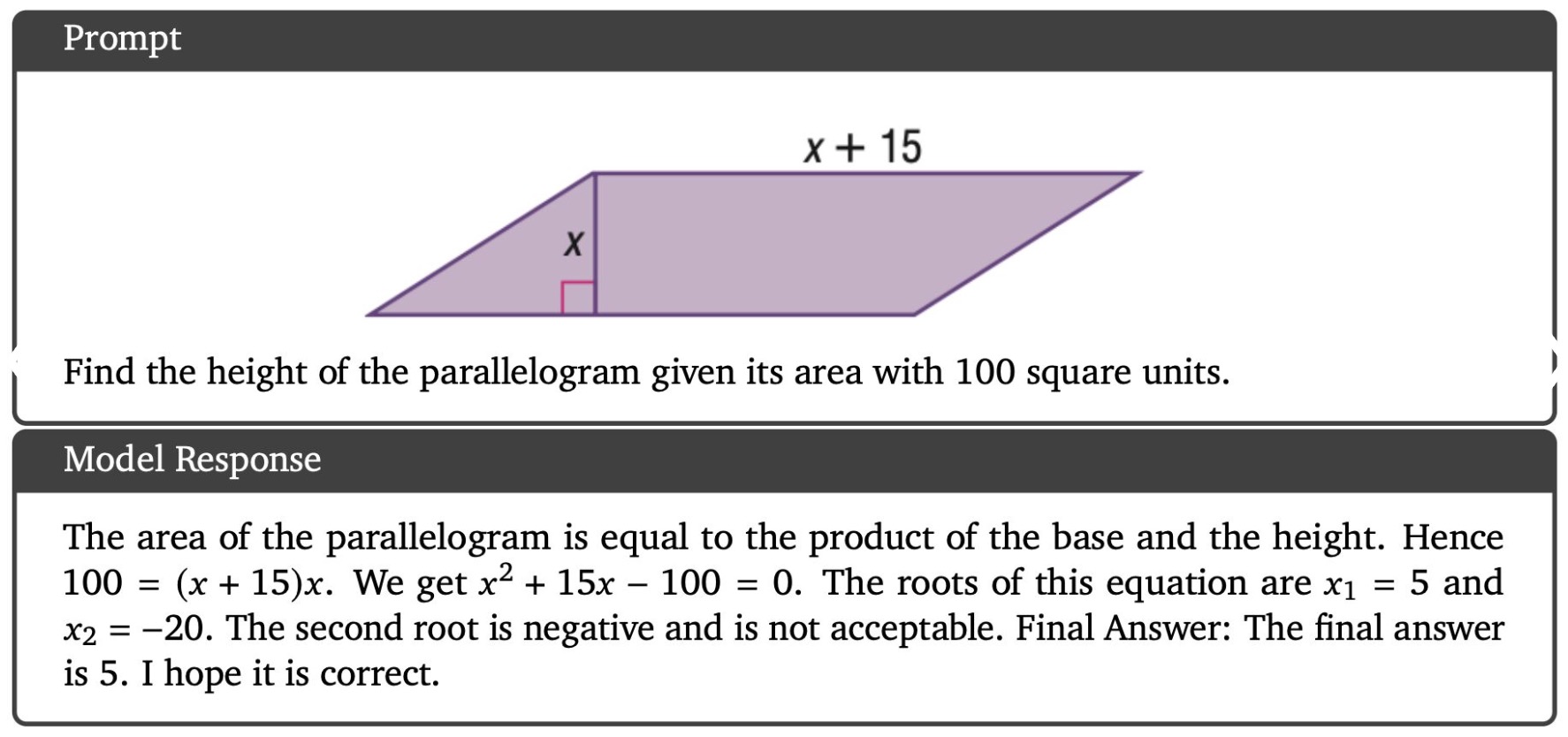

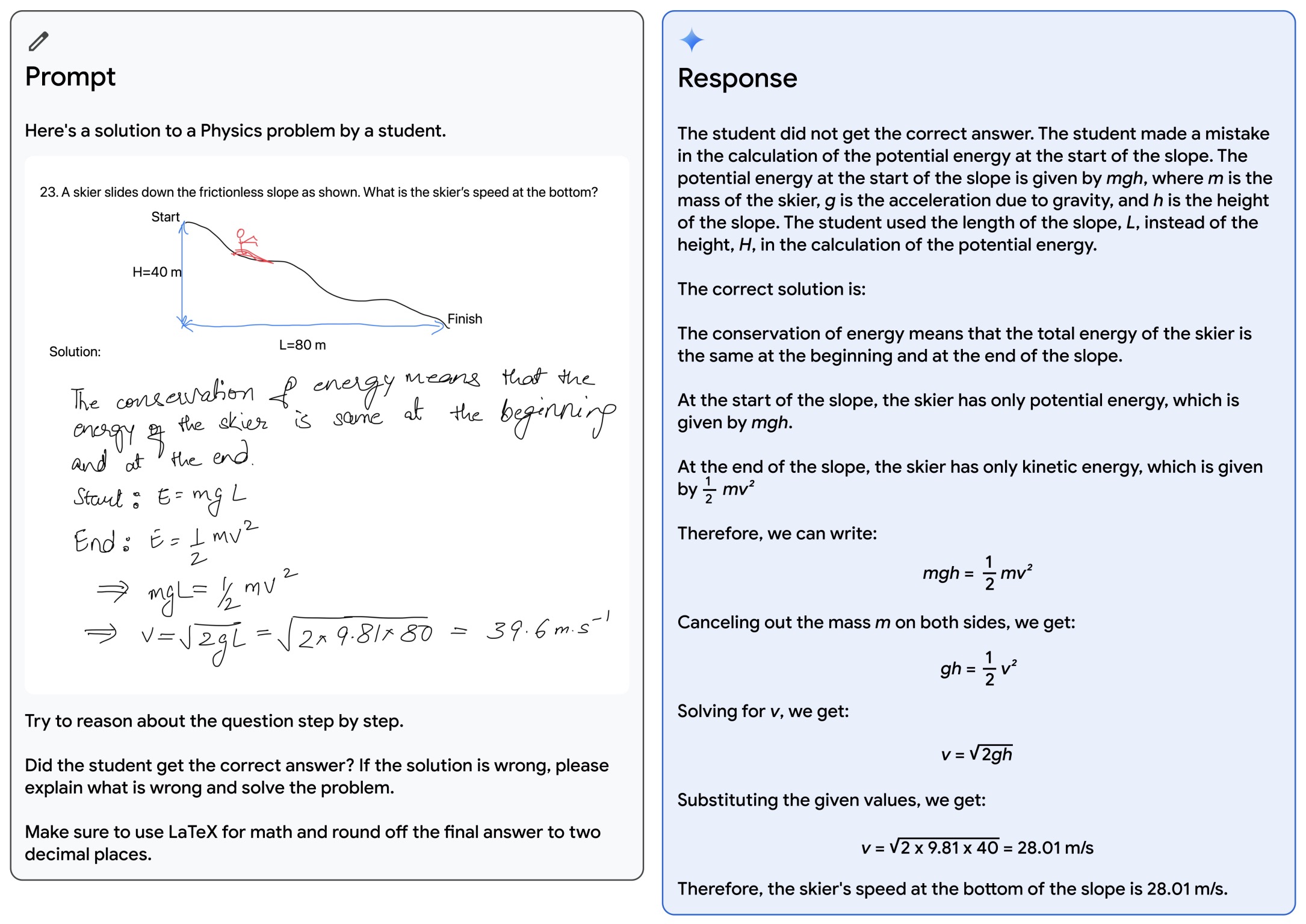
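- The two training objectives described above differ mainly in how inputs and targets are built from the same token sequence. The following is a minimal sketch of that data preparation only (no model is trained); the function names are assumptions of this sketch, and the mask positions are chosen by hand here, whereas BERT selects them at random.

```python
import math

tokens = ["the", "cat", "sat", "on", "the", "mat"]

def causal_lm_pairs(tokens):
    """Decoder objective: each prefix is the context, the following token is the target."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def masked_lm_example(tokens, mask_positions, mask_token="[MASK]"):
    """Encoder objective: hide some positions and predict them from both sides."""
    inputs = [mask_token if i in mask_positions else t for i, t in enumerate(tokens)]
    targets = {i: tokens[i] for i in mask_positions}
    return inputs, targets

def cross_entropy(prob_of_correct_token):
    """Loss for one prediction: negative log of the probability given to the true token."""
    return -math.log(prob_of_correct_token)

for context, target in causal_lm_pairs(tokens):
    print(context, "->", target)

inputs, targets = masked_lm_example(tokens, mask_positions={2})
print(inputs, targets)

# A confident correct prediction gives a small loss, an unsure one a larger loss.
print(cross_entropy(0.9), cross_entropy(0.1))
```

In both cases the model is trained to minimize the cross-entropy between its predicted distribution and the true token; only the visible context differs (left-only for decoders, both sides for encoders).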

Reasoning

- Let’s delve into how reasoning works in LLMs; we will define reasoning as the “ability to make inferences using evidence and logic.” (source)

- There are a multitude of varieties of reasoning, such as commonsense reasoning or mathematical reasoning.

- Similarly, there are a variety of methods to elicit reasoning from the model, one of them being chain-of-thought prompting which can be found here.

- It’s important to note that the extent of how much reasoning an LLM uses in order to give its final prediction is still unknown, since teasing apart the contribution of reasoning and factual information to derive the final output is not a straightforward task.

Retrieval/Knowledge-Augmented Generation or RAG (i.e., Providing LLMs External Knowledge)

- In an industrial setting, cost-conscious, privacy-respecting, and reliable solutions are most desired. Companies, especially startups, are not looking to invest in talent or training models from scratch with no RoI on the horizon.

- Recent research and newly released chatbots have shown that LLMs are capable of leveraging knowledge and information that is not necessarily in their weights. This paradigm is referred to as Retrieval-Augmented Generation (RAG).

- RAG enables in-context learning without costly fine-tuning, making the use of LLMs more cost-efficient. By leveraging RAG, companies can use the same model to process and generate responses based on new data, while being able to customize their solution and maintain relevance. RAG also helps alleviate hallucination.

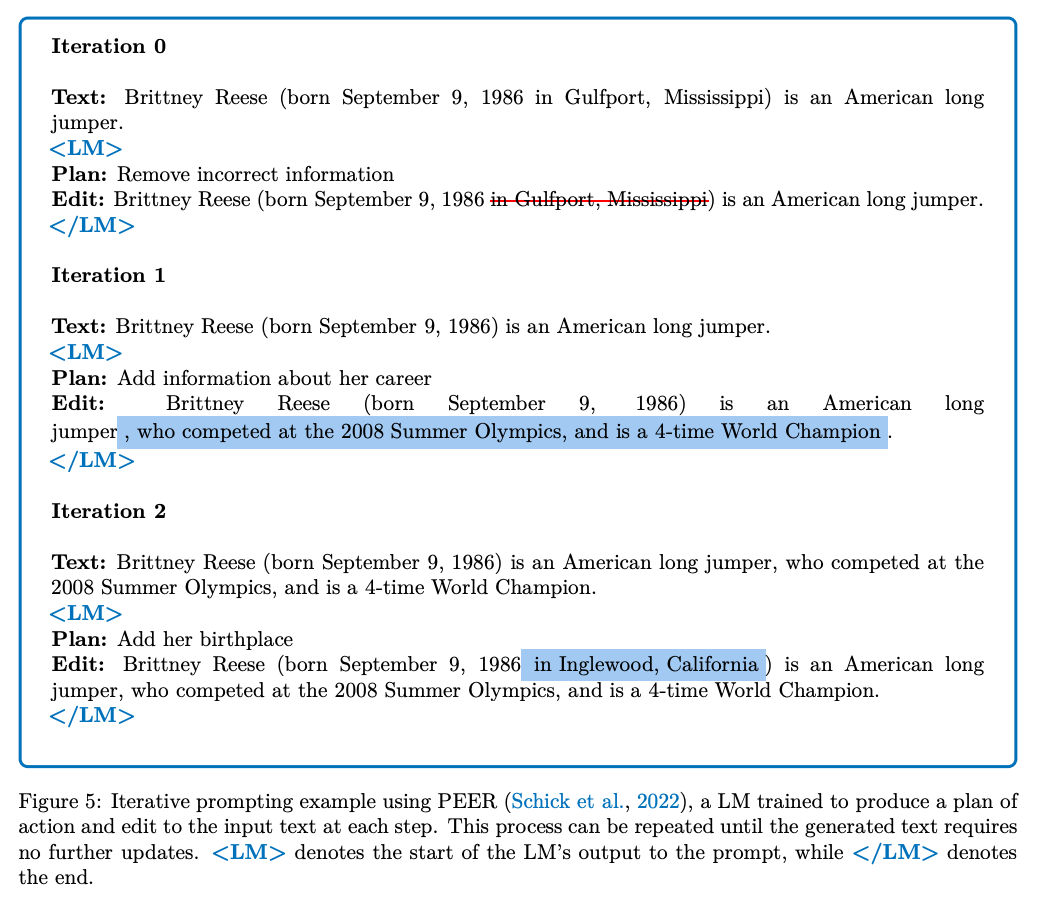

- There are several ways to accomplish this, the first being to leverage another LM by iteratively calling it to extract the information needed.

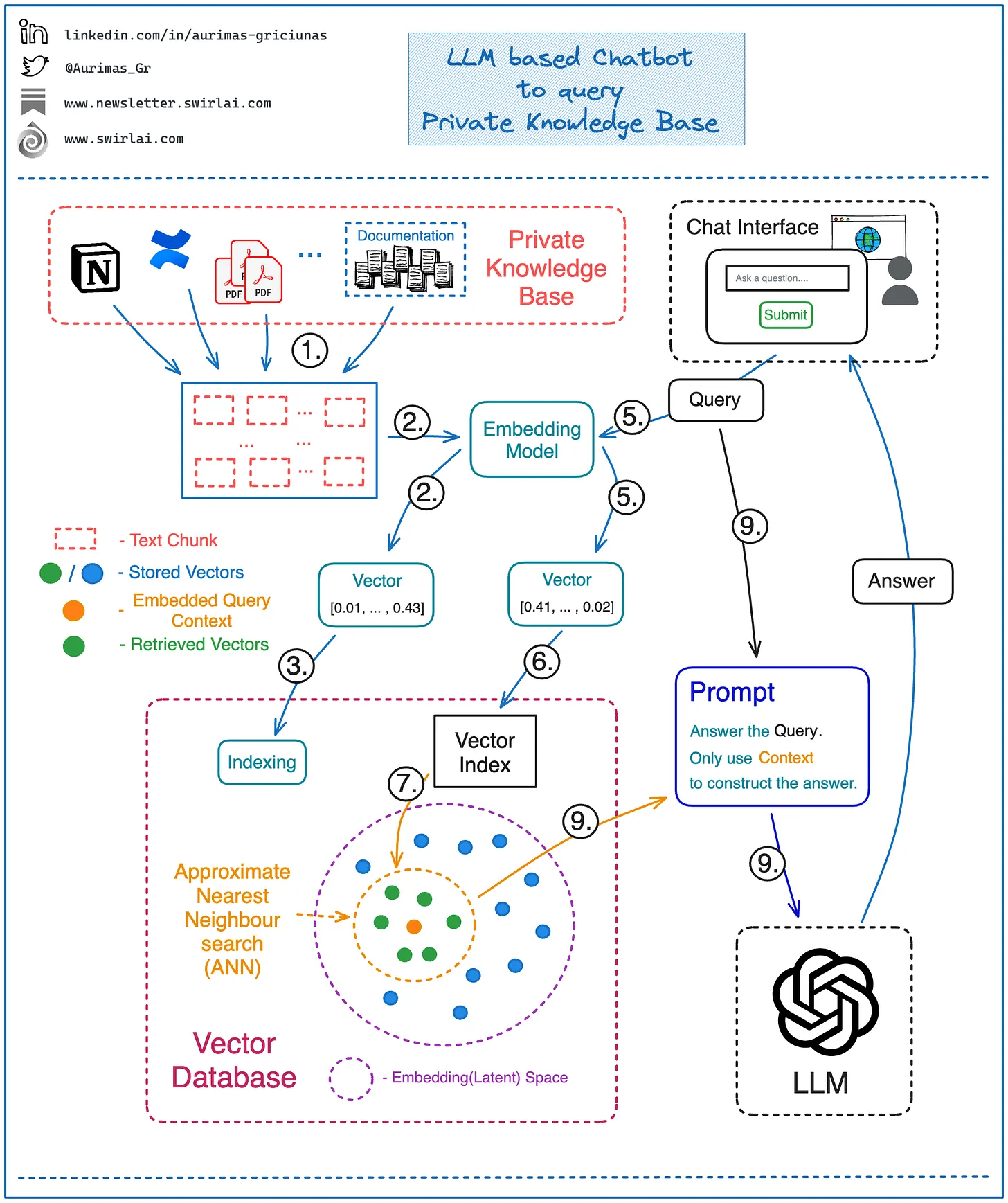

- The image below (source) gives a glimpse into how iteratively calling an LM works:

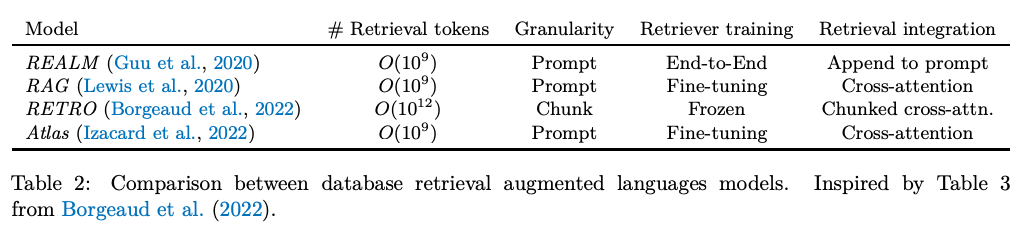

- Another way for an LLM to gain external knowledge is through information retrieval from memory units such as an external database, say of recent facts. There are two types of information retrievers: dense and sparse.

- As the names suggest, sparse retrievers use sparse bag-of-words representations of documents and queries, while dense (neural) retrievers use dense query and document vectors obtained from a neural network.

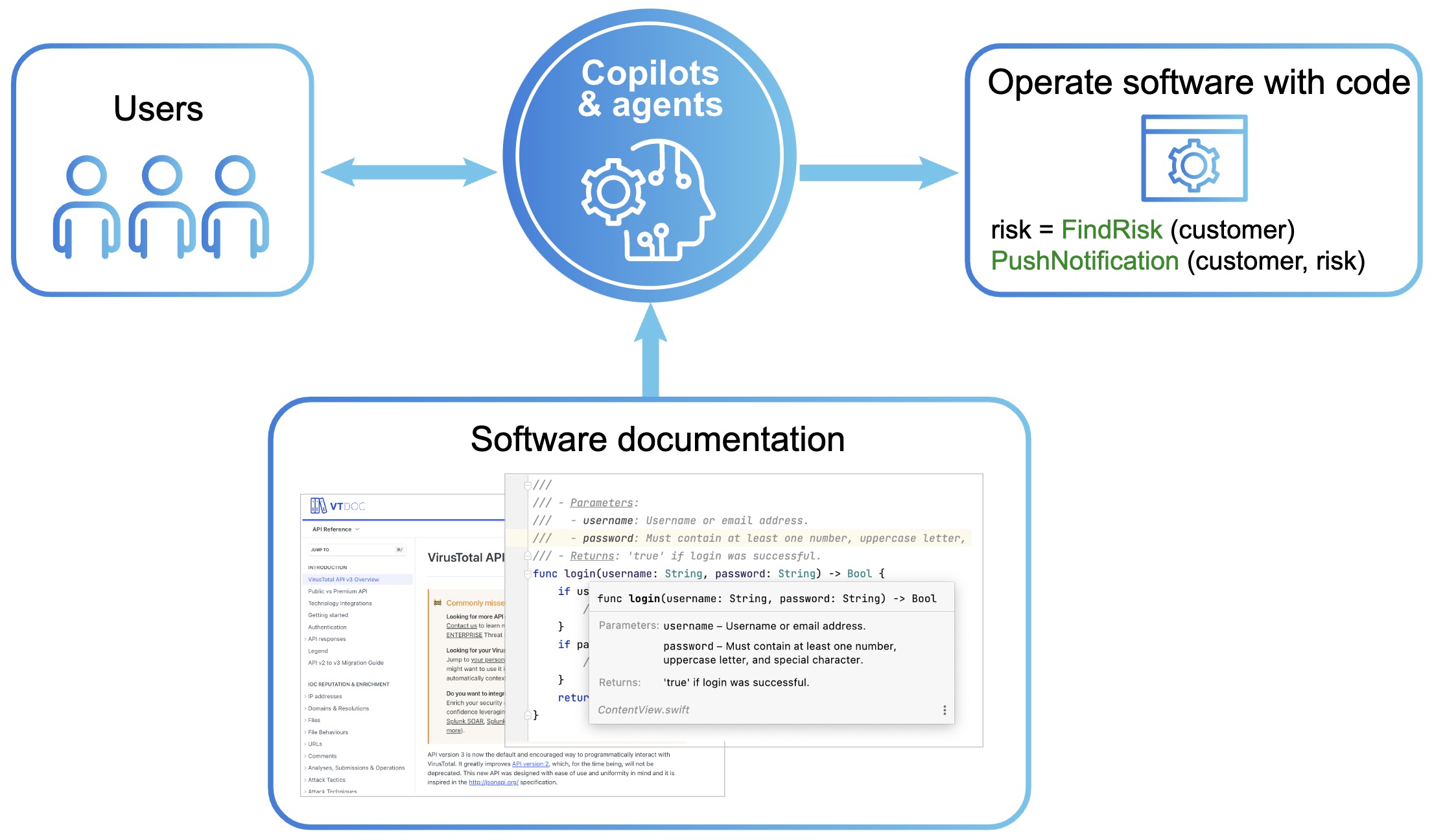

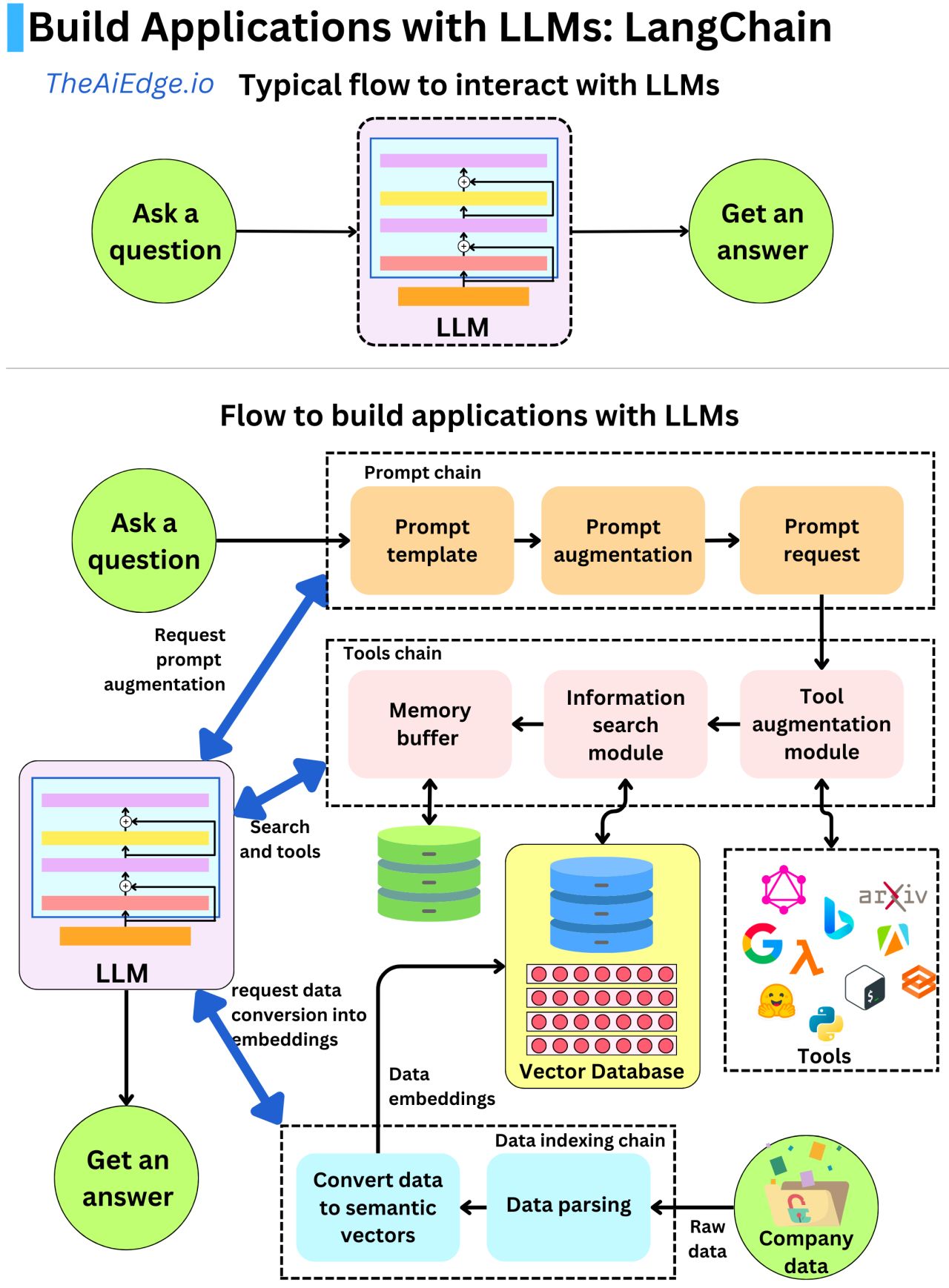

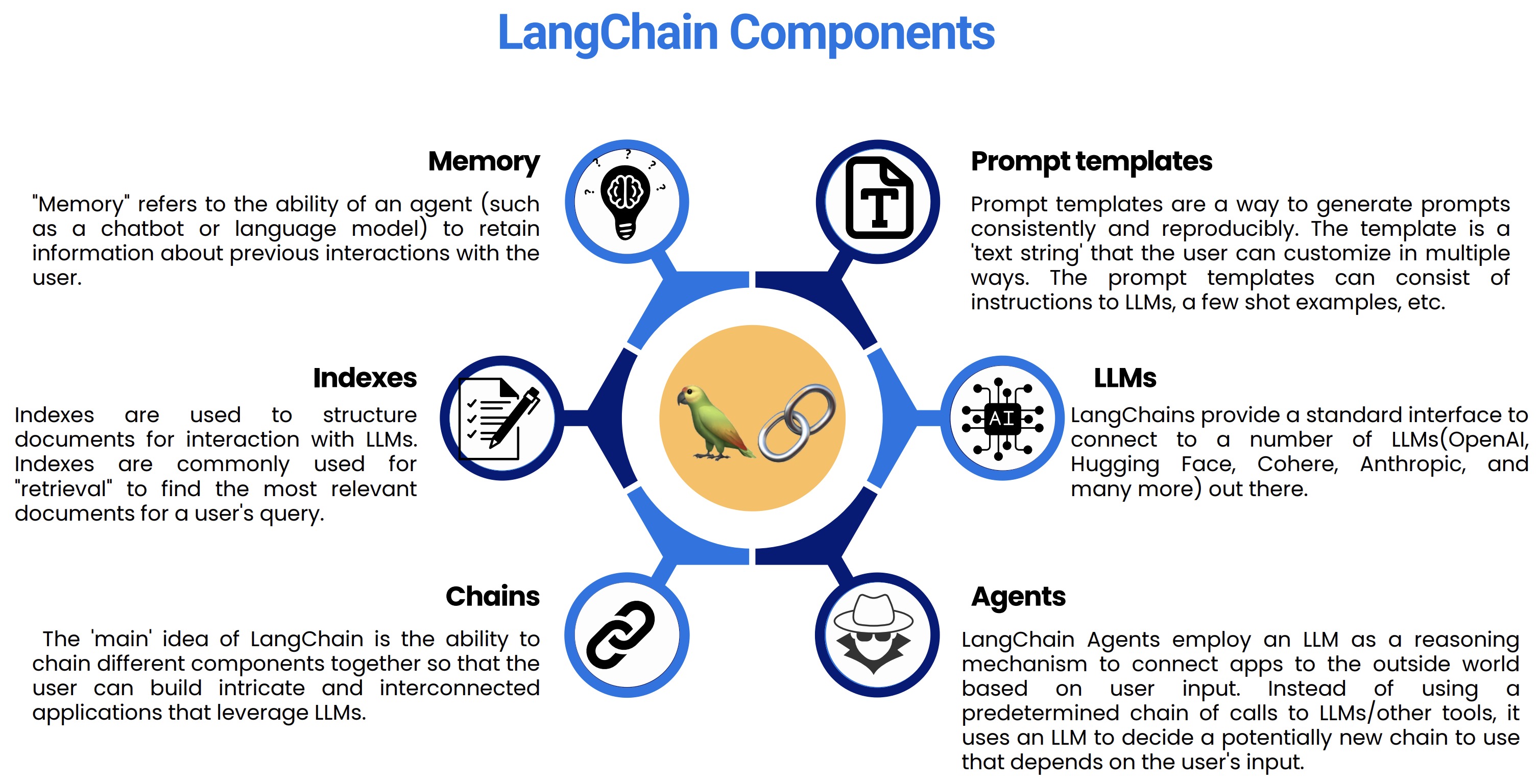

- Yet another method is to use agents that utilize APIs/tools to carry out a specialized task. The model chooses the most appropriate tool for a given input. With the help of tools like Google Search, Wikipedia and OpenAPI, LLMs can not only browse the web while responding, but also perform tasks like flight booking and weather reporting. LangChain offers a variety of different tools.

- “Even though the idea of retrieving documents to perform question answering is not new, retrieval-augmented LMs have recently demonstrated strong performance in other knowledge-intensive tasks besides Q&A. These proposals close the performance gap compared to larger LMs that use significantly more parameters.” (source)

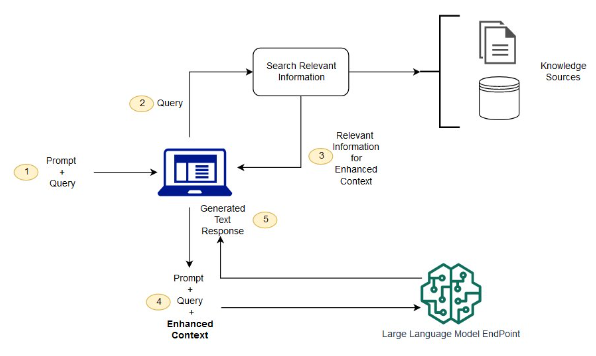

- “With RAG, the external data used to augment your prompts can come from multiple data sources, such as a document repositories, databases, or APIs. The first step is to convert your documents and any user queries into a compatible format to perform relevancy search.

- To make the formats compatible, a document collection, or knowledge library, and user-submitted queries are converted to numerical representations using embedding language models. Embedding is the process by which text is given numerical representation in a vector space.

- RAG model architectures compare the embeddings of user queries within the vector of the knowledge library. The original user prompt is then appended with relevant context from similar documents within the knowledge library. This augmented prompt is then sent to the foundation model. You can update knowledge libraries and their relevant embeddings asynchronously.” source

Process

- The first step is to store the knowledge of your internal documents in a format that is suitable for querying. We do so by embedding all of your internally held knowledge using an embedding model:

- Split text corpus of the entire knowledge base into chunks – a chunk represents a single piece of context available to be queried. Keep in mind that the data of interest can be coming from multiple sources of different types, e.g. documentation in Confluence supplemented by PDF reports.

- Use the Embedding Model to transform each of the chunks into a vector embedding.

- Store all vector embeddings in a Vector Database.

- Save text that represents each of the embeddings separately together with the pointer to the embedding (we will need this later).

- The following flowchart (source) illustrates the architecture of the system:

- Next we can start constructing the answer to a question/query of interest:

- Embed a question/query you want to ask using the same Embedding Model that was used to embed the knowledge base itself.

- Use the resulting Vector Embedding to run a query against the index in Vector Database. Choose how many vectors you want to retrieve from the Vector Database - it will equal the amount of context you will be retrieving and eventually using for answering the query question.

- Vector DB performs an Approximate Nearest Neighbour (ANN) search for the provided vector embedding against the index and returns previously chosen amount of context vectors. The procedure returns vectors that are most similar in a given Embedding/Latent space.

- Map the returned Vector Embeddings to the text chunks that represent them.

- Pass the question together with the retrieved context text chunks to the LLM via the prompt. Instruct the LLM to only use the provided context to answer the given question. This does not mean that no Prompt Engineering will be needed: you will want to ensure that the answers returned by the LLM fall within expected boundaries, e.g., if there is no data in the retrieved context that could be used, make sure that no made-up answer is provided.

- To make a real demo (e.g., an interactive chatbot like ChatGPT), front the entire application with a Web UI that exposes a text input box to act as a chat interface. After running the provided question through the steps above, return and display the generated answer. This is how most of the chatbots that are based on one or more internal knowledge base sources are actually built nowadays.
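- The indexing and querying steps above can be sketched end to end in plain Python. Everything here is a stand-in chosen so the example runs without external services: `embed` is a bag-of-words counter rather than a neural embedding model, `ToyVectorStore` does an exact brute-force search rather than ANN, and the documents, class, and function names are hypothetical. The final call to an LLM is left out; the sketch stops at the augmented prompt.

```python
import math
import re
from collections import Counter

def embed(text):
    """Stand-in for an embedding model: a sparse bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * v[term] for term, count in u.items() if term in v)
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def chunk(text, size=12):
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

class ToyVectorStore:
    """Keeps each vector next to the text it represents (exact search, not ANN)."""
    def __init__(self):
        self.vectors, self.texts = [], []

    def add(self, text):
        self.vectors.append(embed(text))
        self.texts.append(text)

    def search(self, query, top_k=2):
        q = embed(query)  # same "model" as used for indexing
        ranked = sorted(range(len(self.texts)),
                        key=lambda i: cosine(q, self.vectors[i]), reverse=True)
        return [self.texts[i] for i in ranked[:top_k]]

def build_prompt(question, contexts):
    """Augment the question with retrieved context and a grounding instruction."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return ("Answer using only the context below. "
            "If the context is insufficient, say you do not know.\n\n"
            f"Context:\n{context_block}\n\nQuestion: {question}\nAnswer:")

documents = [  # hypothetical knowledge base
    "The refund policy allows returns within 30 days of purchase.",
    "Support is available by email on weekdays from 9am to 5pm.",
    "Shipping within Europe usually takes five business days.",
]
store = ToyVectorStore()
for doc in documents:
    for piece in chunk(doc):
        store.add(piece)

question = "What is the refund policy for a purchase?"
contexts = store.search(question, top_k=1)
prompt = build_prompt(question, contexts)
print(prompt)
```

In a real system, `embed` would call an embedding model, the store would be a vector database with an ANN index, and `prompt` would be sent to the LLM; the control flow, however, stays the same.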

Summary

- RAG augments the knowledge base of an LM with relevant documents. Vector databases such as Pinecone, Chroma, Weaviate, etc. offer great solutions to augment LLMs. Open-source solutions such as Milvus and LlamaIndex are great options as well.

- Here is RAG step-by-step:

- Chunk, embed, & index documents in a vector database (VDB).

- Match the query embedding against the indexed embeddings using (approximate) nearest neighbor techniques.

- Retrieve the relevant context from the VDB.

- Augment the LLM’s prompt with the retrieved content.

- As a stack recommendation, you can build prototypes with LangChain or for more of an industrial flair, go with Google Vertex.

- Another approach recent LMs have leveraged is the search engine itself, as WebGPT does. “WebGPT learns to interact with a web-browser, which allows it to further refine the initial query or perform additional actions based on its interactions with the tool. More specifically, WebGPT can search the internet, navigate webpages, follow links, and cite sources.” (source)

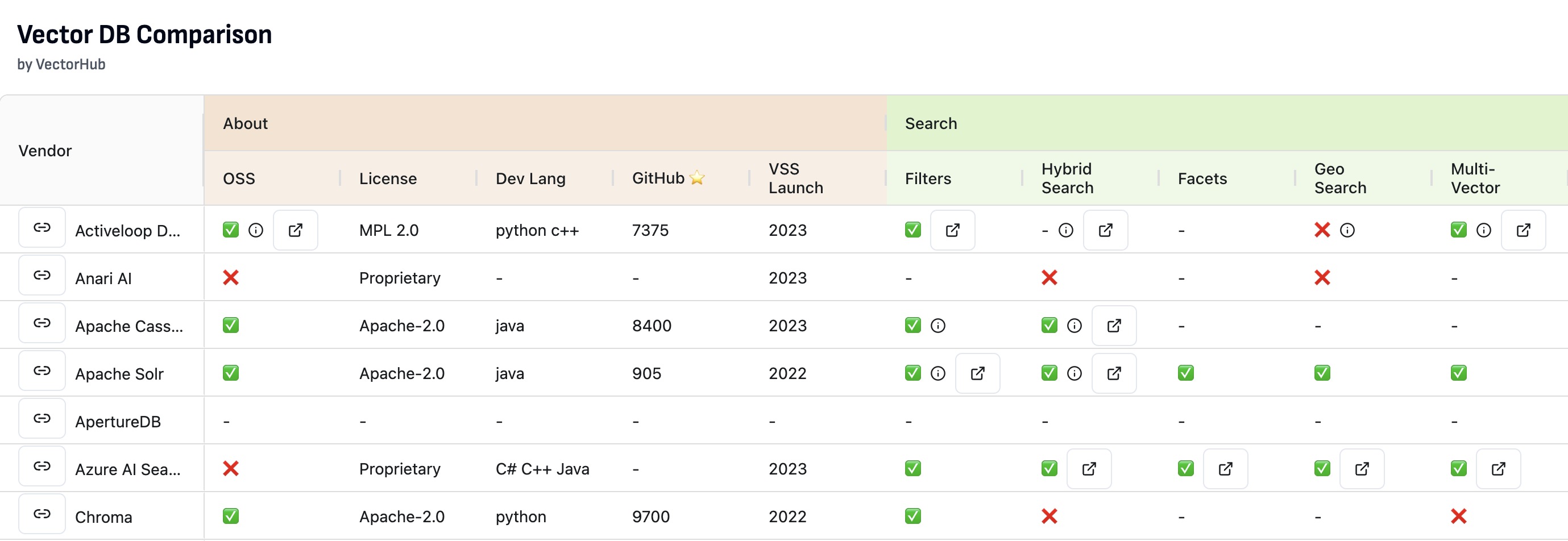

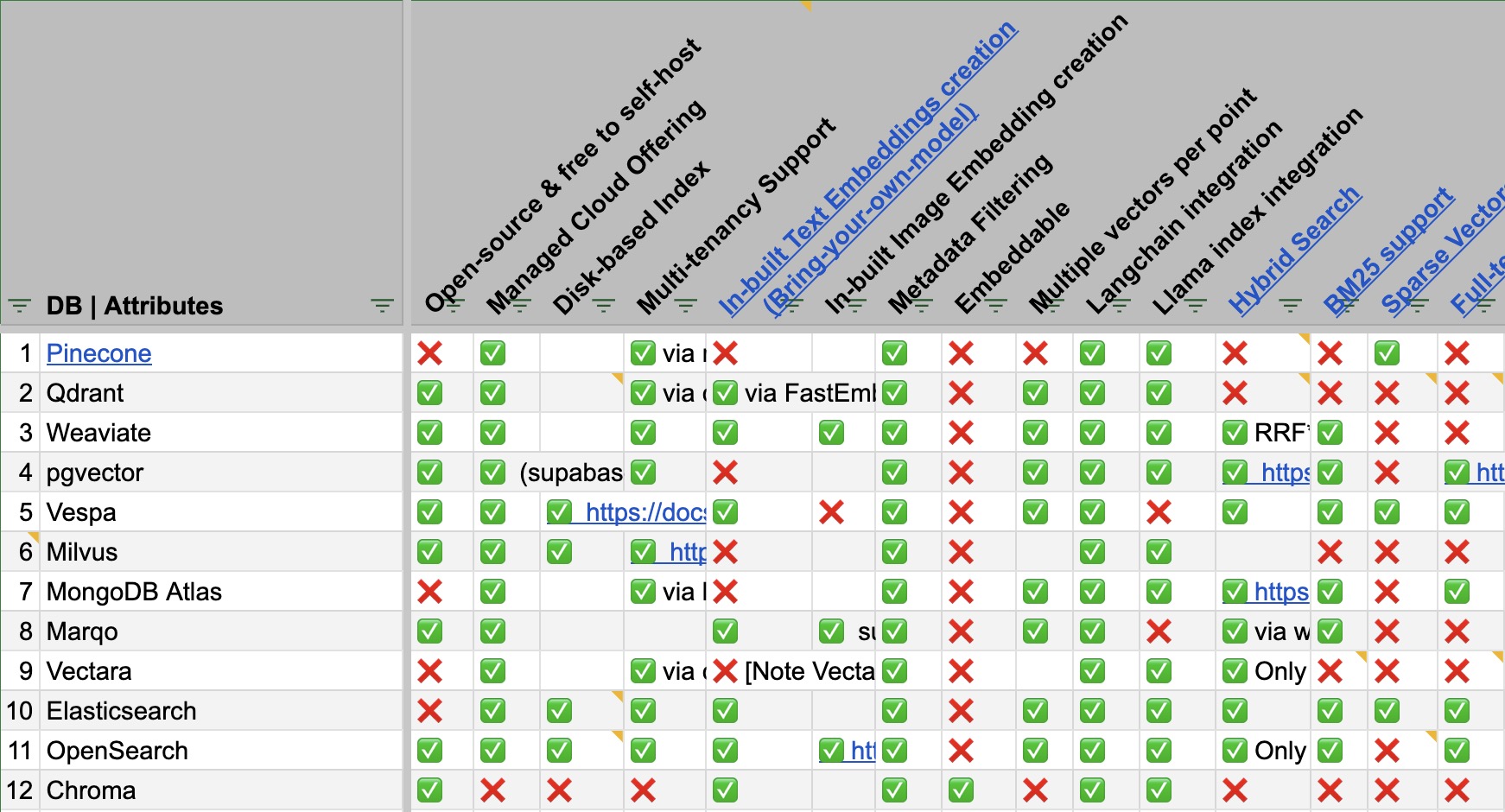

Vector Database Feature Matrix

- To compare the plethora of Vector DB offerings, a feature matrix that highlights the differences between Vector DBs and which to use in which scenario is essential.

- Vector DB Comparison by VectorHub offers a great comparison spanning 37 vendors and 29 features (as of this writing).

- As a secondary resource, the following table (source) shows a comparison of some of the prevalent Vector DB offers along various feature dimensions:

- Access the full spreadsheet here.

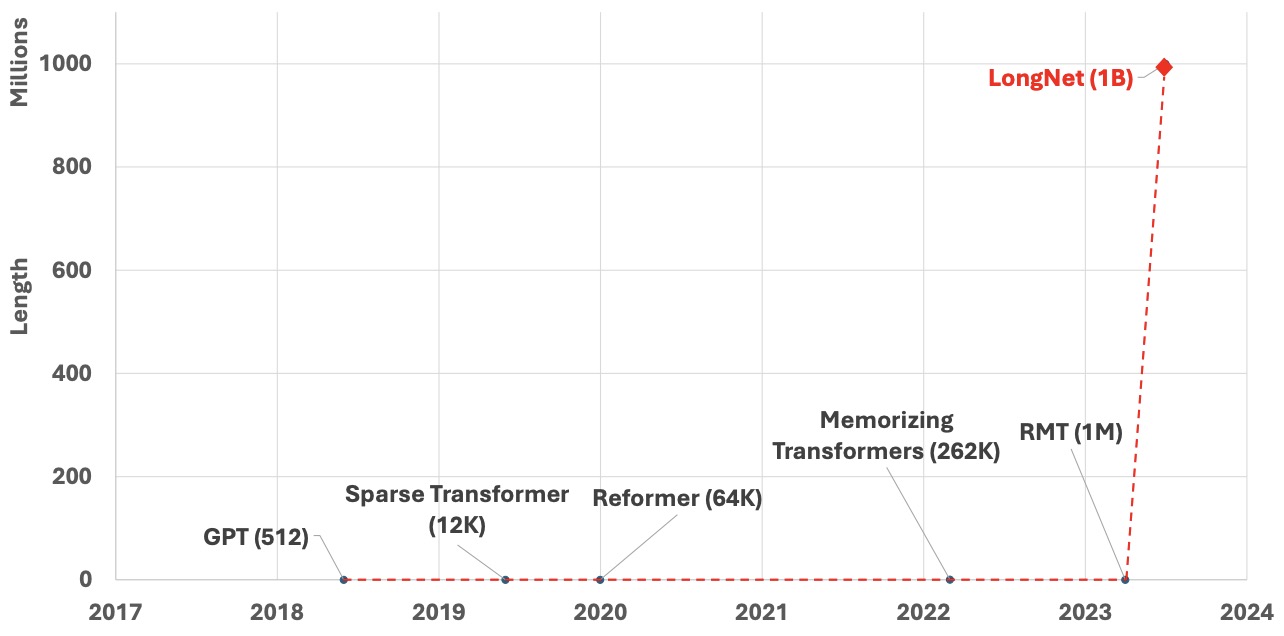

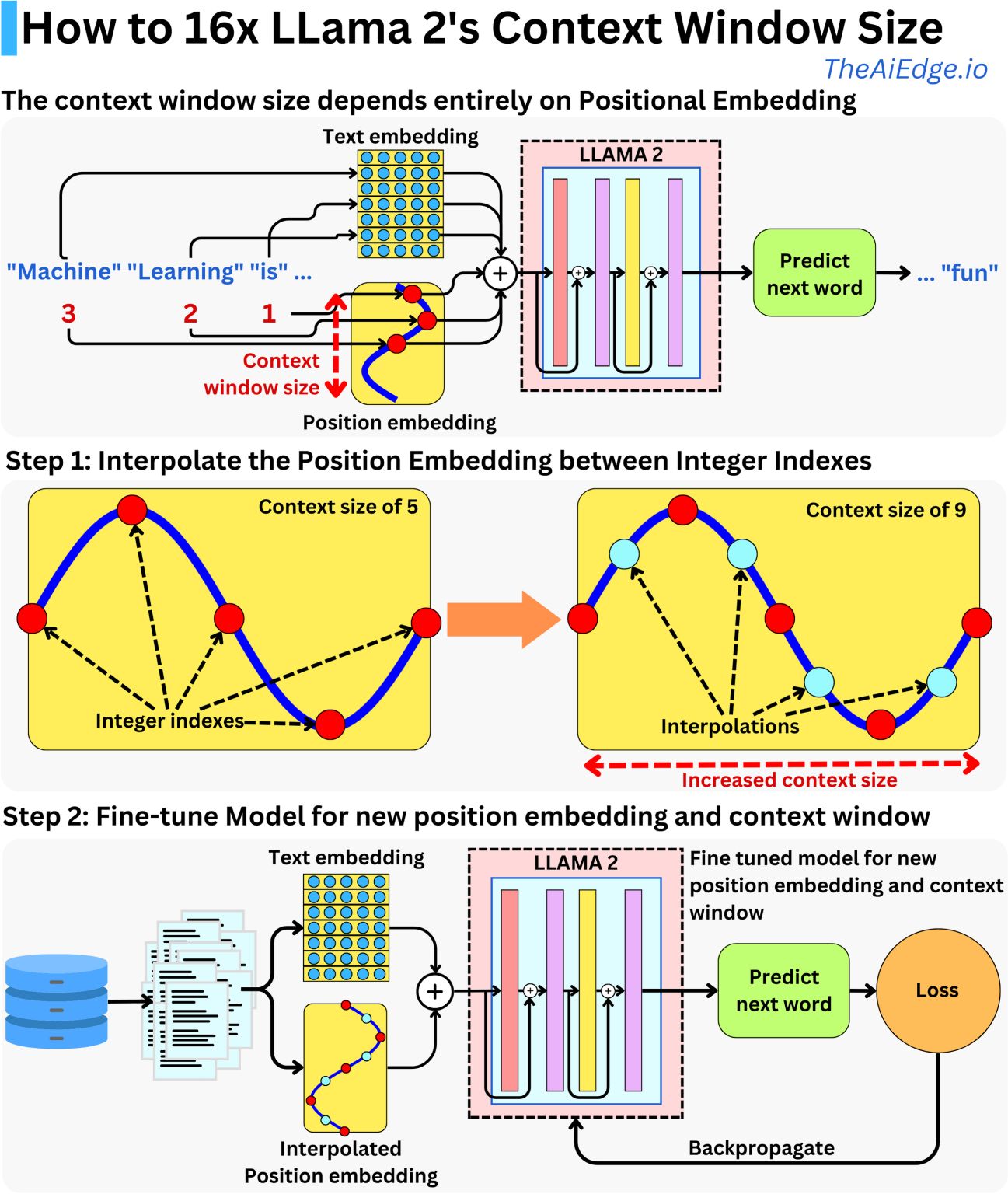

Context Length Extension

- Language models like LLaMA have traditionally been bounded by a fixed context window size, which essentially means they can only consider a fixed number of previous tokens when predicting the next one. Positional embeddings are a core component in these models to provide a sense of sequence order. However, scaling these embeddings to accommodate longer sequences has its own set of challenges.

- For example, GPT-4 has a context length of 32K input tokens, while Anthropic’s Claude has a context length of 100K input tokens. To give an idea of scale, The Great Gatsby is 72K tokens (210 pages).

- Let’s delve deeper into the context length issue found in Transformers:

- Background:

- Weight Matrix Shapes and Input Tokens:

- In the Transformer model, the sizes (or shapes) of the learnable weight matrices do not depend on the number of input tokens (\(n\)). This means the architecture itself doesn’t change if you provide more tokens.

- The implication of this is that a Transformer trained on shorter sequences (like 2K tokens) can technically accept much longer sequences (like 100K tokens) without any structural changes.

- Training on Different Context Lengths:

- While a Transformer model can accept longer sequences, it may not produce meaningful results if it hasn’t been trained on such long sequences. In the given example, if a model is trained only on 2K tokens, feeding it 100K tokens might result in outputs that are not accurate or coherent.

- Computational Complexity and Costs:

- The original Transformer has a quadratic computational complexity with respect to both the number of tokens (\(n\)) and the embedding size (\(d\)). This means that as sequences get longer, the time and computational resources needed for training increase significantly.

- As a concrete example, training LLaMA on sequences of 2K tokens is already quite expensive (an estimated ~$3M). Extending training to sequences of 100K tokens would balloon the cost to an estimated ~$150M.

- Potential Solution:

- Fine-tuning on Longer Contexts:

- A potential solution to the problem might seem to be training a model on shorter sequences (like 2K tokens) and then fine-tuning it on longer sequences (like 65K tokens). This approach is often used in other contexts to adapt a model to new data or tasks.

- However, this won’t work well with the original Transformer due to its Positional Sinusoidal Encoding. This encoding is designed to add positional information to the input tokens, but if the model is only familiar with shorter sequences, it might struggle to interpret the positional encodings for much longer sequences accurately.

- We will look at other more viable solutions, such as Flash Attention, below.

- For more, refer to our Context Length Extension primer.

Challenges with Context Scaling

- Fixed Maximum Length: Positional embeddings are configured for a predetermined sequence length. If a model is designed for 512 tokens, it possesses 512 distinct positional embedding vectors. Beyond this, there’s no positional information for extra tokens, making longer sequences problematic.

- Memory Overhead: Extending these embeddings for incredibly lengthy sequences demands more memory, especially if the embeddings are parameters that need to be learned during training.

- Computational Burden: The self-attention mechanism in Transformers grows in computational intensity with the length of the sequence. It’s quadratic in nature, meaning that even a modest increase in sequence length can substantially raise the computation time.
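- As a rough illustration of the quadratic growth, the sketch below counts the multiply-adds in the two attention matrix products for a single head. The function name and the head dimension of 128 are assumptions made for illustration; constant factors, the softmax, and the projection layers are ignored.

```python
def attention_matmul_ops(n_tokens: int, head_dim: int = 128) -> int:
    """Approximate multiply-adds for the two attention matmuls in one head."""
    scores = n_tokens * n_tokens * head_dim  # Q (n x d) times K^T (d x n)
    output = n_tokens * n_tokens * head_dim  # P (n x n) times V (n x d)
    return scores + output


# Doubling the sequence length quadruples the work.
for n in (2_048, 4_096, 8_192):
    print(n, attention_matmul_ops(n))
```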

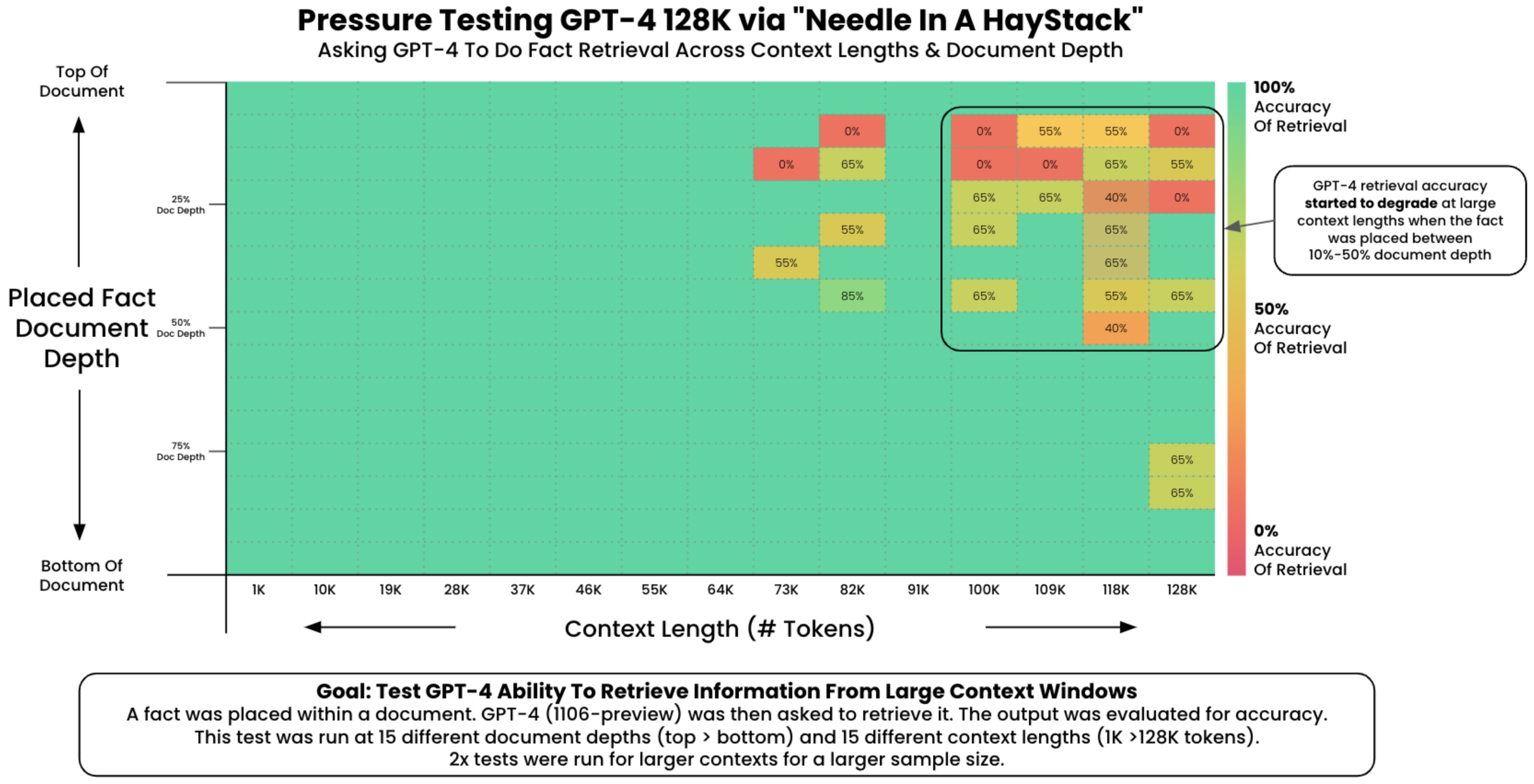

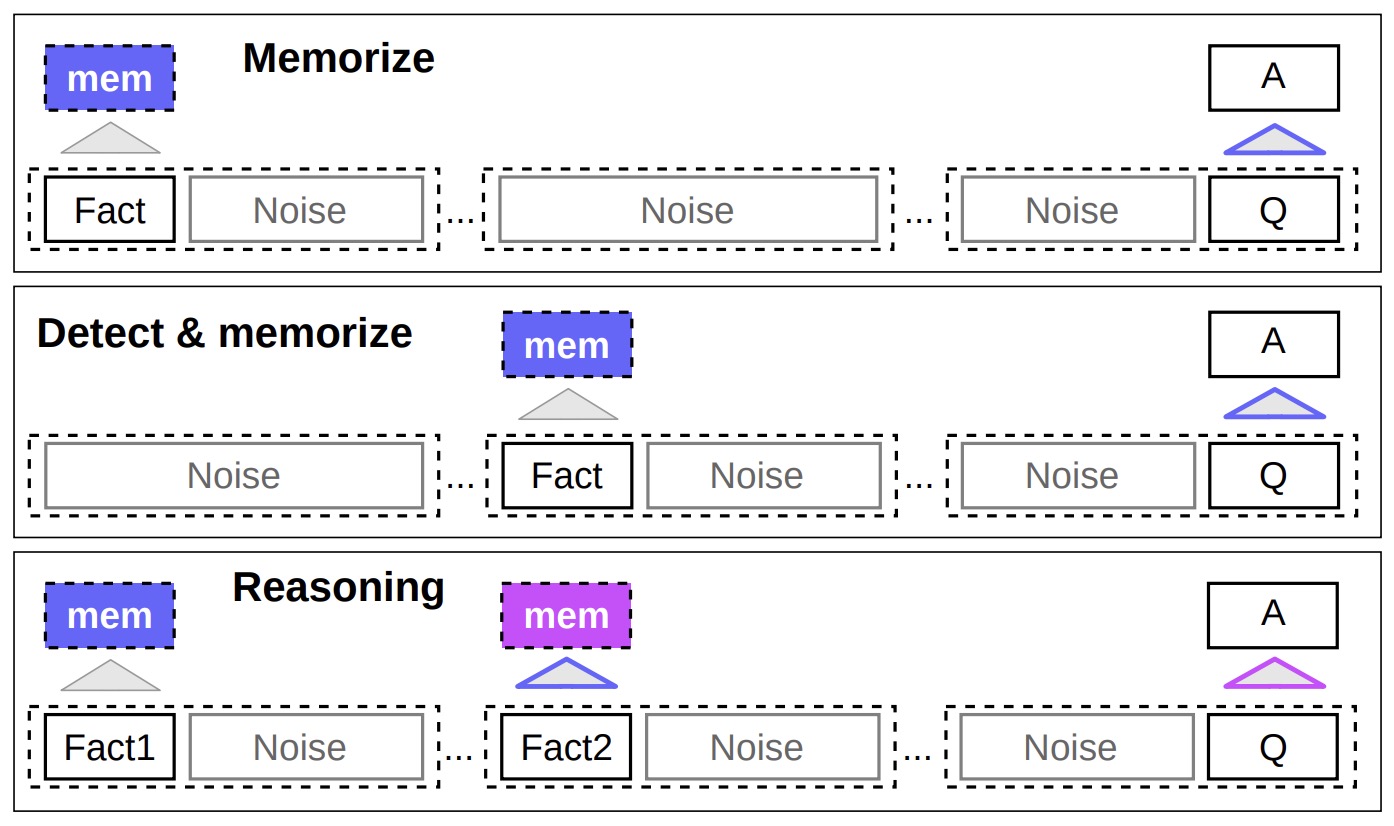

The “Needle in a Haystack” Test

- To understand the in-context retrieval ability of long-context LLMs over various parts of their prompt, a simple ‘needle in a haystack’ analysis could be conducted. This method involves embedding specific, targeted information (the ‘needle’) within a larger, more complex body of text (the ‘haystack’). The purpose is to test the LLM’s ability to identify and utilize this specific piece of information amidst a deluge of other data.

- In practical terms, the analysis could involve inserting a unique fact or data point into a lengthy, seemingly unrelated text. The LLM would then be given tasks or queries that require it to recall or apply this embedded information. This setup mimics real-world situations where essential details are often buried within extensive content, and the ability to retrieve such details is crucial.

- The experiment could be structured to assess various aspects of the LLM’s performance. For instance, the placement of the ‘needle’ could be varied—early, middle, or late in the text—to see if the model’s retrieval ability changes based on information location. Additionally, the complexity of the surrounding ‘haystack’ can be modified to test the LLM’s performance under varying degrees of contextual difficulty. By analyzing how well the LLM performs in these scenarios, insights can be gained into its in-context retrieval capabilities and potential areas for improvement.
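- The setup described above can be sketched in a few lines. This is a minimal illustration, not the API of any particular library: `build_haystack_prompt`, `is_retrieved`, the filler sentences, and the needle text are all hypothetical, and the call to the LLM itself is left out.

```python
def build_haystack_prompt(filler: list[str], needle: str, depth: float, question: str) -> str:
    """Insert `needle` at a relative depth (0.0 = start, 1.0 = end) of the filler text."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in [0, 1]")
    idx = round(depth * len(filler))
    sentences = filler[:idx] + [needle] + filler[idx:]
    return " ".join(sentences) + "\n\nQuestion: " + question


def is_retrieved(answer: str, expected: str) -> bool:
    """Crude scoring: did the model's answer contain the expected fact?"""
    return expected.lower() in answer.lower()


filler = [f"Filler sentence number {i}." for i in range(10)]
needle = "The secret code is 7421."
for depth in (0.0, 0.5, 1.0):
    prompt = build_haystack_prompt(filler, needle, depth, "What is the secret code?")
    # answer = call_your_llm(prompt)  # hypothetical; plug in any model client here
    print(depth, prompt.index(needle))
```

- Sweeping both `depth` and the number of filler sentences yields the depth-versus-context-length grid that such analyses typically plot.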

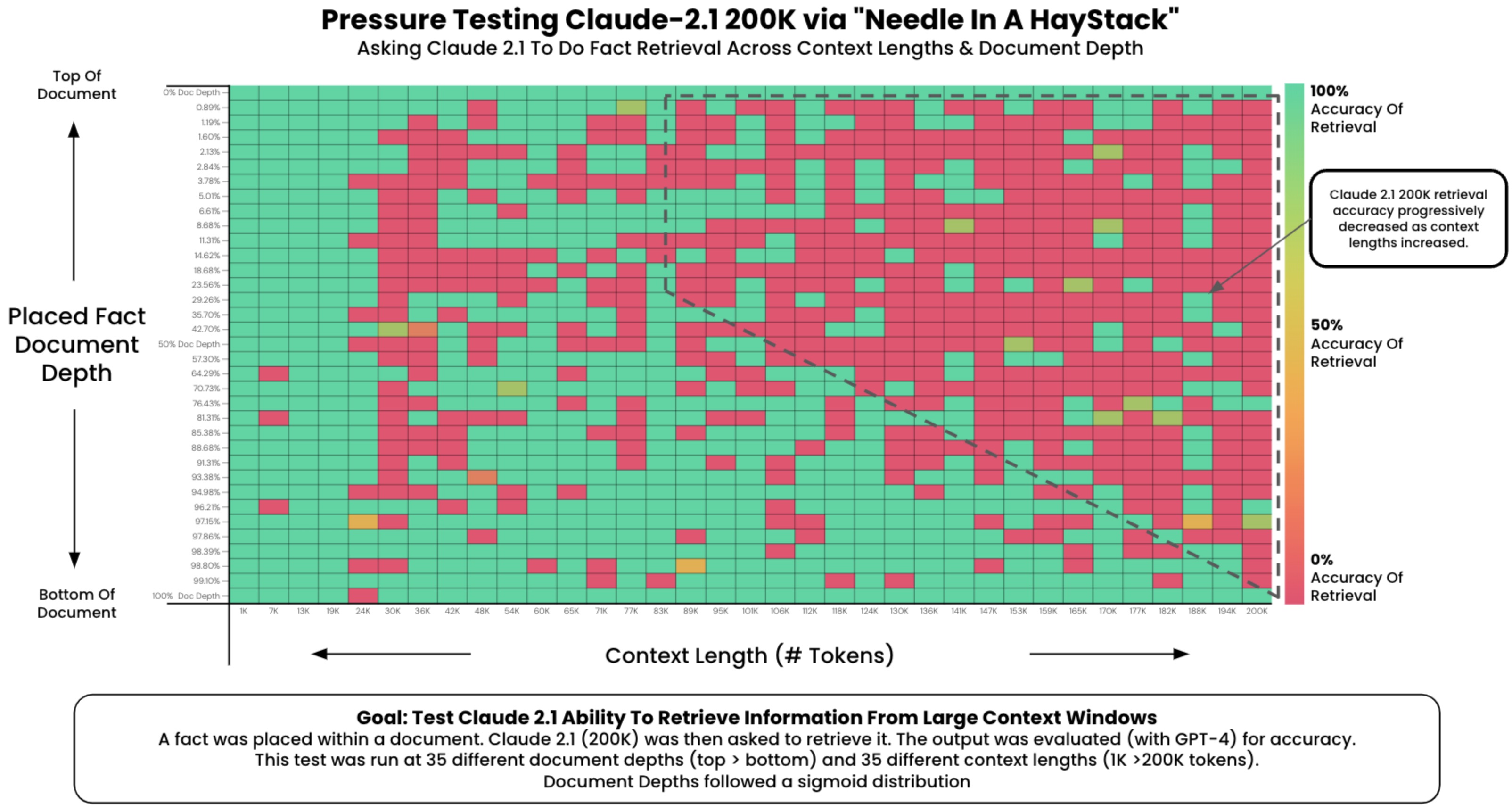

- This can be accomplished using the Needle In A Haystack library. The following plot shows OpenAI’s GPT-4-128K’s (top) and (bottom) performance with varying context length.

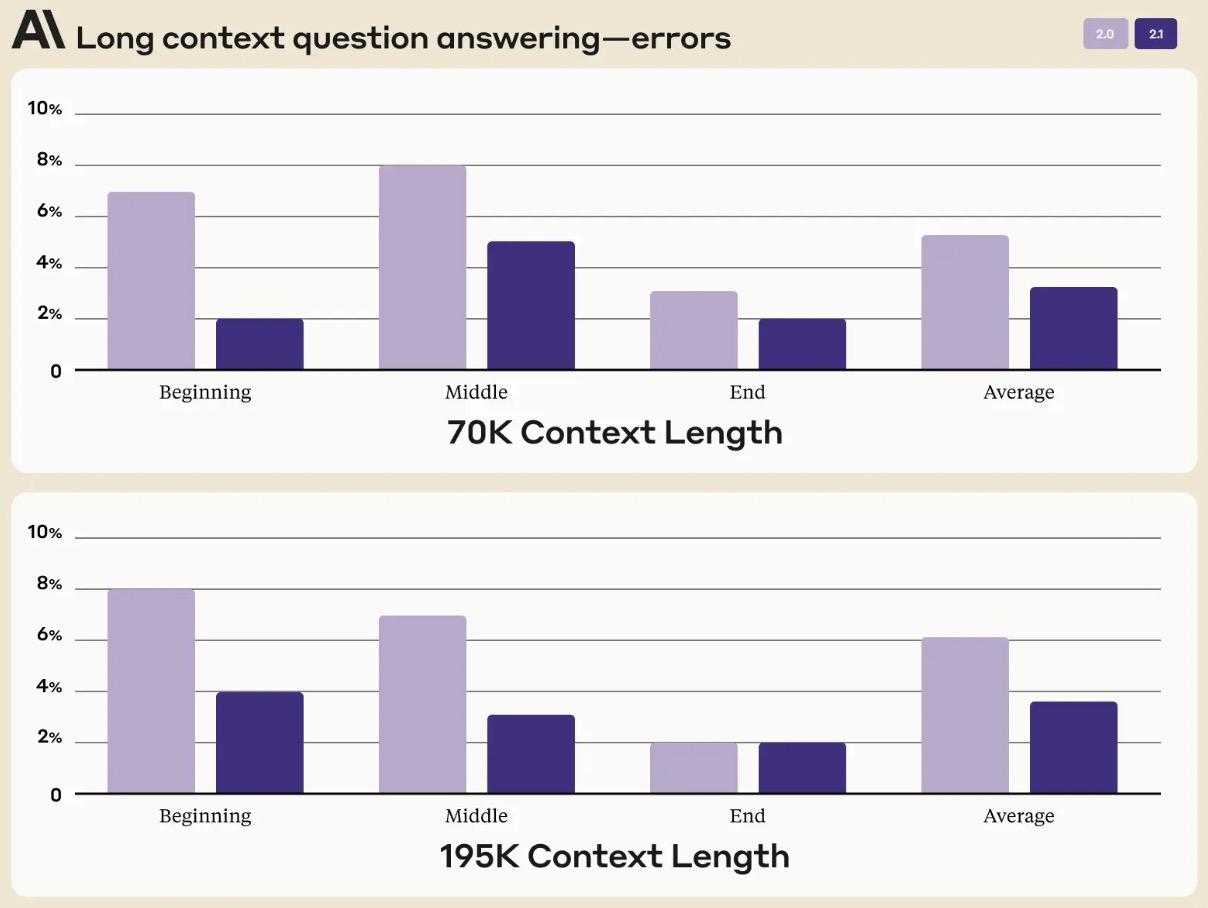

- The following figure (source) shows Claude 2.1’s long-context question answering errors by position within the prompt context. On average, Claude 2.1 demonstrated a 30% reduction in incorrect answers compared to Claude 2.

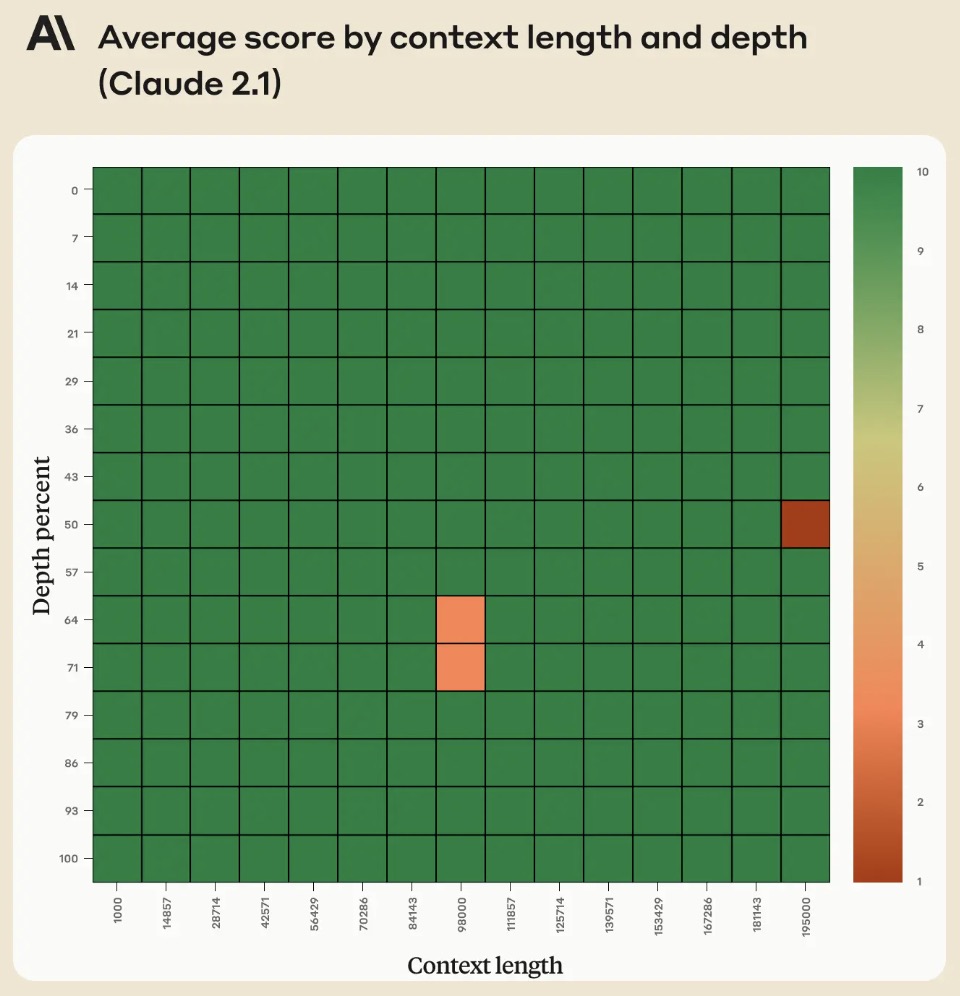

- However, in their Long context prompting for Claude 2.1 blog, Anthropic noted that adding “Here is the most relevant sentence in the context:” to the start of Claude’s response raised the score from 27% to 98% on the original evaluation! The figure below from the blog shows Claude 2.1’s performance when retrieving an individual sentence across its full 200K token context window. This experiment uses the aforementioned prompt technique to guide Claude in recalling the most relevant sentence.

Status quo

- Considering the fact that self-attention in the Transformer has a quadratic time and space complexity with respect to the context length, here’s how context length sizes of 100k tokens and longer are achieved in practice in the state-of-the-art open LLMs:

- The LLMs are first pretrained using the exact attention (without using approximations such as Linformer, Performer, BigBird, etc.), usually with up to 4096 token contexts using FlashAttention 2 (which significantly speeds up the training process compared to a vanilla attention implementation) and Rotary Position Embedding (RoPE, which allows modeling very long sequences).

- Post this step, the context size is extended via additional pre-training using techniques with various degrees of approximation as compared to the exact attention. This part is often missing in open model info cards while OpenAI and Anthropic keep this information secret. The most effective such techniques used in open models are currently YaRN, LongLoRA, and Llama 2 Long.

- Inference with such long contexts requires multiple GPUs of A100 or H100 grade even for relatively small models such as 7B or 13B.

- Some techniques allow extending the context size of a pretrained LLM without additional pretraining. Two such techniques proposed recently are SelfExtend and LM-Infinite.

RAG vs. Ultra Long Context (1M+ Tokens)

- The recent unveiling of the Gemini Pro 1.5 model, featuring a 1 million token context window, has reignited a pivotal discussion: is there still a place for RAG?

- The consensus appears to affirm the continued relevance of RAG, especially considering the financial implications associated with the Gemini Pro 1.5’s extensive token usage. Specifically, each query within this model demands payment for every token in the 1 million token context, thereby accruing significant costs—approximately $7 per call. This pricing structure starkly contrasts with RAG’s cost-effective approach, where only a select number of pertinent tokens are charged, potentially reducing costs by an estimated 99%, albeit possibly at the expense of performance.

- This financial consideration sharply defines viable applications for the Gemini Pro 1.5 model, particularly discouraging its use in scenarios typically suited for RAG due to the prohibitive costs involved. Nonetheless, this does not preclude the utility of long context window models in other domains. When the full breadth of the context window is leveraged, these models can provide substantial value, making even a seemingly high cost per call appear reasonable.

- Optimal uses for such large context window models (source) involve deriving patterns from an entire dataset, and might include:

- Analyzing a compilation of 1,000 customer call transcripts to generate insights on the reasons for calls, sentiment analysis, detection of anomalies, assessment of agent performance, and compliance monitoring.

- Examining extensive marketing performance data to unearth trends or optimization opportunities.

- Processing the recorded dialogue from an all-day workshop to extract pivotal ideas and discussions.

- Given a large codebase, resolve a particular bug.

- Prohibitive use cases would include applications that require multi-turn or follow-up questions, which are more efficiently handled by RAG systems (for example, asking a coding assistant follow-up questions about a piece of code).

- These examples underscore a critical principle: utilizing 1 million tokens to inquire about a fraction of that amount is inefficient and costly—RAG is better suited for such tasks. However, deploying long context models to analyze and derive patterns across the entirety of their token capacity could indeed be transformative, offering a compelling advantage where the scale and scope of the data justify the investment.

- The following infographic (source) illustrates this:

Solutions to Challenges

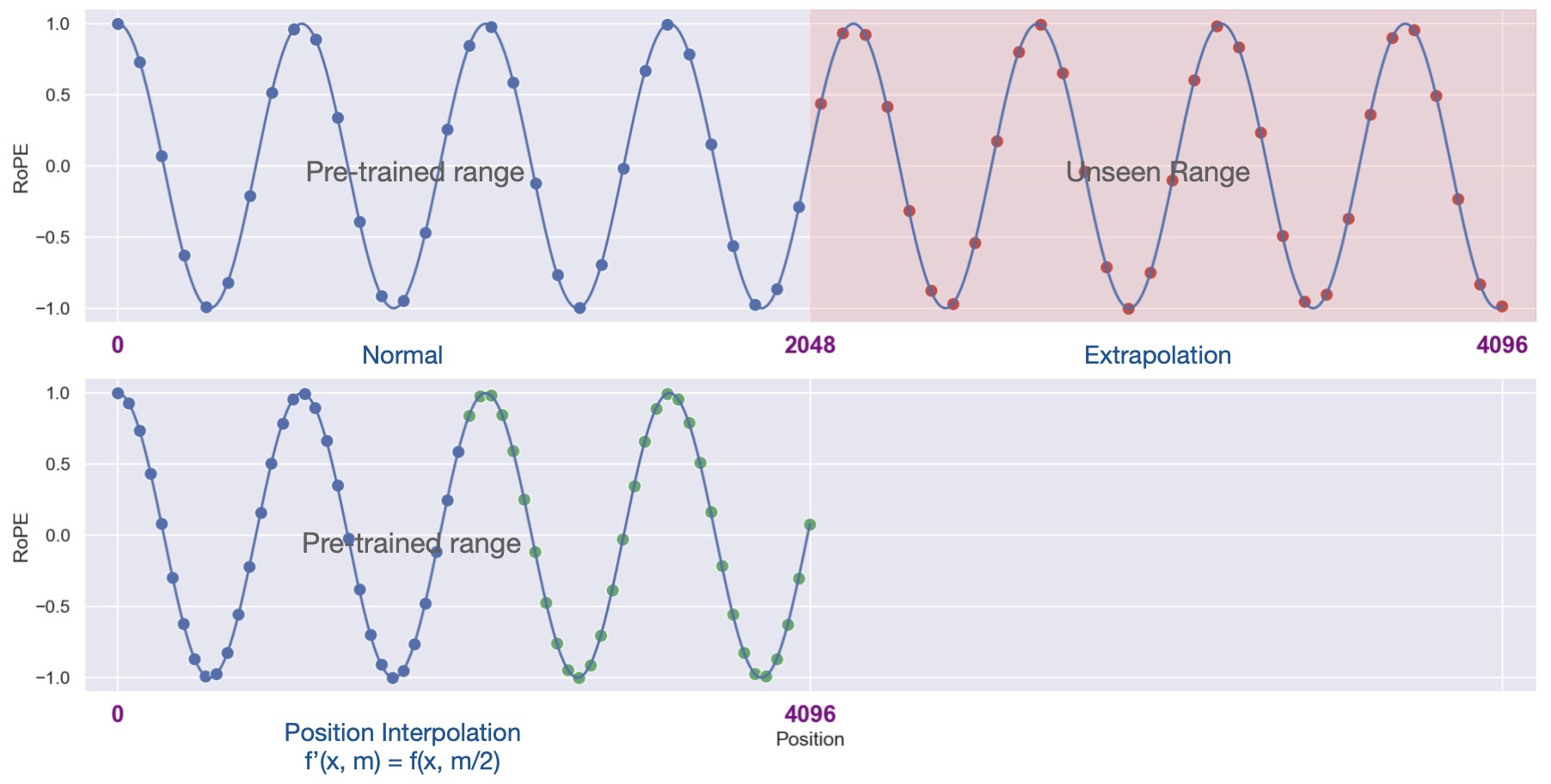

Positional Interpolation (PI)

- Concept: Think of PI as a ‘resizing’ tool. Just as you might resize an image to fit a specific frame, PI adjusts position indices to fit within the existing context size. This is done using mathematical interpolation techniques.

- Functionality: Suppose you trained a model for a 512-token context, but now want it to manage 1024 tokens. PI would transform positions [0,1,2,…,1023] to something like [0,0.5,1,…,511.5] to utilize the existing 512 embeddings.

- Advantages: It’s like giving an old model a new pair of glasses. The model can now ‘see’ or process longer sequences without having to undergo rigorous training again from scratch.

- Fine-tuning: After employing PI, models often need some brushing up. This is done through fine-tuning, where the model learns to adjust to its new sequence processing capability.
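- The position rescaling in the 512-to-1024 example above can be sketched as follows. The function name is an assumption for illustration. Note that fractional positions only make sense for position encodings that accept real-valued positions, such as RoPE, for which PI was proposed.

```python
def interpolate_positions(seq_len: int, trained_len: int) -> list[float]:
    """Linearly squeeze positions 0..seq_len-1 into the trained range [0, trained_len)."""
    scale = min(1.0, trained_len / seq_len)  # never stretch sequences that already fit
    return [i * scale for i in range(seq_len)]


positions = interpolate_positions(seq_len=1024, trained_len=512)
print(positions[:3], positions[-1])  # [0.0, 0.5, 1.0] 511.5
```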

Rotary Positional Encoding (RoPE)

- Concept: Rather than adding distinct positional information, RoPE rotates the existing embeddings based on their positions. By distributing positional data across all dimensions, the essence of sequence position is captured in a more fluid manner.

- Functionality: RoPE employs mathematical operations to rotate the input embeddings. This allows the model to handle sequences that go beyond its original training without requiring explicit positional data for each new position.

- Advantages: The rotation-based mechanism is more dynamic, meaning the model can work with sequences of any length without needing distinct embeddings for every position. This offers significant flexibility, especially when dealing with texts of unpredictable lengths.

- Limitation: The continuous nature of RoPE’s rotation can cause some imprecision, especially when sequences become extremely lengthy.
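- To make the rotation concrete, here is a minimal pure-Python sketch, assuming the common formulation in which consecutive pairs of dimensions are rotated by an angle proportional to the position, with a base of 10000. The function names and toy vectors are illustrative. The check at the end shows the key property: the query-key dot product depends only on the relative offset between positions.

```python
import math


def rope(vec: list[float], position: float, base: float = 10000.0) -> list[float]:
    """Rotate consecutive pairs (x0, x1), (x2, x3), ... by position-dependent angles."""
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # lower dimensions rotate faster
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * c - y * s, x * s + y * c]
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.7, 0.5]
k = [1.0, 0.4, -0.6, 0.9]
near = dot(rope(q, 5), rope(k, 2))      # offset of 3 near the start
far = dot(rope(q, 105), rope(k, 102))   # same offset of 3, much later
print(abs(near - far) < 1e-9)
```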

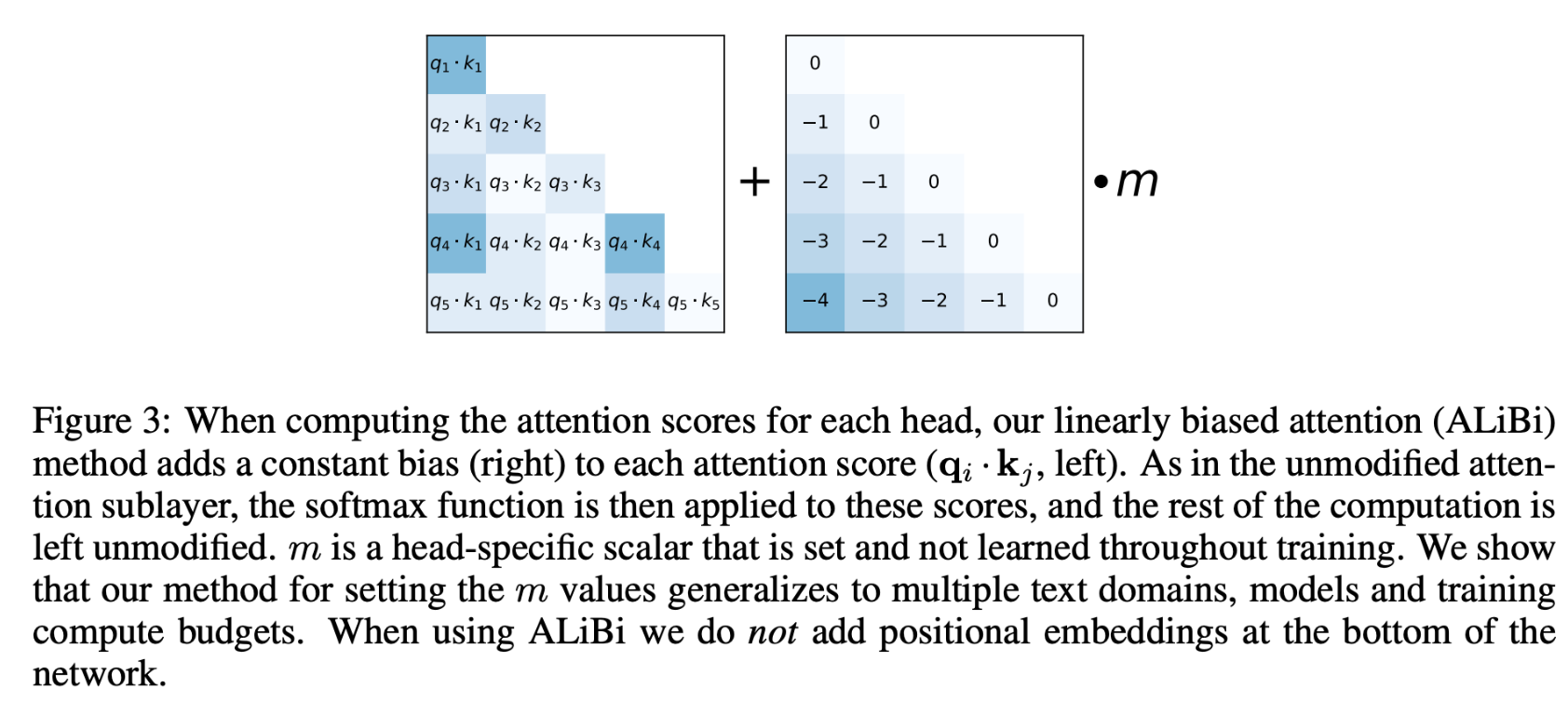

ALiBi (Attention with Linear Biases)

- Author: Ofir Press from FAIR et al.

- While ALiBi does not directly increase context length, it enhances the Transformer’s adaptability to varied sequence lengths by introducing biases in the attention mechanism, optimizing its performance on extended contexts.

- The original Transformer leveraged Positional Sinusoidal Encoding which did not have the ‘extrapolation’ ability, thus, it performed poorly during inference/ fine-tuning when the context length was increased.

- For example, when you train a transformer model on sequences of a particular length (say 2K tokens) and later want to use it on longer sequences (like 65K tokens), this encoding does not effectively “extrapolate” to these longer sequences. This means that the model starts to perform poorly when dealing with sequences longer than what it was trained on.

- ALiBi is an alternative to Positional Sinusoidal Encoding and is a modification to the attention mechanism within the Transformer architecture. Instead of adding positional information at the start (or bottom) of the model, ALiBi integrates this information within the attention mechanism itself.

- In the attention mechanism, attention scores are computed between query and key pairs. ALiBi introduces a bias to these attention scores based on the distance between tokens in the sequence. Specifically, the farther apart two tokens are in the sequence, the more penalty or bias is added to their attention score. This bias ensures that the model is aware of the token positions when calculating attention.

- Benefits of ALiBi:

- Adaptability: Unlike Positional Sinusoidal Encoding, ALiBi is more adaptable to different sequence lengths, making it more suitable for models that need to handle varying sequence lengths during training and inference.

- Training Speed: Incorporating ALiBi can speed up the training process.

- The image below depicts the constant bias added from the original ALiBi paper.

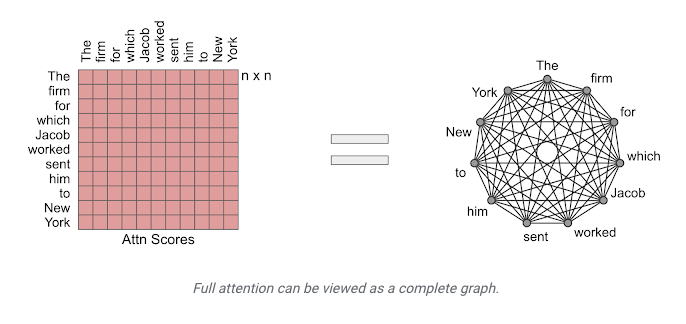
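- The distance-based penalty described above can be sketched as a bias matrix that is added to the attention scores before the softmax. This is a minimal sketch assuming a causal model and a head count that is a power of two, for which the ALiBi paper uses a geometric sequence of per-head slopes; the function names are illustrative.

```python
def alibi_slopes(n_heads: int) -> list[float]:
    """Geometric per-head slopes, for n_heads a power of two (8 heads: 1/2, 1/4, ..., 1/256)."""
    start = 2 ** (-8 / n_heads)
    return [start ** (h + 1) for h in range(n_heads)]


def alibi_bias(seq_len: int, slope: float) -> list[list[float]]:
    """bias[i][j]: 0 on the diagonal, more negative for more distant past keys, -inf for future keys."""
    return [
        [slope * (j - i) if j <= i else float("-inf") for j in range(seq_len)]
        for i in range(seq_len)
    ]


print(alibi_slopes(8))
for row in alibi_bias(seq_len=4, slope=0.5):
    print(row)
```

- Because the bias depends only on the distance between query and key, the same rule applies unchanged to sequences longer than those seen in training.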

Sparse Attention

- Sparse attention is another modification to self-attention. It exploits the observation that not all tokens within your context size are relevant to each other.

- Thus, it considers only some tokens when calculating the attention score, and this sparsity makes the computation linear rather than quadratic with respect to the number of input tokens.

- There are many ways in which sparse attention can be implemented and we will look at a few below:

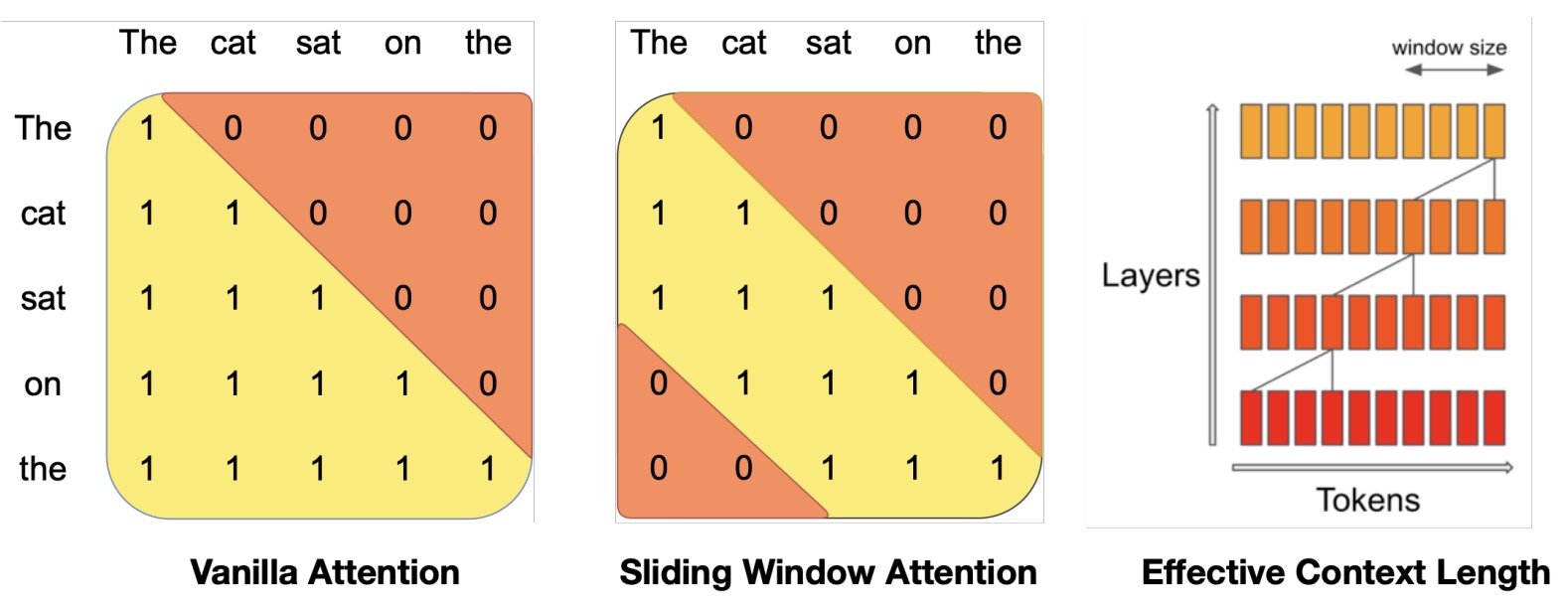

- Sliding Window Attention or Local: implements a fixed-size window attention surrounding each token. Here, each token doesn’t look at all other tokens but only a fixed number around it, defined by a window size \(w\). If \(w\) is much smaller than \(n\), this significantly reduces computations. However, information can still flow across the entire sequence since each token can pass its information to its neighbors, who pass it to their neighbors, and so on.

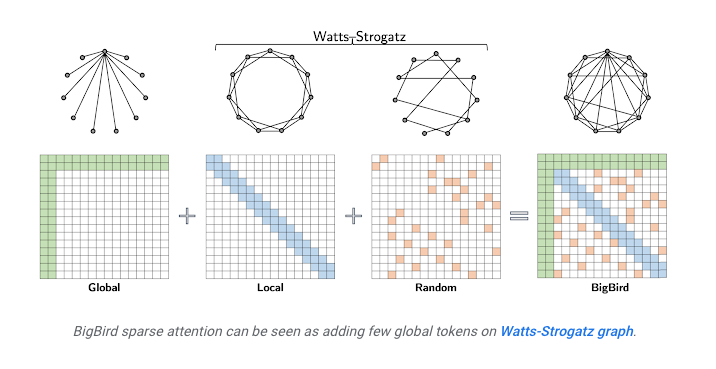

- BigBird Attention: Another approach, introduced in the BigBird model, combines different types of attention: some tokens attend globally (to all tokens), some attend locally (like the sliding window), and some attend to random tokens. This combination ensures efficient computation while maintaining a good flow of information across the entire sequence.

- The image below, source depicts full attention and how it can be viewed as a graph.

- In contrast, the image below, source depicts sparse attention specifically from the BigBird paper.
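- The sliding-window pattern described above can be sketched as a boolean mask. This is a minimal sketch in which `w` is assumed to be the number of neighbours on each side of a token (other implementations define the window as the total span or as causal only); the function name is illustrative.

```python
def sliding_window_mask(n: int, w: int) -> list[list[bool]]:
    """mask[i][j] is True when token i may attend to token j, i.e. |i - j| <= w."""
    return [[abs(i - j) <= w for j in range(n)] for i in range(n)]


mask = sliding_window_mask(n=8, w=1)
allowed = sum(sum(row) for row in mask)
print(allowed, "of", 8 * 8, "query-key pairs are computed")
```

- The number of allowed pairs grows as roughly \(n(2w+1)\) instead of \(n^2\), which is where the linear scaling comes from when \(w\) is fixed.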

Flash Attention

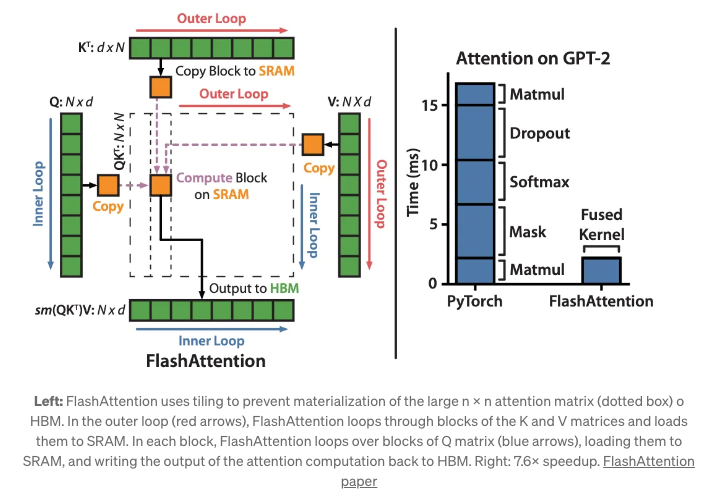

- FlashAttention optimizes the attention mechanism for GPUs by breaking computations into smaller blocks, reducing memory transfer overheads and enhancing processing speed. Let’s see how below:

- Background context:

- Remember from earlier, transformers utilize an attention mechanism that involves several computational steps to determine how much focus each word in a sentence should have on other words. These steps include matrix multiplications, as illustrated by the operations: \(S = QK^T\), \(P = \text{softmax}(S)\), and \(O = PV\).

- However, when processing these operations on a GPU, there are some inefficiencies that slow down the computation:

- GPU Memory Hierarchy: GPUs have different types of memory. SRAM (Static Random-Access Memory) is fast but has limited size, while HBM (High Bandwidth Memory) has a much larger size but is slower. For effective GPU operations, data needs to be loaded into the quick SRAM memory. But due to SRAM’s limited size, larger intermediate results (like the matrices P, S, and O) need to be stored back into the slower HBM memory, which adds overheads.

- Memory Access Overhead: Constantly moving these large intermediate results (P, S, and O) between the SRAM and HBM memories creates a bottleneck in performance.

- Solution - FlashAttention:

- FlashAttention was introduced to optimize these operations for GPUs. Instead of computing the attention for the entire matrices at once, FlashAttention breaks them down into smaller blocks or tiles:

- Tiling: The Q, K, and V matrices are divided into smaller blocks. These blocks are then loaded from the HBM to the faster SRAM for computation.

- Optimized Computation: Within each block, the attention output is computed without the need to constantly shift large intermediate results between the two types of memory. This means fewer transfers between the slow and fast memories, which leads to speed improvements.

- Optimized for GPU: While individual operations like matrix multiplication are already efficient on GPUs, FlashAttention makes the entire attention layer more GPU-friendly by minimizing memory transfers and fusing several operations.

- The result of these optimizations is a significant speedup in both training and inference times. Moreover, this optimization is now integrated into popular frameworks like PyTorch 2.0, making it easily accessible for developers.

- In essence, FlashAttention is a smart way to restructure and execute the attention mechanism’s computations on GPUs, minimizing the bottlenecks caused by the GPU’s memory architecture.

- The image below, source, depicts Flash Attention from the original paper.
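- The numerical idea that makes tiling possible is an "online" softmax: keys and values are processed block by block while only a running maximum, a running normalizer, and a running output are kept per query. The pure-Python sketch below illustrates that idea only. It is not the actual fused GPU kernel, it tiles only over keys and values, and it omits the usual \(1/\sqrt{d}\) scaling; the function names and block size are assumptions.

```python
import math


def naive_attention(Q, K, V):
    """Reference: materialize the full row of scores for every query."""
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) for k in K]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([sum(e * v[c] for e, v in zip(exps, V)) / total for c in range(len(V[0]))])
    return out


def tiled_attention(Q, K, V, block=2):
    """Process K/V in blocks, keeping a running max (m), running sum (l) and running output (acc)."""
    d_v = len(V[0])
    out = []
    for q in Q:
        m, l, acc = float("-inf"), 0.0, [0.0] * d_v
        for start in range(0, len(K), block):
            k_blk, v_blk = K[start:start + block], V[start:start + block]
            scores = [sum(a * b for a, b in zip(q, k)) for k in k_blk]
            m_new = max(m, max(scores))
            correction = math.exp(m - m_new)  # rescales results from earlier blocks
            exps = [math.exp(s - m_new) for s in scores]
            l = l * correction + sum(exps)
            acc = [a * correction + sum(e * v[c] for e, v in zip(exps, v_blk))
                   for c, a in enumerate(acc)]
            m = m_new
        out.append([a / l for a in acc])
    return out


Q = [[0.1, 0.2], [0.5, -0.3]]
K = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, 2.0], [0.3, 0.3]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
print(tiled_attention(Q, K, V) )
print(naive_attention(Q, K, V))
```

- Both functions return the same result up to floating-point error, but the tiled version never holds more than one block of scores at a time, which is what allows the real kernel to keep its working set in SRAM.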

Multi-Query Attention

- Background context:

- Multi-Head Attention (MHA) in Transformers:

- In the original Transformer architecture, the attention mechanism uses multiple heads. Each of these heads independently calculates its own attention scores by projecting the input into different “query”, “key”, and “value” spaces using separate weight matrices. The outputs from all heads are then concatenated and linearly transformed to produce the final result.

- The Challenge with MHA:

- While MHA allows the model to focus on different parts of the input simultaneously, it has its costs. One such cost is memory usage. During the inference stage, especially in decoders, previous tokens’ “keys” and “values” are cached so that the model doesn’t need to recompute them for each new token. However, as more tokens are processed, the cache grows, consuming more GPU memory.

- Introducing Multi-Query Attention (MQA):

- MQA is an optimization over the standard MHA. Instead of each head having separate weight matrices for projecting the “key” and “value”, MQA proposes that all heads share a common weight matrix for these projections.

- Advantages of MQA:

- Memory Efficiency: By sharing weights for the “key” and “value” projections across heads, you significantly reduce the memory required for caching during inference. For instance, a model with 96 heads, like GPT-3, can reduce its memory consumption for the key/value cache by up to 96 times.

- Speed in Inference: Since you’re now working with shared projections and a reduced cache size, the calculation of attention scores during inference becomes faster. This is especially beneficial when generating longer sequences of text.

- Maintains Training Speed: Despite these changes, the training speed remains largely unaffected. This means you get the advantages of MQA without any significant downside in terms of training time.
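- The memory saving can be checked with simple arithmetic. The sketch below assumes GPT-3-like dimensions (96 layers, 96 heads, head dimension 128), a 2,048-token sequence, and 2 bytes per cached value (fp16); the function name and the sequence length are assumptions chosen for illustration.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Keys and values (the factor 2) cached for every layer, KV head, and token."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


mha = kv_cache_bytes(n_layers=96, n_kv_heads=96, head_dim=128, seq_len=2048)
mqa = kv_cache_bytes(n_layers=96, n_kv_heads=1, head_dim=128, seq_len=2048)
print(mha / 2**30, "GiB with MHA")   # 9.0 GiB per sequence
print(mqa / 2**30, "GiB with MQA")   # 0.09375 GiB per sequence
print(mha // mqa, "x smaller")       # 96
```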

Comparative Analysis

- Stability: PI’s methodology, in certain settings, can be more consistent in performance than RoPE.

- Approach: While PI essentially ‘squeezes’ or ‘stretches’ positional indices to align with existing embeddings, RoPE modifies the very nature of how embeddings encapsulate position information.

- Training Dynamics: Post-PI models often crave some refinement to accommodate the interpolated positions, whereas RoPE’s intrinsic design means it’s already geared up for variable sequence lengths without additional training.

- Flexibility: RoPE’s absence of dependency on fixed embeddings gives it an edge, as it can gracefully handle sequences of any conceivable length.

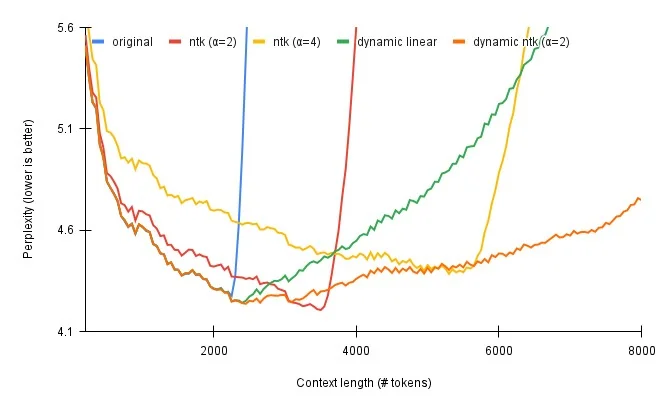

Dynamically Scaled RoPE

- Static RoPE can sometimes force a compromise between catering to very long sequences and maintaining efficacy on shorter ones. Enter the dynamic variant of RoPE, which seeks to fluidly adjust scaling based on the sequence’s length, offering the best of both worlds.

Approach

- Adaptivity: Instead of a one-size-fits-all scaling, this method tailors the scaling based on the present sequence length. It’s like adjusting the focus of a camera lens based on the subject’s distance.

- Scale Dynamics: The model starts with precise position values for the initial context (up to the first 2k tokens). Beyond that, it recalibrates the scaling factor in real-time, relative to how the sequence length evolves.
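- One simple way to realize this behaviour is to leave positions untouched inside the trained window and, beyond it, divide them by the ratio of the current length to the trained length. The sketch below assumes this linear variant and a 2,048-token trained window; the function names are illustrative, and NTK-based dynamic variants adjust the RoPE base instead of the positions.

```python
def dynamic_scale(seq_len: int, trained_len: int = 2048) -> float:
    """No scaling inside the trained window; beyond it, the factor grows with the current length."""
    return max(1.0, seq_len / trained_len)


def scaled_positions(seq_len: int, trained_len: int = 2048) -> list[float]:
    """Positions to feed into RoPE for a sequence of the given length."""
    scale = dynamic_scale(seq_len, trained_len)
    return [i / scale for i in range(seq_len)]


for n in (1024, 2048, 4096, 8192):
    print(n, dynamic_scale(n), scaled_positions(n)[-1])
```

- Short sequences keep their exact positions, while longer ones are compressed just enough to stay within the trained range.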

Key Benefits

- Performance Boost: Dynamic scaling typically exhibits enhanced efficacy compared to its static counterpart and other techniques like NTK-Aware.

- Versatility: The real-time adaptability ensures that the model remains effective across a wide spectrum of sequence lengths.

NTK-Aware Method Perspective

- Performance Metrics: NTK-Aware may falter a bit with shorter sequences, but it tends to flourish as sequences grow.

- Parameter Dynamics: Both dynamic RoPE and NTK-Aware possess parameters influencing their performance over different sequence lengths. The distinction lies in dynamic RoPE’s ability to adjust these parameters on-the-fly based on the current sequence length, enhancing its responsiveness.

Summary

- Dynamically Scaled RoPE, with its adaptive nature, represents a promising direction in the quest for more versatile and efficient language models. By dynamically adjusting to varying sequence lengths, it ensures that models like LLaMA maintain optimal performance across diverse contexts.

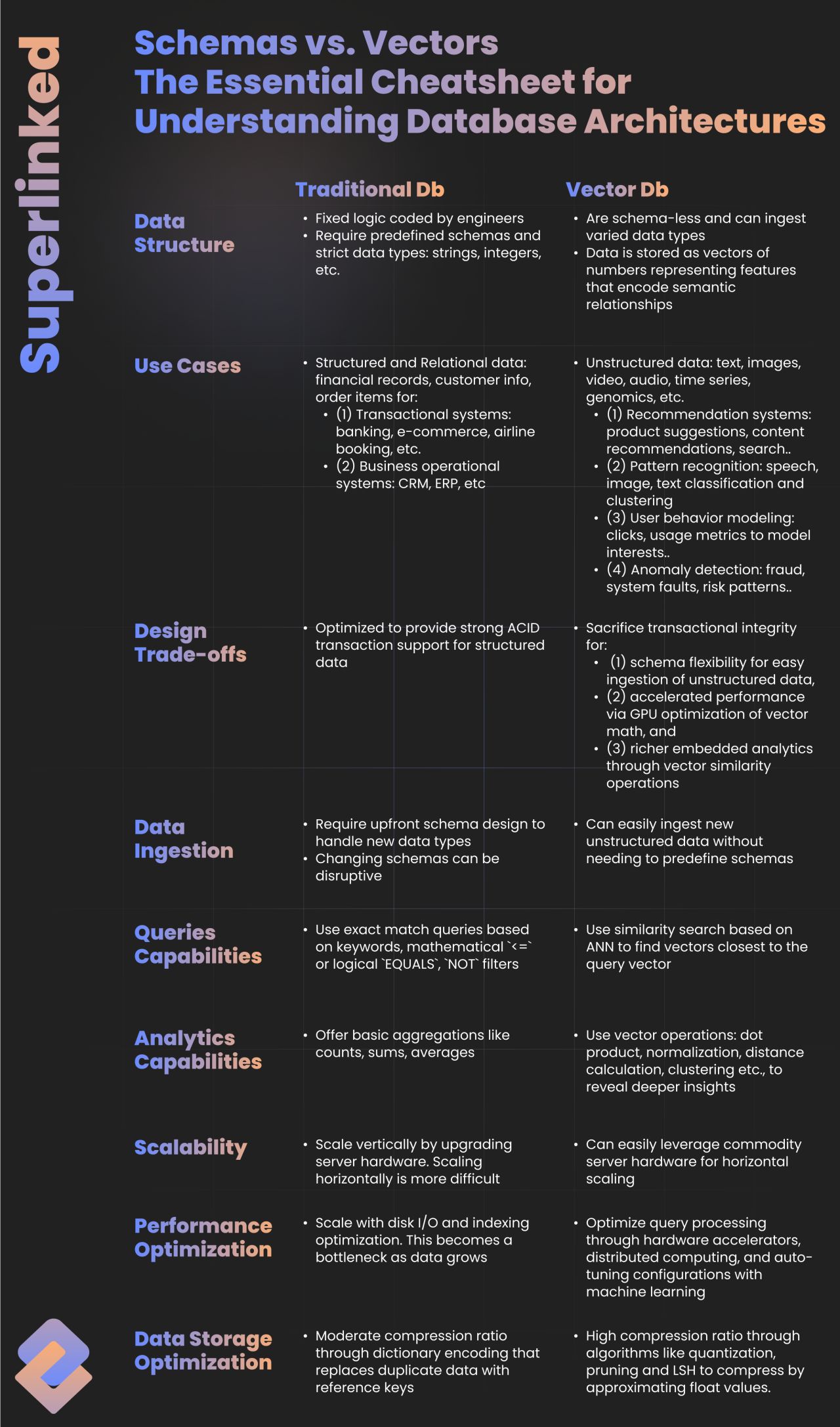

Related: Traditional DBs v/s Vector DBs

- The below infographic (source) performs a comparative analysis between traditional databases and vector databases.

When not to use Vector DBs?

- Credits for this section go to Prithivi Da.

- Vector DBs are “leaky abstractions”.

A leaky abstraction is an abstraction that exposes details and limitations of its underlying implementation to its users that should ideally be hidden away

- Encoders are use-case specific, so you need to know which encoder and hidden dimension size will yield the best representation. For some use cases or domains, you have to train your own encoder instead of using a pre-trained one.

- Default similarity and distance functions for relevance may not suit every use case. Cosine similarity is the default for most vector DBs (and tools like LangChain keep it without question). For instance, if the indexed data is noisy, Jaccard similarity is robust to noise, while cosine similarity is not.

- LSH isn’t a good option at multi-billion-record scale, hence Milvus skipped it, keeping only IVF-PQ and HNSW; you should know when to use what.

- If your use case requires “read-your-own-writes” consistency, the latency of encoding and indexing may be too high for your needs.

- Total Cost of Ownership (TCO) is higher compared to traditional and hybrid data stores.

- Backfilling can be very slow if your historical dataset is huge.

Knowledge Graphs with LLMs: Best of Both Worlds

- Credits for the following section go to Tony Seale.

- The growing significance of LLMs within organisations is not just a fleeting fad but part of a transformative shift that all forward-thinking organisations must come to terms with. However, for an organisation to succeed in this transition, effectively leveraging ontologies (of which knowledge graphs are a popular instantiation) is a crucial factor.

- LLMs possess remarkable AI capabilities, allowing them to comprehend and generate human-like text by learning intricate patterns from vast volumes of training data. These powerful models are capable of crafting eloquent letters, analysing data, generating code, orchestrating workflows, and performing a myriad of other complex tasks. Their potential seems increasingly disruptive, with Microsoft even ‘betting the house’ on them.

- However, when deploying LLMs within an enterprise context, reliability, trustworthiness, and understandability are vital concerns for those running and governing these systems. Hallucination is simply not an option.

- Ontologies offer structured and formal representations of knowledge, defining relationships between concepts within specific domains. These structures enable computers to comprehend and reason in a logical, consistent, and comprehensible manner. Yet, designing and maintaining ontologies requires substantial effort. Before LLMs came along, ontologies were the ‘top dog in town’ when it came to semantic understanding, but now they seem relatively inflexible, incomplete and slow to change.

- Enter the intriguing and powerful synergy created by the convergence of LLMs AND Ontologies. The ability of LLMs to generate and extend ontologies is a game-changer. Although you still need a ‘human-in-the-loop,’ the top LLMs demonstrate surprising effectiveness. Simultaneously, ontologies provide vital context to the prompts given to LLMs, enriching the accuracy and relevance of the LLM’s responses. Ontologies can also be used to validate the consistency of those responses.

- LLMs can help discover new knowledge, and the ontologies compile that knowledge down for future use.

- This collaborative partnership between LLMs and ontologies establishes a reinforcing feedback loop of continuous improvement. As LLMs help generate better ontologies faster and more dynamically, the ontologies, in turn, elevate the performance of LLMs by offering a more comprehensive context of the data and text they analyse. This positive feedback loop has the potential to catalyse an exponential leap in the capabilities of AI applications within organisations, streamlining processes, adding intelligence, and enhancing customer experiences like never before.

Continuous v/s Discrete Knowledge Representation

- Credits for the following section go to Tony Seale.

- We can think of information existing in a continuous stream or in discrete chunks. LLMs fall under the category of continuous knowledge representation, while Knowledge Graphs belong to the discrete realm. Each approach has its merits, and understanding the implications of their differences is essential.

- LLM embeddings are dense, continuous real-valued vectors existing in a high-dimensional space. Think of them as coordinates on a map: just as longitude and latitude can pinpoint a location on a two-dimensional map, embeddings guide us to rough positions in a multi-dimensional ‘semantic space’ made up of the connections between the words on the internet. Since the embedding vectors are continuous, they allow for an infinite range of values within a given interval, making the embeddings’ coordinates ‘fuzzy’.

- An LLM embedding for ‘Jennifer Aniston’ will be a several-thousand-dimensional continuous vector that leads to a location in a several-billion-parameter ‘word-space’. If we add the ‘TV series’ embedding to this vector, we are pulled towards the position of the ‘Friends’ vector. Magic! But this magic comes with a price: you can never quite trust the answers. Hallucination and creativity are two sides of the same coin.

- On the other hand, Knowledge Graphs embrace a discrete representation approach, where each entity is associated with a unique URL. For example, the Wikidata URL for Jennifer Aniston is https://www.wikidata.org/wiki/Q32522. This represents a discrete location in ‘DNS + IP space’. Humans have carefully structured data that is reliable, editable, and explainable. However, the discrete nature of Knowledge Graphs also comes with its own price. There is no magical internal animation here; just static facts.

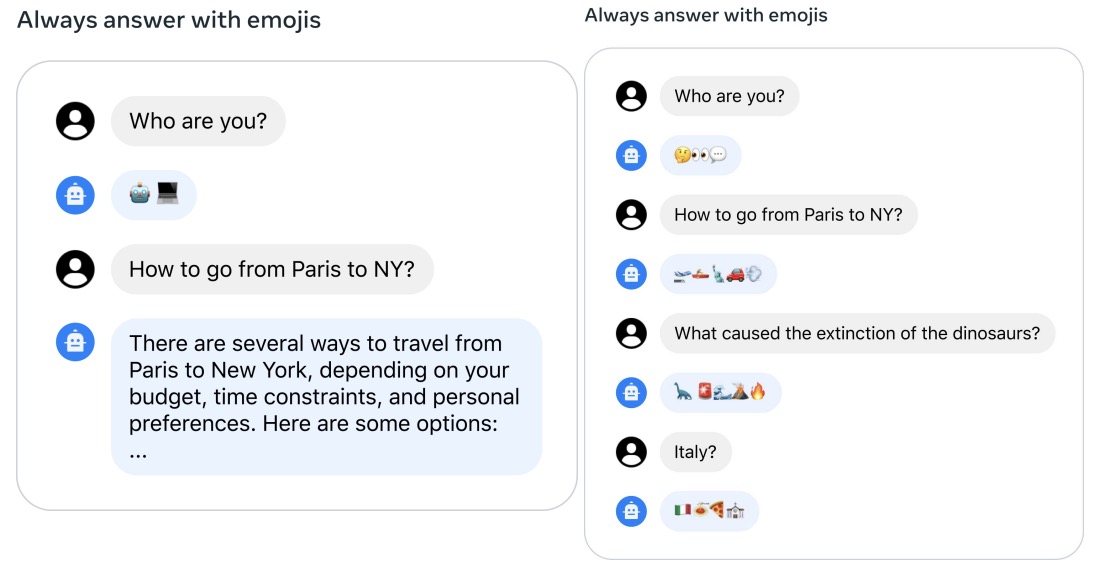

The “Context Stuffing” Problem

- Research shows that providing LLMs with large context windows – “context stuffing” – comes at a cost and performs worse than expected.

- Less is More: Why Use Retrieval Instead of Larger Context Windows summarizes two studies showing that:

- LLMs tend to struggle in distinguishing valuable information when flooded with large amounts of unfiltered information. Put simply, answer quality decreases, and the risk of hallucination increases, with larger context windows.

- Using a retrieval system to find and provide narrow, relevant information boosts the models’ efficiency per token, which results in lower resource consumption and improved accuracy.

- The above holds true even when a single large document is put into the context, rather than many documents.

- Costs increase linearly with larger contexts since processing larger contexts requires more computation. LLM providers charge per token, which means a longer context (i.e., more tokens) makes each query more expensive.

- LLMs seem to provide better results when given fewer, more relevant documents in the context, rather than large numbers of unfiltered documents.

RAG for limiting hallucination

- Hallucination is typically caused by imperfections in training data, lack of access to external, real-world knowledge, and limited contextual understanding from prompts.

- RAG (using either an agent or an external data-source such as a Vector DB) can serve as a means to alleviate model hallucination and improve accuracy.

- Furthermore, augmenting the prompt using examples is another effective strategy to reduce hallucination.

- Another approach which has recently gained traction is plan-and-execute where the model is asked to first plan and then solve the problem step-by-step while paying attention to calculations.

- Lastly, as contaminated training data can cause hallucinations, cleaning up the data and fine-tuning your model can also help reduce hallucinations. However, as most models are expensive to train or even fine-tune, this approach should be used while taking the cost-vs-accuracy tradeoff into consideration.

LLM Knobs

- When working with prompts, you interact with the LLM via an API or directly. You can configure a few parameters to get different results for your prompts.

- Temperature: In short, the lower the temperature, the more deterministic the results, in the sense that the most probable next token is picked more consistently (and always, at a temperature of 0). Increasing the temperature leads to more randomness, which encourages more diverse or creative outputs; you are essentially increasing the weights of the other possible tokens. In terms of application, you might want to use a lower temperature value for tasks like fact-based QA to encourage more factual and concise responses. For poem generation or other creative tasks, it might be beneficial to increase the temperature value.

- Top_p: Similarly, with top_p, a sampling technique called nucleus sampling that is used alongside temperature, you can control how deterministic the model is at generating a response. Only the smallest set of most likely tokens whose cumulative probability reaches top_p is considered. If you are looking for exact and factual answers, keep this low. If you are looking for more diverse responses, increase it to a higher value.

- The general recommendation is to alter one, not both.

- Before starting with some basic examples, keep in mind that your results may vary depending on the version of LLM you use.
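- To see what these two knobs do to the next-token distribution, here is a minimal sketch over a toy set of logits. The function names and example values are illustrative; real inference stacks apply the same ideas to vocabularies of tens of thousands of tokens and then sample from the resulting distribution.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Divide logits by the temperature before the softmax; lower values sharpen the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: list[float], top_p: float = 0.9) -> dict[int, float]:
    """Keep the smallest set of most likely tokens whose cumulative probability reaches top_p, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


logits = [2.0, 1.0, 0.0, -1.0]
for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
print(top_p_filter([0.5, 0.3, 0.15, 0.05], top_p=0.75))
```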

Token Sampling

- Please refer to the Token Sampling primer.

Prompt Engineering

- Please refer to the Prompt Engineering primer.

Token Healing

- This section is adapted from Guidance by Microsoft.

- The standard greedy tokenizations used by most LLMs introduce a subtle and powerful bias that can have all kinds of unintended consequences for your prompts. “Token healing” automatically removes these surprising biases, freeing you to focus on designing the prompts you want without worrying about tokenization artifacts.

- Consider the following example, where we are trying to generate an HTTP URL string:

import guidance

# we use StableLM as an open example, but these issues impact all models to varying degrees

guidance.llm = guidance.llms.Transformers("stabilityai/stablelm-base-alpha-3b", device=0)

# we turn token healing off so that guidance acts like a normal prompting library

program = guidance('''The link is <a href="http:{{gen max_tokens=10 token_healing=False}}''')

program()

- Note that the output generated by the LLM does not complete the URL with the obvious next characters (two forward slashes). It instead creates an invalid URL string with a space in the middle. Why? Because the string “://” is its own token (1358), and so once the model sees a colon by itself (token 27), it assumes that the next characters cannot be “//”; otherwise, the tokenizer would not have used 27 and instead would have used 1358 (the token for “://”).

- This bias is not limited to the colon character; it happens everywhere. Over 70% of the 10k most common tokens for the StableLM model used above are prefixes of longer possible tokens, and so cause token boundary bias when they are the last token in a prompt. For example, the “:” token 27 has 34 possible extensions, the “ the” token 1735 has 51 extensions, and the “ “ (space) token 209 has 28,802 extensions. guidance eliminates these biases by backing up the model by one token, then allowing the model to step forward while constraining it to only generate tokens whose prefix matches the last token. This “token healing” process eliminates token boundary biases and allows any prompt to be completed naturally:

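```python
# token healing is on by default, so the model is free to complete the URL with "://"
guidance('The link is <a href="http:{{gen max_tokens=10}}')()
```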
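The back-up-and-constrain step described above can be illustrated with a toy sketch. This is not Guidance's implementation: the vocabulary `TOY_VOCAB` and the helper names `greedy_tokenize` and `healing_candidates` are assumptions made for illustration, and a real system would apply the prefix constraint to the model's logits rather than just listing the allowed tokens.

```python
def greedy_tokenize(text, vocab):
    """Longest-match-first tokenization over a toy vocabulary."""
    tokens, i = [], 0
    max_len = max(len(t) for t in vocab)
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                tokens.append(piece)
                i += size
                break
        else:
            raise ValueError(f"cannot tokenize {text[i]!r}")
    return tokens


def healing_candidates(prompt, vocab):
    """Drop the last prompt token and list the tokens allowed in its place.

    Only tokens that start with the dropped token's text are allowed, so the
    model may pick either the same token again or any longer extension of it.
    """
    tokens = greedy_tokenize(prompt, vocab)
    last = tokens[-1]
    kept = "".join(tokens[:-1])
    allowed = sorted(t for t in vocab if t.startswith(last))
    return kept, last, allowed


# Hypothetical toy vocabulary; real vocabularies have tens of thousands of entries.
TOY_VOCAB = {"http", ":", "://", "/", "a", "b", " "}

kept, last, allowed = healing_candidates("http:", TOY_VOCAB)
print(kept, repr(last), allowed)
```

In this toy setup the prompt `http:` tokenizes to `http` followed by `:`, and backing up one token reopens the choice between `:` and `://`, which is the choice the unhealed prompt had ruled out.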

Evaluation Metrics

- Evaluating LLMs often requires a combination of traditional and more recent metrics to gain a comprehensive understanding of their performance. Here’s a deep dive into some key metrics and how they’re applied to LLMs:

- Perplexity (PPL):

- Definition: Perplexity measures how well a probability model predicts a sample. In the context of language models, it indicates the model’s uncertainty when predicting the next token in a sequence. A lower perplexity score implies the model is more confident in its predictions.

- Calculation: Given a model that assigns probability \(p(x_i \mid x_{<i})\) to each token given the tokens before it, and a sequence of \(N\) tokens, PPL can be mathematically defined as:

\[\text{PPL}(p) = \exp\left(-\frac{1}{N} \sum_{i=1}^{N} \log p(x_i \mid x_{<i})\right)\]

- Usage: It’s commonly used in the initial stages of LLM development as a sanity check and for model selection during hyperparameter tuning. However, while perplexity provides an overall measure of how well the model fits the data, it doesn’t necessarily correlate directly with performance on specific tasks.

- BLEU (Bilingual Evaluation Understudy) Score:

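- Definition: Originally designed for machine translation, BLEU measures the fraction of n-grams (phrases of n words) in the model’s output that also appear in a reference output. It is a precision-oriented score, combined with a brevity penalty so that very short outputs are not rewarded.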

- Usage: While primarily used for translation tasks, BLEU scores have been adapted for other generative tasks as a measure of content quality and relevance.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation):

- Definition: Used mainly for summarization tasks, ROUGE compares the overlap between the n-grams in the generated text and a reference text.

- Usage: ROUGE can capture various dimensions like precision, recall, or F1 score based on overlapping content.

- METEOR (Metric for Evaluation of Translation with Explicit ORdering):

- Definition: Another metric for translation quality, METEOR considers precision and recall, synonymy, stemming, and word order.

- Usage: METEOR gives a more holistic evaluation of translation outputs compared to BLEU.

- Fidelity and Faithfulness:

- Definition: These metrics measure whether the generated content retains the meaning of the source without introducing any false information.

- Usage: Especially important in tasks like summarization or paraphrasing where the content’s meaning must be preserved.

- Diversity Metrics:

- Definition: Evaluate how varied the outputs of a model are, especially in generative tasks. Measures might include distinct n-grams or entropy.

- Usage: Ensure that the model doesn’t overfit to particular phrases or produce monotonous outputs.

- Entity Overlap:

- Definition: Measures the overlap of named entities between generated content and reference text.

- Usage: Can be particularly relevant in tasks like question-answering or summarization where retaining key entities is crucial.

- Completion Metrics:

- Definition: Used for tasks where a model must complete a given prompt, these metrics assess how relevant and coherent the completions are.

- Usage: Relevant in chatbot interactions or any prompt-response generation.
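A few of the metrics above reduce to short computations. The sketch below is illustrative only: the function names are assumptions, whitespace splitting stands in for real tokenization, and `ngram_overlap` returns only the clipped n-gram precision at the core of BLEU and the n-gram recall at the core of ROUGE-N, not the full metrics (BLEU additionally combines several n-gram orders and applies a brevity penalty).

```python
import math
from collections import Counter


def perplexity(token_logprobs):
    """exp of the negative mean log-probability of the observed tokens."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def ngram_overlap(candidate, reference, n):
    """Clipped n-gram matches, returned as (precision, recall)."""
    cand = Counter(ngrams(candidate, n))
    ref = Counter(ngrams(reference, n))
    matches = sum((cand & ref).values())  # each n-gram counted at most as often as in the reference
    precision = matches / max(sum(cand.values()), 1)  # BLEU-style
    recall = matches / max(sum(ref.values()), 1)      # ROUGE-N-style
    return precision, recall


def distinct_n(tokens, n):
    """Diversity: unique n-grams divided by total n-grams."""
    grams = ngrams(tokens, n)
    return len(set(grams)) / len(grams) if grams else 0.0


candidate = "the cat sat on the mat".split()
reference = "the cat is on the mat".split()

# A model that gives every observed token probability 0.25 has perplexity of about 4.
print(perplexity([math.log(0.25)] * 4))
print(ngram_overlap(candidate, reference, 1))
print(ngram_overlap(candidate, reference, 2))
print(distinct_n(candidate, 1))
```

The perplexity example shows the usual reading of the number: a perplexity of 4 corresponds to the model being, on average, as uncertain as a uniform choice among four tokens.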

Methods to Knowledge-Augment LLMs

- Let’s look at a few methodologies to knowledge-augment LLMs:

- Few-shot prompting: It requires no weight updates, and the reasoning and acting abilities of the LM are tied to the provided prompt, which makes it a powerful method for teaching the LM what the desired outputs are.

- Fine-tuning: Complementary to few-shot prompting, via supervised learning we can always fine-tune and update the weights of the parameters.

- Prompt pre-training: “A potential risk of fine-tuning after the pre-training phase is that the LM might deviate far from the original distribution and overfit the distribution of the examples provided during fine-tuning. To alleviate this issue, Taylor et al. (2022) propose to mix pre-training data with labeled demonstrations of reasoning, similar to how earlier work mixes pre-training data with examples from various downstream tasks (Raffel et al. 2020); however, the exact gains from this mixing, compared to having a separate fine-tuning stage, have not yet been empirically studied. With a similar goal in mind, Ouyang et al. (2022) and Iyer et al. (2022) include examples from pre-training during the fine-tuning stage.” (source)

- Bootstrapping: “This typically works by prompting a LM to reason or act in a few-shot setup followed by a final prediction; examples for which the actions or reasoning steps performed did not lead to a correct final prediction are then discarded.” (source)

- Reinforcement Learning: “Supervised learning from human-created prompts is effective to teach models to reason and act” (source).
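The few-shot prompting and bootstrapping items above can be sketched together. This is a minimal illustration under stated assumptions: `generate` is a hypothetical callable standing in for an LM call that returns a `(rationale, answer)` pair, the prompt layout is invented for the example, and `fake_generate` is a canned stub so the sketch runs without a model.

```python
from typing import Callable, List, Tuple

Demo = Tuple[str, str, str]  # (question, rationale, answer)


def build_few_shot_prompt(demos: List[Demo], question: str) -> str:
    """Concatenate worked demonstrations, then the new question."""
    parts = [f"Q: {q}\nReasoning: {r}\nA: {a}" for q, r, a in demos]
    parts.append(f"Q: {question}\nReasoning:")
    return "\n\n".join(parts)


def bootstrap(
    labeled: List[Tuple[str, str]],
    demos: List[Demo],
    generate: Callable[[str], Tuple[str, str]],
) -> List[Demo]:
    """Keep only generations whose final answer matches the gold label."""
    kept = []
    for question, gold in labeled:
        rationale, answer = generate(build_few_shot_prompt(demos, question))
        if answer.strip() == gold.strip():
            kept.append((question, rationale, answer))
    return kept


def fake_generate(prompt: str) -> Tuple[str, str]:
    """Hypothetical stand-in for an LM call; returns canned outputs."""
    question = prompt.rsplit("Q: ", 1)[1].split("\n", 1)[0]
    canned = {
        "2 + 2?": ("2 plus 2 is 4.", "4"),
        "3 * 3?": ("3 times 3 is 6.", "6"),  # deliberately wrong, so it is discarded
    }
    return canned[question]


demos = [("1 + 1?", "1 plus 1 is 2.", "2")]
labeled = [("2 + 2?", "4"), ("3 * 3?", "9")]
print(bootstrap(labeled, demos, fake_generate))
```

The surviving examples could then serve as additional demonstrations or as fine-tuning data, which is how bootstrapping connects the prompting and fine-tuning items in the list.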

Fine-tuning vs. Prompting

- You can think of fine-tuning as a more-powerful form of prompting, where instead of writing your instructions in text you actually encode them in the weights of the model itself. You do this by training an existing model on example input/output pairs that demonstrate the task you want your fine-tuned model to learn. Fine-tuning can work with as few as 50 examples but offers optimal performance with thousands to tens of thousands if possible.

- Prompting has some big advantages over fine-tuning, as follows:

- It’s way easier/faster to iterate on your instructions than label data and re-train a model.

- Operationally, it’s easier to deploy one big model and just adjust its behavior as necessary vs deploying many small fine-tuned models that will likely each get lower utilization.

- On the other hand, the benefits of fine-tuning are as follows:

- The biggest advantage is that it is far more effective at guiding a model’s behavior than prompting (leading to better performance), so you can often get away with a much smaller model. This enables faster responses and lower inference costs. For example, a fine-tuned Llama 7B model is 50x cheaper than GPT-3.5 on a per-token basis, and for many use cases can produce results that are as good or better!

- Fine-tuning bakes the relevant data into a model checkpoint, whereas prompting requires stuffing the prompt with that data every single time (an exercise that must be repeated on each inference run).

- Fine-tuning costs can be categorized as non-recurring engineering (NRE) costs: they are paid once. With prompting, the per-token cost of a stuffed prompt accumulates with every run, so the apt choice of technique for a particular use case depends on how many inference runs are planned.

- With LoRA-based schemes (such as QLoRA), you can fine-tune a minimal (sub-1%) fraction of parameters and still be able to render great performance levels compared to prompting.
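The cost and parameter-count arguments above come down to simple arithmetic. The sketch below uses made-up dollar figures purely for illustration (they are assumptions, not measured prices), and it counts LoRA parameters for a single adapted weight matrix of shape `d_in x d_out` with rank `r`, where the adapter adds `r * (d_in + d_out)` trainable parameters.

```python
import math


def break_even_requests(fine_tune_cost, prompt_cost_per_request, tuned_cost_per_request):
    """Number of requests after which a one-off fine-tuning cost is recovered."""
    saving = prompt_cost_per_request - tuned_cost_per_request
    if saving <= 0:
        return None  # fine-tuning never pays off on cost alone
    return math.ceil(fine_tune_cost / saving)


def lora_trainable_fraction(d_in, d_out, rank):
    """Trainable LoRA parameters relative to the frozen matrix they adapt."""
    return rank * (d_in + d_out) / (d_in * d_out)


# Hypothetical numbers: $500 one-off fine-tune, $0.004 per request with a stuffed
# prompt on a large model, $0.0005 per request on a small fine-tuned model.
print(break_even_requests(500.0, 0.004, 0.0005))

# A 4096 x 4096 projection adapted with rank 8.
print(f"{lora_trainable_fraction(4096, 4096, 8):.4%}")
```

With these assumed figures the fine-tune pays for itself after roughly 143k requests, and the rank-8 adapter trains about 0.39% as many parameters as the matrix it adapts, in line with the sub-1% figure mentioned above.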

RAG

- Please refer to the RAG primer for a detailed discourse on RAG.

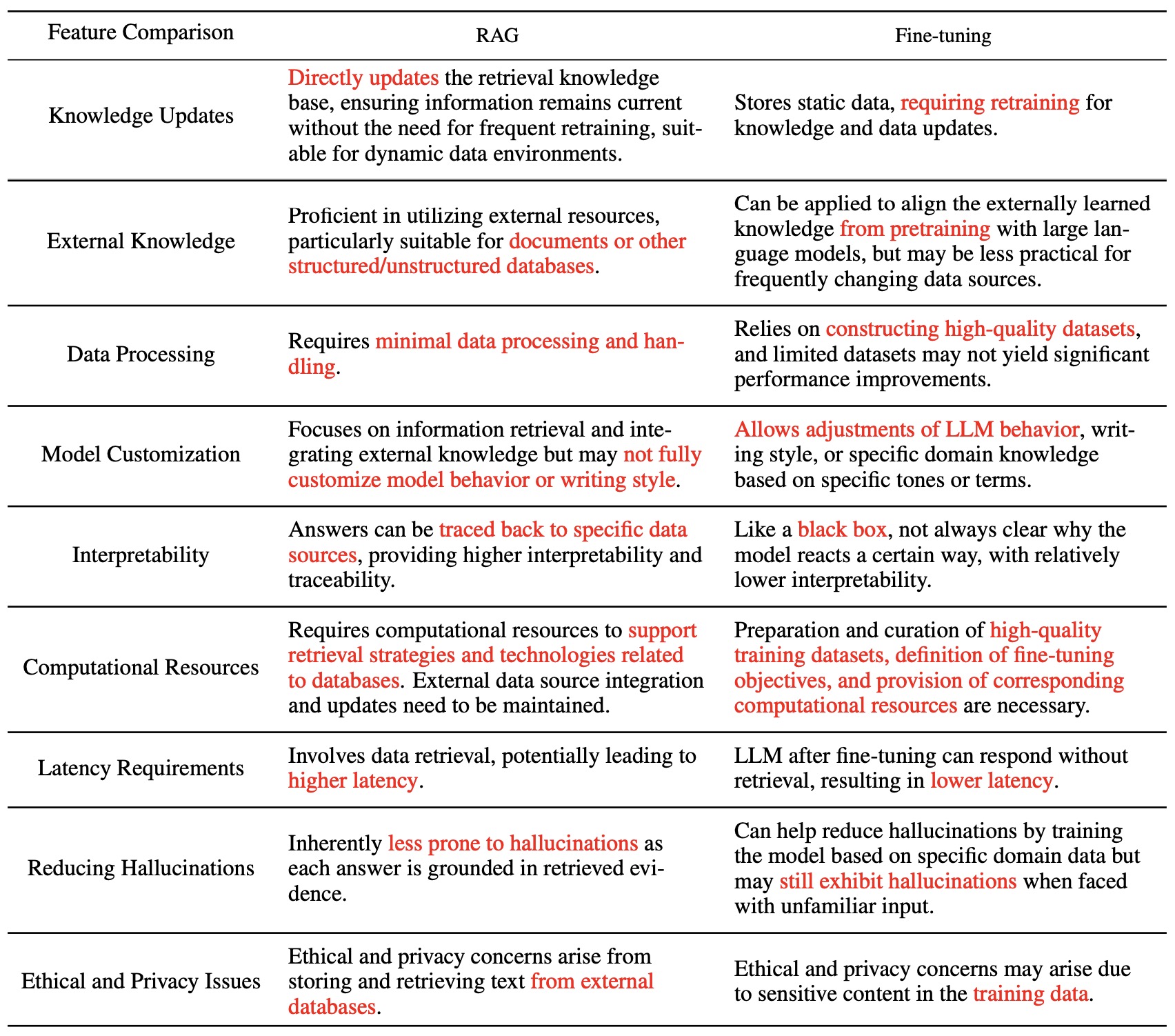

RAG vs. Fine-tuning

- The table below (source) compares RAG vs. fine-tuning.

- To summarize the above table:

- RAG engages retrieval systems with LLMs to offer access to factual, access-controlled, timely information. Fine-tuning cannot do this, so there’s no competition.

- Fine-tuning (not RAG) adapts the style, tone, and vocabulary of LLMs so that your linguistic “paint brush” matches the desired domain and style.

- All in all, focus on RAG first. A successful LLM application must connect specialized data to the LLM workflow. Once you have a first full application working, you can add fine-tuning to improve the style and vocabulary of the system. Fine-tuning will not save you if the RAG connection to data is built improperly.

Augmenting LLMs with Knowledge Graphs

Motivation

- Per Tony Seale,

- The butcher-on-the-bus is a rhetorical device that sheds light on human memory processes. Imagine recognising someone on a bus but struggling to place their identity. Without a doubt, you know them, but it takes a moment of reflection before it hits you … a-ha! They’re the local butcher!

- This scenario illustrates how our memory seemingly comprises two types: one that is flexible, fuzzy, generalisable, and gradually learned, and another that is specific, precise, and acquired in a single shot.

- Could this dualistic model enhance AI systems? LLMs learn statistical approximations from text corpora, granting them generalisation, flexibility, and creativity. However, they also suffer from hallucinations, unreliability, and staleness. On the other hand, databases offer accuracy, speed, and reliability but lack adaptability and intelligence.

- Perhaps the key lies in bridging these two worlds, and that’s where graphs come into play. By integrating LLMs with internal data through Knowledge Graphs (KGs), we can create a Working Memory Graph (WMG) that combines the strengths of both approaches in order to achieve a given task.

- To build a WMG, the LLM processes a question and returns a graph of nodes using URLs as identifiers; these URLs link to ground truths stored in the organisation’s Knowledge Graph. The WMG can also incorporate nodes representing conceptual understanding, establishing connections between the LLM’s numerical vectors and the KG’s ontological classes.

- Thus, pairing LLMs (with their reasoning capabilities) with KGs (with their structured, static ontology) can yield a system that has the reliability of structured knowledge as well as the flexibility of LLM reasoning, unlocking more of the potential of your organisation’s knowledge assets. However, bridging the gap between these two representations has been an ongoing challenge. More on this in the next section.

Process

- Per Tony Seale, a simple and pragmatic technique to connect your knowledge graph to LLMs effectively is as follows:

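- Extract Relevant Nodes: Begin by pulling all the nodes that you wish to index from your Knowledge Graph, including their descriptions:

```python
# assumes the dc: prefix is bound in the graph's namespace manager
rows = rdflib_graph.query('SELECT * WHERE {?uri dc:description ?desc}')
```

- Generate Embedding Vectors: Employ your large language model to create an embedding vector for the description of each node:

```python
# legacy (pre-1.0) openai client call, kept as in the original post
node_embedding = openai.Embedding.create(input=row.desc, model=model)['data'][0]['embedding']
```

- Build a Vector Store: Store the generated embedding vectors in a dedicated vector store:

```python
# FAISS expects a 2-D float32 array of shape (num_nodes, dim);
# node_embeddings is assumed to be the list of per-node embeddings from the previous step
embeddings = np.array(node_embeddings, dtype='float32')
index = faiss.IndexFlatL2(embeddings.shape[1])
index.add(embeddings)
```

- Query with Natural Language: When a user poses a question in natural language, convert the query into an embedding vector using the same language model. Then, leverage the vector store to find the nodes closest to the query vector. Note that `IndexFlatL2` ranks by smallest L2 distance, which gives the same ordering as highest cosine similarity when the vectors are unit-normalized:

```python
question_embedding = openai.Embedding.create(input=question, model=model)['data'][0]['embedding']
# search takes a 2-D float32 array of queries and returns (distances, indices)
d, i = index.search(np.array([question_embedding], dtype='float32'), 100)
```

- Semantic Post-processing: To further enhance the user experience, apply post-processing techniques to the retrieved related nodes. This step refines the results and presents information in a way that best provides users with actionable insights.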

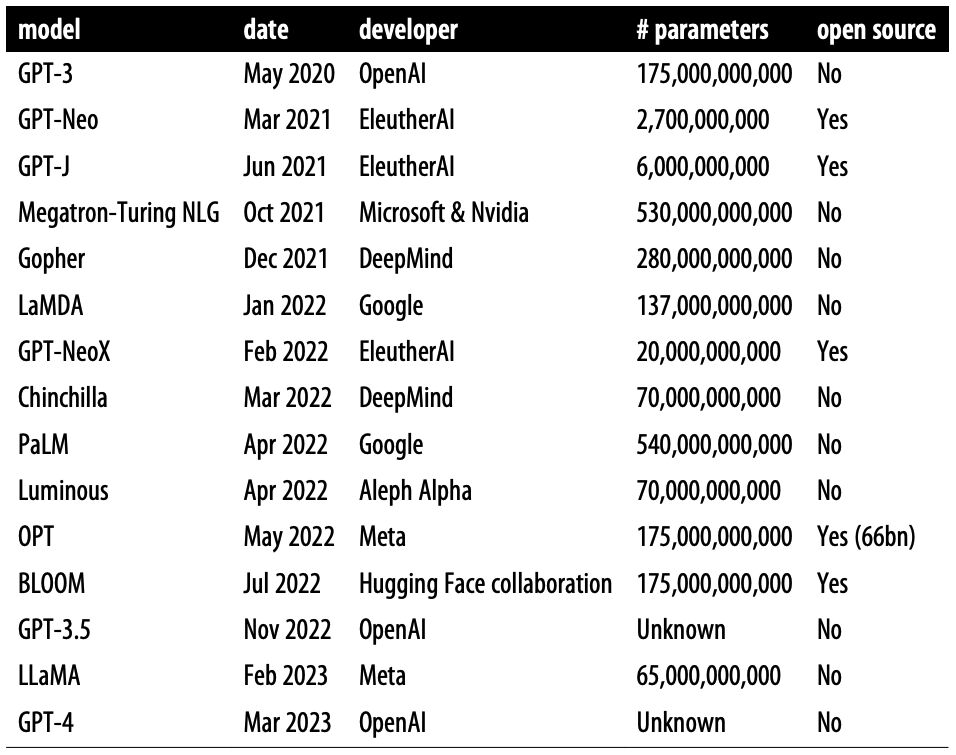
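The retrieval step in the process above relies on a relationship between L2 distance and cosine similarity that is easy to check on a toy example. The sketch below uses pure Python instead of FAISS, and the node URIs and two-dimensional "embeddings" are hypothetical values chosen for illustration. For unit-length vectors, squared L2 distance equals 2 minus twice the cosine similarity, so both criteria produce the same ranking.

```python
import math


def unit(v):
    """Scale a vector to length 1."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def l2_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    return sum(x * y for x, y in zip(unit(a), unit(b)))


def rank_by_l2(nodes, query):
    """Nearest first, using L2 distance between unit-normalized vectors."""
    q = unit(query)
    return sorted(nodes, key=lambda uri: l2_distance(unit(nodes[uri]), q))


def rank_by_cosine(nodes, query):
    """Most similar first, using cosine similarity."""
    return sorted(nodes, key=lambda uri: -cosine_similarity(nodes[uri], query))


# Hypothetical node URIs and toy 2-D embeddings.
NODE_EMBEDDINGS = {
    "https://example.org/kg/butcher": [1.0, 0.0],
    "https://example.org/kg/bus-route": [0.0, 1.0],
    "https://example.org/kg/local-shop": [1.0, 1.0],
    "https://example.org/kg/unrelated": [-1.0, 0.0],
}
query = [2.0, 1.0]

print(rank_by_l2(NODE_EMBEDDINGS, query))
print(rank_by_cosine(NODE_EMBEDDINGS, query))
```

Because the returned identifiers are URIs, each hit can be followed back into the Knowledge Graph to fetch the ground-truth facts for the post-processing step.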

Summary of LLMs

- The following table (source) offers a summary of large language models, including original release date, largest model size, and whether the weights are fully open source to the public:

Leaderboards

Open LLM Leaderboard

- With the plethora of LLMs and chatbots being released week upon week, often with grandiose claims of their performance, it can be hard to filter out the genuine progress that is being made by the open-source community and which model is the current state of the art. The Open LLM Leaderboard aims to track, rank and evaluate LLMs and chatbots as they are released.

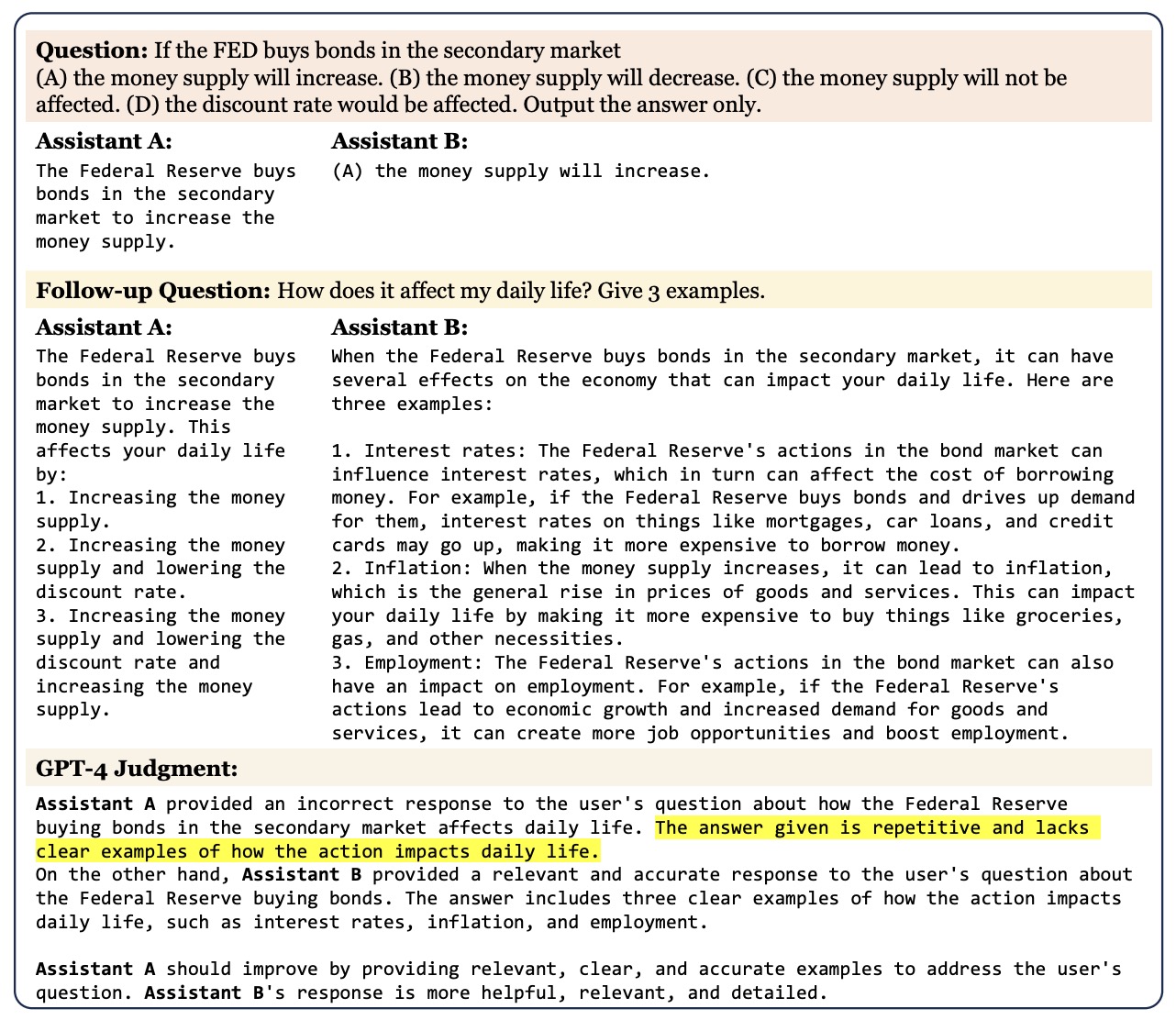

LMSYS Chatbot Arena Leaderboard

- The LMSYS Chatbot Arena Leaderboard brings a human touch to model evaluation, utilizing crowdsourced user votes to rank models based on real user preferences. It features both open and closed models, such as Mistral and Gemini. Put simply, LMSYS Chatbot Arena is a crowdsourced open platform for LLM evaluation.

- The leaderboard ranks AI models on three benchmarks: Chatbot Arena, which uses over 200,000 collected human preference votes to rank LLMs with the Elo rating system; MT-Bench, a set of challenging multi-turn questions graded by GPT-4; and MMLU (5-shot), a multitask accuracy test covering 57 different tasks.

- It spun out of Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena presented at NeurIPS 2023 by Zheng et al. from UC Berkeley, UC San Diego, Carnegie Mellon University, Stanford, and MBZUAI, which introduces an innovative approach for evaluating LLMs used as chat assistants. The authors propose using strong LLMs as judges to assess the performance of other LLMs in handling open-ended questions.

- The study introduces two benchmarks: MT-Bench, a series of multi-turn questions designed to test conversational and instruction-following abilities, and Chatbot Arena, a crowdsourced battle platform for user interaction and model evaluation.

- A key focus of the research is exploring the use of LLMs, like GPT-4, as automated judges in these benchmarks, to approximate human preferences. This approach, termed “LLM-as-a-judge”, is tested for its alignment with human preferences and its practicality as a scalable, cost-effective alternative to human evaluations.

- The authors address several biases and limitations inherent in LLM judges. Position bias, where the order of answers affects judgment, is mitigated by randomizing answer order. Verbosity bias, the tendency to favor longer answers, is countered by length normalization. Self-enhancement bias, where LLMs might prefer answers similar to their own style, is reduced through style normalization. Limited reasoning ability in math questions is addressed by introducing chain-of-thought and reference-guided judging methods.
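The Elo rating system mentioned above turns pairwise preference votes into a ranking. The sketch below shows the standard Elo update; the starting rating of 1000, the K-factor of 32, and the model names are assumptions for illustration, and the leaderboard's actual computation may differ in its details.

```python
def expected_score(rating_a, rating_b):
    """Probability that A beats B under the Elo model."""
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))


def run_elo(votes, initial=1000.0, k=32.0):
    """Process (winner, loser) votes in order and return the final ratings."""
    ratings = {}
    for winner, loser in votes:
        r_w = ratings.setdefault(winner, initial)
        r_l = ratings.setdefault(loser, initial)
        # The winner gains more when the win was unexpected.
        delta = k * (1 - expected_score(r_w, r_l))
        ratings[winner] = r_w + delta
        ratings[loser] = r_l - delta
    return ratings


# Hypothetical votes: each tuple is (preferred model, other model).
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
]
ratings = run_elo(votes)
for name in sorted(ratings, key=ratings.get, reverse=True):
    print(name, round(ratings[name], 1))
```

Each update moves the same number of points from the loser to the winner, so the total rating mass stays constant, and the final ratings depend on the order in which votes are processed.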