Recommendation Systems • System Design

- Overview

- Glossary

- Cheat Sheet

- Components Cheatsheet

- Rate limiters

- Monitoring systems

- SQL vs NoSQL

- Blob storage

- Key value stores

- Load balancer

- Kafka

- Streaming Platform:

- Real-Time Stream Processing:

- Serverless Computing (Task Runners):

- Data Storage (Click Capture, Aggregated Data):

- Content Delivery Network (CDN):

- Analytics Service:

- MapReduce for Reconciliation:

- Caching Systems:

- Non-Relational Databases (NoSQL):

- Relational Databases (SQL):

- Columnar Databases:

- Parquet (Columnar File Format):

- Summary:

- System Design Cheatsheet

- Basic Steps

- Key topics for designing a system

- Web App System design considerations:

- Working Components of Front-end Architecture

- Embeddings

- Hashing trick

- Facebook Ads Ranking

- Twitter’s Recommendation Algorithm

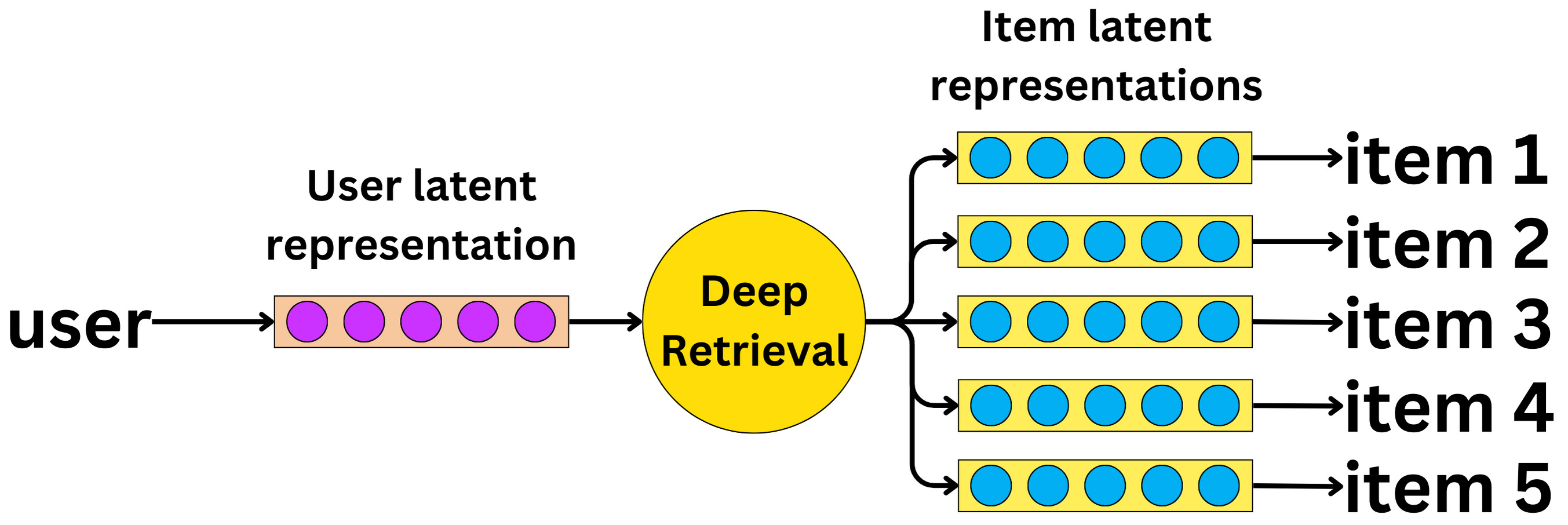

- Twitter’s Retrieval Algorithm: Deep Retrieval

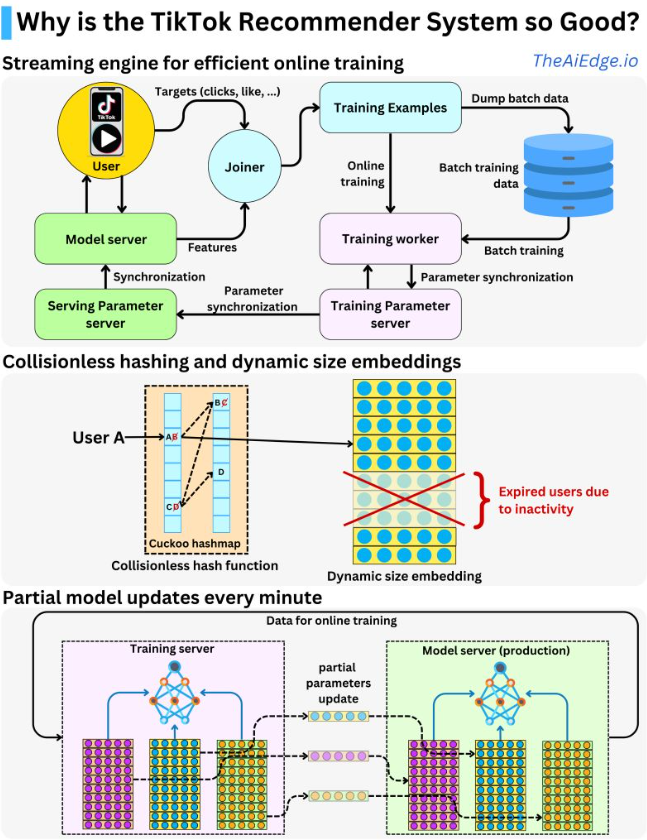

- TikTok Recommender System

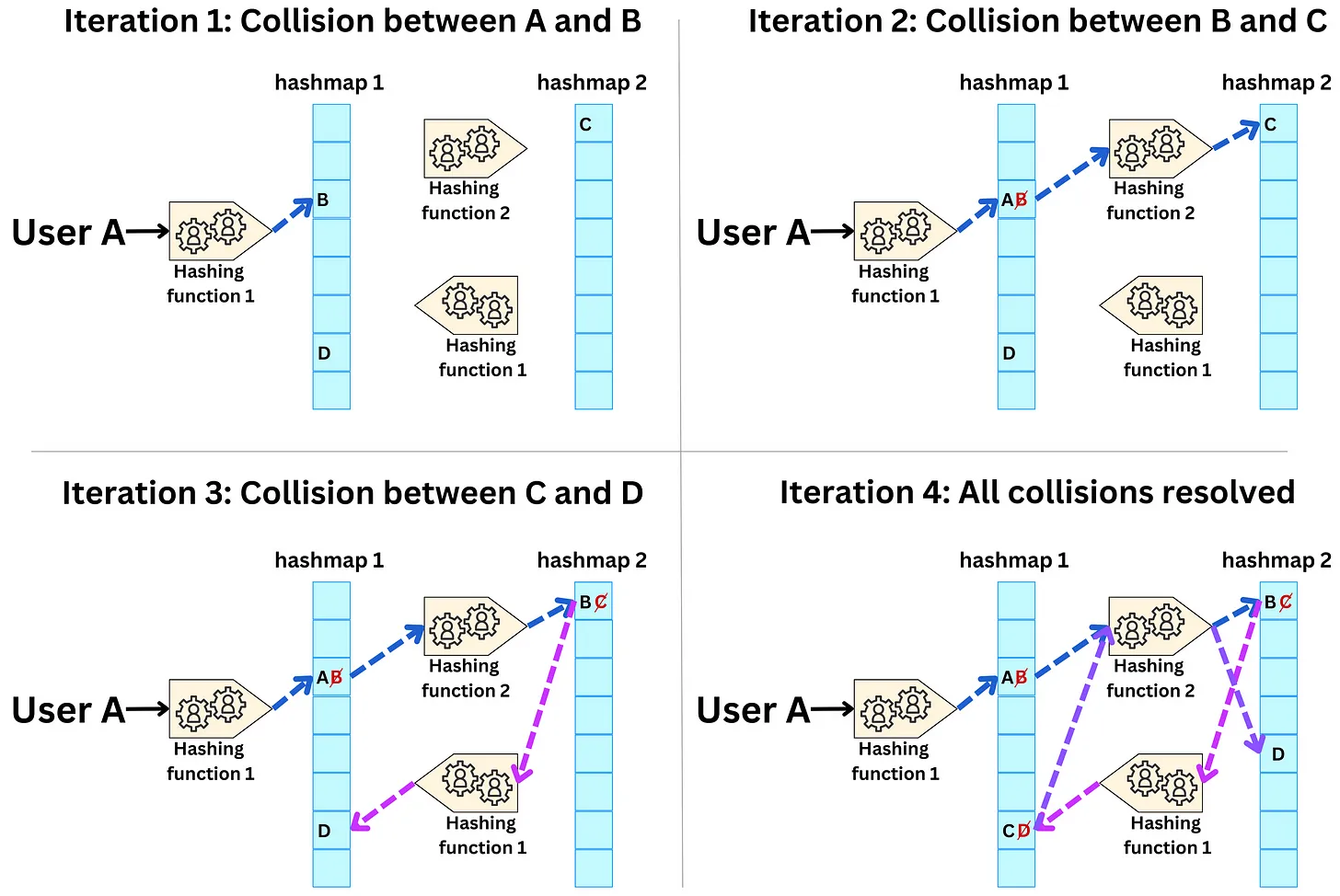

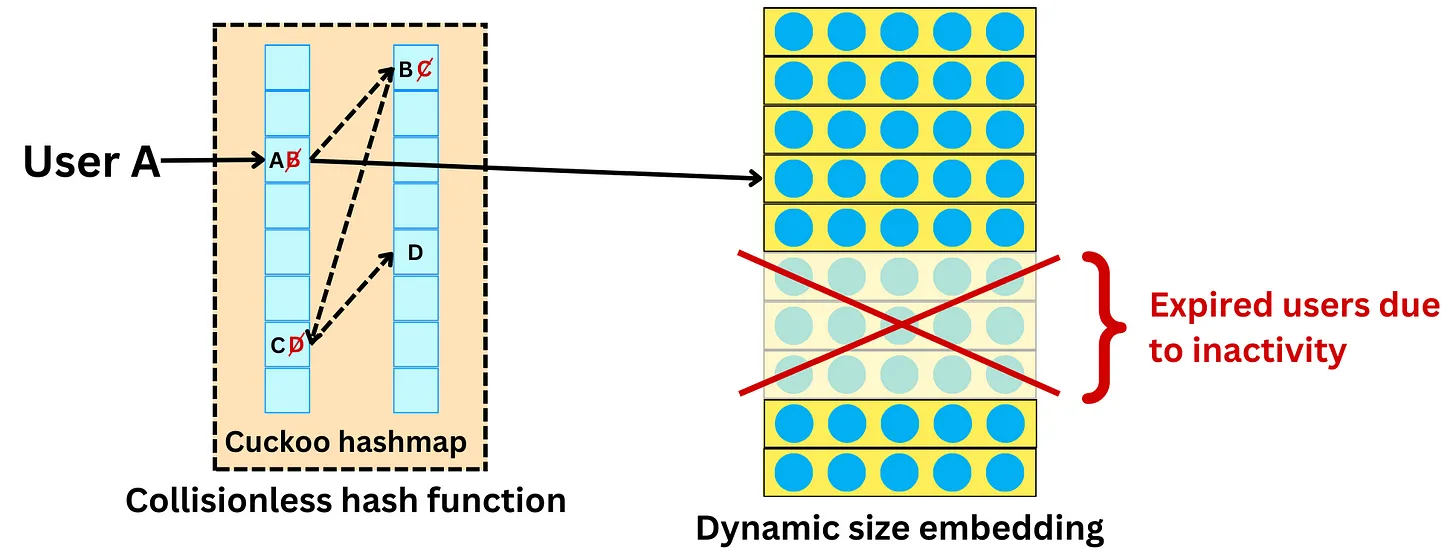

- Collisionless hashing

- Dynamic size embeddings

- Instantaneous updates during runtime

- Sparse variables:

- Conflated categories:

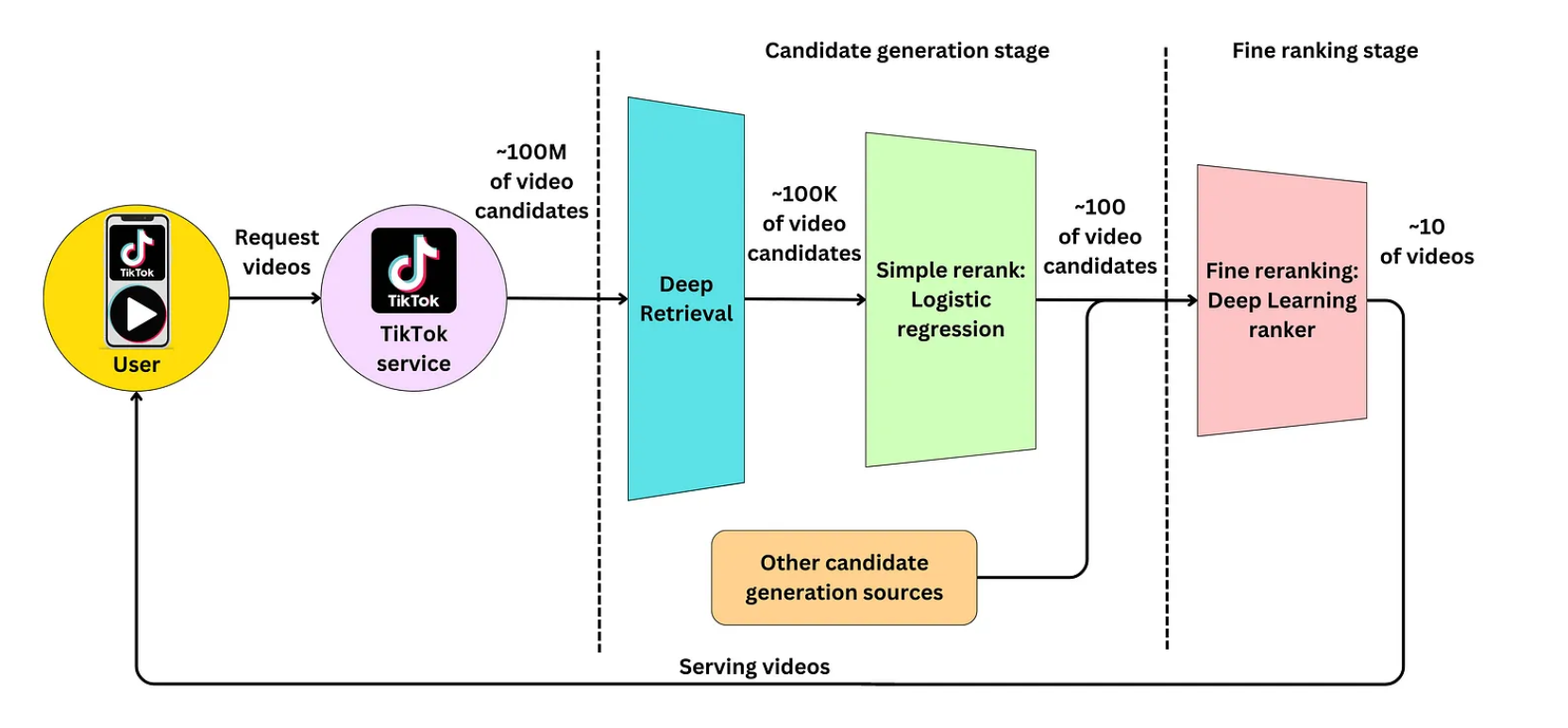

- Candidate generation stage

- Fine ranking stage

- Multi-gate Mixture of Experts

- Correcting selection bias

- Sampling the data for Offline training

- Real time streaming engine for online training

- Streaming engine architecture

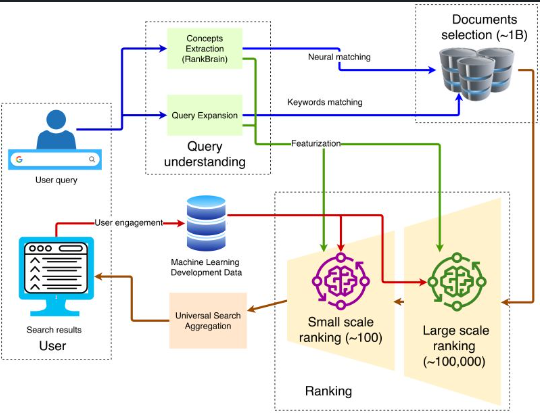

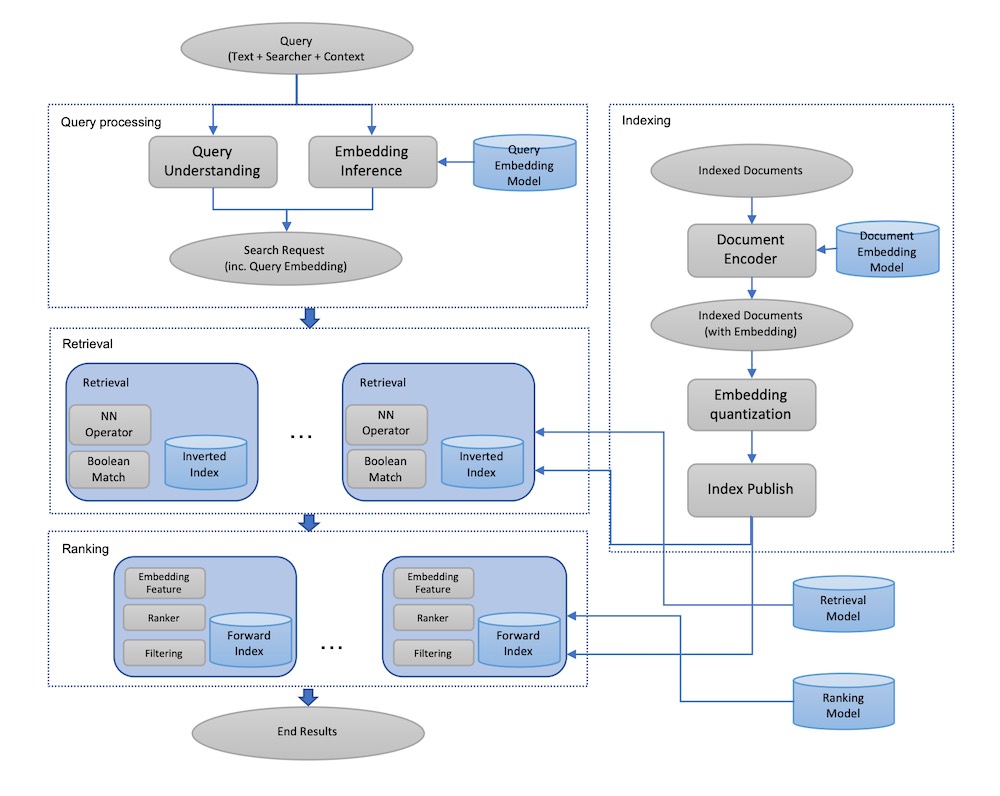

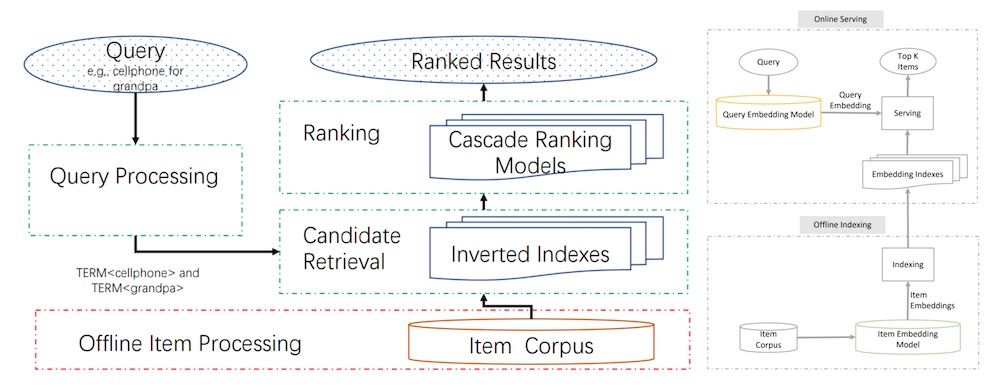

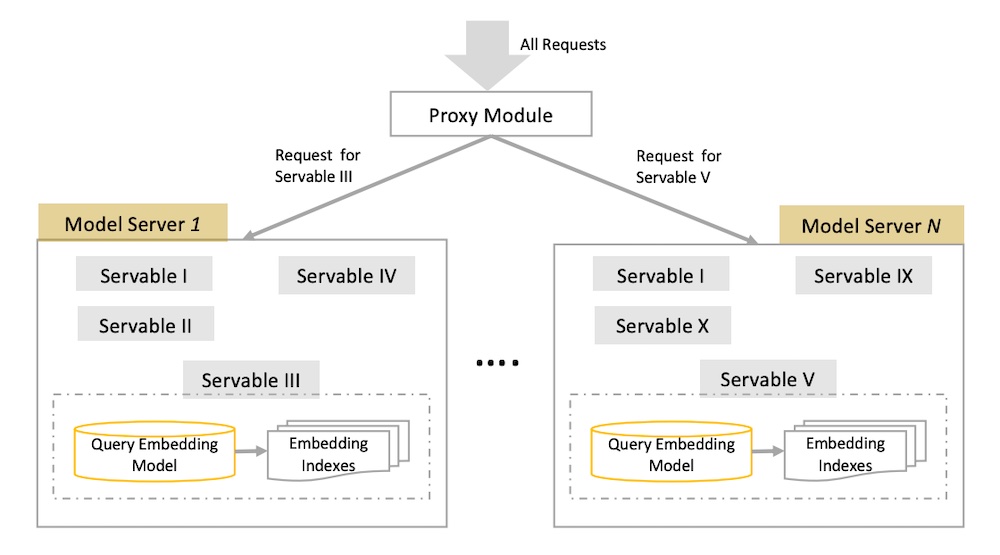

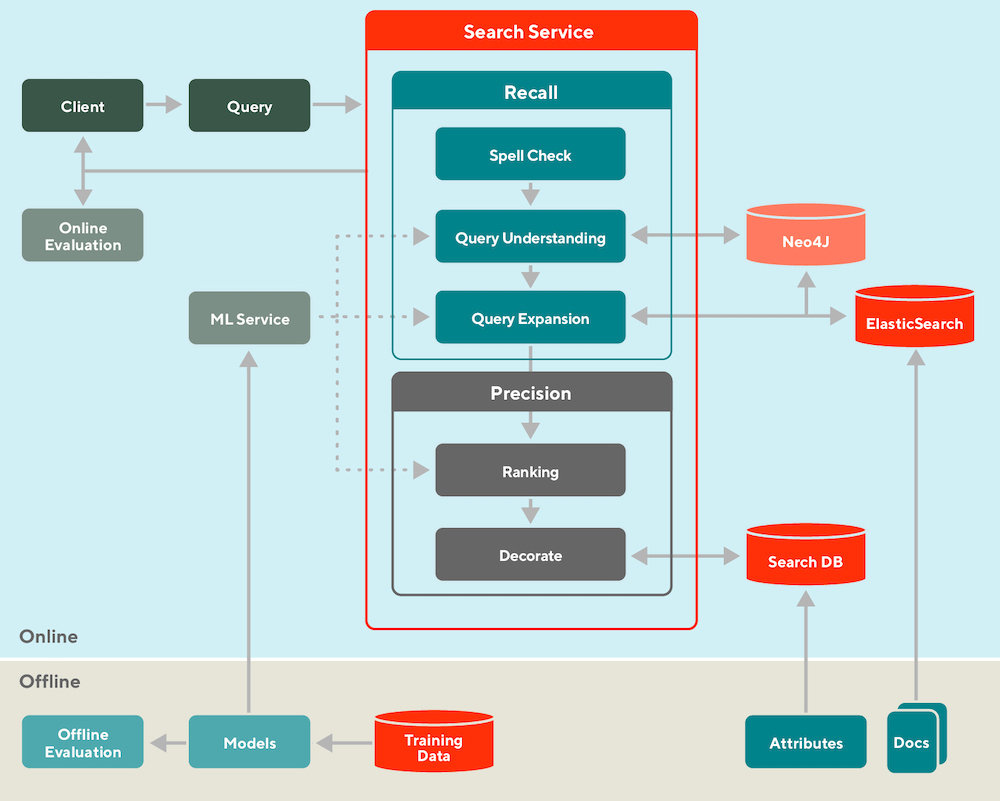

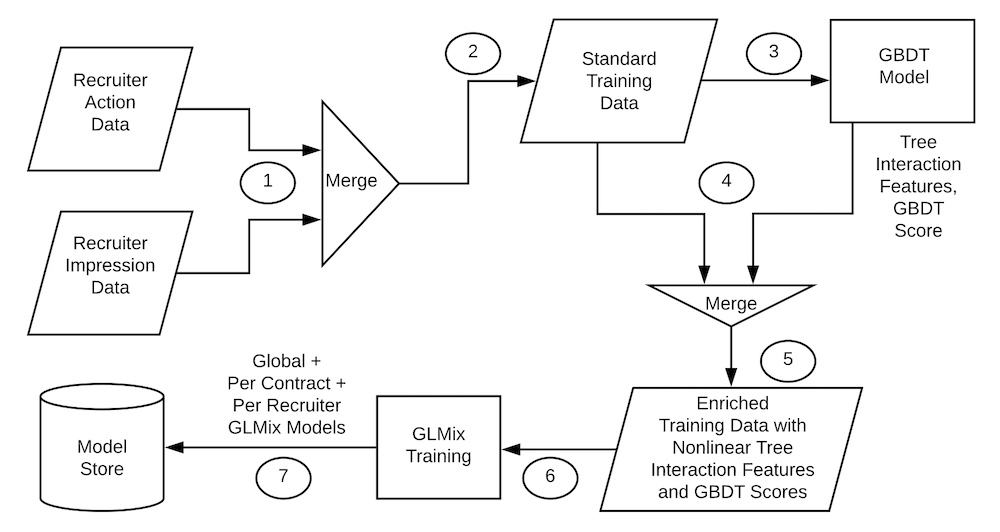

- Google Search - by Damien Benveniste, PHD

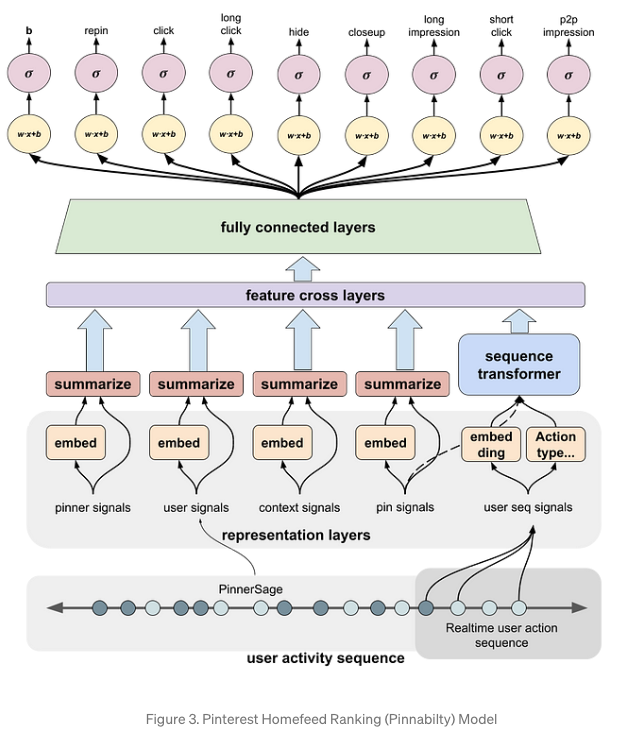

- Overview of current systems from Eugene Yan

- Alibaba

- JD

- DoorDash

- Batch vs Real-time

- Fresh Content Needs More Attention: Multi-funnel Fresh Content Recommendation

Overview

- On this page, we will look at the different types of recommender systems and focus on their system design.

- Specifically, we will delve deeper into the machine learning architecture and algorithms that make up these systems.

- Recommender systems usually follow a similar multi-stage pattern: a candidate generation (retrieval) stage followed by a ranking stage. The real magic is in how each of these steps is implemented.

- Some common vector embedding methods include: matrix factorization (MF), factorization machines (FM), DeepFM, and Field-aware FM (FFM).

- Recommender systems can be used to solve a variety of prediction problems, including:

- Rating prediction: In this problem, the goal is to predict the rating that a user will give to an item on a numerical scale, such as a 5-star rating system.

- Top-N recommendation: In this problem, the goal is to recommend a ranked list of N items to a user, based on their preferences and past interactions.

- Next item recommendation: In this problem, the goal is to recommend the next item that a user is likely to interact with, based on their current context and past interactions.
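To make the Top-N formulation concrete, here is a minimal sketch. It assumes that users and items are already represented by embedding vectors and that relevance is scored with a plain dot product. The item names, the numbers, and the `top_n` helper are illustrative assumptions, not taken from any particular production system.

```python
import heapq


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def top_n(user_vec, item_vecs, n, seen=()):
    """Return the ids of the n highest-scoring items the user has not seen."""
    scored = (
        (dot(user_vec, vec), item_id)
        for item_id, vec in item_vecs.items()
        if item_id not in seen
    )
    return [item_id for _, item_id in heapq.nlargest(n, scored)]


# Hypothetical 2-dimensional embeddings (made-up numbers).
item_vecs = {
    "action_movie": [0.9, 0.1],
    "romcom": [0.1, 0.9],
    "thriller": [0.8, 0.3],
    "documentary": [0.4, 0.4],
}
user_vec = [1.0, 0.2]

recommendations = top_n(user_vec, item_vecs, n=2, seen={"action_movie"})
```

In a real system the scoring function would typically be a learned ranking model, and the candidate set would come from a retrieval stage rather than a full scan of the catalog.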

Glossary

Parquet: A Column-Oriented Data Format

- Apache Parquet is an open-source, columnar data file format designed for efficient storage and retrieval.

- Organizing data by column enhances compression, speeds analytics, and supports complex structures.

- Parquet’s benefits include space savings, faster queries, and compatibility with advanced nested data types, making it particularly effective for analytics tasks, as opposed to row-based formats like CSV.

- It’s a popular choice for interactive and serverless technologies such as AWS Athena and Google BigQuery, bringing efficiency and cost savings to large datasets.

Optimized Row Columnar (ORC) Format for Advertising Data

- The Optimized Row Columnar (ORC) file format, integrated with Apache Hive, excels in efficiently storing and analyzing large datasets, particularly for advertising purposes within the Hadoop ecosystem.

- ORC’s advantages include storage of extensive advertising data, rapid performance analysis, effective targeting, fraud detection, historical trend analysis, integration with various systems, scalability, and more, making it a crucial asset for modern advertising infrastructure platforms.

OLAP

- OLAP: OLAP functions enable the analysis of multidimensional data, which can be crucial in a context like advertising, where you want to understand user behavior, campaign performance, etc., across various dimensions like time, geography, demography, and so on.

- OLAP capabilities are most commonly associated with databases that support analytical processing, although some of the mentioned functions or their equivalents are available in various RDBMS (Relational Database Management Systems) as well.

- MySQL:

- MySQL, especially in its earlier versions, did not have robust OLAP functions. Starting with MySQL 8.0, it supports window functions similar to those in other databases, including RANK(), LEAD(), LAG(), and moving averages computed over window frames.

- Example:

SELECT advertiser, impressions, RANK() OVER (PARTITION BY advertiser ORDER BY impressions DESC) AS impression_rank FROM ad_data;

- PostgreSQL:

- PostgreSQL has a more extensive set of OLAP functions compared to earlier versions of MySQL. It supports window functions and CTEs (Common Table Expressions) which can be used to create more complex OLAP-style queries.

- Example:

WITH MonthlySpend AS ( SELECT date_trunc('month', ad_date) as month, SUM(spend) as total_spend FROM ad_data GROUP BY date_trunc('month', ad_date) ) SELECT month, total_spend, LAG(total_spend) OVER(ORDER BY month) as last_month_spend FROM MonthlySpend;

- Oracle:

- Oracle has robust OLAP capabilities with a wide variety of OLAP and analytic functions. GROUP BY extensions such as ROLLUP and CUBE are natively supported.

- Example:

SELECT advertiser, SUM(impressions) AS total_impressions FROM ad_data GROUP BY ROLLUP(advertiser);

- SQL Server:

- SQL Server also has strong OLAP features and supports CTEs, window functions, GROUPING SETS, and the PIVOT operation, which can be used for some OLAP-style queries.

- Example:

SELECT advertiser, ad_date, SUM(clicks) AS total_clicks FROM ad_data GROUP BY GROUPING SETS (advertiser, ad_date);

- Databases designed explicitly for OLAP operations, like Microsoft Analysis Services, Oracle OLAP, or IBM Cognos, have even more advanced analytical capabilities, often with their own specialized query languages.

- Most of these functions are used in relational databases, but their implementation and exact syntax might vary across different databases. If you are considering using these functions, always consult the specific documentation for your RDBMS to ensure you’re using the correct syntax and to understand any limitations or specifics of that system.

Cheat Sheet

Components Cheatsheet

Rate limiters

- A rate limiter sets a limit for the number of requests a service will fulfill. It will throttle requests that cross this threshold.

- Rate limiters are an important line of defense for services and systems. They prevent services from being flooded with requests. By disallowing excessive requests, they can mitigate resource consumption.
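One common way to implement this kind of throttling is a token bucket. The sketch below is a minimal, single-process illustration under assumed parameters (`rate` tokens refilled per second, a burst `capacity`); the class name and the injectable `clock` argument are hypothetical choices made so the example can be exercised deterministically.

```python
import time


class TokenBucket:
    """Allow up to `capacity` requests in a burst, refilled at `rate` per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.last = clock()

    def allow(self, cost=1.0):
        """Return True if the request may proceed, False if it is throttled."""
        now = self.clock()
        # Refill according to the time elapsed since the last call.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A distributed deployment would usually keep the bucket state in a shared store so that all service instances enforce the same limit; that part is omitted here.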

Monitoring systems

- Monitoring systems are software that allow system administrators to monitor infrastructure. This building block of system design is important because it creates one centralized location for observing the overall performance of a potentially large system of computers in real time.

- Monitoring systems should have the ability to monitor factors such as:

- CPUs

- Server memory

- Routers

- Switches

- Bandwidth

- Applications

- Performance and availability of important network devices

SQL vs NoSQL

- When to pick a SQL database?

- If you are writing a stock trading, banking, or a Finance-based app or you need to store a lot of relationships, for instance, when writing a social networking app like Facebook, then you should pick a relational database. Here’s why:

- Transactions & Data Consistency

- If you are writing software that has anything to do with money or numbers, that makes transactions, ACID, data consistency super important to you. Relational DBs shine when it comes to transactions & data consistency. They comply with the ACID rule, have been around for ages & are battle-tested.

- Storing Relationships

- If your data has a lot of relationships, like “friends in Seattle” or “friends who like coding”, a relational database is a strong fit for storing this kind of data.

- Relational databases are built to store relationships. They have been tried & tested & are used by big guns in the industry like Facebook as the main user-facing database.

- Popular relational databases:

- MySQL

- Microsoft SQL Server

- PostgreSQL

- MariaDB

- When to pick a NoSQL database

- Here are a few reasons why you’d want to pick a NoSQL database:

- Handling A Large Number Of Read Write Operations

- Look towards NoSQL databases when you need to scale fast. For example, when there are a large number of read-write operations on your website and when dealing with a large amount of data, NoSQL databases fit best in these scenarios. Since they have the ability to add nodes on the fly, they can handle more concurrent traffic and large amounts of data with minimal latency.

- Running data analytics: NoSQL databases also fit well for data analytics use cases, where we have to deal with an influx of massive amounts of data.

- Popular NoSQL databases:

- MongoDB

- Redis

- Cassandra

- HBase

Blob storage

- Blob, or binary large object, storage is a storage solution for unstructured data. This data can be mostly any type: photos, audio, multimedia, executable code, etc.

- Blob storage uses flat data organization patterns, meaning there is no hierarchy of directories or sub-directories.

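- Many blob storage services, such as Azure Blob Storage and Amazon S3, are designed around a “write once, read many” (WORM) access pattern: an object is written whole and then read many times, rather than modified in place. Both services also offer optional immutability settings that prevent an object from being changed or deleted once written, which protects important data.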

- Blob stores are ideal for any application that is data heavy. Some of the most notable users of blob stores are:

- YouTube (Google Cloud Storage)

- Netflix (Amazon S3)

- Facebook (Tectonic)

- These services generate enormous amounts of data through large media files. It is estimated that YouTube alone generates a petabyte (1024 terabytes!) of data every day.

Key value stores

- A key value store, or key value database, is a storage system similar to a hash table or dictionary. Hash tables and dictionaries are associative: they store information as (key, value) pairs. Information can easily be retrieved because every value is linked to a key.

- Distributed key value stores are often built as distributed hash tables (DHTs).

- Distributed hash tables are just decentralized versions of hash tables. This means they share the key-value pair and lookup methods.

- The keys in a key value store treat data as a single opaque collection. The stored data could be a blob, server name, image, or anything the user wants to store. The values are referred to as opaque data types since they are effectively hidden by their method of storage. It is important that data types are opaque in order to support concepts like information hiding and object-oriented programming (OOP).

- Examples of contemporary, large-scale key value stores are Amazon’s DynamoDB and Apache Cassandra.
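As a rough illustration of how keys can be spread across nodes, the sketch below partitions a key value store over several in-memory shards by hashing the key. It is a toy under stated assumptions: the `ShardedKVStore` class is hypothetical, each shard is just a Python dict standing in for a node, and simple modulo placement is used instead of the consistent hashing that real systems tend to prefer, since modulo placement moves most keys whenever the shard count changes.

```python
import hashlib


class ShardedKVStore:
    """Toy key value store that hashes each key to one of several shards."""

    def __init__(self, num_shards=4):
        # Each dict stands in for a separate storage node.
        self.shards = [dict() for _ in range(num_shards)]

    def _shard_for(self, key):
        # Use a stable hash so that placement does not change between runs.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.shards)
        return self.shards[index]

    def put(self, key, value):
        # The value is opaque to the store: it is saved and returned as-is.
        self._shard_for(key)[key] = value

    def get(self, key, default=None):
        return self._shard_for(key).get(key, default)


store = ShardedKVStore(num_shards=4)
store.put("user:42:avatar", b"\x89PNG...")
store.put("server:eu-1", "10.0.0.7")
```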

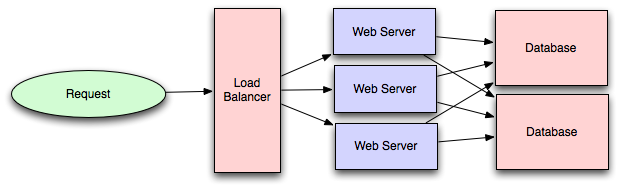

Load balancer

- Load balancing is a key building block of system design. It involves distributing tasks across a set of resources.

- There may be millions of requests per second to a system on average. Load balancers ensure that all of these requests can be processed by dividing them between available servers.

- This way, the servers will have a more manageable stream of tasks, and it is less likely that one server will be overburdened with requests. Evenly distributing the computational load allows for faster response times and the capacity for more web traffic.

- Load balancers are a crucial part of the system design process. They enable several key properties required for modern web design.

- Scaling: Load balancers facilitate scaling, either up or down, by disguising changes made to the number of servers.

- Availability: By dividing requests, load balancers maintain availability of the system even in the event of a server outage.

- Performance: Directing requests to servers with low traffic decreases response time for the end user.
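The behavior described above can be sketched with a round-robin balancer that skips servers marked as unhealthy. This is a simplified, single-process illustration; the class and method names are assumptions, and real load balancers add health checks, weights, and connection tracking.

```python
import itertools


class RoundRobinBalancer:
    """Hand out servers in rotation, skipping any that are marked down."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._cycle = itertools.cycle(self._servers)

    def mark_down(self, server):
        self._healthy.discard(server)

    def mark_up(self, server):
        if server in self._servers:
            self._healthy.add(server)

    def next_server(self):
        """Return the next healthy server in rotation."""
        if not self._healthy:
            raise RuntimeError("no healthy servers available")
        # The cycle is infinite, and at least one server is healthy,
        # so this loop always returns.
        for server in self._cycle:
            if server in self._healthy:
                return server
```

Because callers only ever ask for `next_server()`, servers can be added to or removed from the healthy set without clients noticing, which is the "disguising" property mentioned under scaling.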

Kafka

- Apache Kafka as a Streaming Platform:

- While Kafka does function as a messaging system, it goes beyond simple message queuing by providing features that are crucial for handling real-time data streams, making it suitable for use as a streaming platform:

- Event Storage: Kafka retains data for a specified time period, allowing applications to consume data at their own pace. This feature is crucial for building historical data pipelines.

- Scalability: Kafka is designed to handle high throughput and can be scaled horizontally across multiple nodes or clusters, making it suitable for large-scale streaming scenarios.

- Fault Tolerance: Kafka replicates data across multiple brokers, ensuring data availability even in the face of hardware failures.

- Event Time Processing: Kafka supports event-time processing, which is a key requirement for many streaming use cases, such as windowed aggregations and accurate time-based analytics.

- Exactly-Once Processing: Kafka introduced support for exactly-once message processing, ensuring that events are neither lost nor duplicated during processing.

- Streams API: Kafka provides the Kafka Streams API, which enables developers to build complex streaming applications, including transformations, joins, and aggregations.

- Integration with Ecosystem: Kafka integrates well with other big data and streaming tools, allowing data to be ingested from various sources and consumed by different processing engines.
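The event storage property is easier to see in code. The toy below models a single-partition, append-only log in which each consumer group tracks its own offset, so different consumers can read the same retained events at their own pace. It is a conceptual sketch only: `TopicLog` is a hypothetical class, not the Kafka client API, and it leaves out retention limits, partitions, and replication.

```python
class TopicLog:
    """Toy single-partition log with per-consumer-group offsets."""

    def __init__(self):
        self._records = []   # retained events, in arrival order
        self._offsets = {}   # consumer group -> next offset to read

    def append(self, record):
        """Store a record and return its offset in the log."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        """Return the next batch for this group and advance its offset."""
        start = self._offsets.get(group, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


log = TopicLog()
for event in ("click:ad1", "click:ad2", "view:ad3"):
    log.append(event)
```

Reading does not remove records, which is the main difference from a traditional message queue: a slow analytics consumer and a fast billing consumer can both see every event.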

Streaming Platform:

- A streaming platform is a software framework designed to process and analyze continuous streams of data in real-time.

- It enables organizations to ingest, process, and respond to data as it arrives, allowing for timely insights and actions. Here are a few popular streaming platforms:

- Apache Kafka:

- Advantages: High throughput, scalable, fault-tolerant, and capable of handling millions of events per second.

- Disadvantages: Complex to set up and manage, requires tuning for optimal performance.

- Amazon Kinesis:

- Advantages: Fully managed, integrates well with AWS ecosystem, good scalability.

- Disadvantages: Less flexibility compared to Kafka, potentially higher costs.

Streaming Analytics Framework:

- Apache Flink:

- Advantages: A powerful open-source stream processing framework that supports both batch and stream processing, providing event-time processing, fault tolerance, and state management.

- Disadvantages: Requires more understanding of stream processing concepts, may have a steeper learning curve compared to simpler stream processing tools.

Explanation:

- Streaming platforms, such as Apache Kafka and Amazon Kinesis, serve as infrastructure for handling real-time data. They offer capabilities to ingest data, manage it, and distribute it to various consumers or processing pipelines.

- Streaming analytics frameworks, like Apache Flink, extend the functionality of streaming platforms by allowing data processing, transformations, and complex analytics on the incoming data streams. These frameworks enable real-time data analysis and insights extraction.

- In summary, streaming platforms and streaming analytics frameworks together let organizations react swiftly to events, make informed decisions, and provide dynamic services based on the most up-to-date information.

Real-Time Stream Processing:

- Apache Flink:

- Advantages: Low latency, exactly-once processing semantics, strong community support.

- Disadvantages: Can be complex to configure, high resource consumption.

- Apache Storm:

- Advantages: Low-latency processing, scalable, reliable.

- Disadvantages: Lacks native exactly-once processing, older and losing community support.

Serverless Computing (Task Runners):

- AWS Lambda:

- Advantages: Fully managed, scales automatically, pay-per-use pricing.

- Disadvantages: Cold starts can add latency, limitations on execution time and resources.

- Azure Functions:

- Advantages: Integrates well with Microsoft products, flexible pricing, multiple language support.

- Disadvantages: Cold starts, less mature than AWS Lambda.

Data Storage (Click Capture, Aggregated Data):

- Amazon Redshift:

- Advantages: Fully managed, excellent for analytics, integrates with AWS.

- Disadvantages: Can be expensive, less suitable for unstructured data.

- Apache Cassandra:

- Advantages: Highly scalable, fault-tolerant, good for write-heavy workloads.

- Disadvantages: Complex to manage, potential consistency issues.

Content Delivery Network (CDN):

- Amazon CloudFront:

- Advantages: Integrates with other AWS services, global reach, good performance.

- Disadvantages: Costs can escalate, less flexibility in certain configurations.

- Cloudflare:

- Advantages: Strong security features, broad network, performance optimization features.

- Disadvantages: Some configurations can be complex, potential issues with specific ISPs.

Analytics Service:

- Tableau:

- Advantages: User-friendly, powerful visualization capabilities.

- Disadvantages: Can be expensive, heavy resource consumption.

- Power BI:

- Advantages: Integrates well with Microsoft products, robust reporting capabilities.

- Disadvantages: Less flexible with non-Microsoft products, licensing costs.

MapReduce for Reconciliation:

- Apache Hadoop:

- Advantages: Scalable, robust, well-suited for batch processing.

- Disadvantages: Complex to set up and manage, not suited for real-time processing.

- Apache Spark:

- Advantages: Often faster than Hadoop MapReduce because it keeps intermediate data in memory, can handle near-real-time processing, supports various languages.

- Disadvantages: Requires substantial resources, can be complex to optimize.

Caching Systems:

- Redis:

- Advantages: High-performance, in-memory data store, supports various data structures, optional persistence to disk.

- Disadvantages: Limited to available RAM, cluster mode can add complexity.

- Memcached:

- Advantages: Easy to use, fast, in-memory key-value store, suitable for simple caching needs.

- Disadvantages: No persistence, less versatile than Redis.

Non-Relational Databases (NoSQL):

- MongoDB:

- Advantages: Flexible schema, horizontal scalability, good for unstructured data.

- Disadvantages: Potential consistency issues, less suited for complex transactions.

- Apache Cassandra:

- Advantages: Write-optimized, highly scalable, distributed.

- Disadvantages: Read latency can be high, complex to manage.

Relational Databases (SQL):

- MySQL:

- Advantages: Well-established, wide community support, ACID compliant.

- Disadvantages: Scalability limitations, can struggle with very large datasets.

- PostgreSQL:

- Advantages: Extensible, strong consistency, robust feature set.

- Disadvantages: Can be resource-intensive, less suitable for write-heavy loads.

Columnar Databases:

- Amazon Redshift:

- Advantages: Optimized for analytics, compresses well, integrates with AWS ecosystem.

- Disadvantages: Less suited for transactional workloads, can be expensive.

- Apache HBase:

- Advantages: Scalable, good for real-time read/write access.

- Disadvantages: Complexity in management, consistency can be a challenge.

Parquet (Columnar File Format):

- Apache Parquet:

- Advantages: Efficient for analytics, supports schema evolution, compresses well.

- Disadvantages: Not designed for transactional workloads, less suitable for frequent writes.

Summary:

- Caching systems are crucial for improving read performance, with Redis being more versatile and Memcached being more lightweight.

- Non-Relational databases are ideal for unstructured or semi-structured data, where flexibility and scalability are key.

- Relational databases are preferred for structured data with ACID requirements.

- Columnar databases and Parquet files are optimized for analytical processing, where reading large volumes of data efficiently is vital.

Choosing the right technology for each component depends on the specific use cases, data models, scalability and real-time processing requirements, budget, and existing technology stack. By understanding the advantages and disadvantages of each option, you can tailor the design to fit the unique needs of the system.

System Design Cheatsheet

Picking the right architecture = Picking the right battles + Managing trade-offs

Basic Steps

1) Clarify and agree on the scope of the system

- Use cases (descriptions of sequences of events that, taken together, lead to a system doing something useful)

- Who is going to use it?

- How are they going to use it?

- Constraints

- Mainly identify traffic and data handling constraints at scale.

- Scale of the system (requests per second, request types, data written per second, data read per second)

- Special system requirements such as multi-threading, read or write oriented.

2) High level architecture design (Abstract design)

- Sketch the important components and the connections between them, but don’t go into too much detail.

- Application service layer (serves the requests)

- List the different services required.

- Data storage layer

- e.g., a scalable system usually includes a web server (load balancer), a service layer (service partition), a database (master/slave database cluster), and caching systems.

3) Component Design

- Component + specific APIs required for each of them.

- Object oriented design for functionalities.

- Map features to modules: One scenario for one module.

- Consider the relationships among modules:

- Certain functions must have a unique instance (singletons).

- Core object can be made up of many other objects (composition).

- One object is another object (inheritance)

- Database schema design.

4) Understanding Bottlenecks

- Perhaps your system needs a load balancer and many machines behind it to handle the user requests.

- Or maybe the data is so huge that you need to distribute your database on multiple machines. What are some of the downsides that occur from doing that?

- Is the database too slow and does it need some in-memory caching?

5) Scaling your abstract design

- Vertical scaling

- You scale by adding more power (CPU, RAM) to your existing machine.

- Horizontal scaling

- You scale by adding more machines into your pool of resources.

- Caching

- Load balancing helps you scale horizontally across an ever-increasing number of servers, but caching will enable you to make vastly better use of the resources you already have, as well as making otherwise unattainable product requirements feasible.

- Application caching requires explicit integration in the application code itself. Usually it will check if a value is in the cache; if not, retrieve the value from the database.

- Database caching tends to be “free”. When you flip your database on, you get a default configuration that provides some degree of caching and performance. Those initial settings are optimized for a generic use case, and by tuning them to your system’s access patterns you can generally gain a great deal of performance.

- In-memory caches are most potent in terms of raw performance. This is because they store their entire set of data in memory and accesses to RAM are orders of magnitude faster than those to disk. eg. Memcached or Redis.

- e.g., precalculating results (such as the number of visits from each referring domain for the previous day).

- e.g., pre-generating expensive indexes (such as suggested stories based on a user’s click history).

- e.g., storing copies of frequently accessed data in a faster backend (such as Memcached instead of PostgreSQL).
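The application caching flow described in this list (check the cache first, fall back to the database on a miss) is often called cache-aside. Below is a minimal in-process sketch with least-recently-used eviction; the `CacheAside` class and the `load_from_db` callback are hypothetical names, and a real deployment would more likely use Memcached or Redis as the cache.

```python
from collections import OrderedDict


class CacheAside:
    """Check an in-memory LRU cache first; on a miss, load from the database."""

    def __init__(self, load_from_db, max_items=1024):
        self._load = load_from_db      # slow path, e.g. a SQL query
        self._max_items = max_items
        self._cache = OrderedDict()    # key -> value, oldest entry first
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._cache:
            self._cache.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        value = self._load(key)
        self._cache[key] = value
        if len(self._cache) > self._max_items:
            self._cache.popitem(last=False)  # evict the least recently used
        return value
```

The sketch ignores invalidation: if the underlying row changes, the cached copy stays stale until it is evicted, which is the main trade-off of this pattern.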

- Load balancing

- Public servers of a scalable web service are hidden behind a load balancer. This load balancer evenly distributes load (requests from your users) onto your group/cluster of application servers.

- Types: Smart client (hard to get it perfect), Hardware load balancers ($$$ but reliable), Software load balancers (hybrid - works for most systems)

- Database replication

- Database replication is the frequent copying of data from a database on one server to a database on another, so that all users share the same level of information. The result is a distributed database in which users can access data relevant to their tasks without interfering with the work of others. The main design concern is keeping the replicas consistent, so that users do not see ambiguous or conflicting data.

- Database partitioning

- Partitioning of relational data usually refers to decomposing your tables either row-wise (horizontally) or column-wise (vertically).

- Map-Reduce

- For sufficiently small systems you can often get away with ad hoc queries on a SQL database, but that approach may not scale up trivially once the quantity of data stored or the write load requires sharding your database, and it will usually require dedicated slaves for the purpose of performing these queries (at which point, maybe you’d rather use a system designed for analyzing large quantities of data, rather than fighting your database).

- Adding a map-reduce layer makes it possible to perform data and/or processing intensive operations in a reasonable amount of time. You might use it for calculating suggested users in a social graph, or for generating analytics reports. eg. Hadoop, and maybe Hive or HBase.
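The shape of a map-reduce job can be shown on a single machine. The sketch below counts visits per referring domain, a typical analytics report, using explicit map, shuffle, and reduce phases. The log line format (`"<path> <referrer-url>"`) and the function names are assumptions made for illustration; a framework such as Hadoop would run the same phases in parallel across many machines.

```python
from collections import defaultdict
from urllib.parse import urlparse


def map_phase(log_line):
    """Emit (referring_domain, 1) for one '<path> <referrer-url>' log line."""
    _path, referrer = log_line.split()
    yield urlparse(referrer).netloc, 1


def shuffle(pairs):
    """Group all emitted values by key, as the framework would between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)


def run_job(lines):
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


logs = [
    "/home https://news.example.com/a",
    "/home https://search.example.org/q",
    "/about https://news.example.com/b",
]
visits_per_domain = run_job(logs)
```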

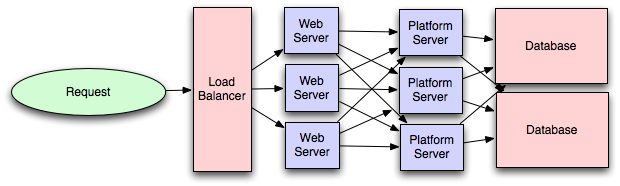

- Platform Layer (Services)

- Separating the platform and web application allows you to scale the pieces independently. If you add a new API, you can add platform servers without adding unnecessary capacity for your web application tier.

- Adding a platform layer can be a way to reuse your infrastructure for multiple products or interfaces (a web application, an API, an iPhone app, etc) without writing too much redundant boilerplate code for dealing with caches, databases, etc.

Key topics for designing a system

1) Concurrency

- Do you understand threads, deadlock, and starvation? Do you know how to parallelize algorithms? Do you understand consistency and coherence?

2) Networking

- Do you roughly understand IPC and TCP/IP? Do you know the difference between throughput and latency, and when each is the relevant factor?

3) Abstraction

- You should understand the systems you’re building upon. Do you know roughly how an OS, file system, and database work? Do you know about the various levels of caching in a modern OS?

4) Real-World Performance

- You should be familiar with the speed of everything your computer can do, including the relative performance of RAM, disk, SSD and your network.

5) Estimation

- Estimation, especially in the form of a back-of-the-envelope calculation, is important because it helps you narrow down the list of possible solutions to only the ones that are feasible. Then you have only a few prototypes or micro-benchmarks to write.
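A back-of-the-envelope calculation can be as simple as the sketch below. All input numbers (10 million daily active users, 20 requests per user per day, 2 KB written per request, a peak factor of 2) are made-up assumptions chosen only to show the arithmetic.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def estimate(daily_active_users, requests_per_user, bytes_per_request, peak_factor=2.0):
    """Rough traffic and storage numbers from a handful of assumed inputs."""
    requests_per_day = daily_active_users * requests_per_user
    avg_qps = requests_per_day / SECONDS_PER_DAY
    return {
        "avg_qps": avg_qps,
        "peak_qps": avg_qps * peak_factor,
        "storage_gb_per_day": requests_per_day * bytes_per_request / 1e9,
    }


# Assumed workload: 10M users x 20 requests/day x 2 KB per request.
numbers = estimate(10_000_000, 20, 2_000)
```

With these inputs the system averages a little over 2,300 requests per second and writes about 400 GB per day, which already rules out a single small database server and suggests planning for partitioning or tiered storage.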

6) Availability & Reliability

- Are you thinking about how things can fail, especially in a distributed environment? Do you know how to design a system to cope with network failures? Do you understand durability?

Web App System design considerations:

- Security (CORS)

- Using CDN

- A content delivery network (CDN) is a system of distributed servers (network) that deliver webpages and other Web content to a user based on the geographic locations of the user, the origin of the webpage and a content delivery server.

- This service is effective in speeding the delivery of content of websites with high traffic and websites that have global reach. The closer the CDN server is to the user geographically, the faster the content will be delivered to the user.

- CDNs also provide protection from large surges in traffic.

- Full Text Search

- Using Sphinx/Lucene/Solr, which achieve fast search responses because they search a prebuilt index instead of scanning the text directly.

- Offline support/Progressive enhancement

- Service Workers

- Web Workers

- Server Side rendering

- Asynchronous loading of assets (Lazy load items)

- Minimizing network requests (HTTP/2 + bundling/sprites etc.)

- Developer productivity/Tooling

- Accessibility

- Internationalization

- Responsive design

- Browser compatibility
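The full-text search item in the list above relies on searching an index rather than the raw text. A minimal sketch of that idea is an inverted index that maps each term to the set of documents containing it. The tokenizer and function names here are illustrative assumptions; engines such as Lucene add ranking, stemming, and compressed on-disk structures on top of the same principle.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents that contain every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {
    1: "Load balancers distribute requests across servers.",
    2: "Caching reduces load on the database.",
    3: "A CDN caches content close to users.",
}
index = build_index(docs)
```

A query such as `search(index, "load database")` touches only two posting sets instead of scanning every document, which is why lookups stay fast as the corpus grows.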

Working Components of Front-end Architecture

- Code

- HTML5/WAI-ARIA

- CSS/Sass Code standards and organization

- Object-Oriented approach (how do objects break down and get put together)

- JS frameworks/organization/performance optimization techniques

- Asset Delivery - Front-end Ops

- Documentation

- Onboarding Docs

- Styleguide/Pattern Library

- Architecture Diagrams (code flow, tool chain)

- Testing

- Performance Testing

- Visual Regression

- Unit Testing

- End-to-End Testing

- Process

- Git Workflow

- Dependency Management (npm, Bundler, Bower)

- Build Systems (Grunt/Gulp)

- Deploy Process

- Continuous Integration (Travis CI, Jenkins)

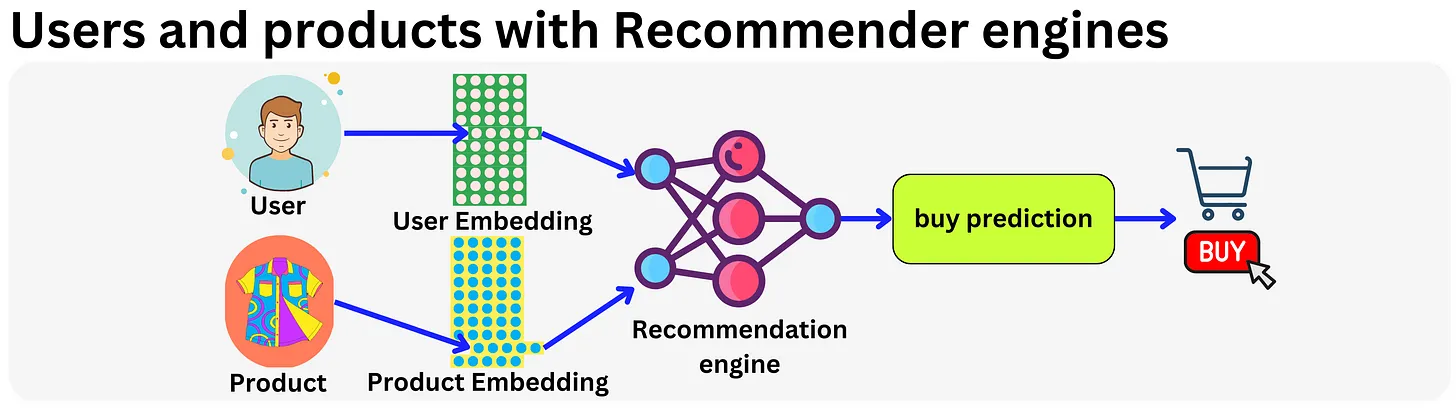

Embeddings

- Let’s start by talking about one of the most fundamental aspects of any model: its embeddings.

- An embedding is a lower-dimensional representation of the data. This makes it possible to perform efficient computations while reducing the effect of the curse of dimensionality, and it yields representations that are more robust to overfitting.

- In practice, this is just a vector living in a “latent” or “semantic” space.

- One domain where embeddings changed everything is recommender engines. It all started with latent matrix factorization methods made popular during the Netflix Prize competition, which ran from 2006 to 2009.

- The idea is to have a vector representation for each user and product and use that as base features. In fact, any sparse feature could be encoded within an embedding vector and modern recommender engines typically use hundreds of embedding matrices for different categorical variables.
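As a rough illustration of latent matrix factorization, the sketch below learns one small vector per user and per item by stochastic gradient descent so that their dot product approximates the observed ratings. The hyperparameters, the tiny ratings list, and the function names are assumptions picked for readability, not values from the Netflix Prize work.

```python
import random


def train_mf(ratings, num_users, num_items, dim=2, lr=0.05, reg=0.01, epochs=200, seed=0):
    """Learn user vectors P and item vectors Q from (user, item, rating) triples."""
    rng = random.Random(seed)
    P = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(num_users)]
    Q = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(num_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            pred = sum(p * q for p, q in zip(P[u], Q[i]))
            err = r - pred
            for k in range(dim):
                p_uk, q_ik = P[u][k], Q[i][k]
                # Gradient step on squared error with L2 regularization.
                P[u][k] += lr * (err * q_ik - reg * p_uk)
                Q[i][k] += lr * (err * p_uk - reg * q_ik)
    return P, Q


def mse(ratings, P, Q):
    """Mean squared error of the dot-product predictions on the given ratings."""
    total = 0.0
    for u, i, r in ratings:
        pred = sum(p * q for p, q in zip(P[u], Q[i]))
        total += (r - pred) ** 2
    return total / len(ratings)


# Hypothetical (user, item, rating) observations on a 1-5 scale.
ratings = [(0, 0, 5), (0, 1, 3), (1, 0, 4), (1, 2, 1), (2, 1, 2), (2, 2, 5)]
P, Q = train_mf(ratings, num_users=3, num_items=3)
```

The learned rows of `P` and `Q` are the user and item embeddings; in a larger model they would be fed as base features into downstream layers rather than used only through a dot product.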

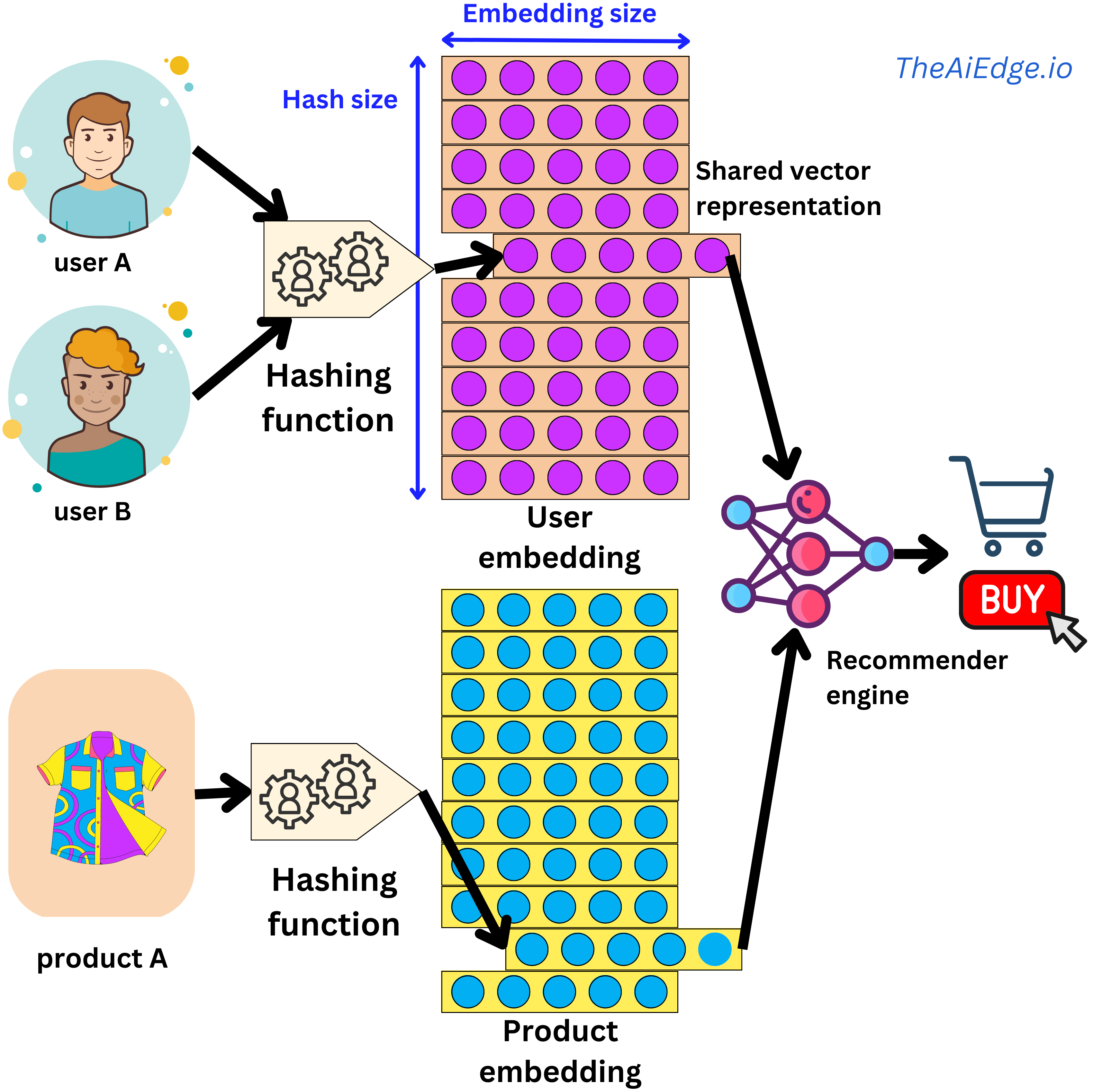

Hashing trick

- One typical problem with embeddings is that they can consume quite a bit of memory as the whole matrix tends to be loaded at once.

- In RecSys interviews, a common question is to design a model that recommends ads to users. A novice answer is to draw a simple recommender engine with a user embedding and an ads embedding, a couple of non-linear interactions, and a “click-or-not” learning task. The interviewer will then ask: “But wait, we have billions of users, how is this model going to fit on a server?!”

- A naive embedding encoding strategy assigns a vector to each category seen during training, and an “unknown” vector to all categories seen at serving time but not at training time. That can be a relatively safe strategy for NLP problems if you have a large enough training set, as the set of possible words or tokens can be quite static.

- However, in the case of recommender systems where you can potentially have hundreds of thousands of new users every day, squeezing all those new daily users into the “unknown” category would lead to poor experience for new customers. This is precisely the cold start problem!

- A typical way to handle this problem is to use the hashing trick (“Feature Hashing for Large Scale Multitask Learning“): you simply assign multiple users (or categories of your sparse variable) to the same latent representation, solving both the cold start problem and the memory cost constraint. The assignment is done by a hashing function, and by having a hash-size hyperparameter, you can control the dimension of the embedding matrix and the resulting degree of hashing collision.

- But wait, are we not going to decrease predictive performance by conflating different user behaviors? In practice the effect is marginal. Keep in mind that a typical recommender engine will be able to ingest hundreds of sparse and dense variables, so the hashing collision happening in one variable will be different from another one, and the content-based information will allow for high levels of personalization. But there are ways to improve on this trick. For example, at Meta they suggested a method to learn hashing functions to group users with similar behaviors (“Learning to Collide: Recommendation System Model Compression with Learned Hash Functions“). They also proposed a way to use multiple embedding matrices to efficiently map users to unique vector representations (“Compositional Embeddings Using Complementary Partitions for Memory-Efficient Recommendation Systems“). This last one is somewhat reminiscent of the way a pair (token, index) is uniquely encoded in a Transformer by using the position embedding trick.

- The hashing trick is heavily used in typical recommender system settings but not widely known outside that community!
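To make the hashing trick concrete, here is a minimal sketch using only the Python standard library. The table size, the embedding dimension, the choice of MD5 as the hash function and the example user ids are all assumptions made for illustration; a production system would use a faster hash and learn the table values during training.

```python
import hashlib
import random

HASH_SIZE = 1000      # rows in the shared embedding table (the hash-size hyperparameter)
EMBEDDING_DIM = 8     # size of each latent vector

random.seed(0)
# One small table shared by all users, instead of one row per user id.
embedding_table = [
    [random.gauss(0.0, 0.1) for _ in range(EMBEDDING_DIM)]
    for _ in range(HASH_SIZE)
]

def hash_bucket(raw_id, hash_size=HASH_SIZE):
    """Map an arbitrary id to a row index with a stable hash."""
    digest = hashlib.md5(raw_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % hash_size

def lookup(raw_id):
    """Return the embedding for an id, including ids never seen during training."""
    return embedding_table[hash_bucket(raw_id)]

known_user = lookup("user_42")
brand_new_user = lookup("user_created_one_second_ago")  # no "unknown" bucket needed
```

Memory is bounded by `HASH_SIZE * EMBEDDING_DIM` no matter how many users exist, and lowering `HASH_SIZE` trades memory for more collisions.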

Facebook Ads Ranking

- The below content is from Damien Benveniste’s LinkedIn post.

- At Meta, we were using many paradigms of Recommendation Engines for ADS RANKING.

- Conceptually, a recommender system is simple: you take a set of features for a user \(U\) and a set of features for an item \(I\) along with features \(C\) capturing the context at the time of the recommendation (time of the day, weekend / week day, …), and you match those features to an affinity event (e.g. did the user click on the ad or not): click or not = \(F(U, I, C)\).

- In the early days they started with Gradient Boosting models. Those models are good with dense features (e.g. age, gender, number of clicks in the last month, …) but very bad with sparse features (page Id, user Id, Ad Id, …). By the way, we often talk of the superiority of Tree based models for tabular data, well this is a real exception to the rule! Those sparse features are categorical features with literally billions of categories and very few sample events. For example, consider the time series of sequence of pages visited by a user, how do you build features to capture that information? That is why they moved to Deep Learning little by little where a page Id becomes a vector in an embedding and a sequence of page Ids can be encoded by transformers as a simple vector. And even with little information on that page, the embedding can provide a good guess by using similar user interactions to other pages.

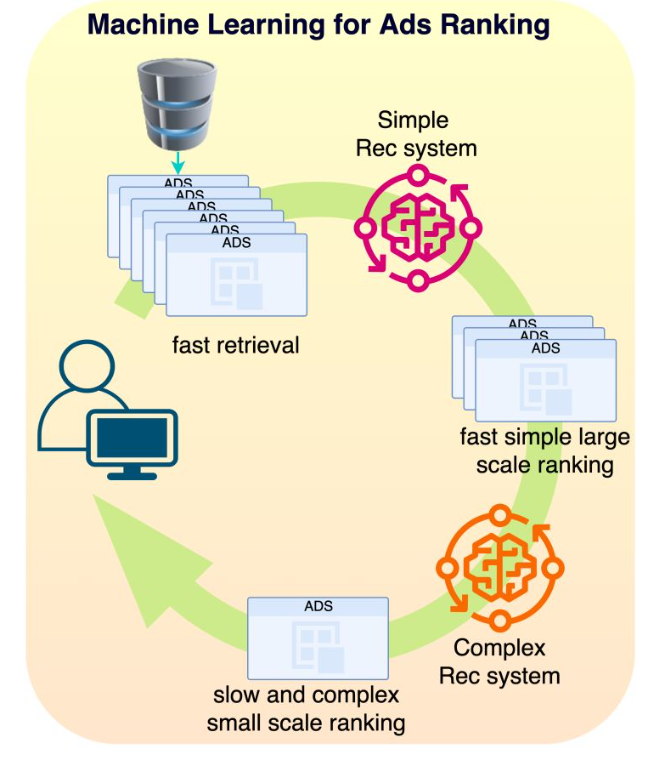

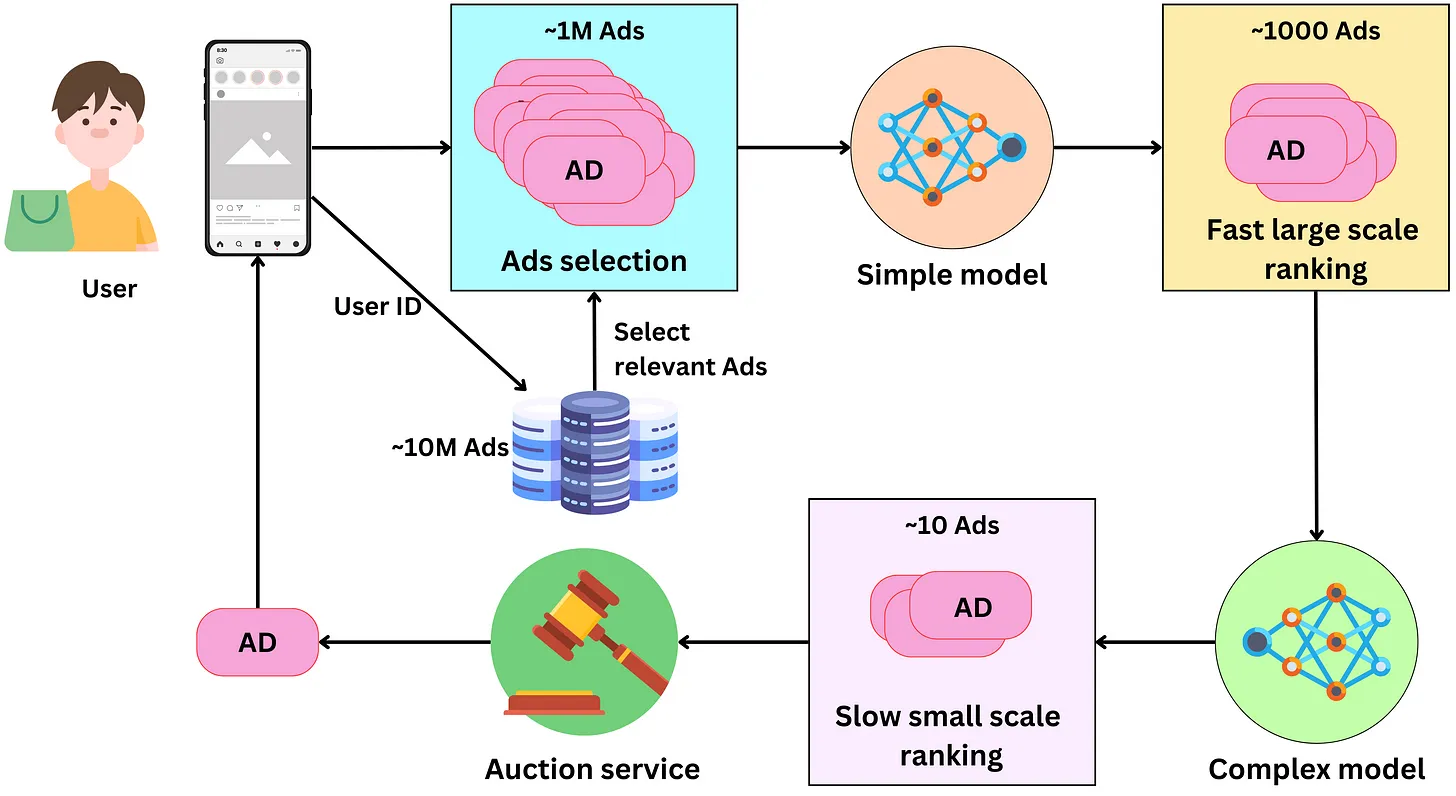

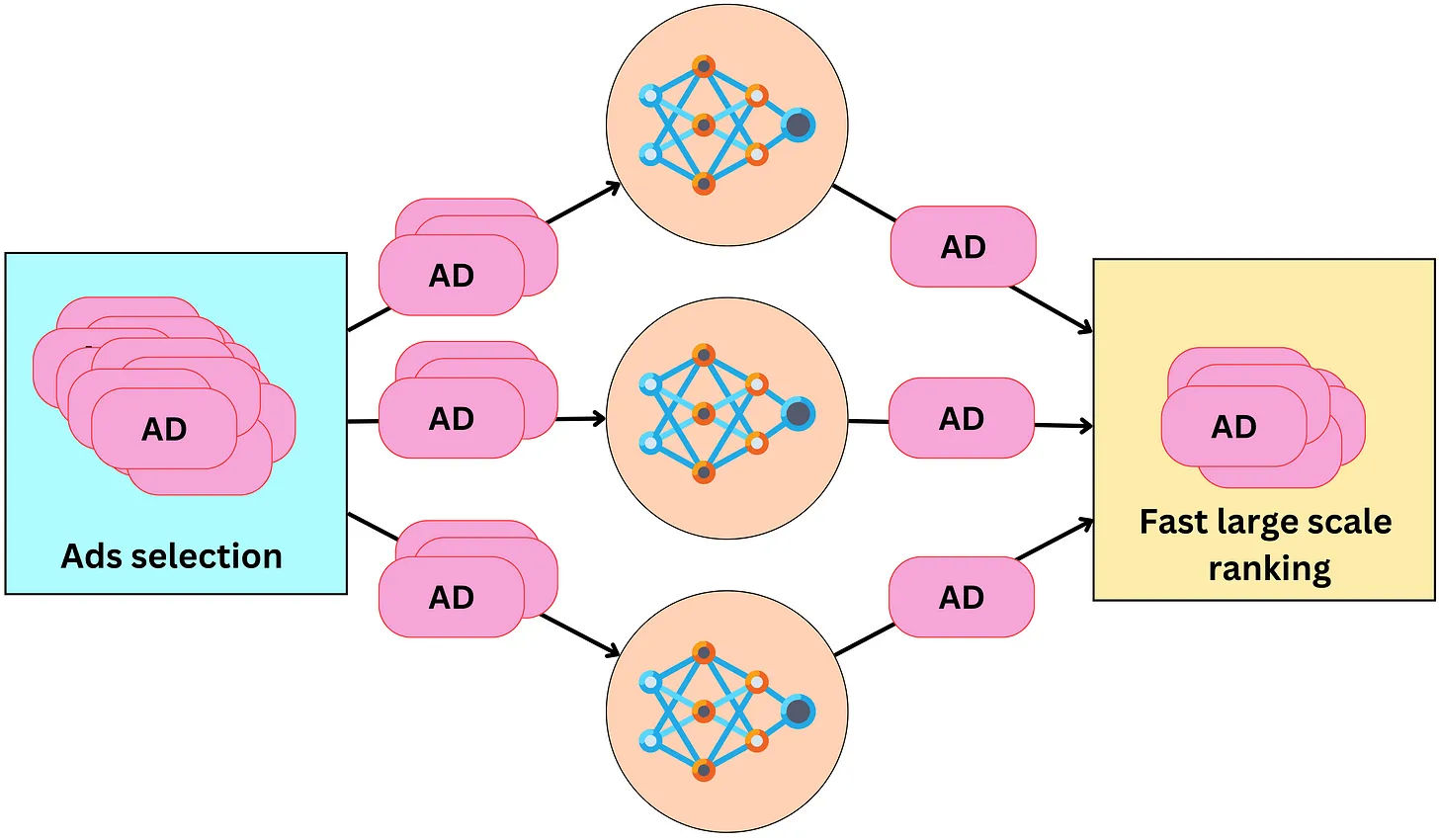

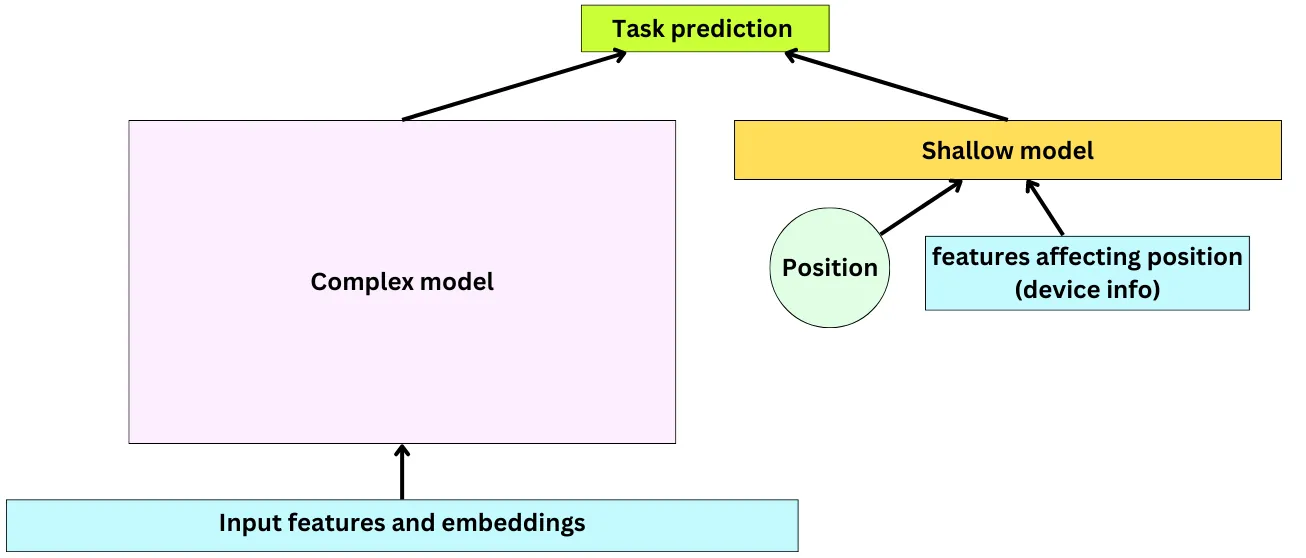

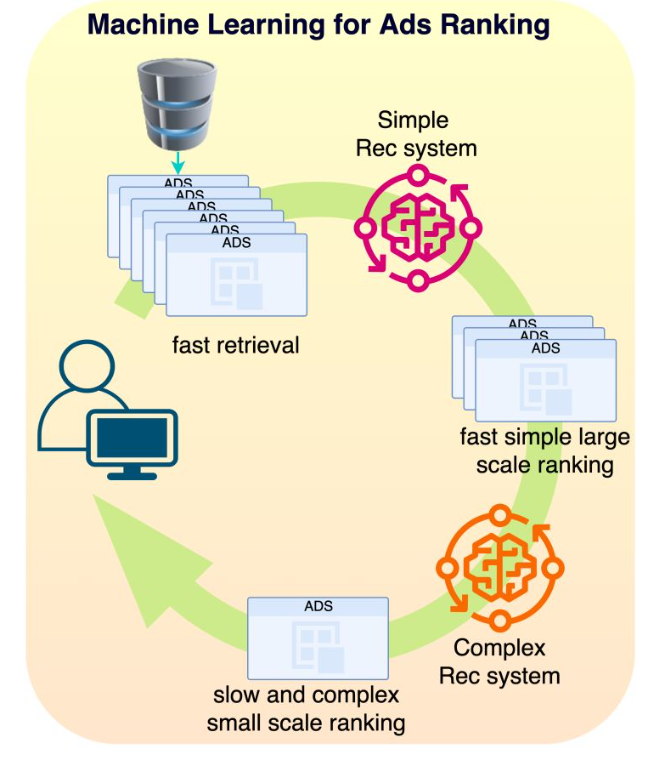

- Typical models we were using were Multi-task learning, Mixture of Experts or Multi-towers models. In Ads Ranking, the ranking happens in stages: first you select a sub-universe of ads (let’s say 1M ads) that relate to the user (very fast retrieval), then you select a subset of those ads (let’s say 1000 Ads) with a simple model (fast inference) and then you use a very complex model (slow inference) to rank the resulting ads as accurately as possible. The top ranked ad will be the one you see on your screen. We also used MIMO (multi-inputs multi-outputs) models to simultaneously train the simple and complex models for efficient two staged ranking.

- Today Facebook has ~3 billion users and ~2 billion daily active users. It is the second largest ads provider in the world after Google. 95% of Meta’s revenue comes from ads: Facebook generates ~$70B every year and Instagram ~$50B in ads alone. There are ~15 million active advertising accounts, with more than 15 million active ad campaigns running at any point in time.

- Facebook feeds contain a list of posts interlaced with ads. Why is that specific ad shown to me?

Design overview

- Conceptually, choosing the right ad for the right user is pretty simple. We pull ads from a database, rank them according to how likely the user is to click on them, run an auction process to pick the best one, and we finally present it to the user.

- The process to present one ad on the user’s screen has the following components to it:

- Selecting ads: The ads are indexed in a database and a subset of them is retrieved for a specific user. At all times, there are between 10M and 100M ads and we need to filter away ads that are not relevant to the user. Meta has access to age, gender, location, or user’s interest data that can be used as keyword filters for fast ads retrieval. Additional context information can be used to further filter the selected ads. For example, if it is winter and the user lives in Canada, we could exclude ads for swimsuits! We can expect this process to pull between 100K and 1M ads.

- Fast large scale ranking: With that many ads, we need to rank them in stages. Typically there are two stages: a first fast (per ad) large scale ranking stage and a slow (per ad) small scale ranking one. The first stage needs to be able to handle a large amount of ads (from the ads selection step) while being relatively fast for a low latency process. The model usually has a low capacity and uses a subset of the features, making it fast. The ads are scored with a probability of being clicked by the user and only the top ads are retained for the next step. Typically this process generates between 100 and 1000 ads.

- Slow small scale ranking: This step needs to handle a smaller amount of ads, so we can use a more complex model that is slower per ad. This model, being more complex, has better predictive performance than the one in the previous step, leading to a better ranking of the resulting ads. We keep only the best ranking ads for the next step. At this point, we may have on the order of 10 remaining ads.

- The auction: An advertiser only pays Facebook when the user clicks on the ad. During the campaign creation, the advertiser sets a daily budget for a certain period. From this, we can estimate the cost they are willing to pay per click (bid), and the remaining ads are ranked according to their bid and probability of click. The winning ad is presented to the user.
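The selection and two ranking stages above can be sketched as a funnel. This is only an illustration: the `Ad` class, the country filter and the two precomputed score fields are hypothetical stand-ins for the keyword filters and the fast and slow models, and the sizes are scaled down from the numbers quoted above.

```python
import heapq
from dataclasses import dataclass

@dataclass
class Ad:
    ad_id: int
    target_country: str
    cheap_score: float     # stand-in for the fast, low-capacity model
    precise_score: float   # stand-in for the slow, high-capacity model

def select_ads(ads, user_country):
    """Stage 1: keyword-style filtering, no model involved."""
    return [ad for ad in ads if ad.target_country == user_country]

def fast_rank(ads, keep):
    """Stage 2: score every candidate with the cheap model, keep the top ones."""
    return heapq.nlargest(keep, ads, key=lambda ad: ad.cheap_score)

def slow_rank(ads, keep):
    """Stage 3: re-score only the survivors with the expensive model."""
    return heapq.nlargest(keep, ads, key=lambda ad: ad.precise_score)

# Synthetic inventory with deterministic made-up scores.
ads = [
    Ad(ad_id=i,
       target_country="CA" if i % 2 == 0 else "US",
       cheap_score=(i * 37 % 100) / 100,
       precise_score=(i * 53 % 100) / 100)
    for i in range(1000)
]

candidates = select_ads(ads, "CA")
shortlist = fast_rank(candidates, keep=50)
finalists = slow_rank(shortlist, keep=5)   # these would go to the auction
```

The expensive model only ever sees `shortlist`, which is what keeps the per-request latency manageable.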

Architecture

- The models used in multi-stage ranking are recommender engines. They take as inputs a set of features about the user, the ads and the context, and they output the probability that the user will click on the ads.

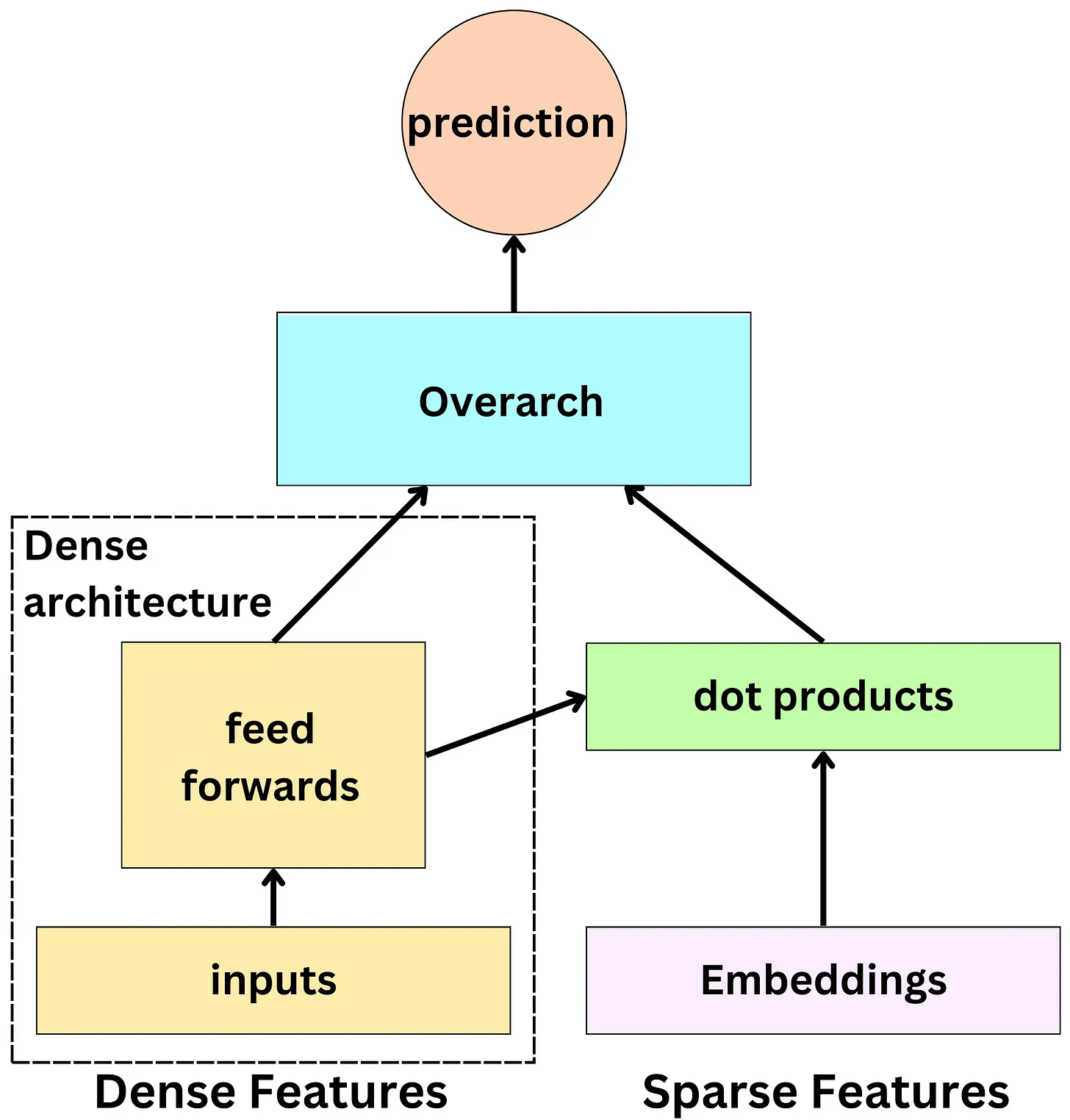

- The base architecture used at Facebook is the SparseNN architecture. The main components are as follows:

- The dense features: those are typically the continuous variables and low dimensionality categorical variables: age, gender, number of likes in the past week, …

- The sparse features: those are the high dimensionality categorical variables and they are represented by an embedding: user id, page id, ad id, …

- The dense architecture: dense features are fed to the dense architecture. Latent representations of dense features are derived from non-linear functions in dense architecture. The latent representations have the same size as sparse features’ embeddings.

- The dot product layer: reminiscent of the latent matrix factorization model, multiple dot products of pairs of dense and sparse embeddings are computed.

- The overarch: the resulting outputs are fed to a set of non-linear functions before producing a probability prediction.
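As a rough illustration of how these components fit together, here is a toy forward pass in plain Python. It is a sketch under strong assumptions, not Facebook's implementation: the weights are random, the dense architecture is a single ReLU layer, the overarch is reduced to one linear layer followed by a sigmoid, and all names and sizes are made up.

```python
import math
import random
from itertools import combinations

random.seed(0)
DIM = 4  # shared size of the dense latent vector and the sparse embeddings

def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Sparse features: one embedding table per categorical variable.
tables = {"user_id": rand_matrix(100, DIM), "ad_id": rand_matrix(100, DIM)}
# Dense architecture: projects 3 dense features to a DIM-sized latent vector.
dense_weights = rand_matrix(DIM, 3)
# Simplified overarch: maps the 3 pairwise dot products to one logit.
over_weights = [random.gauss(0.0, 0.5) for _ in range(3)]

def predict_click(dense_features, sparse_ids):
    dense_latent = [max(0.0, h) for h in matvec(dense_weights, dense_features)]
    vectors = [dense_latent] + [tables[name][idx] for name, idx in sparse_ids.items()]
    # Dot product layer: one interaction per pair of latent vectors.
    interactions = [dot(a, b) for a, b in combinations(vectors, 2)]
    logit = dot(over_weights, interactions)
    return 1.0 / (1.0 + math.exp(-logit))

p = predict_click([0.3, 1.0, 0.12], {"user_id": 7, "ad_id": 42})
```

Because the dense latent vector has the same size as the embeddings, every pair of vectors can be compared with a plain dot product.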

Detailed architecture

- For recommender systems, there are several architecture paradigms used.

- Let’s see a few detailed below:

Multi-task learning architecture (“An Overview of Multi-Task Learning in Deep Neural Networks”)

- In the context of a recommender system, Multi-task learning (MTL) architecture can be used to improve the performance of the system by simultaneously learning to recommend multiple types of items to users.

- For example, consider a movie streaming platform that wants to recommend both movies and TV shows to its users. Traditionally, the platform would train separate recommender systems for movies and TV shows, but with MTL architecture, the platform can train a single neural network to recommend both movies and TV shows at the same time.

- In this case, the shared layers of the neural network would learn the shared features and characteristics of movies and TV shows, such as genre, actors, and directors. The task-specific layers for movies would learn the specific features and characteristics of movies that are important for making accurate recommendations, such as user ratings and movie length. Similarly, the task-specific layers for TV shows would learn the specific features and characteristics of TV shows that are important for making accurate recommendations, such as episode duration and the number of seasons.

- The neural network would be trained using loss functions specific to each task, such as mean squared error for predicting user ratings for movies, and cross-entropy loss for predicting the probability of a user watching a specific TV show.

- By using MTL architecture, the recommender system can leverage the shared structure and features across multiple types of items to improve the accuracy of recommendations for both movies and TV shows, while reducing the amount of data required to train each task.

Input -> Shared Layers -> three task-specific branches:

Movie-specific Layers -> Movie Loss -> Movie Output

TV-show specific Layers -> TV-show Loss -> TV-show Output

User-specific Layers -> User Loss -> User Output

- In this architecture, the input to the neural network is user and item data, such as user ratings and item features. The shared layers of the neural network learn shared representations of the data, such as latent features that are common to both movies and TV shows.

- The movie-specific and TV-show specific layers are task-specific and learn features that are specific to movies and TV shows, respectively. For example, the movie-specific layers might learn to predict movie genres or directors, while the TV-show specific layers might learn to predict the number of seasons or the release date of a TV show.

- The user-specific layers learn features that are specific to each user, such as user preferences or viewing history. These layers take into account the user-specific data to personalize the recommendations for each user.

- Each task has its own loss function that measures the error between the predicted output and the actual output of the neural network for that task. Like we discussed earlier, the movie loss function might be mean squared error for predicting user ratings for movies, while the TV-show loss function might be cross-entropy loss for predicting the probability of a user watching a specific TV show.

- The outputs of the neural network are the predicted ratings or probabilities for each item, which are used to make recommendations to the user.
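The shared-layers-plus-heads idea can be sketched in a few lines of plain Python. This is a toy illustration with random weights and only two of the heads (movie rating and TV-show watch probability); the layer sizes, the task weights and the feature vector are assumptions.

```python
import math
import random

random.seed(0)

def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

shared_weights = rand_matrix(4, 3)   # shared layers: 3 inputs -> 4 hidden units
movie_head = rand_matrix(1, 4)       # task-specific layer: predicted movie rating
tv_head = rand_matrix(1, 4)          # task-specific layer: TV-show watch logit

def forward(features):
    hidden = [math.tanh(h) for h in matvec(shared_weights, features)]
    rating = matvec(movie_head, hidden)[0]
    watch_prob = sigmoid(matvec(tv_head, hidden)[0])
    return rating, watch_prob

def multitask_loss(features, true_rating, watched, movie_weight=1.0, tv_weight=1.0):
    """Weighted sum of the per-task losses; both gradients flow into shared_weights."""
    rating, watch_prob = forward(features)
    mse = (rating - true_rating) ** 2
    cross_entropy = -(watched * math.log(watch_prob)
                      + (1 - watched) * math.log(1.0 - watch_prob))
    return movie_weight * mse + tv_weight * cross_entropy

example = [0.5, -1.0, 2.0]
loss = multitask_loss(example, true_rating=4.0, watched=1)
```

The task weights are the usual knob for balancing tasks whose losses live on different scales.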

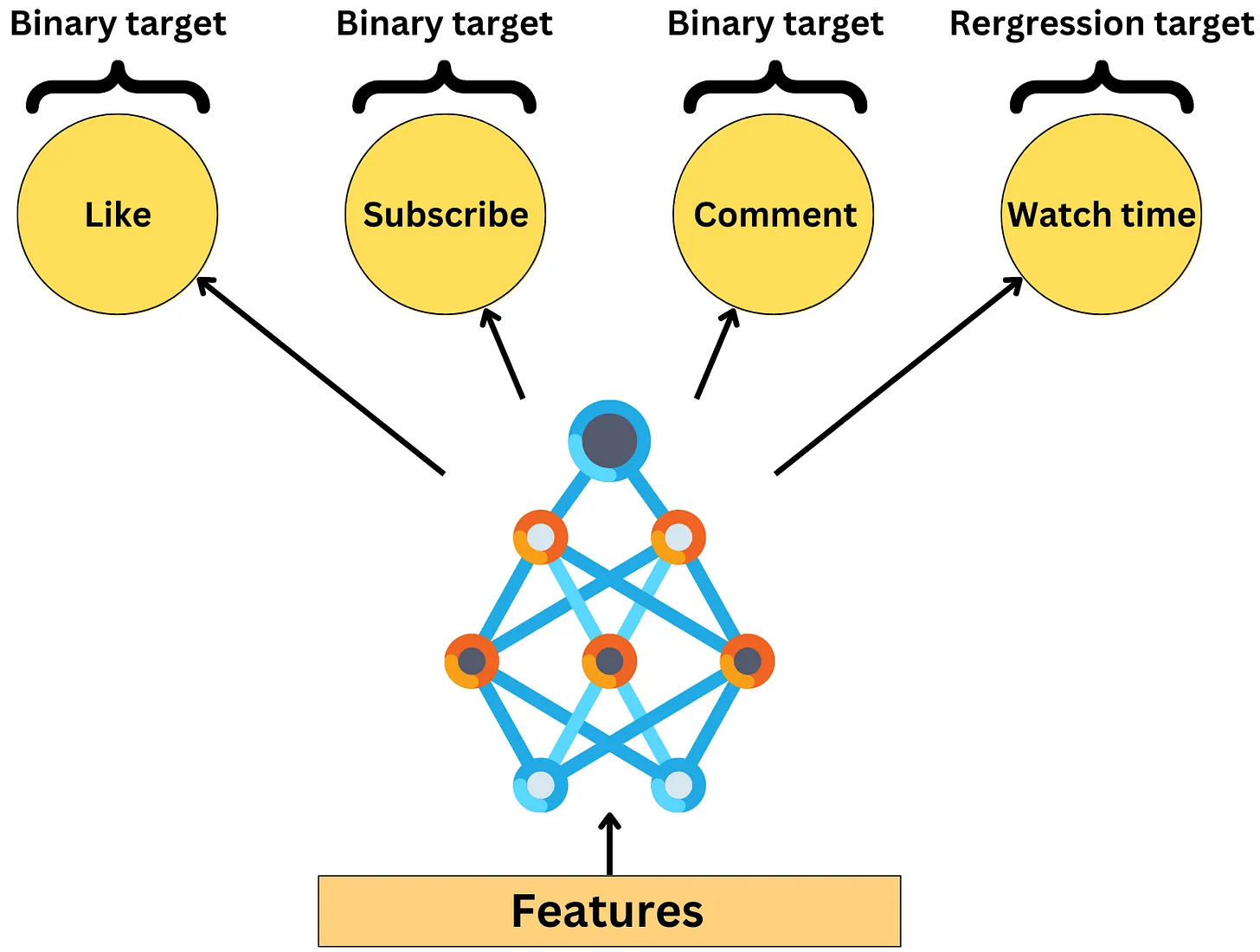

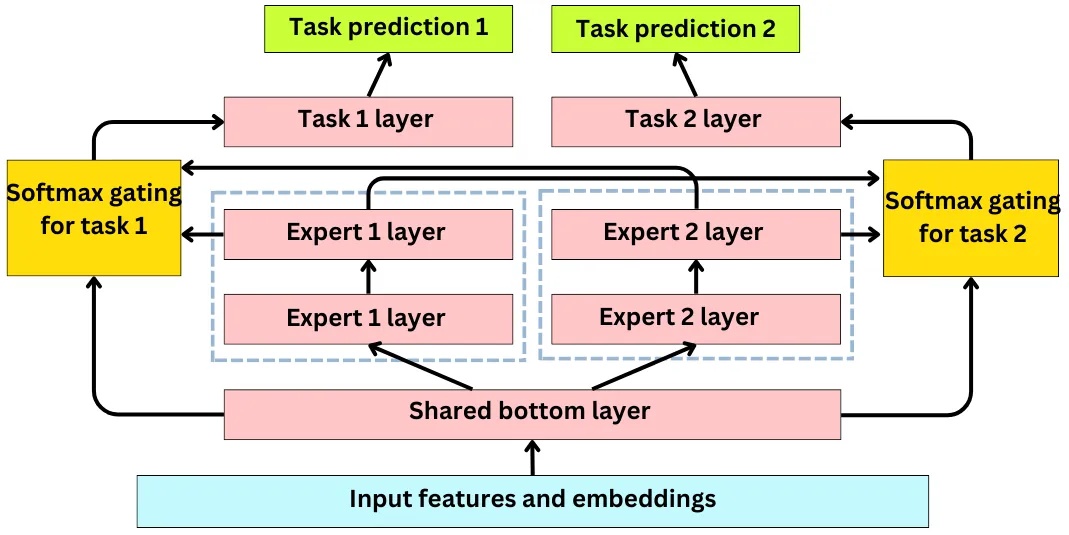

Mixture of experts (“Recommending What Video to Watch Next: A Multitask Ranking System”)

- Mixture of experts (MoE) is a type of neural network architecture that can be used for recommender systems to combine the predictions of multiple models or “experts” to make more accurate recommendations.

- In the context of a recommender system, MoE can be used to combine the predictions of multiple recommendation models that specialize in different types of recommendations. For example, one model might specialize in recommending popular items, while another model might specialize in recommending niche items.

- The MoE architecture consists of multiple “experts” and a “gate” network that learns to select which expert to use for a given input. Each expert is responsible for making recommendations for a subset of items or users. The gate network takes the input data and predicts which expert is best suited to make recommendations for that input.

- Here is an illustration of the MoE architecture for a recommender system:

Input -> Expert1, Expert2, Expert3 -> Output1, Output2, Output3 -> Gate Network (weighted combination of the expert outputs) -> Output

- In this architecture, the input is user and item data, such as user ratings and item features. The MoE consists of three experts, each of which is responsible for making recommendations for a subset of items or users.

- Each expert has its own output, which is a prediction of the user’s preference for the items in its subset. The gate network takes the input data and predicts which expert is best suited to make recommendations for that input. The gate network output is a weighted combination of the outputs of the three experts, where the weights are determined by the gate network.

- The MoE architecture allows the recommender system to leverage the strengths of multiple models to make more accurate recommendations. For example, one expert might be good at recommending popular items, while another expert might be good at recommending niche items. The gate network learns to select the expert that is best suited for a given input, based on the user’s preferences and the item’s features.

- Expert1 specializes in recommending popular movies, while Expert2 specializes in recommending niche TV shows, and Expert3 specializes in recommending new releases of both movies and TV shows. Each expert has its own output, which is a prediction of the user’s preference for the items in its subset.

- For example, if the input data indicates that the user has a history of watching popular movies and TV shows, the gate network might assign a higher weight to Expert1 and Expert2, and a lower weight to Expert3. The output of the MoE architecture is a ranked list of recommended items, which can include both movies and TV shows.
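A minimal sketch of the gating mechanism, assuming linear experts and a softmax gate with random weights (the feature vector and sizes are made up for illustration):

```python
import math
import random

random.seed(0)
NUM_EXPERTS = 3
NUM_FEATURES = 4

def rand_vector(size):
    return [random.gauss(0.0, 0.5) for _ in range(size)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

expert_weights = [rand_vector(NUM_FEATURES) for _ in range(NUM_EXPERTS)]
gate_weights = [rand_vector(NUM_FEATURES) for _ in range(NUM_EXPERTS)]

def mixture_of_experts(features):
    expert_outputs = [dot(w, features) for w in expert_weights]
    # The gate looks at the same input and produces one weight per expert.
    gate = softmax([dot(w, features) for w in gate_weights])
    score = sum(g * out for g, out in zip(gate, expert_outputs))
    return score, gate, expert_outputs

score, gate, expert_outputs = mixture_of_experts([1.0, 0.0, 0.5, -0.2])
```

Because the gate weights sum to one, the final score is always a convex combination of the expert outputs.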

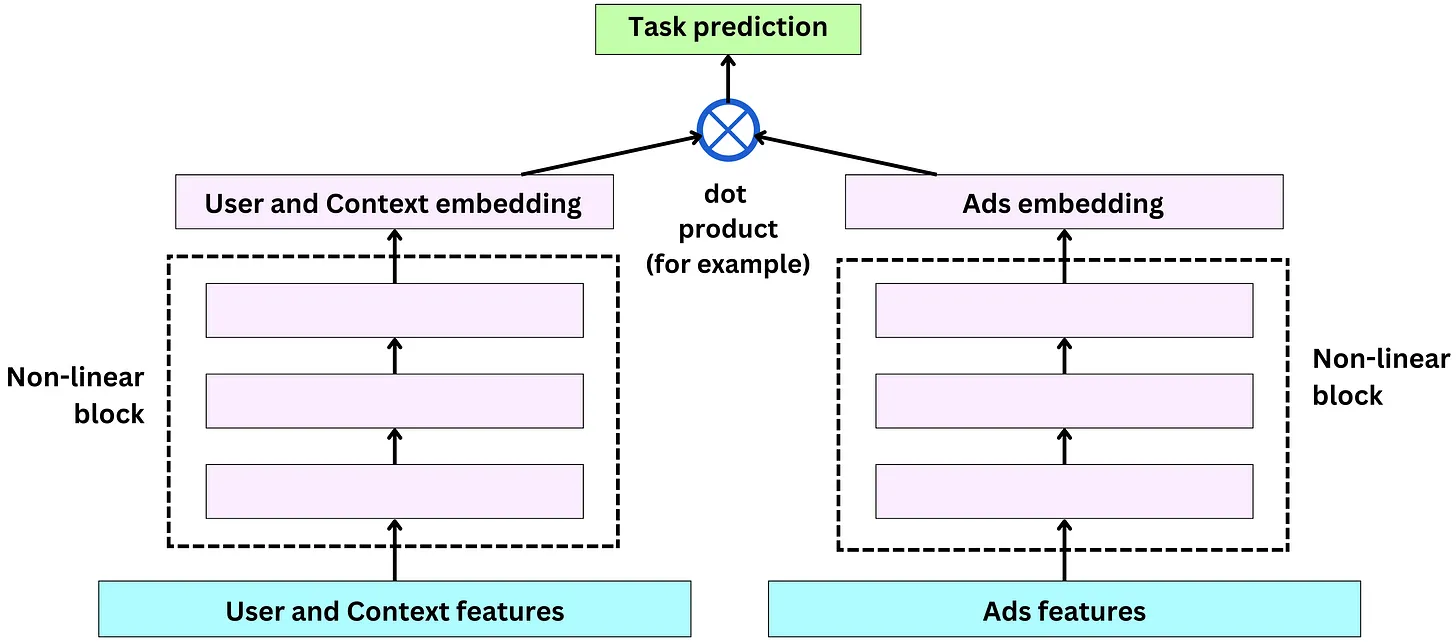

Multi-tower models (“Cross-Batch Negative Sampling for Training Two-Tower Recommenders”)

- Multi-tower models are a type of neural network architecture used in recommender systems to model the interactions between users and items. The name “multi-tower” refers to the fact that the architecture consists of multiple towers or columns, each of which represents a different aspect of the user-item interaction.

- In a two-tower architecture, there are two towers or columns: one tower represents the user and the other tower represents the item. Each tower consists of multiple layers of neurons, which can be fully connected or sparse.

- The user tower takes as input the user’s features, such as demographic information, browsing history, or social network connections, and processes them through the layers to produce a user embedding, which is a low-dimensional vector representation of the user.

- The item tower takes as input the item’s features, such as its genre, cast, or director, and processes them through the layers to produce an item embedding, which is a low-dimensional vector representation of the item.

- The user and item embeddings are then combined using a similarity function, such as dot product or cosine similarity, to produce a score that represents the predicted rating or likelihood of interaction between the user and the item.

- Here’s an example of a two-tower architecture for a movie recommender system:

Input -> User Tower and Movie Tower:

User Tower: User Age, User Gender -> Layers -> User Embedding

Movie Tower: Genre, Director -> Layers -> Movie Embedding

User Embedding and Movie Embedding -> Score

- In this architecture, the user tower and the movie tower are each represented by two layers of neurons. The user tower takes as input the user’s age and gender, which are processed through the layers to produce a user embedding. The movie tower takes as input the movie’s genre and director, which are processed through the layers to produce a movie embedding.

- The user and movie embeddings are then combined using a similarity function, such as dot product or cosine similarity, to produce a score that represents the predicted rating or likelihood of the user watching the movie.

- For example, if a user is a 30-year-old female, and the movie is a drama directed by Christopher Nolan, the user tower will produce a user embedding that represents the user’s age and gender, and the movie tower will produce a movie embedding that represents the movie’s genre and director. The user and movie embeddings are then combined using a similarity function to produce a score that represents the predicted rating or likelihood of the user watching the movie.

- The architecture can be extended to include more features, such as the user’s viewing history, the movie’s release date, or the user’s mood, to model more complex interactions between users and movies.

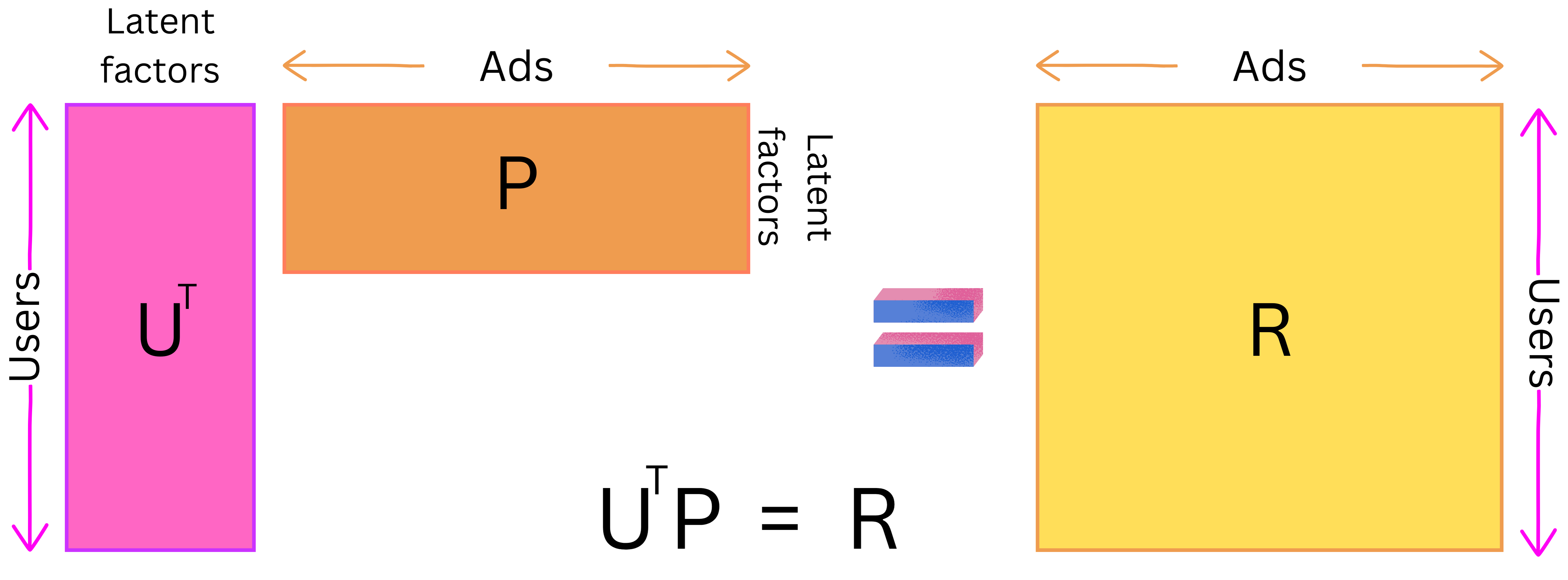

- As an example, the two-tower paradigm is an extension of the more classical latent matrix factorization model. The latent matrix factorization model is a linear model where the users are represented by a matrix \(U\) and the ads by a matrix \(P\) such that the dot product \(R = U P^\top\) is as close as possible to the target to predict.

- The two-tower architecture allows dense and sparse features, and users (and context) and ads have their own non-linear towers. The outputs of the two towers are combined in a dot product to generate predictions.
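A toy two-tower scorer in plain Python illustrates the idea. Each tower is reduced to a single `tanh` layer with random weights, and the feature encodings (scaled age, gender flag, genre flags) are assumptions chosen to mirror the movie example above.

```python
import math
import random

random.seed(0)
EMBEDDING_DIM = 4

def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

def tower(weights, features):
    """One non-linear layer standing in for a full tower."""
    return [math.tanh(sum(w * x for w, x in zip(row, features))) for row in weights]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

user_weights = rand_matrix(EMBEDDING_DIM, 2)    # inputs: scaled age, gender flag
movie_weights = rand_matrix(EMBEDDING_DIM, 3)   # inputs: drama, comedy, action flags

movies = {
    "drama_film": [1.0, 0.0, 0.0],
    "comedy_film": [0.0, 1.0, 0.0],
    "action_film": [0.0, 0.0, 1.0],
}
# Item embeddings depend only on item features, so they can be computed once offline.
movie_embeddings = {name: tower(movie_weights, feats) for name, feats in movies.items()}

def recommend(user_features):
    user_embedding = tower(user_weights, user_features)
    scores = {name: dot(user_embedding, emb) for name, emb in movie_embeddings.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

ranking = recommend([0.3, 1.0])
```

Since the two towers only meet at the final dot product, the item side can be indexed ahead of time and only the user tower has to run at request time.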

Training data

Features

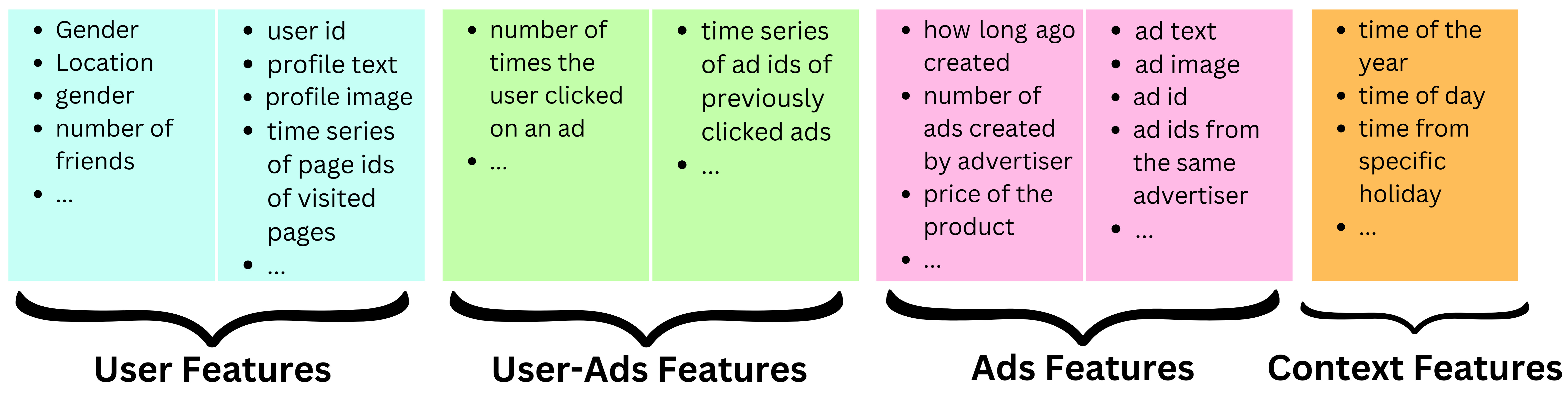

- When it comes to features, we need to think about the different actors of the process: the users, the ads and the context. Meta has ~10,000 features capturing those actors’ behavior. Let’s look at a few examples:

- User features

- Dense features: age, gender, number of friends, number of friends with a specific interest, number of likes for pages related to a specific interest, etc.

- Embedding features: latent representation of the profile text, latent representation of the profile picture, etc.

- Sparse features: user id, time series of page ids of visited pages, user ids of closest friends, etc.

- Ads features

- Dense features: how long ago created, number of ads created by advertiser, price of the product, etc.

- Embedding features: latent representation of the ad text, latent representation of the ad image, etc.

- Sparse features: Ad id, ad ids from the same advertiser, etc.

- Context features

- Dense features: time of the year, time of day, time from specific holiday, election time or not, etc.

- User-ads interaction features

- Dense features: number of times the user clicked on an ad, number of times the user clicked on ads related to a specific subject, etc.

- Sparse features: time series of ad ids of previously clicked ads, time series of ad ids of previously seen ads, etc.

Training samples

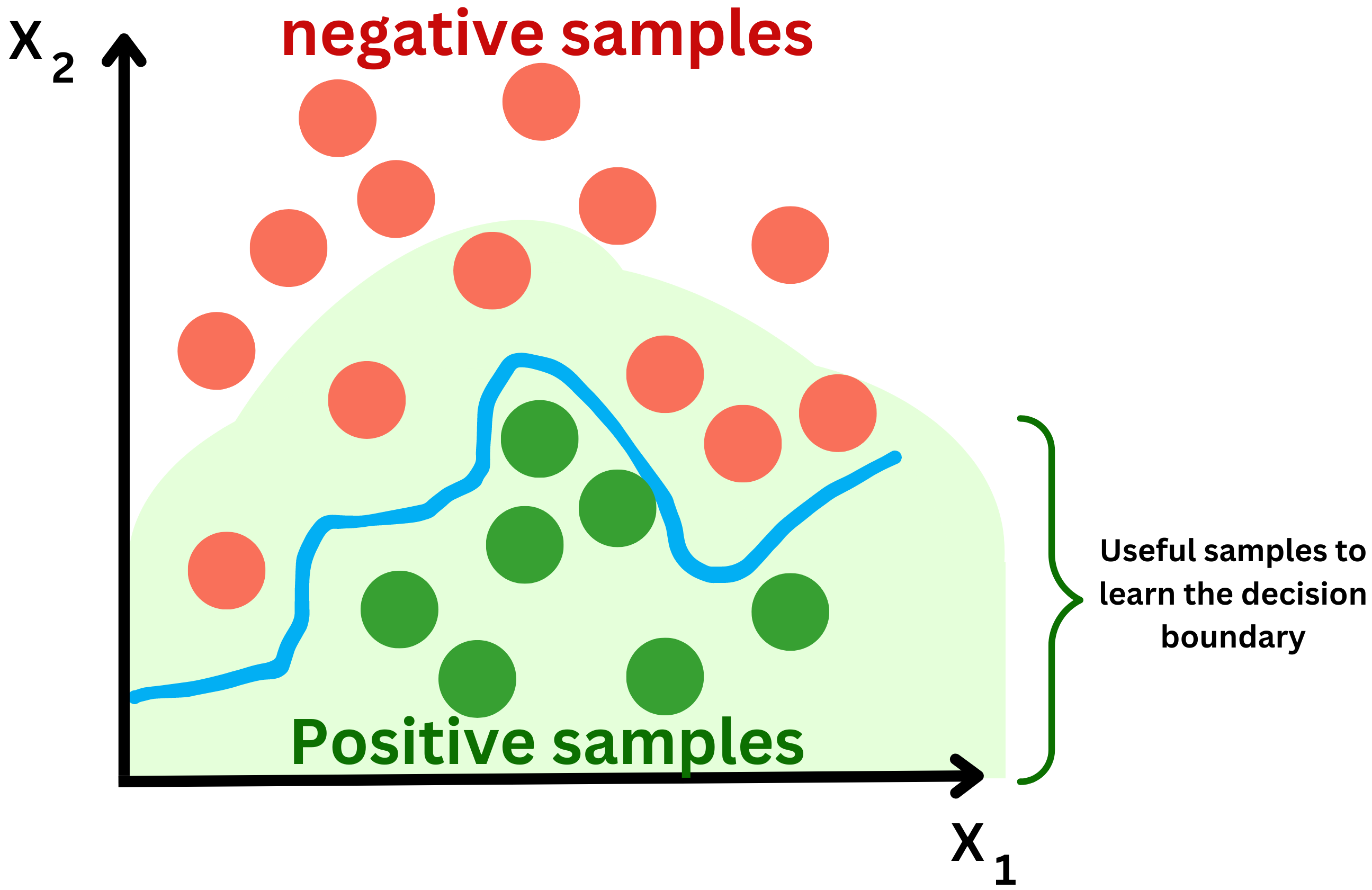

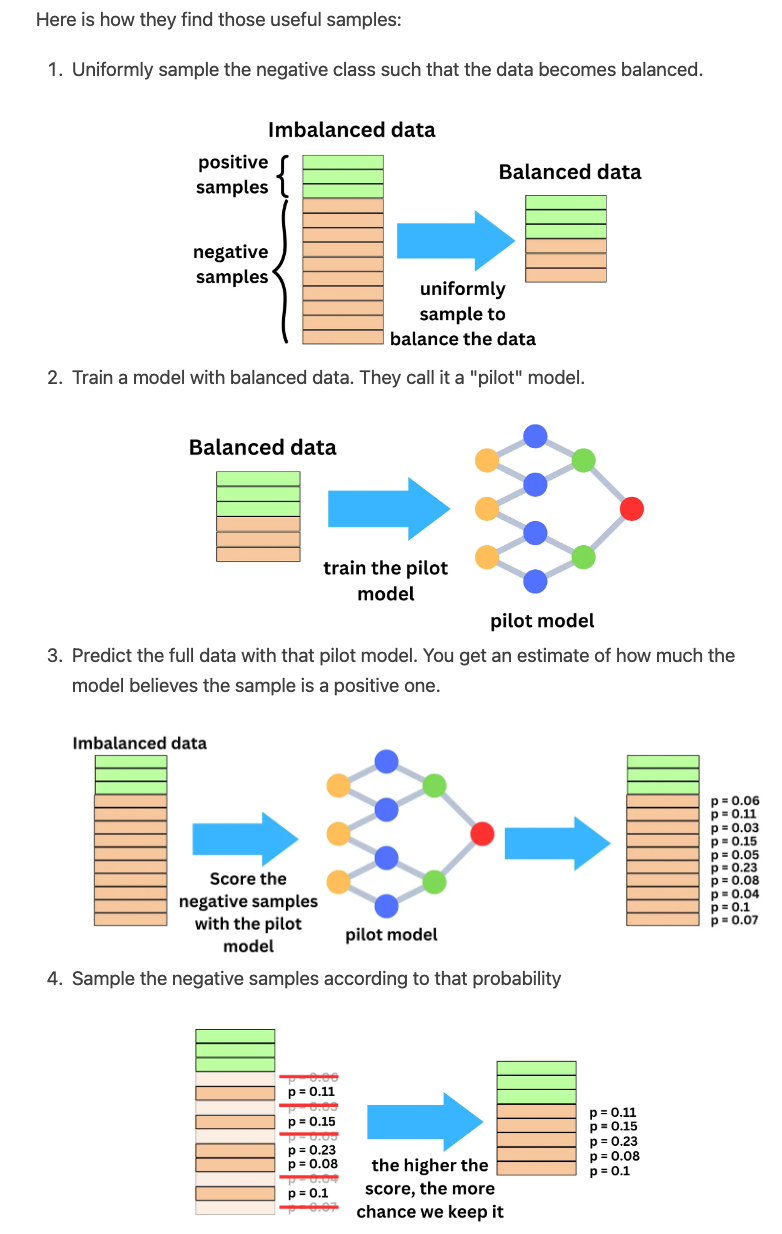

- The problem is a binary classification problem: will the user click or not? On Facebook, there are between 1B and 2B daily active users and each user sees on average 50 ads per day. At 2B users, that is ~3T ads shown per month (2B x 50 x 30). The typical click-through rate for ads is between 0.5% and 1%. If an ad click represents a positive sample and an ad shown but not clicked a negative sample, the data set is very imbalanced. Considering the size of the data, we can safely sample down the negative samples to reduce the computational pressure during training and the imbalance of the target classes.

- In a recommender system, one common approach for training a model to make predictions is to use a binary classification framework, where the goal is to predict whether a user will interact with an item or not (e.g., click on an ad or purchase a product). However, in practice, the number of negative examples (i.e., examples where the user did not interact with an item) can be much larger than the number of positive examples (i.e., examples where the user did interact with an item). This can lead to an imbalance in the data distribution, which can affect the model’s performance.

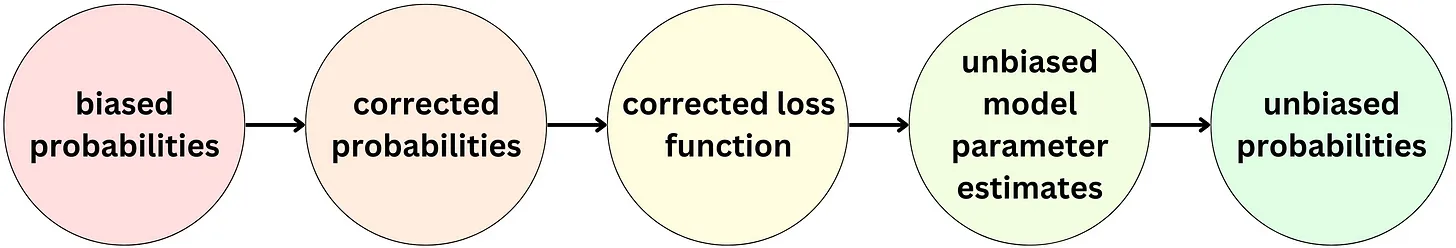

- To address this issue, one common technique is to downsample the negative examples to balance the class distribution. However, this downsampling can lead to biased probability estimates, as the probabilities of the positive examples will be overestimated, while the probabilities of the negative examples will be underestimated.

- To correct for this bias, a technique called probability calibration can be used. In this technique, the estimated probabilities are recalibrated to ensure that they are well calibrated with the true probability of the positive examples. One simple way to do this is to use a recalibration formula:

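\[p' = \frac{p}{p + (1 - p) \cdot s}\]

- where \(p\) is the probability estimated by the model trained on the downsampled data, \(s\) is the factor by which the negative examples were downsampled (i.e., the number of negative examples in the original data divided by the number kept for training, so \(s = 10\) when 1 in 10 negatives is kept), and \(p'\) is the recalibrated probability.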

- Intuitively, this formula rescales the estimated probability p by adjusting it based on the negative sampling rate s, such that the resulting probability p’ is better calibrated with the true probability of the positive examples. This recalibrated probability can then be used in the auction process to determine which item to recommend to the user.
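A quick numeric check of the recalibration formula, under the assumption that \(s\) is the factor by which negatives were downsampled (original negatives divided by kept negatives). The 1% click-through rate and the 1-in-10 sampling are made-up numbers.

```python
def recalibrate(p, s):
    """Map a probability learned on downsampled data back to the original scale."""
    return p / (p + (1.0 - p) * s)

# Hypothetical setup: the true click-through rate is 1% and we keep 1 in 10 negatives.
true_ctr = 0.01
keep_fraction = 0.1
s = 1.0 / keep_fraction

# Click-through rate a perfectly fitted model would see in the downsampled training set.
sampled_ctr = true_ctr / (true_ctr + (1.0 - true_ctr) * keep_fraction)

# Applying the formula recovers the original rate.
recovered_ctr = recalibrate(sampled_ctr, s)
```

Here `sampled_ctr` is roughly 9.2%, and `recalibrate` brings it back to the original 1%. With `s = 1` (no downsampling) the formula leaves `p` unchanged.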

Metrics

Offline metrics

- Because the model is a binary classifier, it is usually trained with the cross-entropy loss function, and it is easy to use that metric to assess models.

- At Facebook, they actually use normalized entropy (“Normalized Cross-Entropy“): the model’s cross-entropy divided by the cross-entropy of a baseline that always predicts the average click-through rate.

- It is useful to compare across models and teams, as anything above 1 is worse than simply predicting the average click-through rate for every impression.

- In the context of recommender systems, offline metrics refer to evaluation metrics that are computed using historical data, without actually interacting with users in real-time. These metrics are typically used during the model development and validation stage to measure the model’s performance and to compare different models.
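As an illustration, normalized entropy can be computed as the model's average log loss divided by the log loss of a baseline that always predicts the empirical click-through rate. The labels and predictions below are made up.

```python
import math

def log_loss(labels, predictions):
    """Average binary cross-entropy."""
    total = 0.0
    for y, p in zip(labels, predictions):
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

def normalized_entropy(labels, predictions):
    base_rate = sum(labels) / len(labels)          # empirical click-through rate
    baseline = log_loss(labels, [base_rate] * len(labels))
    return log_loss(labels, predictions) / baseline

labels = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

informative = [0.6] + [0.05] * 9     # ranks the click above the non-clicks
constant = [0.1] * 10                # always predicts the base rate
misleading = [0.05] + [0.6] * 9      # confidently wrong

ne_informative = normalized_entropy(labels, informative)
ne_constant = normalized_entropy(labels, constant)
ne_misleading = normalized_entropy(labels, misleading)
```

A value below 1 means the model beats the base-rate baseline, exactly 1 means it matches it, and above 1 means it does worse.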

Online metrics

- Once an engineer validates that the challenger model is better than the current one in production when it comes to the offline metrics, we can start the A/B testing process to validate the model on production data. The Ads Score metric used in production is a combination of the total revenue generated by the model and a quality metric measured by how often the users hide or report an ad.

- Online metrics refer to evaluation metrics that are computed in real-time using actual user interactions with the recommender system.

Low correlation of online and offline metrics

- The goal of using both offline and online metrics is to ensure that the models that perform well on offline metrics also perform well in production, where they interact with real users. However, in practice, there is often a low correlation between the performance of models on offline and online metrics.

- For example, a model that performs well on an offline metric, such as normalized entropy, may not perform well on an online metric, such as Ads Score, which measures how well the model performs in terms of user engagement and conversion. This could be due to various reasons, such as the difference between the data distribution in the offline and online environments, the presence of feedback loops in the online environment, and the lack of personalization in the offline experiments.

- To address this issue, active research is ongoing to create offline metrics that have a higher correlation with the online metrics. One approach is to incorporate user feedback and engagement data into the offline experiments to better simulate the online environment. Another approach is to use more advanced machine learning models, such as deep learning architectures, that can better capture the complex relationships between user behavior and item recommendations. Ultimately, the goal is to develop offline metrics that can better predict the performance of models in the online environment, and to improve the overall effectiveness of recommender systems.

The auction

The winning ad

- The auction process is used to reorder the top predicted ads taking into account the bid put on those ads by the advertisers and the quality of those ads.

- The bid is the maximum amount an advertiser is willing to pay for a single click of their ad.

- p(user will click) is the calibrated probability coming out of the recommender engine.

- Ad quality is an estimate of the quality of an ad coming out of another machine learning model. This model is trained on the events when users reported or hid an ad instead of click events.

- The advertiser is charged only when a user clicks on the ad, but the cost does not exactly correspond to the bid. The advertiser is charged the minimum price needed to win the auction with a markup of $0.01. This is called cost per click (CPC).
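The sketch below shows one way the pieces listed above could be combined. The scoring formula `bid * p(click) + quality` and the pricing rule are assumptions made for illustration, as are the `Candidate` class and the numbers. The pricing rule charges the smallest bid that would still have matched the runner-up, plus the $0.01 markup, capped at the winner's own bid.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    ad_id: str
    bid: float       # maximum price per click, in dollars
    p_click: float   # calibrated click probability from the ranking model
    quality: float   # ad quality estimate, on the same scale as bid * p_click

def total_value(ad):
    # Assumed combination of the three signals.
    return ad.bid * ad.p_click + ad.quality

def run_auction(candidates):
    ranked = sorted(candidates, key=total_value, reverse=True)
    winner, runner_up = ranked[0], ranked[1]
    # Smallest bid that would still have matched the runner-up's total value.
    min_winning_bid = (total_value(runner_up) - winner.quality) / winner.p_click
    price = min(winner.bid, max(0.0, min_winning_bid) + 0.01)
    return winner, round(price, 2)

candidates = [
    Candidate("A", bid=0.50, p_click=0.020, quality=0.001),
    Candidate("B", bid=0.80, p_click=0.010, quality=0.002),
    Candidate("C", bid=0.30, p_click=0.015, quality=0.000),
]
winner, cost_per_click = run_auction(candidates)
```

With these numbers ad A wins despite having a lower bid than ad B, because its click probability is higher, and it pays $0.46 per click instead of its full $0.50 bid.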

Bid computed

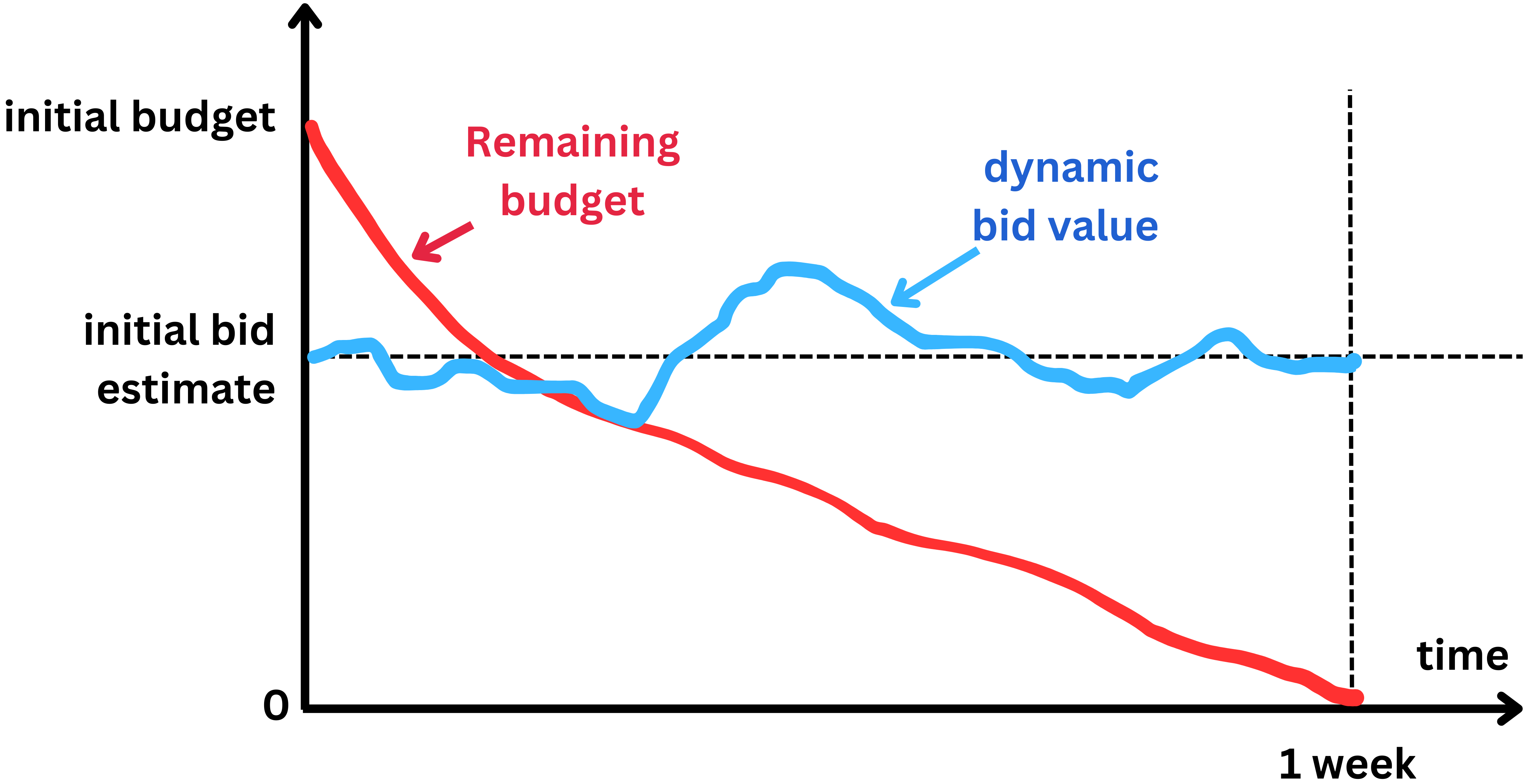

- When it comes to an ad campaign, the advertiser does not provide a bid value but a budget in dollars for a certain period of time.

- For example $100 for one week. Because Facebook knows how many other campaigns are happening at the time, they can estimate how many times they are likely to show the ad, let’s say 5000 times per day, or 35,000 times in 7 days.

- If the click-through rate is 1%, the number of ad clicks will be 35,000 x 0.01 = 350, and $100 / 350 ≈ $0.29. So based on budget and average click-through rate statistics, Facebook can estimate an initial bid value.

- To ensure the ad click-through rate is in line with the set budget and the timeline, the bid value can be dynamically adjusted such that the budget is not exhausted too fast or too slowly. If the budget is consumed faster than expected the bid will be decreased by a small factor, and if the remaining budget is still too high, the bid can be artificially increased.
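The bid estimate and the pacing adjustment can be sketched as follows. The arithmetic reproduces the $100 for one week example; the pacing rule (a fixed 5% step compared against an even spend schedule) is a hypothetical simplification, not the actual pacing algorithm.

```python
def initial_bid(budget, impressions_per_day, days, click_through_rate):
    """Budget divided by the number of clicks we expect over the campaign."""
    expected_clicks = impressions_per_day * days * click_through_rate
    return budget / expected_clicks

def adjust_bid(bid, spent, budget, elapsed_fraction, step=0.05):
    """Nudge the bid so that spend tracks an even pace (hypothetical rule)."""
    expected_spend = budget * elapsed_fraction
    if spent > expected_spend:
        return bid * (1.0 - step)   # budget burning too fast: bid less
    if spent < expected_spend:
        return bid * (1.0 + step)   # budget left over: bid more
    return bid

bid = initial_bid(budget=100.0, impressions_per_day=5000, days=7,
                  click_through_rate=0.01)

# Halfway through the week: $60 spent is ahead of schedule, $20 is behind.
bid_when_overspending = adjust_bid(bid, spent=60.0, budget=100.0, elapsed_fraction=0.5)
bid_when_underspending = adjust_bid(bid, spent=20.0, budget=100.0, elapsed_fraction=0.5)
```

The initial bid comes out at about $0.29 per click, and the adjusted bids move down or up by 5% depending on whether spend is ahead of or behind an even pace.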

The Serving pipeline

Scaling

- The ranking process can easily be distributed to scale with the number of ads. The different models can be duplicated and the ads distributed among the different replicas. This reduces the latency of the overall process.

Pre-computing

- The ranking process does not need to be realtime. Being able to pre-compute the ads is cheaper since we don’t need to use as many machines to reduce the latency and we can use bigger models (so slower) which will improve the ranking quality. The reason we may want to be close to a real-time process is to capture the latest changes in the state of the user, ads or context.

- In reality, the features associated with those actors will change only marginally in a few minutes. The cost of being real-time may not be worth the gain, especially for users that rarely click on ads. There are several strategies we can adopt to reduce operating costs:

- In the context of advertising, the ranking process refers to the process of selecting and ordering the ads that will be displayed to a user based on their interests, preferences, and other relevant factors. This ranking process can be performed either in real-time, where the ads are selected and ordered on-the-fly as the user interacts with the platform, or in a pre-computed manner, where the ads are selected and ordered ahead of time and stored for future use.

- The statement “The ranking process does not need to be realtime” suggests that pre-computing the ads can be a more cost-effective approach than performing the ranking process in real-time. This is because pre-computing the ads allows for the use of bigger and more complex models that can improve the quality of the ad ranking, while reducing the number of machines needed to process the requests, thus reducing the cost.

- However, being close to a real-time process can be important in order to capture the latest changes in user behavior, ads, and context, and to ensure that the ads being displayed are relevant and engaging. This is particularly important for users who frequently click on ads and are more likely to engage with the platform.

- To balance the trade-off between the cost and the effectiveness of the ad ranking process, several strategies can be adopted. For example, one approach is to use a hybrid approach that combines pre-computed ads with real-time updates based on user behavior and contextual information. Another approach is to focus on improving the accuracy of the ad ranking models and algorithms, which can help to reduce the number of ads that need to be displayed to achieve the desired level of engagement. Finally, optimizing the infrastructure and resource allocation can also help to reduce the cost of performing the ad ranking process in real-time.

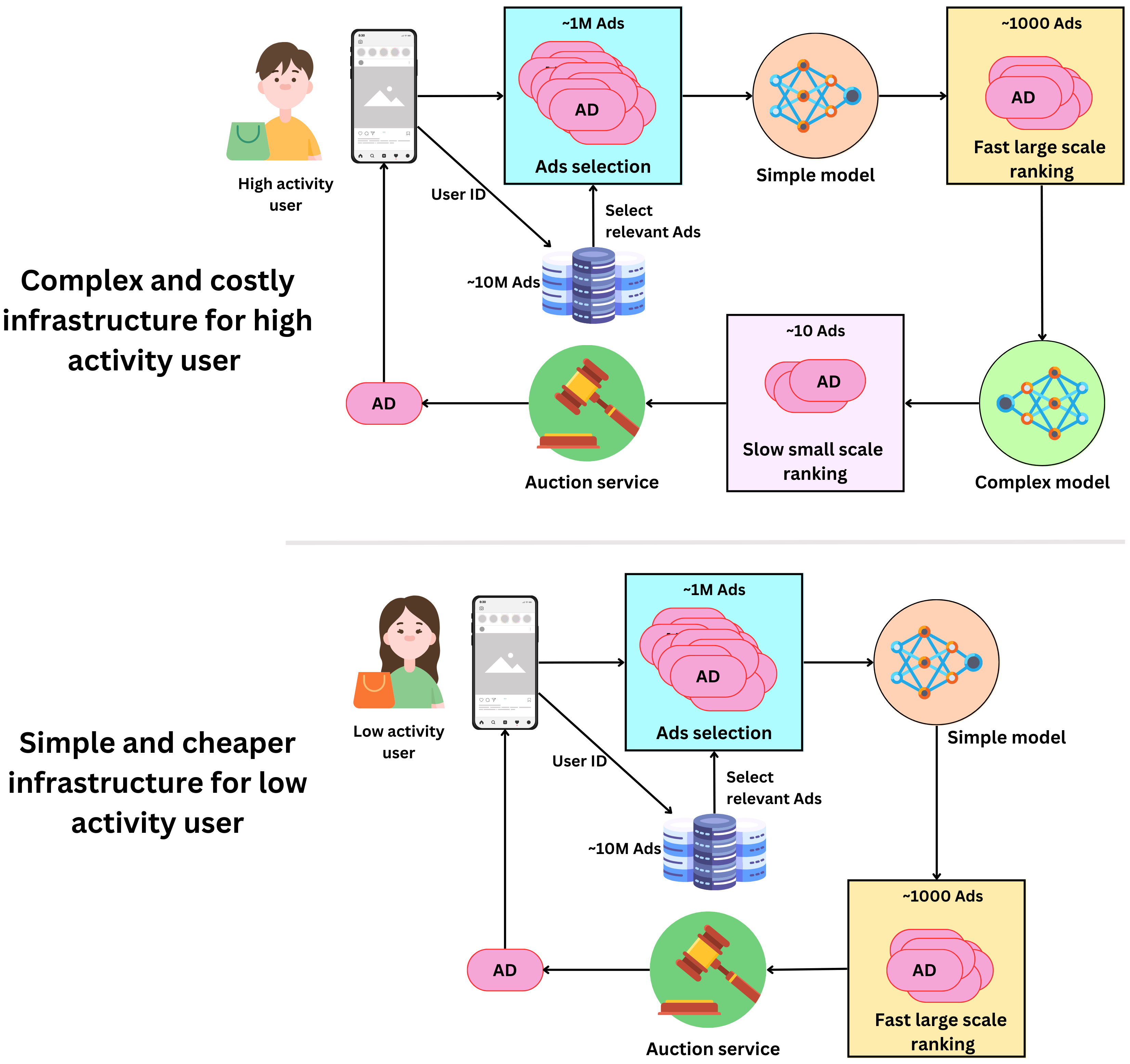

- Users can be tiered:

- Some users, no matter what, will never click on an ad, while others will do so very often. For low-activity users, we could set up a low-cost, low-accuracy infrastructure to minimize the cost per ranking for users that don’t generate much revenue. For high-activity users, the cost of accurate predictions is worth the gain: we can use more powerful models and low-latency infrastructure to increase their likelihood of clicking on ads.

- We don’t know much about new users:

- It might be unnecessary to try to generate accurate rankings for users we don’t know much about, as the models are likely to be wrong. Initially, newly created users could be classified as low-activity users until we have enough data about them to generate accurate rankings.

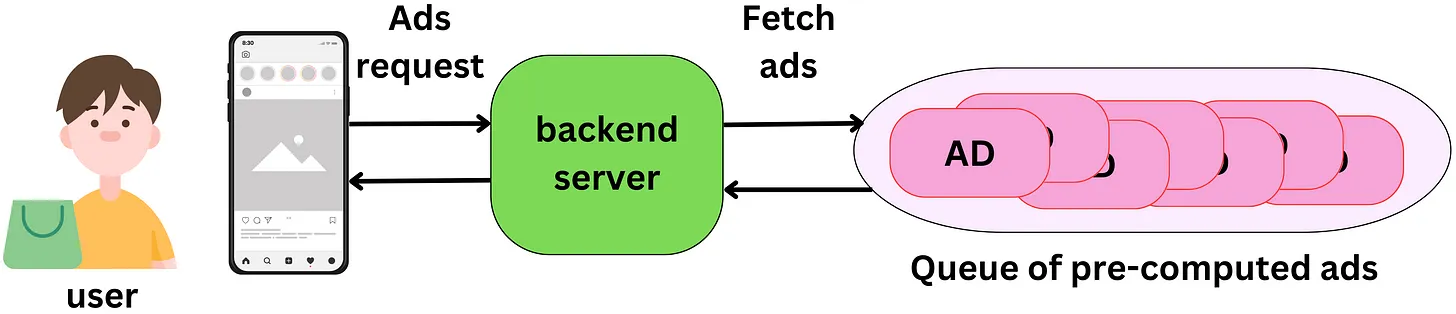

- Pre-computing ads for a user session:

- User sessions typically require multiple ads to be shown. It feels like a waste of resources to rank millions of ads every time an ad is shown. One ranking process can produce an array of ready-to-go ads for each user session.

- Finding the right triggers:

- If we want to pre-compute ads rankings a few minutes or hours in advance, we need to find the right triggers to do so.

- We could recompute the ads ranking in a scheduled manner, for example each hour we run the ranking for the 3B Facebook users. That seems like a waste of resources if the user doesn’t log in for a week let’s say!

- We could start the process at the moment the user logs in. This way the ads are ready by the time the user scrolls to the first ad position. This works if the ranking process is fast enough.

- We could recompute the ranking every time there is a significant change in the user’s state. For example the user liked a page related to a specific interest or registered for a new group. If the user is not very active, we could combine this strategy with the scheduled one by recalculating the ranking after a certain period.
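The trigger strategies above can be combined into a single decision rule. The sketch below is hypothetical: the `UserState` fields and the 24-hour staleness threshold are assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class UserState:
    just_logged_in: bool
    significant_change: bool          # e.g. liked a page or joined a group
    hours_since_last_ranking: float
    max_staleness_hours: float = 24.0  # hypothetical scheduled fallback

def should_recompute(state):
    """Decide whether to refresh the pre-computed ad ranking for this user."""
    if state.just_logged_in:
        return True   # have ads ready before the first ad position
    if state.significant_change:
        return True   # the user's interests have visibly shifted
    # Scheduled fallback for users who rarely trigger the rules above.
    return state.hours_since_last_ranking >= state.max_staleness_hours

idle_user = UserState(just_logged_in=False, significant_change=False,
                      hours_since_last_ranking=3.0)
stale_user = UserState(just_logged_in=False, significant_change=False,
                       hours_since_last_ranking=30.0)
active_user = UserState(just_logged_in=False, significant_change=True,
                        hours_since_last_ranking=0.5)
```

Only `stale_user` and `active_user` would trigger a new ranking here, so no compute is spent on users whose state has not changed recently.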

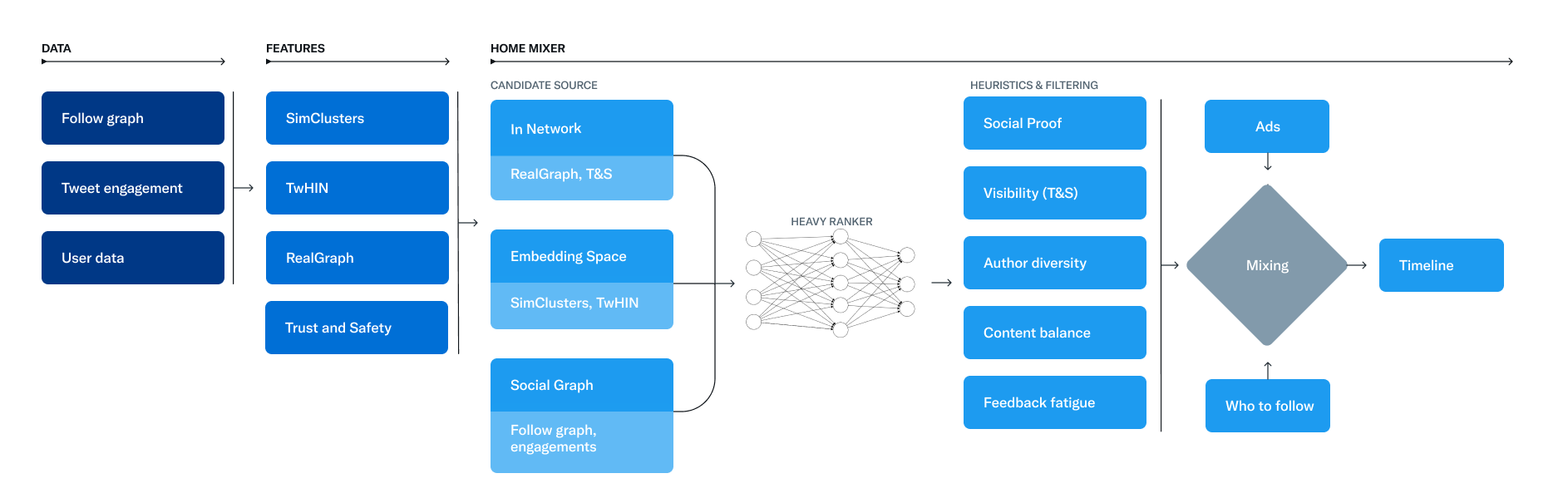

Twitter’s Recommendation Algorithm

- The foundation of Twitter’s recommendations is a set of core models and features that extract latent information from Tweet, user, and engagement data. These models aim to answer important questions about the Twitter network, such as, “What is the probability you will interact with another user in the future?” or, “What are the communities on Twitter and what are trending Tweets within them?” Answering these questions accurately enables Twitter to deliver more relevant recommendations.

- The recommendation pipeline is made up of three main stages that consume these features:

- Fetch the best Tweets from different recommendation sources in a process called candidate sourcing.

- Rank each Tweet using a machine learning model.

- Apply heuristics and filters, such as filtering out Tweets from users you’ve blocked, NSFW content, and Tweets you’ve already seen.

- The service that is responsible for constructing and serving the For You timeline is called Home Mixer. Home Mixer is built on Product Mixer, our custom Scala framework that facilitates building feeds of content. This service acts as the software backbone that connects different candidate sources, scoring functions, heuristics, and filters.
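
- To make the three stages concrete, here is a small sketch of how candidate sources, a scoring function, and filters could be wired together. It is written in Python for brevity (Home Mixer itself is Scala), and `build_timeline`, the `Tweet` dictionary shape, and all parameters are hypothetical, not Product Mixer’s API.

```python
from typing import Callable, Iterable

Tweet = dict  # hypothetical shape: {"id": int, "author": str, ...}


def build_timeline(
    sources: Iterable[Callable[[str], list]],
    score: Callable[[str, Tweet], float],
    filters: Iterable[Callable[[str, Tweet], bool]],
    user_id: str,
    limit: int = 10,
) -> list:
    # 1. Candidate sourcing: merge all sources, de-duplicating by Tweet id.
    candidates = {}
    for source in sources:
        for tweet in source(user_id):
            candidates[tweet["id"]] = tweet

    # 2. Ranking: order candidates by model score, highest first.
    ranked = sorted(candidates.values(), key=lambda t: score(user_id, t), reverse=True)

    # 3. Heuristics and filters: keep a Tweet only if every filter accepts it.
    filter_list = list(filters)
    kept = [t for t in ranked if all(f(user_id, t) for f in filter_list)]
    return kept[:limit]
```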

- The diagram below illustrates the major components used to construct a timeline:

- Let’s explore the key parts of this system, roughly in the order they’d be called during a single timeline request, starting with retrieving candidates from Candidate Sources.

Candidate Sources

- Twitter has several Candidate Sources that we use to retrieve recent and relevant Tweets for a user. For each request, we attempt to extract the best 1500 Tweets from a pool of hundreds of millions through these sources. We find candidates from people you follow (In-Network) and from people you don’t follow (Out-of-Network).

- Today, the For You timeline consists of 50% In-Network Tweets and 50% Out-of-Network Tweets on average, though this may vary from user to user.

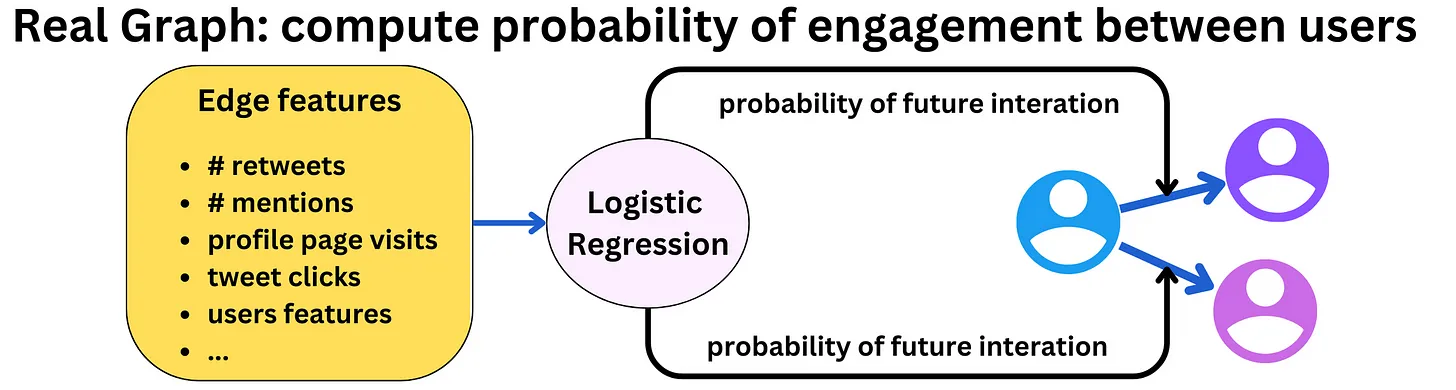

In-Network Source

- The In-Network source is the largest candidate source and aims to deliver the most relevant, recent Tweets from users you follow. It efficiently ranks Tweets of those you follow based on their relevance using a logistic regression model. The top Tweets are then sent to the next stage.

- The most important component in ranking In-Network Tweets is Real Graph. Real Graph is a model which predicts the likelihood of engagement between two users. The higher the Real Graph score between you and the author of the Tweet, the more of their tweets we’ll include.
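
- As a rough illustration of what “predicting the likelihood of engagement between two users” can look like with logistic regression, the sketch below scores a user pair from a few interaction counts. The feature names, weights, bias, and the `tweets_to_include` rule are all made up for this example; they are not Real Graph’s actual features or parameters.

```python
import math

# Hypothetical feature weights and bias; a real model would learn these from data.
WEIGHTS = {"past_retweets": 0.8, "past_replies": 0.6, "profile_visits": 0.3}
BIAS = -2.0


def engagement_probability(features: dict) -> float:
    """Logistic regression: sigmoid of a weighted sum of pair features."""
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def tweets_to_include(probability: float, max_per_author: int = 5) -> int:
    """Assumed rule: a higher score lets more of the author's Tweets through."""
    return math.ceil(probability * max_per_author)
```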

- The In-Network source has been the subject of recent work at Twitter. We recently stopped using Fanout Service, a 12-year-old service that was previously used to provide In-Network Tweets from a cache of Tweets for each user. We’re also in the process of redesigning the logistic regression ranking model, which was last updated and trained several years ago!

Out-of-Network Sources

- Finding relevant Tweets outside of a user’s network is a trickier problem: How can we tell if a certain Tweet will be relevant to you if you don’t follow the author? Twitter takes two approaches to addressing this.

Social Graph

- Our first approach is to estimate what you would find relevant by analyzing the engagements of people you follow or those with similar interests.

- We traverse the graph of engagements and follows to answer the following questions:

- What Tweets did the people I follow recently engage with?

- Who likes similar Tweets to me, and what else have they recently liked?

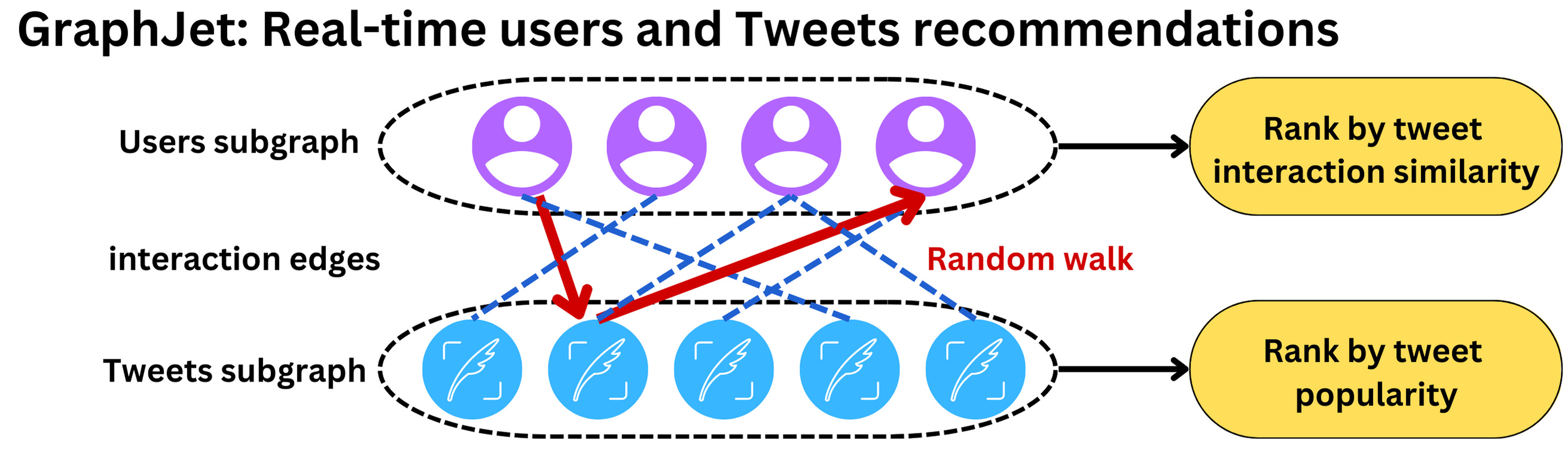

- We generate candidate Tweets based on the answers to these questions and rank the resulting Tweets using a logistic regression model. Graph traversals of this type are essential to our Out-of-Network recommendations; we developed GraphJet, a graph processing engine that maintains a real-time interaction graph between users and Tweets, to execute these traversals. While such heuristics for searching the Twitter engagement and follow network have proven useful (these currently serve about 15% of Home Timeline Tweets), embedding space approaches have become the larger source of Out-of-Network Tweets.
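
- The first question above (“What Tweets did the people I follow recently engage with?”) amounts to a two-hop traversal: user to followed users, then followed users to Tweets. A minimal in-memory sketch, assuming plain dictionaries in place of GraphJet’s real-time graph (the function name and data layout are hypothetical):

```python
from collections import Counter


def social_graph_candidates(user, follows, engagements, top_k=3):
    """follows: user -> set of followed users; engagements: user -> list of Tweet ids.

    Counts how many followed users engaged with each Tweet and returns the
    most frequently engaged Tweets the user has not engaged with already.
    """
    already_seen = set(engagements.get(user, []))
    counts = Counter()
    for followed in follows.get(user, set()):
        for tweet_id in set(engagements.get(followed, [])):
            if tweet_id not in already_seen:
                counts[tweet_id] += 1
    return [tweet_id for tweet_id, _ in counts.most_common(top_k)]
```

- The engagement count here stands in for the ranking step; the text above says the real candidates are ranked with a logistic regression model.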

Embedding Spaces

- Embedding space approaches aim to answer a more general question about content similarity: What Tweets and Users are similar to my interests?

- Embeddings work by generating numerical representations of users’ interests and Tweets’ content. We can then calculate the similarity between any two users, Tweets or user-Tweet pairs in this embedding space. Provided we generate accurate embeddings, we can use this similarity as a stand-in for relevance.
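
- One common way to measure similarity in an embedding space is cosine similarity. The source does not say which measure Twitter uses, so treat the choice below as an assumption made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)
```

- A user embedding and a Tweet embedding that point in the same direction score close to 1, while unrelated (orthogonal) ones score close to 0.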

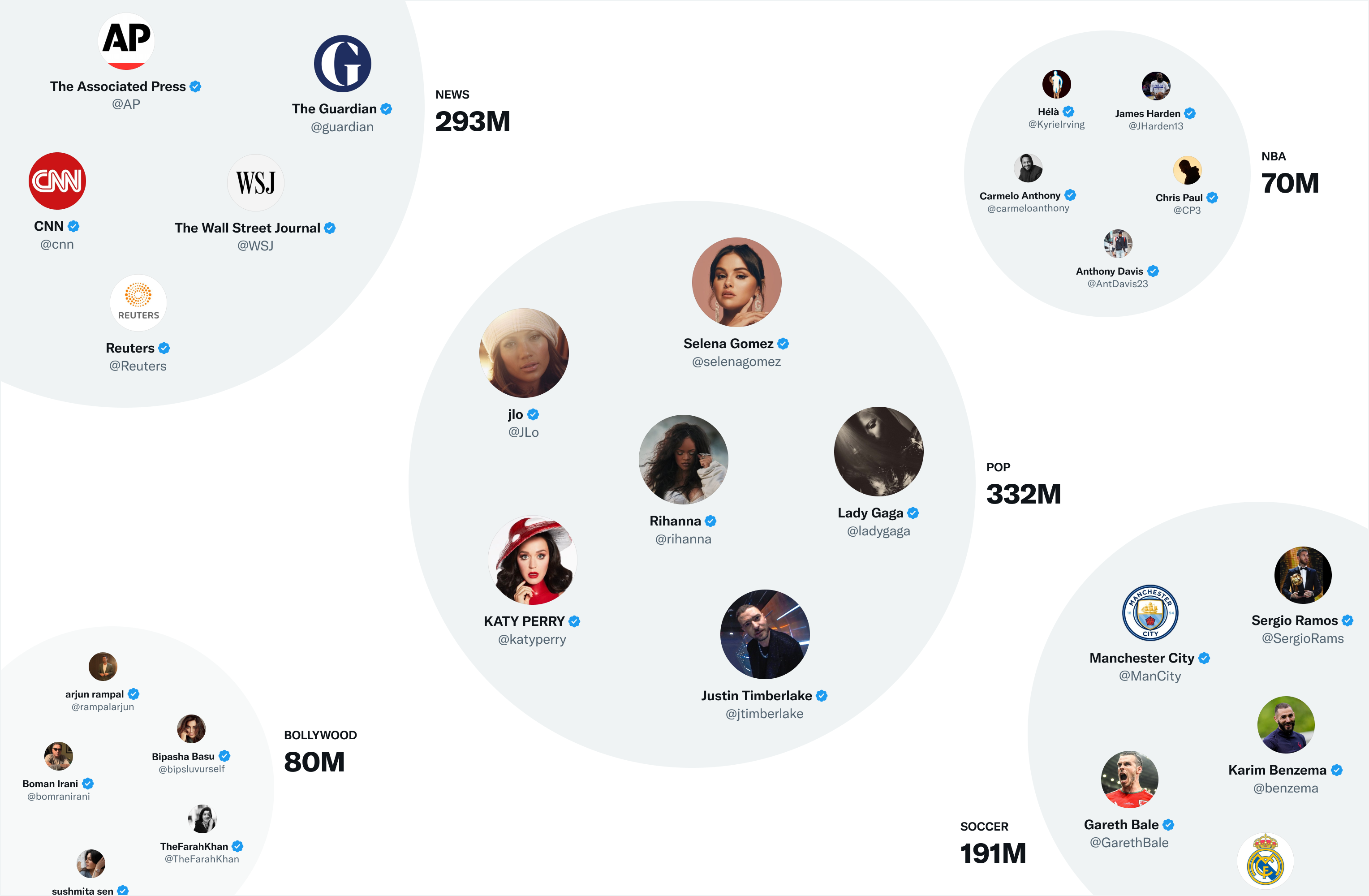

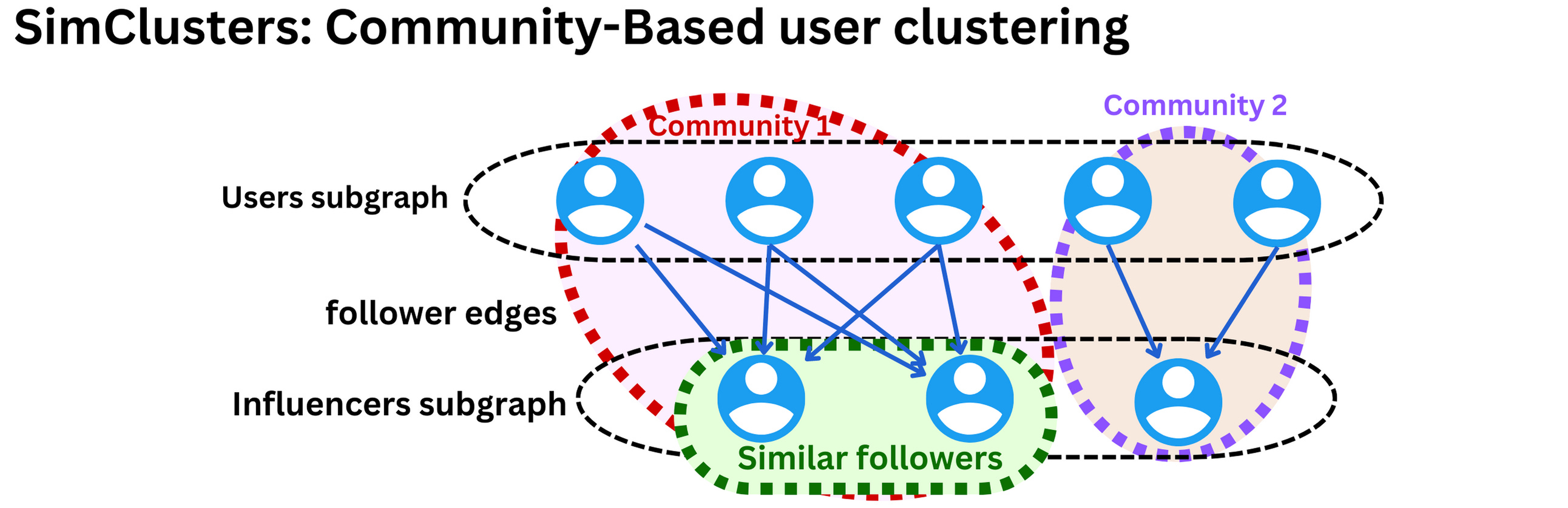

- One of Twitter’s most useful embedding spaces is SimClusters. SimClusters discover communities anchored by a cluster of influential users using a custom matrix factorization algorithm. There are 145k communities, which are updated every three weeks. Users and Tweets are represented in the space of communities, and can belong to multiple communities. Communities range in size from a few thousand users for individual friend groups, to hundreds of millions of users for news or pop culture. These are some of the biggest communities:

- We can embed Tweets into these communities by looking at the current popularity of a Tweet in each community. The more that users from a community like a Tweet, the more that Tweet will be associated with that community.
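
- The idea of associating a Tweet with the communities whose members like it can be sketched as summing the community memberships of the users who liked the Tweet. This is a simplification under assumed data structures (`user_communities` as a nested dictionary); the production SimClusters pipeline is considerably more involved.

```python
from collections import defaultdict


def tweet_community_embedding(likers, user_communities):
    """likers: user ids who liked the Tweet.
    user_communities: user -> {community: membership weight}.

    Returns a sparse Tweet embedding: community -> accumulated weight.
    """
    embedding = defaultdict(float)
    for user in likers:
        for community, weight in user_communities.get(user, {}).items():
            embedding[community] += weight
    return dict(embedding)
```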

Ranking

- The goal of the For You timeline is to serve you relevant Tweets. At this point in the pipeline, we have ~1500 candidates that may be relevant. Scoring directly predicts the relevance of each candidate Tweet and is the primary signal for ranking Tweets on your timeline. At this stage, all candidates are treated equally, without regard for which candidate source they originated from.

- Ranking is achieved with a ~48M parameter neural network that is continuously trained on Tweet interactions to optimize for positive engagement (e.g. Likes, Retweets, and Replies). This ranking mechanism takes into account thousands of features and outputs ten labels to give each Tweet a score, where each label represents the probability of an engagement. We rank the Tweets from these scores.
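
- The text does not spell out how the ten engagement probabilities become a single score. One plausible scheme, assumed here purely for illustration, is a weighted sum in which each label has its own weight. The label names and weight values below are hypothetical.

```python
def tweet_score(probabilities, weights):
    """Combine per-label engagement probabilities into one score (assumed weighted sum)."""
    return sum(weights[label] * p for label, p in probabilities.items())


def rank_tweets(candidates, weights):
    """candidates: Tweet id -> {label: probability}. Returns ids, best first."""
    return sorted(candidates, key=lambda tid: tweet_score(candidates[tid], weights), reverse=True)
```

- With weights such as `{"like": 0.5, "retweet": 1.0, "reply": 2.0}`, a Tweet that is likely to draw replies outranks one that is only likely to be liked, which shows how the weights encode which engagements the product values most.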

Heuristics, Filters, and Product Features

- After the Ranking stage, we apply heuristics and filters to implement various product features. These features work together to create a balanced and diverse feed. Some examples include:

- Visibility Filtering: Filter out Tweets based on their content and your preferences. For instance, remove Tweets from accounts you block or mute.

- Author Diversity: Avoid too many consecutive Tweets from a single author.

- Content Balance: Ensure we are delivering a fair balance of In-Network and Out-of-Network Tweets.

- Feedback-based Fatigue: Lower the score of certain Tweets if the viewer has provided negative feedback about them.

- Social Proof: Exclude Out-of-Network Tweets without a second degree connection to the Tweet as a quality safeguard. In other words, ensure someone you follow engaged with the Tweet or follows the Tweet’s author.

- Conversations: Provide more context to a Reply by threading it together with the original Tweet.

- Edited Tweets: Determine if the Tweets currently on a device are stale, and send instructions to replace them with the edited versions.
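
- As an example of one such heuristic, Author Diversity can be approximated by discounting the score of each additional Tweet from the same author and re-sorting. The geometric `decay` factor and the function below are assumptions made for this sketch, not the rule Home Mixer applies.

```python
def apply_author_diversity(scored_tweets, decay=0.5):
    """scored_tweets: list of (author, tweet_id, score) tuples.

    The k-th best Tweet of an author (k = 0, 1, 2, ...) has its score
    multiplied by decay ** k, so repeated authors sink in the ranking.
    """
    seen = {}
    adjusted = []
    for author, tweet_id, score in sorted(scored_tweets, key=lambda t: t[2], reverse=True):
        k = seen.get(author, 0)
        adjusted.append((author, tweet_id, score * decay ** k))
        seen[author] = k + 1
    return sorted(adjusted, key=lambda t: t[2], reverse=True)
```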

Mixing and Serving

- At this point, Home Mixer has a set of Tweets ready to send to your device. As the last step in the process, the system blends together Tweets with other non-Tweet content like Ads, Follow Recommendations, and Onboarding prompts, which are returned to your device to display.

- The pipeline above runs approximately 5 billion times per day and completes in under 1.5 seconds on average. A single pipeline execution requires 220 seconds of CPU time, nearly 150x the latency you perceive on the app.
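
- The figures above can be sanity-checked with a few lines of arithmetic. The input numbers come from the text; the derived throughput values are only what those numbers imply on average and are not figures reported by Twitter.

```python
runs_per_day = 5e9            # pipeline executions per day (from the text)
latency_seconds = 1.5         # average wall-clock latency (from the text)
cpu_seconds_per_run = 220.0   # CPU time per execution (from the text)

# Ratio of CPU time to perceived latency: roughly 147, i.e. "nearly 150x".
cpu_to_latency_ratio = cpu_seconds_per_run / latency_seconds

# Average executions per second implied by the daily volume.
runs_per_second = runs_per_day / (24 * 60 * 60)

# Average CPU-seconds consumed per wall-clock second across the fleet.
cpu_seconds_per_wall_second = runs_per_second * cpu_seconds_per_run
```

- The ratio being far above 1 means each request must be spread across many machines in parallel, which is the only way 220 seconds of CPU work can finish in about 1.5 seconds.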

- The goal of our open source endeavor is to provide full transparency to you, our users, about how our systems work. We’ve released the code powering our recommendations that you can view here (and here) to understand our algorithm in greater detail, and we are also working on several features to provide you greater transparency within our app. Some of the new developments we have planned include:

- A better Twitter analytics platform for creators with more information on reach and engagement

- Greater transparency into any safety labels applied to your Tweets or accounts

- Greater visibility into why Tweets appear on your timeline

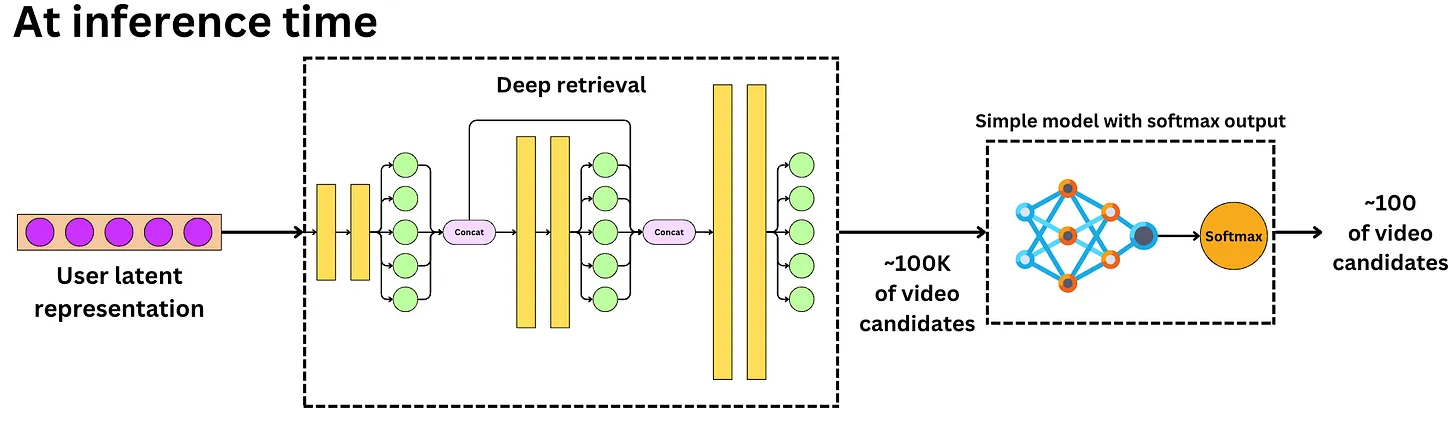

Twitter’s Retrieval Algorithm: Deep Retrieval

- The content here is from Damien Benveniste’s LinkedIn post.

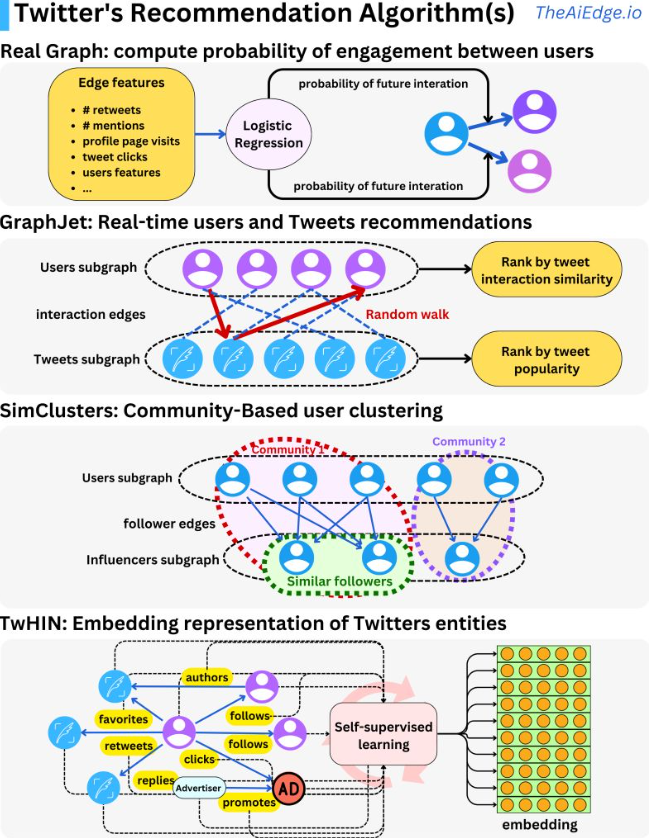

- At a high level, Twitter’s recsys works the same as most: it takes the “best” tweets, ranks them with an ML model, filters out the unwanted tweets, and presents the rest to the user.

- Let’s delve deeper into Twitter’s ML algorithms, which act as a multistage tweet selection process that feeds the ranking.

- Twitter first selects about 1500 relevant tweets with 50% coming from your network and 50% from outside.

- For the 50% in your network, they use Real Graph to rank people within your network. Internally, Real Graph is a logistic regression algorithm running on Hadoop.

- The features used here are previous retweets, tweet interactions, and user features. With these, they compute the probability that the user will interact with these users again.

- For the 50% out of network, they use GraphJet.

- “Different users and tweet interactions are captured over a certain time window and a SALSA algorithm (random walk on a bipartite graph) is run to understand the tweets that are likely to interest some users and how similar users are to each other. This process leads to metrics we can rank to select the top tweets.” (source)

- SimClusters algorithm: “clusters users into communities. The idea is to assign similarity metrics between users based on what influencers they follow. With those clusters, we can assign users’ tweets to communities and measure similarity metrics between users and tweets.” (source)

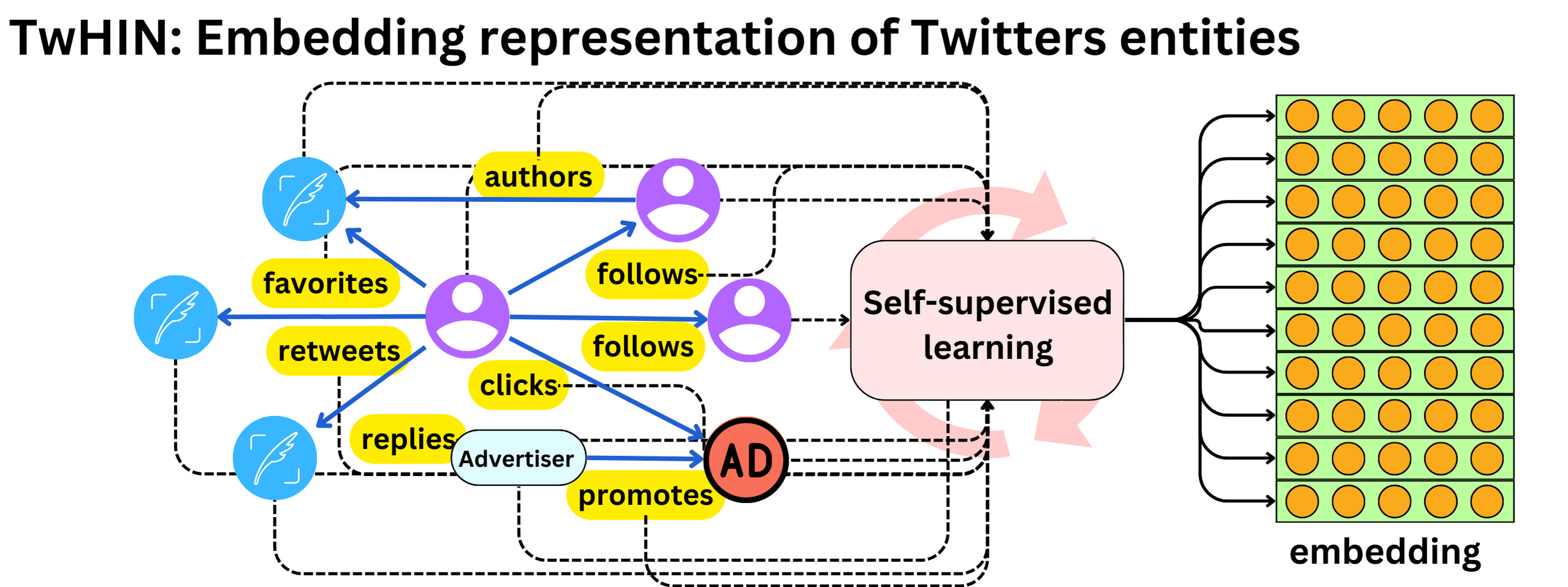

- TwHIN algorithm: a more modern graph algorithm that computes latent representations (embeddings) of the different Twitter entities (users, tweets, ads, advertisers) and the relationships between those entities (clicks, follows, retweets, authors, etc.). The idea is to use contrastive learning to maximize the dot products of entities that interact in the graph and minimize the dot products of entities that do not. (source)

- Once the recommendations are selected, they are ranked via a 48M-parameter MaskNet model that uses thousands of features and 10 engagement labels to compute a final score to rank tweets.

- The image below (source) illustrates these different methodologies at play.

- Twitter just open-sourced its recommendation algorithm for personalized tweet feeds and it is pretty much what you would expect: you get the “best” tweets, rank them with a machine learning model, filter the unwanted tweets and present them to the user.

- That is the way most recommender engines tend to be used: search engines, ads ranking, product recommendation, movie recommendation, etc. What is interesting is the way Twitter’s different ML algorithms come together as multistage tweet selection processes that feed the ranking process.

- The first step in the process is to select ~1500 relevant tweets, 50% coming from people in your network and 50% outside of it. Real Graph (“RealGraph: User Interaction Prediction at Twitter“) is used to rank people in your network. It is a logistic regression algorithm running on Hadoop. Using features like previous retweets, tweet interactions and user features we can compute the probability that a specific user will interact again with other users.

- For people outside your network, they use GraphJet, a real-time graph recommender system.

- Different users and tweet interactions are captured over a certain time window and a SALSA algorithm (random walk on a bipartite graph) is run to understand the tweets that are likely to interest some users and how similar users are to each other.

- SALSA is similar to a personalized PageRank algorithm but adapted to recommending multiple types of objects. This process leads to metrics we can rank to select the top tweets.
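
- The random walk on a bipartite user-tweet graph can be approximated with a Monte Carlo simulation: alternate between a user-to-tweet step and a tweet-to-user step, occasionally reset to the querying user, and count how often each tweet is visited. The sketch below is a simplified, SALSA-style walk under assumed inputs (`user_to_tweets` and `tweet_to_users` as dictionaries of lists, a hypothetical `reset_prob`); it is not GraphJet’s implementation.

```python
import random
from collections import Counter


def salsa_style_walk(user, user_to_tweets, tweet_to_users,
                     steps=1000, reset_prob=0.1, seed=0):
    """Return tweets ordered by visit count, excluding ones the user already engaged with."""
    rng = random.Random(seed)
    visits = Counter()
    current = user
    for _ in range(steps):
        tweets = user_to_tweets.get(current)
        # Reset to the query user on a dead end or with probability reset_prob.
        if not tweets or rng.random() < reset_prob:
            current = user
            tweets = user_to_tweets.get(current)
            if not tweets:
                break  # the query user has no engagements to walk from
        tweet = rng.choice(tweets)                   # forward step: user -> tweet
        visits[tweet] += 1
        current = rng.choice(tweet_to_users[tweet])  # backward step: tweet -> user
    seen = set(user_to_tweets.get(user, []))
    return [t for t, _ in visits.most_common() if t not in seen]
```

- Tweets reached often are those engaged with by users who share engagements with the querying user, and the users visited along the way give a rough notion of user similarity.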

- The SimClusters algorithm (“SimClusters: Community-Based Representations for Heterogeneous Recommendations at Twitter“) clusters users into communities.

- The idea is to assign similarity metrics between users based on what influencers they follow. With those clusters, we can assign users’ tweets to communities and measure similarity metrics between users and tweets.

- The TwHIN algorithm (“TwHIN: Embedding the Twitter Heterogeneous Information Network for Personalized Recommendation“) is a more modern graph algorithm to compute latent representations (embeddings) of the different Twitter entities (users, tweets, ads, advertisers) and the relationships between those entities (clicks, follows, retweets, authors, …). The idea is to use contrastive learning to maximize the dot products of entities that interact in the graph and minimize the dot products of entities that do not. [Embeddings can be used to compute user-tweet similarity metrics and be used as features in other models.]

- Once those different sources of recommendation select a rough set of tweets, we rank them using a 48M-parameter MaskNet model (“MaskNet: Introducing Feature-Wise Multiplication to CTR Ranking Models by Instance-Guided Mask“).
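
- In the usual contrastive setup, pairs of entities that interact are pushed toward a high dot product, and sampled non-interacting pairs are pushed toward a low one. The sketch below shows a logistic-loss version of that objective for a single source entity. It is a simplified illustration: it leaves out the per-relation parameters a heterogeneous-graph model would carry, and the function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def log_sigmoid(x):
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def contrastive_loss(source, positive, negatives):
    """Low when dot(source, positive) is high and dot(source, negative) is low."""
    loss = -log_sigmoid(dot(source, positive))
    for negative in negatives:
        loss -= log_sigmoid(-dot(source, negative))
    return loss
```

- Minimizing this loss with gradient descent over many (source, positive, negatives) samples is what moves interacting entities together and non-interacting ones apart in the embedding space.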

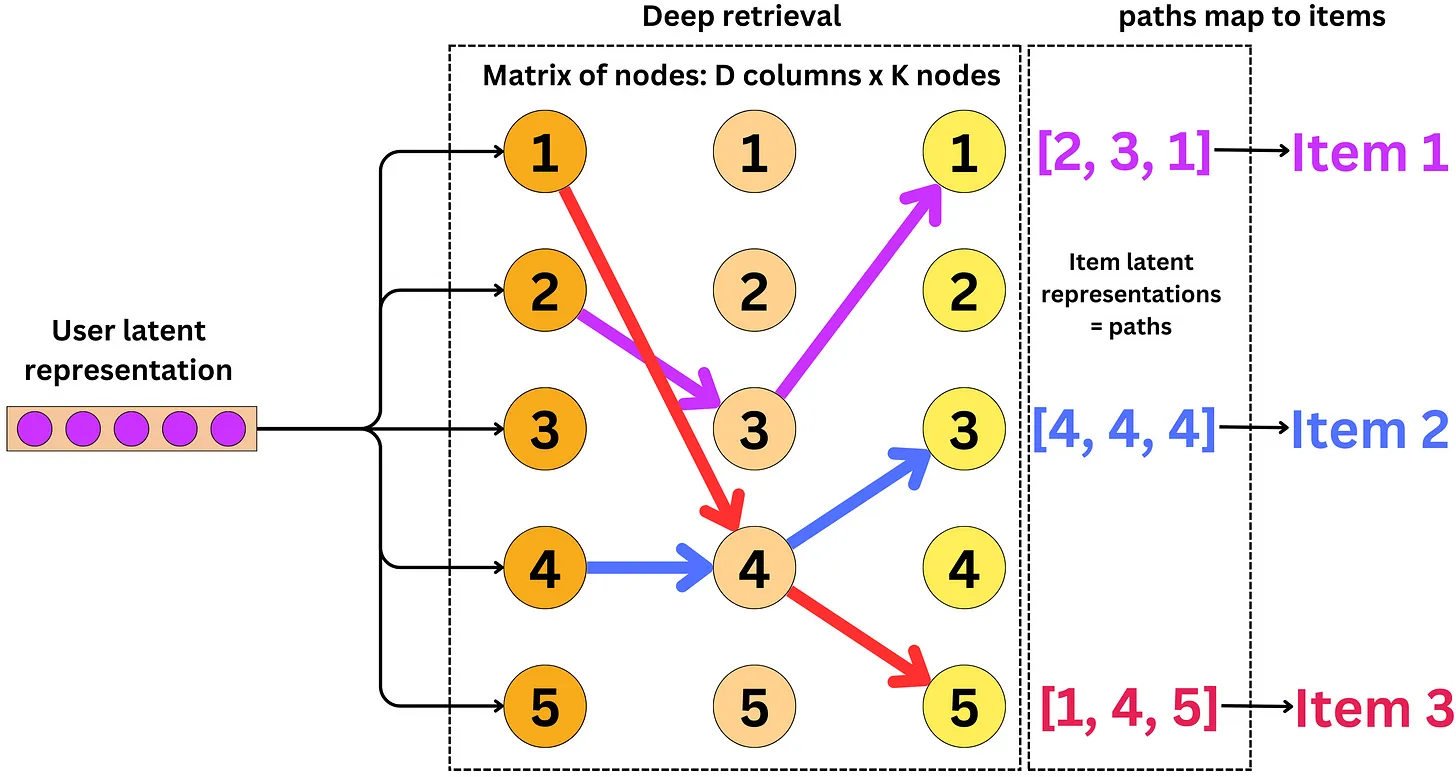

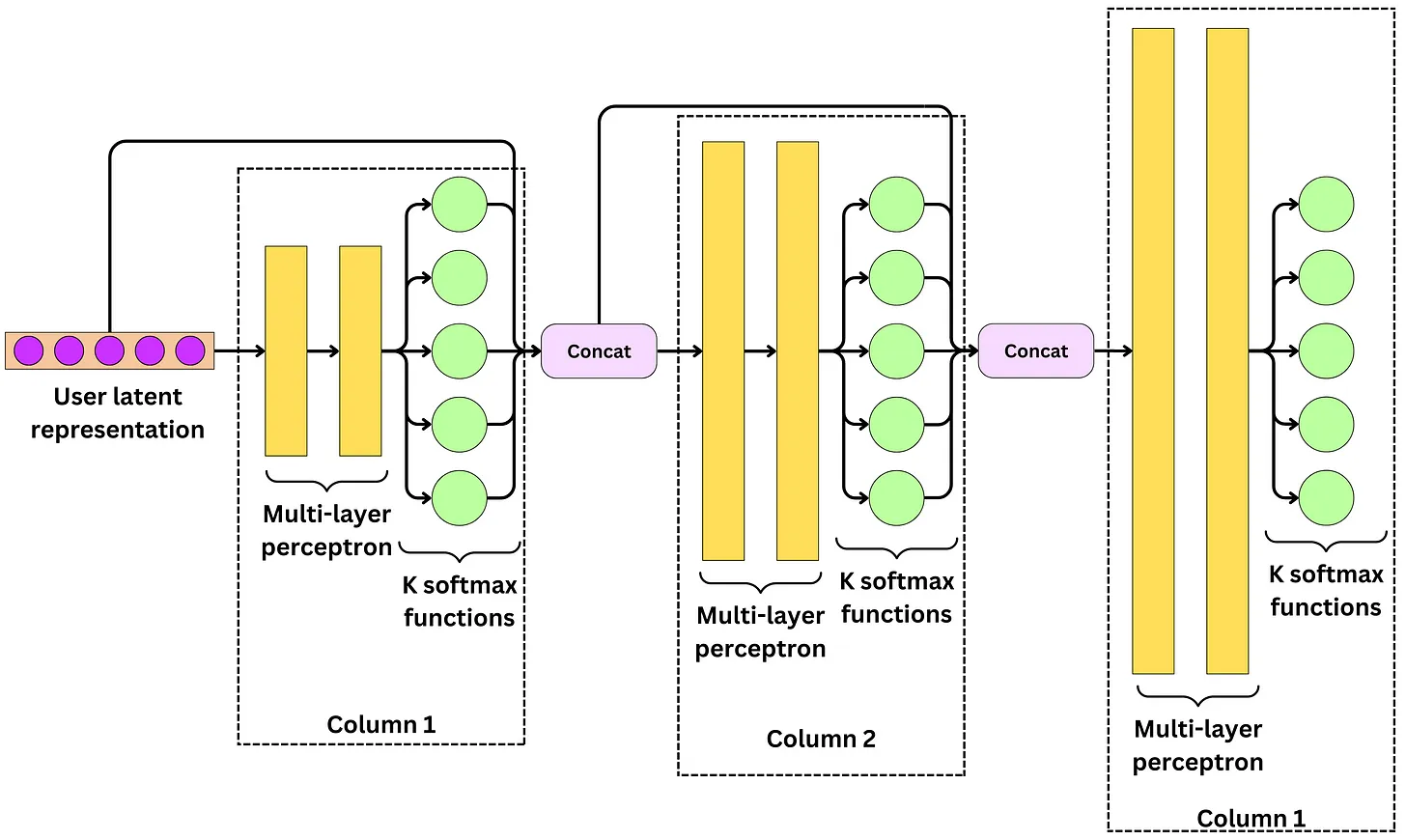

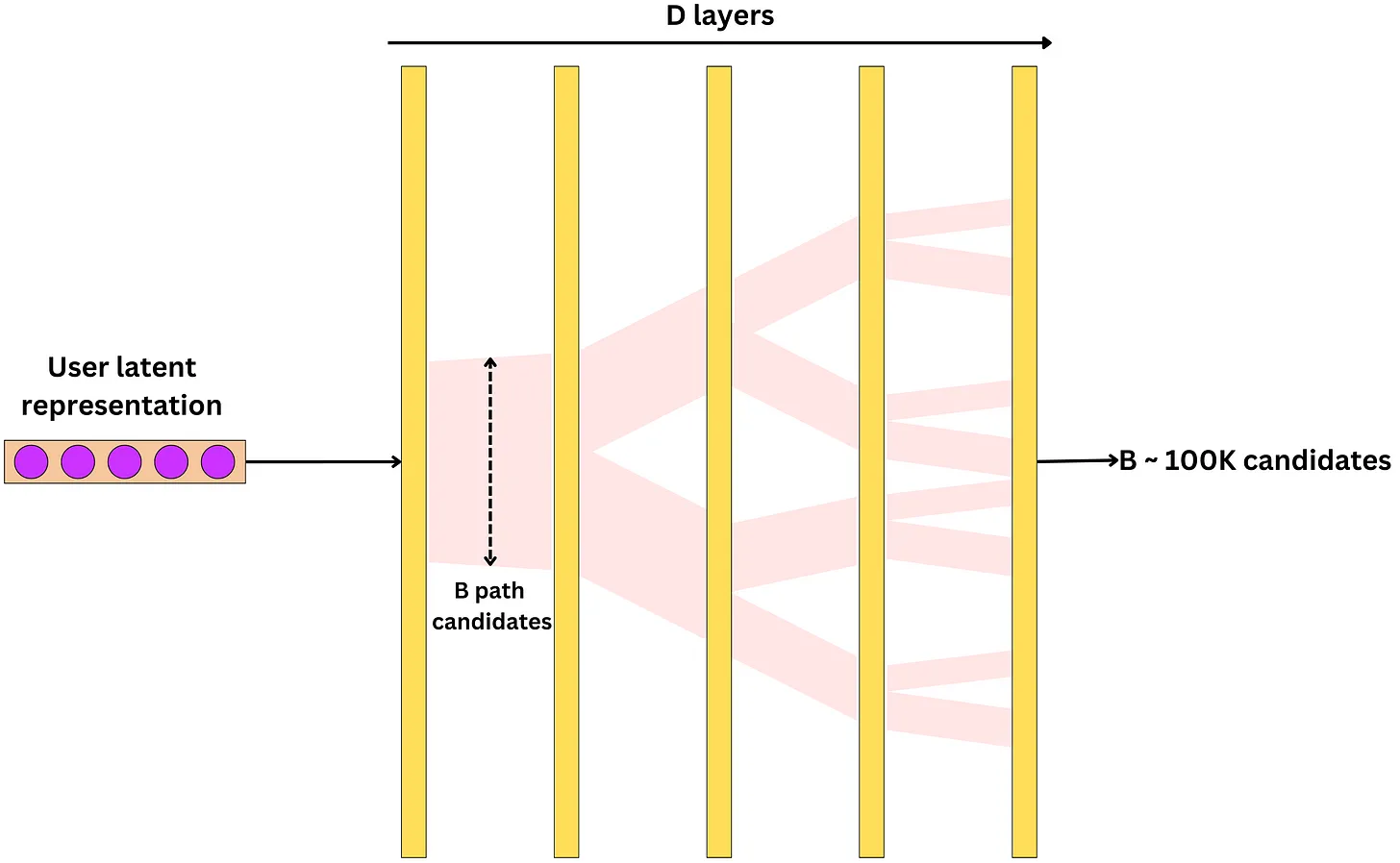

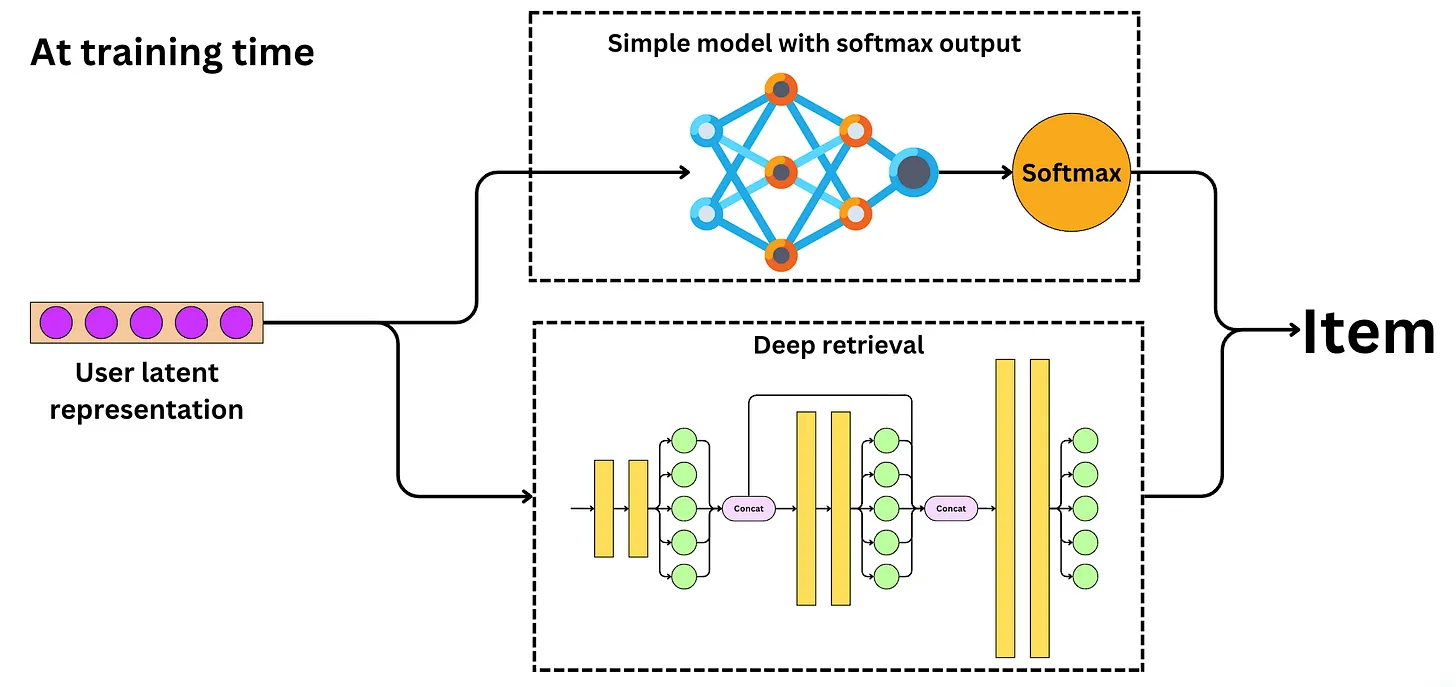

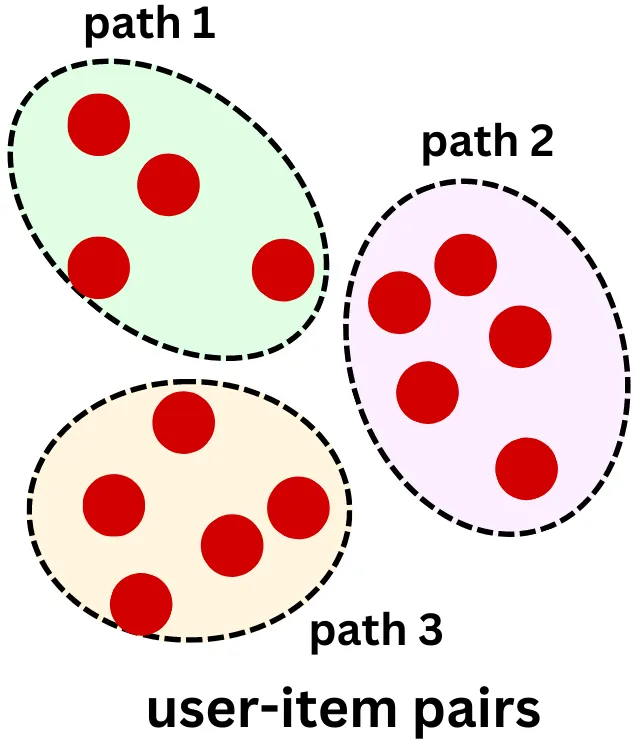

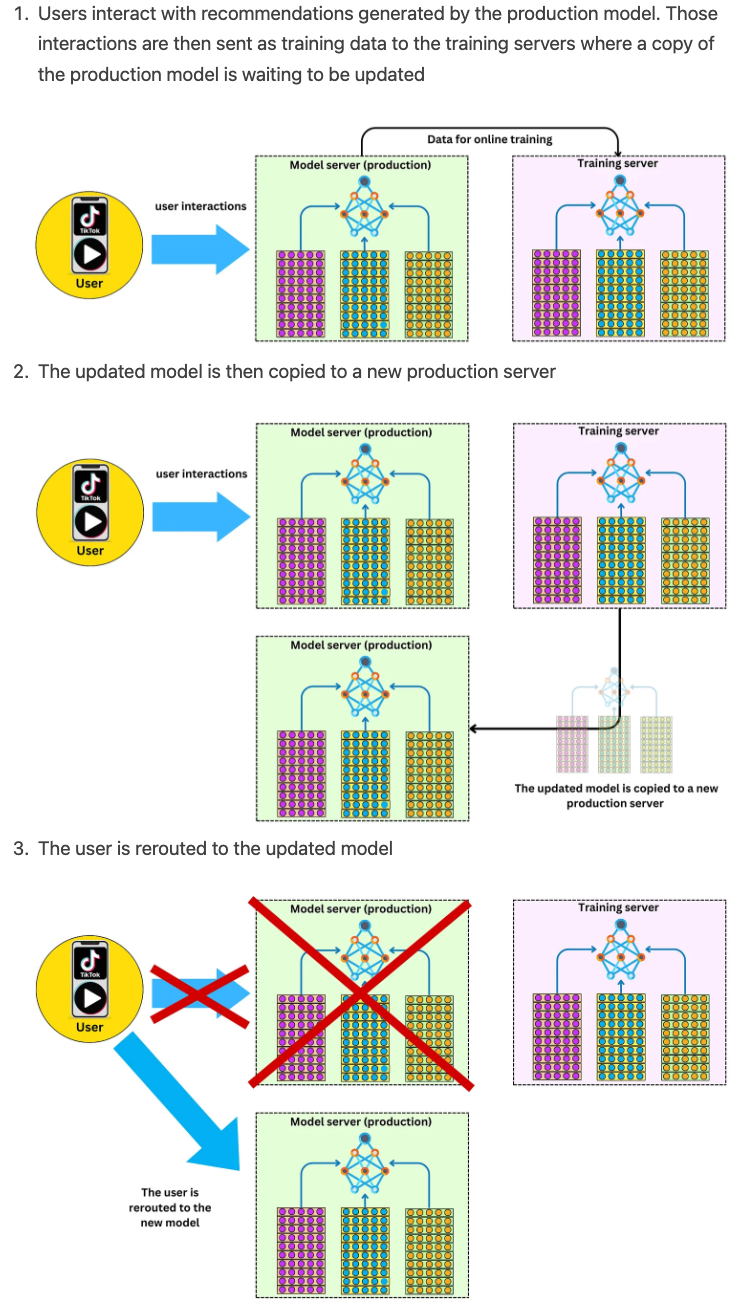

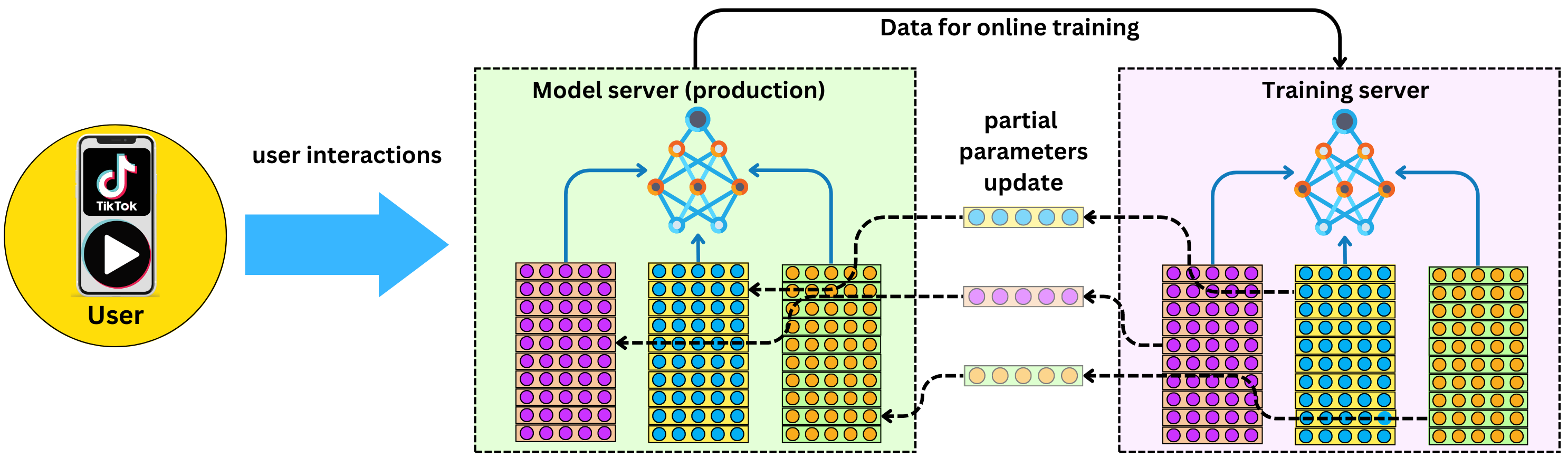

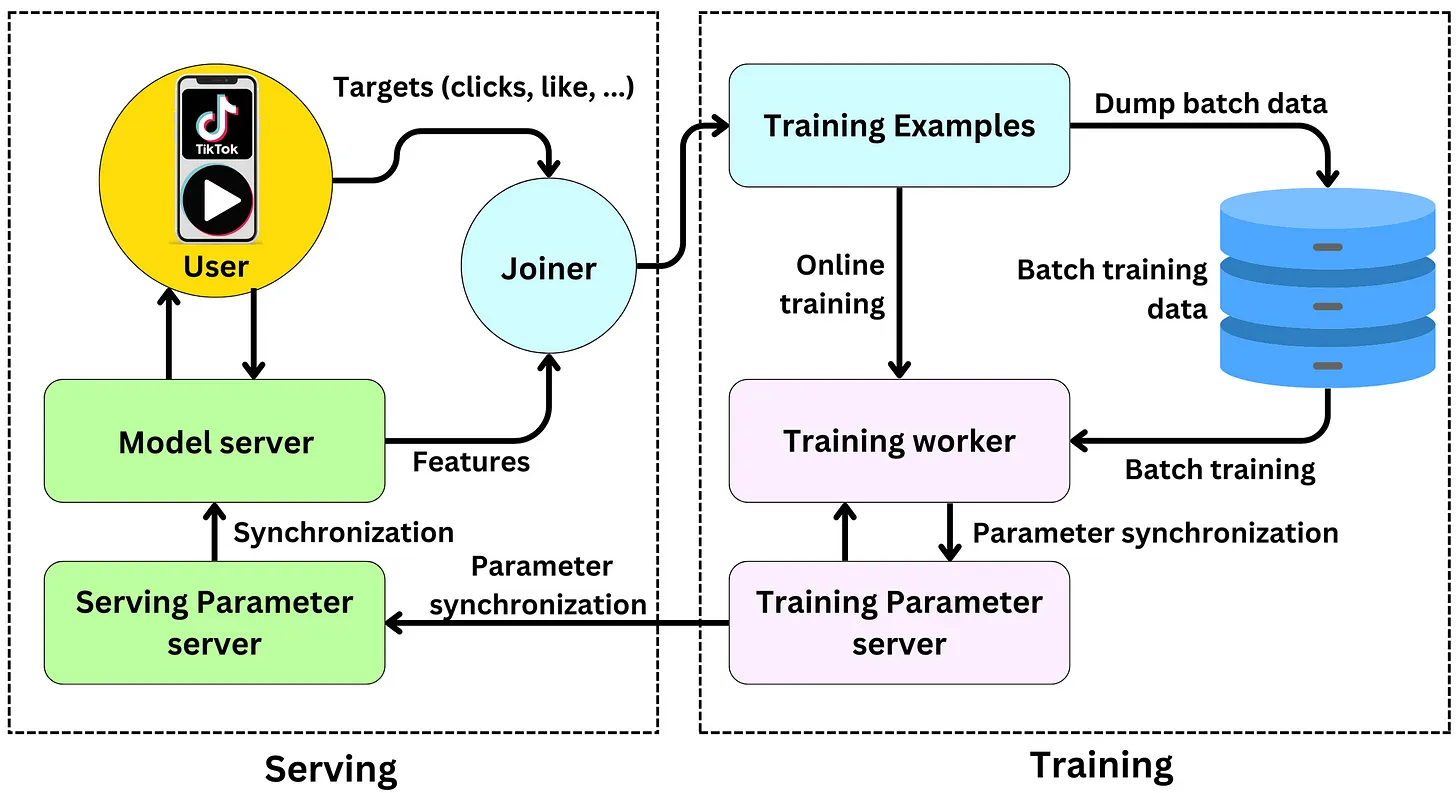

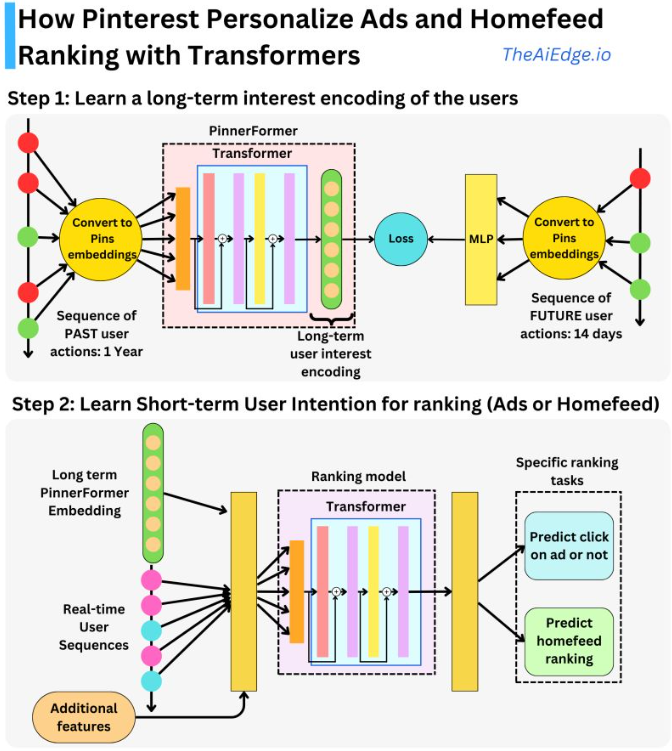

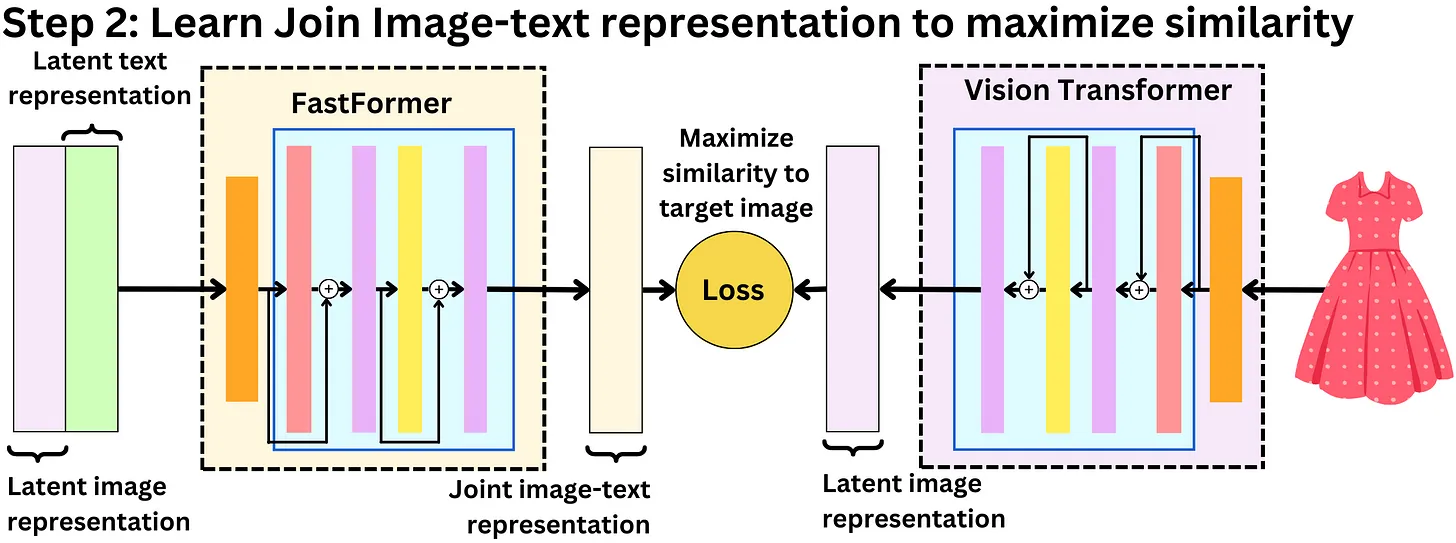

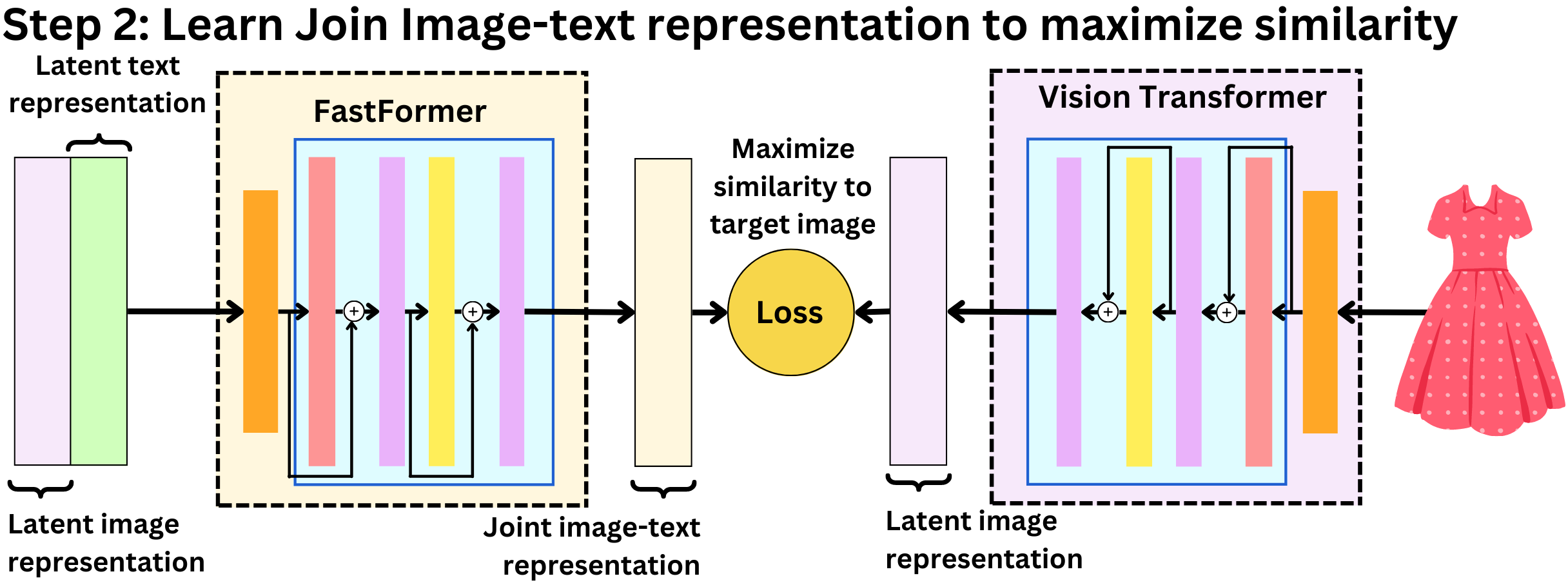

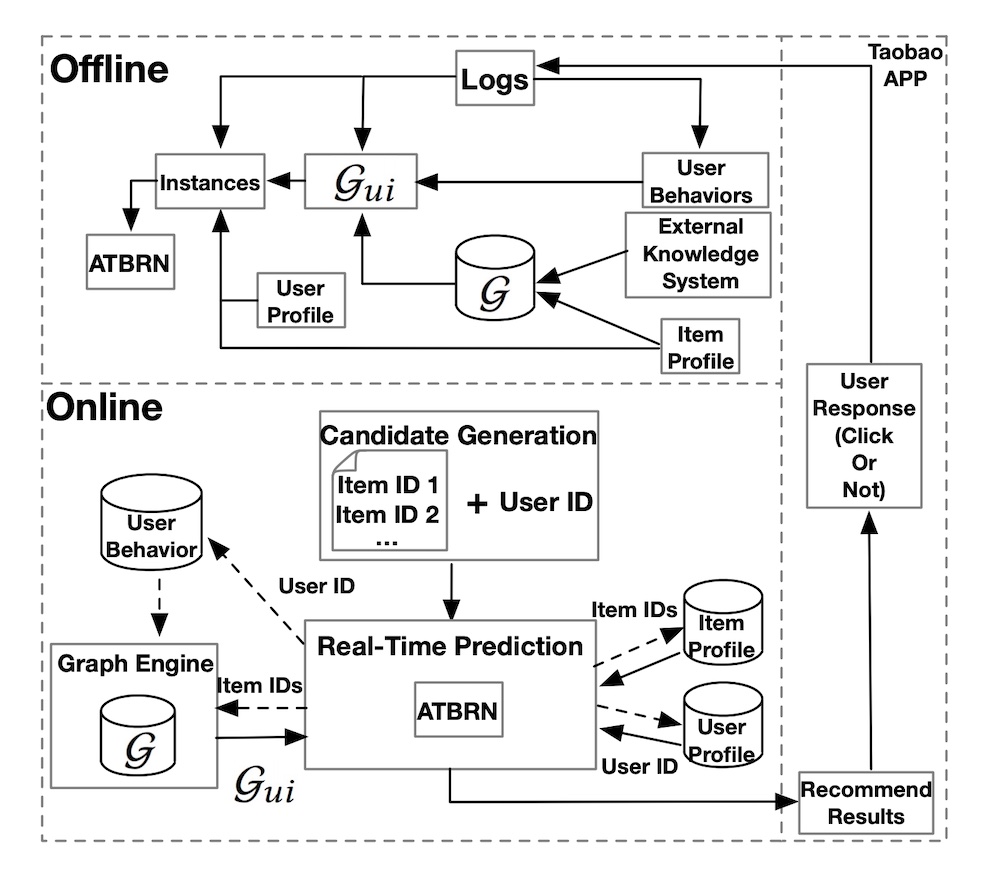

- Thousands of features and 10 engagement labels are used to compute a final score to rank tweets.