and

#tags.txt B-PER B-LOC …

### Structure of the dataset

- Download the original version on the [Kaggle](https://www.kaggle.com/abhinavwalia95/entity-annotated-corpus/data) website.

- **Download the dataset:** `ner_dataset.csv` on [Kaggle](https://www.kaggle.com/abhinavwalia95/entity-annotated-corpus/data) and save it under the `nlp/data/kaggle` directory. Make sure you download the simple version `ner_dataset.csv` and NOT the full version `ner.csv`.

- **Build the dataset:** Run the following script:

```python

python build_kaggle_dataset.py

- It will extract the sentences and labels from the dataset, split it into train / test / dev and save it in a convenient format for our model. Here is the structure of the data

kaggle/

train/

sentences.txt

labels.txt

test/

sentences.txt

labels.txt

dev/

sentences.txt

labels.txt

-

If this errors out, check that you downloaded the right file and saved it in the right directory. If you have issues with encoding, try running the script with Python 2.7.

-

Build the vocabulary: For both datasets,

data/smallanddata/kaggleyou need to build the vocabulary, with:

python build_vocab.py --data_dir data/small

- or:

python build_vocab.py --data_dir data/kaggle

Loading text data

- In NLP applications, a sentence is represented by the sequence of indices of the words in the sentence. For example if our vocabulary is

{'is':1, 'John':2, 'Where':3, '.':4, '?':5}then the sentence “Where is John ?” is represented as[3,1,2,5]. We read thewords.txtfile and populate our vocabulary:

vocab = {}

with open(words_path) as f:

for i, l in enumerate(f.read().splitlines()):

vocab[l] = i

-

In a similar way, we load a mapping

tag_mapfrom our labels fromtags.txtto indices. Doing so gives us indices for labels in the range \(\text{[0, 1, …, NUM_TAGS-1]}\). -

In addition to words read from English sentences,

words.txtcontains two special tokens: anUNKtoken to represent any word that is not present in the vocabulary, and aPADtoken that is used as a filler token at the end of a sentence when one batch has sentences of unequal lengths. -

We are now ready to load our data. We read the sentences in our dataset (either train, validation or test) and convert them to a sequence of indices by looking up the vocabulary:

train_sentences = []

train_labels = []

with open(train_sentences_file) as f:

for sentence in f.read().splitlines():

# replace each token by its index if it is in vocab

# else use index of UNK

s = [vocab[token] if token in self.vocab

else vocab['UNK']

for token in sentence.split(' ')]

train_sentences.append(s)

with open(train_labels_file) as f:

for sentence in f.read().splitlines():

# replace each label by its index

l = [tag_map[label] for label in sentence.split(' ')]

train_labels.append(l)

- We can load the validation and test data in a similar fashion.

Preparing a Batch

- This is where it gets fun. When we sample a batch of sentences, not all the sentences usually have the same length. Let’s say we have a batch of sentences

batch_sentencesthat is a Python list of lists, with its correspondingbatch_tagswhich has a tag for each token inbatch_sentences. We convert them into a batch of PyTorch Variables as follows:

# compute length of longest sentence in batch

batch_max_len = max([len(s) for s in batch_sentences])

# prepare a numpy array with the data, initializing the data with 'PAD'

# and all labels with -1; initializing labels to -1 differentiates tokens

# with tags from 'PAD' tokens

batch_data = vocab['PAD']*np.ones((len(batch_sentences), batch_max_len))

batch_labels = -1*np.ones((len(batch_sentences), batch_max_len))

# copy the data to the numpy array

for j in range(len(batch_sentences)):

cur_len = len(batch_sentences[j])

batch_data[j][:cur_len] = batch_sentences[j]

batch_labels[j][:cur_len] = batch_tags[j]

# since all data are indices, we convert them to torch LongTensors

batch_data, batch_labels = torch.LongTensor(batch_data), torch.LongTensor(batch_labels)

# convert Tensors to Variables

batch_data, batch_labels = Variable(batch_data), Variable(batch_labels)

- A lot of things happened in the above code. We first calculated the length of the longest sentence in the batch. We then initialized NumPy arrays of dimension

(num_sentences, batch_max_len)for the sentence and labels, and filled them in from the lists. -

Since the values are indices (and not floats), PyTorch’s Embedding layer expects inputs to be of the

Longtype. We hence convert them toLongTensor. -

After filling them in, we observe that the sentences that are shorter than the longest sentence in the batch have the special token

PADto fill in the remaining space. Moreover, thePADtokens, introduced as a result of packaging the sentences in a matrix, are assigned a label of-1. Doing so differentiates them from other tokens that have label indices in the range \(\text{[0, 1, …, NUM_TAGS-1]}\). This will be crucial when we calculate the loss for our model’s prediction, and we’ll come to that in a bit. - In our code, we package the above code in a custom

data_iteratorfunction. Hyperparameters are stored in a data structure called “params”. We can then use the generator as follows:

# train_data contains train_sentences and train_labels

# params contains batch_size

train_iterator = data_iterator(train_data, params, shuffle=True)

for _ in range(num_training_steps):

batch_sentences, batch_labels = next(train_iterator)

# pass through model, perform backpropagation and updates

output_batch = model(train_batch)

...

Recurrent network model

- Now that we have figured out how to load our sentences and tags, let’s have a look at the Recurrent Neural Network model. As mentioned in the section on tensors and variables, we first define the components of our model, followed by its functional form. Let’s have a look at the

__init__function for our model that takes in(batch_size, batch_max_len)dimensional data:

import torch.nn as nn

import torch.nn.functional as F

class Net(nn.Module):

def __init__(self, params):

super(Net, self).__init__()

# maps each token to an embedding_dim vector

self.embedding = nn.Embedding(params.vocab_size, params.embedding_dim)

# the LSTM takens embedded sentence

self.lstm = nn.LSTM(params.embedding_dim, params.lstm_hidden_dim, batch_first=True)

# FC layer transforms the output to give the final output layer

self.fc = nn.Linear(params.lstm_hidden_dim, params.number_of_tags)

- We use an LSTM for the recurrent network. Before running the LSTM, we first transform each word in our sentence to a vector of dimension

embedding_dim. We then run the LSTM over this sentence. Finally, we have a fully connected layer that transforms the output of the LSTM for each token to a distribution over tags. This is implemented in the forward propagation function:

def forward(self, s):

# apply the embedding layer that maps each token to its embedding

s = self.embedding(s) # dim: batch_size x batch_max_len x embedding_dim

# run the LSTM along the sentences of length batch_max_len

s, _ = self.lstm(s) # dim: batch_size x batch_max_len x lstm_hidden_dim

# reshape the Variable so that each row contains one token

s = s.view(-1, s.shape[2]) # dim: batch_size*batch_max_len x lstm_hidden_dim

# apply the fully connected layer and obtain the output for each token

s = self.fc(s) # dim: batch_size*batch_max_len x num_tags

return F.log_softmax(s, dim=1) # dim: batch_size*batch_max_len x num_tags

-

The embedding layer augments an extra dimension to our input which then has shape

(batch_size, batch_max_len, embedding_dim). We run it through the LSTM which gives an output for each token of lengthlstm_hidden_dim. In the next step, we open up the 3D Variable and reshape it such that we get the hidden state for each token, i.e., the new dimension is(batch_size*batch_max_len, lstm_hidden_dim). Here the-1is implicitly inferred to be equal tobatch_size*batch_max_len. The reason behind this reshaping is that the fully connected layer assumes a 2D input, with one example along each row. -

After the reshaping, we apply the fully connected layer which gives a vector of

NUM_TAGSfor each token in each sentence. The output is alog_softmaxover the tags for each token. We uselog_softmaxsince it is numerically more stable than first taking the softmax and then the log. -

All that is left is to compute the loss. But there’s a catch - we can’t use a

torch.nn.lossfunction straight out of the box because that would add the loss from thePADtokens as well. Here’s where the power of PyTorch comes into play - we can write our own custom loss function!

Writing a custom loss function

- In the section on loading data batches, we ensured that the labels for the

PADtokens were set to-1. We can leverage this to filter out thePADtokens when we compute the loss. Let us see how:

def loss_fn(outputs, labels):

# reshape labels to give a flat vector of length batch_size*seq_len

labels = labels.view(-1)

# mask out 'PAD' tokens

mask = (labels >= 0).float()

# the number of tokens is the sum of elements in mask

num_tokens = int(torch.sum(mask).data[0])

# pick the values corresponding to labels and multiply by mask

outputs = outputs[range(outputs.shape[0]), labels]*mask

# cross entropy loss for all non 'PAD' tokens

return -torch.sum(outputs)/num_tokens

-

The input labels has dimension

(batch_size, batch_max_len)while outputs has dimension(batch_size*batch_max_len, NUM_TAGS). We compute a mask using the fact that allPADtokens inlabelshave the value-1. We then compute the Negative Log Likelihood Loss (remember the output from the network is already softmax-ed and log-ed!) for all the nonPADtokens. We can now compute derivates by simply calling.backward()on the loss returned by this function. -

Remember, you can set a breakpoint using

import pdb; pdb.set_trace()at any place in the forward function, loss function or virtually anywhere and examine the dimensions of the Variables, tinker around and diagnose what’s wrong. That’s the beauty of PyTorch :).

Selected methods

- PyTorch provides a host of useful functions for performing computations on arrays. Below, we’ve touched upon some of the most useful ones that you’ll encounter regularly in projects.

- You can find an exhaustive list of mathematical functions in the PyTorch documentation.

Tensor shape/size

- Unlike [NumPy], where

size()returns the total number of elements in the array across all dimensions,size()in PyTorch returns theshapeof an array.

import torch

a = torch.randn(2, 3, 5)

# Get the overall shape of the tensor

a.size() # Prints torch.Size([2, 3, 5])

a.shape # Prints torch.Size([2, 3, 5])

# Get the size of a specific axis/dimension of the tensor

a.size(2) # Prints 5

a.shape[2] # Prints 5

Initialization

- Presented below are some commonly used initialization functions. A full list can be found on the PyTorch documentation’s torch.nn.init page.

Static

torch.nn.init.zeros_()fills the input Tensor with the scalar value 0.torch.nn.init.ones_()fills the input Tensor with the scalar value 1.torch.nn.init.constant_()fills the input Tensor with the passed in scalar value.

import torch.nn as nn

a = torch.empty(3, 5)

nn.init.zeros_(a) # Initializes a with 0

nn.init.ones_(a) # Initializes a with 1

nn.init.constant_(a, 0.3) # Initializes a with 0.3

Standard normal

- Returns a tensor filled with random numbers from a normal distribution with mean 0 and variance 1 (also called the standard normal distribution).

import torch

torch.randn(4) # Returns 4 values from the standard normal distribution

torch.randn(2, 3) # Returns a 2x3 matrix sampled from the standard normal distribution

Xavier/Glorot

Uniform

- Fills the input Tensor with values according to the method described in Understanding the difficulty of training deep feed-forward neural networks - Glorot, X. & Bengio, Y. (2010), using a uniform distribution. The resulting tensor will have values sampled from \(\mathcal{U}(-a, a)\) where,

- Also known as Glorot initialization.

import torch.nn as nn

a = torch.empty(3, 5)

nn.init.xavier_uniform_(a, gain=nn.init.calculate_gain('relu')) # Initializes a with the Xavier uniform method

Normal

- Fills the input Tensor with values according to the method described in Understanding the difficulty of training deep feed-forward neural networks - Glorot, X. & Bengio, Y. (2010), using a normal distribution. The resulting tensor will have values sampled from \(\mathcal{N}(0, \text{std}^2)\) where,

- Also known as Glorot initialization.

import torch.nn as nn

a = torch.empty(3, 5)

nn.init.xavier_normal_(a) # Initializes a with the Xavier normal method

Kaiming/He

Uniform

- Fills the input Tensor with values according to the method described in Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification - He, K. et al. (2015), using a uniform distribution. The resulting tensor will have values sampled from \(\mathcal{U}(-\text{bound}, \text{bound})\) where,

- Also known as He initialization.

import torch.nn as nn

a = torch.empty(3, 5)

nn.init.kaiming_uniform_(a, mode='fan_in', nonlinearity='relu') # Initializes a with the Kaiming uniform method

Normal

- Fills the input Tensor with values according to the method described in Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification - He, K. et al. (2015), using a normal distribution. The resulting tensor will have values sampled from \(\mathcal{N}(0, \text{std}^2)\) where,

- Also known as He initialization.

import torch.nn as nn

a = torch.empty(3, 5)

nn.init.kaiming_normal_(a, mode='fan_out', nonlinearity='relu') # Initializes a with the Kaiming uniform method

Send Tensor to GPU

- To send a tensor (or model) to the GPU, you may use

tensor.cuda()ortensor.to(device):

import torch

t = torch.tensor([1, 2, 3])

a = t.cuda()

type(a) # Prints <class 'numpy.ndarray'>

# Send tensor to the GPU

a = a.cuda()

# Bring the tensor back to the CPU

a = a.cpu()

- Note that there is no difference between the two. Early versions of PyTorch had

tensor.cuda()andtensor.cpu()methods to move tensors and models from CPU to GPU and back. However, this made code writing a bit cumbersome:

if cuda_available:

x = x.cuda()

model.cuda()

else:

x = x.cpu()

model.cpu()

- Later versions of PyTorch introduced

tensor.to()that basically takes care of everything in an elegant way:

device = torch.device('cuda') if cuda_available else torch.device('cpu')

x = x.to(device)

model = model.to(device)

Convert to NumPy

- Both in PyTorch and TensorFlow, the

tensor.numpy()method is pretty much straightforward. It converts a tensor object into annumpy.ndarrayobject. This implicitly means that the converted tensor will be now processed on the CPU.

import torch

t = torch.tensor([1, 2, 3])

a = t.numpy() # array([1, 2, 3])

type(a) # Prints <class 'numpy.ndarray'>

# Send tensor to the GPU.

t = t.cuda()

b = t.cpu().numpy() # array([1, 2, 3])

type(b) # <class 'numpy.ndarray'>

- If you originally created a PyTorch Tensor with

requires_grad=True(note thatrequires_graddefaults toFalse, unless wrapped in ann.Parameter()), you’ll have to usedetach()to get rid of the gradients when sending it downstream for say, post-processing with NumPy, or plotting with Matplotlib/Seaborn. Callingdetach()beforecpu()prevents superfluous gradient copying. This greatly optimizes runtime. Note thatdetach()is not necessary ifrequires_gradis set toFalsewhen defining the tensor.

import torch

t = torch.tensor([1, 2, 3], requires_grad=True)

a = t.detach().numpy() # array([1, 2, 3])

type(a) # Prints <class 'numpy.ndarray'>

# Send tensor to the GPU.

t = t.cuda()

# The output of the line below is a NumPy array.

b = t.detach().cpu().numpy() # array([1, 2, 3])

type(b) # <class 'numpy.ndarray'>

tensor.item(): Convert Single Value Tensor to Scalar

- Returns the value of a tensor as a Python int/float. This only works for tensors with one element. For other cases, see

[tolist()](#tensortolist-convert-multi-value-tensor-to-scalar). - Note that this operation is not differentiable.

import torch

a = torch.tensor([1.0])

a.item() # Prints 1.0

a.tolist() # Prints [1.0]

tensor.tolist(): Convert Multi Value Tensor to Scalar

- Returns the tensor as a (nested) list. For scalars, a standard Python number is returned, just like with

[item()](#tensoritem-convert-single-value-tensor-to-scalar). Tensors are automatically moved to the CPU first if necessary. - Note that this operation is not differentiable.

a = torch.randn(2, 2)

a.tolist() # Prints [[0.012766935862600803, 0.5415473580360413],

# [-0.08909505605697632, 0.7729271650314331]]

a[0,0].tolist() # Prints 0.012766935862600803

Len

len()returns the size of the first dimension of the input tensor, similar to NumPy.

import torch

a = torch.Tensor([[1, 2], [3, 4]])

print(a) # Prints tensor([[1., 2.],

# [3., 4.]])

len(a) # 2

b = torch.Tensor([1, 2, 3, 4])

print(b) # Prints tensor([1., 2., 3., 4.])

len(b) # 4

Arange

- Return evenly spaced values within the half-open interval \([start, stop)\) (in other words, the interval including start but excluding stop).

- For integer arguments the function is equivalent to the Python built-in

rangefunction, but returns antensorrather than a list.

import torch

print(torch.arange(8)) # Prints tensor([0 1 2 3 4 5 6 7])

print(torch.arange(3, 8)) # Prints tensor([3 4 5 6 7])

print(torch.arange(3, 8, 2)) # Prints tensor([3 5 7])

# arange() works with floats too (but read the disclaimer below)

print(torch.arange(0.1, 0.5, 0.1)) # Prints tensor([0.1000, 0.2000, 0.3000, 0.4000])

- When using a non-integer step, such as \(0.1\), the results will often not be consistent. It is better to use

torch.linspace()for those cases as below.

Linspace

- Return evenly spaced numbers calculated over the interval \([start, stop]\).

- Starting PyTorch 1.11,

linspacerequires thestepsargument. Usesteps=100to restore the previous behavior.

import torch

print(torch.linspace(1.0, 2.0, steps=5)) # Prints tensor([1.0000, 1.2500, 1.5000, 1.7500, 2.0000])

View

- Returns a new tensor with the same data as the input tensor but of a different shape.

- For a tensor to be viewed, the following conditions must be satisfied:

- The new view size must be compatible with its original size and stride, i.e., each new view dimension must either be a subspace of an original dimension, or only span across original dimensions.

view()can be only be performed on contiguous tensors (which can be ascertained usingis_contiguous()). Otherwise, a contiguous copy of the tensor (e.g., viacontiguous()) needs to be used. When it is unclear whether aview()can be performed, it is advisable to use (reshape())[#reshape], which returns a view if the shapes are compatible, and copies the tensor (equivalent to calling contiguous()) otherwise.

import torch

a = torch.arange(4).view(2, 2)

print(a.view(4, 1)) # Prints tensor([[0],

# [1],

# [2],

# [3]])

print(a.view(1, 4)) # Prints tensor([[0, 1, 2, 3]])

- Passing in a

-1totorch.view()returns a flattened version of the array.

import torch

a = torch.arange(4).view(2, 2)

print(a.view(-1)) # Prints tensor([0, 1, 2, 3])

- The view tensor shares the same underlying data storage with its base tensor. No data movement occurs when creating a view, view tensor just changes the way it interprets the same data. This avoids explicit data copy, thus allowing fast and memory efficient reshaping, slicing and element-wise operations.

import torch

a = torch.rand(4, 4)

b = a.view(2, 8)

a.storage().data_ptr() == b.storage().data_ptr() # Prints True since `a` and `b` share the same underlying data.

- Note that modifying the view tensor changes the input tensor as well.

import torch

a = torch.rand(4, 4)

b = a.view(2, 8)

b[0][0] = 3.14

print(t[0][0]) # Prints tensor(3.14)

Transpose

- Returns a tensor that is a transposed version of input for 2D tensors. More generally, interchanges two axes of an array. In other words, the given dimensions

dim0anddim1are swapped. - The resulting out tensor shares its underlying storage with the input tensor, so changing the content of one would change the content of the other.

import torch

a = torch.randn(2, 3, 5)

a.size() # Prints torch.Size([2, 3, 5])

a.transpose(0, -1).shape # Prints torch.Size([5, 3, 2])

Swapaxes

- Alias for

torch.transpose(). This function is equivalent to NumPy’sswapaxesfunction.

import torch

a = torch.randn(2, 3, 5)

a.size() # Prints torch.Size([2, 3, 5])

a.swapdims(0, -1).shape # Prints torch.Size([5, 3, 2])

# swapaxes is an alias of swapdims

a.swapaxes(0, -1).shape # Prints torch.Size([5, 3, 2])

Permute

- Returns a view of the input tensor with its axes ordered as indicated in the input argument.

import torch

a = torch.randn(2, 3, 5)

a.size() # Prints torch.Size([2, 3, 5])

a.permute(2, 0, 1).size() # Prints torch.Size([5, 2, 3])

- Note that (i) using

vieworreshapeto restructure the array, and (ii)permuteortransposeto swap axes, can render the same output shape but does not necessarily yield the same tensor in both cases.

a = torch.tensor([[1, 2, 3], [4, 5, 6]])

viewed = a.view(3, 2)

perm = a.permute(1, 0)

viewed.shape # Prints torch.Size([3, 2])

perm.shape # Prints torch.Size([3, 2])

viewed == perm # Prints tensor([[ True, False],

# [False, False],

# [False, True]])

viewed # Prints tensor([[1, 2],

# [3, 4],

# [5, 6]])

perm # Prints tensor([[1, 4],

# [2, 5],

# [3, 6]])

Movedim

- Compared to

torch.permute()for reordering axes which needs positions of all axes to be explicitly specified, moving one axis while keeping the relative positions of all others is a common enough use-case to warrant its own syntactic sugar. This is the functionality that is offered bytorch.movedim().

import torch

a = torch.randn(2, 3, 5)

a.size() # Prints torch.Size([2, 3, 5])

a.movedim(0, -1).shape # Prints torch.Size([3, 5, 2])

# moveaxis is an alias of movedim

a.moveaxis(0, -1).shape # Prints torch.Size([3, 5, 2])

Randperm

- Returns a random permutation of integers from

0ton - 1.

import torch

torch.randperm(n=4) # Prints tensor([2, 1, 0, 3])

- As a practical use-case,

torch.randperm()helps select mini-batches containing data samples randomly as follows:

data[torch.randperm(data.shape[0])] # Assuming the first dimension of data is the minibatch number

Where

- Returns a tensor of elements selected from either

aorb, depending on the outcome of the specified condition. - The operation is defined as:

import torch

a = torch.randn(3, 2) # Initializes a as a 3x2 matrix using the the standard normal distribution

b = torch.ones(3, 2)

>>> a

tensor([[-0.4620, 0.3139],

[ 0.3898, -0.7197],

[ 0.0478, -0.1657]])

>>> torch.where(a > 0, a, b)

tensor([[ 1.0000, 0.3139],

[ 0.3898, 1.0000],

[ 0.0478, 1.0000]])

>>> a = torch.randn(2, 2, dtype=torch.double)

>>> a

tensor([[ 1.0779, 0.0383],

[-0.8785, -1.1089]], dtype=torch.float64)

>>> torch.where(a > 0, a, 0.)

tensor([[1.0779, 0.0383],

[0.0000, 0.0000]], dtype=torch.float64)

Reshape

- Returns a tensor with the same data and number of elements as the input, but with the specified shape. When possible, the returned tensor will be a view of the input. Otherwise, it will be a copy. Contiguous inputs and inputs with compatible strides can be reshaped without copying, but you should not depend on the copying vs. viewing behavior. It means that

torch.reshapemay return a copy or a view of the original tensor. - A single dimension may be

-1, in which case it’s inferred from the remaining dimensions and the number of elements in input.

import torch

a = torch.arange(4*10*2).view(4, 10, 2)

b = x.permute(2, 0, 1)

# Reshape works on non-contiguous tensors (contiguous() + view())

print(b.is_contiguous())

try:

print(b.view(-1))

except RuntimeError as e:

print(e)

print(b.reshape(-1))

print(b.contiguous().view(-1))

- While

torch.view()has existed for a long time,torch.reshape()has been recently introduced in PyTorch 0.4. When it is unclear whether aview()can be performed, it is advisable to usereshape(), which returns a view if the shapes are compatible, and copies (equivalent to callingcontiguous()) otherwise.

Concatenate

- Concatenates the input sequence of tensors in the given dimension. All tensors must either have the same shape (except in the concatenating dimension) or be empty.

import torch

x = torch.randn(2, 3)

print(x) # Prints a 2x3 matrix: [[ 0.6580, -1.0969, -0.4614],

# [-0.1034, -0.5790, 0.1497]]

print(torch.cat((x, x, x), 0)) # Prints a 6x3 matrix: [[ 0.6580, -1.0969, -0.4614],

# [-0.1034, -0.5790, 0.1497],

# [ 0.6580, -1.0969, -0.4614],

# [-0.1034, -0.5790, 0.1497],

# [ 0.6580, -1.0969, -0.4614],

# [-0.1034, -0.5790, 0.1497]]

print(torch.cat((x, x, x), 1)) # Prints a 2x9 matrix: [[ 0.6580, -1.0969, -0.4614,

# 0.6580, -1.0969, -0.4614,

# 0.6580, -1.0969, -0.4614],

# [-0.1034, -0.5790, 0.1497,

# -0.1034, -0.5790, 0.1497,

# -0.1034, -0.5790, 0.1497]]

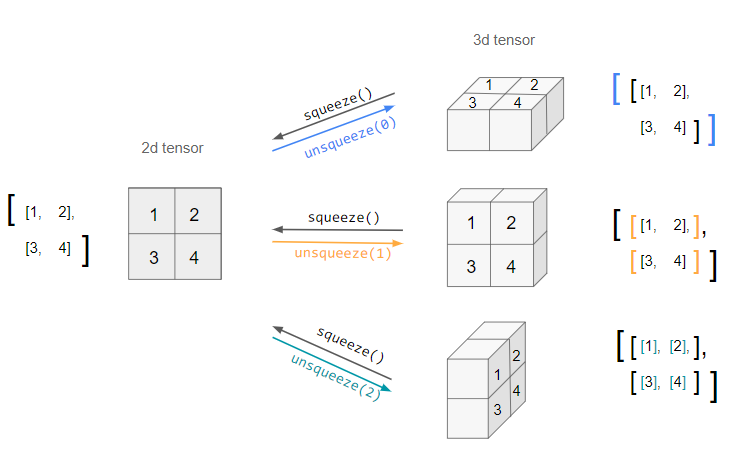

Squeeze

-

Similar to NumPy’s

np.squeeze(),torch.squeeze()removes all dimensions with size one from the input tensor. The returned tensor shares the same underlying data with this tensor. -

For example, if the input is of shape: \((A \times 1 \times B \times C \times 1 \times D)\) then the output tensor will be of shape: \((A \times B \times C \times D)\).

-

When an optional

dimargument is given totorch.squeeze(), a squeeze operation is done only in the given dimension. If the input is of shape: \((A \times 1 \times B)\),torch.squeeze(input, 0)leaves the tensor unchanged, buttorch.squeeze(input, 1)will squeeze the tensor to the shape \((A \times B)\). -

An important bit to note is that if the tensor has a batch dimension of size 1, then

torch.squeeze()will also remove the batch dimension, which can lead to unexpected errors. -

Here is a visual representation of what

torch.squeeze()andtorch.unsqueeze()do for a 2D matrix:

import torch

a = torch.zeros(2, 1, 2, 1, 2)

print(a.size()) # Prints torch.Size([2, 1, 2, 1, 2])

b = torch.squeeze(a)

print(b.size()) # Prints torch.Size([2, 2, 2])

b = torch.squeeze(a, 0)

print(b.size()) # Prints torch.Size([2, 1, 2, 1, 2])

b = torch.squeeze(a, 1)

print(b.size()) # Prints torch.Size([2, 2, 1, 2])

Unsqueeze

-

torch.unsqueeze()is the opposite oftorch.squeeze(). It inserts a dimension of size one at the specified position. The returned tensor shares the same underlying data with this tensor. -

A

dimargument within the range[-input.dim() - 1, input.dim() + 1)can be used. A negative value ofdimwill correspond totorch.unsqueeze()applied atdim = dim + input.dim() + 1.

import torch

a = torch.tensor([1, 2, 3, 4])

print(x.size()) # Prints torch.Size([4])

b = torch.unsqueeze(a, 0)

print(b) # Prints tensor([[1, 2, 3, 4]])

print(b.size()) # Prints torch.Size([1, 4])

b = torch.unsqueeze(a, 1)

print(b) # Prints tensor([[1],

# [2],

# [3],

# [4]])

print(b.size()) # torch.Size([4, 1])

-

Note that unlike

torch.squeeze(), thedimargument is required (and not optional) withtorch.unsqueeze(). -

A practical use-case of

torch.unsqueeze()is to add an additional dimension (usually the first dimension) for the batch number as shown in the example below:

import torch

# 3 channels, 32 width, 32 height

a = torch.randn(3, 32, 32)

# 1 batch, 3 channels, 32 width, 32 height

a.unsqueeze(dim=0).shape

Print Model Summary

- Printing the model prints a summary of the model including the different layers involved and their specifications.

from torchvision import models

model = models.vgg16()

print(model)

- The output in this case would be something as follows:

VGG (

(features): Sequential (

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU (inplace)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU (inplace)

(4): MaxPool2d (size=(2, 2), stride=(2, 2), dilation=(1, 1))

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU (inplace)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU (inplace)

(9): MaxPool2d (size=(2, 2), stride=(2, 2), dilation=(1, 1))

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU (inplace)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU (inplace)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU (inplace)

(16): MaxPool2d (size=(2, 2), stride=(2, 2), dilation=(1, 1))

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU (inplace)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU (inplace)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU (inplace)

(23): MaxPool2d (size=(2, 2), stride=(2, 2), dilation=(1, 1))

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU (inplace)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU (inplace)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU (inplace)

(30): MaxPool2d (size=(2, 2), stride=(2, 2), dilation=(1, 1))

)

(classifier): Sequential (

(0): Dropout (p = 0.5)

(1): Linear (25088 -> 4096)

(2): ReLU (inplace)

(3): Dropout (p = 0.5)

(4): Linear (4096 -> 4096)

(5): ReLU (inplace)

(6): Linear (4096 -> 1000)

)

)

- To get the representation tf.keras offers, use the

pytorch-summarypackage. This contains a lot more details of the model, including:- Name and type of all layers in the model.

- Output shape for each layer.

- Number of weight parameters of each layer.

- The total number of trainable and non-trainable parameters of the model.

- In addition, also offers the following bits not in the Keras summary:

- Input size (MB)

- Forward/backward pass size (MB)

- Params size (MB)

- Estimated Total Size (MB)

from torchvision import models

from torchsummary import summary

# Example for VGG16

vgg = models.vgg16()

summary(vgg, (3, 224, 224))

- The output in this case would be something as follows:

================================================================

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 224, 224] 1,792

ReLU-2 [-1, 64, 224, 224] 0

Conv2d-3 [-1, 64, 224, 224] 36,928

ReLU-4 [-1, 64, 224, 224] 0

MaxPool2d-5 [-1, 64, 112, 112] 0

Conv2d-6 [-1, 128, 112, 112] 73,856

ReLU-7 [-1, 128, 112, 112] 0

Conv2d-8 [-1, 128, 112, 112] 147,584

ReLU-9 [-1, 128, 112, 112] 0

MaxPool2d-10 [-1, 128, 56, 56] 0

Conv2d-11 [-1, 256, 56, 56] 295,168

ReLU-12 [-1, 256, 56, 56] 0

Conv2d-13 [-1, 256, 56, 56] 590,080

ReLU-14 [-1, 256, 56, 56] 0

Conv2d-15 [-1, 256, 56, 56] 590,080

ReLU-16 [-1, 256, 56, 56] 0

MaxPool2d-17 [-1, 256, 28, 28] 0

Conv2d-18 [-1, 512, 28, 28] 1,180,160

ReLU-19 [-1, 512, 28, 28] 0

Conv2d-20 [-1, 512, 28, 28] 2,359,808

ReLU-21 [-1, 512, 28, 28] 0

Conv2d-22 [-1, 512, 28, 28] 2,359,808

ReLU-23 [-1, 512, 28, 28] 0

MaxPool2d-24 [-1, 512, 14, 14] 0

Conv2d-25 [-1, 512, 14, 14] 2,359,808

ReLU-26 [-1, 512, 14, 14] 0

Conv2d-27 [-1, 512, 14, 14] 2,359,808

ReLU-28 [-1, 512, 14, 14] 0

Conv2d-29 [-1, 512, 14, 14] 2,359,808

ReLU-30 [-1, 512, 14, 14] 0

MaxPool2d-31 [-1, 512, 7, 7] 0

Linear-32 [-1, 4096] 102,764,544

ReLU-33 [-1, 4096] 0

Dropout-34 [-1, 4096] 0

Linear-35 [-1, 4096] 16,781,312

ReLU-36 [-1, 4096] 0

Dropout-37 [-1, 4096] 0

Linear-38 [-1, 1000] 4,097,000

================================================================

Total params: 138,357,544

Trainable params: 138,357,544

Non-trainable params: 0

-

Input size (MB): 0.57

Forward/backward pass size (MB): 218.59

Params size (MB): 527.79

Estimated Total Size (MB): 746.96

-

Got it. Below is the same section, but with inline end-of-line comments (aligned and indented in the same style as the rest of the primer), instead of block comments.

You can drop this directly into the document.

Keepdim (Keeping Reduced Dimensions)

-

While not a PyTorch function,

keepdimis an argument commonly used across many PyTorch reduction operations (e.g.,sum,mean,max,min,argmax) reduce the dimensionality of a tensor by default. Settingkeepdim=Truepreserves the reduced dimension with size 1 instead of removing it. -

This is especially important when:

- You want consistent tensor ranks throughout a pipeline

- You rely on broadcasting in later operations

- You want to avoid manual reshaping with

unsqueeze() - You compute batch-wise statistics, normalization, masking, or attention scores

-

By default, reduced dimensions are removed. Using

keepdim=Truekeeps those dimensions as size-1 axes, preventing shape mismatches and making downstream code simpler and more readable.

Basic Syntax

torch.sum(input, dim=..., keepdim=True)

torch.mean(input, dim=..., keepdim=True)

torch.max(input, dim=..., keepdim=True)

Example 1: Sum With and Without keepdim

import torch

x = torch.tensor([[1., 2., 3.],

[4., 5., 6.]]) # tensor([[1., 2., 3.],

# [4., 5., 6.]])

s1 = torch.sum(x, dim=1) # tensor([ 6., 15.])

s1.shape # torch.Size([2])

s2 = torch.sum(x, dim=1, keepdim=True) # tensor([[ 6.],

# [15.]])

s2.shape # torch.Size([2, 1])

-

Interpretation:

keepdim=False\(\rightarrow\) shape(2)keepdim=True\(\rightarrow\) shape(2, 1)

Example 2: Mean and Broadcasting

x = torch.randn(4, 5) # shape: torch.Size([4, 5])

mean_no_keep = x.mean(dim=1) # shape: torch.Size([4])

mean_keep = x.mean(dim=1, keepdim=True) # shape: torch.Size([4, 1])

y1 = x - mean_no_keep # RuntimeError (shape mismatch)

y2 = x - mean_keep # shape: torch.Size([4, 5])

-

Why this works:

(4,5) - (4,)cannot broadcast(4,5) - (4,1)broadcasts across columns

Example 3: Max Values and Indices

x = torch.tensor([[1., 7., 3.],

[4., 2., 9.]]) # tensor([[1., 7., 3.],

# [4., 2., 9.]])

values, indices = torch.max(x, dim=1, keepdim=True)

values # tensor([[7.],

# [9.]])

indices # tensor([[1],

# [2]])

- Both outputs preserve the reduced dimension.

Example 4: keepdim vs. unsqueeze

a = x.sum(dim=1, keepdim=True) # shape: torch.Size([2, 1])

b = x.sum(dim=1).unsqueeze(1) # shape: torch.Size([2, 1])

- Both are equivalent, but

keepdim=Trueis shorter and clearer.

End-to-End Data to Model Pipeline

-

Modern machine learning systems rely on a carefully orchestrated sequence of stages that transform raw, unstructured data into trained, deployable models capable of inference and decision-making. This end-to-end data-to-model pipeline is the backbone of both research experimentation and production-scale AI systems. It ensures that data is consistently prepared, models are trained reproducibly, and evaluations are conducted rigorously across multiple iterations of experimentation.

-

At a high level, the pipeline consists of five interconnected stages:

- Data Pre-processing: cleaning, transforming, and structuring raw inputs into a model-ready format.

- Model Definition and Architecture Design: implementing the neural network or algorithm that will learn from the data.

- Training Loop and Optimization: defining how the model learns via loss computation, backpropagation, and gradient updates.

- Evaluation and Validation: measuring model performance using appropriate metrics and datasets.

- Deployment and Monitoring: serving trained models in production and tracking their behavior over time.

-

Each component in this chain depends on the quality and reproducibility of the previous step. For example, inconsistencies in preprocessing—such as incorrect normalization or tokenization—can lead to unstable training or biased model behavior downstream. Conversely, well-engineered preprocessing pipelines can significantly accelerate convergence and improve generalization.

-

In practice, PyTorch provides a flexible ecosystem that supports every stage of this workflow. It offers modular abstractions for dataset handling (

torch.utils.data), model construction (torch.nn.Module), training orchestration (torch.optim,torch.autograd), and evaluation tools. Together, these modules enable practitioners to prototype and scale ML pipelines efficiently, from experimental notebooks to distributed training environments. -

The first and most foundational stage—data pre-processing—lays the groundwork for all subsequent steps. It determines how efficiently the model can learn meaningful patterns from input data. The following section explores this phase in depth, detailing the principles, abstractions, and best practices for pre-processing both NLP, vision, and audio datasets in PyTorch.

Data Pre-processing

Overview

-

Data pre-processing is the first and most critical step in the deep learning pipeline. In PyTorch, pre-processing prepares raw input (images, text, tabular, or multimodal) into a form suitable for tensors, which are the basic data units that neural networks operate on.

-

Poorly pre-processed data often leads to:

- Slower convergence or failure to converge.

- Model overfitting or underfitting.

- Poor generalization.

-

The pre-processing stage differs for images, text, and audio, but shares the same conceptual flow:

- Loading raw data from disk or external sources.

- Cleaning — handling missing, noisy, or invalid samples.

- Transformation — resizing, normalization, encoding, or augmentation.

- Conversion to tensors compatible with the model.

- Batch preparation for efficient GPU computation.

Key Abstractions for Pre-processing

torch.utils.data.Dataset

- Abstract class representing a dataset.

-

You subclass it and override:

__len__: returns the number of samples.__getitem__: returns a single data sample.

torchtext.transforms

-

Purpose: Provides composable, production-ready text pre-processing utilities that convert raw textual data into tensor representations for NLP models.

-

Key capabilities include:

-

Tokenization and normalization:

- Split raw text into tokens using pretrained tokenizers (e.g., BERT, GPT2) or custom tokenization pipelines.

- Case normalization, punctuation removal, and optional subword encoding.

-

Vocabulary and numericalization:

VocabTransformmaps tokens to integer IDs using a defined vocabulary.- Supports handling of unknown (

<unk>) and padding (<pad>) tokens. - Facilitates training of embeddings and transformer-based models.

-

Padding and sequence management:

Truncate,PadTransform, andToTensorstandardize sequence lengths for batching.- Enables easy collation using

torchtext.functional.to_tensor()or custom collate functions.

-

Composable transform chains:

- Similar to

torchvision, multiple text transformations can be chained usingtorchtext.transforms.Sequential.

- Similar to

-

-

Example:

from torchtext import transforms

from torchtext.vocab import build_vocab_from_iterator

# Custom augmentation functions

class RandomWordDropout:

"""Randomly drops words with a given probability."""

def __init__(self, p=0.1):

self.p = p

def __call__(self, tokens):

return [tok for tok in tokens if random.random() > self.p]

class SynonymReplacement:

"""Simple synonym replacement using a lookup dictionary."""

def __init__(self, synonym_dict, p=0.1):

self.synonym_dict = synonym_dict

self.p = p

def __call__(self, tokens):

augmented = []

for tok in tokens:

if tok in self.synonym_dict and random.random() < self.p:

augmented.append(random.choice(self.synonym_dict[tok]))

else:

augmented.append(tok)

return augmented

# Example synonym mapping

synonyms = {

"good": ["great", "excellent", "nice"],

"bad": ["terrible", "awful", "poor"]

}

# Suppose 'vocab' is built from your corpus

vocab = build_vocab_from_iterator([["this", "is", "a", "good", "sample"]], specials=["<unk>", "<pad>"])

vocab.set_default_index(vocab["<unk>"])

text_transform = transforms.Sequential(

# Step 1: Randomly replace words with synonyms (30% probability)

SynonymReplacement(synonyms, p=0.3),

# Step 2: Randomly drop words from the sequence (20% probability)

RandomWordDropout(p=0.2),

# Step 3: Convert tokens into integer IDs using the predefined vocabulary

transforms.VocabTransform(vocab),

# Step 4: Truncate sequences longer than 512 tokens to a fixed maximum length

transforms.Truncate(512),

# Step 5: Convert the list of token IDs into a tensor and pad to uniform length

transforms.ToTensor(padding_value=vocab['<pad>'])

)

tokens = ["this", "is", "a", "sample"]

tensorized = text_transform(tokens)

-

Integration with data pipeline:

- Used in NLP datasets like IMDB, AG News, or custom text corpora.

- Can be combined with

torch.utils.data.Datasetfor tokenized text pipelines and efficient batch collation. - Outputs tensors compatible with RNNs, CNNs, and Transformer architectures.

torchvision.transforms

-

Purpose: Handles image and video transformations efficiently, both for data normalization and augmentation during training. It provides a powerful composition framework for chaining multiple transformations using

transforms.Compose. -

Key capabilities include:

-

Geometric transformations:

Resize,CenterCrop,RandomCrop,RandomHorizontalFlip,Rotate, etc.- Commonly used for resizing images to match model input size and augmenting data variability.

-

Color and intensity transformations:

Normalize,ColorJitter,Grayscale,RandomAdjustSharpness, etc.- Used to adjust brightness, contrast, saturation, and sharpness for robustness.

-

Tensor conversion and PIL integration:

ToTensor()converts a PIL image or NumPy array to a normalized PyTorch tensor (values scaled to [0, 1]).ToPILImage()allows converting back to image format for visualization.

-

Augmentation pipelines:

- Supports stochastic transformations during training for better generalization.

RandomApplyandRandomChoicecan randomize transform sequences.

-

Video support:

- Extended transforms for temporal augmentation (

RandomResizedCropVideo,NormalizeVideo, etc.) intorchvision.transforms._transforms_video.

- Extended transforms for temporal augmentation (

-

-

Example:

from torchvision import transforms

image_transform = transforms.Compose([

# Step 1: Resize the input image so the shorter side is 256 pixels

transforms.Resize(256),

# Step 2: Crop the central 224×224 region from the resized image

transforms.CenterCrop(224),

# Step 3: Randomly flip the image horizontally (helps with data augmentation)

transforms.RandomHorizontalFlip(),

# Step 4: Convert the PIL image to a PyTorch tensor and scale pixel values to [0, 1]

transforms.ToTensor(),

# Step 5: Normalize tensor using ImageNet channel means and standard deviations

# (this standardization helps models pretrained on ImageNet converge better)

transforms.Normalize(mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225])

])

-

Integration with data pipeline:

- Used inside the

__getitem__method of aDatasetsubclass to ensure each image is transformed at load time. - The transformed tensors can then be directly batched using

torch.utils.data.DataLoaderfor efficient GPU training.

- Used inside the

torchaudio.transforms

-

Purpose: Provides transformations and utilities for audio signal processing, converting raw waveforms into representations suitable for neural networks.

-

Key capabilities include:

-

Waveform transformations:

Resample,Vol,TimeStretch,PitchShift, etc.- Used to adjust sampling rates, tempo, and pitch or to augment data diversity.

-

Spectrogram and feature extraction:

Spectrogram,MelSpectrogram,MFCC,AmplitudeToDB.- Convert time-domain waveforms into frequency-domain features.

- Common in ASR (Automatic Speech Recognition) and music analysis.

-

Augmentation and masking:

FrequencyMasking,TimeMaskinghelp simulate noise and missing information for better generalization.- Especially beneficial in low-resource audio tasks.

-

I/O integration:

torchaudio.load()reads and returns(waveform, sample_rate)directly as tensors.- Supports a variety of audio formats (WAV, MP3, FLAC, etc.).

-

-

Example:

import torchaudio

from torchaudio import transforms as T

waveform, sample_rate = torchaudio.load("speech.wav")

audio_transform = T.Compose([

# Step 1: Resample the raw audio waveform to a consistent 16 kHz sampling rate

T.Resample(orig_freq=sample_rate, new_freq=16000),

# Step 2: Convert the waveform into a Mel-spectrogram (frequency–time representation)

# Uses 64 Mel filter banks to capture perceptually relevant frequency information

T.MelSpectrogram(sample_rate=16000, n_mels=64),

# Step 3: Apply frequency masking (randomly masks frequency bands)

# Helps the model generalize to variations in spectral features

T.FrequencyMasking(freq_mask_param=15),

# Step 4: Apply time masking (randomly masks time segments)

# Improves robustness to temporal distortions or missing frames

T.TimeMasking(time_mask_param=35),

# Step 5: Convert the Mel-spectrogram power values to decibel (dB) scale

# Produces log-scaled features commonly used in speech and audio models

T.AmplitudeToDB()

])

mel_spectrogram = audio_transform(waveform)

-

Integration with training pipeline:

- The transformed tensor (

mel_spectrogram) can be directly used as input for CNN or Transformer-based models. - Seamlessly integrates with

torch.utils.data.Datasetfor dynamic, on-the-fly augmentation during data loading. - Suitable for tasks such as speech recognition, audio classification, or speaker verification.

- The transformed tensor (

torch.utils.data.DataLoader

-

Takes a

Datasetand handles:- Batching

- Shuffling

- Parallel loading using

num_workers

-

Essential for GPU efficiency (avoids CPU bottlenecks).

Pre-processing for Vision Data

Loading and Cleaning

- Image data are typically in JPEG or PNG format.

-

Cleaning includes:

- Removing corrupt files.

- Ensuring consistent channels (e.g., converting grayscale to RGB).

- Resizing to a common resolution (e.g., 224×224).

Normalization

- Neural networks perform best when inputs are normalized to zero mean and unit variance.

-

For an image tensor \(I\) with pixel values in \([0, 1]\):

\[I' = \frac{I - \mu}{\sigma}\]- where \(\mu\) and \(\sigma\) are per-channel means and standard deviations.

- Typical ImageNet normalization constants:

mean = [0.485, 0.456, 0.406]

std = [0.229, 0.224, 0.225]

Data Augmentation

-

Used to increase the diversity of training examples and reduce overfitting.

-

Common augmentations:

RandomResizedCropColorJitterRandomHorizontalFlipRandomRotationRandomErasing

-

See torchvision.transforms documentation for the full list.

Conversion to Tensor

- Convert PIL images to PyTorch tensors in \([C, H, W]\) format.

- Scale pixel values to \([0, 1]\) using

ToTensor().

Pre-processing for Text Data

Tokenization

- Tokenization splits raw text into tokens.

-

Modern NLP pipelines often use subword tokenization methods, such as:

- Example sentence:

“PyTorch is awesome!”

\(\rightarrow\) Tokens:

["pytorch", "is", "awesome", "!"]

Vocabulary Building

-

Each unique token gets mapped to an integer ID.

-

Example:

{'<PAD>':0, '<UNK>':1, 'pytorch':2, 'is':3, 'awesome':4, '!':5}

Handling Variable-Length Sequences

-

Different sentences have different lengths — we pad or truncate to a fixed length \(L\).

- If sentence length < \(L\) \(\rightarrow\) pad with

<PAD>tokens. - If sentence length > \(L\) \(\rightarrow\) truncate.

- If sentence length < \(L\) \(\rightarrow\) pad with

Embedding Lookup

-

Each token index is converted into a dense vector representation:

\[x_i = E[w_i]\]- where \(E\) is the embedding matrix and \(w_i\) is the token index.

-

This is handled in PyTorch with:

nn.Embedding(num_embeddings, embedding_dim)

Masking

- A mask tensor marks which tokens are padding. This ensures that padding tokens don’t contribute to loss or attention weights during training.

Pre-processing for Audio Data

Loading and Cleaning

-

Audio data are typically stored in WAV, MP3, or FLAC formats.

-

PyTorch provides

torchaudio.load()for direct reading of these files, returning a tuple(waveform, sample_rate)where the waveform is a tensor of shape[channels, time]. -

Common cleaning steps include:

- Removing or skipping corrupted audio files.

- Resampling all clips to a consistent sample rate (e.g., 16 kHz) for uniformity.

- Converting stereo audio to mono when multi-channel information is not required.

- Trimming or padding clips to a fixed duration for batch processing.

import torchaudio

waveform, sample_rate = torchaudio.load("speech.wav")

waveform = torchaudio.functional.resample(waveform, orig_freq=sample_rate, new_freq=16000)

Normalization (Standardization)

-

Normalization ensures that the waveform amplitude is within a suitable numeric range for stable learning. A common approach, known as standardization, rescales the waveform to have zero mean and unit variance, as given by

\[x' = \frac{x - \mu}{\sigma}\]- where \(\mu\) and \(\sigma\) are the mean and standard deviation of the waveform.

- This process ensures that all samples contribute equally during training.

-

Alternatively, amplitude can be normalized by dividing by the maximum absolute value to fit within \([-1, 1]\), which preserves the waveform’s relative shape but constrains its dynamic range.

-

After transformation into spectrograms, normalization is often applied in decibel space using:

T.AmplitudeToDB()

Feature Extraction

-

Raw waveforms are not directly suitable for many deep learning models. They are typically converted into time–frequency representations that capture spectral features.

-

Common feature transforms:

Spectrogram: Converts waveform to magnitude-frequency domain.MelSpectrogram: Maps frequencies onto the Mel scale for perceptual alignment.MFCC: Computes Mel-Frequency Cepstral Coefficients, widely used in speech recognition.DeltaandDeltaDelta: Compute first and second derivatives to capture temporal change.

from torchaudio import transforms as T

audio_transform = T.MelSpectrogram(

sample_rate=16000,

n_mels=64,

n_fft=1024,

hop_length=256

)

mel_spectrogram = audio_transform(waveform)

Data Augmentation

-

Audio augmentation increases data diversity and improves generalization under noisy or varied recording conditions.

-

Common augmentations include:

-

SpecAugment techniques:

FrequencyMasking(freq_mask_param=15)— randomly masks frequency bands.TimeMasking(time_mask_param=35)— randomly masks time intervals.

-

Temporal transforms:

TimeStretch— changes speed without affecting pitch.PitchShift— alters pitch while preserving duration.

-

Amplitude transforms:

Vol— adjusts loudness levels randomly.

-

-

Example:

from torchaudio import transforms as T

augment = T.Compose([

T.FrequencyMasking(freq_mask_param=15),

T.TimeMasking(time_mask_param=35),

T.Vol(gain=(0.5, 1.5))

])

augmented_spectrogram = augment(mel_spectrogram)

Conversion to Tensor and Batching

-

Audio tensors are already returned in PyTorch’s tensor format by

torchaudio.load(). -

For batch processing, shorter clips are padded and longer clips truncated to maintain consistent time dimensions.

-

Batched tensors follow shape

[batch_size, channels, time]for waveform inputs or[batch_size, channels, freq, time]for spectrograms. -

Padding can be applied using:

torch.nn.utils.rnn.pad_sequence(list_of_waveforms, batch_first=True)

Integration with Models

-

After pre-processing, tensors can be fed into:

- CNNs (for spectrogram-based classification).

- RNNs or Transformers (for speech recognition).

- Pretrained audio encoders such as Wav2Vec2, HuBERT, or Whisper.

Data Quality Checks

-

Before proceeding to model training:

- Verify dataset split integrity (train/val/test non-overlap).

- Visualize samples to catch label mismatches.

- Ensure normalization and padding/truncation logic works as expected.

- Detect data imbalance and decide on resampling or weighted losses.

-

A detailed discourse on data quality can be found in our Data Quality/Filtering primer.

Tabular Summary

| Modality | Key Steps | Common Transforms | Notes |

|---|---|---|---|

| Text | Tokenize $$\rightarrow$$ Augment $$\rightarrow$$ Numericalize $$\rightarrow$$ Pad $$\rightarrow$$ Mask | SynonymReplacement, RandomWordDropout, NoiseInjection, VocabTransform, Truncate, PadTransform |

Apply augmentation before numericalization; handle OOVs with <UNK> |

| Vision | Resize $$\rightarrow$$ Normalize $$\rightarrow$$ Augment $$\rightarrow$$ Tensor | Resize, Normalize, ToTensor, RandomCrop |

Use per-channel normalization |

| Audio | Load $$\rightarrow$$ Resample $$\rightarrow$$ Feature Extract $$\rightarrow$$ Augment $$\rightarrow$$ Normalize | MelSpectrogram, AmplitudeToDB, FrequencyMasking, TimeMasking |

Ensure consistent sampling rate and duration |

| Shared | Cleaning, batching, shuffling | Dataset, DataLoader |

Use multiprocessing in DataLoader |

FAQs

- Why do we normalize image data, and why are the ImageNet mean/std values often used as defaults?

- Normalization stabilizes learning by ensuring all features have similar dynamic ranges. ImageNet’s statistics are widely used because many pretrained models are trained on ImageNet, and maintaining the same normalization helps when fine-tuning those models.

- What happens if you skip padding for NLP data?

- Batches cannot be represented as tensors since tensors require fixed dimensions. Without padding, PyTorch cannot stack sentences of different lengths into a batch tensor.

- Why do we use subword tokenization instead of simple whitespace tokenization?

- Subword tokenization handles out-of-vocabulary (OOV) words and rare word morphologies better. It splits unknown or compound words into smaller known units, improving generalization.

- Why is data augmentation not applied during evaluation?

- Augmentation introduces randomness intended for training robustness. During evaluation, we need deterministic, unaltered inputs to measure model performance consistently.

References and Further Reading

- PyTorch official docs: torch.utils.data

- torchtext.transforms

- torchvision.transforms

- torchaudio.transforms documentation

- SpecAugment: A Simple Data Augmentation Method for Speech Recognition (Park et al., 2019)

- Wav2Vec 2.0: A Framework for Self-Supervised Learning of Speech Representations (Baevski et al., 2020)

- A Guide to PyTorch Data Loading

- Understanding WordPiece and BPE Tokenization

- BPE by Sennrich et al. (2016)

- SentencePiece by Kudo & Richardson (2018)

Practical Implementation – Data Pre-processing

- This section shows two fully worked examples: one for NLP and one for Vision, followed by a FAQs section for conceptual discussion.

NLP Data Pre-processing – Text Classification Example

- Below is a simple pre-processing pipeline for a text classification dataset using

torchtext.

import torch

from torch.utils.data import Dataset, DataLoader

from torchtext.vocab import build_vocab_from_iterator

from torch.nn.utils.rnn import pad_sequence

import re

# ----------------------------------------------------------

# 1. Tokenizer

# ----------------------------------------------------------

# - Purpose: Convert raw text into a list of clean tokens (words).

# - Steps:

# * Convert text to lowercase for uniformity.

# * Remove all punctuation and special characters using regex.

# * Split text into individual word tokens (by whitespace).

def tokenize(text):

text = re.sub(r"[^a-zA-Z0-9\s]", "", text.lower())

return text.split()

# ----------------------------------------------------------

# 2. Sample corpus

# ----------------------------------------------------------

# - Example dataset for binary classification (e.g., sentiment, relevance).

# - Each text string corresponds to one data sample, with label 0 or 1.

texts = [

"Large Language Models are powerful AI models",

"Deep learning is transformational",

"Neural networks can generalize",

"PyTorch simplifies model training"

]

labels = [1, 0, 1, 0]

# ----------------------------------------------------------

# 3. Build vocabulary

# ----------------------------------------------------------

# - The vocabulary assigns each unique token an integer index.

# - This is critical for converting tokens into numerical form that models can process.

# - The special tokens:

# * <pad>: used for sequence padding (makes sequences same length in a batch).

# * <unk>: represents out-of-vocabulary tokens (unknown words).

vocab = build_vocab_from_iterator(map(tokenize, texts), specials=["<pad>", "<unk>"])

vocab.set_default_index(vocab["<unk>"]) # All unseen tokens map to <unk>

# ----------------------------------------------------------

# 4. Numericalization

# ----------------------------------------------------------

# - Converts a list of string tokens into a tensor of integer IDs using the vocabulary.

# - Example:

# Input: ["deep", "learning", "is", "transformational"]

# Output: tensor([5, 9, 3, 8]) # based on vocab indices

def numericalize(text):

return torch.tensor([vocab[token] for token in tokenize(text)], dtype=torch.long)

# ----------------------------------------------------------

# 5. Dataset definition

# ----------------------------------------------------------

# - Custom Dataset class wraps the tokenized text and corresponding labels.

# - Required methods:

# * __getitem__(self, idx): returns one sample (text tensor, label tensor).

# * __len__(self): returns total number of samples.

class TextDataset(Dataset):

def __init__(self, texts, labels):

self.texts = texts

self.labels = labels

def __getitem__(self, idx):

# Returns a tuple: (numericalized text tensor, label tensor)

return numericalize(self.texts[idx]), torch.tensor(self.labels[idx])

def __len__(self):

return len(self.texts)

# Instantiate dataset object

dataset = TextDataset(texts, labels)

# ----------------------------------------------------------

# 6. Collate function for padding

# ----------------------------------------------------------

# - Purpose: dynamically pad variable-length text sequences in a batch.

# - pad_sequence: ensures all sequences in the batch are the same length.

# - padding_value: index corresponding to <pad> token in the vocabulary.

def collate_fn(batch):

# Unzip the batch (list of (text, label) pairs) into separate sequences and labels

texts, labels = zip(*batch)

# Pad sequences so all have equal length (batch_first=True $$\rightarrow$$ shape [batch, seq_len])

padded = pad_sequence(texts, batch_first=True, padding_value=vocab["<pad>"])

# Stack labels into a single tensor

return padded, torch.stack(labels)

# ----------------------------------------------------------

# 7. DataLoader

# ----------------------------------------------------------

# - Combines dataset and collate function to create mini-batches.

# - Handles:

# * Shuffling for random sampling each epoch.

# * Batch creation.

# * Optional parallel data loading via num_workers.

loader = DataLoader(dataset, batch_size=2, collate_fn=collate_fn, shuffle=True)

# ----------------------------------------------------------

# 8. Inspect one mini-batch

# ----------------------------------------------------------

# - Demonstrates the final structure after pre-processing.

# - Each batch has:

# * x_batch: tensor of shape [batch_size, sequence_length]

# * y_batch: tensor of shape [batch_size]

for x_batch, y_batch in loader:

print(f"Input batch shape: {x_batch.shape}") # e.g., (2, seq_len)

print(f"Label batch shape: {y_batch.shape}") # e.g., (2,)

break

# Can also do the following in place of the above block:

# ----------------------------------------------------------

# 8. Inspect one mini-batch (using next(iter(loader)))

# ----------------------------------------------------------

# - Demonstrates how to manually fetch a single batch from a DataLoader.

# - This approach is equivalent to running one iteration of the loop.

# - Useful for debugging or inspecting batch structure and tensor shapes using one batch only.

# Create an iterator over the DataLoader

# batch_iter = iter(loader)

# Fetch the first batch (x_batch, y_batch)

# x_batch, y_batch = next(batch_iter)

# Inspect the shapes of tensors

# print(f"Input batch shape: {x_batch.shape}") # e.g., (2, seq_len)

# print(f"Label batch shape: {y_batch.shape}") # e.g., (2,)

-

Concepts Illustrated:

- Text cleaning and tokenization.

- Vocabulary building and OOV handling.

- Batch collation with dynamic padding using

pad_sequence. - Labels and sequences packed into tensors ready for embedding lookup.

Vision Data Pre-processing – CIFAR-10 Example

- This example uses torchvision to build a reusable pre-processing and data-loading pipeline.

import torch

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

# ----------------------------------------------------------

# 1. Define data transformations

# ----------------------------------------------------------

# These define how images are preprocessed before feeding into the model.

# Training transforms include augmentations for better generalization,

# while validation/test transforms remain deterministic for fair evaluation.

train_transforms = transforms.Compose([

transforms.RandomHorizontalFlip(), # Randomly flip images horizontally (helps learn invariance)

transforms.RandomRotation(10), # Apply small random rotations to simulate varied orientations

transforms.ToTensor(), # Convert PIL image $$\rightarrow$$ Tensor with shape (C, H, W), values in [0,1]

transforms.Normalize(mean=(0.5, 0.5, 0.5), # Normalize RGB channels (center around 0, scale to ~[-1,1])

std=(0.5, 0.5, 0.5))

])

test_transforms = transforms.Compose([

transforms.ToTensor(), # Convert test images to tensor (no random augmentations)

transforms.Normalize(mean=(0.5, 0.5, 0.5),

std=(0.5, 0.5, 0.5))

])

# ----------------------------------------------------------

# 2. Load dataset

# ----------------------------------------------------------

# Automatically downloads and prepares CIFAR-10 dataset if not already present.

# - train=True $$\rightarrow$$ loads training split (50,000 images)

# - train=False $$\rightarrow$$ loads test split (10,000 images)

# - transform=... $$\rightarrow$$ applies preprocessing pipeline on-the-fly

train_data = datasets.CIFAR10(root='data', train=True, download=True, transform=train_transforms)

test_data = datasets.CIFAR10(root='data', train=False, download=True, transform=test_transforms)

# ----------------------------------------------------------

# 3. Prepare DataLoaders

# ----------------------------------------------------------

# DataLoader efficiently handles batching, shuffling, and multiprocessing.

# - batch_size=64 $$\rightarrow$$ each batch contains 64 images

# - shuffle=True $$\rightarrow$$ reshuffles data at each epoch to reduce overfitting

# - num_workers=4 $$\rightarrow$$ uses 4 subprocesses for parallel data loading

train_loader = DataLoader(train_data, batch_size=64, shuffle=True, num_workers=4)

test_loader = DataLoader(test_data, batch_size=64, shuffle=False, num_workers=4)

# ----------------------------------------------------------

# 4. Inspect one batch

# ----------------------------------------------------------

# Retrieve a single mini-batch using an iterator.

# - iter(train_loader) returns an iterator over batches

# - next(...) yields the first batch (images and labels)

images, labels = next(iter(train_loader))

# Print tensor shapes for verification

# CIFAR-10 images: (64 samples, 3 color channels, 32x32 resolution)

print(f"Batch shape: {images.shape}") # Expected $$\rightarrow$$ (64, 3, 32, 32)

print(f"Label shape: {labels.shape}") # Expected $$\rightarrow$$ (64,)

-

Concepts Illustrated:

- Data augmentation (

RandomHorizontalFlip,RandomRotation) only applied to training data. - Normalization keeps values in a range suitable for model convergence.

DataLoaderbatches and parallelizes loading withnum_workers.

- Data augmentation (

FAQs

- Why separate train and test transforms in vision pipelines?

- Training transformations (augmentations) simulate data diversity and improve generalization. Test transforms must be deterministic to ensure consistent evaluation metrics.

- Why is normalization critical before feeding data into a neural network?

- Normalization standardizes feature scales. It keeps gradients balanced during backpropagation and helps models converge faster. Without it, models may learn unstable weight updates.

- Why use

num_workers > 0in DataLoader?- Setting

num_workersallows data loading to happen in parallel across CPU cores. This prevents the GPU from idling while waiting for batches to be loaded and preprocessed.

- Setting

- What is the purpose of a custom

collate_fnin NLP DataLoaders?- Sequences vary in length.

collate_fnpads sequences to a fixed length per batch so that they can be stacked into a tensor, while also optionally creating masks to ignore padding during loss computation.

- Sequences vary in length.

- How can you extend this pipeline for multilingual text?

- Integrate tokenizers like SentencePiece or HuggingFace’s AutoTokenizer, trained on multilingual corpora. SentencePiece handles text as raw byte sequences, making it more language-agnostic and effective for languages without clear word boundaries. AutoTokenizer is useful because it automatically loads the correct pretrained tokenizer configuration (e.g., BPE, WordPiece, or SentencePiece) for multilingual models, simplifying setup and ensuring compatibility.

- Why not pre-pad all sequences globally before training?

- Global padding wastes computation and memory for short sentences. Dynamic per-batch padding (via

collate_fn) ensures efficiency while preserving batch-level alignment.

- Global padding wastes computation and memory for short sentences. Dynamic per-batch padding (via

- What happens if we forget to call

.set_default_index()for<unk>tokens?- Any out-of-vocabulary token will raise an error instead of mapping to

<unk>. This breaks robustness when unseen words appear during validation or inference.

- Any out-of-vocabulary token will raise an error instead of mapping to

- Why use

ToTensor()afterPILtransforms?ToTensor()converts the image from \([H, W, C]\) format with pixel values in \([0, 255]\) to \([C, H, W]\) format with normalized floats in \([0, 1]\). PyTorch models expect this tensor format.

- What are alternatives to torchvision for custom vision datasets?

PilloworOpenCVfor manual image preprocessing.albumentationsfor advanced augmentations (blur, affine transformations, contrast shifts).- Custom PyTorch transforms for domain-specific needs (e.g., medical images, satellite imagery).

- How can you visualize pre-processed batches for debugging?

- Use

matplotlibto plot a few tensor samples:

import matplotlib.pyplot as plt import torchvision images, _ = next(iter(train_loader)) grid = torchvision.utils.make_grid(images[:8], nrow=4) plt.imshow(grid.permute(1, 2, 0)) plt.show()- Visual inspection is crucial to confirm augmentations and normalization behave as intended.

- Use

Model Training/Fine-tuning and Evaluation Workflow

- This section walks through training loop design, fine-tuning using LoRA, evaluation strategies, and monitoring techniques in PyTorch, with code and conceptual explanations.

Overview

-

After pre-processing, the next phase in an ML pipeline is model training and evaluation.

-

The goals here are:

- Define model architectures suitable for the task.

- Build a robust and efficient training loop.

- Evaluate performance and prevent overfitting.

- Save and restore model checkpoints.

-

A well-structured training and evaluation workflow ensures reproducibility, scalability, and easier debugging.

Core Components of a Training Workflow

-

A minimal PyTorch training pipeline has these components:

- Model Definition – subclass

nn.Module. - Loss Function – e.g., CrossEntropyLoss for classification.

- Optimizer – e.g., Adam or SGD.

- Training Loop – iterate over batches, compute loss, and update weights.

- Validation Loop – measure generalization performance.

- Checkpointing – save the best model.

- Early Stopping – stop training when validation stops improving.

- Model Definition – subclass

Example: NLP Model Training Workflow

- Here’s a lightweight text classification model using embeddings and an RNN.

import torch

import torch.nn as nn

import torch.optim as optim

# ----------------------------------------------------------

# 1. Define RNN-based text classification model

# ----------------------------------------------------------

class RNNClassifier(nn.Module):

def __init__(self, vocab_size, embed_dim, hidden_dim, num_classes, pad_idx):

super().__init__()

# Embedding layer converts token IDs into dense vector representations

self.embedding = nn.Embedding(vocab_size, embed_dim, padding_idx=pad_idx)

# GRU captures temporal dependencies in token sequences

self.rnn = nn.GRU(embed_dim, hidden_dim, batch_first=True)

# Fully connected output layer for classification logits

self.fc = nn.Linear(hidden_dim, num_classes)

def forward(self, x):

# x shape: (batch_size, seq_len)

embedded = self.embedding(x) # $$\rightarrow$$ (batch_size, seq_len, embed_dim)

_, h = self.rnn(embedded) # h: (1, batch_size, hidden_dim)

return self.fc(h.squeeze(0)) # $$\rightarrow$$ (batch_size, num_classes)

# ----------------------------------------------------------

# 2. Instantiate model, loss, and optimizer

# ----------------------------------------------------------

vocab_size = len(vocab) # Number of tokens in vocabulary

model = RNNClassifier(

vocab_size=vocab_size,

embed_dim=64,

hidden_dim=128,

num_classes=2,

pad_idx=vocab["<pad>"]

)

criterion = nn.CrossEntropyLoss() # Cross-entropy for classification

optimizer = optim.Adam(model.parameters(), lr=1e-3) # Adaptive optimizer

# ----------------------------------------------------------

# 3. Define Evaluation Function (Validation Loop)

# ----------------------------------------------------------

def evaluate_nlp_model(model, loader, criterion):

"""

Evaluates model performance on a validation or test DataLoader.

Returns average loss and accuracy.

"""

model.eval() # Disable dropout, batchnorm updates

total_loss, correct, total = 0.0, 0, 0

with torch.no_grad(): # Disable gradient computation for faster inference

for x_batch, y_batch in loader:

outputs = model(x_batch)

loss = criterion(outputs, y_batch)

total_loss += loss.item()

# Get predicted labels (index of max logit)

preds = outputs.argmax(dim=1)

correct += (preds == y_batch).sum().item()

total += y_batch.size(0)

avg_loss = total_loss / len(loader)

accuracy = correct / total

return avg_loss, accuracy

# ----------------------------------------------------------

# 4. Define Training Loop

# ----------------------------------------------------------

def train_nlp_model(model, train_loader, val_loader, criterion, optimizer, epochs=5):

"""

Trains the NLP model and evaluates on validation data per epoch.

"""

best_val_loss = float('inf')

for epoch in range(epochs):

model.train() # Enable training mode

total_loss = 0.0

# Loop over mini-batches

for x_batch, y_batch in train_loader:

optimizer.zero_grad()

outputs = model(x_batch)

loss = criterion(outputs, y_batch)

loss.backward()

optimizer.step()

total_loss += loss.item()

# Compute average training loss

avg_train_loss = total_loss / len(train_loader)

# Evaluate on validation data after each epoch

val_loss, val_acc = evaluate_nlp_model(model, val_loader, criterion)

print(f"Epoch {epoch+1}: train_loss={avg_train_loss:.3f}, "

f"val_loss={val_loss:.3f}, val_acc={val_acc:.3f}")

# Save model if validation loss improves

if val_loss < best_val_loss:

best_val_loss = val_loss

torch.save(model.state_dict(), "best_rnn_model.pt")

print("✅ Saved best model checkpoint.")

-

Concepts Illustrated:

- RNN-based sequence modeling.

- Use of

padding_idxin embeddings ensures loss masking for padding tokens, i.e., padding tokens don’t affect gradients. - Batch-first data flow for better readability.

Example: Vision Classification Training Loop

import torch

import torch.nn as nn

import torch.optim as optim

# ----------------------------------------------------------

# 1. Define a simple CNN model for CIFAR-10 classification

# ----------------------------------------------------------

class CNN(nn.Module):

def __init__(self):

super().__init__()

# Sequential container for defining the full network in order

self.net = nn.Sequential(

# First convolutional block:

# - Input: 3 channels (RGB images)

# - Output: 32 feature maps

# - Kernel: 3x3, stride=1, padding=1 (to preserve spatial size)