Reinforcement Learning

- Overview

- Basics of Reinforcement Learning

- Offline vs. Online Reinforcement Learning

- Types of Reinforcement Learning

- Value-Based Methods

- Policy-Based Methods

- Actor–Critic Methods

- Model-Based Reinforcement Learning

- Model-Free Reinforcement Learning

- On-Policy vs. Off-Policy Reinforcement Learning

- Deep Reinforcement Learning

- Deep Value-Based Methods

- Deep Policy-Based Methods

- Deep Actor–Critic Methods

- Deep Model-Based Methods

- Hybrid and Meta Deep Reinforcement Learning Methods

- Practical Considerations

- Tools and Frameworks for Deep Reinforcement Learning

- Policy Optimization for LLMs

- Model Roles

- Refresher: Notation Mapping between Classical RL and Language Modeling

- Policy Model

- Reference Model

- Reward Model

- Value Model

- Optimizing the Policy

- Integration of Policy, Reference, Reward, and Value Models in RLHF

- Model Roles

- Policy Evaluation

- Challenges of Reinforcement Learning

- Exploration vs. Exploitation Dilemma

- Sample Inefficiency

- Sparse and Delayed Rewards

- High-Dimensional State and Action Spaces

- Long-Term Dependencies and Credit Assignment

- Stability and Convergence

- Safety and Ethical Concerns

- Generalization and Transfer Learning

- Computational Resources and Scalability

- Reward Function Design

- FAQs

- References

- Citation

Overview

-

Reinforcement Learning (RL) is a type of machine learning where an agent learns to make sequential decisions by interacting with an environment. The goal of the agent is to maximize cumulative rewards over time by learning which actions yield the best outcomes in different states of the environment. Unlike supervised learning, where models are trained on labeled data, RL focuses on exploration and exploitation: the agent must explore various actions to discover high-reward strategies while exploiting what it has learned to achieve long-term success.

-

In RL, the agent, environment, actions, states, and rewards are fundamental components. At each step, the agent observes the state of the environment, chooses an action based on its policy (its strategy for selecting actions), and receives a reward that guides future decision-making. The agent’s objective is to learn a policy that maximizes the expected cumulative reward, typically by using techniques such as dynamic programming, Monte Carlo methods, or temporal-difference learning.

-

Deep RL extends traditional RL by leveraging deep neural networks to handle complex environments with high-dimensional state spaces. This allows agents to learn directly from raw, unstructured data, such as pixels in video games or sensor readings in robotic control. Deep RL algorithms, such as Deep Q-Networks (DQN) and policy gradient methods (e.g., Proximal Policy Optimization (PPO) and Group Relative Policy Optimization (GRPO)), have achieved breakthroughs in domains such as superhuman video game play, robotics, and autonomous driving.

-

This primer provides an introduction to the foundational concepts of RL, explores key algorithms, and outlines how deep learning techniques enhance the power of RL to tackle real-world, high-dimensional problems.

Basics of Reinforcement Learning

-

RL is a type of machine learning where an agent learns to make decisions by interacting with an environment. Unlike supervised learning, where a model learns from a fixed dataset of labeled examples, RL focuses on learning from the consequences of actions rather than from predefined correct behavior. The interaction between the agent and the environment is guided by the concepts of states, actions, rewards, and policies, which form the foundation of RL. The agent seeks to maximize cumulative rewards by exploring different actions and learning which ones yield the best outcomes over time.

-

Deep RL extends this framework by incorporating neural networks to handle high-dimensional, complex problems that traditional RL methods struggle with. By using deep learning techniques, Deep RL can tackle challenges like visual input or other high-dimensional data, allowing it to solve problems that are intractable for classical RL approaches. This combination of RL and neural networks enables agents to perform well in more complex environments with minimal manual intervention.

Key Components of Reinforcement Learning

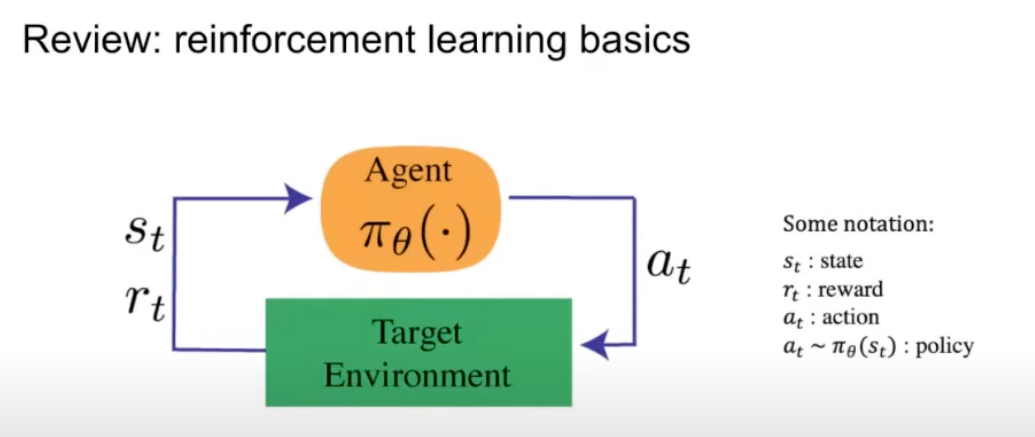

- At the core of RL is the interaction between an agent and an environment, as shown in the diagram below (source):

-

In this interaction, the agent takes actions in the environment and receives feedback in the form of states and rewards. The goal is for the agent to learn a strategy, or policy, that maximizes the cumulative reward over time.

-

Here are the critical components of RL:

-

Agent/Learner: The agent is the learner or decision-maker. It is responsible for selecting actions based on the current state of the environment.

-

Environment: Everything the agent interacts with. The environment defines the rules of the game, transitioning from one state to another based on the agent’s actions.

-

State (\(s\)): A representation of the environment at a particular point in time. States encapsulate all the information that the agent needs to know to make a decision. For example, in a video game, a state might be the current configuration of the game board.

-

Action (\(a\)): A decision taken by the agent in response to the current state. In each state, the agent must choose an action from a set of possible actions, which will affect the future state of the environment.

-

Reward (\(r\)): A scalar value that the agent receives from the environment after taking an action. The reward provides feedback on how good or bad an action was in that particular state. The agent’s objective is to maximize the cumulative reward over time, often referred to as the return.

-

Policy (\(\pi\)): A policy is the strategy the agent uses to determine the actions to take based on the current state. It is implemented as a mapping from states to action probabilities. It can be tabular, i.e., a simple lookup table mapping states to actions, or it can be more complex, such as a neural network in the case of deep RL. The policy can be deterministic (always taking the same action for a given state) or stochastic (taking different actions with some probability).

-

Value Function: The value function estimates how good (i.e., how much total expected reward) it is to be in a particular state (in which case, it is called the state-value function) or to take a specific action in that state (in which case, it is called the action-value function). It does so by accounting for both the immediate reward and the expected future rewards from subsequent states, helping the agent understand long-term reward potential rather than focusing only on immediate rewards. While value functions are of two types (i.e., state-value function and action-value function), the state-value function is also commonly called the value function. The relationship between the two is given by: \(V^{\pi}(s) = \sum_a \pi(a \mid s) Q^{\pi}(s, a)\), which means the value of a state under policy \(\pi\) is the expected action-value, averaged over all possible actions the policy might take in that state.

-

State-Value Function (V-function): Denoted as \(V^{\pi}(s)\), the state-value function measures the expected return (total discounted reward) starting from state \(s\) and then following policy \(\pi\) thereafter. It is formally defined as \(V^{\pi}(s) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^t r_t \mid s_0 = s\right]\), where \(\pi\) is the policy, \(\gamma \in [0,1)\) is the discount factor weighting future rewards, \(r_t\) is the reward received at time step \(t\), and the expectation \(\mathbb{E}_{\pi}[\cdot]\) is taken over trajectories generated by following policy \(\pi\).

-

Action-Value Function (Q-function): Denoted as \(Q(s, a)\) (where \(Q\) stands for “quality”), the action-value function measures the expected return for taking action \(a\) in state \(s\) and then following the policy \(\pi\) thereafter. It plays a central role in algorithms like Q-learning and Deep Q-Networks (DQN). It is formally defined as \(Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^t r_t \mid s_0 = s, a_0 = a\right]\), where the terms have the same meanings as above, except that the expectation begins from both a given state \(s\) and an initial action \(a\).

-

Advantage Function (\(A\)): The advantage function quantifies how much better taking a specific action \(a\) in state \(s\) is compared to the average action according to the policy. Put simply, it measures how much better the return from taking that action is than what was expected from the state before acting. It is commonly used in policy gradient methods such as Actor-Critic and Proximal Policy Optimization (PPO) to reduce variance in gradient estimates. It is defined as \(\hat{A}(s, a) = Q(s, a) - V(s)\), where \(Q(s, a)\) is the action-value function (the expected return for taking action \(a\) in state \(s\) and then following the policy), and \(V(s)\) is the state-value function (the expected return from state \(s\) when following the policy).

-

Return (\(G\)): The total accumulated reward from a given time step onward, often discounted to prioritize near-term rewards: \(G_t = \sum_{k=0}^{\infty} \gamma^k r_{t+k+1}\), where \(G\) stands for “gain” and \(\gamma\) is the discount factor that determines how much future rewards are valued relative to immediate rewards.

-

Discount Factor (\(\gamma\)): A scalar between 0 and 1 that controls the importance of future rewards. Smaller values make the agent myopic (focusing on immediate rewards), while larger values encourage long-term planning. It is typically set close to 1 so that long-term rewards are not ignored.

-

Exploration vs. Exploitation: The trade-off between exploring new actions to discover potentially better rewards and exploiting known actions that already yield high rewards. Balancing these two is crucial for effective learning.

-

Trajectory/Episode/Rollout: A sequence of states, actions, rewards, and next states from the beginning of an episode to its termination, representing one complete interaction of the agent with the environment.

-

Temporal-Difference (TD) Error: The difference between the bootstrapped target (the observed reward plus the discounted estimated value of the next state) and the current predicted value of the state. It is used to update value estimates incrementally in methods like TD-learning, where the TD error is given by \(\delta_t = r_t + \gamma V(s_{t+1}) - V(s_t)\), with \(r_t\) as the immediate reward, \(\gamma\) the discount factor, and \(V(s_t)\) and \(V(s_{t+1})\) being the predicted values of the current and next states respectively.

-

Replay Buffer (Experience Replay): In Deep RL, a replay buffer stores past transitions (state, action, reward, next state) for sampling during training. This helps break correlation between consecutive samples—since experiences are drawn randomly rather than sequentially—allowing the agent to learn from a more diverse and independent set of experiences, which improves data efficiency and stabilizes training.

-

Actor-Critic Architecture: A hybrid approach combining a policy-based (actor) component that selects actions and a value-based (critic) component that evaluates them. The critic’s feedback stabilizes the actor’s learning.
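Several of the quantities defined in the list above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the policy probabilities, action values, reward, and value estimates are made-up numbers, not taken from any particular environment.

```python
import numpy as np

gamma = 0.9  # discount factor (illustrative value)

# Hypothetical policy and action values for a single state with three actions.
pi = np.array([0.5, 0.3, 0.2])  # pi(a | s), must sum to 1
q = np.array([1.0, 2.0, 4.0])   # Q^pi(s, a)

v = float(pi @ q)               # V^pi(s) = sum_a pi(a|s) * Q^pi(s, a)
advantage = q - v               # A(s, a) = Q(s, a) - V(s)

# One-step TD error for a transition (s, r, s') with made-up value estimates.
r, v_s, v_next = 1.0, 2.0, 3.0
td_error = r + gamma * v_next - v_s   # delta = r + gamma * V(s') - V(s)
```

Because \(V^{\pi}(s)\) is the policy-weighted average of \(Q^{\pi}(s, a)\), the advantages weighted by the policy probabilities sum to zero: actions with positive advantage are better than the policy's average behavior in that state, and actions with negative advantage are worse.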

The Bellman Equation

-

The Bellman Equation is a fundamental concept in RL, used to describe the relationship between the value of a state and the value of its successor states. It breaks down the value function into immediate rewards and the expected value of future states.

-

For a given policy \(\pi\), the state-value function \(V^{\pi}(s)\) can be written as:

\[V^{\pi}(s) = \mathbb{E}_\pi \left[ r_t + \gamma V^{\pi}(s_{t+1}) \mid s_t = s \right]\]

- where:

- \(V^{\pi}(s)\) is the value of state \(s\) under policy \(\pi\),

- \(r_t\) is the reward received after taking an action at time \(t\),

- \(\gamma\) is the discount factor (0 ≤ \(\gamma\) ≤ 1) that determines the importance of future rewards,

- \(s_{t+1}\) is the next state after taking an action from state \(s\).

-

This equation expresses that the value of a state \(s\) is the immediate reward \(r_t\) plus the discounted value of the next state \(V^{\pi}(s_{t+1})\). The Bellman equation is central to many RL algorithms, as it provides the basis for recursively solving the optimal value function.
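To make the recursion concrete, the following sketch applies the Bellman equation repeatedly as an update rule (iterative policy evaluation) and compares the result with the closed-form fixed point. The two-state transition matrix `P_pi` and reward vector `r_pi` are hypothetical and stand for the dynamics already averaged over a fixed policy \(\pi\).

```python
import numpy as np

gamma = 0.9
# Hypothetical 2-state chain induced by a fixed policy pi.
P_pi = np.array([[0.8, 0.2],
                 [0.1, 0.9]])   # P_pi[s, s'] = probability of s -> s' under pi
r_pi = np.array([1.0, 0.0])     # expected immediate reward in each state under pi

V = np.zeros(2)
for _ in range(1000):
    V = r_pi + gamma * P_pi @ V   # Bellman backup: V(s) = E[r + gamma * V(s')]

# The same fixed point solved directly: V = (I - gamma * P_pi)^{-1} r_pi
V_exact = np.linalg.solve(np.eye(2) - gamma * P_pi, r_pi)
```

The iterates converge to `V_exact` because, for \(\gamma < 1\), each backup shrinks the distance to the fixed point by a factor of at most \(\gamma\).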

The RL Process: Trial and Error Learning

- The agent interacts with the environment in a loop:

- At each time step, the agent observes the current state of the environment.

- Based on this state, it selects an action according to its policy.

- The environment transitions to a new state, and the agent receives a reward.

- The agent uses this feedback to update its policy, gradually improving its decision-making over time.

- This process of learning from trial and error allows the agent to explore different actions and outcomes, eventually finding the optimal policy that maximizes the long-term reward.
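The loop described above can be sketched in a few lines. Everything here is an assumption made for illustration: `TwoArmedBandit` is a hypothetical one-state environment, and `AveragingAgent` is a deliberately simple learner that keeps a running mean reward per action and acts epsilon-greedily.

```python
import random

class TwoArmedBandit:
    """Hypothetical one-state environment: action 1 pays more on average."""
    def reset(self):
        return 0  # the single state
    def step(self, action):
        reward = random.gauss(1.0 if action == 1 else 0.0, 1.0)
        return 0, reward  # (next_state, reward)

class AveragingAgent:
    """Keeps a running mean reward per action; acts epsilon-greedily."""
    def __init__(self, n_actions, epsilon=0.1):
        self.values = [0.0] * n_actions
        self.counts = [0] * n_actions
        self.epsilon = epsilon
    def act(self, state):
        if random.random() < self.epsilon:
            return random.randrange(len(self.values))   # explore
        return max(range(len(self.values)), key=lambda a: self.values[a])  # exploit
    def update(self, state, action, reward, next_state):
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]

random.seed(0)
env, agent = TwoArmedBandit(), AveragingAgent(n_actions=2)
state = env.reset()
for _ in range(2000):
    action = agent.act(state)                        # choose action from policy
    next_state, reward = env.step(action)            # environment responds
    agent.update(state, action, reward, next_state)  # learn from feedback
    state = next_state
```

After enough steps the agent's estimate for action 1 should exceed its estimate for action 0, so the greedy choice settles on the better arm while occasional exploration continues.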

Mathematical Formulation: Markov Decision Process (MDP)

- RL problems are typically framed as Markov Decision Processes (MDP), which provide a mathematical framework for modeling decision-making where outcomes are partly random and partly under the control of the agent. An MDP is defined by:

- States (\(S\)): The set of all possible states in the environment.

- Actions (\(A\)): The set of all possible actions the agent can take.

- Transition function (\(P\)): The probability distribution \(P(s_{t+1} \mid s_t, a_t)\) describing transitions from one state to another given an action.

- Reward function (\(R\)): The immediate reward \(R(s_t, a_t, s_{t+1})\) received after transitioning between states.

- Discount factor (\(\gamma\)): A scalar \(\gamma \in [0,1]\) controlling the importance of future rewards; values close to \(0\) emphasize immediate rewards, while values close to \(1\) emphasize long-term rewards.

-

The agent’s goal is to learn a policy \(\pi(s)\) that maximizes the expected cumulative reward (i.e., expected return), often expressed as:

\[G_t = \sum_{k=0}^{\infty} \gamma^k r_{t+k+1}\]

- where:

- \(G_t\) is the total return starting from time step \(t\),

- \(\gamma\) is the discount factor,

- \(r_{t+k+1}\) is the reward received at time \(t+k+1\).
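For a finite list of rewards, the return can be computed with a single backward pass using the recursion \(G_t = r_{t+1} + \gamma G_{t+1}\). The helper name `discounted_return` is a hypothetical name chosen for this sketch.

```python
def discounted_return(rewards, gamma):
    """G_t for t = 0, given rewards [r_1, r_2, ...] observed after time 0."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g   # G_t = r_{t+1} + gamma * G_{t+1}
    return g

# Three rewards of 1 with gamma = 0.5: 1 + 0.5 + 0.25 = 1.75
example = discounted_return([1.0, 1.0, 1.0], gamma=0.5)
```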

Offline vs. Online Reinforcement Learning

Offline Reinforcement Learning

-

Definition: Offline RL, also known as batch RL, refers to an RL paradigm where the agent learns solely from a pre-collected dataset of experiences without any interaction with the environment during training.

- Key Characteristics:

- Static Dataset: The dataset typically consists of tuples (state, action, reward, next state) that are collected by a specific policy, which could be suboptimal or from a combination of multiple policies.

- No Real-Time Interaction: Unlike online RL, the agent does not have the ability to gather new data or explore unknown parts of the state space.

- Policy Evaluation and Improvement: The primary goal is to learn a policy that generalizes well to the environment when deployed, leveraging the provided static data.

- Advantages:

- Safety and Cost-Effectiveness: Offline RL avoids the risks and costs of real-world interaction during training, making it particularly valuable in safety-critical applications like healthcare or autonomous vehicles.

- Utilization of Historical Data: It allows researchers to leverage existing datasets, such as logs from previously deployed systems, for policy improvement without further data collection efforts.

- Challenges:

- Distributional Shift: The learned policy may take actions that lead to parts of the state space not covered in the dataset, resulting in poor performance (extrapolation error).

- Dependence on Dataset Quality: The effectiveness of the learning process is highly sensitive to the diversity and representativeness of the dataset.

- Overfitting: The agent might overfit to the static dataset, leading to poor generalization in unseen scenarios.

- Techniques to Address Challenges:

- Conservative Algorithms: Methods like Conservative Q-Learning (CQL) penalize the estimated values of out-of-distribution actions so that the agent does not overestimate them.

- Uncertainty Estimation: Leveraging uncertainty-aware models to avoid relying on poorly represented regions of the dataset.

- Offline-Optimized Models: Algorithms such as Batch Constrained Q-Learning (BCQ) and Behavior Regularized Actor-Critic (BRAC) are designed specifically for offline settings.

- Use Cases:

- Healthcare: Training models on patient treatment records to recommend actions without real-time experimentation.

- Autonomous Driving: Leveraging driving logs to improve decision-making policies without the risks of on-road testing.

- Robotics: Using pre-recorded demonstration data to teach robots tasks without additional data collection.

Online Reinforcement Learning

-

Definition: Online RL involves continuous interaction between the agent and the environment during training. The agent collects data through trial and error, allowing it to refine its policy iteratively in real time.

- Key Characteristics:

- Active Data Collection: The agent explores the environment to gather new experiences, enabling adaptation to dynamic or previously unseen states.

- Feedback Loop: There is a direct link between the agent’s actions, the environment’s responses, and policy improvement.

- Exploration-Exploitation Tradeoff: Balancing the exploration of new actions and the exploitation of learned strategies is a critical aspect of online RL.

- Advantages:

- Dynamic Adaptation: The agent can dynamically adapt to changes in the environment, ensuring robust performance.

- Optimal Exploration: By actively engaging with the environment, the agent can learn optimal strategies even in highly complex state spaces.

- Challenges:

- Exploration Risks: Excessive exploration can lead to suboptimal or dangerous actions, particularly in high-stakes applications.

- Resource-Intensive: Online RL requires significant computational and environmental resources due to real-time interaction.

- Stability and Convergence: Ensuring stable learning and avoiding divergence are ongoing research challenges.

- Techniques to Address Challenges:

- Exploration Strategies: Methods like epsilon-greedy, softmax exploration, or intrinsic motivation frameworks guide effective exploration.

- Stability Enhancements: Algorithms like Proximal Policy Optimization (PPO) and Trust Region Policy Optimization (TRPO) improve convergence stability.

- Efficient Learning: Techniques like prioritized experience replay and model-based RL improve data efficiency.

- Use Cases:

- Robotics: Training robots in simulated environments with the ability to transfer learned policies to the real world.

- Games: Developing game-playing agents, such as AlphaGo (Go) or OpenAI Five (Dota 2), through millions of simulated games.

- Dynamic Systems: Adapting to real-world systems with changing conditions, such as stock trading or energy management.
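The epsilon-greedy and softmax exploration strategies listed under the techniques above can be sketched as follows. The function names and the example action values are assumptions for illustration; real implementations usually also decay `epsilon` or the temperature over time.

```python
import numpy as np

def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a uniformly random action, else the greedy one."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))
    return int(np.argmax(q_values))

def softmax_probs(q_values, temperature=1.0):
    """Boltzmann distribution over actions; higher temperature means more uniform."""
    z = np.asarray(q_values, dtype=float) / temperature
    z -= z.max()                       # subtract max for numerical stability
    p = np.exp(z)
    return p / p.sum()

rng = np.random.default_rng(0)
q = [0.1, 0.5, 0.2]                    # hypothetical action-value estimates
action = epsilon_greedy(q, epsilon=0.1, rng=rng)
probs = softmax_probs(q, temperature=0.5)
sampled = int(rng.choice(len(q), p=probs))
```

Epsilon-greedy explores uniformly regardless of the value estimates, whereas softmax exploration favors actions whose estimated values are close to the best one.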

Comparative Analysis

| Aspect | Offline RL | Online RL |
|---|---|---|
| Data Source | Fixed, pre-collected dataset | Real-time interaction |
| Exploration | Not possible; constrained by dataset | Required |
| Learning | Static learning from a fixed dataset | Dynamic and iterative |
| Environment Access | No interaction during training | Continuous interaction |
| Main Challenges | Distributional shift, dataset quality | Exploration-exploitation balance, stability |
| Efficiency | Efficient with quality datasets | Resource-intensive |
| Use Cases | Healthcare, autonomous driving, robotics | Games, robotics, dynamic systems |

Hybrid Approaches

- Hybrid RL approaches combine the strengths of both paradigms. A typical strategy involves:

- Offline Pretraining: Using offline RL to initialize the agent’s policy with a high-quality dataset.

- Online Fine-Tuning: Allowing the agent to interact with the environment to refine its policy and improve performance further.

- Advantages:

- Combines safety and efficiency of offline training with the adaptability of online learning.

- Accelerates convergence by leveraging prior knowledge from pretraining.

- Examples:

- Autonomous Driving: Pretraining on driving logs followed by fine-tuning in simulation or controlled environments.

- Healthcare: Learning from historical patient data and adapting through real-time interactions in clinical trials.

Types of Reinforcement Learning

-

RL encompasses a family of methods that differ in how they represent knowledge about the environment, update that knowledge, and derive decision policies. At its essence, RL aims to learn an optimal policy \(\pi^*(a \mid s)\) that maximizes the expected cumulative reward:

\[J(\pi) = \mathbb{E}_\pi \left[ \sum_{t=0}^{\infty} \gamma^t r_t \right]\]

- where \(\gamma \in [0,1]\) is the discount factor weighting future rewards, and \(r_t\) is the reward at time \(t\).

- Classical RL refers to the family of foundational RL algorithms that learn from interaction or modeled experience using explicit value functions, policies, and environment models—without relying on deep neural networks for function approximation.

- While classical RL methods provide the theoretical foundation for sequential decision-making and control, modern deep RL extends these principles by leveraging neural networks to approximate value functions and policies in complex, high-dimensional environments. A detailed discourse on deep RL is available in the Deep Reinforcement Learning section.

-

The following are the principal categories of classical RL techniques, each of which will be explored in detail in subsequent subsections. While these categories are often presented separately, they are not entirely independent—many RL algorithms combine ideas across them. For example, actor–critic methods merge policy-based and value-based principles, and both model-based and model-free approaches can be implemented using either value-based or policy-based learning. In other words, model-based/model-free defines how an agent learns from or about the environment, while value-based/policy-based defines what the agent learns to optimize its behavior.

-

Value-Based Methods: Value-based methods estimate the value of states or state–action pairs and derive an optimal policy by choosing actions that maximize these values. A foundational example is Q-learning by Watkins & Dayan (1992).

-

Policy-Based Methods: Policy-based methods directly optimize the agent’s policy \(\pi(a \mid s)\) using gradient-based techniques without explicitly estimating value functions. A seminal contribution in this area is the REINFORCE algorithm by Williams (1992).

-

Actor–Critic Methods: Actor–Critic methods combine value-based and policy-based principles by maintaining two components: an actor that proposes actions and a critic that evaluates them. This structure was formalized by Barto, Sutton & Anderson (1983).

-

Model-Based Methods: Model-based RL algorithms explicitly learn or use a model of the environment’s dynamics \(P(s' \mid s,a)\) and reward function \(R(s,a)\) to enable planning and decision-making. The approach builds on the dynamic programming methods of value iteration (Bellman, 1957) and policy iteration (Howard, 1960).

-

Model-Free Methods: Model-free methods dispense with explicit environment modeling and instead learn directly from interaction data, adjusting their estimates of value or policy from experience tuples \((s,a,r,s')\). A canonical example is SARSA by Rummery & Niranjan (1994).

-

On-Policy vs. Off-Policy Learning: This distinction describes whether an agent learns from data generated by its own policy or another policy. On-policy methods (e.g., SARSA) update based on their current behavior, while off-policy methods (e.g., Q-learning) learn from experiences generated by a different policy (Precup, Sutton & Singh, 2000).
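The on-policy versus off-policy distinction shows up directly in the bootstrapping target used by SARSA and Q-learning. The sketch below uses a hypothetical two-state, two-action table `Q` and ignores terminal-state handling for brevity.

```python
import numpy as np

def sarsa_target(Q, r, s_next, a_next, gamma):
    """On-policy: bootstrap from the action the behavior policy actually took next."""
    return r + gamma * Q[s_next, a_next]

def q_learning_target(Q, r, s_next, gamma):
    """Off-policy: bootstrap from the greedy action, whatever was actually taken."""
    return r + gamma * np.max(Q[s_next])

Q = np.array([[0.0, 0.0],
              [1.0, 3.0]])   # hypothetical table, Q[state, action]
r, s_next, a_next, gamma = 1.0, 1, 0, 0.9   # the agent explored and took action 0 next

t_sarsa = sarsa_target(Q, r, s_next, a_next, gamma)   # 1 + 0.9 * 1.0
t_qlearn = q_learning_target(Q, r, s_next, gamma)     # 1 + 0.9 * 3.0
```

When the next action is exploratory, the SARSA target reflects the value of the behavior actually followed, while the Q-learning target reflects the value of the greedy policy being learned.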

Value-Based Methods

- Value-based methods form the cornerstone of RL. Their core principle is to learn value functions that estimate how good it is for an agent to be in a given state or to perform a specific action in that state.

- These methods do not learn policies directly; instead, they infer the optimal policy from the learned values by choosing actions that maximize expected future rewards.

Foundations of Value Functions

- Two central value functions define this class of methods:

- State-Value Function:

\[V^{\pi}(s) = \mathbb{E}_{\pi} \left[ \sum_{t=0}^{\infty} \gamma^t r_t \mid S_0 = s \right]\]

- This represents the expected cumulative reward when starting from state \(s\) and following policy \(\pi\) thereafter.

- Action-Value Function:

\[Q^{\pi}(s,a) = \mathbb{E}_{\pi} \left[ \sum_{t=0}^{\infty} \gamma^t r_t \mid S_0 = s, A_0 = a \right]\]

- This quantifies the expected return when taking action \(a\) in state \(s\) and then following policy \(\pi\).

- The optimal policy \(\pi^*\) can then be derived as:

\[\pi^*(s) = \arg\max_a Q^*(s,a)\]

- where \(Q^*(s,a)\) is the optimal action-value function.

Dynamic Programming (DP)

-

Dynamic Programming represents the earliest and most theoretically grounded approach to solving RL problems. It assumes that a complete model of the environment is known—specifically, the transition probabilities \(P(s' \mid s,a)\) and reward function \(R(s,a)\).

-

Introduced by Bellman (1957), DP methods are built upon the Bellman Optimality Equation, which recursively expresses the relationship between the value of a state and the values of its successor states:

\[V^*(s) = \max_a \left[ R(s,a) + \gamma \sum_{s'} P(s' \mid s,a) V^*(s') \right]\]

-

Two major DP algorithms are:

- Value Iteration: Repeatedly applies the Bellman optimality backup to the value function, folding policy evaluation and improvement into a single update, until convergence to \(V^*(s)\).

- Policy Iteration: Alternates between policy evaluation (estimating \(V^{\pi}\)) and policy improvement (updating \(\pi\)) until the policy stabilizes.

-

DP is exact and guaranteed to converge for finite MDPs, but it is computationally infeasible in large state spaces due to the curse of dimensionality.

-

Key Reference:

- Howard (1960): introduced policy iteration as a computationally efficient refinement to Bellman’s DP framework.
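As a minimal sketch of value iteration under the assumption of a fully known model, the code below repeatedly applies the Bellman optimality backup to a hypothetical two-state, two-action MDP. The array layout (`P[a, s, s']`, `R[s, a]`), the function name, and the stopping tolerance are choices made for this example.

```python
import numpy as np

def value_iteration(P, R, gamma, tol=1e-8):
    """P[a, s, s'] = transition probabilities, R[s, a] = expected reward."""
    n_states = R.shape[0]
    V = np.zeros(n_states)
    while True:
        # Q[s, a] = R(s, a) + gamma * sum_s' P(s' | s, a) V(s')
        Q = R + gamma * np.einsum("asn,n->sa", P, V)
        V_new = Q.max(axis=1)               # Bellman optimality backup
        if np.max(np.abs(V_new - V)) < tol:
            return V_new, Q.argmax(axis=1)  # optimal values and a greedy policy
        V = V_new

# Hypothetical 2-state, 2-action MDP: action 0 stays, action 1 switches state.
P = np.array([[[1.0, 0.0], [0.0, 1.0]],    # action 0
              [[0.0, 1.0], [1.0, 0.0]]])   # action 1
R = np.array([[0.0, 0.0],                  # state 0: no reward
              [1.0, 0.0]])                 # state 1: staying pays 1
V_star, policy = value_iteration(P, R, gamma=0.9)
```

In this toy problem the best behavior is to move to state 1 and stay there, so the values approach \(V^*(1) = 1/(1-0.9) = 10\) and \(V^*(0) = 0.9 \times 10 = 9\). Each sweep touches every state and action, which is why the cost grows quickly with the size of the state space.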

Monte Carlo (MC) Methods

-

Monte Carlo methods learn value functions from experience, without requiring a model of the environment. They estimate expected returns by averaging the actual returns observed after complete episodes of experience.

-

For a state \(s\), the Monte Carlo estimate of the value is:

\[V(s) = \frac{1}{N(s)} \sum_{i=1}^{N(s)} G_i\]

where \(G_i\) is the total return following the \(i^{th}\) visit to \(s\), and \(N(s)\) is the number of visits to \(s\).

-

Advantages:

- Model-free: no need for transition probabilities.

- Simple and unbiased estimates after enough samples.

-

Limitations:

- Requires episodes to terminate (not suitable for continuing tasks).

- Slow convergence due to reliance on complete trajectories.

-

Key References:

- Samuel (1959): introduced early machine learning ideas based on Monte Carlo updates in checkers.

- Sutton & Barto (1998): formalized Monte Carlo methods in modern RL.
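The averaging of observed returns described in this section can be sketched as an every-visit Monte Carlo estimator. The episode format (a list of `(state, reward)` pairs, with the reward received on leaving the state) and the three hand-written episodes are assumptions for illustration.

```python
from collections import defaultdict

def mc_every_visit(episodes, gamma):
    """episodes: list of [(state, reward), ...], reward received on leaving state."""
    returns_sum = defaultdict(float)
    visits = defaultdict(int)
    for episode in episodes:
        g = 0.0
        for state, reward in reversed(episode):   # accumulate returns backwards
            g = reward + gamma * g
            returns_sum[state] += g
            visits[state] += 1                    # N(s)
    return {s: returns_sum[s] / visits[s] for s in returns_sum}

episodes = [
    [("A", 0.0), ("B", 1.0)],   # return from A: 1.0, from B: 1.0
    [("A", 0.0), ("B", 3.0)],   # return from A: 3.0, from B: 3.0
    [("B", 2.0)],               # return from B: 2.0
]
V = mc_every_visit(episodes, gamma=1.0)
```

Note that the estimator can only run once each episode has terminated, which is the limitation listed above.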

Temporal Difference (TD) Learning

-

Temporal Difference learning blends the key ideas of Monte Carlo and Dynamic Programming — learning directly from raw experience without requiring a model, and updating value estimates based on bootstrapping from other estimates.

-

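The core update rule for TD(0) is:

\[V(S_t) \leftarrow V(S_t) + \alpha \left[ r_t + \gamma V(S_{t+1}) - V(S_t) \right]\]

- Here, \(\alpha\) is the learning rate, and the agent updates its estimate of \(V(S_t)\) using the observed reward plus the discounted value of the next state, rather than waiting for the episode to finish.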

-

TD learning provides the foundation for most modern value-based algorithms, including SARSA and Q-Learning.

-

Advantages:

- Online, incremental updates.

- Works for both episodic and continuing tasks.

- Converges faster than Monte Carlo in many settings.

-

Key References:

- Sutton (1988): introduced Temporal Difference Learning, establishing the bridge between prediction and control.

- Watkins & Dayan (1992): extended TD ideas to Q-Learning, the most influential off-policy control algorithm.
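A tabular TD(0) prediction step can be sketched as follows. The function name `td0_update`, the dictionary-based value table, and the deterministic two-step episode are assumptions for illustration; the value of the terminal state is taken to be zero.

```python
def td0_update(V, s, r, s_next, alpha, gamma, terminal=False):
    """V(s) <- V(s) + alpha * (r + gamma * V(s') - V(s)); V(terminal) is taken as 0."""
    target = r + (0.0 if terminal else gamma * V[s_next])
    delta = target - V[s]          # TD error
    V[s] += alpha * delta
    return delta

# Hypothetical deterministic episode: state 0 -> state 1 -> terminal.
V = {0: 0.0, 1: 0.0}
for _ in range(500):
    td0_update(V, s=0, r=0.0, s_next=1, alpha=0.1, gamma=0.9)
    td0_update(V, s=1, r=1.0, s_next=None, alpha=0.1, gamma=0.9, terminal=True)
```

Each update uses only a single transition, so learning proceeds during the episode. Here the estimates approach \(V(1) = 1\) and \(V(0) = 0.9\).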

Comparative Analysis

| Method | Model Requirement | Update Type | Sample Efficiency | Key References |
|---|---|---|---|---|
| Dynamic Programming | Requires full model | Full backup | Not applicable (no sampling; high computational cost) | Bellman (1957), Howard (1960) |
| Monte Carlo | Model-free | Episodic, complete return | Low | Samuel (1959), Sutton & Barto (1998) |
| Temporal Difference (TD) | Model-free | Bootstrapped incremental | High | Sutton (1988), Watkins & Dayan (1992) |

Policy-Based Methods

-

While value-based methods focus on estimating the long-term value of states or state–action pairs, policy-based methods take a more direct approach: they learn a parameterized policy that maps states to actions and optimize it to maximize expected return.

-

These methods are particularly useful in environments with continuous or stochastic action spaces, where value-based techniques like Q-learning are difficult to apply effectively.

Policy Representation and Objective

- In policy-based RL, the agent’s behavior is represented by a stochastic policy \(\pi_\theta(a \mid s)\), parameterized by \(\theta\). The goal is to find parameters that maximize the expected return:

\[J(\theta) = \mathbb{E}_{\pi_\theta} \left[ \sum_{t=0}^{\infty} \gamma^t r_t \right]\]

- Unlike value-based methods, which derive a policy indirectly from learned value estimates, policy-based approaches directly optimize this objective by computing its gradient with respect to the parameters \(\theta\).

The Policy Gradient Theorem

- The key insight enabling policy optimization is the Policy Gradient Theorem (Sutton et al., 2000).

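- It provides a way to estimate the gradient of the expected return without differentiating through the environment’s dynamics:

\[\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta} \left[ \nabla_\theta \log \pi_\theta(a \mid s) \, Q^{\pi_\theta}(s,a) \right]\]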

- This formulation allows gradient ascent on \(J(\theta)\) using trajectories sampled from the current policy.

- Intuitively, the update increases the probability of actions that yield higher returns and decreases it for less rewarding ones.

REINFORCE Algorithm

- The REINFORCE algorithm (Williams, 1992) is the simplest and most influential policy gradient method.

- It estimates the gradient using complete episodes of experience, updating the policy parameters as follows:

\[\theta \leftarrow \theta + \alpha \nabla_\theta \log \pi_\theta(a_t \mid s_t) \, G_t\]

where:

- \(\alpha\) is the learning rate,

- \(G_t = \sum_{k=t}^{T} \gamma^{k-t} r_k\) is the return following time \(t\).

-

The algorithm works by reinforcing (increasing the probability of) actions that lead to higher observed returns.
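A minimal sketch of REINFORCE, assuming a softmax policy with one logit per action and a hypothetical single-state problem in which every episode lasts one step (so the return \(G_t\) is just the sampled reward). The reward means, noise level, and step count are made up for the example.

```python
import numpy as np

def softmax(x):
    z = np.exp(x - x.max())
    return z / z.sum()

rng = np.random.default_rng(0)
theta = np.zeros(2)                 # one logit per action (single-state problem)
true_means = np.array([0.0, 1.0])   # hypothetical: action 1 pays more on average
alpha = 0.1                         # learning rate

for _ in range(2000):
    pi = softmax(theta)
    a = rng.choice(2, p=pi)                     # sample an action from pi_theta
    G = true_means[a] + rng.normal(0.0, 0.1)    # one-step episode, so G_t = reward
    grad_log_pi = -pi
    grad_log_pi[a] += 1.0                       # grad of log softmax: onehot(a) - pi
    theta += alpha * grad_log_pi * G            # REINFORCE update
```

For a softmax policy the score function is the one-hot vector of the chosen action minus the current probabilities, so a positive return raises the chosen action's logit and lowers the others.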

Baseline Reduction

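- Because the variance of gradient estimates can be large, REINFORCE often includes a baseline \(b(s_t)\), typically the state value \(V^{\pi}(s_t)\), to reduce variance without introducing bias:

\[\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta} \left[ \nabla_\theta \log \pi_\theta(a_t \mid s_t) \left( G_t - b(s_t) \right) \right]\]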

- This concept laid the foundation for later actor–critic methods, where the critic effectively serves as a learned baseline.
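The effect of a baseline can be checked numerically. The sketch below assumes a hypothetical two-action, single-state problem whose returns share a large common offset, and compares single-sample gradient estimates for one logit with and without subtracting the state value.

```python
import numpy as np

rng = np.random.default_rng(0)
pi = np.array([0.5, 0.5])            # current policy over two actions
means = np.array([10.0, 11.0])       # hypothetical returns with a large common offset
baseline = float(pi @ means)         # V(s) under pi, used as b(s)

n = 20000
actions = rng.choice(2, size=n, p=pi)
returns = means[actions] + rng.normal(0.0, 1.0, size=n)

# d/d(theta_1) of log softmax is 1 - pi_1 if a == 1, else -pi_1
score = np.where(actions == 1, 1.0 - pi[1], -pi[1])

g_plain = score * returns                  # estimate without a baseline
g_base = score * (returns - baseline)      # estimate with the baseline subtracted
```

Both sets of samples estimate the same gradient (here \(\pi_0 \pi_1 (11 - 10) = 0.25\)), but the baseline version has a much smaller variance because the common offset no longer multiplies the score.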

Natural Policy Gradient (NPG)

Standard gradient ascent can be inefficient in policy space due to curvature distortions caused by parameterization. The Natural Policy Gradient method, introduced by Kakade (2001), addresses this by using the Fisher information matrix \(F(\theta)\) to compute updates invariant to the parameter scaling:

\[\theta \leftarrow \theta + \alpha F(\theta)^{-1} \nabla_\theta J(\theta)\]

This ensures that updates are taken in directions that respect the geometry of the policy distribution, leading to faster and more stable convergence.
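As a small worked example, the sketch below computes a natural gradient step for a softmax policy in a single state with made-up expected rewards. One assumption to flag: for softmax logits the Fisher matrix is singular (adding a constant to every logit leaves the policy unchanged), so the sketch substitutes the pseudo-inverse for \(F(\theta)^{-1}\).

```python
import numpy as np

pi = np.array([0.7, 0.2, 0.1])      # softmax policy probabilities in one state
r = np.array([1.0, 2.0, 3.0])       # hypothetical expected reward per action

# For softmax logits, grad_theta log pi(a) = onehot(a) - pi, so:
F = np.diag(pi) - np.outer(pi, pi)  # Fisher matrix E_a[grad log pi  grad log pi^T]
grad = F @ r                        # vanilla gradient of J = sum_a pi(a) r(a)

# F is singular for softmax, so this sketch uses the pseudo-inverse
# in place of F^{-1}; rcond discards the numerically zero singular value.
nat_grad = np.linalg.pinv(F, rcond=1e-10) @ grad

alpha = 0.1
theta = np.log(pi)                  # one set of logits that yields pi
theta_new = theta + alpha * nat_grad
```

In this setting the natural gradient reduces to the rewards centered at their mean, independent of the current probabilities, whereas the vanilla gradient is scaled down for actions the policy currently rarely takes.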

Advantages and Limitations

-

Advantages:

- Naturally handles continuous and stochastic action spaces.

- Enables stochastic exploration without explicit noise.

- Offers smooth policy improvement without discontinuities.

-

Limitations:

- High variance in gradient estimates.

- Often requires large numbers of trajectories for accurate estimation.

- Sensitive to hyperparameters like learning rate and baseline design.

Comparative Analysis

| Method | Core Idea | Handles Continuous Actions | Key Innovation | Key References |

|---|---|---|---|---|

| Policy Gradient (PG) | Optimize policy parameters via expected return gradient | Yes | Policy Gradient Theorem | Sutton et al. (2000) |

| REINFORCE | Use sampled returns to update policy probabilities | Yes | Monte Carlo estimation of policy gradient | Williams (1992) |

| Natural Policy Gradient | Adjust gradient using Fisher information for invariance | Yes | Geometric optimization in policy space | Kakade (2001) |

Actor–Critic Methods

-

Actor–Critic methods bridge the conceptual gap between value-based and policy-based RL. While policy-based methods optimize the policy directly and value-based methods estimate the expected return, actor–critic frameworks do both simultaneously.

-

They maintain two distinct components:

- The Actor, which updates the policy parameters in the direction suggested by the critic’s evaluation.

- The Critic, which estimates value functions and provides a baseline to stabilize and guide policy updates.

-

This architecture allows actor–critic methods to combine the low variance of value-based updates with the expressive flexibility of policy-based optimization.

Conceptual Foundation

- The actor–critic approach builds upon the policy gradient theorem and temporal difference (TD) learning.

-

At time \(t\), the policy \(\pi_\theta(a \mid s)\) selects an action, and the critic evaluates it using a value function \(V_w(s)\) or \(Q_w(s,a)\), parameterized by weights \(w\).

-

The actor updates its policy parameters according to:

\[\theta \leftarrow \theta + \alpha \nabla_\theta \log \pi_\theta(a_t \mid s_t) \, \delta_t\]

- where \(\delta_t\) is the TD error, defined as:

\[\delta_t = r_t + \gamma V_w(s_{t+1}) - V_w(s_t)\]

- This TD error acts as a critic signal, indicating whether the action taken was better or worse than expected.
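A one-step actor–critic update can be sketched as follows, assuming a tabular critic `v[s]` and a tabular softmax actor `theta[s][a]`. The function name, the separate learning rates, and the `done` flag are illustrative assumptions.

```python
import math

def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]

def actor_critic_step(theta, v, s, a, r, s_next, done,
                      alpha_actor, alpha_critic, gamma):
    """Apply one TD(0) critic update and one policy-gradient actor update."""
    target = r if done else r + gamma * v[s_next]
    delta = target - v[s]                  # TD error, computed before the critic moves
    probs = softmax(theta[s])
    v[s] += alpha_critic * delta           # critic moves toward the TD target
    for b in range(len(theta[s])):
        grad_log = (1.0 if b == a else 0.0) - probs[b]
        theta[s][b] += alpha_actor * delta * grad_log  # actor follows the critic signal
    return delta
```

A positive `delta` raises the probability of the action just taken, and a negative one lowers it.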

The Advantage Function

- To improve stability and efficiency, actor–critic methods often use the advantage function, which measures how much better an action \(a\) is compared to the average action in a given state:

\[A^{\pi}(s,a) = Q^{\pi}(s,a) - V^{\pi}(s)\]

- Using the advantage function instead of raw returns reduces variance in policy gradient estimates, leading to smoother learning.

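- The resulting update rule becomes:

\[\theta \leftarrow \theta + \alpha \nabla_\theta \log \pi_\theta(a_t \mid s_t) \, A^{\pi}(s_t, a_t)\]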

- This formulation unifies the critic’s evaluative feedback with the actor’s improvement mechanism.

Classical Actor–Critic Algorithms

- The actor–critic paradigm originated with the Adaptive Heuristic Critic (AHC) architecture proposed by Barto, Sutton & Anderson (1983).

-

It introduced the two-network idea — one learning to evaluate (critic) and another learning to control (actor).

-

Subsequent developments expanded this framework into more specialized variants:

-

Incremental Natural Actor–Critic (INAC): Building on the Natural Actor–Critic of Peters & Schaal (2008), INAC integrates natural gradient concepts (from Kakade, 2001) to improve convergence stability in actor–critic settings.

-

Continuous Actor–Critic Learning Automaton (CACLA): Introduced by Van Hasselt & Wiering (2007), CACLA extended actor–critic methods to continuous action domains by updating the actor only when the TD error is positive — i.e., when the action performed better than expected.

-

Asynchronous Advantage Actor–Critic (A3C): Although A3C was introduced as a deep RL algorithm (Mnih et al., 2016), its theoretical roots lie in classical actor–critic formulations. It uses parallel workers to stabilize policy updates based on advantage estimation, and conceptually descends from earlier work on synchronous actor–critic learning.

-

Policy Evaluation and Improvement Cycle

-

Actor–Critic algorithms can be seen as implementing a generalized policy iteration (GPI) process — alternating between:

-

Policy Evaluation: The critic estimates \(V^{\pi}(s)\) or \(Q^{\pi}(s,a)\) using TD learning or Monte Carlo rollouts.

-

Policy Improvement: The actor updates \(\pi_\theta(a \mid s)\) using gradient ascent based on the critic’s feedback.

-

-

This dynamic mirrors classical policy iteration by Howard (1960), but operates incrementally and stochastically, enabling online learning in complex environments.

Advantages and Limitations

-

Advantages:

- Combines the strengths of policy and value methods (lower variance than Monte Carlo policy gradients, at the cost of some bias from bootstrapping).

- Suitable for continuous action spaces.

- Supports online and incremental learning.

- Naturally extends to partially observable and stochastic domains.

-

Limitations:

- Sensitive to critic accuracy; unstable when critic is poorly estimated.

- Requires careful tuning of learning rates for actor and critic.

- Can exhibit oscillatory dynamics if updates are not synchronized.

Comparative Analysis

| Method | Core Idea | Advantage Function | Continuous Actions | Key References |

|---|---|---|---|---|

| Actor–Critic (AHC) | Two-network structure: actor (policy) and critic (value) | Optional | Yes | Barto, Sutton & Anderson (1983) |

| INAC | Combines actor–critic with natural gradients for stability | Yes | Yes | Peters & Schaal (2008) |

| CACLA | Updates actor only for positive TD errors | Implicit | Yes | Van Hasselt & Wiering (2007) |

| GPI View | Alternating evaluation and improvement loops | Yes | General | Howard (1960) |

Model-Based Reinforcement Learning

-

Model-Based Reinforcement Learning (MBRL) refers to a family of techniques that explicitly learn or exploit a model of the environment’s dynamics to predict future states and rewards, enabling planning and sample-efficient policy optimization. Unlike model-free methods that learn purely from experience, model-based approaches simulate potential futures to guide decision-making.

-

This distinction makes model-based methods conceptually closer to optimal control theory and planning algorithms used in operations research and robotics.

The Environment Model

-

The central concept in model-based RL is the Markov Decision Process (MDP) model, represented by:

- Transition Function: \(P(s' \mid s,a) = \Pr(S_{t+1}=s' \mid S_t=s, A_t=a)\)

- Reward Function: \(R(s,a) = \mathbb{E}[r_{t+1} \mid s,a]\)

-

With access to these functions, one can compute expected returns, plan trajectories, and compute optimal policies using classical dynamic-programming algorithms such as Value Iteration (Bellman, 1957) and Policy Iteration (Howard, 1960).

-

The model can either be:

- Given (known dynamics): The environment is fully specified, as in many simulated domains.

- Learned (unknown dynamics): The agent estimates \(P(s' \mid s,a)\) and \(R(s,a)\) from collected experience.

Planning with a Model

- Given a known model, the agent can perform planning — evaluating and improving policies without interacting with the real environment.

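- This is accomplished by recursively solving the Bellman Optimality Equation:

\[V^*(s) = \max_a \left[ R(s,a) + \gamma \sum_{s'} P(s' \mid s,a) \, V^*(s') \right]\]

- and deriving the corresponding optimal policy:

\[\pi^*(s) = \arg\max_a \left[ R(s,a) + \gamma \sum_{s'} P(s' \mid s,a) \, V^*(s') \right]\]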

- This class of methods, encompassing policy iteration and value iteration, forms the foundation of model-based reasoning and exact planning in small-scale or deterministic environments.
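Planning with a known model can be sketched with value iteration on a tiny MDP. The data layout (`P[s][a]` as a list of `(probability, next_state)` pairs and `R[s][a]` as the expected reward) and the two-state example are assumptions made for illustration.

```python
def value_iteration(P, R, gamma, tol=1e-10):
    """Repeat Bellman optimality backups until the values stop changing."""
    n_states = len(P)
    V = [0.0] * n_states

    def backup(s, a, values):
        return R[s][a] + gamma * sum(p * values[s2] for p, s2 in P[s][a])

    while True:
        new_V = [max(backup(s, a, V) for a in range(len(P[s])))
                 for s in range(n_states)]
        converged = max(abs(x - y) for x, y in zip(new_V, V)) < tol
        V = new_V
        if converged:
            break
    # Greedy policy with respect to the converged values.
    policy = [max(range(len(P[s])), key=lambda a, s=s: backup(s, a, V))
              for s in range(n_states)]
    return V, policy

# Two states, two actions: action 0 stays put, action 1 switches state.
P = [[[(1.0, 0)], [(1.0, 1)]],
     [[(1.0, 1)], [(1.0, 0)]]]
R = [[0.0, 1.0],
     [2.0, 0.0]]
V, policy = value_iteration(P, R, gamma=0.5)
```

Here staying in state 1 earns reward 2 forever, so the planner learns to move from state 0 to state 1 and then stay.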

Learning the Model

- In more realistic settings, the transition and reward models are not known a priori.

- In such cases, the agent must learn an approximate model from experience:

\[\hat{P}(s' \mid s,a) \approx P(s' \mid s,a), \qquad \hat{R}(s,a) \approx R(s,a)\]

-

Learning these models transforms the RL problem into a supervised learning task, where the goal is to predict next states and rewards from observed transitions \((s,a,s',r)\).

-

Model-learning can use:

- Tabular frequency estimates (in small discrete environments),

- Regression or Gaussian processes (Deisenroth & Rasmussen, 2011),

- or function approximators (in continuous spaces).
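For the tabular case, model learning reduces to counting. The sketch below estimates \(\hat{P}\) and \(\hat{R}\) from a list of observed transitions \((s,a,s',r)\). The function name and the dictionary-based layout are illustrative assumptions.

```python
from collections import defaultdict

def fit_tabular_model(transitions):
    """Estimate P_hat(s'|s,a) by relative frequency and R_hat(s,a) by the mean reward."""
    next_counts = defaultdict(lambda: defaultdict(int))
    reward_sums = defaultdict(float)
    visits = defaultdict(int)
    for s, a, s_next, r in transitions:
        next_counts[(s, a)][s_next] += 1
        reward_sums[(s, a)] += r
        visits[(s, a)] += 1
    P_hat = {sa: {s2: c / visits[sa] for s2, c in nxt.items()}
             for sa, nxt in next_counts.items()}
    R_hat = {sa: reward_sums[sa] / visits[sa] for sa in visits}
    return P_hat, R_hat

data = [(0, 0, 1, 1.0), (0, 0, 1, 1.0), (0, 0, 0, 0.0), (0, 0, 1, 2.0)]
P_hat, R_hat = fit_tabular_model(data)
```

State-action pairs that were never visited are simply absent from the estimates, which is one concrete form of the model bias discussed later.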

The Dyna Architecture

-

A seminal hybrid framework combining learning, planning, and acting was proposed in Dyna by Sutton (1990). Dyna integrates:

- Model learning: Build an internal model from experience.

- Planning: Generate synthetic experiences from the model to update the value function.

- Real experience: Continue updating from actual environment interactions.

-

This allows the agent to perform imaginary rollouts using its learned model, accelerating learning while maintaining adaptability.

-

Formally, Dyna’s process alternates between:

-

Direct RL update (from real experiences):

\[Q(s,a) \leftarrow Q(s,a) + \alpha [r + \gamma \max_{a'} Q(s',a') - Q(s,a)]\]

-

Simulated updates (using the learned model \(\hat{P}, \hat{R}\)):

\[\tilde{Q}(s,a) \leftarrow \tilde{Q}(s,a) + \alpha [\hat{R}(s,a) + \gamma \max_{a'} \tilde{Q}(\hat{s}',a') - \tilde{Q}(s,a)]\]

-

-

This integration of planning and learning was foundational to later sample-efficient RL systems.
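The loop of acting, model learning, and planning can be sketched as a tabular Dyna-Q agent. This is a simplified illustration under stated assumptions: a deterministic environment given by a hypothetical `step(s, a)` function, a single Q-table shared by real and simulated updates, and a four-state chain as the test environment.

```python
import random

def dyna_q(step, start, goal, n_actions, episodes=50, planning_steps=20,
           alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = {}       # Q[(s, a)] -> action-value estimate
    model = {}   # model[(s, a)] -> (reward, next_state), assumed deterministic

    def q(s, a):
        return Q.get((s, a), 0.0)

    def greedy(s):
        best = max(q(s, a) for a in range(n_actions))
        return rng.choice([a for a in range(n_actions) if q(s, a) == best])

    def update(s, a, r, s2):
        bootstrap = 0.0 if s2 == goal else max(q(s2, b) for b in range(n_actions))
        Q[(s, a)] = q(s, a) + alpha * (r + gamma * bootstrap - q(s, a))

    for _ in range(episodes):
        s = start
        while s != goal:
            a = rng.randrange(n_actions) if rng.random() < epsilon else greedy(s)
            r, s2 = step(s, a)
            update(s, a, r, s2)                # direct RL update from real experience
            model[(s, a)] = (r, s2)            # model learning
            for _ in range(planning_steps):    # planning with simulated experience
                ps, pa = rng.choice(list(model))
                pr, ps2 = model[(ps, pa)]
                update(ps, pa, pr, ps2)
            s = s2
    return Q

def chain_step(s, a):
    """Chain 0-1-2-3: action 1 moves right, action 0 moves left; reaching 3 pays 1."""
    s2 = min(s + 1, 3) if a == 1 else max(s - 1, 0)
    return (1.0 if s2 == 3 else 0.0), s2

Q = dyna_q(chain_step, start=0, goal=3, n_actions=2)
```

Each real step is followed by `planning_steps` replayed transitions from the learned model, which is what lets value information spread along the chain after only a few real episodes.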

Strengths and Challenges

-

Advantages:

- Sample efficiency: Learns faster due to simulated experience.

- Planning capability: Can evaluate long-term effects before acting.

-

Flexibility: Unifies learning and control.

-

Challenges:

- Model bias: Imperfect models can lead to suboptimal or unstable policies.

- Complexity: Model estimation adds computational and representational burden.

- Scalability: Accurate models are difficult in large or stochastic environments.

Comparative Analysis

| Method | Requires Model | Planning Component | Sample Efficiency | Key References |

|---|---|---|---|---|

| Value/Policy Iteration | Yes (known model) | Full backups | High (exact) | Bellman (1957); Howard (1960) |

| Learned Models | Estimated from data | Yes | Moderate | Deisenroth & Rasmussen (2011) |

| Dyna Architecture | Yes (learned) | Integrated | High | Sutton (1990) |

Model-Free Reinforcement Learning

-

Model-Free Reinforcement Learning (MFRL) refers to a broad class of algorithms that learn optimal behavior without explicitly modeling the environment’s dynamics. Instead of estimating transition probabilities \(P(s' \mid s,a)\) or reward functions \(R(s,a)\), model-free agents learn value functions or policies directly from raw experience tuples \((s, a, r, s')\).

-

This makes MFRL algorithms simpler and more general, at the expense of sample efficiency. They form the practical foundation for most online RL systems and are closely tied to the concept of trial-and-error learning.

Foundations

- In a model-free setting, the agent’s objective remains to learn an optimal policy \(\pi^*(a \mid s)\) that maximizes the expected return:

\[\pi^* = \arg\max_{\pi} \; \mathbb{E}_{\pi} \left[ \sum_{t=0}^{\infty} \gamma^{t} r_t \right]\]

- However, since the agent does not possess an explicit model of the environment, it must approximate this expectation using empirical experience collected through exploration.

- Learning proceeds incrementally by adjusting estimates of value functions or policies based on observed temporal-difference (TD) errors.

On-Policy vs. Off-Policy Learning

-

A key distinction in model-free RL is how experiences are gathered and used:

-

On-Policy Methods: Learn from actions taken by the current policy (e.g., SARSA). The agent learns to evaluate and improve the same policy it uses for exploration.

-

Off-Policy Methods: Learn from actions generated by a different policy (e.g., Q-Learning). This allows leveraging historical or exploratory data for more efficient learning.

-

-

This dichotomy was formalized by Precup, Sutton & Singh (2000), who introduced importance sampling corrections to enable off-policy evaluation.

SARSA: On-Policy TD Control

- SARSA (State–Action–Reward–State–Action), proposed by Rummery & Niranjan (1994), is an on-policy temporal-difference control algorithm.

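- It updates the action-value function \(Q(s,a)\) based on the transition sequence \((s_t, a_t, r_t, s_{t+1}, a_{t+1})\):

\[Q(s_t,a_t) \leftarrow Q(s_t,a_t) + \alpha \left[ r_t + \gamma Q(s_{t+1},a_{t+1}) - Q(s_t,a_t) \right]\]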

-

This update reflects the return expected from continuing to act according to the current policy, exploratory actions included, which tends to make SARSA more conservative and stable than methods that bootstrap from a greedy target, though sometimes slower to converge.

-

Key properties:

- Evaluates the current (behavior) policy directly.

- Naturally balances exploration and exploitation.

- More robust under stochasticity.
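A single SARSA update can be written in a few lines. The sketch assumes a tabular `Q[s][a]` stored as a list of lists; the function name is hypothetical.

```python
def sarsa_update(Q, s, a, r, s_next, a_next, alpha, gamma):
    """On-policy TD update: bootstrap from the action actually chosen in s_next."""
    target = r + gamma * Q[s_next][a_next]
    Q[s][a] += alpha * (target - Q[s][a])
    return Q[s][a]

Q = [[0.0, 0.0], [0.0, 2.0]]
# The behaviour policy picked action 0 in the next state, so its value (0.0) is used,
# even though action 1 looks better there.
new_value = sarsa_update(Q, s=0, a=1, r=1.0, s_next=1, a_next=0, alpha=0.5, gamma=0.9)
```

Because the target uses `a_next`, any exploratory choice in the next state directly affects the estimate.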

Q-Learning: Off-Policy TD Control

- Q-Learning, introduced by Watkins & Dayan (1992), is the archetypal off-policy model-free algorithm.

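- It estimates the optimal action-value function \(Q^*(s,a)\) by updating toward the maximum value achievable from the next state, regardless of the current behavior policy:

\[Q(s_t,a_t) \leftarrow Q(s_t,a_t) + \alpha \left[ r_t + \gamma \max_{a'} Q(s_{t+1},a') - Q(s_t,a_t) \right]\]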

-

This formulation decouples the behavior policy (which may explore) from the target policy (which is greedy with respect to \(Q\)), enabling learning from arbitrary data sources or replay buffers.

-

Key properties:

- Converges to \(Q^*\) under standard assumptions (finite state-action space, every state–action pair visited infinitely often, appropriately decaying learning rate).

- Highly flexible — can learn from off-policy or logged data.

- The foundation for most modern off-policy algorithms, including Deep Q-Networks (DQN).
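For comparison with SARSA, a single Q-learning update is sketched below under the same tabular assumption (`Q[s][a]` as a list of lists, hypothetical function name). The only difference is the greedy bootstrap.

```python
def q_learning_update(Q, s, a, r, s_next, alpha, gamma, done=False):
    """Off-policy TD update: bootstrap from the best action in s_next."""
    bootstrap = 0.0 if done else max(Q[s_next])
    target = r + gamma * bootstrap
    Q[s][a] += alpha * (target - Q[s][a])
    return Q[s][a]

Q = [[0.0, 0.0], [0.0, 2.0]]
# Whatever the behaviour policy does next, the target uses max(Q[1]) = 2.0.
new_value = q_learning_update(Q, s=0, a=1, r=1.0, s_next=1, alpha=0.5, gamma=0.9)
```

On the same transition, an on-policy update that bootstraps from a non-greedy next action would produce a smaller target.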

Exploration Strategies

-

Model-free RL requires effective exploration to ensure sufficient coverage of the state–action space. Common strategies include:

- \(\varepsilon\)-Greedy Exploration:

- With probability \(1 - \varepsilon\), choose the greedy action; with probability \(\varepsilon\), pick a random one.

- Balances exploitation of known high-value actions with exploration of new ones.

- Softmax / Boltzmann Exploration:

- Selects actions probabilistically according to their estimated Q-values: \(P(a \mid s) = \frac{e^{Q(s,a)/\tau}}{\sum_{b} e^{Q(s,b)/\tau}}\)

- where \(\tau\) controls exploration temperature.

- Upper Confidence Bounds (UCB):

- Encourages exploration of actions with higher uncertainty in their value estimates.

-

These techniques are crucial for preventing premature convergence to suboptimal policies, especially in stochastic or large environments.
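The three strategies can be sketched as small action-selection helpers. The function names are hypothetical, and the UCB score used here (the UCB1 form with exploration constant `c`) is one common choice assumed for illustration, since the text does not fix a formula.

```python
import math
import random

def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])

def boltzmann_probs(q_values, tau):
    """Softmax over Q-values; a larger tau gives a flatter distribution."""
    m = max(q_values)
    exps = [math.exp((q - m) / tau) for q in q_values]
    z = sum(exps)
    return [e / z for e in exps]

def ucb_action(q_values, counts, t, c):
    """Pick the action with the highest value plus uncertainty bonus."""
    def score(a):
        if counts[a] == 0:
            return float("inf")   # untried actions are always explored first
        return q_values[a] + c * math.sqrt(math.log(t) / counts[a])
    return max(range(len(q_values)), key=score)

rng = random.Random(0)
greedy_choice = epsilon_greedy([1.0, 3.0, 2.0], epsilon=0.0, rng=rng)
probs = boltzmann_probs([1.0, 2.0], tau=1.0)
ucb_choice = ucb_action([1.0, 0.9], counts=[100, 1], t=101, c=1.0)
```

In the last call the second action has a slightly lower estimate but has been tried only once, so its bonus dominates and it is selected.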

Strengths and Limitations

-

Advantages:

- Simpler and easier to implement than model-based methods.

- No need for an explicit environment model.

- Robust across varied environments and tasks.

-

Limitations:

- Poor sample efficiency due to reliance on real experience.

- Limited ability to plan or simulate long-term outcomes.

- Exploration–exploitation trade-offs can be difficult to tune.

Comparative Analysis

| Algorithm | Policy Type | Model Requirement | Learning Type | Key References |

|---|---|---|---|---|

| SARSA | On-policy | Model-free | TD control | Rummery & Niranjan (1994) |

| Q-Learning | Off-policy | Model-free | TD control | Watkins & Dayan (1992) |

| Off-Policy Evaluation | Off-policy | Model-free | Importance sampling | Precup, Sutton & Singh (2000) |

On-Policy vs. Off-Policy Reinforcement Learning

-

In RL, a critical design choice is how experience is collected and used to update the agent’s knowledge. This gives rise to two fundamental paradigms — on-policy and off-policy learning — which differ in the relationship between the policy being improved and the policy being used to generate data.

-

These paradigms span across value-based, policy-based, and actor–critic methods, and understanding their trade-offs is essential for algorithm design and stability.

Core Distinction

-

Let:

- \(\pi\) denote the target policy, i.e., the policy being optimized, and

- \(\mu\) denote the behavior policy, i.e., the policy used to generate experience data.

-

Then:

-

On-Policy Learning: \(\pi = \mu\). The agent learns from data generated by its current policy.

-

Off-Policy Learning: \(\pi \neq \mu\). The agent learns from data collected under a different policy (e.g., past versions of itself, exploratory policies, or logged data).

-

-

This distinction influences the agent’s stability, efficiency, and ability to reuse old experiences.

On-Policy Learning

-

In on-policy methods, the agent continuously improves the same policy it uses to interact with the environment. This ensures consistency between learning and behavior, but requires ongoing exploration and data collection.

-

Mathematically, for a policy \(\pi\), the value function satisfies:

\[V^{\pi}(s) = \mathbb{E}_{\pi} \left[ r_t + \gamma V^{\pi}(s_{t+1}) \mid s_t = s \right]\]

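- A classical example is SARSA (Rummery & Niranjan, 1994), which updates its Q-values based on the actual next action taken by the same policy:

\[Q(s_t,a_t) \leftarrow Q(s_t,a_t) + \alpha \left[ r_t + \gamma Q(s_{t+1},a_{t+1}) - Q(s_t,a_t) \right]\]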

- This results in a learning process that closely tracks the policy’s real performance — leading to greater stability, though potentially slower convergence.

Off-Policy Learning

-

In off-policy methods, the agent can learn from experience generated by another policy, allowing it to leverage past data, demonstrations, or exploration strategies.

-

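For example, Q-Learning (Watkins & Dayan, 1992) uses the behavior policy to collect data, but learns about the optimal (greedy) target policy:

\[Q(s_t,a_t) \leftarrow Q(s_t,a_t) + \alpha \left[ r_t + \gamma \max_{a'} Q(s_{t+1},a') - Q(s_t,a_t) \right]\]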

- Here, the agent’s learning policy (greedy) differs from its behavior policy (exploratory) — enabling data reuse, offline learning, and greater flexibility.

Importance Sampling for Off-Policy Correction

- Off-policy learning introduces distribution mismatch between the target policy \(\pi\) and behavior policy \(\mu\).

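- To correct for this bias, importance sampling re-weights returns by the probability ratio of target and behavior policies. For an episode whose last action is taken at time \(T\), the ratio accumulated over the remaining steps is:

\[\rho_t = \prod_{k=t}^{T} \frac{\pi(a_k \mid s_k)}{\mu(a_k \mid s_k)}\]

-

The corrected value estimate becomes:

\[V^{\pi}(s_t) = \mathbb{E}_{\mu} \left[ \rho_t \, G_t \right]\]

- where \(G_t = \sum_{k=t}^{T} \gamma^{k-t} r_k\) is the observed return. For one-step TD targets, only the single-step ratio \(\pi(a_t \mid s_t) / \mu(a_t \mid s_t)\) is needed.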

-

This technique allows off-policy algorithms to learn about arbitrary target policies from diverse datasets — a foundation for offline RL and batch learning.

-

Key reference: Precup, Sutton & Singh (2000).
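A sketch of ordinary importance sampling for off-policy evaluation is given below. It assumes episodes stored as lists of `(state, action, reward)` and policies exposed as probability functions `target_prob(s, a)` and `behavior_prob(s, a)`; these names are hypothetical. The ratio is accumulated as a product over all steps of each episode.

```python
def importance_sampling_estimate(episodes, target_prob, behavior_prob, gamma):
    """Average of rho * G over episodes collected under the behaviour policy."""
    total = 0.0
    for episode in episodes:
        rho, ret = 1.0, 0.0
        for t, (s, a, r) in enumerate(episode):
            rho *= target_prob(s, a) / behavior_prob(s, a)  # cumulative likelihood ratio
            ret += (gamma ** t) * r                         # discounted return from step 0
        total += rho * ret
    return total / len(episodes)

# One-step bandit: behaviour is uniform over two actions, target always picks action 1.
episodes = [[(0, 1, 1.0)], [(0, 0, 0.0)]]
estimate = importance_sampling_estimate(
    episodes,
    target_prob=lambda s, a: 1.0 if a == 1 else 0.0,
    behavior_prob=lambda s, a: 0.5,
    gamma=1.0,
)
```

The episode that the target policy would never produce gets weight zero, and the other is up-weighted by a factor of two, so the average recovers the target policy's value of 1.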

Bias–Variance Trade-Off

- The two paradigms exhibit complementary characteristics:

| Property | On-Policy | Off-Policy |

|---|---|---|

| Bias | Low (samples match learning policy) | Potentially high (distribution mismatch) |

| Variance | Moderate | High (due to importance weights) |

| Sample Efficiency | Low (requires fresh data) | High (reuses past experiences) |

| Stability | High | Can be unstable without correction |

| Applicability | Online / continual learning | Offline / batch learning |

- In practice, hybrid approaches such as actor–critic or experience replay systems combine both paradigms to balance stability and efficiency.

Examples of On- and Off-Policy Algorithms

| Algorithm | Type | Method Class | Learning Mechanism | Key References |

|---|---|---|---|---|

| SARSA | On-Policy | Value-Based | TD update using actual next action | Rummery & Niranjan (1994) |

| REINFORCE | On-Policy | Policy-Based | Monte Carlo gradient using own policy | Williams (1992) |

| Actor–Critic (A2C) | On-Policy | Hybrid | TD-based advantage estimation | Barto, Sutton & Anderson (1983); Mnih et al. (2016) |

| Q-Learning | Off-Policy | Value-Based | Bootstrapped max operator | Watkins & Dayan (1992) |

| Dyna-Q | Off-Policy | Model-Based | Synthetic rollouts with Q-learning | Sutton (1990) |

| Off-Policy Policy Gradient (OPPG) | Off-Policy | Policy-Based | Importance-weighted gradient updates | Degris, White & Sutton (2012) |

Takeaways

-

On-policy methods excel in stability and interpretability, making them ideal for online learning in dynamic environments. Off-policy methods, in contrast, enable data efficiency and reusability, powering modern offline RL and experience replay systems.

-

Both paradigms are fundamental to RL’s evolution — their interplay forming the theoretical basis for hybrid algorithms such as actor–critic, Dyna, and deep variants like DDPG and SAC in later generations.

Deep Reinforcement Learning

-

Deep Reinforcement Learning (Deep RL) refers to the integration of deep neural networks with RL, enabling agents to operate in high-dimensional, raw-input spaces (such as images or sensor feeds) and learn complex policies or value functions with minimal manual feature engineering. Classical RL methods (value-based, policy-based, model-based etc.) provided the foundational theory; Deep RL extends these by using neural networks as function approximators for value functions, policies, or models.

-

In Deep RL, one often writes:

\[\pi_\theta(a \mid s), \quad V_w(s), \quad Q_w(s,a)\]

- where \(\theta, w\) are deep network parameters. The networks can approximate large or continuous state and action spaces, enabling Deep RL to surpass classical tabular or linear-function-approximation RL in many applications.

-

Below are the major families of deep RL techniques which have defined the landscape of deep RL, with each representing a distinct way of integrating neural networks with the RL paradigm.

Deep Value-Based Methods

-

These methods extend classical value-based RL by approximating \(Q(s,a)\) (or \(V(s)\)) via deep neural networks and selecting actions greedily (or nearly so) from those networks.

- Deep Q-Network (DQN), introduced in “Human-level control through deep reinforcement learning” by Mnih et al. (2015), showed an agent learning to play Atari 2600 games from raw pixels.

- Variants include Double DQN, Dueling networks, prioritized experience replay, etc.

Deep Policy-Based Methods

-

In this family, the policy \(\pi_\theta(a \mid s)\) is parameterized by a deep network and optimized directly via policy gradients, bypassing explicit value-function estimation (though value functions may still be used as baselines).

- Policy Gradient Methods (function‐approximation context) by Sutton et al. (2000) — although not “deep” per se, this work laid the basis for deep policy-gradient RL.

- Later deep-policy work includes algorithms like TRPO, PPO, etc.

Deep Actor–Critic Methods

-

These methods combine deep policy networks (actor) with deep value or Q-networks (critic). The critic evaluates the current policy, and the actor uses this feedback to update. They offer the expressiveness of deep policies with the stability of value-based evaluation.

- A representative method is Deep Deterministic Policy Gradient (DDPG) by Lillicrap et al. (2015), which handles continuous action spaces using an actor–critic architecture.

- More recent deep actor–critics include SAC, TD3, etc.

Deep Model-Based Methods

- Here, deep networks are used to learn models of the environment \(\hat P(s' \mid s,a), \hat R(s,a)\), or latent dynamics, which enable planning or simulation in high-dimensional spaces.

Deep Value-Based Methods

- Deep Value-Based methods extend classical value-based RL—such as Q-learning—by using deep neural networks to approximate the value function \(Q(s,a)\).

- This innovation enables agents to operate in high-dimensional observation spaces (like raw images), overcoming the limitations of tabular and linear methods that dominated early RL research.

Background: From Q-Learning to Deep Q-Learning

- In classical Q-learning, the optimal action-value function satisfies the Bellman Optimality Equation:

\[Q^*(s,a) = \mathbb{E}_{s'} \left[ r + \gamma \max_{a'} Q^*(s',a') \;\middle|\; s, a \right]\]

- However, maintaining a tabular representation of \(Q(s,a)\) becomes infeasible in large or continuous state spaces.

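- Deep Value-Based methods overcome this by parameterizing \(Q(s,a)\) as a deep neural network \(Q_\theta(s,a)\), trained to minimize the squared Temporal Difference (TD) error over sampled transitions \((s,a,r,s')\):

\[L(\theta) = \mathbb{E}_{(s,a,r,s')} \left[ \Big( r + \gamma \max_{a'} Q_{\theta^-}(s',a') - Q_\theta(s,a) \Big)^2 \right]\]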

- Here, \(\theta^-\) represents the parameters of a target network, updated periodically to stabilize training.

Deep Q-Network (DQN)

- The Deep Q-Network (DQN) introduced by Mnih et al. (2015) marked a watershed moment for RL.

-

By integrating convolutional neural networks with Q-learning, DQN achieved human-level control on Atari 2600 games from raw pixel inputs.

-

DQN introduced two key innovations to stabilize learning:

- Experience Replay: Transitions \((s,a,r,s')\) are stored in a replay buffer and sampled uniformly to break correlation between sequential updates.

- Target Network: A separate network \(Q_{\theta^-}\) is used for target computation, updated less frequently to prevent divergence.

- The combined algorithm iteratively minimizes the TD loss above, which in practice makes training markedly more stable in high-dimensional settings.
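The two stabilizers can be sketched without any deep learning library. The snippet below assumes a fixed-capacity buffer with uniform sampling and a hypothetical callable `target_q(s)` that stands in for the frozen target network and returns one value per action.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-size store of transitions; the oldest entries are evicted first."""
    def __init__(self, capacity, seed=0):
        self.storage = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, s, a, r, s_next, done):
        self.storage.append((s, a, r, s_next, done))

    def sample(self, batch_size):
        # Uniform sampling breaks the correlation between consecutive steps.
        return self.rng.sample(list(self.storage), batch_size)

    def __len__(self):
        return len(self.storage)

def td_targets(batch, target_q, gamma):
    """y = r for terminal steps, else r + gamma * max_a' Q_target(s', a')."""
    return [r if done else r + gamma * max(target_q(s_next))
            for (_, _, r, s_next, done) in batch]

buffer = ReplayBuffer(capacity=2)
for i in range(3):
    buffer.add(i, 0, float(i), i + 1, False)
targets = td_targets([(0, 0, 1.0, 1, False), (1, 1, 2.0, 2, True)],
                     target_q=lambda s: [0.5, 1.5], gamma=0.9)
```

In a full agent the online network would be regressed toward these targets, and `target_q` would be refreshed from the online network only every fixed number of steps.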

Double DQN

- One major limitation of the original DQN was overestimation bias in value updates due to the use of \(\max_{a'} Q(s',a')\) both for action selection and evaluation.

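- To address this, Double DQN by van Hasselt et al. (2016) decouples these steps, selecting the next action with the online network and evaluating it with the target network:

\[y = r + \gamma \, Q_{\theta^-}\Big(s', \arg\max_{a'} Q_\theta(s',a')\Big)\]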

- This reduces overestimation and yields more accurate Q-value estimates, improving both stability and performance.
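The difference between the two targets is easiest to see on concrete numbers. The sketch below takes the next-state action values from the online and target networks as plain lists; the function names and the example values are illustrative assumptions.

```python
def dqn_target(r, gamma, q_target_next):
    """Standard DQN: the target network both selects and evaluates the action."""
    return r + gamma * max(q_target_next)

def double_dqn_target(r, gamma, q_online_next, q_target_next):
    """Double DQN: the online network selects, the target network evaluates."""
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return r + gamma * q_target_next[best_action]

q_online_next = [1.0, 3.0, 2.0]
q_target_next = [5.0, 0.5, 4.0]   # action 0 is (perhaps spuriously) high here
y_dqn = dqn_target(1.0, 0.9, q_target_next)
y_double = double_dqn_target(1.0, 0.9, q_online_next, q_target_next)
```

The standard target chases the largest target-network value, while the decoupled target only uses the value of the action the online network prefers, which dampens the effect of a single overestimated entry.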

Dueling Network Architecture

-

The Dueling DQN architecture by Wang et al. (2016) decomposes the Q-function into two separate estimators:

- A state-value function \(V(s)\)

- An advantage function \(\hat{A}(s,a)\)

-

The combined Q-function is then reconstructed as:

\[Q(s,a) = V(s) + \left( \hat{A}(s,a) - \frac{1}{|\mathcal{A}|} \sum_{a'} \hat{A}(s,a') \right)\]

- This structure improves learning efficiency by allowing the network to learn which states are valuable, independent of the specific actions.
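The aggregation step of a dueling head can be sketched as below, assuming the mean-subtracted form in which the advantages are centred before being added to the state value. The function name is hypothetical, and the two streams are given as plain numbers rather than network outputs.

```python
def dueling_q_values(state_value, advantages):
    """Combine the value stream and the advantage stream into Q-values."""
    mean_adv = sum(advantages) / len(advantages)
    # Centring the advantages makes the split between V and A identifiable.
    return [state_value + (adv - mean_adv) for adv in advantages]

q_values = dueling_q_values(2.0, [1.0, 2.0, 3.0])
shifted = dueling_q_values(2.0, [11.0, 12.0, 13.0])
```

Adding a constant to every advantage leaves the Q-values unchanged, so the value stream alone determines their average level.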

Prioritized Experience Replay

- Standard DQN samples uniformly from the replay buffer, treating all transitions equally.

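- Prioritized Experience Replay by Schaul et al. (2016) instead samples transitions with probability proportional to their TD error magnitude, raised to a prioritization exponent \(\omega \ge 0\) (a small positive constant is usually added to each priority so that zero-error transitions remain sampleable):

\[P(i) = \frac{|\delta_i|^{\omega}}{\sum_k |\delta_k|^{\omega}}\]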

-

This focuses updates on transitions where the model is most surprised, improving data efficiency and convergence rates.

-

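To correct for the bias introduced by non-uniform sampling, importance sampling weights are applied, where \(N\) is the buffer size and \(\beta \in [0,1]\) controls the strength of the correction:

\[w_i = \left( \frac{1}{N \, P(i)} \right)^{\beta}\]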
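Sampling probabilities and correction weights can be computed as in the sketch below. The parameter names `omega`, `beta`, and `eps`, and the normalization of the weights by their maximum, are assumptions for illustration (the normalization is a common practical choice that keeps update sizes bounded).

```python
def per_probabilities(td_errors, omega, eps=1e-6):
    """Sampling probability proportional to (|td error| + eps) ** omega."""
    priorities = [(abs(d) + eps) ** omega for d in td_errors]
    total = sum(priorities)
    return [p / total for p in priorities]

def per_weights(probabilities, beta):
    """Importance weights (1 / (N * P(i))) ** beta, scaled so the largest is 1."""
    n = len(probabilities)
    raw = [(1.0 / (n * p)) ** beta for p in probabilities]
    largest = max(raw)
    return [w / largest for w in raw]

probs = per_probabilities([1.0, -1.0, 2.0], omega=1.0, eps=0.0)
weights = per_weights(probs, beta=1.0)
uniform = per_probabilities([1.0, -1.0, 2.0], omega=0.0)
```

With `omega=0` the scheme falls back to uniform sampling, and with `beta=1` the weights fully compensate for the non-uniform sampling.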

Extensions and Variants

-

Several extensions of DQN further improved stability and performance:

- NoisyNet DQN (Fortunato et al., 2018): adds parameterized noise for exploration.

- Rainbow DQN (Hessel et al., 2018): integrates multiple DQN enhancements (Double DQN, Dueling, Prioritized Replay, Noisy Nets, Distributional RL, and N-Step Returns).

- Distributional DQN (Bellemare et al., 2017): learns a distribution over returns rather than a scalar expected value.

Comparative Analysis

| Algorithm | Key Idea | Core Innovation | Reference |

|---|---|---|---|

| DQN | Deep neural approximation of Q-function | Replay buffer, target network | Mnih et al., 2015 |

| Double DQN | Reduces overestimation bias | Decouples selection and evaluation | van Hasselt et al., 2016 |

| Dueling DQN | Decomposes value and advantage | Separate value and advantage streams | Wang et al., 2016 |

| Prioritized Replay | Sample important transitions | Weighted replay sampling | Schaul et al., 2016 |

| Rainbow DQN | Combines six DQN extensions | Unified architecture | Hessel et al., 2018 |

Deep Policy-Based Methods

- While value-based methods estimate \(Q(s,a)\) or \(V(s)\) and act greedily with respect to those values, policy-based RL directly optimizes a parameterized policy \(\pi_\theta(a \mid s)\) to maximize expected return. This direct optimization allows the handling of continuous or stochastic action spaces and yields smoother learning dynamics.

- Deep Policy-Based Methods extend classical policy-gradient ideas by representing \(\pi_\theta(a \mid s)\) as a deep neural network, enabling end-to-end learning from high-dimensional inputs such as images or sensor data.

Policy Gradient Theorem

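- The goal is to maximize the expected return:

\[J(\theta) = \mathbb{E}_{\tau \sim \pi_\theta} \left[ \sum_{t} \gamma^{t} r_t \right]\]

- The Policy Gradient Theorem (Sutton et al., 2000) gives the gradient of \(J(\theta)\):

\[\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta} \left[ \nabla_\theta \log \pi_\theta(a \mid s) \, Q^{\pi_\theta}(s,a) \right]\]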

- This elegant result allows gradient-based optimization of policies without differentiating through the environment dynamics.

REINFORCE Algorithm

- The REINFORCE algorithm by Williams (1992) is the foundational Monte-Carlo policy-gradient method.

-

It estimates the gradient using complete episode returns:

\[\nabla_\theta J(\theta) \approx \sum_t \nabla_\theta \log \pi_\theta(a_t \mid s_t) \, (G_t - b)\]

- where \(G_t\) is the empirical return and \(b\) is a baseline (often the mean return) that reduces variance without biasing the gradient.

- Despite high variance, REINFORCE provides an unbiased estimator and demonstrates the feasibility of learning stochastic deep policies.

Variance Reduction and Baselines

-

To make policy-gradient learning practical, variance-reduction techniques are crucial:

- State-Value Baselines: Replace raw return \(G_t\) with an estimate of the advantage \(\hat{A}_t = Q_t - V_t\), where \(V_t\) is a learned value baseline.

- Generalized Advantage Estimation (GAE): Introduced by Schulman et al., 2016, GAE computes a bias-variance-controlled estimator of advantage by exponentially weighting multi-step TD errors:

\[\hat{A}_t^{\text{GAE}(\gamma,\lambda)} = \sum_{l=0}^{\infty} (\gamma\lambda)^{l} \, \delta_{t+l}, \qquad \delta_t = r_t + \gamma V(s_{t+1}) - V(s_t)\]

-

This innovation enabled the training stability of modern deep policy-gradient algorithms.
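GAE is usually computed with a single backward pass over a rollout. The sketch below assumes `values` holds one entry per visited state plus a final bootstrap value for the state after the last step; the function name is hypothetical.

```python
def gae_advantages(rewards, values, gamma, lam):
    """Exponentially weighted sum of TD errors, computed from the last step backwards."""
    assert len(values) == len(rewards) + 1
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]  # one-step TD error
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages

rewards = [1.0, 1.0]
values = [0.5, 0.5, 0.0]   # the last entry is the bootstrap value
monte_carlo_like = gae_advantages(rewards, values, gamma=1.0, lam=1.0)
one_step = gae_advantages(rewards, values, gamma=1.0, lam=0.0)
```

Setting `lam=0` keeps only the one-step TD error (low variance, more bias), while `lam=1` sums all remaining TD errors (less bias, more variance).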

Trust Region Policy Optimization (TRPO)

- One challenge in policy-gradient methods is catastrophic policy collapse due to overly large updates.

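- TRPO, proposed by Schulman et al., 2015, constrains the policy step within a trust region of KL radius \(\delta_{\text{KL}}\) to encourage monotonic improvement:

\[\max_\theta \; \mathbb{E}_t \left[ \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)} \hat{A}_t \right] \quad \text{subject to} \quad \mathbb{E}_t \left[ D_{\text{KL}}\big( \pi_{\theta_{\text{old}}}(\cdot \mid s_t) \,\|\, \pi_\theta(\cdot \mid s_t) \big) \right] \le \delta_{\text{KL}}\]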

- This optimization ensures conservative updates, improving stability across large neural-network policies.
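The trust-region test itself is simple to state for discrete actions. The sketch below computes the KL divergence between an old and a candidate action distribution and checks it against a radius `max_kl`; both function names and the threshold value are illustrative assumptions, and the constrained optimization that produces the candidate step is not shown.

```python
import math

def kl_divergence(p_old, p_new):
    """KL(p_old || p_new) for two categorical distributions over the same actions."""
    return sum(po * math.log(po / pn) for po, pn in zip(p_old, p_new) if po > 0.0)

def within_trust_region(p_old, p_new, max_kl):
    return kl_divergence(p_old, p_new) <= max_kl

old_policy = [0.5, 0.5]
small_step = [0.55, 0.45]
large_step = [0.9, 0.1]
accept_small = within_trust_region(old_policy, small_step, max_kl=0.01)
accept_large = within_trust_region(old_policy, large_step, max_kl=0.01)
```

A candidate update that fails this check would be rejected or shrunk, which is how overly aggressive policy changes are avoided.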

Proximal Policy Optimization (PPO)

- PPO, by Schulman et al., 2017, is a policy-gradient algorithm designed to balance learning stability and efficiency. It improves on Trust Region Policy Optimization (TRPO) by enforcing a soft trust region through a clipped surrogate objective, which discourages updates that move the policy too far from its previous version.

-

The clipped objective is defined as:

\[L^{\text{CLIP}}(\theta) = \mathbb{E}_t \left[ \min \Big( r_t(\theta) \hat{A}_t, \text{clip}\big(r_t(\theta), 1-\epsilon, 1+\epsilon\big) \hat{A}_t \Big) \right]\]

- \(r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}\): the probability ratio between new and old policies (not to be confused with a reward).

- \(\hat{A}_t\): the advantage estimate, typically \(G_t - V_\psi(s_t)\) or a GAE estimate, showing how much better the taken action was than expected.

- \(\epsilon\): a small constant (often 0.1–0.3) controlling the trust-region width.

- The \(\min\) operator takes the more pessimistic of the clipped and unclipped terms, so the objective gains nothing from pushing \(r_t(\theta)\) outside \([1-\epsilon, 1+\epsilon]\) in the direction that would increase it. This removes the incentive for overly large steps that can harm performance.

- Intuitively, PPO encourages policy improvement proportional to the advantage while clipping the update if the new policy deviates too far from the old one. This yields a stable and robust optimization process widely used in RLHF for aligning large language models.
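The per-sample clipped term can be evaluated directly, which makes the four sign and ratio cases easy to inspect. The function name is hypothetical and the inputs are plain numbers rather than network outputs.

```python
def ppo_clipped_term(ratio, advantage, epsilon):
    """min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A) for one sample."""
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)

# Good action, ratio already too high: the gain is capped at (1 + eps) * A.
capped_gain = ppo_clipped_term(1.5, 1.0, 0.2)
# Bad action, ratio already low: no further reward for lowering it more.
capped_penalty = ppo_clipped_term(0.5, -1.0, 0.2)
# Bad action made more likely: the full (unclipped) penalty applies.
full_penalty = ppo_clipped_term(1.5, -1.0, 0.2)
```

Only changes that would make the objective look better are clipped; changes that make it worse are always counted in full.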

Entropy Regularization and Exploration

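- To encourage exploration and avoid premature convergence to deterministic policies, entropy regularization augments the objective with an entropy bonus weighted by a coefficient \(\beta > 0\):

\[J_{\text{ent}}(\theta) = J(\theta) + \beta \, \mathbb{E}_{s} \left[ \mathcal{H}\big(\pi_\theta(\cdot \mid s)\big) \right]\]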

- where \(\mathcal{H}(\pi) = -\sum_a \pi(a \mid s)\log\pi(a \mid s)\).

- This technique, used in A3C and made central in Soft Actor–Critic, keeps the policy sufficiently stochastic to explore effectively.
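The entropy term is cheap to compute for a discrete policy. The sketch below evaluates it for a categorical distribution; the function name is hypothetical.

```python
import math

def categorical_entropy(probs):
    """H(pi) = -sum_a pi(a) * log pi(a), with 0 * log 0 treated as 0."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

uniform_entropy = categorical_entropy([0.25, 0.25, 0.25, 0.25])
deterministic_entropy = categorical_entropy([1.0, 0.0, 0.0, 0.0])
```

The bonus is largest for a uniform policy and vanishes for a deterministic one, so adding it to the objective pushes against premature collapse onto a single action.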

Comparative Analysis

| Algorithm | Key Idea | Stability Technique | Reference |

|---|---|---|---|

| REINFORCE | Monte-Carlo policy-gradient | Baseline subtraction | Williams (1992) |

| TRPO | Trust-region constrained updates | KL-divergence constraint | Schulman et al., 2015 |

| PPO | Clipped surrogate objective | Implicit trust-region | Schulman et al., 2017 |

| GAE | Low-variance advantage estimator | λ-weighted TD residuals | Schulman et al., 2016 |

| Entropy Regularization | Exploration through stochasticity | Entropy bonus | A3C / SAC families |

Deep Actor–Critic Methods

-

Actor–Critic methods combine the advantages of value-based and policy-based RL by maintaining two distinct components:

- Actor: A policy network \(\pi_\theta(a \mid s)\) that selects actions.

- Critic: A value or Q-network \(V_w(s)\) or \(Q_w(s,a)\) that estimates expected returns and provides feedback to the actor.

- The actor updates its parameters to maximize the critic’s estimated value, while the critic updates to better predict the returns observed from the actor’s behavior.

- Deep Actor–Critic methods extend this paradigm using deep neural networks for both components, enabling scalability to complex, continuous, or high-dimensional environments.

Theoretical Foundation

-

The policy gradient for an actor–critic setup is given by:

\[\nabla_\theta J(\theta) = \mathbb{E}_{s_t,a_t \sim \pi_\theta} \left[ \nabla_\theta \log \pi_\theta(a_t \mid s_t) \, \hat{A}(s_t,a_t) \right]\]

- where \(\hat{A}(s_t,a_t)\) is the advantage estimate that quantifies how much better action \(a_t\) is compared to the average performance at state \(s_t\).

-

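The critic is trained by minimizing a regression loss, typically the squared Temporal Difference (TD) error, from which the advantage estimate is derived:

\[L(w) = \mathbb{E} \left[ \Big( r_t + \gamma V_w(s_{t+1}) - V_w(s_t) \Big)^2 \right]\]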

- Thus, the actor improves its policy using gradients from the critic’s evaluation, creating a feedback loop that balances bias (from bootstrapping) and variance (from sampling).

Asynchronous Advantage Actor–Critic (A3C)

-

The A3C algorithm, introduced by Mnih et al. (2016), demonstrated that multiple agents (workers) can interact with independent environment instances in parallel, asynchronously updating a shared global model.

-

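Each worker computes gradients for both the actor and the critic from short rollouts of up to \(k\) steps, using an advantage-based update:

\[\nabla_\theta \log \pi_\theta(a_t \mid s_t) \, \hat{A}(s_t,a_t), \qquad \hat{A}(s_t,a_t) = \sum_{i=0}^{k-1} \gamma^i r_{t+i} + \gamma^k V_w(s_{t+k}) - V_w(s_t)\]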

- This asynchronous setup increases data throughput and decorrelates experience, enabling training without replay buffers.

- At the time of publication, A3C achieved state-of-the-art results on Atari and was also shown to work on continuous control benchmarks.

Advantage Actor–Critic (A2C)

- The A2C algorithm is a synchronous variant of A3C that aggregates gradients from multiple parallel environments before performing a single update.

-

Although workers must wait for one another at each update, A2C avoids the stale gradients of asynchronous training, which tends to improve stability. It is widely used in implementations such as OpenAI Baselines.

- The advantage function is often estimated using Generalized Advantage Estimation (GAE) (Schulman et al., 2016), which balances bias and variance for stable learning.
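- As a minimal sketch of the GAE recursion mentioned above, the following computes advantages for one rollout by accumulating discounted TD residuals backwards in time. The function name, argument layout, and default values of `gamma` and `lam` are assumptions made for illustration.

```python
def gae_advantages(rewards, values, last_value, dones, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation over a single rollout.

    rewards[t], values[t], dones[t] describe step t; last_value is the
    critic's estimate for the state after the final step.
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    next_value = last_value
    for t in reversed(range(len(rewards))):
        nonterminal = 0.0 if dones[t] else 1.0
        # One-step TD residual delta_t.
        delta = rewards[t] + gamma * next_value * nonterminal - values[t]
        # A_t = delta_t + gamma * lam * A_{t+1}, reset at episode ends.
        running = delta + gamma * lam * nonterminal * running
        advantages[t] = running
        next_value = values[t]
    return advantages
```

- Setting `lam=0` recovers the one-step TD error (low variance, more bias), while `lam=1` recovers the Monte Carlo return minus the baseline (less bias, higher variance).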

Deep Deterministic Policy Gradient (DDPG)

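- In continuous control tasks (e.g., robotic movement), the action space is continuous, so the \(\max_a Q(s,a)\) step used by discrete-action methods such as DQN cannot be computed by simple enumeration.

- DDPG, introduced by Lillicrap et al. (2015), extends the actor–critic framework to deterministic policies:

\[a = \mu_\theta(s)\]

- The actor is updated using the gradient of the critic’s Q-value:

\[\nabla_\theta J(\theta) \approx \mathbb{E}_{s \sim \mathcal{D}} \left[ \nabla_a Q_w(s,a) \big\rvert_{a=\mu_\theta(s)} \, \nabla_\theta \mu_\theta(s) \right]\]

- where \(\mathcal{D}\) denotes the replay buffer.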

-

DDPG employs:

- A replay buffer for decorrelated training data,

- Target networks for stable updates, and

- Ornstein–Uhlenbeck noise for exploration in continuous spaces.

-

This made DDPG a foundational algorithm for robotic and control applications.
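- Two of the ingredients listed above are easy to sketch in isolation: the slowly tracking target network and Ornstein–Uhlenbeck exploration noise. The code below treats parameters as flat lists of floats; the names `soft_update` and `OUNoise` and the default constants are illustrative assumptions.

```python
import math
import random

def soft_update(target_params, online_params, tau=0.005):
    """Move target parameters a small step toward the online parameters."""
    return [(1.0 - tau) * t + tau * o
            for t, o in zip(target_params, online_params)]

class OUNoise:
    """Discretized Ornstein-Uhlenbeck process: temporally correlated noise."""

    def __init__(self, size, mu=0.0, theta=0.15, sigma=0.2, dt=1.0, seed=None):
        self.mu, self.theta, self.sigma, self.dt = mu, theta, sigma, dt
        self.rng = random.Random(seed)
        self.state = [mu] * size

    def sample(self):
        # x <- x + theta * (mu - x) * dt + sigma * sqrt(dt) * N(0, 1)
        self.state = [
            x + self.theta * (self.mu - x) * self.dt
            + self.sigma * math.sqrt(self.dt) * self.rng.gauss(0.0, 1.0)
            for x in self.state
        ]
        return list(self.state)
```

- During data collection the sampled noise would be added to the actor’s output \(\mu_\theta(s)\). Because each sample depends on the previous one, consecutive actions are perturbed in a similar direction, unlike independent Gaussian noise.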

Twin Delayed DDPG (TD3)

- While DDPG is powerful, it suffers from overestimation bias similar to Q-learning.

- TD3, by Fujimoto et al. (2018), mitigates this through three improvements:

- Clipped Double Q-Learning: Two critics are trained, and the smaller Q-value is used for the target.

- Target Policy Smoothing: Adds noise to target actions for robustness.

- Delayed Policy Updates: Updates the actor less frequently than the critic for stability.

-

Target computation in TD3 becomes:

\[y = r + \gamma \min_{i=1,2} Q_{w_i'}(s', \mu_{\theta'}(s') + \epsilon)\]

- where \(\epsilon \sim \text{clip}(\mathcal{N}(0, \sigma), -c, c)\).
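- The TD3 target can be sketched for a scalar action as follows. The target actor and the two target critics are passed in as plain callables; the function name, the `done` handling, and the extra clipping of the action to `act_limit` are assumptions added for illustration.

```python
import random

def clip(x, lo, hi):
    return max(lo, min(hi, x))

def td3_target(r, s_next, done, target_actor, target_q1, target_q2,
               gamma=0.99, sigma=0.2, noise_clip=0.5, act_limit=1.0,
               rng=random):
    """Clipped double-Q target with target policy smoothing (scalar action)."""
    # Target policy smoothing: clipped Gaussian noise on the target action.
    eps = clip(rng.gauss(0.0, sigma), -noise_clip, noise_clip)
    a_next = clip(target_actor(s_next) + eps, -act_limit, act_limit)
    # Clipped double Q-learning: use the smaller of the two critic estimates.
    q_min = min(target_q1(s_next, a_next), target_q2(s_next, a_next))
    # No bootstrapping past a terminal state.
    return r + (0.0 if done else gamma * q_min)
```

- Both critics regress toward the same target `y`. The third improvement, delayed policy updates, lives in the training loop rather than in the target: the actor and the target networks would be updated only once every few critic updates.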

Soft Actor–Critic (SAC)

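- The Soft Actor–Critic (SAC) algorithm, proposed by Haarnoja et al. (2018), extends actor–critic learning to the maximum-entropy RL framework, optimizing not only expected returns but also policy entropy:

\[J(\pi) = \sum_t \mathbb{E}_{(s_t,a_t) \sim \pi} \left[ r(s_t,a_t) + \alpha \, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right]\]

- where the temperature \(\alpha\) sets the trade-off between reward and entropy.

- This encourages exploration: the policy is rewarded for staying as random as it can while still achieving high return.

- SAC combines off-policy replay buffers with entropy regularization and is among the most sample-efficient model-free algorithms for continuous control.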
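- To make the maximum-entropy objective concrete, the sketch below evaluates an entropy-augmented discounted return for one trajectory. It uses a discrete action distribution for simplicity, whereas SAC itself is usually applied to continuous actions; the function names and the value of `alpha` are illustrative assumptions.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def soft_return(rewards, action_probs, alpha=0.2, gamma=0.99):
    """Discounted sum of r_t + alpha * H(pi(. | s_t)) along one trajectory.

    action_probs[t] is the policy's action distribution at step t.
    """
    total = 0.0
    for t, (r, probs) in enumerate(zip(rewards, action_probs)):
        total += gamma ** t * (r + alpha * entropy(probs))
    return total
```

- With `alpha=0` this reduces to the ordinary discounted return. Larger values of `alpha` make a uniform policy relatively more attractive, which is the mechanism that keeps the policy exploratory.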

Comparative Analysis

| Algorithm | Policy Type | Exploration | Stability Mechanism | Key Reference |
|---|---|---|---|---|
| A3C | Stochastic | Parallel workers | Asynchronous updates | Mnih et al., 2016 |
| A2C | Stochastic | Parallel rollout | Synchronous gradient averaging | Mnih et al., 2016 (synchronous variant) |
| DDPG | Deterministic | OU noise | Target networks, replay buffer | Lillicrap et al., 2015 |
| TD3 | Deterministic | Policy smoothing | Double critics, delayed updates | Fujimoto et al., 2018 |
| SAC | Stochastic | Maximum entropy | Entropy regularization | Haarnoja et al., 2018 |

- Deep Actor–Critic methods form the backbone of modern Deep RL systems, bridging discrete and continuous domains while balancing stability, efficiency, and exploration.

- They underpin much of the progress in robotics, game-playing, and large-scale simulation-based learning.

Deep Model-Based Methods

- Deep Model-Based Reinforcement Learning (MBRL) integrates the predictive structure of classical model-based RL with the representational power of deep neural networks.

-

Rather than learning purely through trial and error, the agent first learns an internal world model—a neural approximation of the environment’s dynamics and rewards—and then plans or trains policies within this learned model.

- This approach promises greater sample efficiency, safety, and generalization, since much of the learning occurs through simulated rollouts rather than direct environment interaction.

The Model-Based RL Framework

-

An MBRL system typically learns three components:

\[\hat{P}_\phi(s' \mid s,a), \quad \hat{R}_\phi(s,a), \quad \pi_\theta(a \mid s)\]

- where \(\hat{P}_\phi\) is a learned transition model, \(\hat{R}_\phi\) is a reward predictor, and \(\pi_\theta\) is the policy.

-

The model can be explicit (predicting next states) or latent (predicting compact internal representations).

-

Training alternates between:

- Collecting real experience using the current policy,

- Updating the learned model \((\hat{P}_\phi, \hat{R}_\phi)\), and

- Improving \(\pi_\theta\) via rollouts simulated inside the model.

-

This inner simulation loop enables learning with fewer real interactions—a major advantage over model-free Deep RL.
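- The three alternating steps can be sketched on a toy one-dimensional problem. Everything here is an assumption chosen for brevity: the linear ground-truth dynamics, the least-squares model, the linear policy \(a = -k s\), and a known reward function (so no reward predictor \(\hat{R}_\phi\) is learned).

```python
import random

def true_step(s, a):
    """Ground-truth toy dynamics and reward (unknown to the agent)."""
    s_next = 0.9 * s + 0.5 * a
    return s_next, -s_next ** 2

def fit_linear_model(data):
    """Least-squares fit of s' ~ w_s * s + w_a * a from (s, a, s') tuples."""
    sss = sum(s * s for s, a, n in data)
    ssa = sum(s * a for s, a, n in data)
    saa = sum(a * a for s, a, n in data)
    bs = sum(s * n for s, a, n in data)
    ba = sum(a * n for s, a, n in data)
    det = sss * saa - ssa * ssa
    return (bs * saa - ba * ssa) / det, (ba * sss - bs * ssa) / det

def model_return(model, gain, s0, horizon=10):
    """Return of the policy a = -gain * s, simulated inside the learned model."""
    w_s, w_a = model
    s, total = s0, 0.0
    for _ in range(horizon):
        s = w_s * s + w_a * (-gain * s)
        total += -s ** 2  # reward function assumed known in this sketch
    return total

def mbrl_loop(iterations=3, seed=0):
    rng = random.Random(seed)
    data, gain, model = [], 0.0, None
    for _ in range(iterations):
        # 1. Collect real experience with the current policy plus noise.
        s = rng.uniform(-1.0, 1.0)
        for _step in range(20):
            a = -gain * s + rng.gauss(0.0, 0.5)
            s_next, _reward = true_step(s, a)
            data.append((s, a, s_next))
            s = s_next
        # 2. Update the learned dynamics model.
        model = fit_linear_model(data)
        # 3. Improve the policy using simulated rollouts only.
        candidates = [g / 10.0 for g in range(31)]
        gain = max(candidates, key=lambda g: model_return(model, g, s0=1.0))
    return model, gain
```

- Only step 1 touches the real environment. The policy search in step 3 evaluates 31 candidate gains without a single additional real transition, which is the source of the sample-efficiency advantage described above.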

World Models

- World Models, introduced by Ha & Schmidhuber (2018), pioneered neural latent-world modeling for RL.

-

Their framework decomposed the agent into:

- VAE: encodes high-dimensional observations into a latent space,

- MDN-RNN: predicts latent transitions over time, and

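- Controller: a small policy that acts on the latent features and can be trained inside the learned latent world.

- This demonstrated that an agent could learn a compact generative model of the environment and, in some tasks, learn a competitive controller from simulated experience alone before being transferred back to the real environment.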
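- The way the three components interact at each time step can be sketched with plain callables standing in for the trained networks. The function name `agent_step` and the toy stand-ins in the usage note are hypothetical; only the order of operations is the point.

```python
def agent_step(encode, controller, rnn_step, observation, hidden):
    """One decision step of a VAE + recurrent model + controller agent.

    encode:     observation -> latent z            (the VAE encoder)
    controller: (z, hidden) -> action              (the small policy)
    rnn_step:   (z, action, hidden) -> new hidden  (the recurrent model)
    """
    z = encode(observation)
    action = controller(z, hidden)
    next_hidden = rnn_step(z, action, hidden)
    return action, next_hidden
```

- For example, with the toy stand-ins `encode = lambda obs: sum(obs) / len(obs)`, `controller = lambda z, h: 1 if z + h > 0 else -1`, and `rnn_step = lambda z, a, h: 0.5 * h + z`, repeatedly calling `agent_step` threads the hidden state through time while the controller sees only the compact latent features.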

Model-Based Policy Optimization (MBPO)

- MBPO, by Janner et al. (2019), refined model-based learning by coupling short model rollouts with off-policy policy optimization.

-

Instead of long, error-prone simulated trajectories, MBPO performs brief rollouts from real states sampled from the replay buffer.

-

Formally, it optimizes:

\[J(\theta) = \mathbb{E}_{(s,a,r,s')\sim\mathcal{D}_{\text{real}} \cup \mathcal{D}_{\text{model}}} \left[ r + \gamma V_{\pi_\theta}(s') \right]\]

- where \(\mathcal{D}_{\text{model}}\) contains transitions generated by the learned dynamics \(\hat{P}_{\phi}\).

- This hybrid dataset balances realism and data efficiency. At the time of publication, it gave MBPO state-of-the-art sample efficiency among model-based algorithms.
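- The branching idea, short model rollouts that start from real replay-buffer states, can be sketched as below. The policy and the learned model are passed in as callables; the function name and the default horizon are illustrative assumptions rather than MBPO’s actual settings.

```python
import random

def branched_rollouts(real_states, policy, model, horizon=2, n_starts=4,
                      rng=random):
    """Generate short model rollouts branching from real buffer states.

    policy: state -> action
    model:  (state, action) -> (next_state, reward), the learned dynamics
    Returns a list of synthetic (s, a, r, s_next) transitions.
    """
    synthetic = []
    for s in rng.sample(real_states, n_starts):
        for _ in range(horizon):
            a = policy(s)
            s_next, r = model(s, a)
            synthetic.append((s, a, r, s_next))
            s = s_next
    return synthetic
```

- The synthetic transitions would then be mixed with real ones to form the training batches of an off-policy learner. Keeping `horizon` small limits how far model errors can compound before the rollout is cut off.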

Dreamer and Latent Dynamics Models

- The Dreamer family of algorithms, beginning with Hafner et al. (2019), introduced latent imagination-based planning.

-

Dreamer learns a Recurrent State-Space Model (RSSM) to represent dynamics in a compact latent space. The actor and critic are then updated entirely on “dreamed” latent trajectories, so real environment interaction is needed only to collect data for the world model.

- Subsequent versions improved scalability: DreamerV2 reached human-level performance on the Atari benchmark, and DreamerV3 handled diverse domains, including Atari and DMControl, with a single fixed set of hyperparameters.

MuZero

- MuZero, introduced by Schrittwieser et al. (2020), combined deep model-based learning with Monte Carlo Tree Search (MCTS) without being given the environment’s rules and without reconstructing observations.

-

Instead of predicting next observations, MuZero learns latent dynamics sufficient for accurate planning in the representation space.

-

Its core components are:

- A representation network \(h_\theta\) mapping observations to latent states,

- A dynamics network \(g_\theta\) predicting next latent states and rewards, and

- A prediction network \(f_\theta\) estimating policy and value from latent states.

-

These networks are trained jointly to minimize:

\[L = \sum_t \left( l^r_t + l^v_t + l^p_t \right) + c \lVert \theta \rVert^2\]

- where \(l^r_t, l^v_t, l^p_t\) denote reward, value, and policy losses, respectively, and \(c \lVert \theta \rVert^2\) is an L2 regularization term.

- MuZero achieved state-of-the-art results on Atari, Go, chess, and shogi—matching or surpassing AlphaZero’s performance without direct access to the environment’s rules.
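- How the three networks fit together can be sketched by unrolling them along a sequence of actions, entirely in latent space. The networks are passed in as plain callables, and the function name `unroll` and its return format are assumptions for illustration; the tree search and the loss computation are omitted.

```python
def unroll(h, g, f, observation, actions):
    """Unroll representation/dynamics/prediction functions in latent space.

    h: observation -> latent state
    g: (latent state, action) -> (next latent state, predicted reward)
    f: latent state -> (policy, value)
    Returns one (reward, policy, value) tuple per step; the root has no reward.
    """
    state = h(observation)
    policy, value = f(state)
    outputs = [(None, policy, value)]
    for a in actions:
        state, reward = g(state, a)
        policy, value = f(state)
        outputs.append((reward, policy, value))
    return outputs
```

- During training, each tuple would be compared with targets derived from real trajectories and search results, giving the per-step reward, value, and policy losses. Note that no observation is ever predicted: after the initial call to `h`, everything happens in the latent space.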

Advantages and Challenges

-

Advantages:

- High sample efficiency by leveraging learned models for synthetic experience.

- Enhanced planning ability and interpretability via internal simulation.

- Feasibility in real-world robotics and resource-constrained settings.

-

Challenges:

- Model bias—compounding errors in long rollouts can degrade policy quality.

- Training instability due to non-stationary data and shared model–policy optimization.

- High computational cost for large-scale latent dynamics models.

Comparative Analysis

| Algorithm | Key Idea | Learning Paradigm | Reference |
|---|---|---|---|
| World Models | Latent-space world modeling | Unsupervised generative world model | Ha & Schmidhuber (2018) |
| MBPO | Short-horizon model rollouts + off-policy learning | Hybrid real + simulated data | Janner et al. (2019) |
| Dreamer | Latent imagination-based planning | Recurrent state-space model | Hafner et al. (2019) |
| MuZero | Latent dynamics for tree-search planning | Model-based with learned (implicit) rules | Schrittwieser et al. (2020) |

- Deep model-based methods close the loop between perception, prediction, and planning, combining the analytical rigor of model-based control with the generalization power of deep networks.

- They represent a key direction toward more data-efficient, interpretable, and human-like decision-making systems.

Hybrid and Meta Deep Reinforcement Learning Methods

- While the earlier categories of Deep Reinforcement Learning (Deep RL) isolate specific mechanisms—value prediction, policy optimization, or world modeling—many recent advances emerge from hybrid approaches that combine these paradigms.

- In parallel, meta-learning frameworks extend deep RL to settings where agents must adapt quickly to new environments or tasks by leveraging prior experience.

Hybrid Reinforcement Learning

-

Hybrid RL methods aim to exploit the complementary strengths of different learning paradigms:

- Value-based components provide stable, sample-efficient bootstrapping.

- Policy-based components enable smooth updates and stochastic exploration.

- Model-based components offer foresight through predictive dynamics.

-

Together, these elements create multi-objective, multi-stream, and off-policy architectures capable of scaling to massive environments.

IMPALA (Importance Weighted Actor-Learner Architectures)

- IMPALA, introduced by Espeholt et al. (2018), scales actor–critic learning to distributed settings.

-

It separates actors, which generate trajectories in parallel environments, from a central learner, which updates shared parameters using an off-policy correction method called V-trace.

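- The V-trace targets correct the discrepancy between behavior policy \(\mu\) and target policy \(\pi\):

\[v_s = V(x_s) + \sum_{t=s}^{s+n-1} \gamma^{t-s} \left( \prod_{i=s}^{t-1} c_i \right) \delta_t V, \qquad \delta_t V = \rho_t \left( r_t + \gamma V(x_{t+1}) - V(x_t) \right)\]

- where \(\rho_t = \min\left(\bar{\rho}, \frac{\pi(a_t \mid x_t)}{\mu(a_t \mid x_t)}\right)\) and \(c_i = \min\left(\bar{c}, \frac{\pi(a_i \mid x_i)}{\mu(a_i \mid x_i)}\right)\) are truncated importance weights.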

- IMPALA scaled training to thousands of parallel environments, with near-linear gains as actors were added while learning remained stable.
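- V-trace targets for one trajectory can be computed with a backward recursion, sketched below. It uses truncated importance ratios \(\min(\bar{\rho}, \pi/\mu)\) and \(\min(\bar{c}, \pi/\mu)\); the function name and argument layout are assumptions, and episode termination inside the trajectory is not handled.

```python
def vtrace_targets(rewards, values, bootstrap_value, ratios,
                   gamma=0.99, rho_bar=1.0, c_bar=1.0):
    """V-trace value targets for a single trajectory without terminations.

    ratios[t] is pi(a_t | x_t) / mu(a_t | x_t); values[t] is V(x_t);
    bootstrap_value is V of the state after the last step.
    """
    n = len(rewards)
    targets = [0.0] * n
    acc = 0.0                      # holds v_{t+1} - V(x_{t+1})
    next_value = bootstrap_value
    for t in reversed(range(n)):
        rho = min(rho_bar, ratios[t])   # truncated weight on the TD error
        c = min(c_bar, ratios[t])       # truncated "trace" coefficient
        delta = rho * (rewards[t] + gamma * next_value - values[t])
        acc = delta + gamma * c * acc
        targets[t] = values[t] + acc
        next_value = values[t]
    return targets
```

- When the behavior and target policies coincide (all ratios equal to 1), the targets reduce to ordinary n-step returns. When an action is very unlikely under the target policy (ratio near 0), the correction vanishes and the target falls back to the current value estimate.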

R2D2 (Recurrent Replay Distributed DQN)

- R2D2, proposed by Kapturowski et al. (2019), extended DQN to recurrent networks for partially observable environments.

-

It combines:

- A distributed architecture similar to IMPALA,

- Experience replay for off-policy learning, and

- Recurrent state-tracking through LSTM layers.

- This combination of value learning, sequence modeling, and distributed execution yields strong performance in tasks requiring memory, such as DeepMind Lab and Atari with partial observability.

Model-Based Control

-

Other hybrid frameworks explicitly merge model-based planning with policy learning, e.g.:

- PlaNet by Hafner et al. (2019): learns a latent dynamics model for planning continuous-control actions.