Primers • Reasoning in LLMs

- Overview

- Invoking reasoning in LLMs

- Prompting-Based Reasoning

- Decoding and Aggregation-Based Reasoning

- Search-Based Reasoning

- Tool-Augmented Reasoning

- Reinforcement Learning-Based Reasoning

- WebGPT

- DeepSeek-R1

- Reinforcement Learning for Tool-Integrated Reasoning

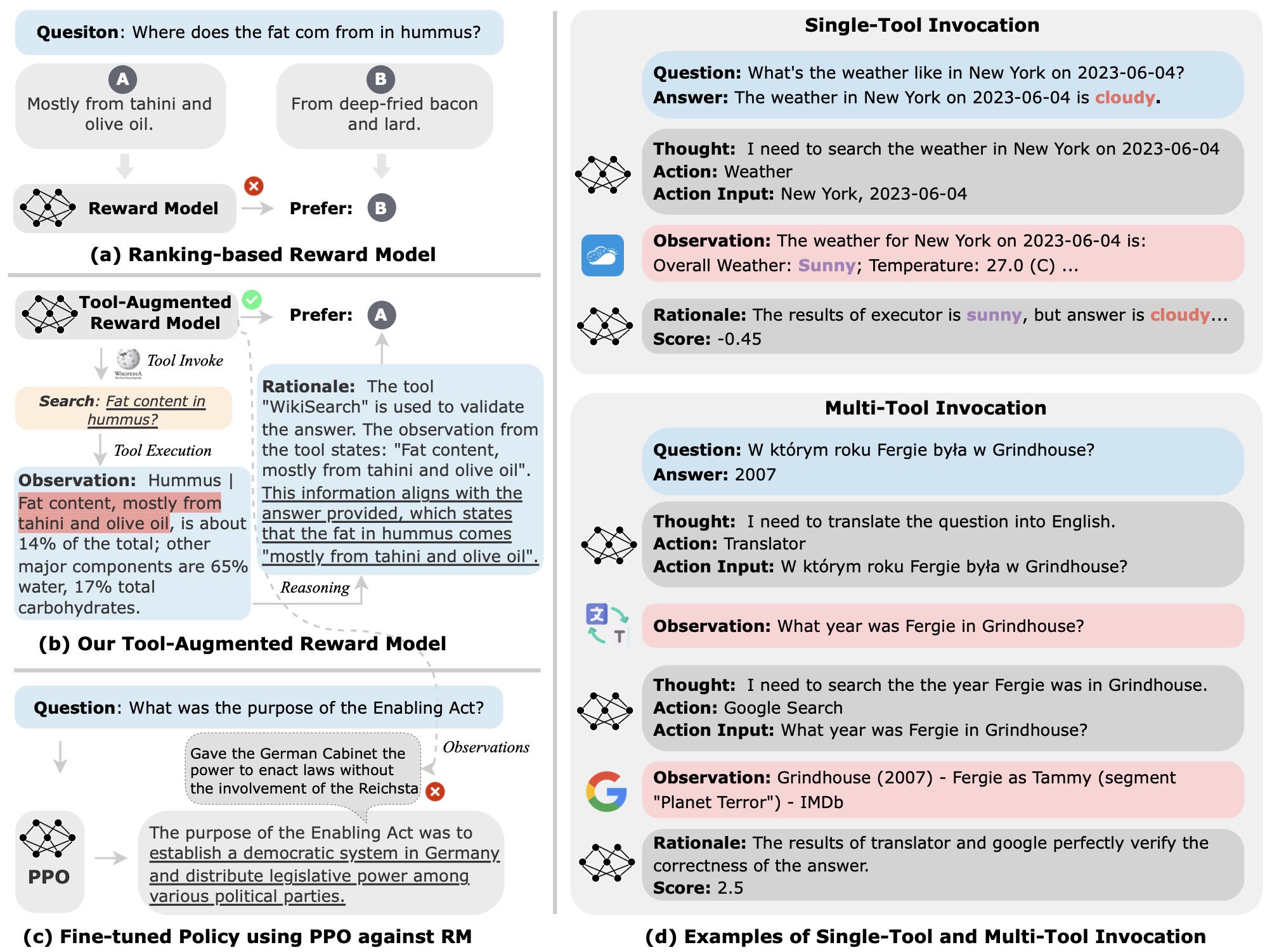

- Tool-Augmented Reward Modeling (Themis)

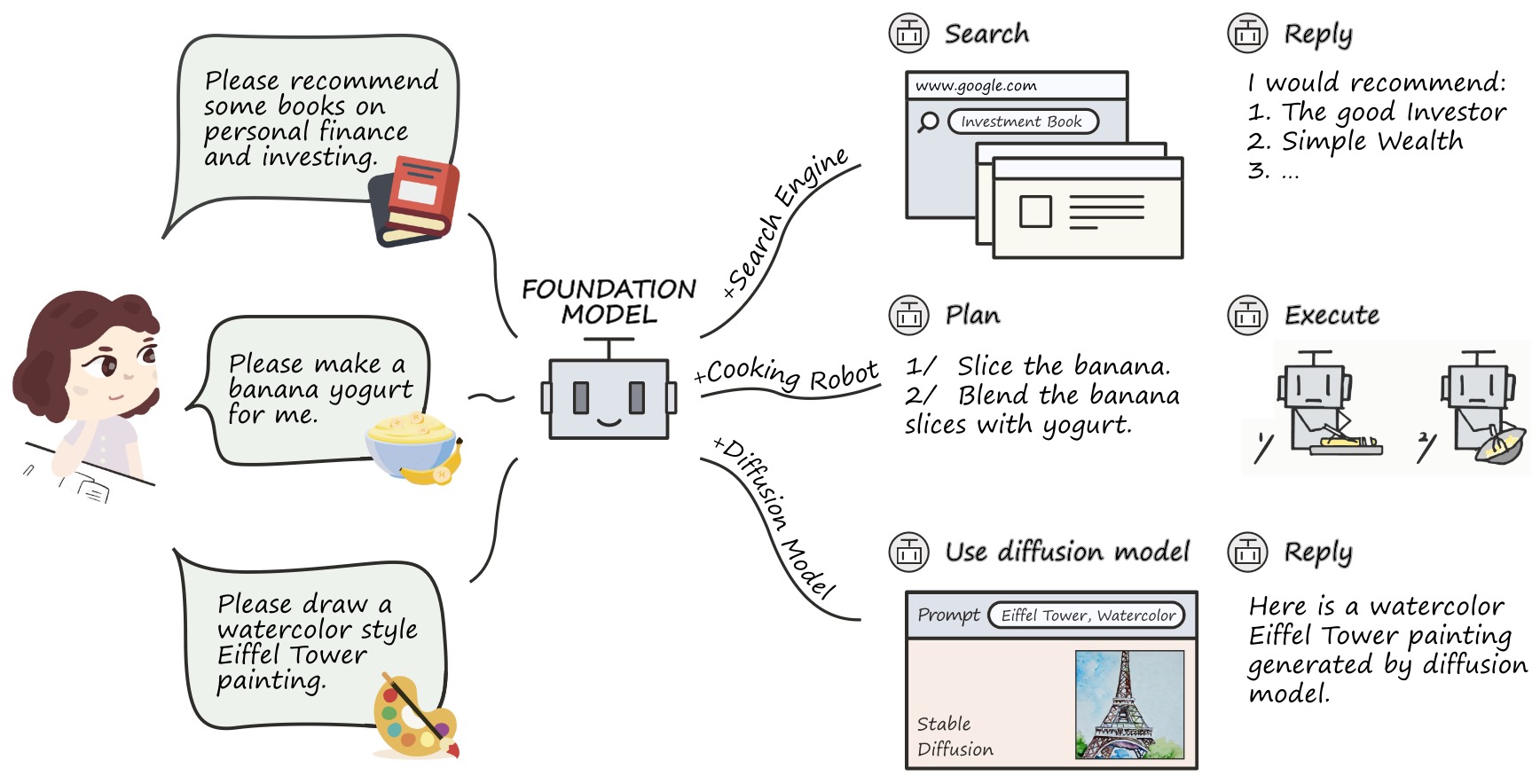

- Tool Learning with Foundation Models

- The “Aha” Moment and Emergent Reasoning

- Evaluation of reasoning using datasets

- GSM8K (grade-school math reasoning)

- MATH (competition-level mathematical reasoning)

- AIME and IMO: Mathematical Olympiad–Level Reasoning

- ARC and Science QA Benchmarks (ARC-AGI-1 and ARC-AGI-2)

- OpenThoughts3: Large-Scale Open Reasoning Dataset

- DROP and Numerical Reading-Comprehension Reasoning

- BIG-bench and BIG-bench Hard

- MMLU and AGIEval (Knowledge + Reasoning Exam Benchmarks)

- HELM and Holistic Multi-Metric Reasoning Evaluation

- Multimodal reasoning and factuality

- Summary of reasoning evaluation datasets and their interrelations

- Open challenges and future directions

- Bringing it together—end-to-end blueprints for reasoning systems (small, medium, large budgets)

- Failure analysis—diagnosing and fixing reasoning errors

- Further Reading

- References

- Citation

Overview

-

Large Language Models (LLMs) are sequence models that learn a conditional distribution over tokens. Given a context \(x_{<t}\), an LLM parameterized by \(\theta\) predicts the next token via:

\[p_\theta(x_t \mid x_{<t})=\mathrm{softmax}\left(f_\theta(x_{<t})\right)\] -

Reasoning, in this primer, is the process by which an LLM instantiates and manipulates intermediate structures to transform inputs into solutions that satisfy constraints beyond surface-level pattern completion. A useful abstraction is to introduce latent “thoughts” \(z\) and write:

\[p_\theta(y\mid x)=\sum_{z} p_\theta(y\mid x,z) p_\theta(z\mid x)\]- where \(z\) ranges over intermediate steps such as plans, subgoals, tool calls, or formal derivations. Externalizing \(z\) in the output (for example, step-by-step rationales) is one way we can probe, debug, and improve this process.

-

From an architectural standpoint, modern LLMs implement this computation with transformers introduced in Attention Is All You Need by Vaswani et al. (2017). Pretraining objectives and representations popularized by BERT by Devlin et al. (2018) helped establish bidirectional text understanding, while today’s generative LLMs typically use decoder-only transformers. Scaling trends such as power-law loss curves in Scaling Laws for Neural Language Models by Kaplan et al. (2020) and compute-optimal training in Training Compute-Optimal Large Language Models (“Chinchilla”) by Hoffmann et al. (2022) explain why larger, better-trained models often exhibit stronger reasoning—although how “reasoning” emerges remains an active debate, with claims of sharp emergent abilities in Emergent Abilities of Large Language Models by Wei et al. (2022) and counter-arguments that such “emergence” can be a metric artifact in Are Emergent Abilities of Large Language Models a Mirage? by Schaeffer et al. (2023).

-

A practical working definition that guides the rest of this primer is that reasoning in LLMs can be understood as learned, compositional computation over latent steps \(z\) that yields verifiable conclusions.

-

This definition is agnostic to whether steps are printed as Chain-of-Thought (CoT), searched over as a tree, executed as code, or kept internal.

What counts as “reasoning” for LLMs?

-

Deductive reasoning: Deriving logically necessary conclusions from premises, e.g., symbolic algebra, formal proofs, or rule application.

-

Inductive reasoning: Generalizing patterns from examples, e.g., few-shot extrapolation, schema induction, or pattern completion that yields testable hypotheses.

-

Abductive reasoning: Inferring the most plausible explanation for observations (hypothesis selection under uncertainty), common in diagnosis and root-cause analysis.

-

Procedural reasoning: Planning and multi-step control in which the model decomposes tasks, executes actions (possibly via tools), and revises plans.

-

These categories are not mutually exclusive; many benchmarks interleave them.

Interfaces that elicit reasoning

-

Researchers have discovered prompting and decoding interfaces that expose or amplify the latent steps \(z\).

-

Chain-of-thought (CoT): Providing or eliciting step-by-step rationales improves multi-step problem solving, as shown in Chain-of-Thought Prompting Elicits Reasoning in Large Language Models by Wei et al. (2022) and Zero-shot CoT (“Let’s think step by step”) in Large Language Models are Zero-Shot Reasoners by Kojima et al. (2022). Self-Consistency Improves CoT Reasoning in Language Models by Wang et al. (2022) samples multiple reasoning paths and marginalizes to a consensus.

-

Search over thoughts: Tree of Thoughts: Deliberate Problem Solving with Large Language Models by Yao et al. (2023) treats intermediate steps as nodes and performs lookahead/backtracking, bridging LLM inference with classic heuristic search.

-

Reasoning and acting: ReAct: Synergizing Reasoning and Acting in Language Models by Yao et al. (2022) interleaves reasoning traces with tool or environment actions, enabling information gathering and plan revision.

The role of scaling and the “aha” phenomenon

-

Scaling laws predict smoother loss improvements with model/data/compute, yet qualitative “jumps” in task performance are often reported. Two perspectives coexist:

-

Emergence view: Some abilities appear only beyond certain scales or training regimes, as argued by Wei et al. (2022).

-

Measurement view: Apparent discontinuities arise from metric non-linearities or data scarcity, per Schaeffer et al. (2023).

-

-

In either view, improved interfaces and training often turn tacit competence into explicit problem solving. For instance, RL specialized to reward intermediate solution quality, as in DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning by Guo et al. (2025), reports substantial gains on math and logic without supervised rationales, highlighting how credit assignment can shape useful latent computations.

A minimal mathematical lens

-

Training typically minimizes the expected negative log-likelihood (cross-entropy) \(\mathcal{L}(\theta)=\mathbb{E}_{x\sim \mathcal{D}}\left[-\sum_{t}\log p_\theta(x_t\mid x_{<t})\right]\).

-

Reasoning-oriented inference augments this with structure over \(z\):

-

CoT-style sampling: Sample \(z^{(k)}\sim p_\theta(z\mid x)\) and select \(\hat{y}\) by majority or confidence weighting \(\hat{y}=\arg\max_y \sum_{k} w \left(z^{(k)}\right), p_\theta(y\mid x,z^{(k)})\).

-

Search over thoughts: Define a scoring function \(s(z_{1:t})\) and expand nodes to maximize expected downstream reward, akin to beam/A* variants over textual states.

-

Reinforcement Learning for reasoning: Optimize \(\theta\) against a task reward \(R(y,z)\), shaping \(p_\theta(z\mid x)\) toward productive step structures rather than purely imitating text.

-

Invoking reasoning in LLMs

-

Reasoning in LLMs is not an automatic or always-on capability—it is typically invoked through specific interfaces or training strategies that elicit structured intermediate computation. In other words, while an LLM can always generate text, certain modes of interaction encourage it to perform reasoning-like processes internally or externally.

-

Invoking reasoning can be viewed as shaping the latent variable \(z\) in the generative formulation \(p_\theta(y \mid x)=\sum_z p_\theta(y \mid x,z)p_\theta(z \mid x)\), so that the model generates more useful or verifiable intermediate structures (the “thoughts” \(z\)) instead of directly producing the final answer \(y\).

-

At a top level, there are several paradigms (and methodologies per paradigm) to invoke reasoning:

-

Prompting-based: Purely contextual reasoning induction through examples and instructions within the prompt, without modifying model parameters or architecture.

- Chain-of-Thought (CoT) prompting: Encourages explicit step-by-step reasoning traces, guiding models to decompose complex problems into interpretable intermediate steps.

- Implicit reasoning via in-context composition: Induces structured reasoning by presenting compositional examples that demonstrate multi-step problem-solving directly within the input context.

-

Decoding and aggregation-based \(\rightarrow\) Ensemble reasoning through sampling and consensus.

- Decoding and aggregation-based reasoning: Samples diverse reasoning paths via stochastic decoding and aggregates results through voting, confidence scoring, or verifier-based consensus.

- Reflection and self-verification loops: Iteratively critiques, revises, and improves its own reasoning outputs using self-feedback, enhancing correctness and logical consistency.

-

Search-based \(\rightarrow\) Explicit reasoning exploration guided by evaluation.

- Tree-of-Thoughts (ToT) prompting/search: Expands reasoning as a branching search tree of partial thoughts, evaluating and pruning paths to find coherent solutions.

- Monte Carlo Tree Search (MCTS)-based reasoning: Conducts stochastic rollouts and value backpropagation to balance exploration and exploitation, refining reasoning through simulated decision trajectories.

-

Tool-augmented \(\rightarrow\) Hybrid symbolic–neural reasoning.

- ReAct frameworks: Integrates reasoning with environment actions, enabling models to think, act, and observe dynamically during problem-solving.

- Toolformer-based reasoning: Enables models to autonomously decide when and how to call external APIs or tools during inference, integrating symbolic computation, retrieval, or execution for improved factuality and reasoning precision.

-

RL-based \(\rightarrow\) Learning to reason through reward optimization.

- Reinforcement learning for reasoning (e.g., DeepSeek-R1): Optimizes reasoning strategies using reward feedback to align reasoning depth, accuracy, and efficiency across diverse tasks.

-

-

Each of these methods aims to transform a generic text generator into a compositional problem-solver, either through prompting, decoding, or training modification.

Methodologies for Invoking Reasoning in LLMs

- There are several overarching paradigms by which reasoning can be invoked in LLMs. Each family emphasizes a different mechanism—whether through prompting, decoding, exploration, tool use, or learning signals. Below, the principal methodologies are organized into five broad families.

Prompting-Based Reasoning

-

Prompting-based approaches induce reasoning by structuring the input context to make intermediate thinking explicit or implicitly compositional. These methods rely purely on contextual cues rather than architectural or training modifications. Examples below:

-

Chain-of-Thought (CoT) Prompting: Introduced by Wei et al. (2022), CoT explicitly elicits step-by-step reasoning traces, guiding the model to externalize intermediate computations before giving the final answer. Formally, the model predicts

\[\hat{y} = \arg\max_y \sum_z p_\theta(y, z | x)\]- where \(z\) denotes latent reasoning traces approximated through explicit textual reasoning.

-

Zero-Shot and Few-Shot CoT: As shown by Kojima et al. (2022), adding simple triggers like “Let’s think step by step” can induce reasoning behavior even without demonstrations, revealing latent reasoning priors in large models.

-

Implicit Reasoning via In-Context Composition: From Brown et al. (2020), LLMs can implicitly perform reasoning by inferring structured input–output mappings from few-shot examples. This process is latent, with reasoning occurring in attention dynamics rather than explicit text.

- Implicit composition thus shows that LLMs can internalize algorithmic reasoning even without producing verbalized steps.

-

Decoding and Aggregation-Based Reasoning

-

Decoding strategies strengthen reasoning robustness by sampling and aggregating multiple reasoning paths rather than trusting a single deterministic chain. They treat reasoning as probabilistic inference over latent cognitive trajectories. Examples below:

-

Self-Consistency Decoding: Wang et al. (2022) proposed sampling multiple reasoning chains and aggregating their final answers to approximate Bayesian marginalization:

\[\hat{y} = \arg\max_y \sum_{k=1}^{K} \mathbb{I}[y^{(k)} = y],\]- where \(y^{(k)}\) are outcomes from diverse reasoning samples. This approach reduces variance and enhances robustness on multi-step tasks.

-

Reflection and Self-Verification Loops: Frameworks such as Reflexion (Shinn et al. (2023)) and Self-Refine (Madaan et al. (2023)) introduce iterative critique–revise cycles, allowing models to assess and improve their reasoning traces:

\[x \xrightarrow{\text{reason}} (z, y) \xrightarrow{\text{reflect}} c \xrightarrow{\text{revise}} (z', y')\]- Each loop refines the reasoning toward correctness or coherence.

-

RCOT and Critic–Judge Systems: Zhang et al. (2024) and Zhou et al. (2023) formalized structured reflective reasoning where critic models evaluate reasoning traces. This improves factual accuracy and consistency through meta-evaluation.

-

Search-Based Reasoning

-

Search-based reasoning treats reasoning as explicit exploration through a structured search space. Instead of committing to one reasoning chain, the model maintains and expands a frontier of partial thoughts guided by learned or heuristic values. Examples below:

-

Tree-of-Thoughts (ToT) Prompting: Yao et al. (2023) generalized CoT into a search tree of reasoning steps, where partial “thoughts” are evaluated and expanded. This transforms reasoning from a linear chain to a controlled exploration process guided by heuristic value estimates.

-

Monte Carlo Tree Search (MCTS) and Value-Guided Variants: Building on Tree-of-Thoughts, these methods treat reasoning trajectories as nodes in a decision tree, using stochastic rollouts and value estimates \(V_\phi(z_{1:t})\) to select the most promising branches:

\[z_{t+1} \sim \pi_\theta(z_t | z_{<t}), \quad V_\phi(z_{1:t}) \approx \mathbb{E}[R | z_{1:t}]\]- This search-based framing bridges symbolic planning with neural reasoning and underlies deliberative reasoning systems that combine exploration, pruning, and value-guided selection.

-

Tool-Augmented and Interaction-Based Reasoning

-

This family connects internal reasoning with external information or computational tools, turning static text prediction into interactive cognition. Examples below:

-

ReAct Frameworks (Reason + Act): Yao et al. (2022) proposed alternating between internal “Thought” and external “Action” steps:

\[x \rightarrow \text{Thought}_1 \rightarrow \text{Action}_1 \rightarrow \text{Observation}_1 \rightarrow \cdots \rightarrow y\]- This structure enables reasoning intertwined with API calls, search, or tool execution.

-

Tool-Augmented Reasoning: Schick et al. (2023) (Toolformer) and Gao et al. (2022) (PAL) demonstrated that LLMs can autonomously learn to invoke external tools like Python interpreters or search engines, grounding reasoning in verifiable computation.

\[\pi_\theta(a_t | s_t) = \begin{cases} \text{generate thought } z_t & \text{if } a_t = \text{think},\\ \text{call tool } \mathcal{T}_i(s_t) & \text{if } a_t = \text{act} \end{cases}\] -

PAL (Program-Aided Language Models): Delegates subproblems to code snippets, merging natural-language reasoning with executable verification. This hybrid reasoning yields higher factuality and transparency.

-

Reflexion Agents: Shinn et al. (2023) extended ReAct-style systems with reflective feedback, enabling models to self-correct and improve during tool-based interactions.

-

Reinforcement Learning-Based Reasoning

-

Reinforcement Learning (RL) frames reasoning as policy optimization over reasoning trajectories, where models learn to maximize rewards reflecting correctness, efficiency, or verifiability. Examples below:

-

DeepSeek-R1 (Correctness-Guided Policy Optimization): Guo et al. (2025) introduced a RL framework for reasoning that eliminates the need for human-annotated rationales. Instead, the model learns directly from correctness-based feedback signals that are objectively verifiable. The optimization objective is defined as:

\[\mathcal{J}(\theta) = \mathbb{E}_{x, z, y \sim p \theta(\cdot|x)} [R(y, z)]\]-

Key Components:

-

Reward Structure:

-

The reward function integrates both correctness and computational efficiency:

\(R(y, z) = \mathbb{I}[\text{correct}(y)] - \lambda \text{cost}\)z\(\)

- where:

- \(\mathbb{I}[\text{correct}(y)]\) indicates whether the model’s answer \(y\) matches the ground truth, while \(\text{cost}\)z\(\) measures the resource usage (e.g., number of reasoning steps or token length) of the reasoning trace \(z\).

- The hyperparameter \(\lambda\) balances the trade-off between accuracy and efficiency.

- where:

-

-

Policy Learning without Rationales:

- Unlike earlier reasoning models that rely on process-level/step-level human supervision or rationalized trajectories, DeepSeek-R1 learns purely from outcome-level rewards.

- This shifts the training paradigm from imitating explanations to discovering effective reasoning trajectories through exploration and optimization.

-

Conciseness and Verifiability:

- By penalizing longer or redundant reasoning paths, the model learns to favor concise solutions.

- The reward design implicitly biases the policy toward interpretable and verifiable reasoning traces, improving robustness across mathematical and symbolic reasoning tasks.

-

Empirical Findings:

- DeepSeek-R1 demonstrates that outcome-based RL—when paired with reward shaping—can achieve reasoning quality comparable to process-supervised models.

- The study highlights that reinforcement-driven optimization can uncover reasoning strategies aligned with correctness and minimal computational cost, without explicit human process supervision.

-

-

-

Process vs. Outcome Supervision: Lightman et al. (2023) and OpenAI (2023) demonstrated that step-level (i.e., process-level) correctness rewards improve reliability and stability compared to outcome-level rewards.

-

Tool-Augmented RLHF: Nakano et al. (2021) extended RLHF to web-based environments.

-

In WebGPT, Nakano et al. implemented Reinforcement Learning with Human Feedback (RLHF)** over a browser-driven interface, where the model navigated and queried the web to retrieve supporting evidence before answering. Reward models trained on human preferences compared answers and their citations, encouraging factual accuracy and verifiability through policy optimization with proximal policy optimization (PPO). This demonstrated that verifiable, tool-grounded reasoning could be operationalized within an RL framework.

-

Building on that foundation, Themis (Li et al., 2024) proposed tool-augmented reward modeling, allowing reward models themselves to call external APIs—calculators, translators, or search engines—during preference evaluation. The hybrid loss function

- unifies pairwise ranking with autoregressive reasoning supervision. Themis improves factuality, arithmetic precision, and interpretability, showing +17.7 % accuracy gain on tool-based datasets and a 7.3 % TruthfulQA improvement over standard reward models.

-

-

Tool Learning with Foundation Models: Qin et al. (2024) surveyed tool-augmented reasoning as a general paradigm, viewing foundation models as controllers that plan subtasks, invoke APIs, and optimize through feedback loops. RL-based reasoning appears within a broader reasoning–action–observation–reward cycle, emphasizing transparency, adaptability, and safety.

-

Reflexion (Verbal RL): Shinn et al. (2023) interpreted reflection as verbal reinforcement learning, where self-generated critiques act as linguistic rewards guiding iterative improvement.

-

Prompting-Based Reasoning

- Prompting strategies elicit reasoning through the design of the input context rather than architectural or training changes. These methods rely on the model’s ability to externalize thought patterns when given structured cues.

Chain-of-Thought (CoT) prompting

-

The CoT methodology explicitly elicits step-by-step reasoning before producing an answer. Instead of directly predicting the output \(y\) from input \(x\), the model is guided to generate intermediate steps \(z_1, z_2, \ldots, z_k\) that form a coherent reasoning chain:

\[x \rightarrow z_1 \rightarrow z_2 \rightarrow \cdots \rightarrow z_k \rightarrow y\] - This approach was introduced in Chain-of-Thought Prompting Elicits Reasoning in Large Language Models by Wei et al. (2022). The key contribution of Wei et al. was to show that few-shot exemplars containing reasoning traces (〈input, reasoning, answer〉) dramatically improve reasoning performance. By providing examples of multi-step reasoning in the prompt, large models could successfully decompose problems into intermediate steps.

- In contrast, Large Language Models are Zero-Shot Reasoners by Kojima et al. (2022) later demonstrated that the same multi-step reasoning could be triggered even without exemplars—by simply appending the phrase “Let’s think step by step,” enabling zero-shot reasoning. While Wei et al. highlighted reasoning as an emergent property of scale through structured exemplars, Kojima et al. (2022) revealed that linguistic cues alone can unlock latent reasoning abilities already present in pretrained LLMs.

Mechanism

- Prompt-level induction: The prompt includes exemplars where the reasoning is explicit (Wei et al. (2022)).

- Latent structure exposure: The model learns to externalize intermediate computation as natural language.

-

Generalization: Even without supervision, the model generalizes to unseen reasoning tasks (as shown by Kojima et al. (2022)).

-

Formally, CoT modifies inference to condition on a reasoning trace \(z\):

\[\hat{y} = \arg\max_y \sum_z p_\theta(y, z \mid x)\]-

where:

- \(x\): the input question or problem statement presented to the model.

- \(y\): the final output or predicted answer generated by the model.

- \(z\): the intermediate reasoning trace, consisting of one or more steps \(z_1, z_2, \ldots, z_k\).

- \(p_\theta(y, z \mid x)\): the joint probability of producing a reasoning sequence \(z\) and final answer \(y\) given input \(x\), parameterized by model weights \(\theta\).

- \(\sum_z\): marginalization over all possible reasoning paths, representing the model’s implicit consideration of multiple reasoning trajectories.

- \(\arg\max_y\): selects the answer \(y\) with the highest overall likelihood after integrating over possible reasoning traces.

- \(\hat{y}\): the final selected output predicted by the model.

-

- When CoT prompting is used, the summation is approximated by sampling one or several \(z\) sequences explicitly.

Variants

- Zero-shot CoT: Introduced by Kojima et al. (2022), who found that simply prompting with “Let’s think step by step” elicits reasoning in the absence of any few-shot exemplars, proving that LLMs are zero-shot reasoners capable of multi-step inference without examples.

- Few-shot CoT: Proposed by Wei et al. (2022), which relies on a few explicit reasoning demonstrations in the prompt to teach structured decomposition of problems.

- Multi-CoT aggregation: Proposed in Self-Consistency Improves Chain of Thought Reasoning in Language Models by Wang et al. (2022), combines multiple reasoning traces to improve robustness and consistency. By sampling diverse reasoning paths and aggregating their outcomes—through majority voting, confidence weighting, or entailment-based filtering—this approach mitigates random errors in individual chains and enhances overall answer reliability, particularly on complex or ambiguous reasoning tasks. A detailed discourse on this topic is available in the Decoding and Aggregation-Based Reasoning section.

Advantages

- Readable, auditable reasoning process.

- Enables interpretability and debugging.

- Boosts performance on tasks requiring intermediate computation.

Limitations

- Prone to verbosity and “overthinking.”

- Can expose internal biases and hallucinations in intermediate steps.

- Sensitive to prompt wording and length.

Implicit Reasoning via In-Context Composition

- Implicit reasoning via in-context composition refers to the ability of LLMs to perform structured reasoning without being explicitly instructed to reason step-by-step. Instead of producing overt “thoughts” or intermediate rationales, the model implicitly composes reasoning patterns from the examples, instructions, and latent structure provided in the prompt.

- This phenomenon underlies few-shot learning and in-context learning (ICL), first formalized in Language Models are Few-Shot Learners by Brown et al. (2020).

- In short, implicit reasoning through in-context composition reveals that LLMs can simulate reasoning procedures internally—demonstrating that reasoning is not only something models can “say,” but also something they can do silently.

Core Idea

- During in-context learning, an LLM observes examples of input–output pairs in the prompt:

Example 1: x₁ → y₁

Example 2: x₂ → y₂

...

Query: xₙ → ?

-

Although no parameter updates occur, the model constructs an internal algorithm that maps inputs to outputs based on patterns in the examples. This implicit mechanism acts as a temporary reasoning program embedded within the attention dynamics of the transformer.

-

Mathematically, the model approximates:

\[p_\theta(y_n | x_n, \mathcal{C}) = f_\theta(x_n; \mathcal{C})\]- where the context \(\mathcal{C} = {(x_i, y_i)}_{i=1}^{n-1}\) acts as a soft prompt encoding the reasoning structure.

Mechanism

-

Pattern induction The attention mechanism identifies regularities across examples in the prompt (e.g., logical rules, transformations, or operations).

-

Implicit composition The model learns to simulate an algorithm consistent with those examples without explicit symbolic representation.

-

Generalization When applied to the query, the model executes the induced procedure on-the-fly, effectively performing reasoning within the hidden activations rather than the output text.

Evidence of Implicit Reasoning

-

Several studies show that LLMs can encode algorithmic reasoning purely through in-context composition:

- Transformers as Meta-Learners by von Oswald et al. (2023): demonstrates that transformers approximate gradient descent in activation space, effectively learning “how to learn” from examples.

-

Rethinking In-Context Learning as Implicit Bayesian Inference by Xie et al. (2022): formalizes ICL as a Bayesian posterior update over latent hypotheses \(h\), as follows:

\[p(h|x_{1:n}, y_{1:n}) \propto p(h)\prod_i p(y_i|x_i, h)\] - What Learning Algorithms Can Transformers Implement? by Akyürek et al. (2023): shows that transformers can instantiate implicit gradient-based learners and execute reasoning-like adaptations.

-

These findings imply that reasoning does not necessarily require explicit verbalization—it can occur within the model’s hidden computation.

Examples

- In-context arithmetic reasoning (e.g., “2 + 3 = 5, 4 + 5 = 9, 6 + 7 = ?”) where the model infers the pattern without showing intermediate steps.

- Logical pattern induction (e.g., mapping “A\(\rightarrow\)B, B\(\rightarrow\)C, therefore A\(\rightarrow\)C”) purely from example structure.

- Code pattern imitation: reproducing unseen programming functions after seeing analogous examples in the context.

Advantages

- Efficiency: No need for verbose intermediate reasoning.

- Speed: Faster inference due to single-pass computation.

- Adaptivity: Learns task-specific reasoning patterns dynamically from the prompt.

Limitations

- Opacity: Reasoning is latent and not interpretable.

- Fragility: Sensitive to prompt order, formatting, and example selection.

- Limited generalization: Implicit algorithms often fail outside the statistical range of given examples.

Relationship to Explicit Reasoning

- Implicit reasoning complements explicit reasoning (like CoT) along a spectrum:

| Type | Reasoning Representation | Interpretability | Example |

|---|---|---|---|

| Explicit | Textual steps visible in output | High | “Let’s think step by step” |

| Implicit | Reasoning internal to activations | Low | Few-shot induction, analogy |

- Recent work (Learning to Reason with Language Models by Zelikman et al. (2022)) suggests that both can coexist: explicit reasoning can teach the model to develop implicit reasoning circuits that persist even when steps are hidden.

Decoding and Aggregation-Based Reasoning

-

Decoding and aggregation-based reasoning conceptualizes reasoning as a process of exploring multiple candidate reasoning trajectories during decoding and aggregating their outcomes to reach a consensus answer. Rather than committing to a single deterministic reasoning chain, these methods embrace stochastic diversity—sampling multiple reasoning paths via temperature-controlled decoding or beam search—and then consolidate the results through majority voting, weighted aggregation, or external verification (say with a different model, e.g., another LLM as a judge).

-

The central premise is that LLMs encode a distribution over many plausible reasoning paths; by sampling and marginalizing across this space, one can recover more reliable and consistent conclusions. This approach bridges statistical ensembling and reasoning robustness, effectively reducing variance and mitigating local hallucinations.

-

Representative methods in this family include Self-Consistency Decoding by Wang et al. (2022), Majority-Vote CoT, Verifier-Guided Decoding by Lightman et al. (2023), Weighted Self-Consistency, and Mixture-of-Reasoners / Ensemble CoT strategies. Together, they embody an ensemble-based philosophy of reasoning—achieving reliability not through a single flawless chain, but through statistical agreement among many plausible reasoning hypotheses.

Self-Consistency Decoding

-

Self-Consistency Decoding builds upon CoT prompting by introducing stochastic reasoning diversity—instead of generating a single reasoning chain, the model samples multiple independent reasoning paths and aggregates their final answers to reach a more reliable conclusion.

-

This method was proposed in Self-Consistency Improves Chain-of-Thought Reasoning in Language Models by Wang et al. (2022).

Core Idea

-

LLMs can produce many plausible reasoning paths \(z^{(1)}, z^{(2)}, \ldots, z^{(K)}\) for the same input \(x\). Each path ends with a potential answer \(y^{(k)}\). Rather than trusting the first decoded path (which may be incorrect due to randomness or local bias), the model aggregates across samples to find the most self-consistent answer.

-

Formally, this can be written as:

\[\hat{y} = \arg\max_{y} \sum_{k=1}^{K} \mathbb{I}[y^{(k)} = y]\]-

where:

- \(\hat{y}\): the final predicted answer obtained by selecting the most frequently occurring outcome among sampled reasoning paths.

- \(y\): a candidate answer being evaluated for consistency across reasoning trajectories.

- \(y^{(k)}\): the final output generated from the \(k^{th}\) reasoning chain \(z^{(k)}\).

- \(K\): the total number of sampled reasoning paths (i.e., the number of independent reasoning attempts by the model).

- \(\mathbb{I}[y^{(k)} = y]\): an indicator function that equals 1 if the answer from the \(k^{th}\) reasoning path matches \(y\), and 0 otherwise.

- \(\sum_{k=1}^{K} \mathbb{I}[y^{(k)} = y]\): counts how many times each candidate answer \(y\) appears across all reasoning samples.

- \(\arg\max_{y}\): selects the answer \(y\) that occurs most frequently (the mode) among the \(K\) generated reasoning paths, ensuring self-consistency through aggregation.

-

-

In practice, \(K\) ranges from 5 to 50 samples depending on model size and task complexity.

Mechanism

-

Sampling phase: Use temperature sampling (e.g., \(T = 0.7\)) to generate diverse reasoning traces \(z^{(k)}\).

-

Aggregation phase: Extract the final answers \(y^{(k)}\) and perform majority voting or probabilistic marginalization.

-

Selection phase: Choose the most frequent answer (or a weighted consensus based on log-probabilities).

- This implicitly integrates over multiple latent reasoning variables \(z\), approximating the marginalization in

Intuition

-

Different reasoning paths represent samples from the model’s internal “belief distribution” over possible reasoning chains. Self-Consistency acts as a Bayesian marginalization step, improving robustness to local hallucinations and premature reasoning collapses.

-

Empirically, the method yields substantial gains on multi-step arithmetic and logic benchmarks such as GSM8K, MultiArith, and StrategyQA.

Advantages

- Reduces the variance and brittleness of individual CoT runs.

- Encourages exploration of diverse reasoning paths.

- Significantly improves accuracy on reasoning tasks without changing model parameters.

Limitations

- Computationally expensive (requires many samples).

- Inefficient for tasks where answers are non-discrete or continuous.

- Aggregation may fail if reasoning errors are systematic across samples.

Reflection and Self-Verification Loops

-

Reflection and self-verification methods extend reasoning by allowing a model to analyze, critique, and improve its own outputs. Rather than generating a single reasoning trace and final answer, the model iteratively reviews its reasoning, identifies potential errors, and either revises the reasoning or re-generates the answer.

-

This meta-cognitive process—analogous to human self-checking—is central to recent efforts to make reasoning both more reliable and more factual.

-

A key paper introducing this paradigm is Reflexion: Language Agents with Verbal Reinforcement Learning by Shinn et al. (2023), and Self-Refine: Iterative Refinement with Self-Feedback by Madaan et al. (2023).

Core Idea

-

Reflection frameworks conceptualize reasoning as an iterative loop between generation, evaluation, and revision. A single pass through the LLM may produce a reasoning chain \(z\) and output \(y\), but the model can further reflect on its own reasoning by generating a self-critique \(c\) that identifies flaws or inconsistencies.

-

This process can be formalized as:

- Each iteration ideally brings the reasoning trace closer to correctness or coherence.

Mechanism

-

Initial reasoning phase: The model generates a reasoning chain and provisional answer.

-

Reflection phase: The model (or a secondary evaluator) reviews the reasoning for logical, factual, or procedural errors. Example prompt: “Examine the above reasoning carefully. Identify mistakes or unsupported steps, and propose corrections.”

-

Revision phase: The model generates a new reasoning chain incorporating the critique. Optionally, feedback can be looped over multiple rounds.

-

Termination: The loop ends when a confidence threshold or reflection limit is reached.

Theoretical Framing

-

Reflection can be viewed as approximate gradient descent in the space of reasoning traces, where the model updates its “beliefs” about a solution through internal self-assessment.

-

Given an initial reasoning trace \(z^{(0)}\), the update rule can be seen as:

\[z^{(t+1)} = \text{Refine}\big(z^{(t)}, \text{Critique}(z^{(t)})\big)\]- where Critique is an operator producing feedback and Refine modifies the reasoning accordingly.

-

This closely parallels iterative inference in classical optimization and meta-learning frameworks.

Variants

- Reflexion (Shinn et al. (2023)): Uses verbal reinforcement (self-generated critique and reward).

- Self-Refine (Madaan et al. (2023)): Separates roles into task solver, feedback provider, and reviser.

- Critic–Judge systems (Zhou et al. (2023)): Introduces a secondary “critic” model to evaluate and score reasoning traces.

- RCOT (Reflective Chain-of-Thought) (Zhang et al. (2024)): Adds structured self-correction within CoT reasoning.

Advantages

- Improves factual correctness and logical soundness of reasoning chains.

- Encourages interpretable, auditable reasoning corrections.

- Can operate with minimal supervision—feedback is model-generated.

Limitations

- Computationally expensive due to iterative passes.

- Susceptible to feedback loops—reflections may amplify minor errors.

- Quality of reflection depends heavily on prompt design and model calibration.

Relationship to RL and CoT

-

Reflection complements RL and CoT:

- Like RL, it provides a feedback signal, but in natural language form rather than scalar rewards.

- Like CoT, it operates at the level of reasoning traces, but introduces a meta-layer of critique.

-

This synergy is foundational in modern autonomous reasoning agents that continuously self-improve through reflection cycles.

Search-Based Reasoning

-

Search-based reasoning extends CoT and Tree-of-Thought paradigms by formalizing reasoning as an explicit search or planning process through a structured state space of partial thoughts. Rather than producing a single reasoning trajectory, the model dynamically explores multiple hypotheses, evaluates their promise, and selectively expands the most promising reasoning branches. This approach transforms reasoning from sequence generation into strategic exploration—closer to the deliberative search processes in classical AI.

-

The key insight behind search-based reasoning is that complex reasoning tasks (e.g., mathematical proofs, algorithmic puzzles, or multi-hop reasoning) often require exploring alternative reasoning directions, pruning dead-ends, and backtracking—capabilities absent from purely linear text generation.

-

This family includes Tree-of-Thoughts (ToT) by Yao et al. (2023), Monte Carlo Tree Search (MCTS)-augmented reasoning, value-guided search frameworks, and hybrid plan–execute–evaluate reasoning systems that embed search within or atop language model inference.

Tree-of-Thoughts (ToT) Prompting

-

Tree-of-Thoughts (ToT) generalizes CoT prompting into a structured search process over multiple reasoning paths. Instead of committing to a single linear reasoning chain, ToT explores a branching search tree where each node corresponds to a partial “thought,” and branches represent possible continuations of reasoning.

-

This approach was introduced in Tree of Thoughts: Deliberate Problem Solving with Large Language Models by Yao et al. (2023).

Core Idea

-

CoT prompting treats reasoning as a single sampled trajectory:

\[x \rightarrow z_1 \rightarrow z_2 \rightarrow \cdots \rightarrow z_T \rightarrow y\]- while ToT treats reasoning as an exploration problem over multiple possible continuations at each step:

-

The model explicitly evaluates partial thoughts \(z_{1:t}\) using a heuristic function or value model, guiding the expansion toward promising reasoning directions.

Mechanism

- Thought generation:

- The model generates candidate continuations for the current thought, e.g., \(z_t^{(1)}, z_t^{(2)}, \ldots, z_t^{(b)}\)

- Evaluation:

- Each partial reasoning sequence \(z_{1:t}\) is scored by the model itself or a learned value function \(V_\phi(z_{1:t})\), estimating expected success.

- Search algorithm:

- Employs strategies such as breadth-first search (BFS), depth-first search (DFS), or Monte Carlo Tree Search (MCTS) to explore reasoning paths selectively.

- Selection:

- The final answer is derived from the highest-valued complete reasoning path or an ensemble of top candidates.

-

Mathematically, this resembles a policy/value formulation:

\[z_{t+1} \sim \pi_\theta(z_t \mid z_{1:t}) \quad \text{and} \quad V_\phi(z_{1:t}) \approx \mathbb{E}[R \mid z_{1:t}]\]- where \(R\) is a reward for a correct or high-quality final output.

Example

-

For a math problem such as “Find the smallest integer satisfying …”, the ToT procedure may branch into:

- Thought A: Try algebraic manipulation.

- Thought B: Try substitution.

- Thought C: Try bounding argument.

-

The model evaluates which partial derivation yields progress and prunes unpromising branches, effectively performing deliberate reasoning.

Advantages

- Encourages exploration over multiple reasoning directions, avoiding early commitment to incorrect logic.

- Enables planning and backtracking, crucial for complex reasoning.

- Integrates well with external evaluators or reward functions.

Limitations

- Computationally expensive: exponential search space mitigated only by pruning heuristics.

- Requires a reliable evaluation function to score partial reasoning.

- Harder to parallelize and tune compared to CoT or Self-Consistency.

Relation to Other Methods

-

Tree-of-Thoughts bridges the gap between:

- CoT (single deterministic reasoning chain), and

- Search-based reasoning in classical AI (state-space exploration, planning).

-

In this sense, it operationalizes the idea that reasoning should be deliberative, not merely associative.

Monte Carlo Tree Search (MCTS)-based Reasoning

Core Idea

-

Monte Carlo Tree Search (MCTS)-based reasoning refines search-based reasoning by using stochastic simulations to balance exploration and exploitation over the reasoning space. Each node in the search tree represents a partial reasoning trace \(z_{1:t} = (z_1, z_2, \ldots, z_t)\), and edges represent possible next reasoning steps \(z_{t+1}\). Unlike simple breadth-first or depth-first traversal, MCTS uses probabilistic sampling to explore promising reasoning branches while still allocating some computation to less-visited ones, ensuring a balance between discovering new reasoning paths and refining strong candidates.

-

Formally, reasoning unfolds as a growing search tree \(\mathcal{T}\):

\[\mathcal{T} = { z_{1:t} \mid z_{1:t-1} \in \mathcal{T},\ z_t \in \text{Expand}(z_{1:t-1}) }\]- where the Expand step is guided by the LLM’s conditional distribution \(p_\theta(z_t \mid z_{1:t-1}, x)\), and the evaluation function \(V_\phi(z_{1:t})\) estimates how promising each partial reasoning sequence is.

-

MCTS then uses simulated rollouts—partial reasoning trajectories extended to completion—to estimate downstream rewards, which are backpropagated through the tree to update value and visit counts. The algorithm repeatedly selects nodes using an upper-confidence bound (UCB) criterion that trades off exploration and exploitation:

\[a^* = \arg\max_a \left( Q(s, a) + c \sqrt{\frac{\log N(s)}{N(s, a) + 1}} \right)\]- where \(Q(s, a)\) is the average reward for taking reasoning step \(a\) in state \(s\), \(N(s, a)\) the number of visits, and \(c\) a temperature constant controlling exploration.

-

This process continues until reasoning trajectories reach terminal states—complete solutions \(y\)—and the highest-valued trace or ensemble of top traces is selected as the model’s output.

Mechanism

-

Selection: From the root node, traverse the tree by selecting the child that maximizes the UCB criterion, balancing high-value and underexplored reasoning branches.

-

Expansion: When an underexplored node is reached, the model generates several possible next reasoning steps \(z_t^{(1)}, z_t^{(2)}, \ldots, z_t^{(b)} \sim p_\theta(z_t \mid z_{1:t-1}, x)\), forming new branches for exploration.

-

Simulation (Rollout): The model continues reasoning (deterministically or stochastically) until reaching a terminal output \(y\), producing a full reasoning chain \(z_{1:T}\).

-

Evaluation: The resulting trace is scored via a value estimator \(V_\phi(z_{1:T})\) or a domain-specific verifier (e.g., math correctness, code execution success).

-

Backpropagation: The value score is propagated upward, updating \(Q(s, a)\) and visit counts \(N(s, a)\) along the path, gradually refining the search policy.

-

Selection of Final Output: After sufficient iterations, the reasoning path with the highest cumulative value (or visit count) is chosen as the final answer.

Theoretical Framing

-

MCTS-based reasoning can be interpreted as an approximate Bayesian inference mechanism, marginalizing over reasoning paths by repeated stochastic sampling and value-based weighting. It formalizes reasoning as a policy–value system:

\[z_{t+1} \sim \pi_\theta(z_t \mid z_{1:t}, x), \quad V_\phi(z_{1:t}) \approx \mathbb{E}[R \mid z_{1:t}]\]- where \(\pi_\theta\) is the reasoning policy and \(V_\phi\) the expected reward estimator.

-

This structure directly parallels AlphaZero-style planning in RL: reasoning steps are “moves,” the value function measures progress toward correctness, and search iterations improve reasoning through self-guided exploration.

Example: Mathematical Problem Solving

-

Consider a geometry proof question. A linear CoT might pursue a single argument, but an MCTS-based reasoner could simulate multiple reasoning directions:

- Branch A: Attempt to derive relations via similar triangles.

- Branch B: Substitute coordinates and apply algebraic constraints.

- Branch C: Explore symmetry arguments for simplification.

-

Each branch is evaluated through rollouts—checking consistency or partial correctness—and promising directions are expanded further, while unproductive branches are pruned. Over multiple iterations, the search converges on the most coherent reasoning trace, yielding deliberate and explainable reasoning rather than heuristic guessing.

Variants and Extensions

- LLM-MCTS (Yao et al. (2024)): Combines MCTS with Tree-of-Thought reasoning, using the LLM both for expansion and value estimation.

- Verifier-Guided MCTS: Integrates external verifiers to provide precise reward signals at rollout, improving pruning accuracy.

- Value-Guided MCTS: Employs a trained value model \(V_\phi\) (similar to process reward models) to estimate reasoning quality before rollout.

- Hybrid Planning Frameworks: Combine symbolic planners (A*, BFS) with MCTS exploration to scale reasoning in code, logic, or multi-agent environments.

Advantages

- Balances exploration and exploitation, avoiding premature convergence.

- Can discover nonlinear, multi-path reasoning solutions.

- Scales naturally to complex reasoning where evaluating partial progress is feasible.

- Compatible with verifier-guided or reward-shaped supervision, enabling hybrid reasoning pipelines.

Limitations

- High computational cost: repeated rollouts and evaluations are expensive.

- Value-model sensitivity: incorrect scoring can misdirect exploration.

- Context window saturation: maintaining multiple partial traces taxes memory.

- Diminishing returns: excessive exploration may not improve accuracy proportionally.

Relationship to Other Reasoning Methods

- MCTS generalizes Tree-of-Thoughts (ToT) by adding quantitative evaluation and stochastic rollouts, bridging symbolic search and probabilistic reasoning.

- It operationalizes planning in reasoning space, complementing RL-based reasoning (which learns heuristics) and Self-Consistency decoding (which averages independent samples rather than guided rollouts).

- Conceptually, MCTS moves LLM reasoning closer to explicit deliberation and decision-making, marking a key step from narrative reasoning toward search-based intelligence.

Tool-Augmented Reasoning

-

Tool-Augmented Reasoning extends an LLM’s capabilities beyond internal text-based inference by integrating external computational and retrieval tools into its reasoning process. Rather than relying solely on its learned parameters, a tool-augmented model can decide when to think and when to act—delegating parts of the reasoning process to verifiable, executable systems such as Python interpreters, search engines, databases, or APIs.

-

This paradigm effectively transforms an LLM into a reasoning orchestrator, coordinating multiple symbolic or functional modules to perform grounded, verifiable, and compositional reasoning. The LLM maintains the high-level reasoning flow in natural language but defers specific sub-tasks—such as numerical calculation, factual lookup, or logical evaluation—to specialized external systems.

-

The formalism for tool-augmented reasoning can be expressed as a hybrid reasoning policy:

\[\pi_\theta(a_t \mid s_t) = \begin{cases} \text{generate reasoning step } z_t, & \text{if } a_t = \text{think}, \\ \text{invoke tool } \mathcal{T}_i(s_t), & \text{if } a_t = \text{act} \end{cases}\]- where \(s_t\) is the current reasoning state, and \(\mathcal{T}_i\) denotes a callable external tool.

-

This formulation underpins several reasoning systems that merge symbolic and neural components, including ReAct (Yao et al. (2022)), Toolformer (Schick et al. (2023)), PAL (Gao et al. (2022)), and Gorilla (Patil et al. (2023)). Together, these systems exemplify the shift from static reasoning models toward interactive and compositional reasoning frameworks that can interface with the external world.

ReAct: Reason and Act Framework

Core Idea

-

ReAct (Reason + Act) introduces a structured reasoning framework in which language models interleave internal reasoning (“thoughts”) with external actions (“acts”). Rather than producing a single reasoning chain internally, the model alternates between cognitive reasoning steps and environment interactions, enabling active exploration, retrieval, and verification.

-

This concept was formalized in ReAct: Synergizing Reasoning and Acting in Language Models by Yao et al. (2022), where an LLM engages in iterative cycles of thinking, acting, and observing, following the trajectory:

- Each thought is an internal deliberation; each action interacts with an external environment (e.g., a search query or calculator call); and each observation provides feedback that informs the next reasoning step.

Mechanism

- Prompt Structure:

- The model is trained or prompted to alternate explicitly between “Thought:” and “Action:” stages.

- Example:

Thought: I should verify this fact. Action: search("When was the Theory of Relativity proposed?") Observation: 1905. Thought: That confirms Einstein’s 1905 paper. - Execution and Feedback:

- Each “Action” triggers a system-level call (search, API, or computation). The resulting observation is appended to the prompt context, grounding the model’s next reasoning step.

- Iterative Reasoning Loop:

-

This continues until the model converges on a final conclusion or the task’s stopping condition is met.

-

Formally, the reasoning trajectory is:

\[\tau = (x, {(t_i, a_i, o_i)}_{i=1}^T, y)\]- where \(t_i\) are reasoning traces, \(a_i\) are actions, and \(o_i\) are observations.

-

Theoretical Framing

-

ReAct operationalizes reasoning as a policy over both thoughts and actions:

\[\pi_\theta(t_i, a_i \mid s_i)\]- where \(s_i\) is the model’s current state (context + prior outputs).

-

This allows the model to perform goal-directed reasoning, selectively gathering new information, evaluating results, and iteratively refining its understanding—essentially turning passive inference into interactive cognition.

Advantages

- Enables active information acquisition, reducing dependence on memorized knowledge.

- Produces interpretable reasoning traces with explicit thought–action–observation sequences.

- Scales naturally to multi-step, real-world tasks involving dynamic environments.

Limitations

- Requires reliable execution infrastructure for handling tool calls and feedback.

- Susceptible to looping behaviors if not properly constrained.

- Context windows can become crowded with intermediate observations.

Extensions

- Reflexion (Shinn et al. (2023)): Adds self-evaluation and verbal RL to the ReAct cycle.

- AutoGPT / LangChain Agents (2023–2024): Build upon ReAct’s iterative structure to enable multi-step autonomous task execution and planning.

Toolformer and Self-Supervised Tool Learning

Core Idea

-

Toolformer: Language Models Can Teach Themselves to Use Tools by Schick et al. (2023) introduced a paradigm shift in self-supervised tool-augmented reasoning, where the model autonomously learns when and how to call external tools—without explicit supervision or hand-crafted prompts. Unlike ReAct, which depends on prompting and external orchestration, Toolformer integrates tool usage directly into the model’s generative policy, turning tool invocation into a learned reasoning behavior rather than a manually structured loop.

-

The central insight of Toolformer is that language models can self-label their own tool-use data: by inserting API calls into text and evaluating whether the resulting completion improves likelihood under the model’s own distribution. Through this mechanism, the model discovers not just how to use a tool, but when its invocation enhances reasoning performance.

-

This process transforms the model from a passive generator into an autonomous reasoning-controller that dynamically invokes external functions as part of its internal reasoning process.

Mechanism

- Candidate Tool Identification:

- The model is exposed to a set of tools—e.g., calculator, Wikipedia search, translation API, or question-answering module.

- Self-Supervised Data Generation:

- Toolformer uses the base LLM to generate potential API calls within text (e.g.,

call("calculate(3*7)")) and then evaluates whether including the resulting API output improves the log-likelihood of the original completion.

- Toolformer uses the base LLM to generate potential API calls within text (e.g.,

- Filtering and Fine-Tuning:

- Only API calls that improve model likelihood are retained. The model is then fine-tuned on these augmented examples, learning to integrate tools naturally during inference.

- Inference-Time Behavior:

-

During generation, the model autonomously decides when to invoke a tool. Tool outputs are inserted inline and directly influence subsequent reasoning steps.

-

Formally, the tool-augmented generation process is modeled as:

\[p(y|x) = \sum_{\mathcal{T}} p_\theta(y, \mathcal{T}(x))\]- where the model implicitly marginalizes over possible tool calls \(\mathcal{T}\) to produce the most likely reasoning continuation.

-

Theoretical Framing

-

Toolformer operationalizes compositional reasoning through differentiable decision-making over discrete actions (tool invocations). Each tool call acts as a functional composition step within the model’s reasoning trace, turning the sequence generation process into a form of neurosymbolic program synthesis.

-

By learning tool invocation autonomously, Toolformer bridges the gap between in-context reasoning and procedural reasoning, internalizing the interface between language and computation.

Representative Systems

- Toolformer (Schick et al. (2023)): The foundational framework for self-supervised tool usage across multiple APIs.

- PAL (Program-Aided Language Models) (Gao et al. (2022)): Delegates structured reasoning to Python execution, using LLMs to generate executable programs rather than answers directly.

- Gorilla (Patil et al. (2023)): Extends the concept to large-scale API access, enabling natural-language-to-API mapping for thousands of real-world endpoints.

- LLM-Augmented Reasoning (LLM-AR) (Paranjape et al. (2023)): Integrates tool selection and programmatic reasoning within retrieval-augmented inference pipelines.

- ToolBench (Huang et al. (2023)): Provides a benchmark for evaluating tool-use generalization and the efficiency of learned tool invocation.

Advantages

- Autonomous learning: No human annotation required for tool-use examples.

- Improved factuality: External tools provide non-parametric computation and verifiable results.

- Composable reasoning: Tool invocation integrates seamlessly into text generation.

- Scalable: Supports continual integration of new tools through additional fine-tuning on newly self-labeled tool-use data, without modifying the model’s architecture.

Limitations

- Requires reliable APIs and error-tolerant execution infrastructure.

- Self-supervised signal can bias toward frequent or high-likelihood calls, underusing rare but useful tools.

- Tool call latency and context-length constraints can affect real-time reasoning.

Relationship to ReAct and RL

- While ReAct structures reasoning via explicit prompts and environment interaction, Toolformer internalizes the decision to use tools via training-time self-supervision.

- RL methods, such as DeepSeek-R1, can complement Toolformer by learning optimal tool invocation policies via reward feedback rather than likelihood improvement.

Reinforcement Learning-Based Reasoning

- RL approaches frame reasoning as policy optimization over reasoning trajectories. The model learns to generate structured, verifiable chains that maximize explicit or implicit rewards.

- RL for reasoning treats reasoning as a goal-directed policy optimization problem, where the model learns to produce multi-step reasoning traces that maximize a task-specific reward. Rather than relying only on imitation of reasoning traces (as in supervised fine-tuning or CoT), this approach uses reward signals—explicit or implicit—to guide models toward useful intermediate reasoning behaviors.

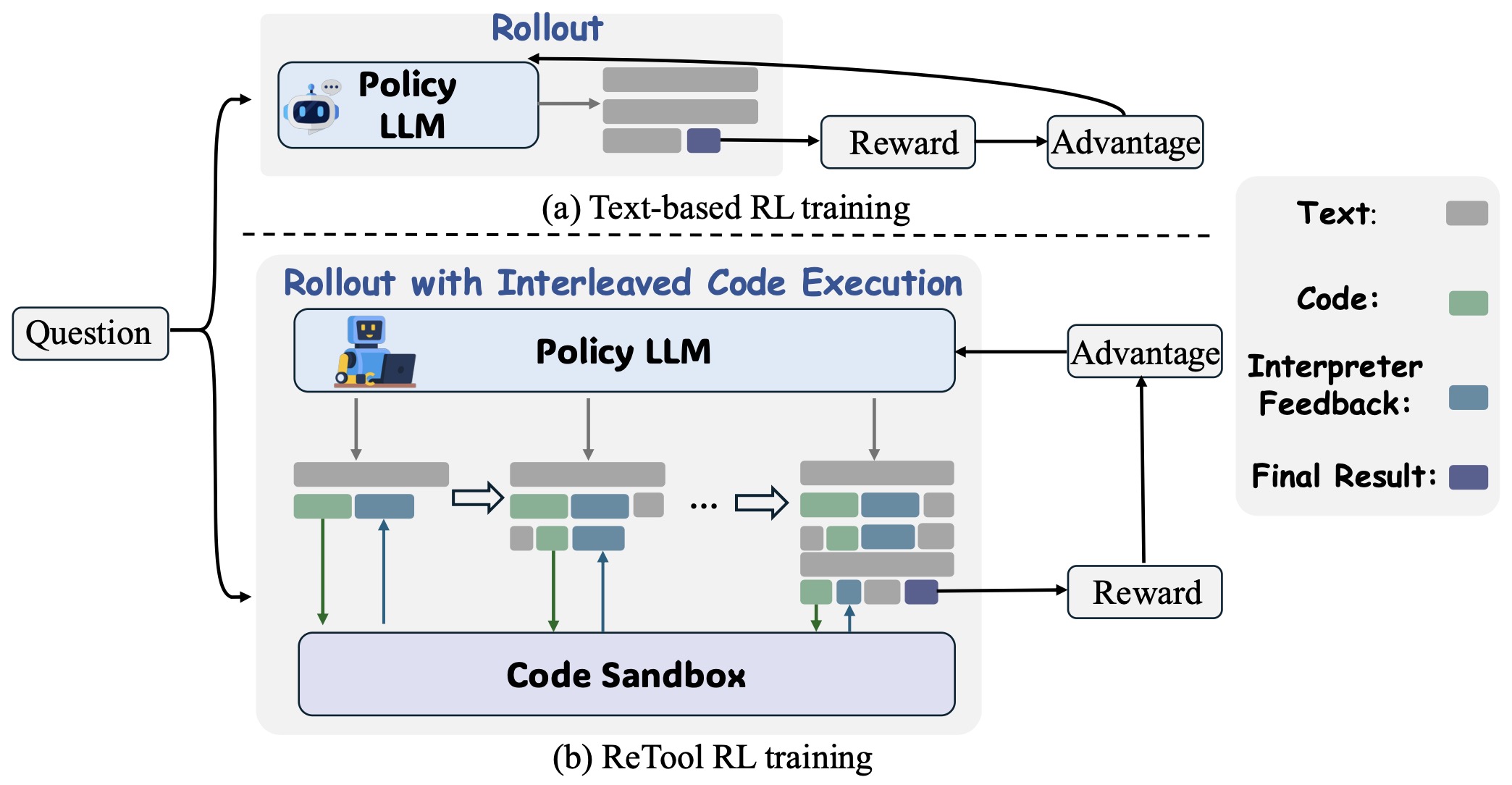

- Emerging agentic reasoning paradigms extend this RL framing to encompass tool-integrated, interactive, and judgmental reasoning. Recent systems such as ToRL, ReTool, and SimpleTIR demonstrate how reinforcement learning enables LLMs to act as autonomous agents that reason through iterative tool use—executing, verifying, and refining their own outputs via external environments (e.g., code interpreters or search engines). This tool-integrated reasoning (TIR) broadens the feasible reasoning space beyond text-only models by breaking the “invisible leash” that constrains standard RL within the model’s pretraining distribution, as formally analyzed in Understanding Tool-Integrated Reasoning. Furthermore, reinforcement-trained reasoning extends beyond task-solving to include LLM-as-judge and reward-model architectures, where models evaluate or shape reasoning quality through learned, hierarchical feedback loops—constituting early forms of reasoning beyond general-purpose LLMs.

WebGPT

- The paper WebGPT: Browser-assisted Question-answering with Human Feedback by Nakano et al. (2021) represents one of the earliest large-scale implementations of reinforcement learning from human feedback (RLHF) for reasoning. It extends LLMs beyond static text reasoning by introducing a controlled web-browsing interface, enabling them to search, navigate, and cite external information sources while answering questions.

- By combining human preference modeling with interactive tool use, WebGPT laid the foundation for verifiable, tool-augmented reasoning systems.

Core Idea

- WebGPT extends the RL-style reasoning paradigm by equipping an LLM with a controlled web-browser interface: the model can query, click, scroll, and quote webpages in a simulated browsing environment (OpenAI).

- It then uses human feedback (via a reward model trained on pairwise comparisons) to select its best answers. In this way, the model’s reasoning trajectories include external information retrieval and verification steps, making reasoning more grounded and verifiable.

Mechanism

- The model begins with imitation learning (behavior cloning) on human browsing demonstrations, learning to execute search \(\rightarrow\) navigate \(\rightarrow\) quote chains.

- Next, a separate reward model is trained to predict human preference among answer–reference pairs. Answers are harvested via browsing and then compared.

- Finally, the policy is refined via rejection sampling (and optionally RL) with respect to that reward model: the top-ranked answers by the reward model are selected.

-

Browsing environment: At each time step \(t\) the model is given browser state \(s_t\), chooses an action \(a_t \in {\text{Search}, \text{Click}, \text{Scroll}, \text{Quote}}\), and obtains the next state \(s_{t+1}\). After \(T\) steps, it produces answer \(y\) with supporting references \(z\). The reward model assigns \(R(y,z)\). The training objective is to increase the probability of trajectories leading to high \(R\).

\[\mathcal{J}(\theta) = \mathbb{E}_{(x, (z,y) \sim \pi \theta(\cdot|x))} [R(y,z)]\]- where \(x\) is the question and \(z\) is the set of quoted references.

- Use of citations: The model is required to produce citations so that humans can verify factual accuracy.

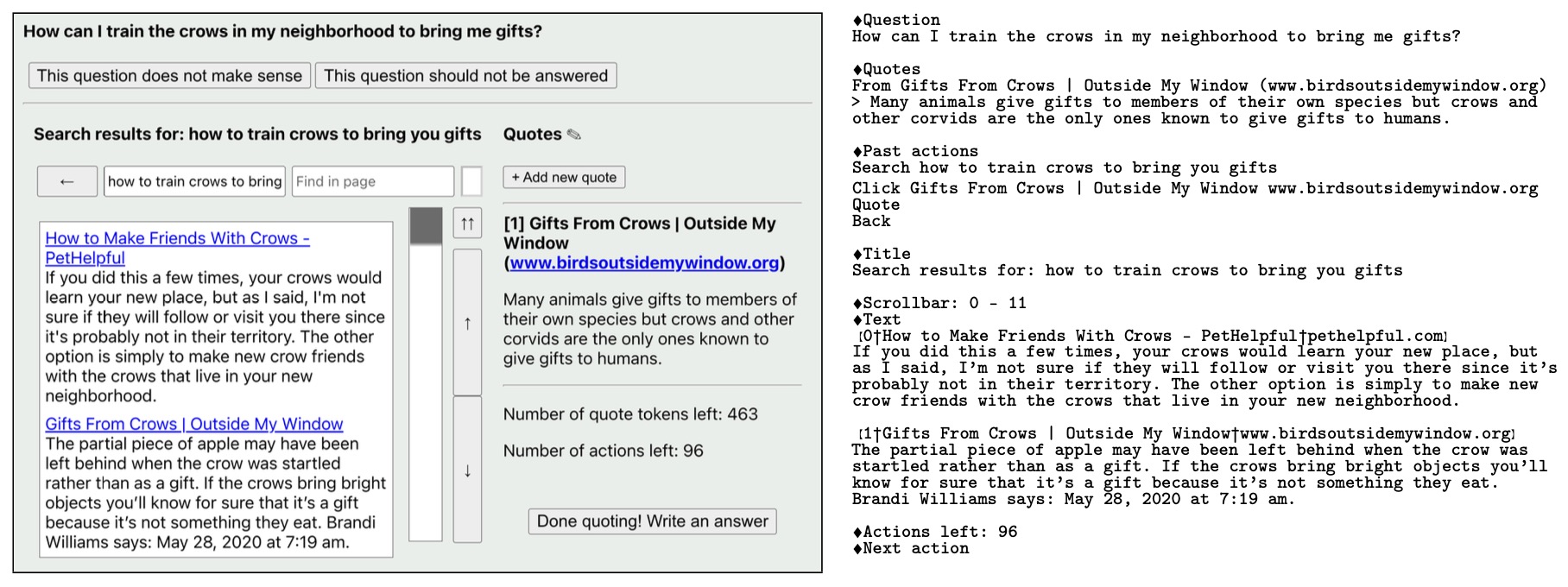

- The following figure (source) shows the text-based browsing interface used in WebGPT, where a language model interacts with the environment via commands such as “search,” “scroll,” or “quote” to collect references during question answering.

Implementation Details

- The base model is a fine-tuned version of GPT-3 (various sizes).

- Demonstration dataset: approximately 6K human browsing demonstrations collected via the browsing interface.

- Pairwise comparisons: approximately 21.5K human preference labels comparing two model‐generated answers (with references) to train the reward model.

- The browsing actions are limited to a text-browser interface (e.g., using the Bing Web Search API) to avoid full web access risks.

- In evaluation, the best model’s answers were preferred 56 % of the time to human demonstrators and 69 % of the time to the highest-voted Reddit answer on the ELI5 dataset.

Significance for RL-Based Reasoning

-

WebGPT advances RL-based reasoning in several ways:

- It moves beyond static, text-only reasoning chains to include interactive retrieval and verification, thus more closely approximating real-world reasoning.

- It operationalizes the notion of verifiable reasoning trajectories by requiring citations and human-preferenced ranking.

- It shows that LLMs can improve reasoning (in this case long-form QA) via reward-driven optimization of interactive reasoning policies, not just via next-token prediction.

Limitations and Considerations

- The browsing environment is constrained (text‐only, limited actions) and may still not fully capture open-web complexity.

- The reward model remains human-preference based and can inherit biases or noise.

- Credit assignment across long browsing trajectories remains challenging (a general RL-reasoning issue).

- Scalability: Collecting demonstrations and preference data is expensive; deployment in open domains still faces reliability issues.

DeepSeek-R1

- The most prominent example of RL for reasoning is DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning by Guo et al. (2025).

Core Idea

-

DeepSeek-R1 applies RL to improve reasoning performance without supervised rationales. The model learns to generate intermediate steps that lead to verifiably correct outcomes, using a reinforcement signal that rewards correct or efficient reasoning trajectories.

-

Formally, for a given problem \(x\), reasoning trace \(z\), and final answer \(y\),

\(R(y, z) = \mathbb{I}[\text{correct}(y)] - \lambda , \text{cost}\)z\(\)

- … and the objective is to maximize the expected reward:

- The model parameters are updated using RL methods such as policy gradient or Proximal Policy Optimization (PPO), as used in RLHF by Christiano et al. (2017) and its language-model applications in InstructGPT by Ouyang et al. (2022).

Mechanism

- Base model:

- Start with a pretrained LLM capable of multi-step reasoning (e.g., instruction-tuned).

-

Reward design:

- Outcome-based rewards: correctness of final answer.

- Process-based rewards: alignment with logical or stylistic reasoning norms.

- Efficiency penalties: shorter, more coherent chains get higher reward.

- Policy optimization:

- Update the model parameters \(\theta\) to maximize expected reward using policy-gradient methods.

-

The gradient estimate is:

\[\nabla_\theta \mathcal{J}(\theta) = \mathbb{E}[(R - b)\nabla_\theta \log p_\theta(y,z|x)]\]- where \(b\) is a baseline to reduce variance.

- Iterative refinement: Feedback from reward models, verification models, or external evaluators is used to shape the model’s reasoning distribution.

DeepSeek-R1 Highlights

- No human-annotated rationales: The system learns reasoning emergently through reward shaping.

- Curriculum design: Rewards evolve from simple tasks (e.g., arithmetic) to complex reasoning (e.g., proofs, logical deduction).

- Outcome: Demonstrated significant improvements on mathematical and logic benchmarks, outperforming supervised CoT-trained baselines.

Theoretical Framing

- Reasoning is formalized as sequential decision-making with hidden intermediate states:

- The RL agent (the LLM) learns to compose “thoughts” that maximize expected cumulative reward, rather than likelihood of training text. This bridges text prediction and deliberate reasoning via credit assignment.

Advantages

- Encourages reasoning structures that generalize beyond training distributions.

- Does not require labeled step-by-step data.

- Enables automated self-improvement through reward feedback.

Limitations

- Reward specification is delicate—poorly designed rewards can lead to reasoning shortcuts or gaming behavior.

- High computational cost due to exploration and rollouts.

- Credit assignment remains challenging for long reasoning chains.

Related Work

- Reflexion by Shinn et al. (2023): integrates self-reflective RL to iteratively improve reasoning quality.

- Constitutional AI by Bai et al. (2022): replaces human feedback with rule-based evaluators to align reasoning.

- Tool-Augmented RLHF by Nakano et al. (2021): incorporates tool usage (e.g., code execution) into reward computation.

- In summary, RL-based reasoning represents a shift from pattern completion to goal-directed optimization, allowing models to discover reasoning patterns that are not explicitly demonstrated in the data.

DeepSeek-R1: Practical takeaways and design patterns

-

DeepSeek-R1 reframed “reasoning” as a policy-optimization problem: start from a capable base model, define reward signals that prefer verifiable reasoning, and use RL to shape the latent steps \(z\) so that correct, readable chains become high-probability trajectories. The core lesson is operational: if you can score intermediate or final products reliably, you can push an LLM from pattern completion toward deliberate computation. For context on the method and results, see DeepSeek-R1 by Guo et al. (2025).

-

What DeepSeek-R1 actually optimizes:

-

At a high level, R1 maximizes expected reward over sampled chains:

\[\mathcal{J}(\theta)=\mathbb{E}_{x\sim\mathcal{D},z,y\sim p_\theta(\cdot\mid x)}\big[R(y,z)\big]\]-

where \(R\) blends correctness checks (exact answer, executable solver success), parsimony/format constraints, and sometimes readability penalties. In practice, implementations report variants of PPO/GRPO–style policy gradients:

\[\nabla_\theta\mathcal{J}(\theta)\approx \mathbb{E}\big[(R-b),\nabla_\theta\log p_\theta(y,z\mid x)\big]\]- … with a baseline \(b\) for variance reduction. R1 also uses staged training (e.g., cold-start data before RL) to stabilize exploration and improve “readability” of chains. See the paper for the multi-stage schedule and comparisons to o1-style models.

-

-

-

Why process supervision still matters:

- Even when you train only on outcome rewards, a verified step signal improves stability and sample efficiency. A practical alternative or complement is process reward modeling (PRM): label or auto-label whether each step is correct, then reward step sequences. This was shown to beat outcome-only supervision on MATH in Let’s Verify Step by Step by Lightman et al. (2023) and the accompanying OpenAI report by OpenAI (2023).

-

A minimal R1-style recipe you can reproduce:

- Collect tasks with verifiable end states (GSM8K, AIME, MATH). Build an automatic checker \(V\) that returns 1 when answers or traces pass.

- Train a small verifier or PRM if you can: \(V(z_t)\in[0,1]\) for each step. Use it either as reward shaping \(\sum_t V(z_t)\) or as a filter at decode time.

- Warm-start with supervised or distilled rationales to avoid unreadable chains; then switch to RL for exploration.

- Optimize a composite reward \(R=\lambda_1\text{Correct}(y)+\lambda_2\sum_{t}\text{StepOK}(z_t)-\lambda_3\text{Length}(z)-\lambda_4\text{FormatViolations}(z)\), tuning \(\lambda_i\) for your domain.

- During inference, marginalize over latent thoughts with a small self-consistency budget \(K\) and pick via verifier-guided selection—per Self-Consistency by Wang et al. (2022).

-

Design patterns that travel well beyond R1:

-

Reward the thing you can check: If you can compile problems to executable checks, outcome-only RL is often enough to induce useful structure; add PRM when you need reliability. Evidence: process supervision consistently outperforms outcome supervision on math reasoning.

-

Stage your training: Short supervised warm-ups (few curated traces) can prevent RL from converging on unreadable or language-mixed chains before formatting penalties kick in. DeepSeek-R1 explicitly reports multi-stage training to address readability and stability.

-

Keep decoding and training consistent: If you will use verifier-guided selection at inference, train with that verifier “in the loop” (e.g., as a reward or rejection sampler) to reduce train–test mismatch.

-

Prefer execution and tools over narration where possible: Program-aided solving (e.g., Python) shrinks the search space and makes rewards less noisy; combine with ReAct-style tool calls when tasks need retrieval or computation, as in ReAct by Yao et al. (2022).

-

Budget your “thinking.: Use a small \(K\) for self-consistency, then select with \(V\). You approximate \(\hat{y}=\arg\max_y \sum_{k=1}^{K}\mathbb{I} \big[y^{(k)}=y\big]\), without exploding cost—again following Wang et al. (2022).

-

-

Operational pitfalls and guardrails:

-

Reward hacking and shortcutting: If the checker can be gamed (format cues, guessable ranges), the policy will exploit it. Rotate perturbations and adversarial seeds; log chains alongside rewards. DeepSeek-R1 notes emergent but sometimes messy behaviors under pure RL.

-

Over-deliberation and cost blow-ups: RL-trained reasoners may produce unnecessarily long chains. Penalize chain length and add early-stop verifiers; at inference, cap steps and prune with a threshold on \(V\).

-

Verification bottlenecks: Human step labels do not scale. Borrow from PRM800K and template-based auto-labeling when feasible, and fall back to outcome-only rewards with strong executors; see Lightman et al. (2023).

-

-

Where R1 fits in the broader landscape:

- R1-style RL sits between explicit prompting methods (CoT, self-consistency) and full agentic loops (ReAct/tools). It supplies a training-time force that makes those inference-time interfaces work more reliably: prompts elicit better chains, verifiers select more often-correct ones, and tools ground intermediate steps. That combination—policy shaping + marginalization + verification—is, to date, the most reliable way to turn text generators into auditable reasoners. For the primary R1 paper, see Guo et al. (2025); for process supervision foundations, see Lightman et al. (2023) and OpenAI’s report by OpenAI (2023).

Reinforcement Learning for Tool-Integrated Reasoning

- Tool-Integrated Reasoning (TIR) marks a paradigm shift in how LLMs perform complex reasoning tasks. Instead of relying solely on text-based inference, TIR enables models to invoke external tools—such as code interpreters, APIs, databases, or symbolic solvers—within their reasoning trajectories.

-

Through this mechanism, models alternate between linguistic reasoning and computational execution, forming a hybrid cognitive process that grounds natural language thought in verifiable computation. Formally, a TIR process can be expressed as:

\[s_t = {r_1, c_1, o_1, \ldots, r_t, c_t, o_t},\]- where \(r_t\) is a reasoning step, \(c_t\) a tool command, and \(o_t = I(c_t)\) the corresponding output from an interpreter \(I\).

-

This framework establishes a closed loop of reason → act → verify, significantly reducing hallucination and enabling models to achieve self-correction through external validation.

-

- Tool-Integrated Reinforcement Learning (TIRL) extends this paradigm by introducing reinforcement learning (RL) into the TIR loop. In TIRL, models are not merely taught to use tools—they learn when and how to use them optimally through trial, feedback, and reward.

-

The integration of RL allows the model to optimize over both symbolic actions (tool invocations) and linguistic reasoning steps, guided by reward functions that capture correctness, efficiency, and interpretability:

\[J(\theta) = \mathbb{E}_{\pi_\theta} \left[\sum_{t=0}^{T} \gamma^t r(s_t, a_t, o_t)\right]\]- where the policy \(\pi_\theta(a_t \mid s_t)\) produces both reasoning and tool actions.

-

This combination yields models capable of adaptive computation—deciding dynamically whether to reason internally or delegate computation externally for optimal task success.

-

-

This section surveys three recent and complementary frameworks that embody the TIRL paradigm:

- ToRL by Li et al. (2025): introduces Tool-Oriented Reinforcement Learning for code-augmented mathematical reasoning, coupling RL with symbolic execution for error correction and precision.

- ReTool by Feng et al. (2025): establishes a two-phase RL pipeline where LLMs interleave reasoning with real-time code execution, optimizing outcome-based rewards for verifiable solutions.

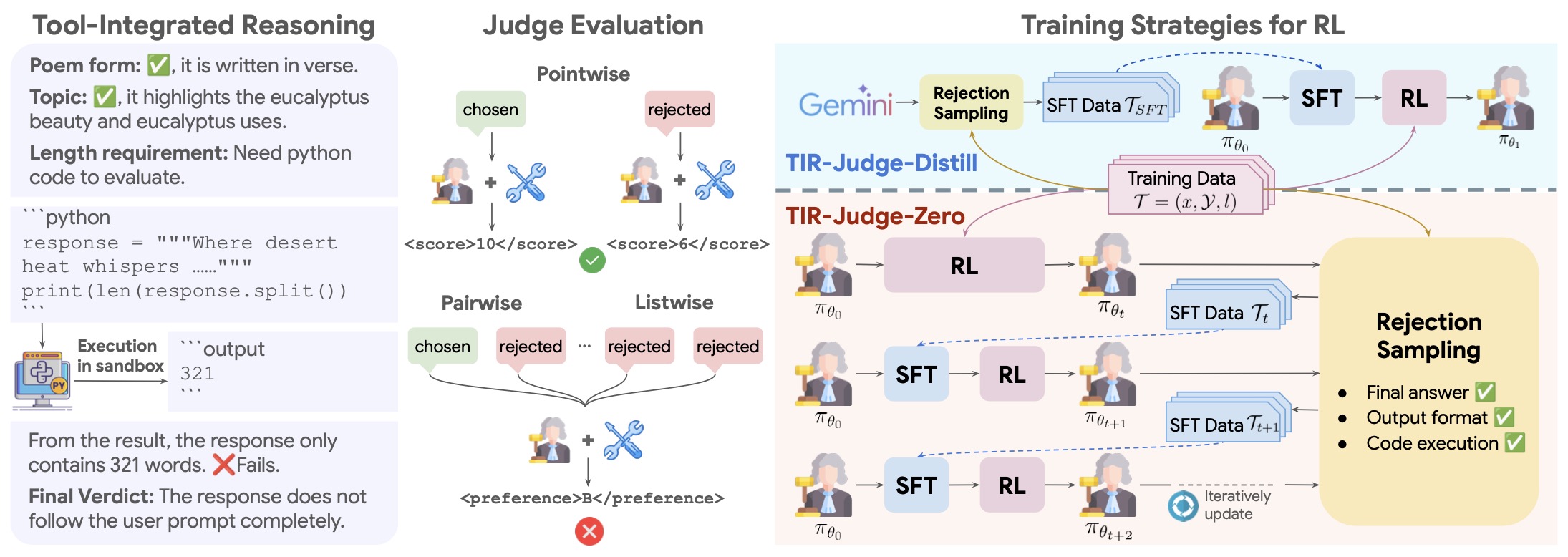

- TIR-Judge by Xu et al. (2025): extends the TIRL concept to evaluation agents, training LLM-based judges that reason, execute verification code, and learn reward functions for truthful, consistent assessment.

- Together, TIR and TIRL form the backbone of next-generation agentic intelligence—where reasoning is no longer passive text generation, but an interactive, executable, and self-optimizing process grounded in real-world feedback.

Tool-Integrated Reinforcement Learning (ToRL)

-

The Tool-Integrated Reinforcement Learning (ToRL) framework by Li et al. (2025) directly scales reinforcement learning from base models—without supervised fine-tuning—to autonomously acquire computational tool usage.

-

Unlike earlier Tool-Integrated Reasoning (TIR) approaches such as MathCoder by Wang et al. (2023) or ToRA by Gou et al. (2023), ToRL does not rely on distilled tool trajectories. Instead, the model learns tool strategies through reward-driven exploration from scratch.

Core Idea

-

ToRL enables unrestricted RL exploration with embedded interpreters (e.g., Python) for solving mathematical reasoning problems. Through repeated interaction between analytical reasoning and code execution, the model learns to balance symbolic reasoning with computational accuracy.

-

ToRL employs GRPO, setting the rollout batch size to 128 and generating 16 samples per problem.

-

In ToRL, this formulation allows the model to optimize end-to-end tool-use behavior purely through reinforcement signals, without explicit imitation of tool trajectories. It further supports exploration-driven learning, where even incorrect executions can contribute useful gradient signals toward improving reasoning-tool coordination.

-

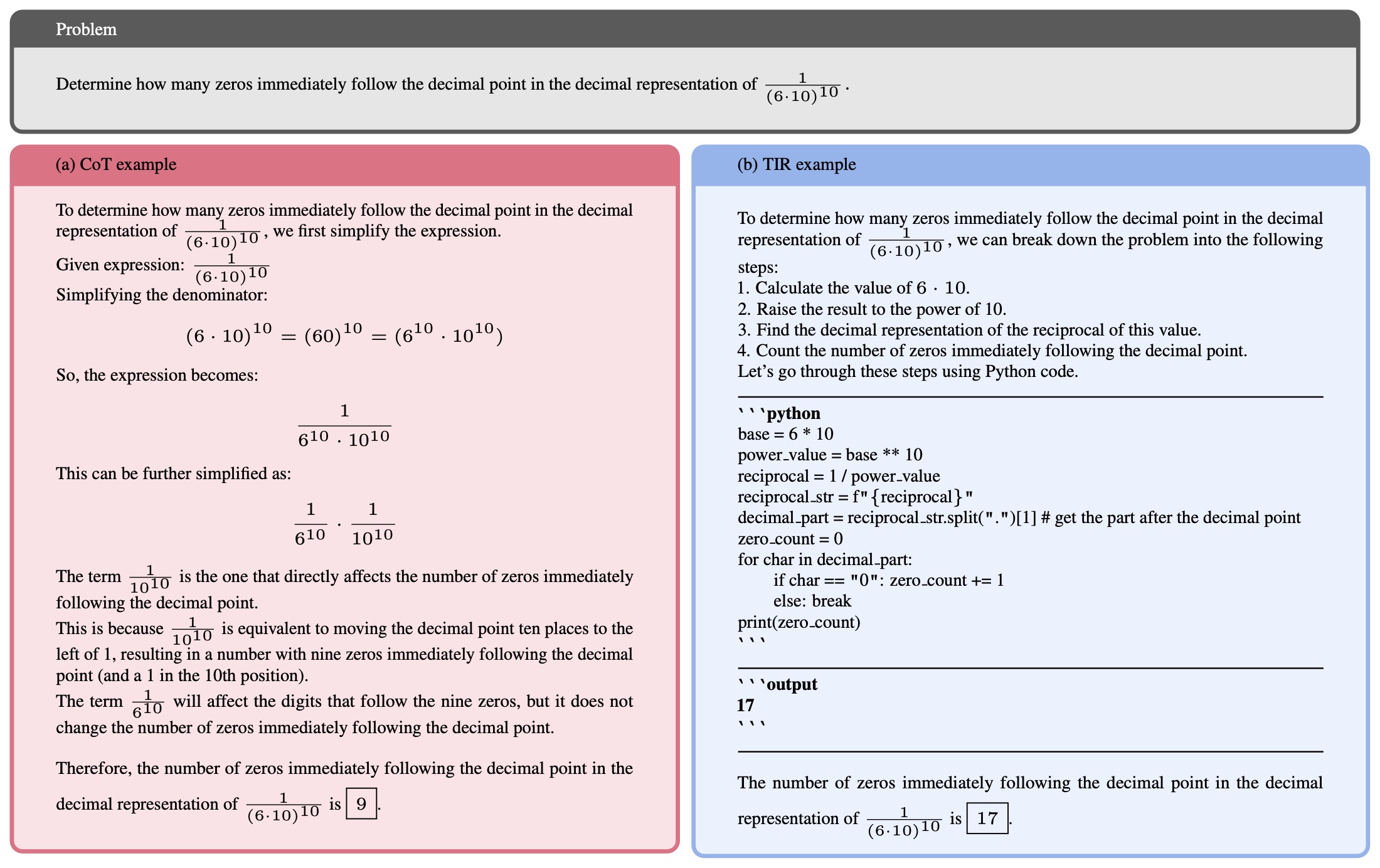

The following figure (source) shows an example of CoT and TIR solution of the problem. TIR enables the model to write code and call an interpreter to obtain the output of the executed code, and then perform further reasoning based on the execution results.

Dataset and Training Pipeline

- ToRL constructs a 28k-instance dataset from MATH, NuminaMATH, and DeepScaleR, filtering for verifiable numerical tasks and excluding open-ended proofs.

- Training is performed directly on Qwen2.5-Math base models (1.5B, 7B), without prior fine-tuning. The RL loop enables exploration of tool-use trajectories, guided solely by outcome-based rewards.

Emergent Behaviors

-

ToRL demonstrates several self-organizing cognitive behaviors:

- Code usage evolution: the share of tasks solved via code rises as RL progresses.

- Self-regulation: the model autonomously detects and avoids ineffective code patterns.