Primers • Parameter Efficient Fine-Tuning

- Overview

- Parameter-Efficient Fine-Tuning (PEFT)

- Advantages

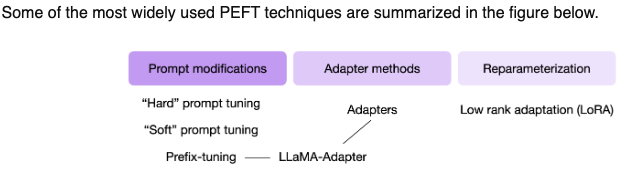

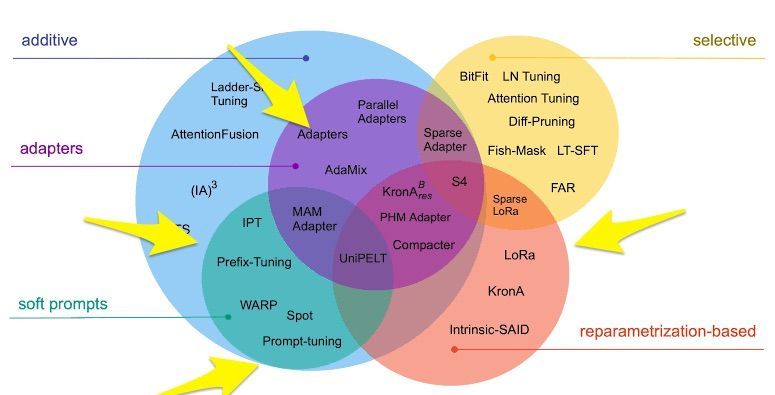

- PEFT methods

- Prompt Modifications

- Adapters

- Reparameterization

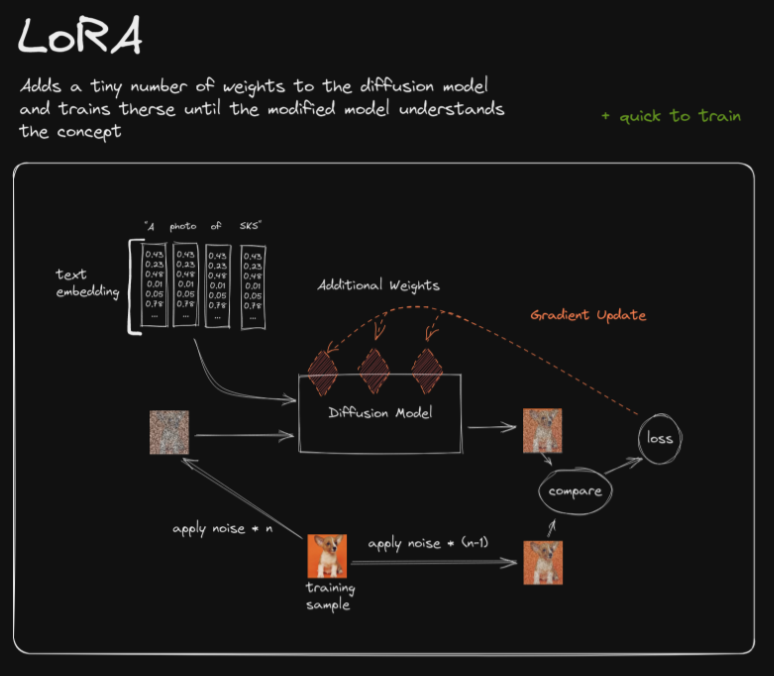

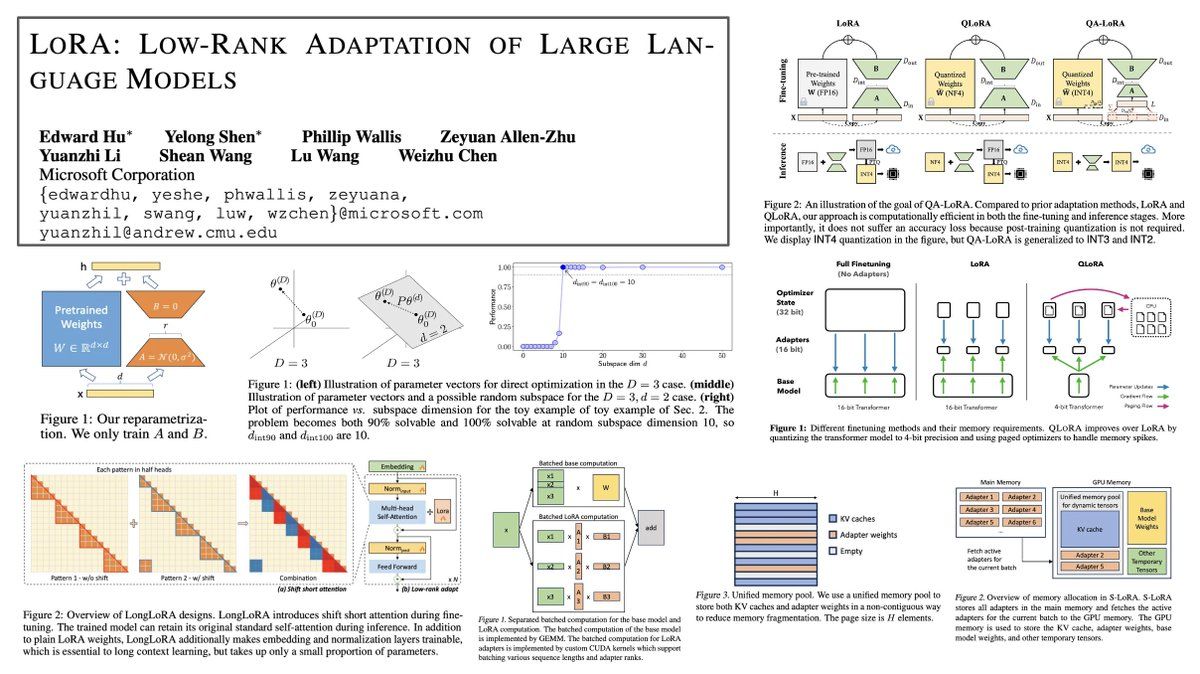

- Low-Rank Adaptation (LoRA)

- Background

- Overview

- Advantages

- Limitations

- Hyperparameters

- How does having a low-rank matrix in LoRA help the fine-tuning process?

- How does low-rank constraint introduced by LoRA inherently act as a form of regularization, especially for the lower layers of the model?

- How does LoRA help avoid catastrophic forgetting?

- How does multiplication of two low-rank matrices in LoRA lead to lower attention layers being impacted less than higher attention layers?

- In LoRA, why is \(A\) initialized using a Gaussian and \(B\) set to 0?

- For a given task, how do we determine whether to fine-tune the attention layers or feed-forward layers?

- Assuming we’re fine-tuning attention weights, which specific attention weight matrices should we apply LoRA to?

- Is there a relationship between setting scaling factor and rank in LoRA?

- How do you determine the optimal rank \(r\) for LoRA?

- How do LoRA hyperparameters interact with each other? Is there a relationship between LoRA hyperparameters?

- Why does a higher rank make it easier to overfit?

- Does LoRA adapt weights in all layers?

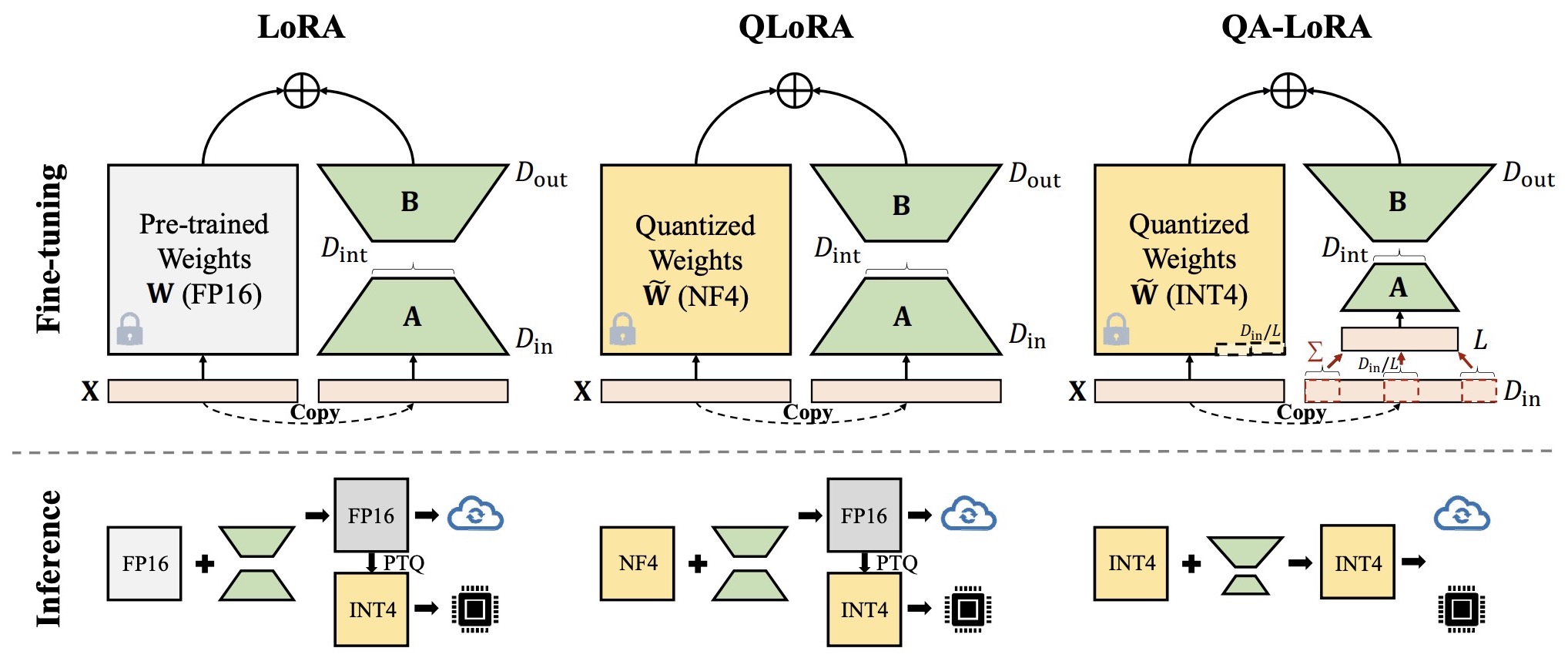

- Quantized Low-Rank Adaptation (QLoRA)

- Quantization-Aware Low-Rank Adaptation (QA-LoRA)

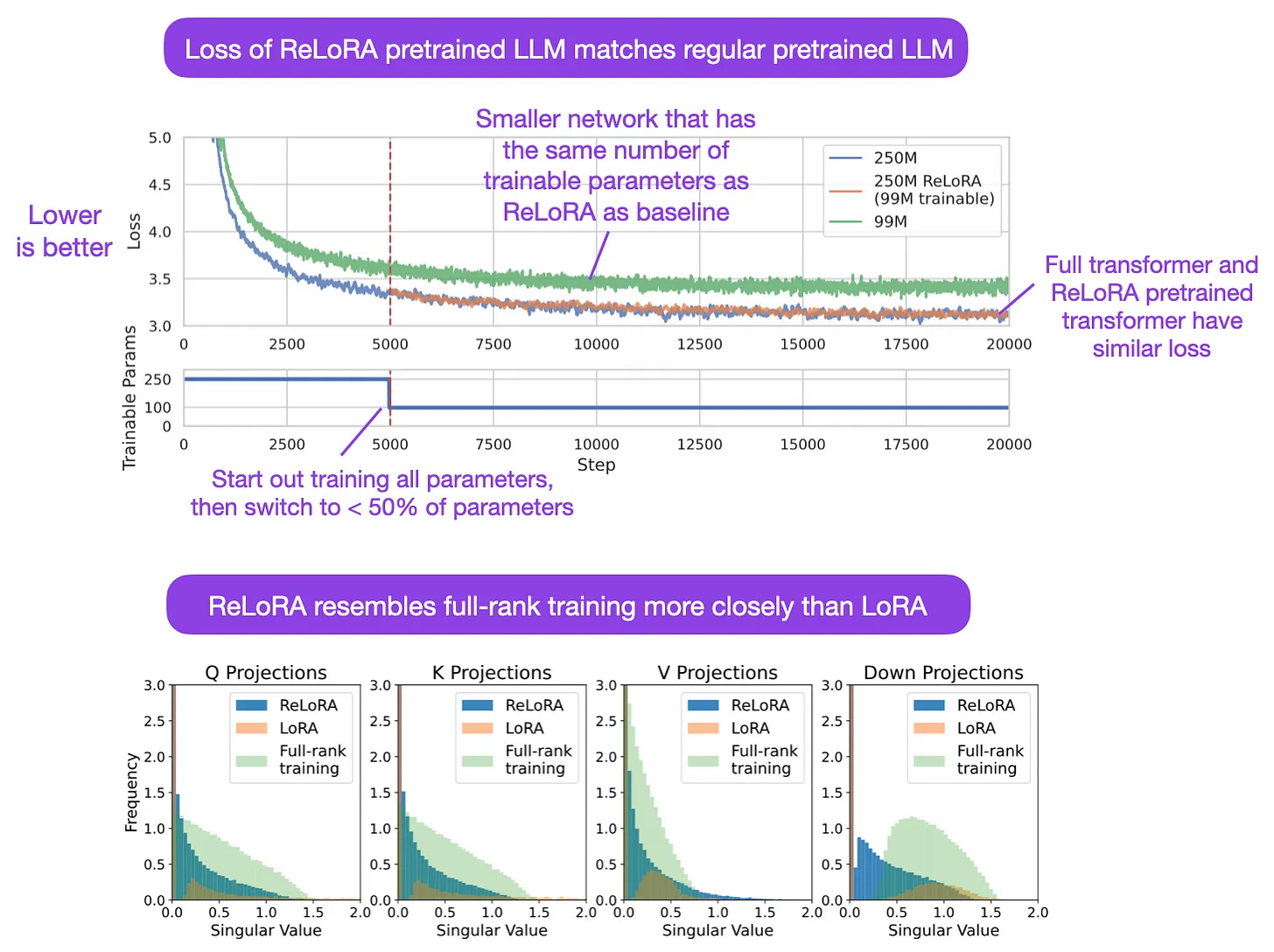

- Refined Low-Rank Adaptation (ReLoRA)

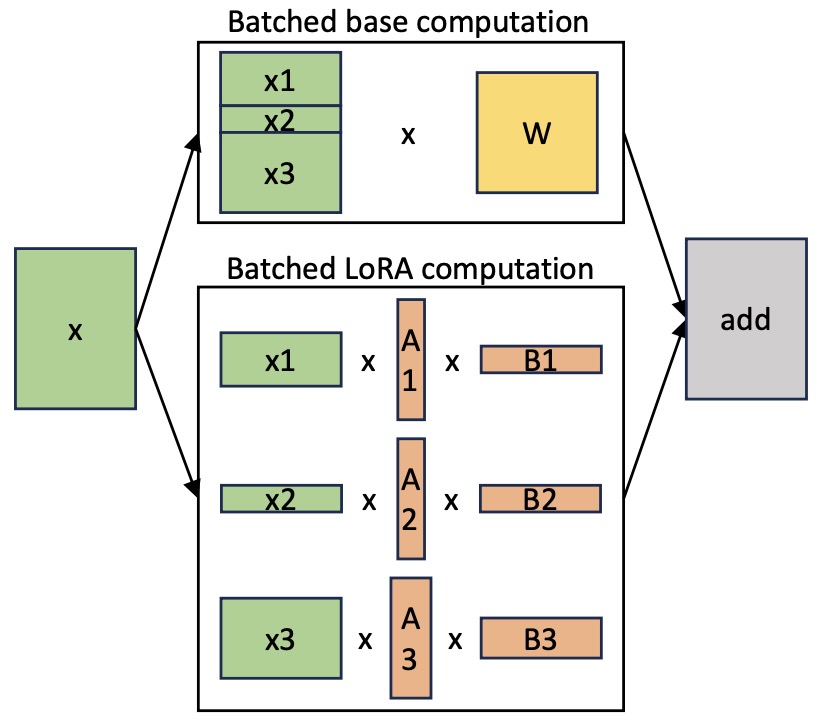

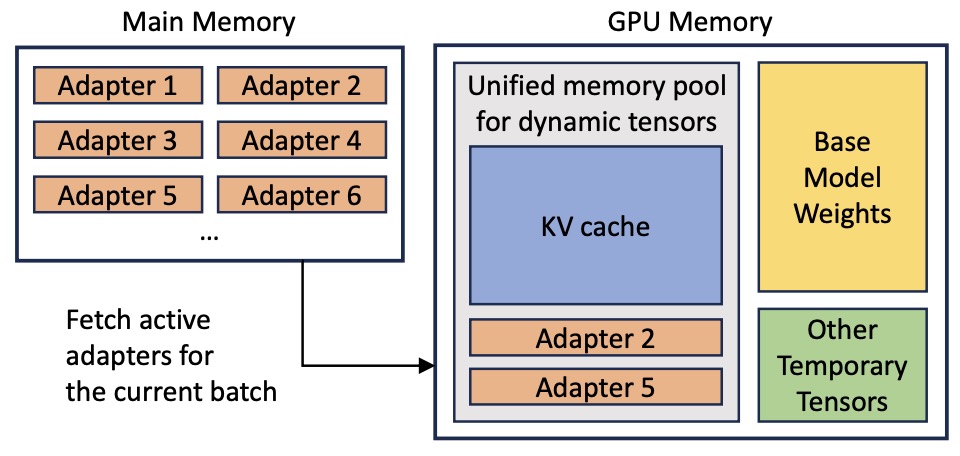

- S-LoRA: Serving Thousands of Concurrent LoRA Adapters

- Weight-Decomposed Low-Rank Adaptation (DoRA)

- Summary of LoRA Techniques

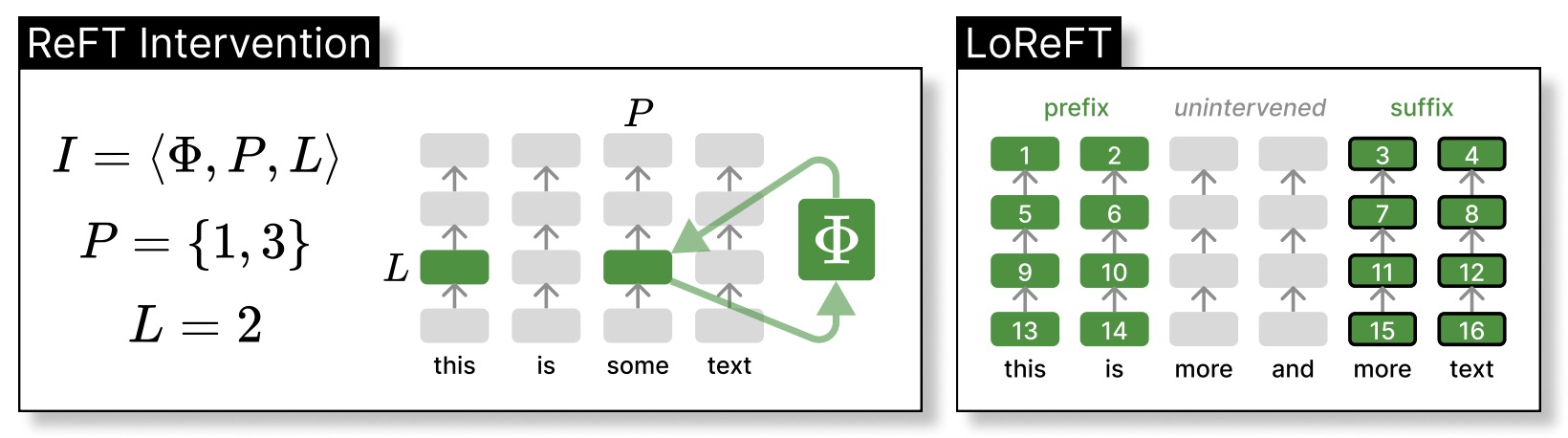

- Low-rank Linear Subspace ReFT (LoReFT)

- Stratified Progressive Adaptation Fine-tuning (SPAFIT)

- BitFit

- NOLA

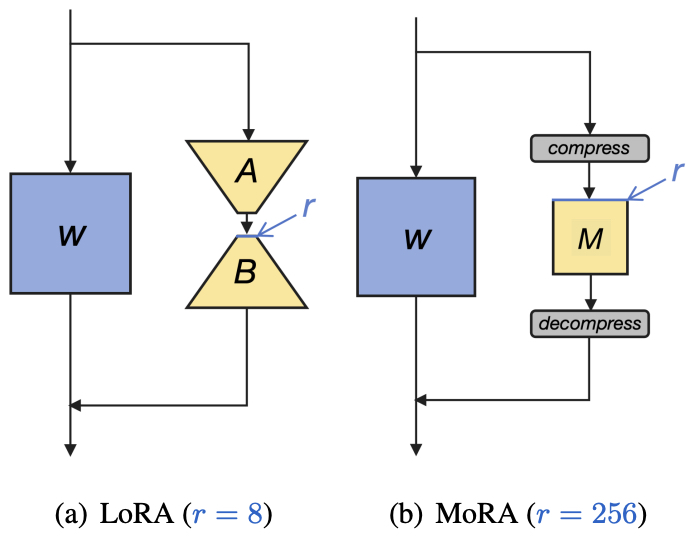

- Matrix of Rank Adaptation (MoRA)

- Low-Rank Adaptation (LoRA)

- Which PEFT Technique to Choose: A Mental Model

- Comparative Analysis of Popular PEFT Methods

- Practical Tips for Finetuning LLMs Using LoRA

- Related: Surgical fine-tuning

- References

- Citation

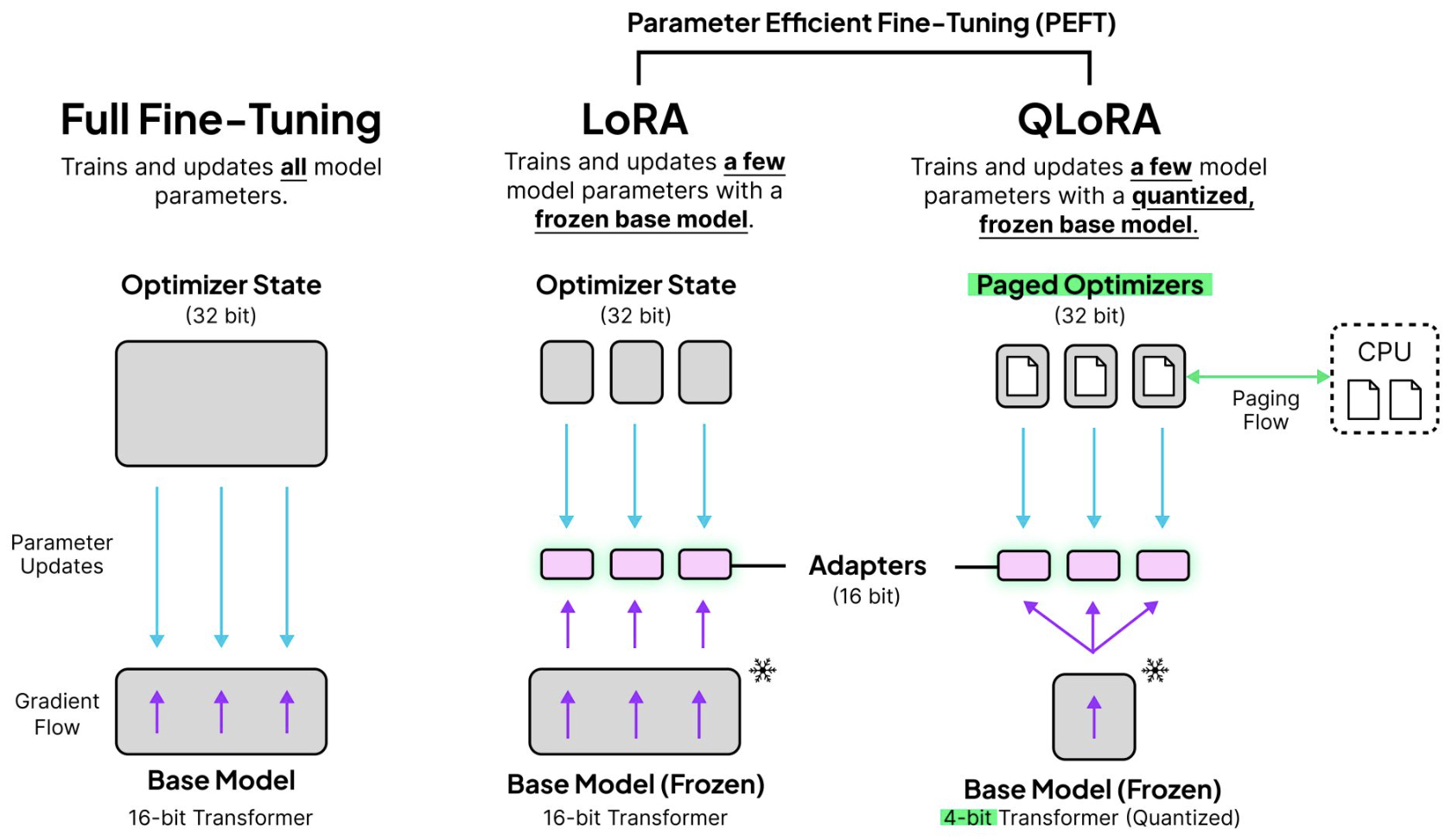

Overview

- Fine-tuning of large pre-trained models on downstream tasks is called “transfer learning”.

- While full fine-tuning pre-trained models on downstream tasks is a common, effective approach, it is an inefficient approach to transfer learning.

- The simplest way out for efficient fine-tuning could be to freeze the networks’ lower layers and adapt only the top ones to specific tasks.

- In this article, we’ll explore Parameter Efficient Fine-Tuning (PEFT) methods that enable us to adapt a pre-trained model to downstream tasks more efficiently: they train fewer parameters, and hence save cost and training time, while yielding performance similar to full fine-tuning.

Parameter-Efficient Fine-Tuning (PEFT)

- Let’s start off by defining what parameter-efficient fine-tuning is and give some context on it.

- Parameter-efficient fine-tuning is particularly used in the context of large-scale pre-trained models (such as in NLP), to adapt that pre-trained model to a new task without drastically increasing the number of parameters.

- The challenge is this: modern pre-trained models (like BERT, GPT, T5, etc.) contain hundreds of millions, if not billions, of parameters. Fine-tuning all these parameters on a downstream task, especially when the available dataset for that task is small, can easily lead to overfitting. The model may simply memorize the training data instead of learning genuine patterns. Moreover, introducing additional layers or parameters during fine-tuning can drastically increase computational requirements and memory consumption.

- As mentioned earlier, PEFT fine-tunes only a small number of model parameters while freezing most of the parameters of the pre-trained LLM. This helps mitigate the catastrophic forgetting issue that fully fine-tuned Large Language Models (LLMs) face, where the LLM forgets the original task it was trained on after being fine-tuned.

- The image below (source) gives a nice overview of PEFT and its benefits.

Advantages

- Parameter-efficient fine-tuning is useful for the following reasons:

- Reduced computational costs (requires fewer GPUs and GPU time).

- Faster training times (finishes training faster).

- Lower hardware requirements (works with cheaper GPUs with less VRAM).

- Better modeling performance (reduces overfitting).

- Less storage (majority of weights can be shared across different tasks).

Practical use-case

- Credits to the below section go to Pranay Pasula.

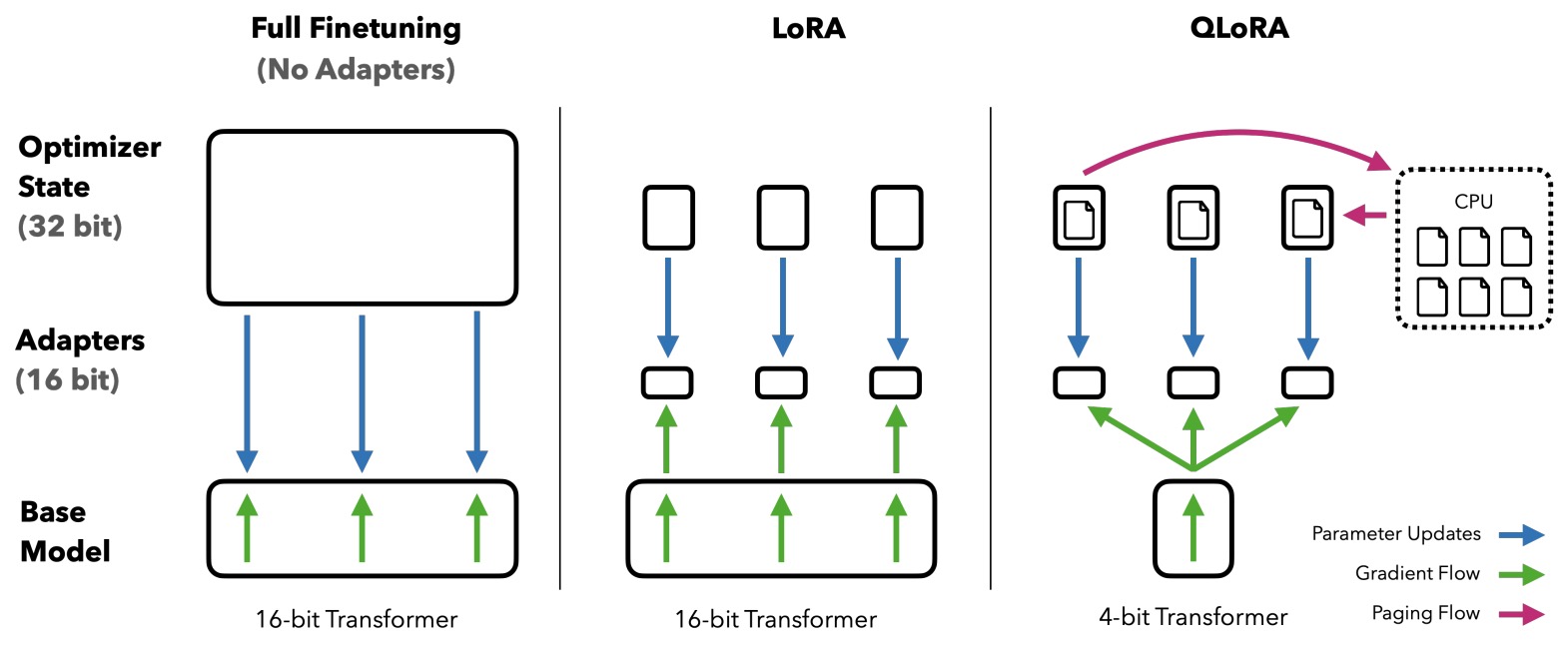

- PEFT obviates the need for 40 or 80GB A100s to make use of powerful LLMs. In other words, you can fine-tune 10B+ parameter LLMs for your desired task for free or on cheap consumer GPUs.

- Using PEFT methods like LoRA, especially 4-bit quantized base models via QLoRA, you can fine-tune 10B+ parameter LLMs that are 30-40GB in size on 16GB GPUs. If it’s out of your budget to buy a 16GB GPU/TPU, Google Colab occasionally offers a 16GB VRAM Tesla T4 for free. Remember to save your model checkpoints every now and then and reload them as necessary, in the event of a Colab disconnect/kernel crash.

- If you’re fine-tuning on a single task, the base models are already so expressive that you need only a few (tens to hundreds of) examples to perform well on this task. With PEFT via LoRA, you need to train only a trivial fraction of the parameters (in this case, 0.08%), and though the weights are stored as 4-bit, computations are still done at 16-bit.

- Note that while a good amount of VRAM is still needed for the fine-tuning process, using PEFT with a small enough batch size and a little gradient accumulation can do the trick while still retaining FP16 computation. In some cases, the performance on the fine-tuned task can be comparable to that of a fine-tuned 16-bit model.

- Key takeaway: You can fine-tune powerful LLMs to perform well on a desired task using free compute. Use a <10B parameter model, which is still huge, along with quantization, PEFT, checkpointing, and a small training set, and you can quickly fine-tune this model for your use case.
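To make the memory argument above concrete, here is a rough back-of-the-envelope sketch. It counts the memory for the model weights only and ignores activations, gradients, optimizer states, and quantization metadata, so real usage will be higher. The 7B model size and the helper name `weight_memory_gb` are illustrative assumptions, not values from the text.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9

# Hypothetical 7B-parameter base model (an assumption for illustration).
n_params = 7e9

fp16_gb = weight_memory_gb(n_params, 16)  # weights stored in 16-bit
int4_gb = weight_memory_gb(n_params, 4)   # weights stored in 4-bit (QLoRA-style)

print(f"16-bit weights: {fp16_gb:.1f} GB")
print(f" 4-bit weights: {int4_gb:.1f} GB")
```

Under these assumptions, the 4-bit weights take a quarter of the 16-bit footprint, which is what leaves room on a 16GB GPU for the adapter parameters, activations, and optimizer states.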

PEFT methods

- Below, we will look at individual PEFT methods and their nuances.

Prompt Modifications

Soft Prompt Tuning

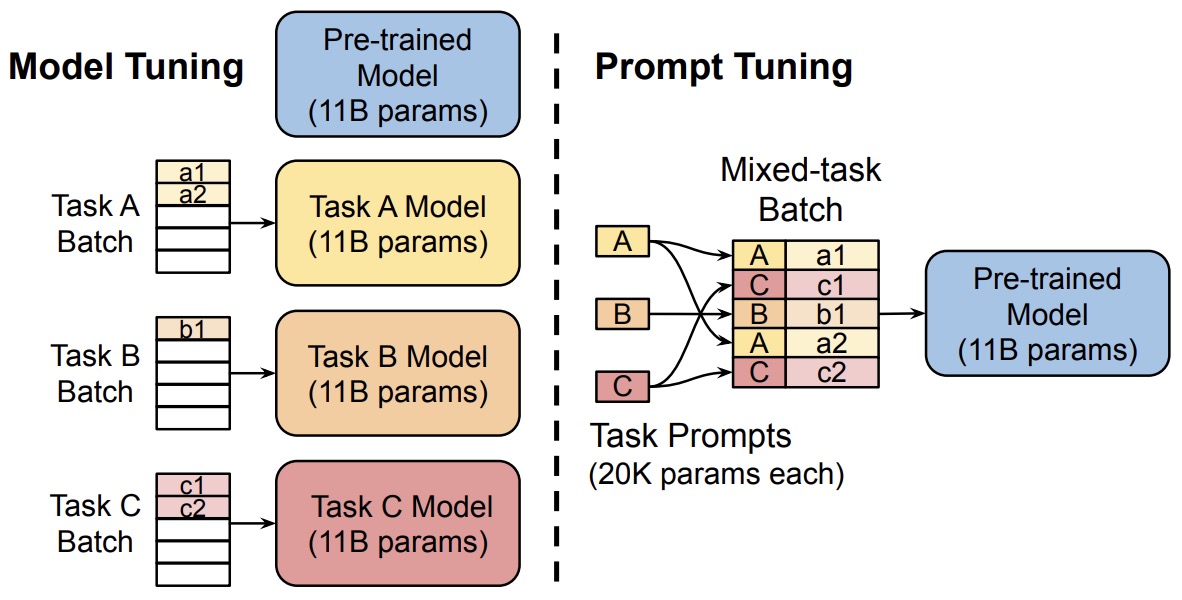

- Soft prompt tuning was first introduced in The Power of Scale for Parameter-Efficient Prompt Tuning by Lester et al. It is a simple yet effective method that prepends a trainable tensor to the model’s input embeddings, essentially creating a soft prompt to condition frozen LLMs to perform specific downstream tasks. Unlike discrete text prompts, soft prompts are learned through backpropagation and can be tuned to incorporate signals from any number of labeled examples.

- Soft prompt tuning only requires storing a small task-specific prompt for each task, and enables mixed-task inference using the original pre-trained model.

- The authors show that prompt tuning outperforms few-shot learning by a large margin, and becomes more competitive with scale.

- This is an interesting approach that can help to effectively use a single frozen model for multi-task serving.

- Model tuning requires making a task-specific copy of the entire pre-trained model for each downstream task, and inference must be performed in separate batches. Prompt tuning only requires storing a small task-specific prompt for each task, and enables mixed-task inference using the original pre-trained model. With a T5 “XXL” model, each copy of the tuned model requires 11 billion parameters. By contrast, the tuned prompts require only 20,480 parameters per task (a reduction of over five orders of magnitude), assuming a prompt length of 5 tokens.

- Thus, instead of using discrete text prompts, prompt tuning employs soft prompts. Soft prompts are learnable and conditioned through backpropagation, making them adaptable for specific tasks.

- Prompt Tuning offers many benefits such as:

- Memory-Efficiency: Prompt tuning dramatically reduces memory requirements. For instance, while a T5 “XXL” model necessitates 11 billion parameters for each task-specific model, prompt-tuned models need a mere 20,480 parameters (assuming a prompt length of 5 tokens).

- Versatility: Enables the use of a single frozen model for multi-task operations.

- Performance: Outshines few-shot learning and becomes more competitive as the scale grows.
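The parameter count quoted above can be reproduced with a short sketch. It assumes an embedding width of 4096 for T5 “XXL” (consistent with 20,480 parameters for a 5-token prompt), and the helper `prepend_soft_prompt` is a hypothetical name used only to illustrate how the trainable vectors are placed in front of the frozen model's input embeddings.

```python
# Assumed embedding width for T5 "XXL"; 5 tokens * 4096 = 20,480 matches the text.
d_model = 4096
prompt_length = 5
full_model_params = 11_000_000_000

soft_prompt_params = prompt_length * d_model
reduction_factor = full_model_params / soft_prompt_params

def prepend_soft_prompt(soft_prompt, input_embeddings):
    """Return the sequence the frozen model sees: trainable prompt vectors
    followed by the (frozen) embeddings of the actual input tokens."""
    return soft_prompt + input_embeddings

# Toy example with 3-dimensional embeddings instead of 4096.
soft_prompt = [[0.0, 0.0, 0.0] for _ in range(prompt_length)]  # trainable
input_embeddings = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]          # frozen lookup
sequence = prepend_soft_prompt(soft_prompt, input_embeddings)

print(soft_prompt_params)        # parameters stored per task
print(round(reduction_factor))   # how many times smaller than a full model copy
print(len(sequence))             # prompt tokens + input tokens
```

Only the `soft_prompt` entries would receive gradient updates during tuning; the model weights and the embedding table stay fixed.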

Soft Prompt vs. Prompting

- Soft prompt tuning and prompting a model with extra context are both methods designed to guide a model’s behavior for specific tasks, but they operate in different ways. Here’s how they differ:

- Mechanism:

- Soft Prompt Tuning: This involves introducing trainable parameters (soft prompts) that are concatenated or added to the model’s input embeddings. These soft prompts are learned during the fine-tuning process and are adjusted through backpropagation to condition the model to produce desired outputs for specific tasks.

- Prompting with Extra Context: This method involves feeding the model with handcrafted or predefined text prompts that provide additional context. There’s no explicit fine-tuning; instead, the model leverages its pre-trained knowledge to produce outputs based on the provided context. This method is common in few-shot learning scenarios where the model is given a few examples as prompts and then asked to generalize to a new example.

- Trainability:

- Soft Prompt Tuning: The soft prompts are trainable. They get adjusted during the fine-tuning process to optimize the model’s performance on the target task.

- Prompting with Extra Context: The prompts are static and not trainable. They’re designed (often manually) to give the model the necessary context for the desired task.

- Use Case:

- Soft Prompt Tuning: This method is particularly useful when there’s a need to adapt a pre-trained model to various downstream tasks without adding significant computational overhead. Since the soft prompts are learned and optimized, they can capture nuanced information necessary for the task.

- Prompting with Extra Context: This is often used when fine-tuning isn’t feasible or when working with models in a zero-shot or few-shot setting. It’s a way to leverage the vast knowledge contained in large pre-trained models by just guiding their behavior with carefully crafted prompts.

- In essence, while both methods use prompts to guide the model, soft prompt tuning involves learning and adjusting these prompts, whereas prompting with extra context involves using static, handcrafted prompts to guide the model’s behavior.

Prefix Tuning

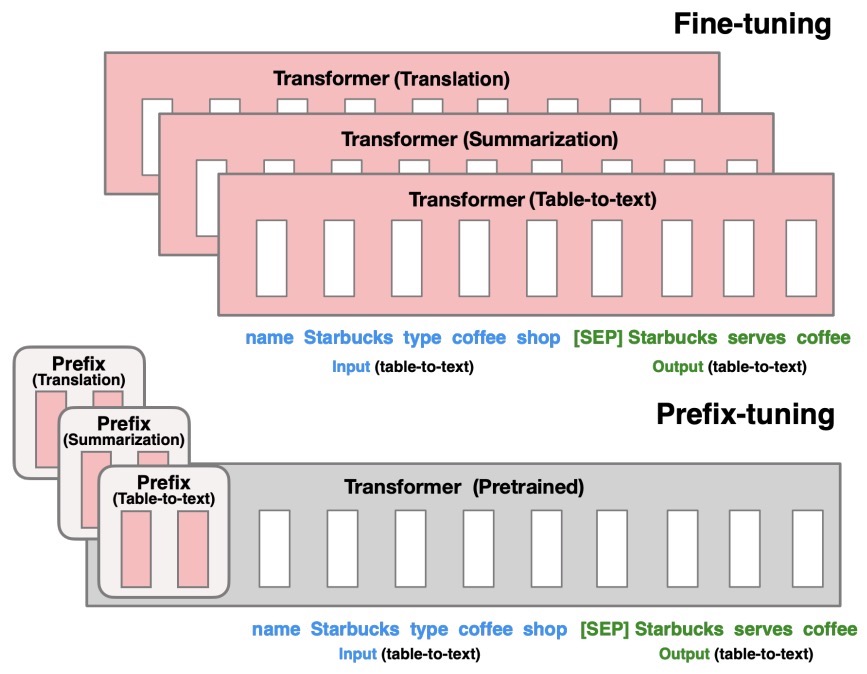

- Proposed in Prefix-Tuning: Optimizing Continuous Prompts for Generation, prefix-tuning is a lightweight alternative to fine-tuning for natural language generation tasks, which keeps language model parameters frozen, but optimizes a small continuous task-specific vector (called the prefix).

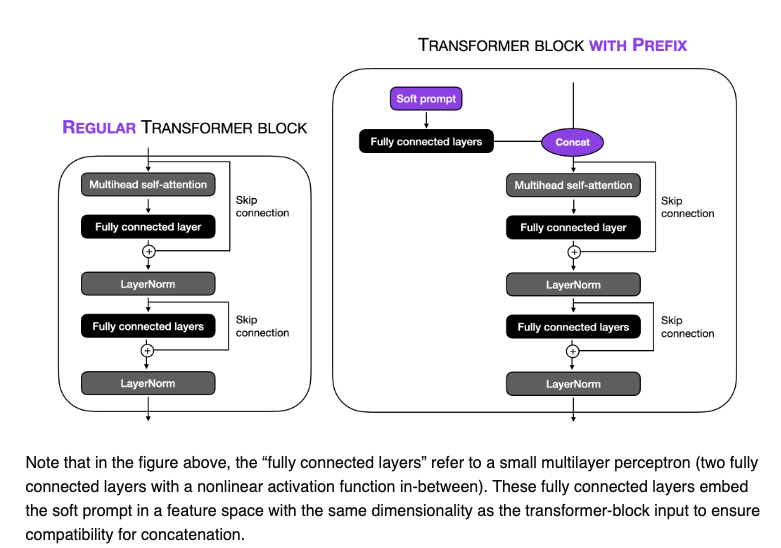

- Instead of adding a soft prompt to the model input, it prepends trainable parameters to the hidden states of all transformer blocks. During fine-tuning, the LM’s original parameters are kept frozen while the prefix parameters are updated.

- Prefix-tuning draws inspiration from prompting, allowing subsequent tokens to attend to this prefix as if it were “virtual tokens”.

- The figure below from the paper shows that fine-tuning (top) updates all Transformer parameters (the red Transformer box) and requires storing a full model copy for each task. The authors propose prefix-tuning (bottom), which freezes the Transformer parameters and only optimizes the prefix (the red prefix blocks). Consequently, prefix-tuning only needs to store the prefix for each task, making it modular and space-efficient. Note that each vertical block denotes transformer activations at one time step.

- They apply prefix-tuning to GPT-2 for table-to-text generation and to BART for summarization. They find that by learning only 0.1% of the parameters, prefix-tuning obtains comparable performance in the full data setting, outperforms fine-tuning in low-data settings, and extrapolates better to examples with topics unseen during training.

- The image below (source) illustrates how, in prefix tuning, trainable tensors are added to each transformer block instead of only to the input embedding.

Hard prompt tuning

- Hard prompt tuning directly modifies the input prompt to the model. This can involve a vast multitude of things such as:

- We can add examples of outputs we expect from the prompt

- We can add tags specifically relating to our task at hand

- In essence, it is just the modification of the string input, or prompt, to the model.

Adapters

- Adapter layers, often termed “Adapters”, add minimal additional parameters to the pretrained model. These adapters are inserted between existing layers of the network.

- Adapters are a PEFT technique shown to achieve performance similar to tuning the top layers while requiring up to two orders of magnitude fewer parameters.

- Adapter-based tuning simply inserts new modules called “adapter modules” between the layers of the pre-trained network.

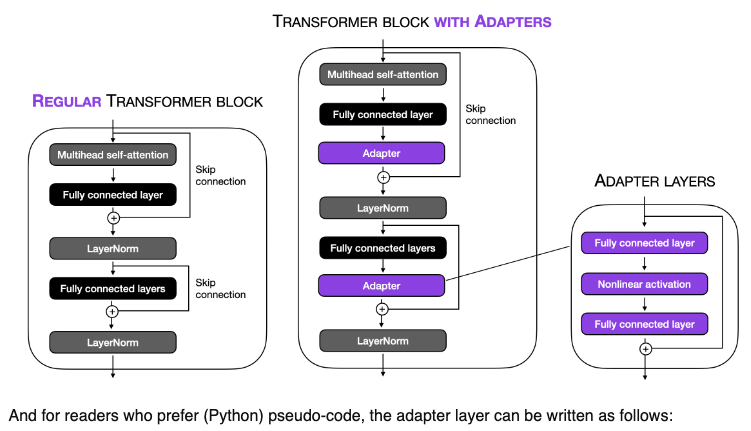

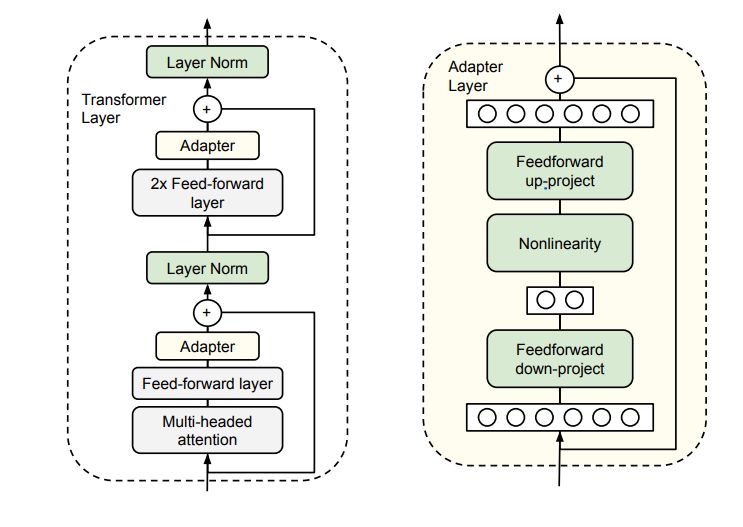

- The image below (source) illustrates this concept for the transformer block:

- During fine-tuning, only the parameters of these adapter layers are updated, while the original model parameters are kept fixed. This results in a model with a small number of additional parameters that are task-specific.

- With the full pre-trained (PT) model kept frozen, these modules are the only optimizable ones during fine-tuning. This means only very few parameters are introduced per task, yielding “compact” models.

- They offer many benefits such as:

- Parameter-Efficiency: By keeping the main model frozen and only updating the adapter layers, a minimal number of parameters are added per task. This results in compact models that are memory-efficient.

- Performance: Despite the small parameter footprint, adapters often achieve performance comparable to conventional fine-tuning.

- The adapter module consists of two fully connected layers with a bottleneck structure. This structure is inspired by autoencoders, which are designed to encode information into a compressed representation and then decode it back to its original form.

- Here’s how the parameter efficiency is achieved:

- Bottleneck Structure: The first layer of the adapter reduces the dimensionality of the input (e.g., from 1024 to 24 dimensions). This drastic reduction means that the information from the original 1024 dimensions must be compressed into just 24 dimensions. The second layer then projects these 24 dimensions back to the original 1024 dimensions.

- Reduction in Parameters: This bottleneck approach significantly reduces the number of parameters. In this example, the total number of parameters introduced by the adapter is 49,152 (from the computation 1024x24 + 24x1024, ignoring biases). If we were to use a single fully connected layer to project a 1024-dimensional input to a 1024-dimensional output directly, it would require 1,048,576 parameters (1024x1024).

- Efficiency Analysis: By using the adapter approach, the number of parameters is substantially lower. Comparing 49,152 parameters to 1,048,576 parameters shows a reduction of roughly 21x, making the adapter much more efficient in terms of parameter usage.

- Why is this Beneficial?: This efficiency is particularly beneficial when fine-tuning large pre-trained models. Instead of retraining or adapting the entire network (which would be computationally expensive and memory-intensive), adapters allow for targeted adjustments with far fewer additional parameters. This makes the process more manageable and practical, especially when resources are limited.

- The adapter’s bottleneck structure allows it to achieve similar functionality (adapting the model to new tasks or data) as a full-sized layer would, but with a significantly reduced number of parameters. This efficiency makes adapters a popular choice for fine-tuning large pre-trained models in a resource-effective manner.
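The arithmetic in the bullets above can be checked with a few lines. This is a minimal sketch; the function name `bottleneck_params` is illustrative, and biases are left out by default to match the 1024x24 + 24x1024 computation in the text.

```python
def bottleneck_params(d: int, m: int, bias: bool = False) -> int:
    """Parameters in a down-projection (d -> m) plus an up-projection (m -> d)."""
    down = d * m + (m if bias else 0)
    up = m * d + (d if bias else 0)
    return down + up

adapter_params = bottleneck_params(1024, 24)  # two small layers
dense_params = 1024 * 1024                    # one full 1024 -> 1024 layer
ratio = dense_params / adapter_params

print(adapter_params)   # 49152
print(dense_params)     # 1048576
print(round(ratio, 1))  # 21.3
```

Because the count grows linearly in the bottleneck width, halving it (say, from 24 to 12) halves the number of adapter parameters.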

What is an Adapter Module?

- Let’s look at the application of the adapter module in the transformer architecture in three points:

- The adapter module (right) first projects the original \(d\)-dimensional features into a smaller \(m\)-dimensional vector, applies a non-linearity, and then projects it back to \(d\) dimensions.

- As can be seen, the module features a skip connection. With it in place, initializing the parameters of the projection layers to near-zero yields a near-identity initialization of the module. This is required for stable fine-tuning and is intuitive: at the start of fine-tuning, the adapter essentially does not disturb what the model learned during pre-training.

- In a transformer block (left), the adapter is applied directly to the outputs of each of the layers (attention and feedforward).
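The three points above can be sketched in plain Python. This is an illustrative toy, not the paper's implementation: ReLU is used as the non-linearity, biases are omitted, and the dimensions (`d = 8`, `m = 2`) and the initialization scale are assumptions chosen to show the near-identity behavior.

```python
import random

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in M]

def relu(v):
    return [max(0.0, x) for x in v]

def init_matrix(rows, cols, scale, rng):
    """Near-zero Gaussian initialization."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

def adapter_forward(h, W_down, W_up):
    z = relu(matvec(W_down, h))                   # project d -> m, apply non-linearity
    delta = matvec(W_up, z)                       # project m -> d
    return [hi + di for hi, di in zip(h, delta)]  # skip connection

rng = random.Random(0)
d, m = 8, 2
W_down = init_matrix(m, d, 1e-3, rng)
W_up = init_matrix(d, m, 1e-3, rng)

h = [rng.uniform(-1.0, 1.0) for _ in range(d)]
out = adapter_forward(h, W_down, W_up)

# With near-zero projections the module is close to the identity map.
max_change = max(abs(o - x) for o, x in zip(out, h))
print(max_change)
```

With the skip connection removed, the same initialization would instead map every input to nearly zero, which is why the residual path matters for stable fine-tuning.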

How do you decide the value of \(m\)?

- The size \(m\) in the Adapter module determines the number of optimizable parameters and hence poses a parameter vs performance tradeoff.

- The original paper shows experimentally that performance remains fairly stable across varying adapter sizes \(m\), and hence, for a given model, a fixed size can be used for all downstream tasks.

LLaMA-Adapters

-

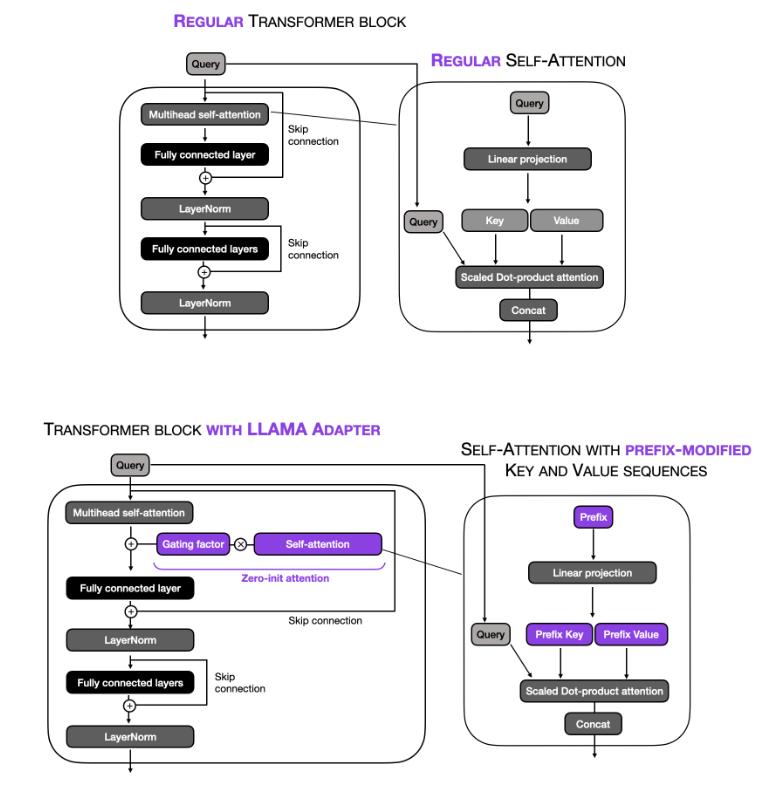

This paper introduces an efficient fine-tuning method called LLaMA-Adapter. This method is designed to adapt the LLaMA model into an instruction-following model with high efficiency in terms of resource usage and time. Key aspects of this paper include:

- Parameter Efficiency: LLaMA-Adapter introduces only 1.2 million learnable parameters on top of the frozen LLaMA 7B model, which is significantly fewer than the full 7 billion parameters of the model. This approach leads to a more efficient fine-tuning process both in terms of computational resources and time, taking less than one hour on 8 A100 GPUs.

- Learnable Adaption Prompts: The method involves appending a set of learnable adaption prompts to the input instruction tokens in the higher transformer layers of LLaMA. These prompts are designed to adaptively inject new instructions into the frozen LLaMA while preserving its pre-trained knowledge, effectively guiding the subsequent contextual response generation.

- Zero-initialized Attention Mechanism: To avoid disturbances from randomly initialized adaption prompts, which can harm fine-tuning stability and effectiveness, the paper proposes a zero-initialized attention mechanism with a learnable gating factor. This mechanism allows for a stable learning process and progressive incorporation of instructional signals during training. It ensures that the newly acquired instructional signals are effectively integrated into the transformer while retaining the pre-trained knowledge of LLaMA.

- Generalization and Multi-modal Reasoning: LLaMA-Adapter is not only effective for language tasks but can also be extended to multi-modal instructions, allowing for image-conditioned LLaMA models. This capability enables superior reasoning performance on benchmarks like ScienceQA and COCO Caption. Additionally, the approach has demonstrated strong generalization capacity in traditional vision and language tasks.

- In summary, the LLaMA-Adapter represents a significant advancement in the field of parameter-efficient fine-tuning of large LLMs. Its innovative use of learnable adaption prompts and zero-initialized attention mechanism provides a highly efficient method for adapting pre-trained models to new tasks and domains, including multi-modal applications.

- The image below (source) illustrates this concept.

Reparameterization

Low-Rank Adaptation (LoRA)

Background

Rank of a Matrix

- The rank of a matrix is a measure of the number of linearly independent rows or columns in the matrix.

- If a matrix has rank 1, it means all rows or all columns can be represented as multiples of each other, so there’s essentially only one unique “direction” in the data.

- A full-rank matrix has rank equal to the smallest of its dimensions (number of rows or columns), meaning all rows and columns are independent.

- On a related note, a matrix is said to be rank-deficient if it does not have full rank. The rank deficiency of a matrix is the difference between the lesser of the number of rows and columns, and the rank. For more, refer to Wikipedia: Rank.

- Example:

- Consider the following 3x3 matrix \(A\):

- Step-by-Step to Determine the Rank:

- Row Reduction: To find the rank, we can use Gaussian elimination to transform the matrix into its row echelon form, making it easier to see linearly independent rows. After row-reducing \(A\), we get the row echelon form:

\[\begin{bmatrix} 1 & 2 & 3 \\ 0 & -3 & -6 \\ 0 & 0 & 0 \end{bmatrix}\]

- Count Independent Rows: Now we look at the rows with non-zero entries:

- The first row \([1, 2, 3]\) is non-zero.

- The second row \([0, -3, -6]\) is also non-zero and independent of the first row.

- The third row is all zeros, which does not contribute to the rank.

- Since there are two non-zero, independent rows in the row echelon form, the rank of \(A\) is 2.

- Explanation:

- The rank of 2 indicates that only two rows or columns in \(A\) contain unique information, and the third row (or column) can be derived from a combination of the other two. Essentially, this matrix can be thought of as existing in a 2-dimensional space rather than a full 3-dimensional space, despite its 3x3 size.

- In summary:

- The rank of matrix \(A\) is 2.

- This rank tells us the matrix’s actual dimensionality in terms of its independent information.
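The row-reduction procedure above can be sketched as a small rank routine. The input matrix used here, \([[1, 2, 3], [4, 5, 6], [7, 8, 9]]\), is an assumption: it is one matrix whose row echelon form matches the one shown in the example. The function name `matrix_rank` is illustrative, and exact fractions are used to avoid floating-point pivoting issues.

```python
from fractions import Fraction

def matrix_rank(rows):
    """Rank via Gaussian elimination to row echelon form, using exact arithmetic."""
    M = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(M), len(M[0])
    rank = 0
    for col in range(n_cols):
        # Find a row at or below the current pivot row with a non-zero entry.
        pivot = next((r for r in range(rank, n_rows) if M[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        M[rank], M[pivot] = M[pivot], M[rank]
        # Eliminate the entries below the pivot.
        for r in range(rank + 1, n_rows):
            factor = M[r][col] / M[rank][col]
            M[r] = [a - factor * b for a, b in zip(M[r], M[rank])]
        rank += 1
        if rank == n_rows:
            break
    return rank

# Assumed example matrix (reduces to rows [1, 2, 3], [0, -3, -6], [0, 0, 0]).
A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(matrix_rank(A))  # 2
```

For this assumed matrix, the third row equals twice the second row minus the first, which is why elimination leaves a row of zeros.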

Related: Rank of a Tensor

- While LoRA injects trainable low-rank matrices, it is important to understand rank in the context of tensors as well.

- The rank of a tensor refers to the number of dimensions in the tensor. This is different from the rank of a matrix, which relates to the number of linearly independent rows or columns. For tensors, rank simply tells us how many dimensions or axes the tensor has.

- Explanation with Examples:

- Scalar (Rank 0 Tensor):

- A scalar is a single number with no dimensions.

- Example: `5` or `3.14`

- Shape: `()` (no dimensions)

- Rank: 0

- Vector (Rank 1 Tensor):

- A vector is a one-dimensional array of numbers.

- Example: `[3, 7, 2]`

- Shape: `(3,)` (one dimension with 3 elements)

- Rank: 1

- Matrix (Rank 2 Tensor):

- A matrix is a two-dimensional array of numbers, like a table.

- Example: \(\begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \end{bmatrix}\)

- Shape: `(2, 3)` (two dimensions: 2 rows, 3 columns)

- Rank: 2

- 3D Tensor (Rank 3 Tensor):

- A 3D tensor can be thought of as a “stack” of matrices, adding a third dimension.

- Example: \(\begin{bmatrix} \begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \end{bmatrix}, \begin{bmatrix} 7 & 8 & 9 \\ 10 & 11 & 12 \end{bmatrix} \end{bmatrix}\)

- Shape: `(2, 2, 3)` (three dimensions: 2 matrices, each with 2 rows and 3 columns)

- Rank: 3

- 4D Tensor (Rank 4 Tensor):

- A 4D tensor might represent multiple “stacks” of 3D tensors.

- Example: In deep learning, a 4D tensor is commonly used to represent batches of color images, with dimensions `[batch size, channels, height, width]`.

- Shape: `(10, 3, 64, 64)` for a batch of 10 images, each with 3 color channels and a resolution of 64x64.

- Rank: 4

- General Rule:

- Rank = Number of dimensions (or axes) of the tensor.

- Why Rank Matters:

- The rank of a tensor tells us about its structural complexity and the data it can represent. Higher-rank tensors can represent more complex data structures, which is essential in fields like deep learning, physics simulations, and data science for handling multi-dimensional data.
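As a quick illustration of the general rule, the sketch below computes the shape and rank of tensors represented as nested Python lists. The helper names `shape_of` and `tensor_rank` are illustrative, and the code assumes the nesting is regular and non-empty at every level.

```python
def shape_of(tensor):
    """Shape of a regularly nested, non-empty list; a bare number has shape ()."""
    shape = []
    while isinstance(tensor, list):
        shape.append(len(tensor))
        tensor = tensor[0]  # descend along the first element of each axis
    return tuple(shape)

def tensor_rank(tensor):
    """Tensor rank = number of axes."""
    return len(shape_of(tensor))

scalar = 3.14
vector = [3, 7, 2]
matrix = [[1, 2, 3], [4, 5, 6]]
cube = [[[1, 2, 3], [4, 5, 6]], [[7, 8, 9], [10, 11, 12]]]

for t in (scalar, vector, matrix, cube):
    print(shape_of(t), tensor_rank(t))
```

Note that the matrix above has tensor rank 2 because it has two axes; its matrix rank is a separate property that depends on how many of its rows are linearly independent.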

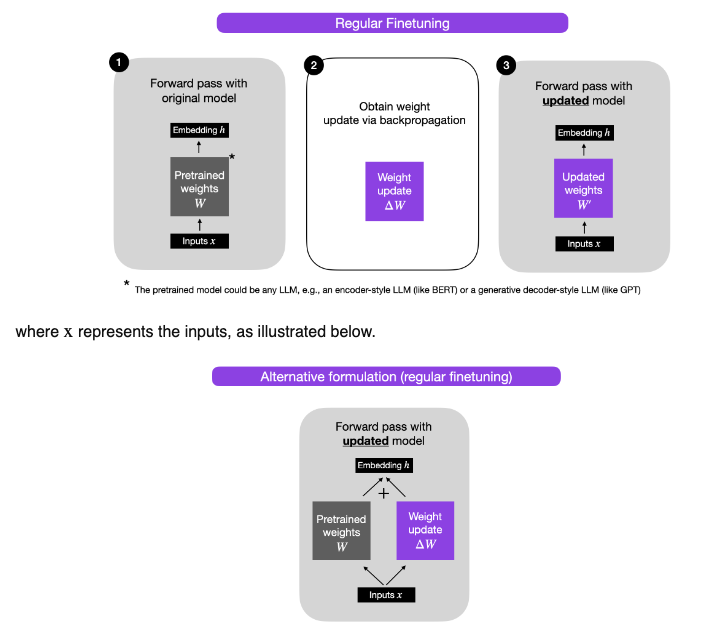

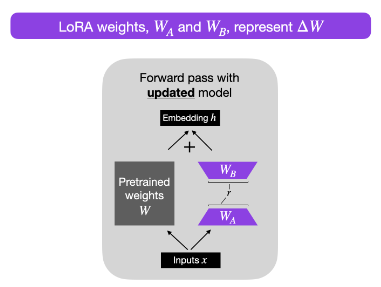

Overview

- Intrinsic Rank Hypothesis:

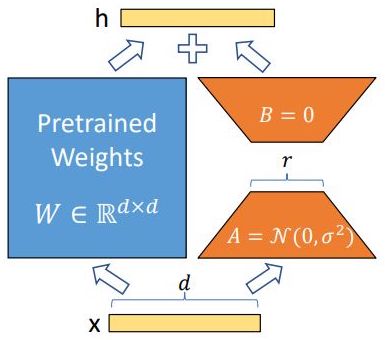

- Low-Rank Adaptation (LoRA) is motivated by the hypothesis that the updates to a model’s weights during task-specific adaptation exhibit a low “intrinsic rank.” This suggests that the weight changes required for effective adaptation are inherently low-dimensional. Thus, LoRA constrains these updates by representing them through low-rank decomposition matrices, enabling efficient adaptation without fine-tuning all model parameters.

- As illustrated in the figure below from the paper, LoRA leverages this by introducing two trainable low-rank matrices, \(A\) and \(B\), which capture the adaptation. \(A\) (the down-projection matrix) projects the input into a lower-dimensional subspace, while \(B\) (the up-projection matrix) maps it back to the original dimension. Per the LoRA paper by Hu et al. (2021), the matrix \(A\) is initialized with random Gaussian noise (i.i.d. samples from a normal distribution), while the matrix \(B\) is initialized to zeros so that \(\Delta W = BA\) starts as the zero matrix. During training, the product \(BA\) forms a low-rank update that is added to the original, pre-trained weight matrix \(W\), so the adapted output for an input \(x\) is \(h = Wx + BAx\). This approach allows for efficient adaptation by training only the small matrices \(A\) and \(B\) while keeping the pre-trained weights \(W\) frozen.

- This product, \(BA\), is low-rank because the rank of a matrix product is at most the minimum rank of the two factors. For instance, if \(B\) is a \(d \times r\) matrix and \(A\) is an \(r \times d\) matrix, where \(r\) is much smaller than \(d\), the resulting product \(BA\) will have a maximum rank of \(r\), regardless of how large \(d\) is. This means the update to \(W\) is constrained to a lower-dimensional space, efficiently capturing the essential information needed for adaptation.

- For example, if \(d = 1000\) and \(r = 2\), the update matrix \(BA\) will have a rank of at most 2 (since the rank of a product cannot exceed the rank of its factors), significantly reducing the number of parameters and the computational overhead required for fine-tuning. This means \(BA\) is a low-rank approximation that captures only the most essential directions needed for adaptation, thus enabling efficient updates without full matrix adjustments while keeping the pre-trained weights \(W\) frozen.
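The setup described above can be sketched in plain Python. This is a toy illustration under stated assumptions: square \(d \times d\) weights, a tiny `d = 6` for the forward pass, no scaling factor on the update, and illustrative helper names (`lora_forward`, `matvec`). It shows that with \(B\) initialized to zeros the adapted layer initially reproduces the frozen layer exactly, and it reproduces the parameter count for \(d = 1000\), \(r = 2\).

```python
import random

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in M]

def lora_forward(x, W0, A, B):
    """h = W0 x + B (A x): frozen path plus low-rank update path."""
    base = matvec(W0, x)
    update = matvec(B, matvec(A, x))  # d -> r -> d
    return [b + u for b, u in zip(base, update)]

rng = random.Random(0)
d, r = 6, 2
W0 = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(d)]   # frozen
A = [[rng.gauss(0.0, 0.01) for _ in range(d)] for _ in range(r)]   # Gaussian init
B = [[0.0] * r for _ in range(d)]                                  # zero init
x = [rng.uniform(-1.0, 1.0) for _ in range(d)]

h = lora_forward(x, W0, A, B)
print(h == matvec(W0, x))  # the update BA is zero at initialization

# Parameter count for the example in the text: d = 1000, r = 2.
full_params = 1000 * 1000      # a dense d x d update
lora_params = 2 * 1000 * 2     # B (d x r) plus A (r x d)
print(full_params, lora_params)
```

Because the update path passes through an \(r\)-dimensional vector `A x`, whatever \(B\) and \(A\) become during training, the matrix \(BA\) can never have rank greater than \(r\).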

- Process:

- LoRA fundamentally changes the approach to fine-tuning large neural networks by introducing a method to decompose high-dimensional weight matrices into lower-dimensional forms, preserving essential information while reducing computational load. Put simply, LoRA efficiently fine-tunes large-scale neural networks by introducing trainable low-rank matrices, simplifying the model’s complexity while retaining its robust learning capabilities.

- LoRA is similar to methods like Principal Component Analysis (PCA) and Singular Value Decomposition (SVD), leveraging the observation that weight updates during fine-tuning are often rank-deficient. By imposing a low-rank constraint on these updates, LoRA enables a lightweight adaptation process that captures the most critical directions or “components” in the weight space. This means the model can retain essential knowledge from its pre-training phase while focusing on the specific nuances of the new task, resulting in efficient adaptation with a smaller memory and computational footprint.

- During backpropagation, only the low-rank LoRA matrices (\(A\) and \(B\)) receive gradient updates; the original pretrained weights remain frozen. Gradients flow through the combined effective weight (\(W = W_0 + BA\)), but only the LoRA-specific parameters (\(A\) and \(B\)) have `requires_grad=True`, ensuring that only these low-rank components are optimized while the base model weights remain unchanged.
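As a worked sketch of the gradient flow described above, consider a single adapted layer \(h = W_0 x + BAx\) with loss \(\mathcal{L}\), and write \(g = \partial \mathcal{L} / \partial h\) for the gradient arriving from later layers. The symbols \(\mathcal{L}\) and \(g\) are notation introduced here for illustration; biases and any scaling factor on the update are omitted. Applying the chain rule gives:

\[\frac{\partial \mathcal{L}}{\partial B} = g \, (A x)^{\top} \in \mathbb{R}^{d \times r}, \qquad \frac{\partial \mathcal{L}}{\partial A} = B^{\top} g \, x^{\top} \in \mathbb{R}^{r \times d}\]

No gradient needs to be stored or applied for \(W_0\), since it is frozen. One consequence of the initialization is that with \(B = 0\), the gradient with respect to \(A\) is zero on the very first step while the gradient with respect to \(B\) is generally non-zero, so \(B\) moves away from zero first and \(A\) starts receiving updates afterwards.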

- Application:

- LoRA’s primary application is in the efficient fine-tuning of large neural networks, particularly in transformer-based architectures. In practice, LoRA identifies key dimensions within the original weight matrix that are crucial for a specific task, significantly reducing the adaptation’s dimensionality.

- Instead of fine-tuning all weights, which is computationally prohibitive for large models, LoRA introduces two trainable low-rank matrices, \(A\) and \(B\), in specific layers of the transformer (e.g., in the query and value projections of the attention mechanism). These matrices are optimized during training while keeping the core model parameters frozen. This architecture adjustment means that only a fraction of the original parameters are actively updated, thus lowering memory usage and enabling faster computations.

- By focusing only on the most relevant dimensions for each task, LoRA enables the deployment of large models on hardware with limited resources and facilitates task-switching by updating only the low-rank matrices without retraining the entire model.

- Benefits:

- LoRA provides several advantages that make it particularly suitable for practical use in industry and research settings where resource constraints are a concern. The primary benefit is in memory and computational efficiency.

- By keeping the majority of the model’s parameters frozen and only updating the low-rank matrices, LoRA significantly reduces the number of trainable parameters, leading to lower memory consumption and faster training times. This reduction in training complexity also means that fewer GPUs or lower-spec hardware can be used to fine-tune large models, broadening accessibility.

- Additionally, LoRA avoids introducing any additional latency during inference because, once the low-rank matrices have been trained, they can be merged back into the pre-trained weights without altering the architecture.

- This setup makes LoRA ideal for environments where models need to switch between tasks frequently, as only the task-specific low-rank weights need to be loaded, allowing the model to quickly adapt to new tasks or datasets with minimal overhead.

- In Summary:

- LoRA represents a highly efficient approach to fine-tuning, striking a balance between the depth of knowledge encapsulated in large pre-trained models and the need for targeted adaptation to new tasks or data.

- By leveraging the low intrinsic rank of weight updates, LoRA retains the model’s robustness and generalization capability while allowing for quick, cost-effective adaptations. This approach redefines efficiency in the era of massive LLMs, making it feasible to use and adapt large-scale pre-trained models across various applications and domains without the extensive computational burden associated with traditional fine-tuning.

- As a recap of traditional finetuning vs. LoRA (source):

- LoRA Without Regret by John Schulman of Thinking Machines shows that, when applied to all layers with the right hyperparameters, LoRA can match full fine-tuning in performance and sample efficiency across most post-training scenarios while being significantly more compute- and memory-efficient.

Advantages

Parameter Efficiency

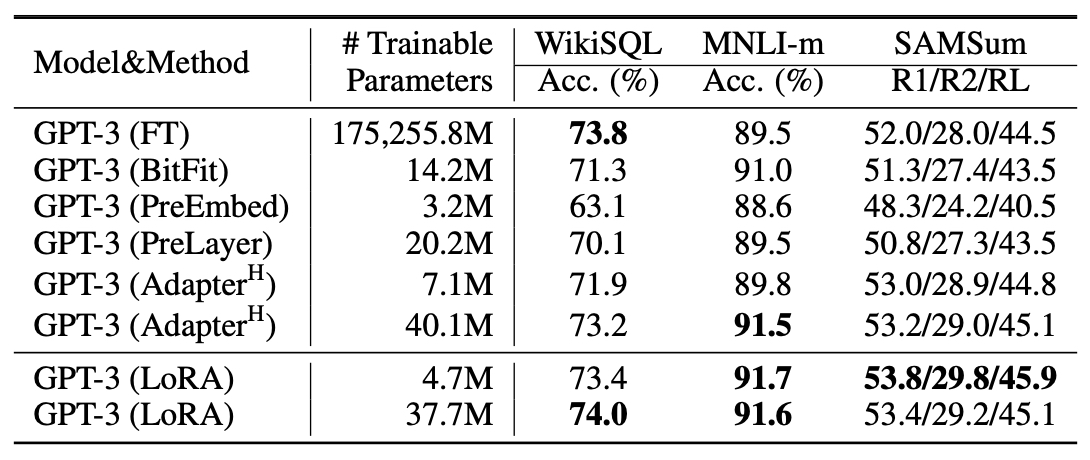

- Compared to full fine-tuning GPT-3 175B with Adam, LoRA can reduce the number of trainable parameters by 10,000 times. Specifically, this means that LoRA only fine-tunes approximately 0.01% of the parameters of the original model.

- The table below from the LoRA paper (parameter counts in millions) indicates that for GPT-3 with LoRA, we only fine-tune \(\frac{4.7}{175255} \times 100 \approx 0.003\%\) and \(\frac{38}{175255} \times 100 \approx 0.02\%\) of the parameters.
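The trainable-parameter counts above can be approximately reproduced from the model dimensions. This sketch assumes the GPT-3 175B configuration of 96 layers with hidden size 12288, LoRA applied only to the query and value projections, and ranks 1 and 8; these rank choices are assumptions picked because they reproduce counts of roughly 4.7M and 37.7M. The function name `lora_trainable_params` is illustrative.

```python
def lora_trainable_params(n_layers, d_model, rank, matrices_per_layer=2):
    """LoRA parameters when each adapted d_model x d_model matrix gets
    A (rank x d_model) and B (d_model x rank)."""
    per_matrix = 2 * d_model * rank
    return n_layers * matrices_per_layer * per_matrix

N_LAYERS, D_MODEL = 96, 12288   # assumed GPT-3 175B configuration
FULL_PARAMS = 175_255e6         # full fine-tuning count used in the text (175,255M)

for rank in (1, 8):             # assumed ranks for the two table rows
    n = lora_trainable_params(N_LAYERS, D_MODEL, rank)
    pct = n / FULL_PARAMS * 100
    print(f"r={rank}: {n:,} trainable parameters ({pct:.4f}% of the full model)")
```

Under these assumptions, the count scales linearly with the rank and with the number of adapted matrices per layer, so the trainable fraction stays in the range of thousandths to hundredths of a percent.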

GPU Memory (and Storage) Savings

- Compared to full fine-tuning GPT-3 175B with Adam, LoRA can reduce the GPU memory requirement by 3 times. Specifically, this means that LoRA fine-tunes the original model with 33% of the memory.

- For a large Transformer trained with Adam, LoRA reduces VRAM usage by up to two-thirds by avoiding the need to store optimizer states for the frozen parameters. On GPT-3 175B, VRAM consumption during training drops from 1.2TB to 350GB. When adapting only the query and value projection matrices with a rank \(r = 4\), the checkpoint size decreases significantly from approximately 350GB to 35MB. This efficiency allows training with significantly fewer GPUs and avoids I/O bottlenecks.
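The checkpoint sizes quoted above are consistent with a simple estimate. This sketch assumes 2 bytes per parameter (16-bit storage), 96 layers with hidden size 12288 for GPT-3 175B, and LoRA with \(r = 4\) on the query and value projections only; the variable names are illustrative.

```python
BYTES_PER_PARAM = 2              # assumes 16-bit storage
N_LAYERS, D_MODEL = 96, 12288    # assumed GPT-3 175B configuration
RANK = 4
ADAPTED_MATRICES_PER_LAYER = 2   # query and value projections

# Full checkpoint: every one of the ~175B parameters is stored.
full_checkpoint_gb = 175e9 * BYTES_PER_PARAM / 1e9

# LoRA checkpoint: only A (r x d) and B (d x r) for each adapted matrix.
lora_params = N_LAYERS * ADAPTED_MATRICES_PER_LAYER * (2 * D_MODEL * RANK)
lora_checkpoint_mb = lora_params * BYTES_PER_PARAM / 1e6

print(f"full checkpoint: {full_checkpoint_gb:.0f} GB")
print(f"LoRA checkpoint: {lora_checkpoint_mb:.1f} MB ({lora_params:,} parameters)")
```

The estimate lands within a few megabytes of the roughly 35MB figure, a reduction of about four orders of magnitude relative to the full checkpoint.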

Efficient Task Switching

- Task switching is more cost-effective as only the LoRA weights need swapping, enabling the creation of numerous customized models that can be dynamically swapped on machines storing the pre-trained weights in VRAM.

Faster Training Speed

- Training speed also improves by 25% compared to full fine-tuning, as the gradient calculation for the vast majority of the parameters is unnecessary.

No Additional Inference Latency

- LoRA ensures no additional inference latency when deployed in production by allowing explicit computation and storage of the combined weight matrix \(W = W_0 + BA\). During inference, this approach uses the pre-computed matrix \(W\), which includes the original pre-trained weights \(W_0\) and the low-rank adaptation matrices \(B\) and \(A\). This method eliminates the need for dynamic computations during inference.

- When switching to another downstream task, the pre-trained weights \(W_0\) can be recovered by subtracting the current low-rank product \(BA\) from \(W\); adding a different task-specific product \(B' A'\) then yields the weights for the new task. This operation incurs minimal memory overhead and allows for efficient task switching without impacting inference speed. By merging the low-rank matrices with the pre-trained weights in advance, LoRA avoids the extra computational burden during real-time inference (unlike adapters), ensuring latency remains on par with that of fully fine-tuned models.
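As a minimal sketch of merging and task switching, the NumPy snippet below uses tiny random matrices (the sizes and variable names are illustrative assumptions). It checks that the merged matrix gives the same output as the unmerged path, and that subtracting one product and adding another lands on the second task's weights.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k, r = 6, 5, 2

W0 = rng.normal(size=(d, k))                                # frozen pre-trained weight
B1, A1 = rng.normal(size=(d, r)), rng.normal(size=(r, k))   # adapter for task 1
B2, A2 = rng.normal(size=(d, r)), rng.normal(size=(r, k))   # adapter for task 2
x = rng.normal(size=(k,))

# Unmerged path: the adapter adds two small matrix products per forward pass.
h_unmerged = W0 @ x + B1 @ (A1 @ x)

# Merged path: fold the adapter into a single matrix ahead of time.
W = W0 + B1 @ A1
h_merged = W @ x

# Task switch: subtract the old product, add the new one.
W = W - B1 @ A1 + B2 @ A2
h_task2 = W @ x

print(np.allclose(h_unmerged, h_merged))
```

In floating point the subtract-then-add route matches `W0 + B2 @ A2` only up to rounding error, which is why implementations that switch tasks frequently may prefer to keep an untouched copy of \(W_0\).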

Limitations

- While LoRA offers significant advantages in terms of parameter efficiency and memory savings, it also has some limitations. One notable limitation is the complexity involved in batching inputs for different tasks when using distinct low-rank matrices \(A\) and \(B\). If the goal is to absorb \(A\) and \(B\) into the combined weight matrix \(W\) to avoid additional inference latency, it becomes challenging to batch inputs from different tasks in a single forward pass. This is because each task would require a different set of \(A\) and \(B\) matrices, complicating the batching process.

- Additionally, although it is possible to avoid merging the weights and dynamically select the appropriate LoRA modules for each sample in a batch, this approach is more feasible in scenarios where latency is not a critical concern. This workaround does not fully address the need for seamless integration when low-latency inference is required across multiple tasks.

- In summary, while LoRA provides a highly efficient adaptation method, the complexity in handling multiple tasks simultaneously and the need for careful management of low-rank matrices during batching are important considerations for its practical deployment.
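The unmerged workaround can be sketched as follows: keep \(W_0\) shared, stack the per-task factors, and gather the right pair for each sample in the batch. This is an illustrative NumPy sketch with made-up sizes and task ids, not how any particular serving system implements it.

```python
import numpy as np

rng = np.random.default_rng(0)
n_tasks, d, k, r, batch = 3, 6, 5, 2, 4

W0 = rng.normal(size=(d, k))              # shared frozen weight
B = rng.normal(size=(n_tasks, d, r))      # one B per task
A = rng.normal(size=(n_tasks, r, k))      # one A per task

x = rng.normal(size=(batch, k))
task_ids = np.array([0, 2, 1, 2])         # which adapter each sample uses

base = x @ W0.T                                        # (batch, d), shared matmul
Ax = np.einsum("brk,bk->br", A[task_ids], x)           # per-sample A x
delta = np.einsum("bdr,br->bd", B[task_ids], Ax)       # per-sample B (A x)
h = base + delta
```

The extra gather and the two batched products are the latency cost that merging avoids, which is the trade-off described above.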

Hyperparameters

- LoRA-specific hyperparameters include rank (\(r\)) and alpha (\(\alpha\)). Others, such as learning rate (\(\eta\)), dropout probability (\(p\)), and batch size (\(N\)), are still used in LoRA-based fine-tuning but are generic to deep learning model training and fine-tuning. Here’s a detailed explanation of each:

Rank (\(r\))

-

Description: In LoRA, instead of fine-tuning the full weight matrix, the weight update is modeled as a low-rank product. Specifically, the weight update matrix \(\Delta W\) is decomposed into two smaller matrices, \(A \in \mathbb{R}^{d \times r}\) and \(B \in \mathbb{R}^{r \times k}\), where \(r\) is much smaller than \(d\) and \(k\). The rank \(r\), one of the core hyperparameters in LoRA, is the inner dimension shared by \(A\) and \(B\) and therefore an upper bound on the rank of \(\Delta W\). (The LoRA paper writes the same product as \(BA\) with \(B \in \mathbb{R}^{d \times r}\) and \(A \in \mathbb{R}^{r \times k}\); only the labels differ.) The new weight is then modeled as:

\[W = W_0 + \Delta W = W_0 + A \cdot B\]

- Role: The rank controls the dimensionality of the low-rank matrices and hence the number of additional parameters introduced during fine-tuning.

-

Interpretation: Lower values of \(r\) will impose stronger restrictions on how much the weight matrices can adapt, potentially limiting the model’s flexibility but greatly reducing the computational and memory footprint. Higher values of \(r\) allow for more expressive updates but increase the number of parameters and computation required.

-

Equation: In matrix form, for any original weight matrix \(W_0 \in \mathbb{R}^{d \times k}\), the adapted weight update is expressed as:

\[\Delta W = A \cdot B\]

- where \(A \in \mathbb{R}^{d \times r}\) and \(B \in \mathbb{R}^{r \times k}\), with \(r \ll d, k\).

-

Typical Values: 2–16, depending on the size of the model and the complexity of the task. In most tasks, a small rank (e.g., 4 or 8) provides a good trade-off between performance and efficiency.

-

Higher Values: For more complex tasks, larger models, or cases where the pretrained model diverges significantly from the specialized task, higher rank values (e.g., 32, 64, or 128) may be used. Examples include:

- Adapting a general language model to legal contract review, where formal, domain-specific syntax and terminology dominate.

- Fine-tuning for biomedical question answering or clinical note summarization, which involves specialized jargon not well represented in general corpora.

- Tuning for code generation in a low-resource or proprietary programming language.

- Adapting to historical or archaic language for cultural heritage and digitization tasks.

- These scenarios benefit from higher-rank LoRA modules due to the substantial gap between the pretraining data and the target domain, requiring more capacity to learn meaningful adaptations.
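To make the link between rank and parameter count concrete, here is a worked comparison for a single weight matrix. The dimensions \(d = k = 4096\) and \(r = 8\) are chosen purely for illustration.

\[\underbrace{d \cdot k}_{\text{full update}} \quad \text{vs.} \quad \underbrace{r \, (d + k)}_{\text{LoRA update}}\]

\[4096 \cdot 4096 = 16{,}777{,}216 \quad \text{vs.} \quad 8 \cdot (4096 + 4096) = 65{,}536 \;\; (\approx 0.39\% \text{ of the full update})\]

Because the LoRA count grows linearly in \(r\), doubling the rank doubles the number of trainable parameters for that matrix.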

Scaling Factor (\(\alpha\))

-

Description: \(\alpha\) is a scaling factor applied to the LoRA updates. Specifically, it scales the low-rank updates \(A \cdot B\) before adding them to the base weight matrix \(W_0\). The weight update rule becomes:

\[W = W_0 + \frac{\alpha}{r} \cdot (A \cdot B)\]

-

Role: The purpose of \(\alpha\) is to control the magnitude of the low-rank updates to prevent the model from diverging too far from the pre-trained weights. By dividing \(\alpha\) by the rank \(r\), LoRA ensures that the update magnitude is normalized according to the size of the low-rank decomposition. This is crucial because a larger rank would introduce more freedom for updates, and the division by \(r\) keeps the updates in check.

-

Interpretation: A higher \(\alpha\) means that the low-rank updates will have a larger impact on the final weight, while a smaller \(\alpha\) means the low-rank updates will contribute less to the adapted model. The division by \(r\) helps keep the effect of the low-rank update consistent across different choices of rank.

-

Equation: The weight update is now written as:

\[\Delta W = \frac{\alpha}{r} \cdot (A \cdot B)\]

-

Typical Values: Common values for \(\alpha\) are in the range of 1–32. The LoRA paper sets \(\alpha\) to the first rank it tries and does not tune it further; a common practitioner heuristic is \(\alpha = r\) or \(\alpha = 2r\), which fixes the effective scale \(\frac{\alpha}{r}\) at 1 or 2.
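The effect of the scaling factor can be seen in a few lines of NumPy. This sketch follows the notation of this section (\(A \in \mathbb{R}^{d \times r}\), \(B \in \mathbb{R}^{r \times k}\)); the helper name `lora_delta` and the sizes are assumptions made for illustration.

```python
import numpy as np


def lora_delta(A: np.ndarray, B: np.ndarray, alpha: float) -> np.ndarray:
    """Scaled low-rank update (alpha / r) * (A @ B), with r read from A's shape."""
    r = A.shape[1]
    return (alpha / r) * (A @ B)


rng = np.random.default_rng(0)
d, k, r = 8, 8, 4
A = rng.normal(size=(d, r))
B = rng.normal(size=(r, k))

delta_8 = lora_delta(A, B, alpha=8)     # effective scale 8 / 4 = 2
delta_16 = lora_delta(A, B, alpha=16)   # effective scale 16 / 4 = 4

print(np.allclose(delta_16, 2 * delta_8))
```

With the factors held fixed, doubling \(\alpha\) doubles the update, so \(\alpha\) acts much like a multiplier on how strongly the adapter changes the layer.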

Dropout Probability (\(p\))

-

Description: Dropout is a regularization technique used to prevent overfitting, and it is applied in the LoRA framework as well. In the formulation used here, the dropout probability (\(p\)) is the probability with which a particular element of the low-rank matrices \(A\) and \(B\) is randomly set to zero during training, which injects noise into the update. Note that common implementations, such as the lora_dropout option in Hugging Face PEFT, instead apply dropout to the input of the LoRA branch rather than to the entries of \(A\) and \(B\).

-

Role: The role of dropout in LoRA is to regularize the low-rank weight updates and ensure they do not overfit to the fine-tuning data. By randomly zeroing out parts of the matrices, the model learns more robust and generalizable updates.

-

Interpretation: Higher values of dropout probability \(p\) imply more aggressive regularization, which can reduce overfitting but also may slow down learning. Lower values of \(p\) imply less regularization and could potentially lead to overfitting on small datasets.

-

Equation: The dropout operation is typically represented as:

\[A_{\text{dropped}} = A \odot \text{Bernoulli}(1-p)\]

- where \(\odot\) denotes element-wise multiplication, and \(\text{Bernoulli}(1-p)\) is a binary mask where each element is independently drawn from a Bernoulli distribution with probability \(1 - p\).

-

Typical Values: Dropout probabilities \(p\) are typically set between 0.0 (no dropout) and 0.3 for LoRA tasks.
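The masking equation above can be sketched directly in NumPy. This follows the element-wise formulation given in this section and, as an assumption to keep it short, omits the \(\frac{1}{1-p}\) rescaling that framework dropout layers usually apply during training; the helper name is illustrative.

```python
import numpy as np


def dropout_mask(shape, p, rng):
    """Binary mask whose entries are 1 with probability 1 - p and 0 otherwise."""
    return (rng.random(shape) >= p).astype(float)


rng = np.random.default_rng(0)
A = rng.normal(size=(512, 8))
p = 0.1

mask = dropout_mask(A.shape, p, rng)
A_dropped = A * mask            # element-wise product, as in the equation
kept_fraction = mask.mean()     # close to 1 - p for a matrix this size
```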

Learning Rate (\(\eta\))

-

Description: The learning rate is a fundamental hyperparameter in any optimization process, and it determines the step size at which the model’s parameters are updated during training. In the context of LoRA, it controls the update of the low-rank matrices \(A\) and \(B\) rather than the full model weights.

-

Role: The learning rate governs how fast or slow the low-rank matrices adapt to the new task. A high learning rate can lead to faster convergence but risks overshooting the optimal solution, while a small learning rate can provide more stable convergence but might take longer to adapt to the new task.

-

Interpretation: A higher learning rate might be used in the early stages of fine-tuning to quickly adapt to the new task, followed by a lower rate to refine the final performance. However, too high a learning rate may destabilize training, especially when \(\alpha\) is large.

-

Equation: The update to the low-rank parameters follows the standard gradient descent update rule:

\[\theta_{t+1} = \theta_t - \eta \cdot \nabla_{\theta} L\]

- where \(L\) is the loss function, \(\nabla_{\theta} L\) is the gradient of the loss with respect to the low-rank parameters \(\theta\), and \(\eta\) is the learning rate.

-

Typical Values: Learning rates for LoRA typically range from \(10^{-5}\) to \(10^{-3}\), depending on the model, the task, and the scale of adaptation needed.
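To illustrate that the update rule touches only the low-rank parameters, the toy sketch below runs plain gradient descent on \(A\) and \(B\) for a linear regression problem while \(W_0\) stays frozen. The problem, sizes, and learning rate are assumptions chosen so the example runs quickly; real fine-tuning would typically use an optimizer such as Adam.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, k, r = 32, 10, 6, 2
alpha, eta = 2.0, 0.05
scale = alpha / r

x = rng.normal(size=(n, d))
W0 = rng.normal(size=(d, k))                  # frozen pre-trained weight
W0_before = W0.copy()
target_delta = 0.1 * rng.normal(size=(d, r)) @ rng.normal(size=(r, k))
y = x @ (W0 + target_delta)                   # toy targets: a rank-r shift of W0

A = rng.normal(size=(d, r)) / np.sqrt(d)      # random init
B = np.zeros((r, k))                          # zero init, so A @ B = 0 at the start


def loss(A, B):
    err = x @ W0 + scale * (x @ A) @ B - y
    return 0.5 * np.mean(np.sum(err ** 2, axis=1))


losses = [loss(A, B)]
for _ in range(300):
    err = x @ W0 + scale * (x @ A) @ B - y    # (n, k) residuals
    grad_A = scale * x.T @ err @ B.T / n      # dL/dA, shape (d, r)
    grad_B = scale * (x @ A).T @ err / n      # dL/dB, shape (r, k)
    A -= eta * grad_A                         # theta <- theta - eta * grad
    B -= eta * grad_B
    losses.append(loss(A, B))

print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```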

Batch Size (\(N\))

-

Description: The batch size is the number of examples that are passed through the model at one time before updating the weights. It is a crucial hyperparameter for stabilizing the training process.

-

Role: In LoRA, the batch size affects how stable and efficient the low-rank adaptation process is. A larger batch size can stabilize the gradient estimates and speed up convergence, while smaller batches introduce more noise into the gradient, which may require a smaller learning rate to maintain stability.

-

Interpretation: Smaller batch sizes allow for faster updates but with noisier gradients, whereas larger batch sizes reduce noise but require more memory. Finding the right balance is important for both computational efficiency and effective adaptation.

-

Equation: The loss for a given batch of size \(N\) is averaged over the batch:

\[L_{\text{batch}} = \frac{1}{N} \sum_{i=1}^{N} L_i\]

- where \(L_i\) is the loss for the \(i\)-th example in the batch.

-

Typical Values: Batch sizes can vary widely depending on the available hardware resources. Typical values range from 8 to 64.

Summary

- The main hyperparameters involved in LoRA—rank (\(r\)), alpha (\(\alpha\)), dropout probability (\(p\)), learning rate (\(\eta\)), and batch size (\(N\))—are crucial for controlling the behavior and effectiveness of LoRA. By adjusting these parameters, LoRA can offer an efficient way to fine-tune large pre-trained models with significantly reduced computational costs and memory usage while maintaining competitive performance. Each of these hyperparameters impacts the trade-off between model flexibility, computational efficiency, and training stability.

- These hyperparameters are interconnected, especially the scaling factor and the rank: a change in one can require adjustments to the others. The section "Is there a relationship between setting scaling factor and rank in LoRA?" covers this in more detail. Effective tuning of these parameters is critical for leveraging LoRA’s capabilities to adapt large models without extensive retraining.

How does having a low-rank matrix in LoRA help the fine-tuning process?

- In LoRA, a low-rank matrix is a matrix with a rank significantly smaller than its full dimensionality, which enables efficient and focused adjustments to model parameters. This lightweight adaptation mechanism allows LLMs to learn new tasks with less risk of overfitting by capturing only the most essential adjustments, thus optimizing both information representation and parameter efficiency.

What is a Low-rank Matrix?

- A matrix is considered low-rank when its rank (the number of linearly independent rows or columns) is much smaller than its dimensions. For example, a 1000x1000 matrix with rank 10 is low-rank because only 10 of its rows (or columns) are linearly independent, and every other row (or column) can be written as a linear combination of these. This smaller rank means the matrix contains a limited variety of independent patterns or directions, and so has a reduced capacity to capture complex relationships.
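The 1000x1000, rank-10 example can be checked numerically. The sketch below builds such a matrix as a product of two thin random factors (one convenient construction, assumed here for illustration) and compares how many numbers each representation needs.

```python
import numpy as np

rng = np.random.default_rng(0)
n, r = 1000, 10

U = rng.normal(size=(n, r))
V = rng.normal(size=(r, n))
M = U @ V                                # 1000 x 1000, built from rank-10 factors

rank = np.linalg.matrix_rank(M)          # numerical rank from the singular values
full_entries = n * n                     # numbers needed to store M directly
factored_entries = 2 * n * r             # numbers needed to store U and V

print(rank, full_entries, factored_entries)
```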

Low-Rank in LoRA Context

- In LoRA, low-rank matrices are introduced to fine-tune large LLMs with fewer trainable parameters. Here’s how it works:

- Adding Low-Rank Matrices: LoRA adds small, low-rank matrices to the model’s layers (typically linear or attention layers). These matrices serve as “adaptation” layers that adjust the original layer’s output.

- Freezing the Original Weights: The original model weights remain frozen during fine-tuning. Only the low-rank matrices are trained, which reduces the number of parameters to update.

- By limiting the rank of these new matrices, LoRA effectively limits the number of patterns they can represent. For instance, a rank-5 matrix in a high-dimensional space can only capture 5 independent directions, which forces the model to learn only essential, low-dimensional adjustments without becoming too complex.

Example

- Suppose we have a pre-trained model layer represented by a 512x512 matrix (a small size chosen for illustration; layers in LLMs are typically larger). Instead of fine-tuning this large matrix directly, LoRA adds two low-rank matrices, \(A\) and \(B\), with dimensions 512x10 and 10x512, respectively. Here:

- The product \(A \times B\) has a rank of at most 10, much smaller than 512.

- This product effectively adds a low-rank adaptation to the original layer, allowing it to adjust its output in just a few key directions (10 in this case), rather than making unrestricted adjustments.
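A quick numerical check of this example, using random factors as a stand-in for trained ones (an assumption for illustration): the update needs far fewer parameters than the full matrix, and however many inputs are passed through it, the resulting changes span only 10 directions.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r = 512, 10

A = rng.normal(size=(d, r))          # 512 x 10
B = rng.normal(size=(r, d))          # 10 x 512
delta_W = A @ B                      # 512 x 512 update

full_params = d * d                  # parameters in the full matrix
lora_params = A.size + B.size        # parameters in the two factors

X = rng.normal(size=(d, 100))        # 100 random inputs, one per column
changes = delta_W @ X                # how the update shifts each output
span_dim = np.linalg.matrix_rank(changes)

print(full_params, lora_params, span_dim)
```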

Why Rank Matters

- The rank of the LoRA matrices directly affects the model’s ability to learn task-specific patterns:

- Lower Rank: Imposes a strong constraint on the model, which helps it generalize better and reduces the risk of overfitting.

- Higher Rank: Provides more flexibility but also increases the risk of overfitting, as the model can learn more complex adjustments that may fit the fine-tuning data too closely.

How does low-rank constraint introduced by LoRA inherently act as a form of regularization, especially for the lower layers of the model?

- In LoRA, the low-rank constraint serves as a built-in regularization mechanism by limiting the model’s flexibility during fine-tuning. This constraint especially impacts lower layers, which are designed to capture general, foundational features. By further restricting these layers, LoRA minimizes their adaptation to task-specific data, reducing the risk of overfitting. This regularization preserves the model’s foundational knowledge in the lower layers, while allowing the higher layers—where task-specific adjustments are most beneficial—to adapt more freely.

Low-Rank Constraint as Regularization

-

Low-Rank Matrices Limit Complexity: By adding only low-rank matrices to the model’s layers, LoRA restricts the model’s capacity to represent highly complex, task-specific patterns. A low-rank matrix has fewer independent “directions” or dimensions in which it can vary. This means that the model, even when fine-tuned, can only make adjustments within a constrained range, learning broad, generalizable patterns rather than memorizing specific details of the training data. This limited capacity serves as a form of regularization, preventing the model from overfitting.

-

Reduced Sensitivity to Noisy Patterns: Low-rank matrices inherently ignore minor or highly detailed variations in the training data, focusing only on dominant, overarching patterns. This makes LoRA less sensitive to the idiosyncrasies of the fine-tuning dataset, enhancing the model’s robustness and generalization ability.

Effect on Lower Layers

- The lower layers of a neural network, especially in a transformer model, are primarily responsible for extracting general-purpose features from the input data. In LLMs, for example:

- Lower layers capture basic syntactic relationships, such as sentence structure and word dependencies.

- These layers learn representations that are widely applicable across tasks and domains.

- Because these lower layers are already optimized to represent broad, generalizable patterns from pre-training, they are naturally less flexible and more constrained in what they capture compared to higher layers, which focus on more task-specific details. Adding a low-rank constraint in LoRA further reinforces this effect:

-

Enhanced Regularization on Lower Layers: Since lower layers are already constrained to capture only general patterns, the addition of a low-rank constraint essentially adds a second layer of regularization. This means that these layers become even less likely to adapt in ways that would compromise their general-purpose functionality. The low-rank constraint reinforces their role as foundational feature extractors, preserving their generalization capability while preventing overfitting on the specific details of the fine-tuning data.

-

Minimal Disruption of Pre-Trained Knowledge: The low-rank adaptation in LoRA ensures that lower layers maintain the knowledge they acquired during pre-training. Because these layers are regularized by the low-rank constraint, they are less likely to overfit to new data patterns introduced during fine-tuning. This preservation of pre-trained knowledge is crucial for maintaining the model’s transferability to other tasks or domains, as lower layers retain their broad, foundational representations.

Why This Matters for Generalization

- When fine-tuning with LoRA:

- Higher Layers Adapt More Easily: Higher layers, being closer to the output, are more adaptable and can more readily accommodate task-specific changes introduced during fine-tuning.

- Lower Layers Remain Generalized: Lower layers, reinforced by the low-rank constraint, retain their focus on general patterns. This balanced approach helps the model generalize well to unseen data because the lower layers still provide robust, general-purpose representations while the higher layers adapt to the new task.

How does LoRA help avoid catastrophic forgetting?

-

LoRA helps prevent catastrophic forgetting by fine-tuning large pre-trained models in a way that preserves their foundational knowledge while allowing for task-specific adaptations. Catastrophic forgetting occurs when fine-tuning neural networks, particularly large pre-trained models, causes them to overwrite or disrupt previously learned information, reducing performance on earlier tasks. LoRA mitigates this risk through a few key strategies:

- Freezing Original Weights: The core model parameters remain untouched, preserving the base knowledge and preventing interference.

- Introducing Low-Rank Matrices: These matrices have limited capacity, focusing solely on task-specific adjustments, which allows the model to adapt to new tasks without losing general knowledge.

- Targeting Specific Layers: LoRA typically modifies higher attention layers, avoiding disruption to fundamental representations in lower layers.

- Parameter-Efficient, Modular Adaptation: LoRA’s modular design allows for reversible, task-specific adjustments, making it suitable for flexible multi-task and continual learning.

-

Through this approach, LoRA enables large models to adapt efficiently to new tasks while retaining previously learned information, which is especially valuable for applications requiring retention of prior knowledge.

Freezing the Original Weights

- One of the core aspects of LoRA is that it freezes the original model weights and adds new, low-rank matrices that handle the fine-tuning process:

- The frozen original weights retain the model’s general knowledge from pre-training. This means that core information, patterns, and representations acquired from extensive pre-training on large datasets remain unaffected.

- Since only the low-rank matrices are adjusted for the new task, there is no direct modification of the original weights. This minimizes the risk of overwriting or disrupting the knowledge captured in those weights.

- By keeping the original parameters intact, LoRA avoids catastrophic forgetting in a way that typical fine-tuning (where the original weights are updated) does not.

Low-Rank Adaptation Layers for Task-Specific Adjustments

- LoRA introduces low-rank matrices as additional layers to the model, which have the following properties:

- Limited Capacity: Low-rank matrices have a constrained capacity to represent new information, which forces them to focus only on essential, task-specific adaptations. This means they cannot significantly alter the underlying model’s behavior, preserving the broader general knowledge.

- Focused Adaptation: By adding task-specific information via low-rank matrices rather than altering the model’s entire parameter space, LoRA ensures that the new task-specific changes are confined to these auxiliary matrices. This helps the model adapt to new tasks without losing its prior knowledge.

Layer-Specific Impact

- LoRA often targets specific layers in the model, commonly the attention layers:

- Higher Attention Layers: These layers (closer to the output) are responsible for more task-specific representations and are typically the ones modified by LoRA. This selective adaptation means that the more general-purpose features in lower layers are left intact, reducing the risk of catastrophic forgetting.

- Minimal Lower-Layer Impact: Since lower layers (closer to the input) remain unchanged or minimally affected, the model retains the general-purpose, foundational features learned during pre-training, which are crucial for generalization.

- This selective impact allows LoRA to introduce new, task-specific representations while preserving fundamental information, balancing new task learning with knowledge retention.

Parameter-Efficient Fine-Tuning

- LoRA is designed for parameter-efficient fine-tuning, meaning it uses a fraction of the parameters that traditional fine-tuning would require:

- LoRA adds only a small number of new parameters through the low-rank matrices. This efficiency keeps the model changes lightweight, making it less likely to interfere with the original model’s representations.

- The low-rank constraint also regularizes the fine-tuning process, helping to prevent overfitting to the new task, which can indirectly support retention of general knowledge. Overfitting can cause catastrophic forgetting if the model becomes too specialized, as it loses flexibility in dealing with tasks beyond the fine-tuning data.

Easy Reversibility

- Since LoRA’s approach is to add new matrices rather than alter the original model’s weights, it makes it easy to revert the model to its original state or apply it to different tasks:

- The low-rank matrices can be removed or swapped out without affecting the base model. This modularity allows for rapid switching between tasks or models, making it easy to adapt the model to different tasks while maintaining the pre-trained knowledge.

- This adaptability is particularly useful for multi-task learning or continual learning, as it allows LoRA-enhanced models to apply distinct low-rank adaptations for different tasks without compromising the model’s underlying pre-trained knowledge.

Modular and Reusable Adapters

- With LoRA, fine-tuning for different tasks can be achieved by creating different low-rank matrices for each new task:

- These modular, reusable matrices enable task-specific tuning without overwriting previous adaptations or the original model. This is especially valuable for applications where the model needs to perform multiple tasks or domains interchangeably.

- By associating each task with its own set of low-rank matrices, LoRA enables the model to maintain knowledge across tasks without interference, effectively circumventing catastrophic forgetting.
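One way to picture this modularity is a small container that holds a single frozen weight and a dictionary of per-task factors. The class below is a hypothetical sketch (its name, methods, and the task labels are invented for illustration) and uses the \(BA\) ordering from the LoRA paper.

```python
import numpy as np


class AdapterBank:
    """Hypothetical container: one frozen weight plus named low-rank adapters."""

    def __init__(self, W0):
        self.W0 = W0            # never modified after construction
        self.adapters = {}      # task name -> (B, A)

    def add(self, task, B, A):
        self.adapters[task] = (B, A)

    def remove(self, task):
        del self.adapters[task]

    def forward(self, x, task=None):
        h = self.W0 @ x
        if task is not None:
            B, A = self.adapters[task]
            h = h + B @ (A @ x)     # task-specific low-rank correction
        return h


rng = np.random.default_rng(0)
d, k, r = 6, 5, 2
W0 = rng.normal(size=(d, k))
W0_snapshot = W0.copy()

bank = AdapterBank(W0)
bank.add("legal", rng.normal(size=(d, r)), rng.normal(size=(r, k)))
bank.add("medical", rng.normal(size=(d, r)), rng.normal(size=(r, k)))

x = rng.normal(size=(k,))
h_base = bank.forward(x)
h_legal = bank.forward(x, task="legal")
h_medical = bank.forward(x, task="medical")
bank.remove("legal")                # dropping an adapter leaves W0 untouched
```

Because each task only ever reads \(W_0\) and writes its own pair of factors, adding or removing one adapter cannot change the behavior of the base model or of another task.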

How does multiplication of two low-rank matrices in LoRA lead to lower attention layers being impacted less than higher attention layers?

- In LoRA, the use of low-rank matrices enables efficient, controlled updates by selectively applying them to specific layers—primarily in the higher attention layers rather than the lower ones. This targeted approach allows the model to adjust effectively to task-specific nuances in these higher layers, which capture more complex patterns and contextual information, while preserving the general features encoded in the lower layers. By focusing fine-tuning efforts on the higher layers, LoRA minimizes overfitting and retains foundational knowledge from pre-training, making it an efficient and effective fine-tuning strategy.

Role of Low-Rank Matrices in LoRA

- LoRA adds two low-rank matrices, \(A\) and \(B\), to certain layers, typically in the form:

\(W_{\text{new}} = W + A \times B\)

- where:

- \(W\) is the original (frozen) weight matrix in the model layer.

- \(A\) and \(B\) are low-rank matrices (with ranks much smaller than the original dimensionality of \(W\)), creating a low-rank adaptation.

- The product \(A \times B\) has a limited rank and thus introduces only a restricted adjustment to \(W\). This adjustment constrains the layer to learn only a few independent patterns rather than a full set of complex, task-specific transformations.

Higher Attention Layers: Task-Specific Focus

- In large models, higher attention layers (closer to the output) tend to capture task-specific, abstract features, while lower attention layers (closer to the input) capture general, reusable patterns. By applying LoRA-based fine-tuning primarily to higher attention layers:

- The model’s low-rank adaptation focuses on high-level, task-specific adjustments rather than modifying general representations.

- Higher layers, which already deal with more specific information, are more sensitive to the small adjustments made by \(A \times B\) since they directly influence task-related outputs.

- In practice, LoRA-based fine-tuning modifies these higher layers more significantly because these layers are more directly responsible for adapting the model to new tasks. Lower layers, in contrast, require less task-specific adjustment and retain their general-purpose features.

Limited Capacity of Low-Rank Matrices and Layer Impact

- The low-rank matrices \(A\) and \(B\) have limited expressive power (due to their low rank), meaning they can only introduce a small number of directional adjustments in the weight space. This limited capacity aligns well with higher layers because:

- Higher layers don’t need drastic changes but rather subtle adjustments to fine-tune the model to specific tasks.

- The constraint imposed by low-rank matrices helps avoid overfitting by restricting the number of learned patterns, which is ideal for the high-level, abstract representations in higher layers.

- For lower layers, which capture broad, general-purpose features, such limited adjustments don’t significantly impact the model. Lower layers still operate with the general features learned during pre-training, while higher layers adapt to task-specific details.

Why Lower Layers are Less Affected

- Lower layers in the attention stack are less impacted by LoRA’s low-rank updates because:

- In setups that restrict LoRA to the upper layers, they are not fine-tuned at all, preserving the general features learned during pre-training.

- Even when fine-tuned with low-rank matrices, the limited capacity of \(A \times B\) is not sufficient to drastically alter their broader, foundational representations.

In LoRA, why is \(A\) initialized using a Gaussian and \(B\) set to 0?

- In LoRA, the initialization strategy where matrix \(A\) is initialized with a Gaussian distribution and matrix \(B\) is set to zero is crucial for ensuring a smooth integration of the adaptation with minimal initial disruption to the pre-trained model. This approach is designed with specific goals in mind:

Preserving Initial Model Behavior

- Rationale: By setting \(B\) to zero, the product \(\Delta W = BA\) initially equals zero. This means that the adapted weights do not alter the original pre-trained weights at the beginning of the training process.

- Impact: This preserves the behavior of the original model at the start of fine-tuning, allowing the model to maintain its pre-trained performance and stability. The model begins adaptation from a known good state, reducing the risk of drastic initial performance drops.

Gradual Learning and Adaptation

- Rationale: Starting with \(\Delta W = 0\) allows the model to gradually adapt through the updates to \(B\) during training. This gradual adjustment is less likely to destabilize the model than a sudden, large change would.

- Impact: As \(B\) starts updating from zero, any changes in the model’s behavior are introduced slowly. This controlled adaptation is beneficial for training dynamics, as it allows the model to incrementally learn how to incorporate new information effectively without losing valuable prior knowledge.

Ensuring Controlled Updates

- Rationale: Gaussian initialization of \(A\) provides a set of initial values that, while random, are statistically regularized by the properties of the Gaussian distribution (such as having a mean of zero and a defined variance). This regularity helps in providing a balanced and predictable set of initial conditions for the adaptation process.

- Impact: The Gaussian distribution helps ensure that the values in \(A\) are neither too large nor too biased in any direction, which could lead to disproportionate influence on the updates when \(B\) begins to change. This helps in maintaining a stable and effective learning process.

Focused Adaptation

- Rationale: The low-rank matrices \(A\) and \(B\) are intended to capture the most essential aspects of the new data or tasks relative to the model’s existing capabilities. By starting with \(B = 0\) and \(A\) initialized randomly, the learning focuses on identifying and optimizing only those aspects that truly need adaptation, as opposed to re-learning aspects that the model already performs well.

- Impact: This focus helps optimize training efficiency by directing computational resources and learning efforts towards making meaningful updates that enhance the model’s capabilities in specific new areas.

- This initialization strategy supports the overall goal of LoRA: to adapt large, pre-trained models efficiently with minimal resource expenditure and without compromising the foundational strengths of the original model. This approach ensures that any new learning builds on and complements the existing pre-trained model structure.
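A small gradient check helps show why only one of the two matrices starts at zero. The sketch below uses a squared-error loss on a single input (an assumption made to keep the gradients easy to write by hand) with \(\Delta W = BA\). With \(B = 0\) and Gaussian \(A\), the update starts at zero but \(B\) still receives a non-zero gradient; if both were zero, neither matrix would receive any gradient and training could not start.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k, r = 6, 5, 2
W0 = rng.normal(size=(d, k))
x = rng.normal(size=(k,))
y = rng.normal(size=(d,))


def grads(B, A):
    """Gradients of 0.5 * ||(W0 + B A) x - y||^2 with respect to B and A."""
    err = (W0 + B @ A) @ x - y
    grad_B = np.outer(err, A @ x)       # shape (d, r), depends on A
    grad_A = np.outer(B.T @ err, x)     # shape (r, k), depends on B
    return grad_B, grad_A


A_gauss = rng.normal(size=(r, k))
B_zero = np.zeros((d, r))

# LoRA-style init: Delta W = 0, yet B can start learning immediately.
gB, gA = grads(B_zero, A_gauss)

# Both factors zero: every gradient vanishes, so nothing would ever update.
gB_stuck, gA_stuck = grads(B_zero, np.zeros((r, k)))
```

After the first step moves \(B\) away from zero, the gradient for \(A\) becomes non-zero as well, and both matrices train from then on.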

For a given task, how do we determine whether to fine-tune the attention layers or feed-forward layers?

- Deciding whether to fine-tune the attention layers or the feed-forward (MLP) layers in a model adapted using LoRA involves several considerations. These include the nature of the task, the model architecture, and the distribution of parameters between attention and feed-forward layers.

- Note that the LoRA paper originally only adapted the attention weights for downstream tasks and froze the MLP modules (so they are not trained in downstream tasks) both for simplicity and parameter-efficiency. Thus, the number of attention weights relative to feed-forward weights can impact the choice of which modules to adapt.

- Here are some key factors to guide this decision:

Nature of the Task

- Task Requirements: Attention mechanisms are particularly effective for tasks that benefit from modeling relationships between different parts of the input, such as sequence-to-sequence tasks or tasks requiring contextual understanding. If the task demands strong relational reasoning or context sensitivity, fine-tuning attention layers might be more beneficial.

- Feed-Forward Layer Role: MLPs generally focus on transforming the representation at individual positions without considering other positions. They are effective for tasks requiring more substantial non-linear transformation of features. If the task demands significant feature transformation at individual positions, MLPs may need adaptation.

Model Architecture

- Proportion of Parameters: In transformer architectures, MLPs typically contain more parameters than the attention layers (on the order of 2x to 5x, depending on the design). For example, in standard configurations like those seen in BERT or GPT models, where the MLP hidden size is four times the model dimension, the two MLP matrices hold about \(8 d_{model}^2\) weights per block versus about \(4 d_{model}^2\) for the four attention projections, i.e., roughly twice as many.

- Impact on Efficiency: Because MLPs are parameter-heavy, fine-tuning them can significantly increase the number of trainable parameters, impacting training efficiency and computational requirements. If parameter efficiency is a priority, you might opt to adapt only the attention layers, as originally done in the LoRA approach.
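The parameter split can be estimated with a quick count. The sketch below assumes a standard transformer block with four square attention projections and a two-matrix MLP, ignores biases and layer norms, and uses \(d_{model} = 768\) with a 4x MLP expansion (the BERT-base / GPT-2 small shape). The function names are illustrative.

```python
def attention_params(d_model):
    """Weights in W_q, W_k, W_v, W_o (each d_model x d_model); biases ignored."""
    return 4 * d_model * d_model

def mlp_params(d_model, d_ff):
    """Weights in the two MLP projections (d_model x d_ff and d_ff x d_model); biases ignored."""
    return 2 * d_model * d_ff

d_model = 768        # assumed hidden size (BERT-base / GPT-2 small)
d_ff = 4 * d_model   # assumed 4x expansion, i.e. 3072

attn = attention_params(d_model)  # 2,359,296 weights per block
mlp = mlp_params(d_model, d_ff)   # 4,718,592 weights per block
print(attn, mlp, mlp / attn)      # ratio is 2.0 under these assumptions
```

Other designs shift the ratio: a wider or gated MLP raises it, and attention variants that share key/value projections shrink the attention side.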

Computational Constraints

- Resource Availability: The decision can also be influenced by available computational resources. Adapting attention layers only can save computational resources and training time, making it a preferable option when resources are limited.

- Balance of Adaptation and Performance: If computational resources allow, experimenting with both components can be useful to understand which contributes more to performance improvements on specific tasks.

Empirical Testing

- A/B Testing: One effective way to determine the optimal strategy for a specific model and task is to conduct empirical tests where you fine-tune the attention layers alone, the MLP layers alone, and both together in different experiments to compare the performance impacts.

- Performance Metrics: Monitoring key performance metrics specific to the task during these tests will guide which components are more critical to fine-tune.
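One way to organize such a comparison is sketched below. The module-name groups are hypothetical (real names depend on the model implementation), and `train_and_eval` is a placeholder for whatever routine fine-tunes with LoRA on the given modules and returns a validation metric where higher is better.

```python
from typing import Callable, Dict, List

# Hypothetical module-name groups; adjust to the names used by the actual model.
CANDIDATES: Dict[str, List[str]] = {
    "attention_only": ["q_proj", "v_proj"],
    "mlp_only": ["up_proj", "down_proj"],
    "attention_and_mlp": ["q_proj", "v_proj", "up_proj", "down_proj"],
}

def compare_targets(train_and_eval: Callable[[List[str]], float]) -> Dict[str, float]:
    """Run one experiment per candidate and return {configuration name: metric}."""
    return {name: train_and_eval(modules) for name, modules in CANDIDATES.items()}

def best_target(results: Dict[str, float]) -> str:
    """Name of the configuration with the highest validation metric."""
    return max(results, key=results.get)
```

Keeping the rest of the setup fixed (data, training steps, seeds) across the three runs makes the comparison meaningful; if the parameter budget also matters, the rank can be lowered for the configuration that adapts more modules.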

Task-Specific Research and Insights

- Literature and Benchmarks: Insights from research papers and benchmarks on similar tasks can provide guidelines on what has worked well historically in similar scenarios. For example, tasks that require nuanced understanding of input relationships (like question answering or summarization) might benefit more from tuning attention mechanisms.

- In summary, the choice between tuning attention or MLP layers depends on the specific demands of the task, the model’s architecture, the balance of parameters, and empirical results. Considering these aspects can help in making a decision that optimizes both performance and efficiency.

Assuming we’re fine-tuning attention weights, which specific attention weight matrices should we apply LoRA to?

- Which attention weight matrices to adapt with LoRA matters for making the most of a limited parameter budget, especially with large models like GPT-3. The explanation and recommendations below are based on the corresponding experiment reported in the LoRA paper:

Context and Setup

- The LoRA paper explores the adaptation of various weight matrices within the self-attention module of GPT-3 under a limited parameter budget. With a constraint of 18 million trainable parameters, the authors tested different configurations of adapting the weights associated with the query (\(W_q\)), key (\(W_k\)), value (\(W_v\)), and output (\(W_o\)) matrices. This setup allows for a comparison of the effectiveness of adapting different combinations of weights at varying ranks.

Experimental Findings

- Parameter Allocation: The experiment considered adapting individual weight types at a rank of 8 and combinations of weights at lower ranks (4 and 2) due to the fixed parameter budget. This arrangement allowed assessing whether it is more beneficial to distribute the available parameters across multiple weight types or concentrate them on fewer weights at a higher rank.

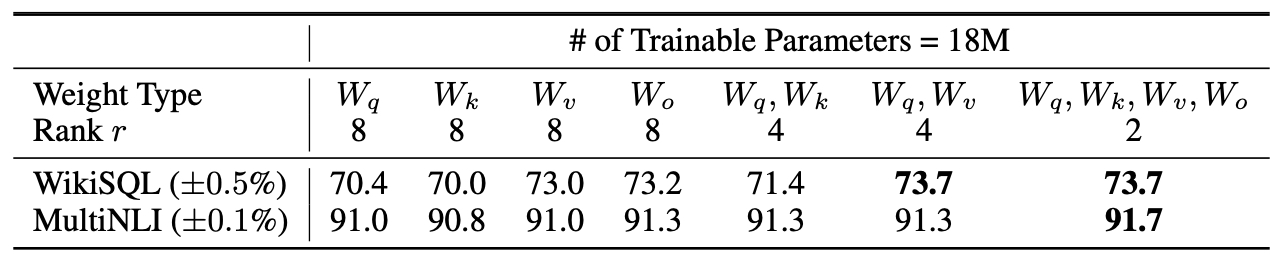

- Performance Metrics: The validation accuracies on the WikiSQL and MultiNLI datasets served as the primary performance indicators. The table from the LoRA paper shows validation accuracy on both datasets after applying LoRA to different types of attention weights in GPT-3, given the same number of trainable parameters; results vary with which weights are adapted and how the rank is distributed. Adapting both \(W_q\) and \(W_v\) gives the best performance overall. The authors find the standard deviation across random seeds to be consistent for a given dataset, and report it in the first column.
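The fixed budget explains why the rank drops as more weight types are adapted. A quick check, assuming GPT-3 175B's shape (\(d_{model} = 12288\), 96 layers) and square \(d_{model} \times d_{model}\) attention matrices, each adapted with \(B\) (\(d_{model} \times r\)) and \(A\) (\(r \times d_{model}\)); the function name is illustrative:

```python
def lora_params(d_model, n_layers, n_weight_types, r):
    """Trainable LoRA parameters when every adapted d_model x d_model matrix
    gets a B (d_model x r) and an A (r x d_model)."""
    per_matrix = 2 * d_model * r
    return n_layers * n_weight_types * per_matrix

D_MODEL, N_LAYERS = 12288, 96  # GPT-3 175B

# (number of adapted weight types, rank) pairs used in the experiment
for n_types, r in [(1, 8), (2, 4), (4, 2)]:
    print(n_types, r, lora_params(D_MODEL, N_LAYERS, n_types, r))
# each line reports 18874368 trainable parameters (about 18M)
```

Halving the rank exactly pays for doubling the number of adapted matrices, so all three configurations sit at the same budget.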

Key Results and Recommendations

- Single vs. Multiple Weight Adaptation: Adapting single weight matrices (\(W_q\), \(W_k\), \(W_v\), or \(W_o\) individually) at a higher rank generally resulted in lower performance compared to adapting combinations of weights at a reduced rank. Specifically, putting all parameters in \(\Delta W_q\) or \(\Delta W_k\) alone did not yield optimal results.

- Optimal Combination: Adapting both \(W_q\) and \(W_v\) at a rank of 4 emerged as one of the most effective strategies, which the paper describes as giving the best performance overall. This suggests that distributing the parameter budget across multiple types of attention weights, rather than focusing on a single type, leads to better performance.

- Effectiveness of Rank Distribution: The result indicates that a lower rank (such as 4) is sufficient to capture essential adaptations in the weights, making it preferable to spread the parameter budget across more types of weights rather than increasing the rank for fewer weights.

Conclusion and Strategy for Applying LoRA

- Based on these findings, when applying LoRA within a limited parameter budget, it is advisable to:

- Distribute Parameters Across Multiple Weights: Focus on adapting multiple types of attention weights (such as \(W_q\) and \(W_v\)) rather than a single type, as this approach leverages the synergistic effects of adapting multiple aspects of the attention mechanism.

- Use Lower Ranks for Multiple Weights: Opt for a lower rank when adapting multiple weights to ensure that the parameter budget is used efficiently without compromising the ability to capture significant adaptations.

- This strategy maximizes the impact of the available parameters by enhancing more dimensions of the self-attention mechanism, which is crucial for the model’s ability to understand and process input data effectively across different tasks.

Is there a relationship between setting scaling factor and rank in LoRA?

- In the LoRA framework, the relationship between the scaling factor \(\alpha\) and the rank \(r\) of the adaptation matrices \(A\) and \(B\) is an important consideration for tuning the model’s performance and managing how adaptations are applied to the pre-trained weights. Both \(\alpha\) and \(r\) play significant roles in determining the impact of the low-rank updates on the model, and their settings can influence each other in terms of the overall effect on the model’s behavior.

Understanding \(\alpha\) and \(r\)

- Scaling Factor \(\alpha\): This parameter scales the contribution of the low-rank update \(\Delta W = BA\) before it is added to the original model weights \(W\). In the LoRA paper, the update is multiplied by \(\frac{\alpha}{r}\), so the adapted weight is \(W + \frac{\alpha}{r} BA\); \(\alpha\) and \(r\) therefore jointly control the magnitude of the change, modulating how aggressive or subtle the updates are.

- Rank \(r\): This determines the dimensionality of the low-rank matrices \(A\) and \(B\). The rank controls the expressiveness of the low-rank updates, with higher ranks allowing for more complex adaptations but increasing computational costs and potentially the risk of overfitting.
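In the LoRA paper the low-rank update is multiplied by \(\frac{\alpha}{r}\), which ties the two hyperparameters together directly. The sketch below is a toy pure-Python forward pass under that convention; the helper names and the tiny matrices are illustrative, and some implementations use a different scaling rule.

```python
def lora_scale(alpha, r):
    """Multiplier applied to the low-rank update under the alpha / r convention."""
    return alpha / r

def matvec(M, x):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def lora_forward(W, B, A, x, alpha):
    """h = W x + (alpha / r) * B (A x), with r taken from the number of rows of A."""
    scale = lora_scale(alpha, len(A))
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]

W = [[1.0, 0.0], [0.0, 1.0]]  # frozen 2 x 2 weight
B = [[1.0], [0.0]]            # 2 x r with r = 1
A = [[0.0, 2.0]]              # r x 2
x = [1.0, 1.0]

print(lora_forward(W, B, A, x, alpha=1))  # [3.0, 1.0]
print(lora_forward(W, B, A, x, alpha=2))  # [5.0, 1.0]

# With alpha fixed, raising r shrinks the multiplier.
print([lora_scale(16, r) for r in (4, 8, 16)])  # [4.0, 2.0, 1.0]
```

Under this convention, changing \(r\) while holding \(\alpha\) fixed also changes the effective step size of the update, which is one reason the two are usually tuned together.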

Relationship and Interaction

- Balancing Impact: A higher rank \(r\) allows the model to capture more complex relationships and nuances in the adaptations, potentially leading to more significant changes to the model’s behavior. In such cases, \(\alpha\) might be adjusted downward to temper the overall impact, ensuring that the modifications do not destabilize the model’s pre-trained knowledge excessively.

- Adjusting for Subtlety: Conversely, if the rank \(r\) is set lower, which constrains the flexibility and range of the updates, \(\alpha\) may need to be increased to make the limited updates more impactful. This can help ensure that the adaptations, though less complex, are sufficient to achieve the desired performance improvements.

- Experimental Tuning: The optimal settings for \(\alpha\) and \(r\) often depend on the specific task, the dataset, and the desired balance between adapting to new tasks and retaining generalizability. Experimentation and validation are typically necessary to find the best combination.

Practical Considerations

- Overfitting vs. Underfitting: Higher ranks with aggressive scaling factors can lead to overfitting, especially when the model starts fitting too closely to nuances of the training data that do not generalize well. Conversely, too low a rank and/or too conservative an \(\alpha\) might lead to underfitting, where the model fails to adapt adequately to new tasks.

- Computational Efficiency: Higher ranks increase the number of parameters and computational costs. Balancing \(\alpha\) and \(r\) can help manage computational demands while still achieving meaningful model improvements.

Conclusion

- The relationship between \(\alpha\) and \(r\) in LoRA involves a delicate balance. Adjusting one can necessitate compensatory changes to the other to maintain a desired level of adaptation effectiveness without sacrificing the model’s stability or performance. Understanding how these parameters interact can significantly enhance the strategic deployment of LoRA in practical machine learning tasks.

How do you determine the optimal rank \(r\) for LoRA?

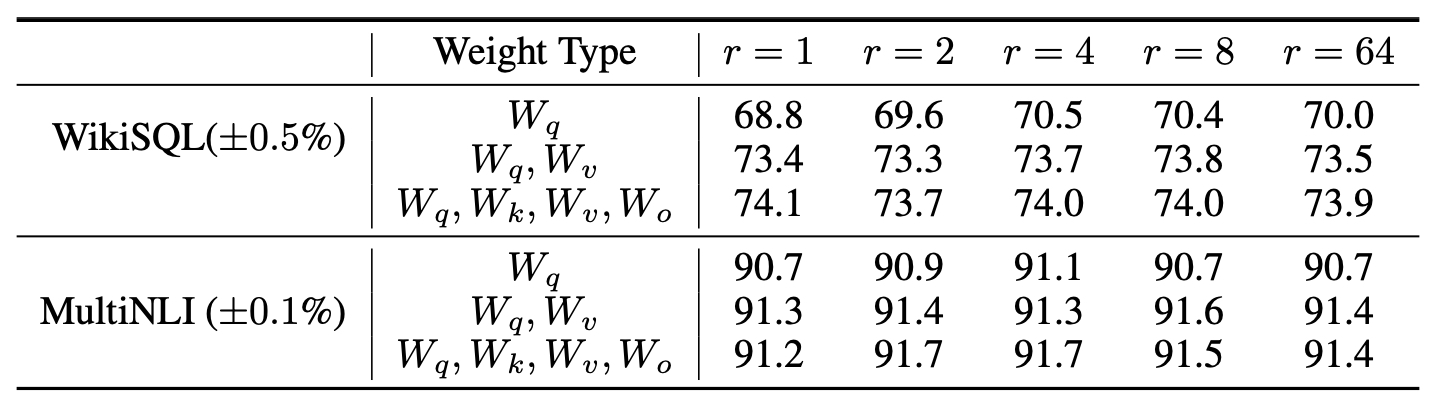

- The optimal rank \(r\) for LoRA is influenced by the specific task and the type of weight adaptation. Based on the results reported in the paper from the experiments on the WikiSQL and MultiNLI datasets:

- For WikiSQL:

- When adapting only \(W_q\), the optimal rank is \(r = 4\), with a validation accuracy of 70.5%.

- When adapting \(W_q\) and \(W_v\), the optimal rank is \(r = 8\), with a validation accuracy of 73.8%.

- When adapting \(W_q, W_k, W_v, W_o\), the optimal ranks are \(r = 4\) and \(r = 8\), both achieving a validation accuracy of 74.0%.

- For MultiNLI:

- When adapting only \(W_q\), the optimal rank is \(r = 4\), with a validation accuracy of 91.1%.

- When adapting \(W_q\) and \(W_v\), the optimal rank is \(r = 8\), with a validation accuracy of 91.6%.

- When adapting \(W_q, W_k, W_v, W_o\), the optimal ranks are \(r = 2\) and \(r = 4\), both achieving a validation accuracy of 91.7%.

- The table from the paper shows the validation accuracy on WikiSQL and MultiNLI with different ranks \(r\) when adapting \(\left\{W_q, W_v\right\}\), \(\left\{W_q, W_k, W_v, W_o\right\}\), and just \(W_q\) for comparison. The authors note that, surprisingly, a rank as small as one suffices for adapting both \(W_q\) and \(W_v\) on these datasets, while training \(W_q\) alone needs a larger \(r\).

- In summary, while the optimal rank \(r\) varies depending on the dataset and the type of weight adaptation, a rank of \(r = 4\) or \(r = 8\) generally yields the best performance. Specifically, a rank of \(r = 4\) is often sufficient for single weight types like \(W_q\), and a rank of \(r = 8\) is more effective for adapting multiple weight types such as \(W_q\) and \(W_v\).

- However, a small \(r\) cannot be expected to work for every task or dataset. Consider the following thought experiment: if the downstream task were in a different language than the one used for pre-training, retraining the entire model (similar to LoRA with \(r = d_{model}\)) could certainly outperform LoRA with a small \(r\).

- More broadly, selecting a rank that is too high can counteract the benefits of low-rank adaptation by allowing the model to become overly complex and fit the training data too precisely. Conversely, choosing a rank that is too low may limit the model’s ability to capture necessary information, leading to underfitting. Therefore, setting the rank in LoRA fine-tuning involves finding a balance: enough capacity to adapt to new data without overfitting.
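To put the rank choices in perspective, the sketch below computes the trainable LoRA parameters for a single \(d_{model} \times d_{model}\) matrix as a fraction of the weights that full fine-tuning would update. It assumes GPT-3 175B's hidden size of 12288; the function name is illustrative.

```python
def lora_fraction(d_model, r):
    """Trainable LoRA parameters for one d_model x d_model matrix (B and A together),
    as a fraction of the d_model**2 weights updated by full fine-tuning."""
    return (2 * d_model * r) / (d_model * d_model)

d_model = 12288  # GPT-3 175B hidden size

for r in (1, 2, 4, 8, 64, d_model):
    print(r, lora_fraction(d_model, r))
# r = 8 trains 1/768 of the matrix (about 0.13%);
# r = d_model trains twice as many parameters as the matrix itself
```

At \(r = d_{model}\) the product \(BA\) can represent any update to the matrix, which is why that setting is comparable to full fine-tuning in the thought experiment above, although it no longer saves any parameters.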

How do LoRA hyperparameters interact with each other? Is there a relationship between LoRA hyperparameters?

- There is a significant relationship among the hyperparameters in the Low-Rank Adaptation (LoRA) technique, particularly how they interact and influence each other to affect the adaptation and performance of the model. Understanding the interactions between these hyperparameters is crucial for effectively tuning the model to achieve desired behaviors and performance improvements. Here’s a detailed breakdown of the primary hyperparameters in LoRA and how they are interrelated:

- Rank and Scaling Factor: