Multi-GPU Parallelism

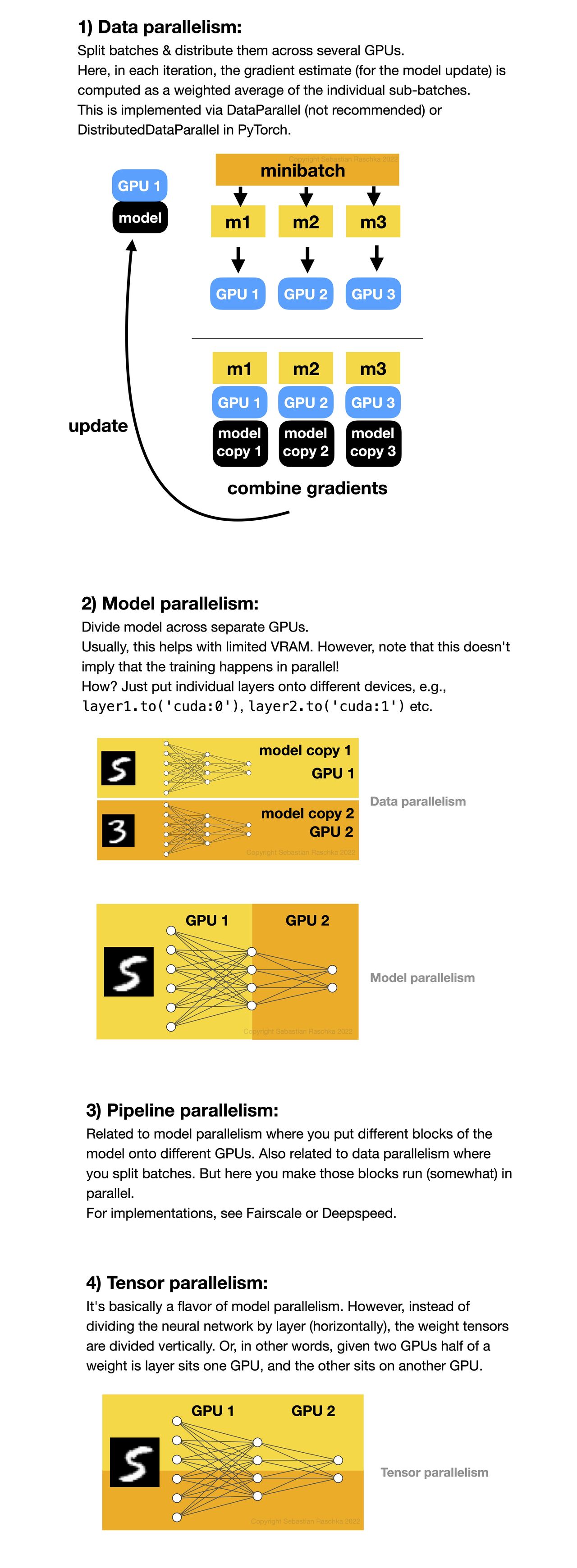

- Here is a quick overview of the four paradigms for multi-GPU training:

- Data parallelism

- Model parallelism

- Pipeline parallelism

- Tensor parallelism

- Credits to Sebastian Raschka for the infographic above.
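To make the four paradigms concrete, here is a minimal CPU-only sketch in plain Python. It assumes one common reading of each term (the infographic's exact definitions are not reproduced here), treats each "device" as an ordinary loop iteration, and uses a hypothetical two-layer linear model (`W1`, `W2`) with no activation function. All names in it are illustrative, not taken from any library. The point is only that each way of splitting the work reproduces the single-device result.

```python
# Toy illustration only: "devices" are simulated by loop iterations on the CPU.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def split(seq, parts):
    """Split a sequence into `parts` contiguous, equal-sized chunks."""
    size = len(seq) // parts
    return [seq[i * size:(i + 1) * size] for i in range(parts)]

# Hypothetical model: batch of 4 samples, layer 1 maps 2 -> 4, layer 2 maps 4 -> 1.
X = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
W1 = [[1.0, 0.0, 2.0, 1.0], [0.0, 1.0, 1.0, 2.0]]
W2 = [[1.0], [2.0], [3.0], [4.0]]

reference = matmul(matmul(X, W1), W2)  # single-device forward pass

def data_parallel(x, n_devices=2):
    """Every device holds a full model copy and processes one shard of the batch."""
    out = []
    for shard in split(x, n_devices):
        out.extend(matmul(matmul(shard, W1), W2))
    return out

def model_parallel(x):
    """Layers live on different devices; activations are handed from one to the next."""
    hidden = matmul(x, W1)      # "device 0" holds W1
    return matmul(hidden, W2)   # "device 1" holds W2

def pipeline_parallel(x, n_micro=2):
    """Same layer placement as model_parallel, but the batch is cut into micro-batches.

    On real hardware, device 1 could work on micro-batch 0 while device 0 starts
    micro-batch 1; this sketch runs the stages sequentially.
    """
    out = []
    for micro in split(x, n_micro):
        hidden = matmul(micro, W1)
        out.extend(matmul(hidden, W2))
    return out

def tensor_parallel(x, n_devices=2):
    """Each layer's weight matrix is itself sharded across devices.

    W1 is split by columns and W2 by matching rows, so every device produces a
    partial output; summing the partials plays the role of an all-reduce.
    """
    w1_col_shards = split(list(zip(*W1)), n_devices)  # groups of W1 columns
    w2_row_shards = split(W2, n_devices)
    partials = []
    for cols, rows in zip(w1_col_shards, w2_row_shards):
        w1_shard = [list(r) for r in zip(*cols)]      # back to row-major layout
        partials.append(matmul(matmul(x, w1_shard), rows))
    return [[sum(p[i][j] for p in partials) for j in range(len(partials[0][0]))]
            for i in range(len(x))]

if __name__ == "__main__":
    for fn in (data_parallel, model_parallel, pipeline_parallel, tensor_parallel):
        print(fn.__name__, fn(X) == reference)
```

The sketch covers only the forward pass. In actual data-parallel training the devices would also average their gradients after each backward pass, and real frameworks handle the communication that the loops here merely stand in for.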

Citation

If you found our work useful, please cite it as:

@article{Chadha2020DistilledModelCompression,
  title   = {Model Compression},
  author  = {Chadha, Aman},
  journal = {Distilled AI},
  year    = {2020},
  note    = {\url{https://aman.ai}}
}