Primers • Model Compression for On-Device AI

- Background

- Overview

- Quantization

- Background: Precision

- Background: Matrix Multiplication in GPUs

- Definition

- Types of Quantization

- Dequantization Considerations

- Quantization Workflows

- Benefits and Limitations

- Mitigation Strategies

- Weights vs. Activation Quantization

- Quantization with PyTorch

- Comparative Analysis

- Compute vs. Memory Bottlenecks

- Modern Quantization Techniques

- GPTQ: Quantization with Second-Order Error Compensation

- SmoothQuant

- Activation-Aware Weight Quantization (AWQ)

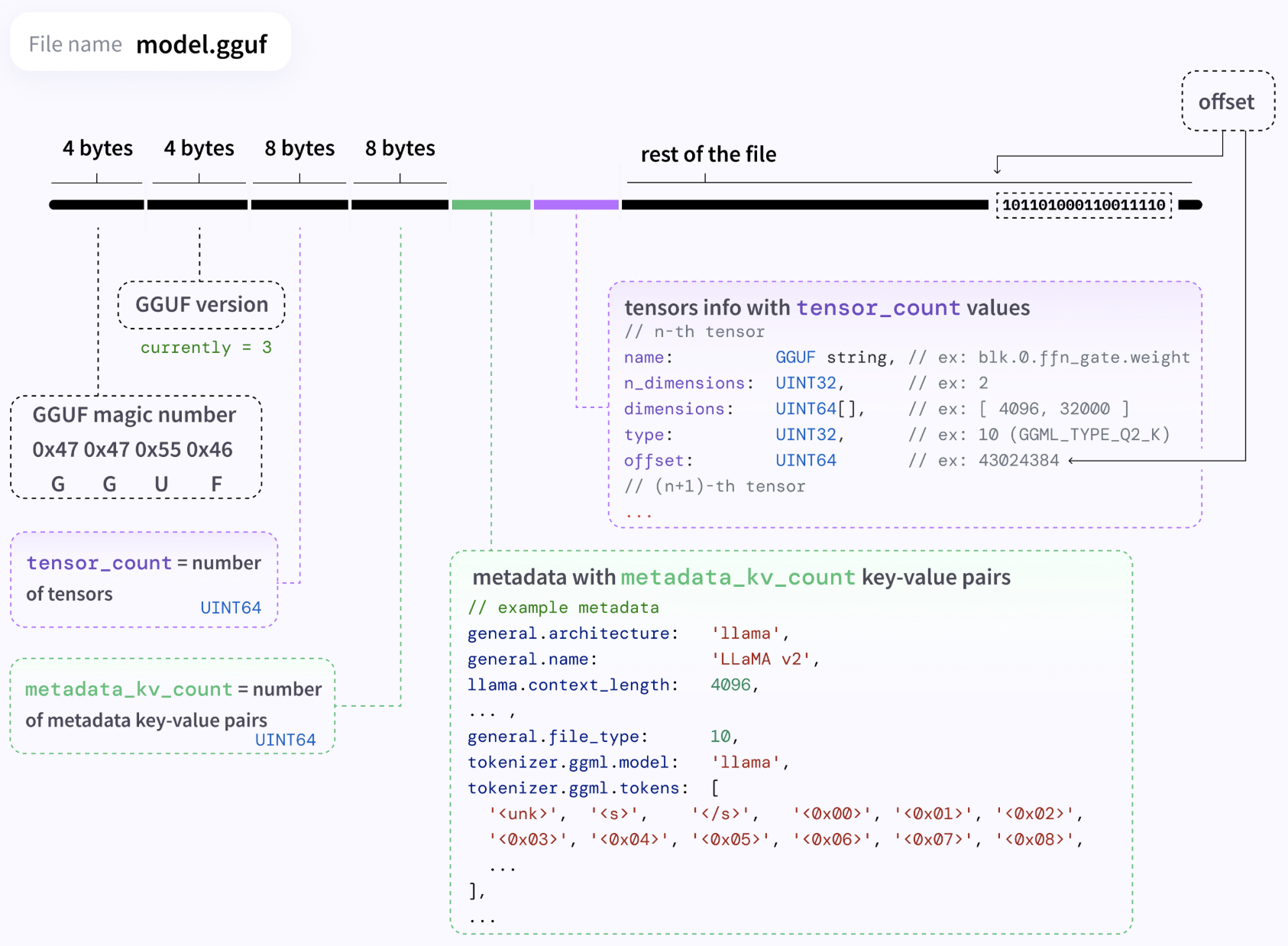

- GGUF Quantization (Legacy, K‑Quants, I‑Quants)

- AWEQ: Activation‑Weight Equalization

- EXL2 Quantization

- SpinQuant

- FPTQuant

- Palettization (Weight Clustering)

- What to Use When?

- Comparative Analysis

- Multimodal Quantization

- Why VLM Quantization Is More Complex

- Quantizing the Visual Backbone

- Quantizing the Language Backbone

- Cross-Modal Projection and Fusion Layer Quantization

- Quantization-Aware Training (QAT) in VLMs

- Calibration and Evaluation in VLMs

- Hybrid and Mixed-Precision Quantization

- Tooling Support

- Comparative Analysis of LLMs vs. VLM Quantization

- Device and Operator Support across Frameworks

- Choosing the right quantization approach

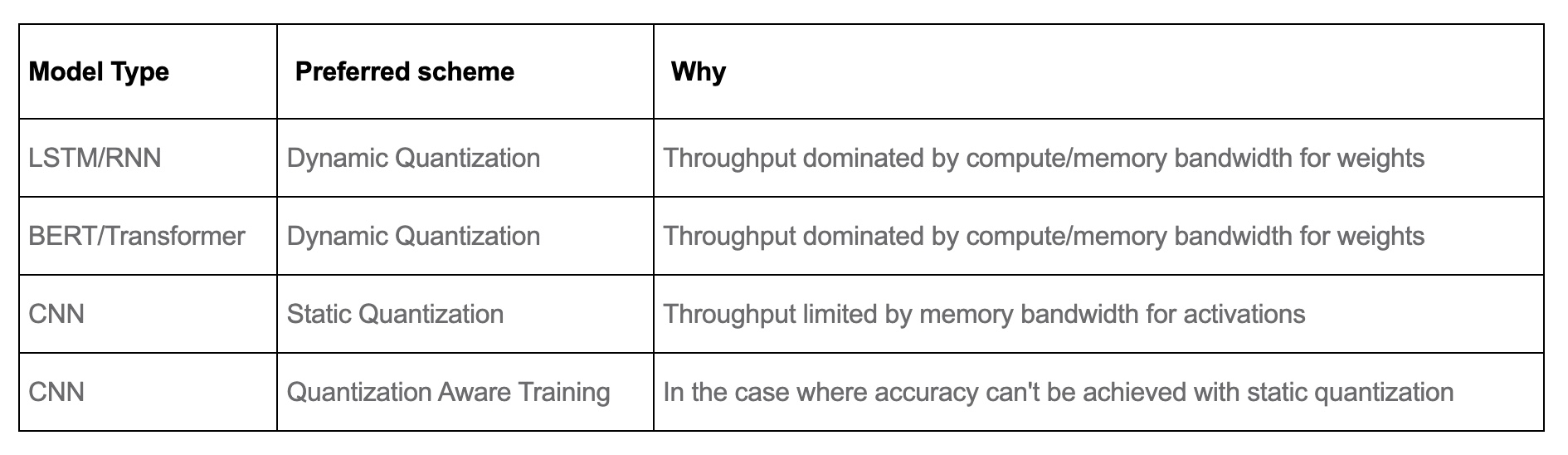

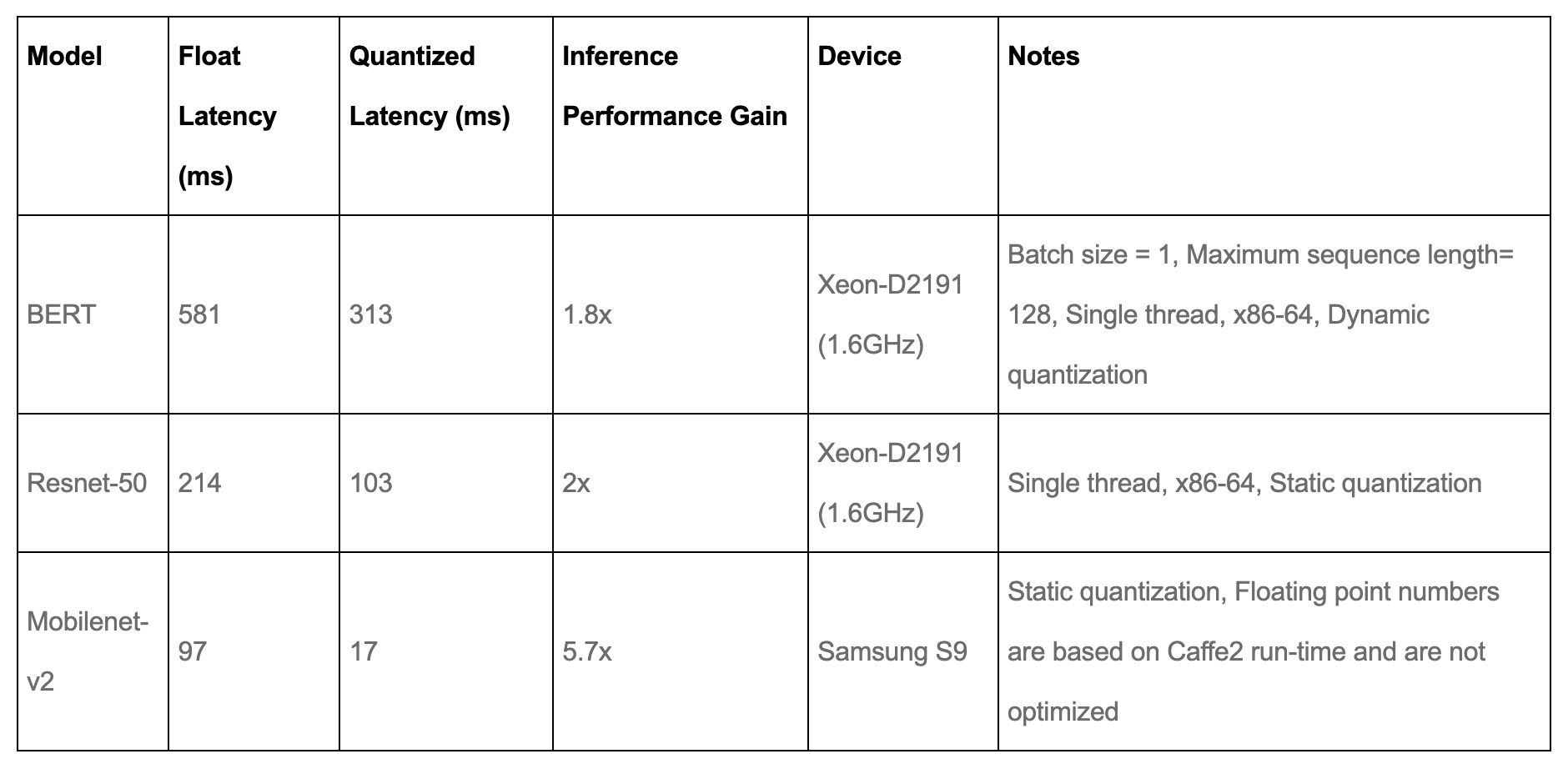

- Performance Results

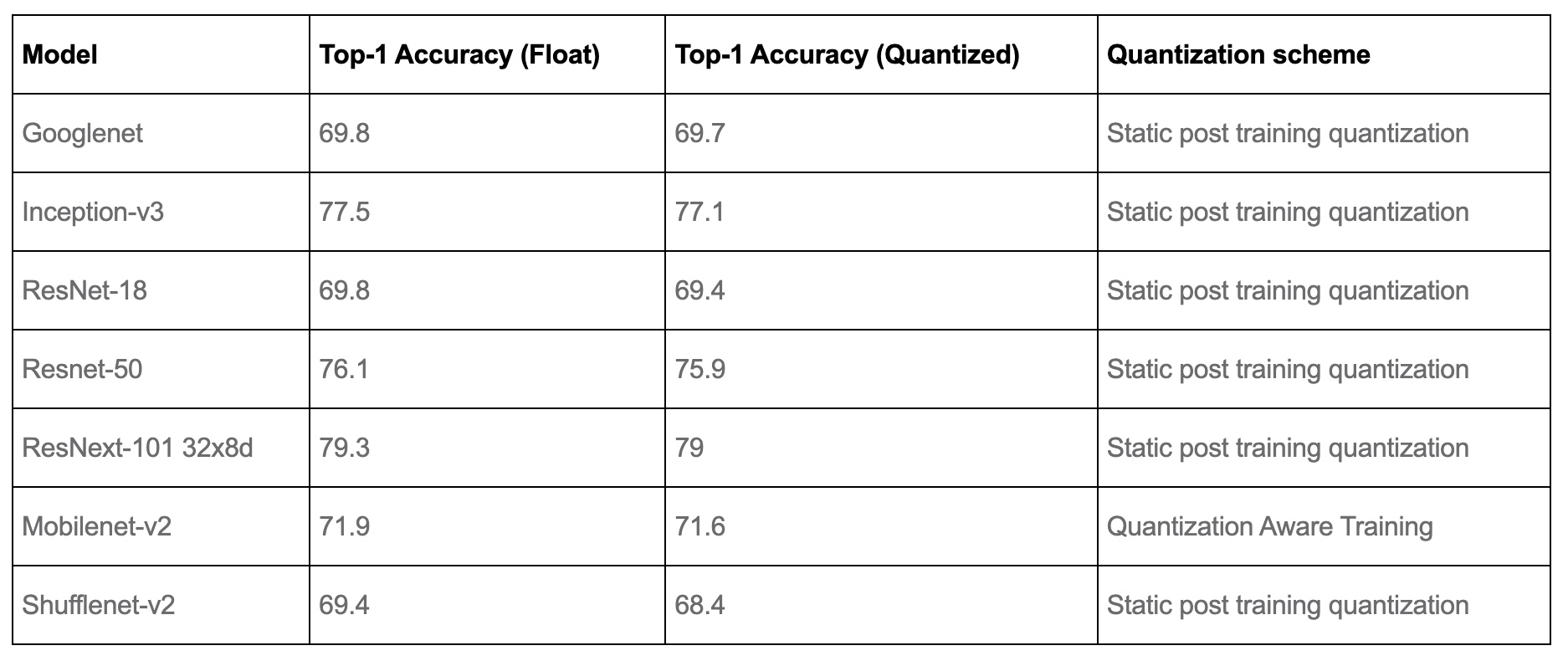

- Accuracy results

- Popular Quantization Libraries

- How Far Can Quantization Be Pushed?

- Further Reading

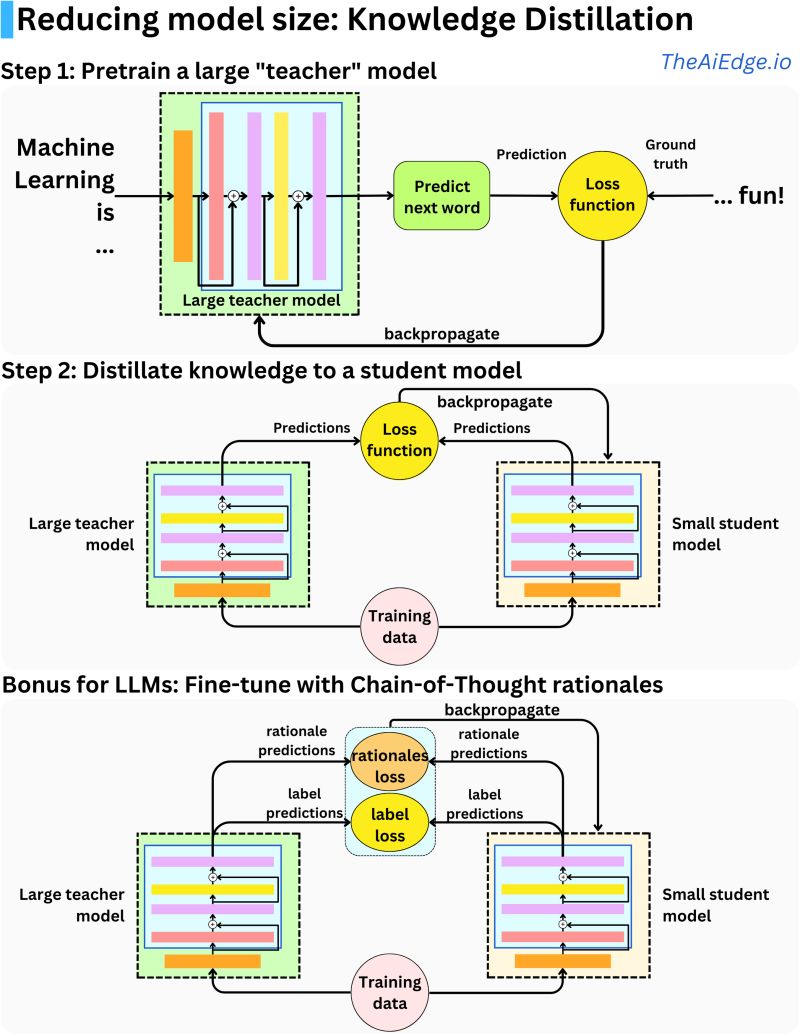

- Knowledge Distillation

- Mechanism

- Types of Knowledge Distillation

- Distillation Modes

- Why Use Knowledge Distillation Instead of Training Small Models from Scratch?

- Why Knowledge Distillation Works

- Distillation in Practice

- Reverse Distillation

- Weak Supervision via Distillation

- Compute vs. Memory Bottlenecks

- Limitations and Challenges

- Model Pruning

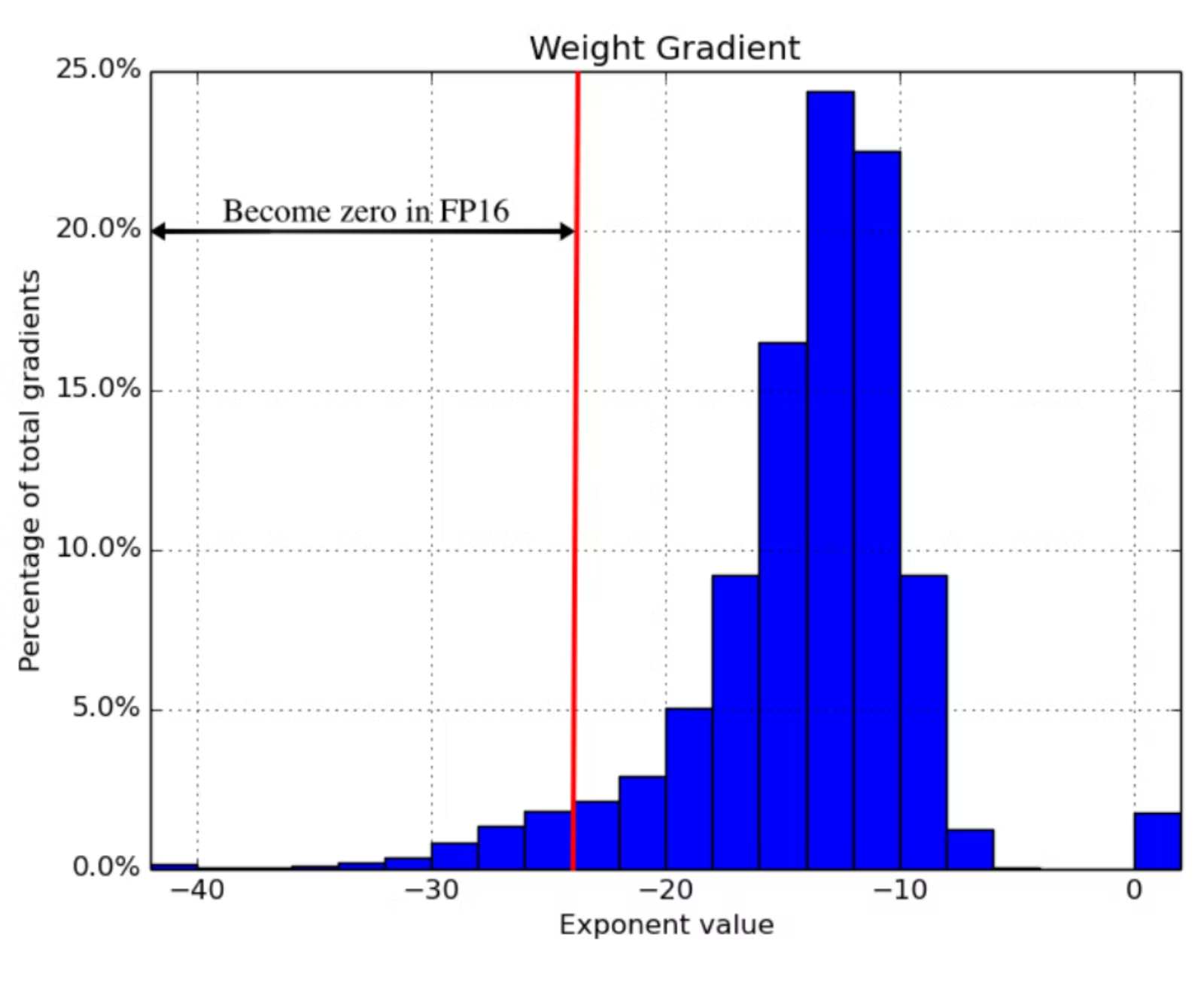

- Mixed Precision Training

- Low-Rank Decomposition & Adaptation

- Lightweight Model Design

- What to use When?

- Combining Model Compression Techniques

- Further Reading

- References

- Citation

Background

-

Modern generative models often contain between 100 billion to 1 trillion parameters. Since each parameter (as a 32-bit float) consumes 4 bytes, the memory footprint can scale from 400 GB to over 4 TB. This makes them prohibitively large for deployment on edge devices, where memory and compute resources are highly constrained. Furthermore, deploying machine learning models directly on edge devices such as smartphones, tablets, or embedded systems offers key advantages in privacy, latency, and user experience. On-device processing ensures that data remains local to the device, significantly reducing the risk of exposure from data transmission or centralized storage breaches. This is particularly critical for applications in computer vision and conversational AI, where interactions often involve personal or sensitive information.

-

However, the computational and memory demands of modern machine learning models—especially large language models—pose a major barrier to efficient on-device deployment. These models are typically too large and resource-intensive to run in real-time on devices with limited hardware capabilities. As models continue to scale in size and complexity, challenges such as increased latency, power consumption, and hardware constraints become even more pronounced.

-

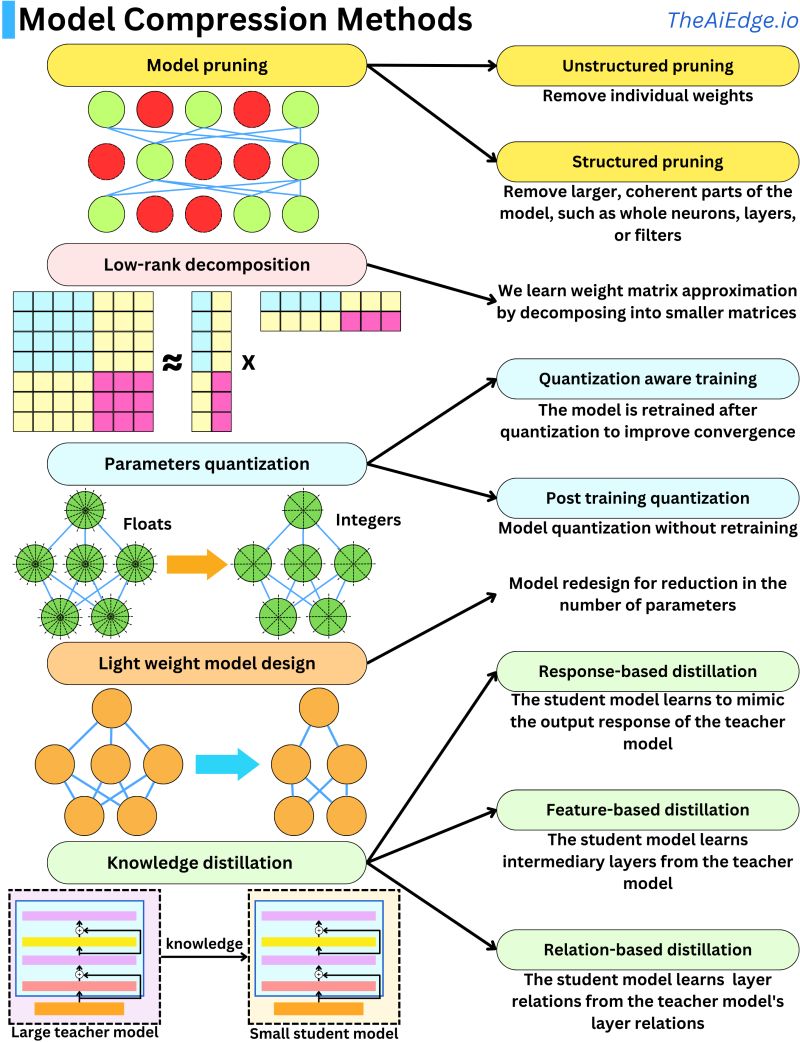

To address these limitations, a wide array of model compression and optimization techniques has been developed. These include model quantization (static, dynamic, and quantization-aware training), structured and unstructured pruning, knowledge distillation (response-based, feature-based, relation-based), low-rank decomposition, activation-aware quantization, operator fusion, and mixed precision training. Advanced post-training quantization methods such as AWQ, SmoothQuant, SpinQuant, AWEQ, and FPTQuant further push the boundaries of efficient model deployment.

-

Collectively, these techniques aim to reduce model size, lower computational complexity, and accelerate inference—without significantly compromising accuracy. By enabling smaller, faster, and more power-efficient models, these strategies make it increasingly feasible to run advanced AI applications directly on user devices, supporting both better privacy and smoother user experiences.

Overview

-

To enable on-device AI, a wide range of model compression techniques have been developed. Below, we visually and conceptually summarize the five core strategies widely used in industry and research.

- Model Quantization

-

Quantization reduces the precision of weights and activations, typically from 32-bit floats to 8-bit integers, yielding up to a 4× reduction in model size and significant speed-ups using optimized kernels.

- Post-training quantization applies precision reduction directly on a trained model and may include heuristic corrections (e.g., bias correction, per-channel scaling).

- Quantization-aware training (QAT) simulates quantization noise during training, allowing the model to adapt and maintain higher accuracy under reduced precision.

- Advanced quantization strategies like AWQ, SmoothQuant, and AWEQ refine post-training quantization by adjusting scaling factors or reweighting attention layers.

- A detailed discourse on this topic is available in the Quantization section.

-

- Knowledge Distillation

-

Knowledge distillation trains a compact student model to mimic a larger teacher model.

- Response-based distillation focuses on matching the output logits or probabilities.

- Feature-based distillation aligns intermediate representations between teacher and student.

- Relation-based distillation preserves inter-feature dependencies (e.g., attention maps).

- Distillation is often combined with other techniques (e.g., quantization or pruning) to maximize efficiency.

- A detailed discourse on this topic is available in the Knowledge Distillation section.

-

- Model Pruning

-

Pruning reduces model size by removing weights that have minimal impact on overall performance.

- Unstructured pruning eliminates individual weights based on their magnitude (e.g., L1/L2 norm) or gradient impact (e.g., first or second derivative of the loss).

- Structured pruning goes further by removing entire neurons, filters, or layers, which can directly lead to faster inference on hardware accelerators.

- In practice, pruning is often iterative: prune, retrain, evaluate, and repeat to recover performance loss.

- A detailed discourse on this topic is available in the Model Pruning section.

-

- Low-Rank Decomposition

-

Many neural networks, especially transformer-based architectures, contain large weight matrices that can be approximated as a product of smaller matrices.

- For example, an N×N matrix can often be replaced by two N×k matrices (with \(k << N\)), reducing space complexity from \(O(N^2)\) to \(O(N \cdot k\)).

- Methods like SVD (singular value decomposition) or CP decomposition are used here.

- In practice, fine-tuning the decomposed model is essential to restore original performance.

- A detailed discourse on this topic is available in the Low-Rank Decomposition section.

-

- Lightweight Model Design

-

Rather than compressing an existing model, lightweight design focuses on creating efficient architectures from the ground up.

- Examples include MobileBERT, DistilBERT, TinyBERT, and ConvNeXt-T, which use smaller embedding sizes, depth-wise separable convolutions, fewer transformer blocks, or other architectural efficiencies.

- Empirical design choices are often guided by NAS (Neural Architecture Search) or latency-aware loss functions.

- A detailed discourse on this topic is available in the Lightweight Model Design section.

-

- The illustration below (source) summarizes how these different compression methods contribute to reducing model size and enabling efficient deployment across platforms, including on-device scenarios.

Quantization

Background: Precision

-

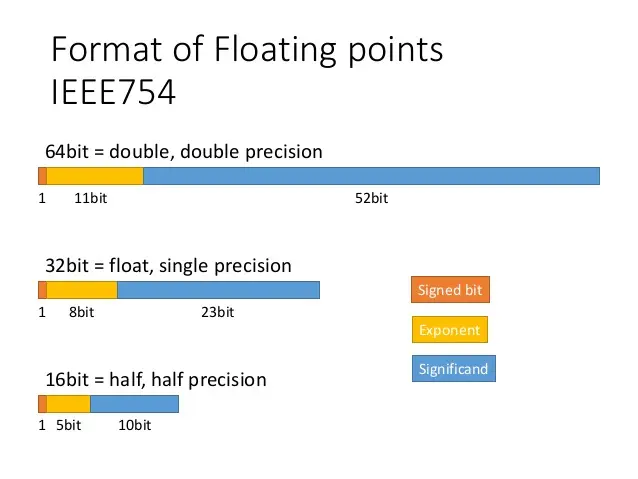

Before diving into quantization, it’s essential to understand precision—specifically, how computers represent decimal numbers like

1.0151or566132.8. Since we can conceive of infinitely precise values (like π), but only have limited space in memory, there’s a fundamental trade-off between precision (how many significant digits can be stored) and size (how many bits are used to represent the number). -

In computer engineering, these values are stored as floating point numbers, governed by the IEEE 754 floating point standard. This specification defines how bits are allocated to represent the sign, exponent, and mantissa (also called the significand, which holds the meaningful digits).

-

Floating point formats vary by their bit-width, and each level of precision has a different rounding error margin:

- Double-precision (

fp64) – 64 bits, max rounding error of approximately \(2^{-52}\). - Single-precision (

float32) – 32 bits, max rounding error of approximately \(2^{-23}\). - Half-precision (

float16) – 16 bits, max rounding error of approximately \(2^{-10}\).

- Double-precision (

-

For a deeper exploration, check out the PyCon 2019 talk: “Floats are Friends: making the most of IEEE754.00000000000000002”.

-

In practice:

- Python defaults to using

fp64for itsfloattype. - PyTorch, which is optimized for performance and memory efficiency, defaults to

float32.

- Python defaults to using

-

Understanding these formats is crucial when moving on to concepts like mixed precision training, where models leverage different floating point types to balance performance and accuracy.

-

Maarten Grootendorst’s A Visual Guide to Quantization offers a visuals to help you develop an intuition about the methodologies, use cases, and the principles behind quantization.

IEEE 754 Floating Point Standard

-

The IEEE 754 standard defines the binary representation of floating point numbers used in nearly all modern hardware and programming environments. A floating-point number is composed of three parts:

- Sign bit (\(S\)): 1 bit indicating positive or negative.

- Exponent (\(E\)): Encodes the range (scale) of the number.

- Mantissa or significand (\(M\)): Encodes the precision.

-

The general representation for a binary floating-point number is:

-

Each format—half, single, and double—allocates a different number of bits to these components, balancing precision and range against memory usage and compute requirements.

-

The following figure (source) shows the IEEE 754 standard formats for floating-point numbers, illustrating the bitwise layout of the signed bit, exponent, and significand across double (64-bit), single (32-bit), and half (16-bit) precision representations.

Half-Precision (float16)

- Bit layout: 1 sign bit, 5 exponent bits, 10 mantissa/significand bits.

- Total bits: 16

- Exponent bias: 15

- Dynamic range: Approximately \(6 \times 10^{-5}\) to \(6.5 \times 10^4\)

-

Half-precision is mostly used during inference, especially in low-power or memory-constrained environments such as mobile devices or embedded hardware. It offers limited range and precision, and is generally not suitable for training deep networks unless special care is taken (e.g., using loss scaling or

float32accumulations). -

GPU Considerations:

- Many GPUs, like NVIDIA’s Volta, Turing, Ampere, Hopper, Blackwell, include specialized hardware units called Tensor Cores optimized for

float16operations. float16can be processed at higher throughput thanfloat32, enabling significant speedups for matrix multiplications during inference.- Often paired with Mixed-Precision Training (MPT), where activations and weights are stored in

float16, but gradients are accumulated infloat32.

- Many GPUs, like NVIDIA’s Volta, Turing, Ampere, Hopper, Blackwell, include specialized hardware units called Tensor Cores optimized for

Single-Precision (float32)

- Bit layout: 1 sign bit, 8 exponent bits, 23 mantissa bits.

- Total bits: 32

- Exponent bias: 127

- Dynamic range: Approximately \(1.4 \times 10^{-45}\) to \(3.4 \times 10^{38}\)

-

float32is the default numerical format for most deep learning frameworks and hardware. It provides a good balance between precision and range, making it robust for both training and inference. -

GPU Considerations:

- Supported natively on all modern GPUs.

- Most general-purpose ALUs (Arithmetic Logic Units) on the GPU are designed to process

float32efficiently. - Slower than

float16orbfloat16in terms of throughput and power usage but more accurate.

Double-Precision (float64)

- Bit layout: 1 sign bit, 11 exponent bits, 52 mantissa bits.

- Total bits: 64

- Exponent bias: 1023

- Dynamic range: Approximately \(5 \times 10^{-324}\) to \(1.8 \times 10^{308}\)

float64is typically used in scientific computing, numerical simulations, and applications requiring high precision. It is rarely used in deep learning because its benefits are minimal for most ML tasks, while the compute and memory costs are high.

Brain Floating Point (bfloat16)

- Bit layout: 1 sign bit, 8 exponent bits, 7 mantissa bits

- Total bits: 16

- Exponent bias: 127 (same as

float32) - Dynamic range: Approximately \(1.2 \times 10^{-38}\) to \(3.4 \times 10^{38}\)

-

bfloat16(Brain Floating Point 16) was introduced by Google for training deep neural networks. Unlikefloat16, which reduces both exponent and mantissa bits,bfloat16keeps the same exponent width asfloat32(8 bits) but reduces the mantissa to 7 bits. -

This design retains the dynamic range of

float32, which makes it far more robust to underflow/overflow issues during training compared tofloat16. -

However, the precision is reduced, since fewer mantissa bits mean fewer significant digits are preserved. Despite this, it performs well in practice for training large models.

-

bfloat16is ideal for training tasks where:- High dynamic range is important

- Some loss of precision can be tolerated (e.g., in early layers or gradients)

- Lower memory and compute overhead is desired compared to

float32

-

It is commonly used in mixed-precision training, often with accumulations in

float32to improve numerical stability. -

Use cases:

- Large-scale model training (e.g., LLMs)

- TPUs (Google Cloud), and newer GPUs from NVIDIA, AMD, and Intel that support native

bfloat16ops

GPU/TPU Considerations

-

float16:

- Supported by specialized hardware units (Tensor Cores) in NVIDIA Volta, Turing, Ampere, Hopper, and Blackwell architectures.

- Offers higher throughput and lower memory usage, especially during inference.

- Typically used in mixed-precision training with

float32accumulations to maintain stability.

-

bfloat16:

- Natively supported on Google TPUs, NVIDIA Ampere and newer (e.g., A100, H100), Intel Habana Gaudi accelerators, and select AMD GPUs.

- Enables high dynamic range similar to

float32while halving memory usage. - Increasingly adopted in training large models where

float16may encounter stability issues. - Like

float16,bfloat16is also used in mixed-precision training pipelines.

-

float32:

- Universally supported across all GPU architectures.

- Offers the best balance between range and precision.

- Slower and more memory-intensive compared to

float16andbfloat16.

-

float64:

- Rare in deep learning; primarily used in scientific computing.

- Most GPUs support it at much lower throughput.

- Often omitted entirely from inference workloads due to cost.

Comparative Summary

| Format | Bits | Exponent Bits | Mantissa Bits | Bias | Range (Approx.) | GPU Usage |

|---|---|---|---|---|---|---|

| float16 | 16 | 5 | 10 | 15 | \(10^{-5}\) to \(10^{4}\) | Fast inference, mixed precision |

| bfloat16 | 16 | 8 | 7 | 127 | \(10^{-38}\) to \(10^{38}\) | Training + inference, high range |

| float32 | 32 | 8 | 23 | 127 | \(10^{-45}\) to \(10^{38}\) | Default for training |

| float64 | 64 | 11 | 52 | 1023 | \(10^{-324}\) to \(10^{308}\) | Rare in ML, slow on GPU |

- This foundation in floating point formats prepares us to understand quantization—where bit-widths are reduced even further (e.g., 8-bit, 4-bit, or binary)—to achieve efficient computation with minimal loss in model performance.

Background: Matrix Multiplication in GPUs

-

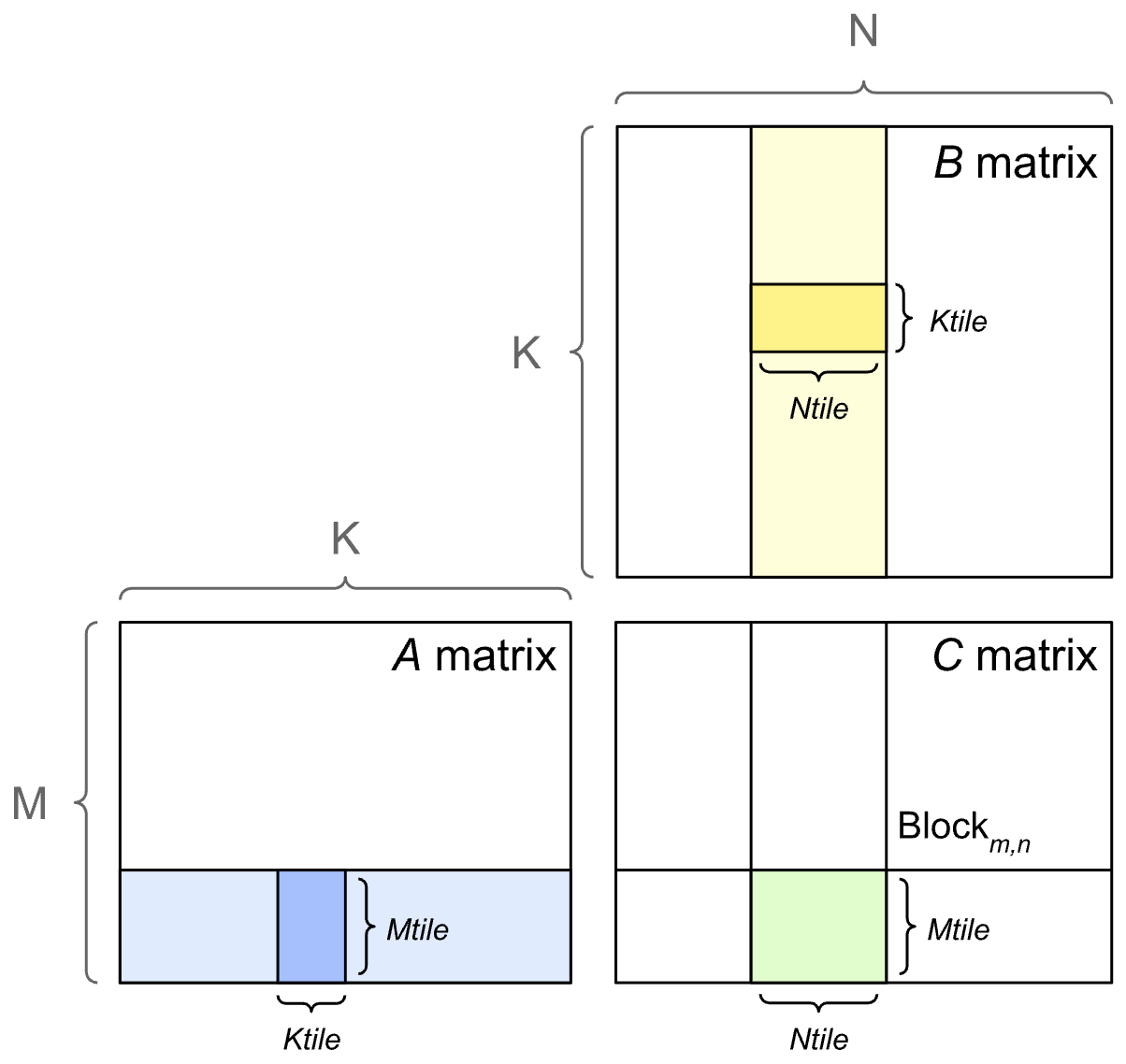

Efficient matrix multiplication is at the heart of modern deep learning acceleration on GPUs. This section provides a high-level view of how matrix-matrix multiplications are implemented and optimized on GPU hardware, with special focus on tiling, Tensor Cores, and the performance implications of quantization.

-

Matrix multiplications, especially General Matrix Multiplications (GEMMs), are a core computational primitive in deep learning workloads. Whether in fully connected layers, convolutions (via

im2col), or attention mechanisms, these operations are executed billions of times during training and inference. As such, optimizing GEMM performance is essential for efficient neural network execution, particularly on GPUs. -

To execute GEMMs efficiently, GPUs partition the output matrix into tiles. Each tile corresponds to a submatrix of the result and is computed by a thread block. The GPU steps through the input matrices along the shared dimension (K) in tiles, performing multiply-accumulate operations and writing the results into the corresponding tile of the output matrix. The illustration below (source) shows the tiled outer product approach to GEMMs.

-

Thread blocks are mapped to the GPU’s streaming multiprocessors (SMs), the fundamental compute units that execute instructions in parallel. Each SM can process one or more thread blocks concurrently, depending on the available resources and occupancy.

-

Performance in GPU matrix multiplication is often bounded by one of two factors:

- Compute (math) bound: When the arithmetic intensity (FLOPs per byte) is high enough that math operations dominate runtime.

- Memory bound: When the operation requires frequent memory access compared to math operations, limiting throughput.

-

Whether a given GEMM is compute- or memory-bound depends on the matrix dimensions (\(M, N, K\)) and the hardware’s characteristics. For example, matrix-vector products (where either \(M\) = 1 or \(N\) = 1) are typically memory-bound due to their low arithmetic intensity.

-

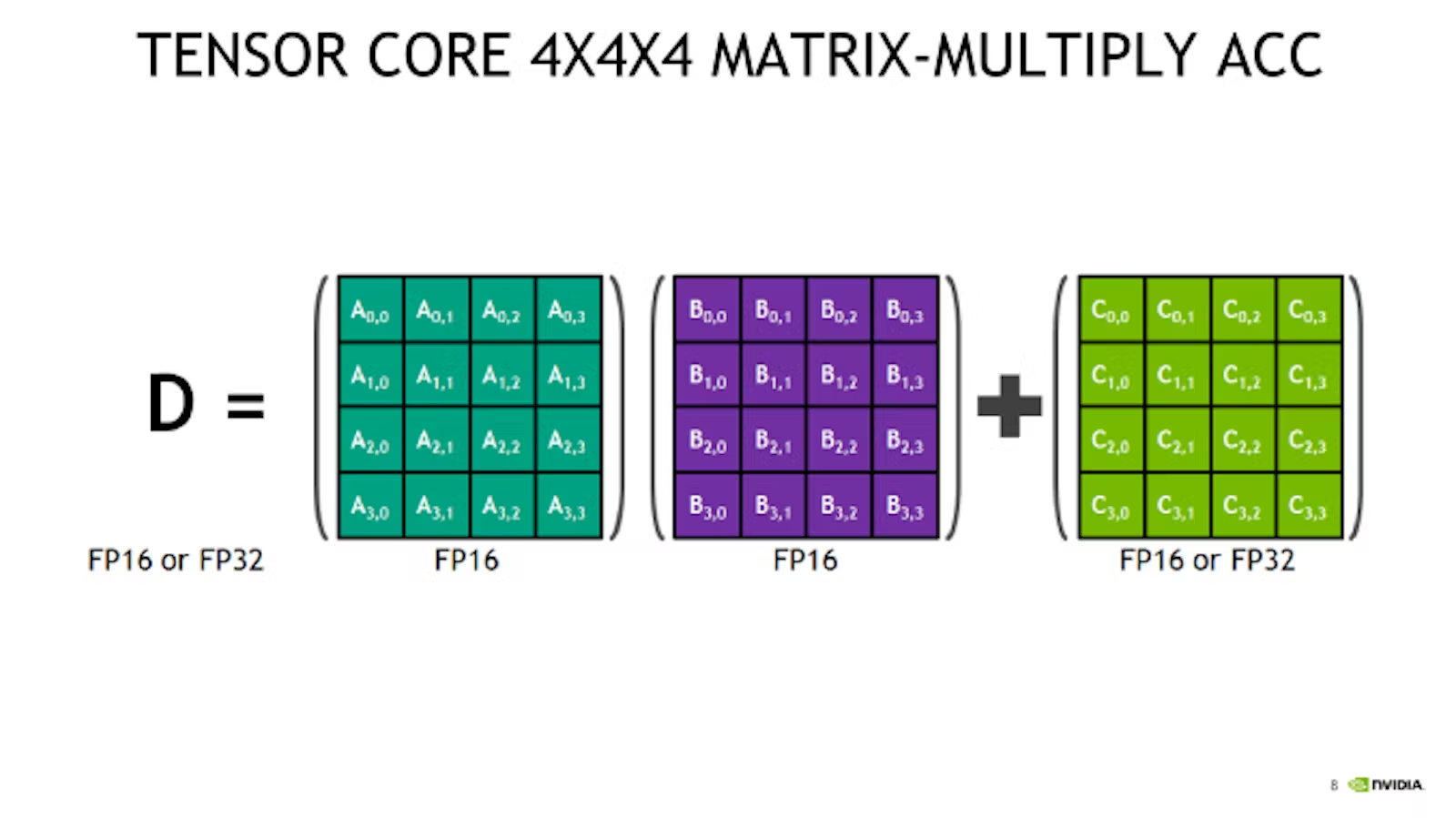

Modern NVIDIA GPUs include specialized hardware units called Tensor Cores, which are designed to accelerate GEMMs involving low-precision data types such as

float16,bfloat16, andint8. Tensor Cores perform small matrix multiplications in parallel and require that the matrices’ dimensions align with certain multiples (e.g., 8 forfloat16, 16 forint8) to achieve peak performance. For instance, on Ampere and newer architectures like Hopper or Blackwell, aligning dimensions to larger multiples (e.g., 64 or 128 elements) often yields even better throughput. -

Matrix dimensions that are not aligned to tile sizes lead to tile quantization, where some tiles carry less useful work, reducing efficiency. Similarly, if the total number of tiles is not an even multiple of the number of GPU SMs, wave quantization can cause underutilization. Both effects can significantly degrade performance despite identical algorithmic complexity.

-

To address this, libraries like cuBLAS employ heuristics or benchmarking to select optimal tile sizes, balancing between tile reuse (large tiles) and parallelism (many small tiles). Larger tiles tend to be more efficient due to better data reuse, but may reduce parallel occupancy on smaller problems.

-

In summary, matrix multiplication performance on GPUs is a delicate balance between compute, memory bandwidth, and architecture-aware tiling strategies. Quantization not only affects data representation but also interacts intricately with the underlying matrix multiplication engine and GPU efficiency.

Under-the-hood

- Modern GPUs are capable of performing numerical computations more efficiently using 16-bit or 8-bit formats—such as

float16,bfloat16, and the emergingfloat8,float6, andfloat4—with minimal loss in model performance. Mixed precision training strategically leverages these lower-precision formats to accelerate computation and reduce memory consumption, while preserving high-precision (float32) for numerically sensitive variables and operations to ensure convergence and model integrity (cf. numerical stability in the section on Mixed Precision Overview). - NVIDIA’s GPUs, from Volta onward, offer specialized hardware units known as Tensor Cores. These units are optimized for dense matrix operations and drastically improve throughput when leveraging reduced-precision data types. For developers using PyTorch, the

torch.cuda.ampmodule offers automatic mixed-precision training functionality, simplifying adoption with minimal code edits. This automates casting, loss scaling, and fallback to high precision where necessary, ensuring both performance and stability during training.

How Tensor Cores Work

-

Tensor Cores serve as specialized hardware designed to accelerate matrix multiplications—critical operations in forward and backward neural network passes. A standard Tensor Core can perform operations such as multiply-and-accumulate on small tiles of data (e.g., 4×4) in reduced-precision formats (e.g.,

float16,bfloat16), or more recent mixed-precision variants using integer types. -

Crucially, if model tensors remain in

float32in the absence of mixed-precision data handling, the Tensor Cores remain unused and the GPU fails to attain its full performance potential. Enabling automatic mixed precision is therefore essential to utilize these units effectively. -

In summary, as NVIDIA’s GPU microarchitectures have progressed from Volta through Blackwell, Tensor Cores have become increasingly versatile—offering a widening array of lower-precision formats and hardware optimizations. To fully exploit their capabilities, developers must adopt mixed precision training frameworks (such as AMP), ensuring that compute and memory resources are used optimally while preserving model fidelity.

-

Tensor Core architectures have evolved across NVIDIA’s GPU microarchitectures:

- Volta introduced first-generation Tensor Cores, supporting

float16matrix-multiply-accumulate (MMA) fused operations. - Turing brought second-generation Tensor Cores, adding support for

int8andint4operations, as well as warp-level synchronous MMA primitives and early AI applications such as DLSS. - Hopper (e.g., H100) features fourth-generation Tensor Cores with native

float8precision in the Transformer Engine, yielding up to 4× faster training on models such as GPT‑3 (175B) compared to previous Tensor Core models. - Blackwell advances to fifth‑generation Tensor Cores, introducing support for sub‑byte floating-point formats including

float4and FP6, alongsidefloat8/bfloat16/float16andint8support. These “Ultra Tensor Cores” incorporate micro‑tensor scaling techniques to fine-tune performance and accuracy—doubling attention-layer throughput and increasing AI FLOPs by 1.5× compared to earlier Blackwell versions.

- Volta introduced first-generation Tensor Cores, supporting

-

The following figure (source) depicts the fundamental computational pattern executed by a Tensor Core—a fused MMA on small matrix tiles, typically of size 4×4 in early architectures. In this operation, two input matrices (\(A\) and \(B\)), stored in a reduced-precision format such as

float16orbfloat16, are multiplied together. The results of these element-wise multiplications are then summed and accumulated directly into a third matrix (\(C\)), which may be stored in eitherfloat16,bfloat16,float32, or, in newer architectures,float8orfloat4. This fusion of multiplication and accumulation into a single hardware instruction eliminates the need to store intermediate results in memory, drastically reducing memory bandwidth requirements and increasing throughput. Larger GEMM (General Matrix Multiply) operations are implemented by tiling them into many such MMA operations executed in parallel across the GPU’s Tensor Cores.

Definition

- Quantization in the context of deep learning refers to the process of reducing the numerical precision of a model’s parameters (weights) and/or intermediate computations (activations). Typically, models are trained and stored using 32-bit floating-point (

float32) precision. Quantization replaces these high-precision values with lower-precision representations—such as 16-bit floating point (float16), 8-bit integer (int8), 4-bit integer, or binary formats in more extreme scenarios. The primary goals are to reduce model size, improve memory and compute efficiency, and accelerate inference—particularly on hardware that supports low-precision arithmetic. - The primary goal of quantization is to enhance inference speed. In contrast, as will be discussed in the section on Mixed Precision Training, the goal of Automatic Mixed Precision (AMP) is to reduce training time. Quantization is effective, in part, because modern neural networks are typically highly over-parameterized and exhibit robustness to minor numerical perturbations. With appropriate calibration and suitable tools, lower-precision representations can approximate the full-precision model closely enough for practical deployment.

Types of Quantization

-

There are two general categories of quantization:

-

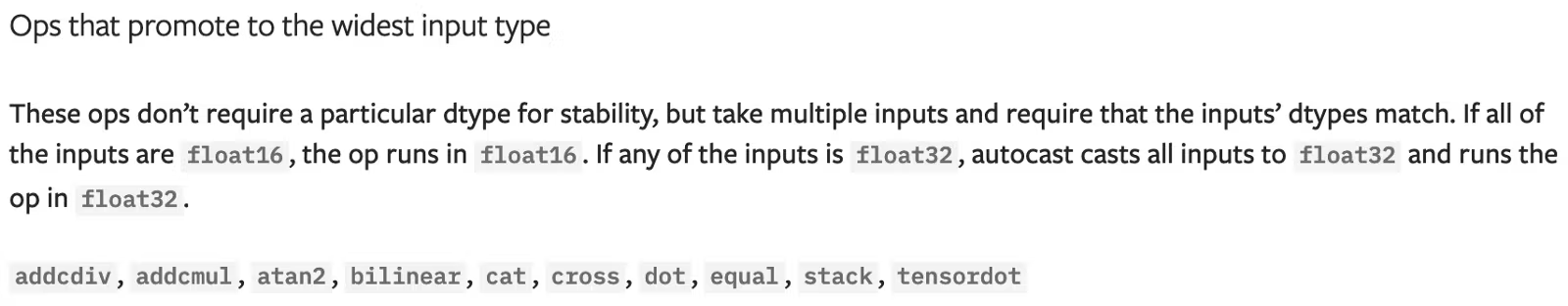

Floating-point quantization: This reduces the bit-width of floating-point values—for example, converting from

float32tofloat16orbfloat16. These formats retain the same general IEEE 754 structure (sign, exponent, mantissa) but use fewer bits, reducing precision and dynamic range. This kind of quantization is primarily used for inference on GPUs and accelerators optimized for low-precision floating-point math (e.g., NVIDIA Tensor Cores). -

Integer quantization: This maps floating-point values to fixed-point integer representations (e.g.,

int8oruint8). This type requires an additional transformation using scale and zero-point to linearly approximate real values using integers, enabling integer-only arithmetic during inference on CPUs and certain edge devices.

-

Integer Quantization

-

Integer quantization is typically implemented as a learned linear transformation (i.e., linear mapping) defined by two parameters: scale and zero-point.

-

The scale is a floating-point multiplier that determines the resolution or step size between adjacent quantized integer values.

-

The zero-point is an integer offset that aligns a real-valued zero to the corresponding integer value in the quantized (i.e., target) range. This allows for asymmetric distributions, where zero is not necessarily centered.

- The forward quantization formula (

floattoint) is:

q = round(x / scale) + zero_point- The reverse dequantization formula (

inttofloat) is:

x = scale * (q - zero_point) - The forward quantization formula (

-

As an example:

- Suppose we want to quantize floating-point values in the range \([-1.0, 1.0]\) to 8-bit unsigned integers (

uint8, range 0–255). The mapping would look like:

scale = (max - min) / (quant_max - quant_min) = (1.0 - (-1.0)) / (255 - 0) ≈ 0.00784 zero_point = round(0 - min / scale) = round(0 - (-1.0 / 0.00784)) = 128- This means that the floating-point value 0.0 maps to 128, 1.0 maps to 255, and -1.0 maps to 0. Intermediate values are linearly interpolated. This transformation enables low-bit integer operations that approximate floating-point behavior.

- Suppose we want to quantize floating-point values in the range \([-1.0, 1.0]\) to 8-bit unsigned integers (

-

Non-Linear Integer Quantization Methods

-

While linear quantization using scale and zero‑point is the most common, non‑linear quantization methods are also employed in integer quantization to better match real-world data distributions. These methods typically apply to integer quantization, as they redefine how integer values map to real numbers:

-

Logarithmic quantization uses exponentially spaced quantization levels—e.g. powers of two—providing better representation across a wide dynamic range. This method is non‑linear and particularly used for integer-only inference pipelines. It is not relevant for floating‑point quantization, which already uses a non‑uniform exponent-based scale inherently built into its representation.

-

K-means or cluster-based quantization groups floating-point values into clusters, mapping each to its centroid—another non-linear approach for integer quantization or weight sharing schemes.

-

Learned transformations, such as LSQ (Learned Step Size Quantization) and its non-uniform variant nuLSQ, optimize quantization step sizes or level spacing via backpropagation. These methods are applied to integer quantization of weights and activations (e.g., 2‑, 3‑, or 4‑bit integer quantization) and involve non-linear quantizer parameterization.

-

In summary, non-linear quantization techniques are relevant for integer quantization workflows, where they redefine integer mapping to better match value distributions. Floating‑point quantization (e.g., float32 \(\rightarrow\) float16/bfloat16), while structurally non-linear due to its exponent/mantissa hierarchy, does not employ these learned or clustering-based non-linear integer mapping schemes.

-

Floating-Point Quantization

- Floating-point quantization is implemented by truncating or rounding the mantissa and exponent fields in IEEE754 representation—e.g. conversion from

float32tofloat16orbfloat16—preserving the format structure but reducing bit-width. This form of quantization (i.e., bit‑width reduction) is non-linear in effect because the quantization steps vary by exponent range: numbers near zero have finer granularity than large values due to the floating-point exponent scaling. - This approach aligns with a well-known model in signal quantization theory often referred to as the compressor–quantizer–expander model. In this framework, the exponential scaling of floating-point numbers acts as a compressor that non-linearly maps real values into a domain where uniform quantization (truncation of mantissa bits) is applied. The quantizer then discretizes the mantissa (hidden quantizer), and the expander step reconstructs the approximate value from the compressed representation. This structure enables efficient representation of a wide dynamic range with relatively coarse quantization, especially benefiting smaller values close to zero.

- Common APIs include casting methods like

model.half()in PyTorch and PyTorch’s support forfloat16static quantization configs (e.g.float16_static_qconfig). Floating-point quantization halves memory footprint with minimal accuracy loss on GPU inference platforms.

Dequantization Considerations

- Dequantization is not always needed during inference, and its necessity depends on the type of quantization and the underlying hardware. In integer-only quantization pipelines—commonly used for inference on mobile CPUs or edge devices—computations are performed entirely in the integer domain (e.g.,

int8oruint8), and dequantization is typically only applied at the final stage, such as for logits or output activations. This avoids floating-point operations altogether during inference.- However, in hybrid quantization workflows, where some layers are quantized (e.g., to

int8) and others remain in higher precision (float32orfloat16), intermediate dequantization is required at the layer boundaries to enable compatibility between quantized and non-quantized components. This is common in models that cannot fully tolerate quantization across all layers due to accuracy degradation or unsupported ops. - In contrast, when quantizing to lower-precision floating-point formats like

float16, dequantization is not needed at all, because these formats are still natively supported by GPU hardware. For example, NVIDIA Tensor Cores are optimized forfloat16(andbfloat16) matrix operations, so models usingfloat16quantization can be executed directly end-to-end without converting back tofloat32. All computations remain in low-precision floating-point format, maintaining performance while avoiding the complexity of dequantization logic entirely.

- However, in hybrid quantization workflows, where some layers are quantized (e.g., to

Quantization Workflows

-

There are three main workflows/approaches to apply quantization:

-

Dynamic / Runtime Quantization: This method quantizes model weights statically (e.g. to

int8orfloat16), while activations remain in full precision until runtime, where they are quantized dynamically at each inference step right before computation. It requires no calibration dataset and no fine‑tuning, making it the easiest quantization method provided by PyTorch. It is particularly effective for models dominated by weight‑heavy layers such astorch.nn.Linear, recurrent layers (nn.LSTM,nn.GRU), and transformers. In PyTorch, this is implemented via the functionquantize_dynamic, for example:import torch quantized_model = torch.ao.quantization.quantize_dynamic( model_float32, {torch.nn.Linear, torch.nn.LSTM}, dtype=torch.qint8 )- With this approach, the quantized model is memory‑efficient and can accelerate inference for NLP architectures, often with negligible accuracy loss compared to PTQ—though with lower benefit on convolution‑heavy vision models.

- Look up the Dynamic / Runtime Quantization section for a detailed discourse on this topic.

-

Post-Training Quantization (PTQ): Converts a fully trained high-precision model to a lower-precision format without retraining. PTQ typically uses a calibration dataset to compute appropriate

scaleandzero-pointvalues using strategies like min-max range or percentile clipping. It is simple to use and well-supported in frameworks such as TensorFlow Lite and PyTorch, but may incur accuracy loss—particularly for sensitive or activation-heavy layers.- From the TensorFlow post-training quantization documentation:

We generally recommend 16-bit floats for GPU acceleration and 8-bit integer for CPU execution.

- This reflects hardware preferences:

float16enables faster matrix multiplications on GPU accelerators like Tensor Cores, whileint8is more efficient on CPU architectures with dedicated integer units such as integer SIMD extensions (e.g., AVX, NEON, VNNI). - Look up the Post-Training Quantization section for a detailed discourse on this topic.

-

Quantization-Aware Training (QAT): In QAT, quantization effects—specifically the non-linearity introduced by rounding and clipping—are simulated during training, allowing the model to adapt. The model behaves as though it operates in lower precision during the forward pass, using the quantization formula above with fake quantization modules (e.g.,

FakeQuantizein PyTorch ortf.quantization.fake_quant_with_min_max_varsin TensorFlow). These modules apply quantization and dequantization logic using scale and zero-point. However, backpropagation remains in full precision. In other words, gradients and parameter updates are still computed using fullfloat32precision. This allows the model to adapt to quantization-induced noise, often resulting in better accuracy retention compared to PTQ. QAT is especially useful when targeting very low-bit formats (such as 4-bit or lower) or when quantizing sensitive components like attention layers in transformers or deploying models in high-accuracy applications.- Look up the Quantization‑aware Training (QAT) section for a detailed discourse on this topic.

-

Benefits and Limitations

- Quantization can lead to significant reductions in efficiency, both in terms of memory footprint and computational load. For example, converting

float32weights toint8reduces storage requirements by 75%, and on supported hardware, can improve inference speed by 2× to 4×. These benefits are amplified on edge devices with limited memory, power, and compute such as mobile phones, IoT sensors, embedded processors, and accelerators that support low-precision execution.

While quantization offers compelling advantages, it can also suffer from practical limitations. Quantization might not work uniformly well across all architectures or layers since some operators (powering these layers and architectures) might not be supported in quantized form on all hardware targets. Put simply, some hardware can lack native support for certain quantized operators, since they typically have to be implemented individually. Operators like group convolutions, custom layers, normalization approaches (say LayerNorm), etc. may fall back to

float32or require custom low-level kernels, potentially limiting compatibility or efficiency on target platforms. Lastly, layers with small value ranges, heavy outliers, or complex nonlinear interactions may require higher precision (e.g.,float16orfloat32) to avoid accuracy degradation.

Mitigation Strategies

-

Selective strategies like per-channel, per-group, per-layer, per-tensor, and mixed-precision quantization are commonly used to mitigate limitations such as accuracy loss, hardware incompatibilities, and uneven value distributions across layers.

-

Per-channel quantization (also referred to as channel-wise quantization): This approach assigns separate scale and zero-point values to each output channel of a weight tensor. It is particularly effective in convolutional and linear layers where each output channel (or filter) may have significantly different weight distributions. By capturing channel-wise variations in magnitude, it provides better quantization accuracy, especially in vision models like ResNet, MobileNet, and EfficientNet, as well as transformer-based architectures such as BERT and GPT. PyTorch implements this using the

torch.per_channel_affinequantization scheme. -

Per-group quantization (also referred to as group-wise quantization): A compromise between per-tensor and per-channel quantization. Channels are divided into groups, with each group sharing quantization parameters. This reduces the overhead of storing separate scale/zero-point values for every channel, while still preserving more distributional information than per-tensor quantization. It is particularly useful in resource-constrained deployment scenarios where memory and compute costs must be balanced against accuracy. Though not always exposed via high-level APIs in frameworks like PyTorch, this strategy is supported in certain hardware accelerators and vendor-specific toolchains (e.g., Qualcomm, MediaTek, Xilinx).

-

Per-layer quantization (also referred to as layer-wise quantization): This applies the same quantization parameters (scale and zero-point) across an entire layer’s output or weight tensor. For example, all outputs of a linear layer or all weights of a convolution kernel are quantized using a single shared set of parameters. This method is computationally efficient and requires minimal additional metadata, making it widely used in low-resource settings or for fast prototyping. However, it often leads to higher quantization error in layers with highly varied internal distributions.

-

Per-tensor quantization (also referred to as tensor-wise quantization): A special case of per-layer quantization where quantization is applied uniformly across an entire tensor (typically weight or activation). A single scale and zero-point are calculated for the full tensor, regardless of dimensionality or channel boundaries. This is the simplest and most lightweight quantization method, requiring minimal bookkeeping and fast execution. While effective for layers with narrow and uniform value ranges, it can result in significant information loss when used on tensors with wide dynamic range or uneven channel statistics. This method is often the default in early-stage quantization workflows or on hardware that does not support fine-grained schemes.

-

Mixed-precision quantization: Instead of applying uniform quantization across all model layers, mixed-precision quantization selectively retains higher-precision (e.g.,

float32orfloat16) computation in layers that are sensitive to quantization noise—such as attention heads, layer normalization, or output classifiers. Other layers, particularly early convolution blocks or MLPs, can be safely quantized toint8or lower. This approach enables developers to achieve a favorable trade-off between accuracy and efficiency. Mixed-precision is supported by most modern inference engines including TensorRT, TVM, XNNPACK, and PyTorch FX Graph Mode Quantization.

Weights vs. Activation Quantization

-

Why they’re not the same: Weights and activations have fundamentally different roles and constraints during quantization.

-

Weights are static once training completes. Since they do not vary across inputs, they may be quantized offline using fixed calibration data or analytically via heuristics such as min‑max scaling or percentile statistics. This allows more aggressive optimization techniques such as per‑channel quantization or non‑uniform quantization, and values may be precomputed and stored in compact low‑precision formats like

int8,int4, or binary. -

Activations, by contrast, are dynamic and input‑dependent. Their value ranges vary based on data processed during inference. Therefore, activation quantization must accommodate runtime variability. Two common modes exist:

-

Static Activation Quantization: Calibration datasets estimate typical activation ranges (min/max or histogram‑based) per layer. These statistics are then used to assign fixed scale/zero‑point pairs for quantized representation, using observers such as

MinMaxObserveror histogram‑based observers. -

Dynamic Activation Quantization: During inference, scale and zero‑point values are computed on‑the‑fly from input‑dependent statistics (e.g. dynamic min/max per batch). This avoids calibration datasets but may add latency due to runtime computation.

-

-

-

Handling outliers: Outliers in activation or weight distributions can drastically degrade quantization quality by skewing range and reducing effective precision. Several mitigation strategies include:

-

Percentile‑based Clipping: Rather than using absolute min/max, activations may be clipped to a percentile‑based range (e.g. 99.9%) to discard extreme outliers. Techniques include KL divergence minimization or MSE‑based clipping, used in frameworks such as PyTorch or TensorFlow Lite.

-

Per‑Channel Quantization: Instead of applying a single scale across a tensor, per‑channel quantization assigns unique scale and zero‑point values per output channel. This adapts to local distribution variations, particularly in convolutional or linear layers. In PyTorch, this is implemented via PerChannelMinMaxObserver or similar observers using

torch.per_channel_affineschemes. Core functions includetorch.quantize_per_channelandtorch.fake_quantize_per_channel_affineto simulate quantization.-

Per‑channel quantization is especially effective in:

- Convolutional neural networks (e.g. ResNet, MobileNet, EfficientNet, YOLO), where filter/channels have distinct distribution ranges.

- Transformer architectures (e.g. multi‑head attention or feed‑forward layers), where weight and activation distributions vary widely across heads or projection layers.

-

-

Learned Scale Factors: Methods like Learned Step Size Quantization (LSQ), introduced in the paper “Learned Step Size Quantization” by Esser et al. (2020), enable scale parameters to be optimized via backpropagation during fine‑tuning. This adaptive scaling is especially beneficial in models with skewed distributions—such as transformer-based language models, where operations like softmax produce skewed outputs.

-

-

Advanced Weight Handling:

-

Per‑Group Quantization: Instead of full per‑channel (i.e. one scale per channel), per‑group quantization assigns one scale per group of channels (e.g. N channels or per row). This balances granularity and memory overhead and is prevalent in formats like ONNX or TensorRT.

-

Activation‑Aware Weight Quantization (AWQ): AWQ techniques tailor weight quantization ranges and groupings using activation patterns observed during calibration. Rather than uniformly quantizing weights, these methods use sensitivity analysis to allocate bit budgets or adjust grouping for performance‑critical weights.

-

Zero‑Point Optimization: For symmetric quantization (typically weight tensors), zero‑point is fixed at zero. For asymmetric quantization—commonly used for activations—the zero‑point shifts the quantized range. Some frameworks (e.g. ONNX, TensorFlow Lite) allow fine‑grained control over zero‑point alignment, which influences both accuracy and hardware compatibility.

-

-

In practice, weight and activation quantization are applied jointly but with distinct parameter sets and calibration workflows. Modern toolkits support fine-grained configuration, including:

- PyTorch’s torch.quantization and torch.ao.quantization modules;

- TensorFlow Model Optimization Toolkit (quantization guide) supporting calibration APIs and quantization-aware training;

- NVIDIA TensorRT, which enables layer-wise quantization, quantization-aware training, and PTQ via its TensorRT SDK documentation and the Torch‑TensorRT Model Optimizer;

- Intel Neural Compressor, an open‑source framework offering post‑training static, dynamic, and quantization‑aware training workflows for PyTorch and TensorFlow (Intel Neural Compressor documentation).

Effective quantization requires balancing statistical rigor, hardware compatibility, and architecture sensitivity. Activations require runtime awareness, while weights benefit from static optimization—and both may leverage learned or adaptive scaling to maintain fidelity in low‑bit regimes.

Quantization with PyTorch

-

Quantization in PyTorch enables the execution of computations and memory accesses with reduced-precision data types, typically

int8, leading to improvements in model efficiency, inference speed, and memory footprint. PyTorch provides comprehensive support for quantization, starting from version 1.3, through an API that integrates seamlessly with the existing eager execution model. -

Quantized Tensor Representation

-

PyTorch introduces special data types for quantized tensors, enabling the representation of weights and activations in reduced precision (typically

int8, and sometimesfloat16). These tensors can be operated on via quantized kernels available undertorch.nn.quantizedandtorch.nn.quantized.dynamic. These quantized operations allow for a 4× reduction in model size and 2-4× improvements in memory bandwidth and inference latency, depending on the hardware and model structure. -

Quantization in PyTorch relies on calibration, which is the process of gathering statistics on representative inputs to determine optimal quantization parameters (such as scale and zero-point). These parameters are used in quantization functions of the form

round(x / scale) + zero_point, enabling a linear mapping between floating point and integer domains.

-

-

Quantization Backends

- PyTorch leverages optimized backend libraries to execute quantized operations efficiently. FBGEMM (Facebook’s GEMM library) is optimized for server environments (x86 CPUs), while QNNPACK is designed for mobile and embedded environments. These are analogous to BLAS/MKL libraries in floating-point computation and are integrated automatically based on the target deployment platform.

-

Numerical Stability and Mixed Precision

- One challenge in quantization is maintaining numerical stability, particularly for operations involving accumulation or exponentiation. To address this, PyTorch supports mixed-precision training and inference using the

torch.cuda.ampmodule. AMP (Automatic Mixed Precision) allows portions of the model to be cast totorch.float16while retainingtorch.float32for operations requiring higher precision, improving performance with minimal loss of accuracy. Although initially introduced for CUDA GPUs, mixed-precision techniques are distinct from quantization but can be complementary in certain scenarios.

- One challenge in quantization is maintaining numerical stability, particularly for operations involving accumulation or exponentiation. To address this, PyTorch supports mixed-precision training and inference using the

-

Quantization Techniques in PyTorch

-

PyTorch provides three primary quantization workflows under the

torch.quantizationnamespace, often referred to collectively as “eager mode quantization”: - Dynamic Quantization:

-

Weights are statically quantized and stored in int8 format, while activations are dynamically quantized at runtime before computation. This method requires minimal code changes and no calibration data. It is most effective for models dominated by linear layers (e.g., LSTM, GRU, Transformer-based models).

-

Example:

torch.quantization.quantize_dynamic(model, {torch.nn.Linear}, dtype=torch.qint8) -

- Post-Training Quantization:

-

Both weights and activations are quantized. This approach requires calibration, where representative input data is passed through the model to collect statistics via observer modules. Operator fusion (e.g., Conv + ReLU) and per-channel quantization are supported to improve performance and accuracy.

-

Example sequence:

model.qconfig = torch.quantization.get_default_qconfig('fbgemm') torch.quantization.prepare(model, inplace=True) # Run calibration with representative data torch.quantization.convert(model, inplace=True) -

- Quantization-Aware Training (QAT):

-

This technique inserts fake-quantization modules during training, simulating quantization effects in both forward and backward passes. It typically yields the highest post-quantization accuracy, especially in cases where model accuracy is sensitive to quantization noise.

-

Example sequence:

model.qconfig = torch.quantization.get_default_qat_qconfig('fbgemm') torch.quantization.prepare_qat(model, inplace=True) # Train model torch.quantization.convert(model.eval(), inplace=True) -

-

-

Operator and Layer Coverage

- Quantization support varies by method. Dynamic quantization supports layers like Linear and RNNs, while static and QAT methods support a broader set including Conv, ReLU, BatchNorm (via fusion), and more. FX Graph Mode Quantization (a newer, graph-level approach not covered here) further expands operator support and streamlines workflows.

-

For additional guidance and end-to-end examples, refer to the official PyTorch blog: Introduction to Quantization on PyTorch.

Dynamic / Runtime Quantization

-

Dynamic quantization is one of the most simple quantization techniques in PyTorch, particularly suitable for models where most computation occurs in linear layers—such as transformer models (e.g. BERT) or recurrent networks (e.g. LSTM)—because these operations are dominated by matrix multiplications, which benefit significantly from

int8acceleration without requiring quantized convolutions. -

In dynamic quantization, model weights are converted from 32-bit floating point (

float32) to a lower precision format such asint8and are permanently stored in this quantized form. Activations, however, remain infloat32format until runtime. At inference time, these activations are dynamically quantized toint8immediately before the corresponding computation (i.e., matrix multiplication or linear operation) is executed. After the operation, the result is stored back infloat32. This hybrid approach enables significant performance gains—such as reduced latency and memory usage—while maintaining reasonable model accuracy.

The aim of dynamic quantization is thus to save compute through faster arithmetic, rather than primarily to reduce storage needs.

-

Unlike static quantization or quantization-aware training (QAT), dynamic quantization requires no calibration dataset or retraining. This makes it ideal when representative data is unavailable or ease of deployment is paramount.

-

Quantization parameters (scale and zero-point) for activations are determined dynamically at each invocation based on the input data range, while weights use fixed scale and zero-point values computed ahead of time. As such, since only the model weights are quantized ahead of time, while activations remain in

float32and are quantized dynamically at runtime based on input data, dynamic quantization is often referred to as a data-free or weight-only quantization method during preparation. -

PyTorch provides the API

torch.ao.quantization.quantize_dynamic(...)for applying dynamic quantization:- A model (

torch.nn.Module) - A specification of target layer types or names (commonly

{nn.Linear, nn.LSTM}) - A target dtype (e.g.,

torch.qint8).

- A model (

-

Only supported layer types are quantized—primarily

nn.Linearand RNN variants; convolutional layers (e.g.,nn.Conv2d) are not supported by dynamic quantization. -

This approach is particularly effective for transformer and RNN models, where inference throughput is limited by memory-bound weight matrices. For example, quantizing BERT with dynamic quantization often yields up to 4× reduction in model size and measurable speedups in CPU inference latency.

Dynamic Quantization vs. Post-Training Quantization

- Unlike Post-Training Quantization, dynamic quantization does not use minmax observers or any calibration mechanism. Activation ranges are computed dynamically at runtime based on actual input data, so no observers are required during model preparation.

Example Workflow

- Below is an example illustrating a typical dynamic quantization workflow:

import torch

from torch import nn

from torch.ao.quantization import quantize_dynamic

# Assume `model` is a pretrained floating‑point nn.Module, in eval mode

model.eval()

# Apply dynamic quantization to Linear and LSTM layers

quantized_model = quantize_dynamic(

model,

{nn.Linear, nn.LSTM},

dtype=torch.qint8

)

# Run inference: activations will be quantized at runtime

input_data = torch.randn(batch_size, seq_length, feature_dim)

output = quantized_model(input_data)

Workflow Explanation

model.eval()ensures deterministic behavior during quantization.quantize_dynamic(...)replaces supported layers with their dynamic quantized implementations.- Activations remain in

float32until needed. - At runtime, activations are quantized to

int8on-the-fly, and computations are performed withint8weights and mixed precision accumulators. - After the operation, results return to

float32.

Typical Benefits

- Model size reduced by ~75%, thanks to

int8weights. - Latency improvements, especially on CPU-bound operations.

- No calibration or fine-tuning required.

Notes & Trade‑offs

- Dynamic quantization does not support convolution or custom layers unless manually wrapped.

- Dynamic quantization handles input distributions that vary widely more gracefully than static quantization, which uses fixed calibration ranges.

- For CNN models or workloads where activations must also be quantized ahead of time, static quantization or QAT may yield better performance and accuracy.

Further Reading

- A comprehensive end-to-end tutorial for dynamic quantization on BERT is available here.

- For a more general example and advanced usage guide, see the dynamic quantization tutorial.

- The full API documentation for

torch.quantization.quantize_dynamicis available here.

Post-Training Quantization

-

Post-Training Quantization (PTQ) is a technique in PyTorch that enables the conversion of a model’s weights and activations from floating-point (typically

float32) to 8-bit integers (int8), significantly improving inference efficiency in terms of speed and memory usage. This method is particularly well-suited for deployment scenarios on both server and edge devices, where latency and resource constraints are critical. -

To facilitate this process, PyTorch inserts special modules known as observers into the model. These modules capture the activation ranges at various points in the network. Once sufficient data has been passed through the model during calibration, the observers record min-max values or histograms (depending on the observer type), which are then used during quantization.

-

A key benefit of static quantization is that it allows quantized values to be passed between operations directly, eliminating the need for costly float-to-int and int-to-float conversions at each layer. This optimization significantly reduces runtime overhead and enables end-to-end execution in

int8. -

PyTorch also supports several advanced features to further improve the effectiveness of static quantization:

-

Observers:

- Observer modules are used to collect statistics on activations and weights during calibration. These can be customized to suit different data distributions or quantization strategies. PyTorch provides default observers like

MinMaxObserverandHistogramObserver, and users can register them via the model’sqconfig. - Observers are inserted using

torch.quantization.prepare.

- Observer modules are used to collect statistics on activations and weights during calibration. These can be customized to suit different data distributions or quantization strategies. PyTorch provides default observers like

-

Operator Fusion:

- PyTorch supports the fusion of multiple operations (e.g., convolution + batch normalization + ReLU) into a single fused operator. This reduces memory access overhead and improves both runtime performance and numerical stability.

- Modules can be fused using

torch.quantization.fuse_modules.

-

Per-Channel Weight Quantization:

- Instead of applying the same quantization parameters across all weights in a layer, per-channel quantization independently quantizes each output channel (particularly in convolution or linear layers). This approach improves accuracy while maintaining the performance benefits of quantization.

- Final conversion to the quantized model is done using

torch.quantization.convert.

-

Post-Training Quantization vs. Dynamic Quantization

- Unlike dynamic quantization, which quantizes activations on-the-fly during inference, static quantization requires an additional calibration step. This calibration involves running representative data through the model to collect statistics on the distribution of activations. These statistics guide the quantization process by determining appropriate scaling factors and zero points for each tensor.

- Put simply, in PTQ, while weights are quantized ahead of time, activations are quantized using calibration data collected via observers, enabling fully quantized inference across all layers.

Example Workflow

- Below is an example illustrating a typical PTQ workflow:

import torch

import torch.quantization

# Step 1: Define or load the model

model = ... # assume a pre-trained model is loaded

# Step 2: Set the quantization configuration

# Choose backend depending on target device

model.qconfig = torch.quantization.get_default_qconfig('qnnpack') # for ARM/mobile

# model.qconfig = torch.quantization.get_default_qconfig('fbgemm') # for x86/server

# Step 3: Fuse modules (e.g., Conv + BN + ReLU)

model_fused = torch.quantization.fuse_modules(model, [['conv', 'bn', 'relu']])

# Step 4: Insert observer modules

model_prepared = torch.quantization.prepare(model_fused)

# Step 5: Calibrate the model with representative data

# For example, run a few batches of real or synthetic inputs

model_prepared(example_batch)

# Step 6: Convert to a quantized model

model_quantized = torch.quantization.convert(model_prepared)

- This static quantization pipeline can yield 2× to 4× speedups in inference latency and a 4× reduction in model size, with minimal degradation in accuracy when calibrated effectively.

Workflow Explanation

-

After the example workflow, here is a breakdown of each step and its purpose:

- Model Preparation: The model must be in eval mode (

model.eval()) so that observers and quantization stubs function deterministically. Depending on the backend,model.qconfig = torch.ao.quantization.get_default_qconfig('x86')or'qnnpack'sets the appropriate quantization configuration. - Operator Fusion: Use

torch.quantization.fuse_modulesto merge modules like Conv‑BatchNorm‑ReLU into a single fused operator. This improves numerical stability and reduces redundant quant‑dequant steps. - Observer Insertion: Invoke

torch.quantization.prepareto automatically insert observer modules (e.g.,MinMaxObserver). These record activation statistics during the calibration phase. - Calibration: Run representative real-world input data through the prepared model to collect min/max or histogram statistics via observers. Approximately 100‑200 mini‑batches often suffice for good calibration.

- Conversion to Quantized Model: Use

torch.quantization.convertto replace observed layers with quantized counterparts, applying pre-determined scales and zero points. The resulting model executes end‑to‑end inint8arithmetic.

- Model Preparation: The model must be in eval mode (

Typical Benefits

- Model size is typically reduced by ≈4× (since

int8requires only 1 byte per parameter instead of 4) and memory bandwidth requirements drop significantly. - Inference latency improves—often 2× to 4× faster than float32—by eliminating repeated float‑int conversions and enabling optimized integer kernels on CPU and mobile.

- Enables uniform quantized execution across the network, which improves cache locality and enables hardware acceleration on supported platforms.

Notes & Trade‑offs

- Requires a representative calibration dataset. If the input distribution drifts significantly, fixed quantization ranges may degrade accuracy over time.

- Slight accuracy loss compared to floating‑point baseline—although typically small (~1‑2%)—especially on highly non-linear or sensitive models. For critical accuracy use‑cases, Quantization Aware Training may be more suitable.

- Not all operators are supported for eager/static quantization. While convolution, linear, and RNN layers are supported, custom or unsupported layers may need manual handling or fallbacks. Per-channel quantization support is available, but requires proper qconfig settings.

- The quantization workflow in PyTorch uses either Eager Mode or FX Graph Mode. FX mode can automate fusion and support functional operators, but may require model refactoring. Eager Mode offers more manual control but with limited operator coverage.

Quantization‑aware Training (QAT)

-

QAT is the most accurate among PyTorch’s three quantization techniques for static quantization. With QAT, all weights and activations are subject to “fake quantization” during both forward and backward passes: values are rounded to simulate

int8quantization, while computations remain in floating‑point. Consequently, weight updates occur with full awareness that the model will eventually operate inint8. As a result, models trained with QAT generally achieve higher post‑quantization accuracy than those produced by post‑training quantization or dynamic quantization. -

The principle is straightforward: the training process is informed about the ultimate quantized inference format. During training, activations and weights are rounded appropriately, so gradient flow reflects the quantization effects. However, the backpropagation itself—the gradient descent—is executed using full‑precision arithmetic.

-

After QAT training and conversion, the final model stores both weights and activations in the

int8quantized format, making it suitable for efficient inference on quantization-compatible hardware. -

To implement QAT in PyTorch’s eager‑mode workflow, one typically follows these steps:

- Fuse suitable modules (e.g. Conv+ReLU, Conv+BatchNorm) via

torch.quantization.fuse_modules. - Insert

QuantStubandDeQuantStubmodules to manage quantization boundaries. - Assign

.qconfigto modules—e.g. viatorch.quantization.get_default_qat_qconfig('fbgemm')or'qnnpack'. - Prepare the model using

torch.ao.quantization.prepare_qat()ortorch.quantization.prepare_qat(). - Train or fine‑tune the model in training mode.

- After training, apply

torch.ao.quantization.convert()ortorch.quantization.convert()to produce the fully quantizedint8model.

- Fuse suitable modules (e.g. Conv+ReLU, Conv+BatchNorm) via

-

A code snippet in PyTorch invoking QAT:

qat_model.qconfig = torch.quantization.get_default_qat_qconfig('fbgemm')

torch.quantization.prepare_qat(qat_model, inplace=True)

# train or fine‑tune qat_model ...

quantized_model = torch.quantization.convert(qat_model.eval(), inplace=False)

- The fake quantization modules, which simulate the effects of quantization during both forward and backward passes, internally use observers (e.g.,

MinMaxObserverorHistogramObserver) to track activation and weight ranges during training. These fake quantization modules, which are inserted during training, are typically replaced with real quantized operators in the converted model.

Quantization‑aware Training vs. Post-Training Quantization vs. Dynamic Quantization

- Note that unlike dynamic quantization (which only quantizes weights statically and activations on-the-fly during inference), QAT simulates quantization for both weights and activations during training. This allows the model to learn parameters that are robust to quantization-induced errors introduced at inference time. Put simply, it allows the parameters to adapt to quantization noise during inference and typically results in significantly better accuracy, especially for models with activation-sensitive layers such as convolutional networks.

- Furthermore, unlike post-training quantization, QAT does not require a separate calibration phase after training. Instead, it uses the observer modules during training itself to learn and track the necessary quantization parameters, effectively integrating calibration into the training loop.

Example Workflow

- This sub‑section illustrates a complete workflow for applying static QAT to a convolutional neural network (e.g. ResNet18):

import torch

import torch.nn as nn

import torch.quantization

from torch.quantization import QuantStub, DeQuantStub

class MyModel(nn.Module):

def __init__(self):

super().__init__()

self.quant = QuantStub()

self.conv = nn.Conv2d(3, 16, kernel_size=3, stride=1, padding=1)

self.bn = nn.BatchNorm2d(16)

self.relu = nn.ReLU()

self.fc = nn.Linear(16*32*32, 10)

self.dequant = DeQuantStub()

def forward(self, x):

x = self.quant(x)

x = self.relu(self.bn(self.conv(x)))

x = x.flatten(1)

x = self.fc(x)

x = self.dequant(x)

return x

# 1. Load pre‑trained or float‑trained model

model = MyModel()

model.eval()

# 2. Fuse conv, bn, relu

torch.quantization.fuse_modules(model, [['conv','bn','relu']], inplace=True)

# 3. Attach QAT config

model.qconfig = torch.quantization.get_default_qat_qconfig('fbgemm')

# 4. Prepare QAT

torch.quantization.prepare_qat(model, inplace=True)

# 5. Fine‑tune QAT model

model.train()

# run training loop for several epochs ...

# 6. Convert to quantized model

quantized_model = torch.quantization.convert(model.eval(), inplace=False)

# 7. Evaluate quantized_model for accuracy and inference performance

Workflow Explanation

- This workflow enforces quantization effects during training by simulating rounding and clamping via fake quantization modules.

QuantStubandDeQuantStubdemarcate where data transitions between float and quantized domains.qconfigcontrols observer placement and quantization schemes (e.g. symmetric vs affine, per‑tensor vs per‑channel). - Fake quantization is active during training, guiding the network to adapt to the constraints of int8 inference arithmetic. Only after fine‑tuning does

convert()replace fake quant modules with actual quantized operators for efficientint8inference execution.

Typical Benefits

- Higher quantized accuracy than post‑training static or dynamic quantization, often reducing performance degradation to minimal levels.

- Improved robustness to quantization noise, particularly important for convolutional networks and vision models.

- Retains compression benefits: reduced model size (≈25% of float model) and faster inference on hardware optimized for

int8.

Notes & Trade‑offs

- QAT requires additional training or fine‑tuning, increasing overall development time.

- Careful scheduling is needed: a small learning rate is recommended to avoid instability introduced by straight‑through estimator (STE) approximations.

- Model preparation steps such as layer fusion and correct placement of quant stubs are critical. Missing fusions can degrade accuracy.

- Not all operators or model architectures are fully quantization‑aware; some require manual adaptation.

- The quantized model behavior may differ subtly from the fake‑quant version: as reported, the output of a real quantized model may diverge slightly from fake‑quant during testing on toy models.

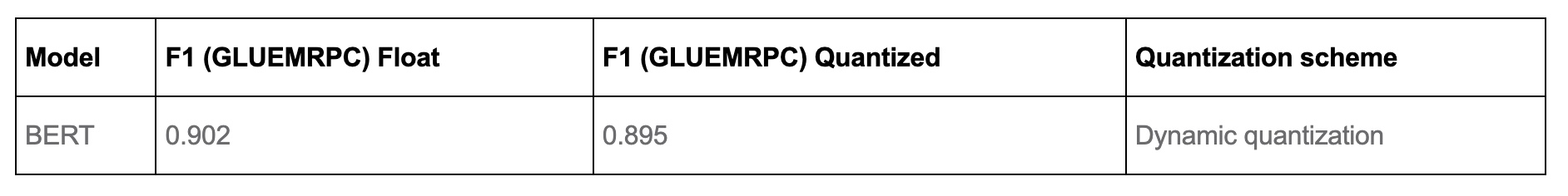

Comparative Analysis

- Here is a detailed comparative analysis of Dynamic Quantization, PTQ, and QAT:

| Aspect | Dynamic / Runtime Quantization | Post-Training Quantization (PTQ) | Quantization-Aware Training (QAT) |

|---|---|---|---|

| Primary Use Case | Fast, easy quantization of models with primarily linear operations (e.g. LSTM, BERT) | Quantization of convolutional or more complex models with moderate accuracy tradeoff | High-accuracy quantization for models sensitive to quantization (e.g. CNNs, object detectors) |

| Requires Retraining? | No | No | Yes |

| Requires Calibration Data? | No | Yes (to collect activation statistics) | No separate calibration; statistics are collected during training |

| When Activations Are Quantized | At runtime (dynamically before each operation) | Statically using observer statistics from calibration | During training using fake quantization modules |

| Quantization of Weights | Done ahead of time (static) | Done ahead of time (static) | Simulated during training, finalized during conversion |

| Quantization of Activations | Dynamically quantized at inference time | Statically quantized using calibration ranges | Simulated via fake quantization during training |

| Typical Accuracy | Moderate loss (acceptable for linear-dominant models) | Slight to moderate loss | Minimal loss; best accuracy among all methods |

| Complexity of Setup | Very low | Moderate | High |

| Computation Format During Training | Full float32 | Full float32 | Simulated int8 via fake quantization in float32 |

| Final Inference Format | int8 weights, float32 activations outside ops | int8 weights and activations | int8 weights and activations |

| Deployment Readiness | Easy and quick to apply, suitable for rapid deployment | Requires calibration workflow | Requires full training pipeline |

| Main Benefit | Faster inference via int8 compute; no data or retraining needed | Reduced latency and memory with moderate setup | Maximum accuracy with full int8 inference |

| Target Operators | Mostly linear layers (e.g. nn.Linear, nn.LSTM) |

Broad operator support with fused modules (e.g. Conv+ReLU) |

Full model coverage with operator fusion and training |

| Memory Footprint Reduction | Partial (activations still float32) | Full (weights and activations in int8) | Full (weights and activations in int8) |

| Primary Optimization Goal | Compute efficiency (faster matmuls), leading to latency savings | Latency and memory savings | Accuracy preservation under quantization |

| Example Usage | torch.quantization.quantize_dynamic |

prepare, calibrate, convert |

prepare_qat, train, convert |

Compute vs. Memory Bottlenecks

-

Deep learning performance is typically constrained by one of two primary bottlenecks: compute (arithmetic throughput) or memory (bandwidth and capacity). The balance between them depends on the hardware architecture, the model’s structure, and the numerical precision used.

-

Compute-Bound Workloads:

-

A workload is compute-bound when the GPU/CPU spends most of its time performing arithmetic operations rather than waiting for data from memory. This is common in:

- Large matrix multiplications with high arithmetic intensity (high FLOPs-to-bytes ratio).

- Dense layers and convolution layers with large channel counts and large kernel sizes.

- Transformer attention mechanisms with large batch sizes or long sequence lengths.

-

In compute-bound scenarios, lowering the precision of operands (e.g.,

float32\(\rightarrow\)float16orint8) allows hardware to execute more operations per clock cycle. For example:- NVIDIA Tensor Cores can deliver up to 2×–4× the throughput for

float16orbfloat16GEMMs compared tofloat32. - Integer accelerators (e.g.,

int8SIMD or systolic arrays) can achieve even higher gains, especially on CPUs or edge NPUs.

- NVIDIA Tensor Cores can deliver up to 2×–4× the throughput for

-

By reducing the number of bits per operand, quantization directly increases the number of multiply-accumulate operations that can be executed in parallel within the same silicon area and clock period.

-

-

Memory-Bound Workloads:

-

A workload is memory-bound when the processor spends more time fetching/storing data than performing arithmetic. This is common when:

- The layer has small arithmetic intensity, such as pointwise operations or small matrix-vector products.

- Batch sizes are small, reducing the amount of computation per data load.

- Model parameters or activations exceed on-chip cache capacity, forcing frequent DRAM access.

-

Memory-bound operations are limited by memory bandwidth and latency rather than raw compute throughput. Here, quantization helps by:

- Reducing memory footprint: Lower precision reduces the size of weights and activations (e.g.,

float32\(\rightarrow\)int8cuts memory use by 75%). - Improving cache locality: More parameters fit in L1/L2 cache or shared memory, reducing expensive DRAM fetches.

- Increasing effective bandwidth: Smaller data transfers mean more elements can be moved per memory transaction.

- Reducing memory footprint: Lower precision reduces the size of weights and activations (e.g.,

-

On many edge devices, memory-bound layers see the largest relative speedups from quantization because external DRAM bandwidth is a critical bottleneck.

-

-

Mixed Bottleneck Scenarios:

-

Many real-world models contain both compute-bound and memory-bound regions:

- Early convolution layers in vision models often run close to peak compute throughput, benefiting most from Tensor Core–accelerated low-precision compute.

- Later layers with smaller spatial dimensions but large channel counts may become memory-bound, benefiting more from reduced memory bandwidth pressure than raw FLOP gains.

- Transformer feed-forward layers can be compute-bound, while embedding lookups or normalization layers can be memory-bound.

-

In such cases, mixed-precision quantization can optimize each region separately—keeping sensitive, low-intensity operations in higher precision while aggressively quantizing compute-heavy layers.

-

-

Where Quantization Delivers the Most Impact:

-

Quantization is most impactful when:

- The model runs on hardware with specialized low-precision units (Tensor Cores,

int8MAC units,float8engines). - Memory bandwidth is a limiting factor (common in mobile SoCs, edge AI chips, or when serving many inference requests in parallel).

- Model size exceeds cache capacity, leading to frequent DRAM access.

- Deployment constraints demand both latency and memory footprint reductions (e.g., real-time inference on embedded systems).

- The model runs on hardware with specialized low-precision units (Tensor Cores,

-

-

In summary, quantization addresses compute bottlenecks by enabling more operations per cycle and memory bottlenecks by reducing data transfer volume and improving cache utilization. Understanding which bottleneck dominates for a given layer or model is key to selecting the right quantization strategy.

Modern Quantization Techniques

GPTQ: Quantization with Second-Order Error Compensation

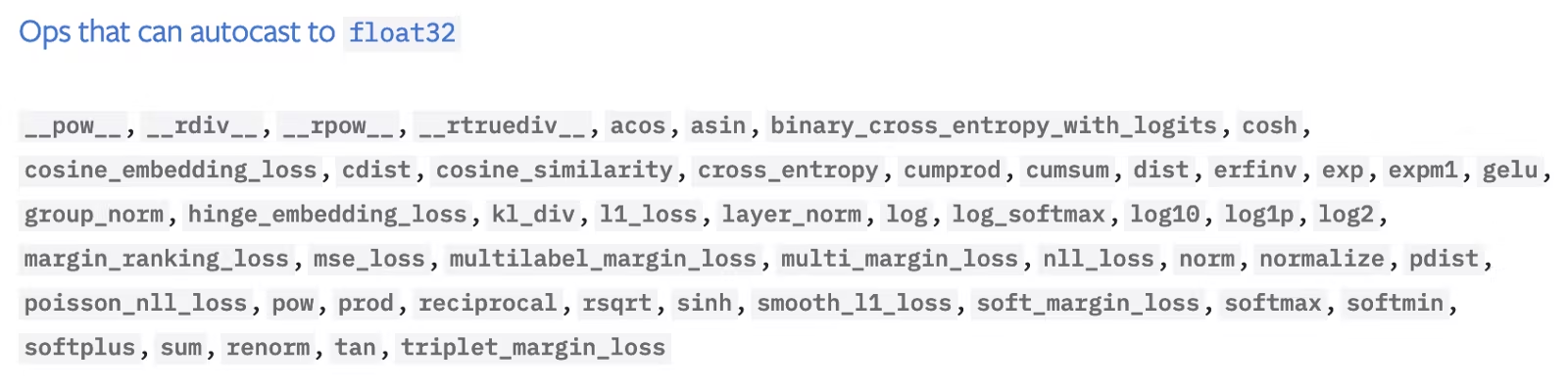

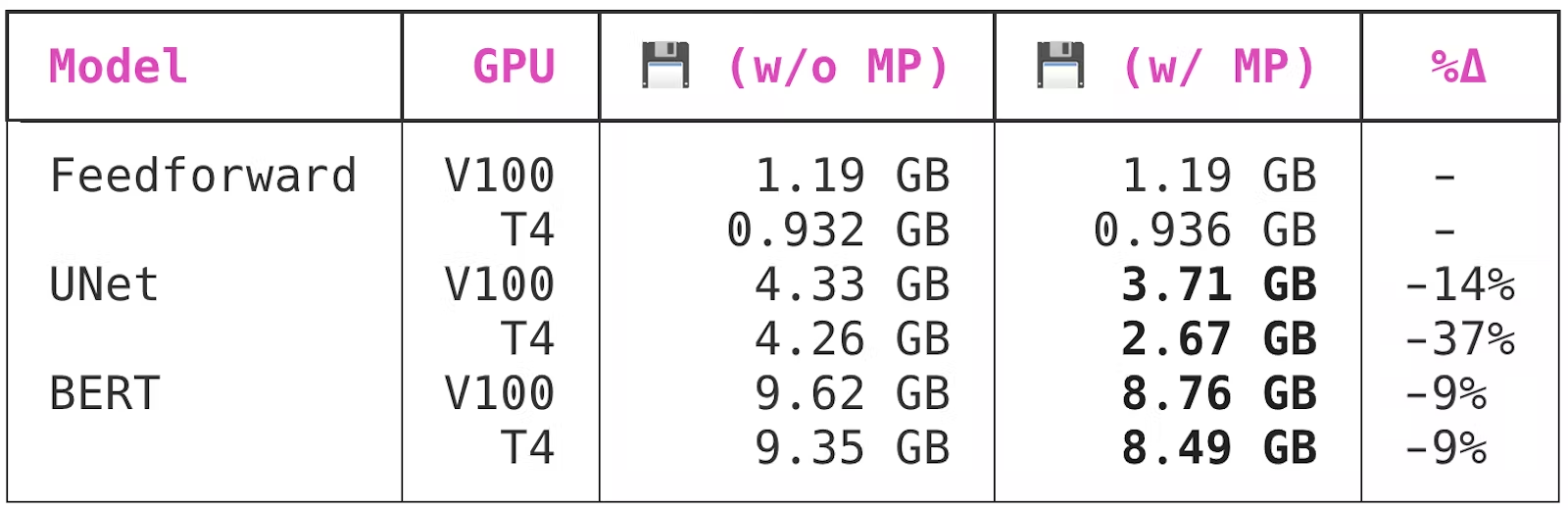

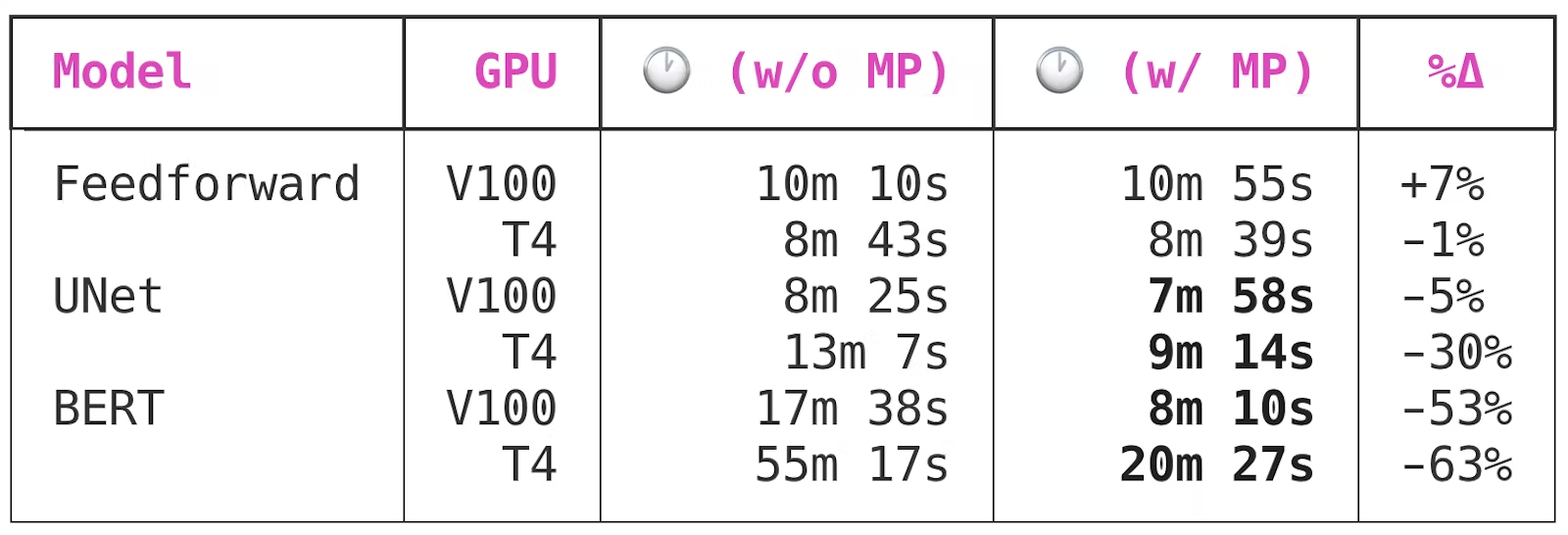

-