Models • Alpaca

Overview

-

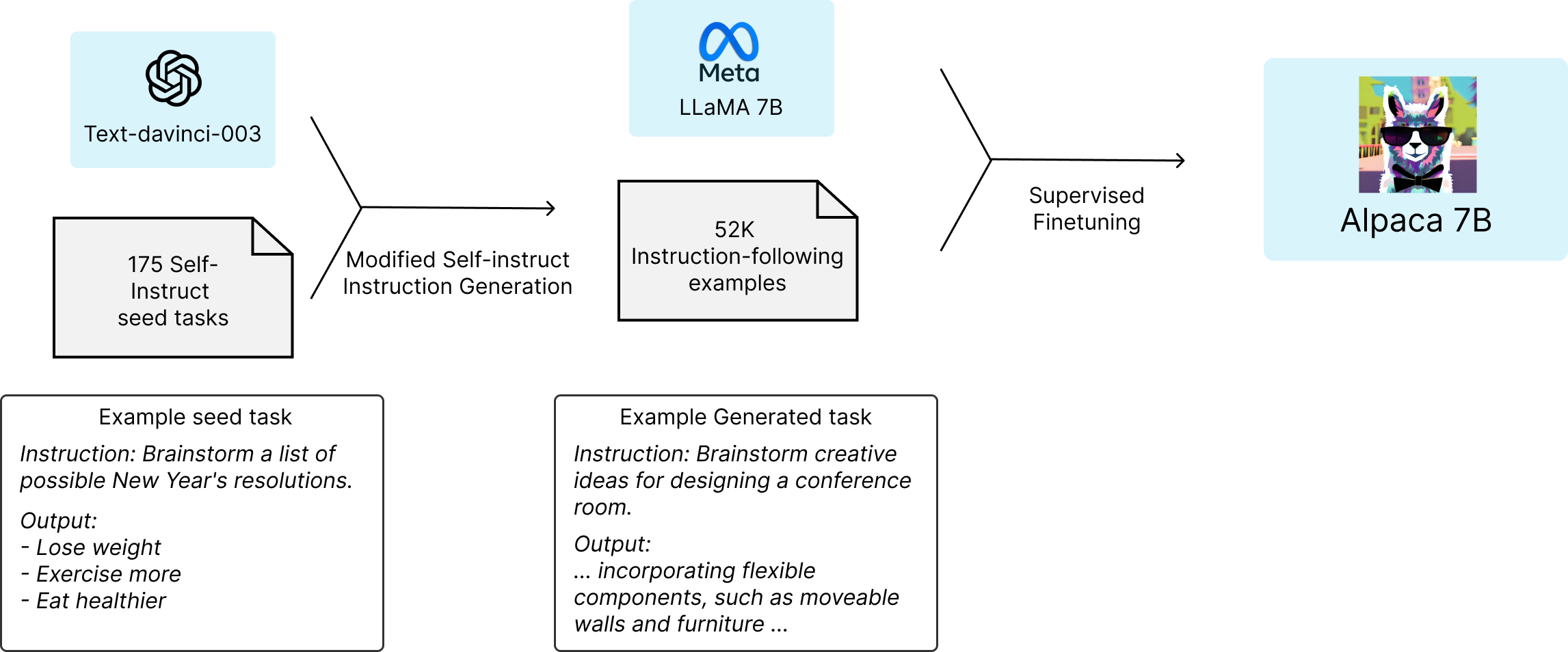

Stanford’s Alpaca is an instruction-finetuned 7B language transformer based on the 7B LLaMA GPT-3 alternative by Meta released in mid-March 2023.

- Instead of reinforcement learning from human feedback (RLHF), the authors use supervised finetuning on 52k instruction-output pairs.

- Rather than collecting human-written instruction-output pairs, they generate the data by querying the GPT-3-based text-davinci-003 model. Alpaca therefore uses a form of weakly supervised, knowledge-distillation-flavored finetuning.

- The training recipe is available on GitHub, and according to the authors, it can be replicated with 8 A100 GPUs and a ~$600 budget.

- Note that model-generated training data of this kind can be competitive with human annotations. For example, the authors of the Self-Instruct paper found that bootstrapping a model on its own generations can yield performance competitive with InstructGPT.
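To make the supervised setup from the list above more concrete, here is a minimal sketch of how one instruction-output pair might be turned into a training example. The prompt wording is modeled on the template in the publicly released Alpaca training code, but treat the exact text as an assumption. The names `Example`, `build_prompt`, `toy_tokenize`, and `build_training_pair` are hypothetical, and the whitespace tokenizer is only a stand-in for a real subword tokenizer.

```python
from dataclasses import dataclass

# Assumed prompt templates, modeled on the released Alpaca recipe.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately "
    "completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)

EOS = "</s>"  # placeholder end-of-sequence token (assumption)


@dataclass
class Example:
    """One instruction-output pair; `input` is optional extra context."""
    instruction: str
    output: str
    input: str = ""


def build_prompt(ex: Example) -> str:
    """Fill the template that matches whether the example has an input."""
    template = PROMPT_WITH_INPUT if ex.input else PROMPT_NO_INPUT
    return template.format(instruction=ex.instruction, input=ex.input)


def toy_tokenize(text: str) -> list[str]:
    """Whitespace tokenizer standing in for a real subword tokenizer."""
    return text.split()


def build_training_pair(ex: Example) -> tuple[list[str], list[int]]:
    """Return the full token sequence plus a loss mask.

    The mask is 0 for prompt tokens and 1 for response tokens, so the
    supervised loss is computed only on the part the model should produce.
    """
    prompt_tokens = toy_tokenize(build_prompt(ex))
    output_tokens = toy_tokenize(ex.output) + [EOS]
    tokens = prompt_tokens + output_tokens
    loss_mask = [0] * len(prompt_tokens) + [1] * len(output_tokens)
    return tokens, loss_mask


if __name__ == "__main__":
    ex = Example(
        instruction="Name three primary colors.",
        output="Red, blue, and yellow.",
    )
    tokens, mask = build_training_pair(ex)
    print(build_prompt(ex) + ex.output)
    print(f"{sum(mask)} of {len(tokens)} tokens contribute to the loss")
```

In a real pipeline, the mask is commonly implemented by setting the labels of prompt tokens to an ignore value (PyTorch's cross-entropy loss skips `-100` by default), so that only the response tokens drive the gradient.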