Primers • Agents

- Overview

- The “Agentic AI Moment”

- The Agentic Workflow

- Workflows vs. Agents

- The Agent Framework

- Agentic Design Patterns

- Reflection

- Function/Tool/API Calling

- Planning

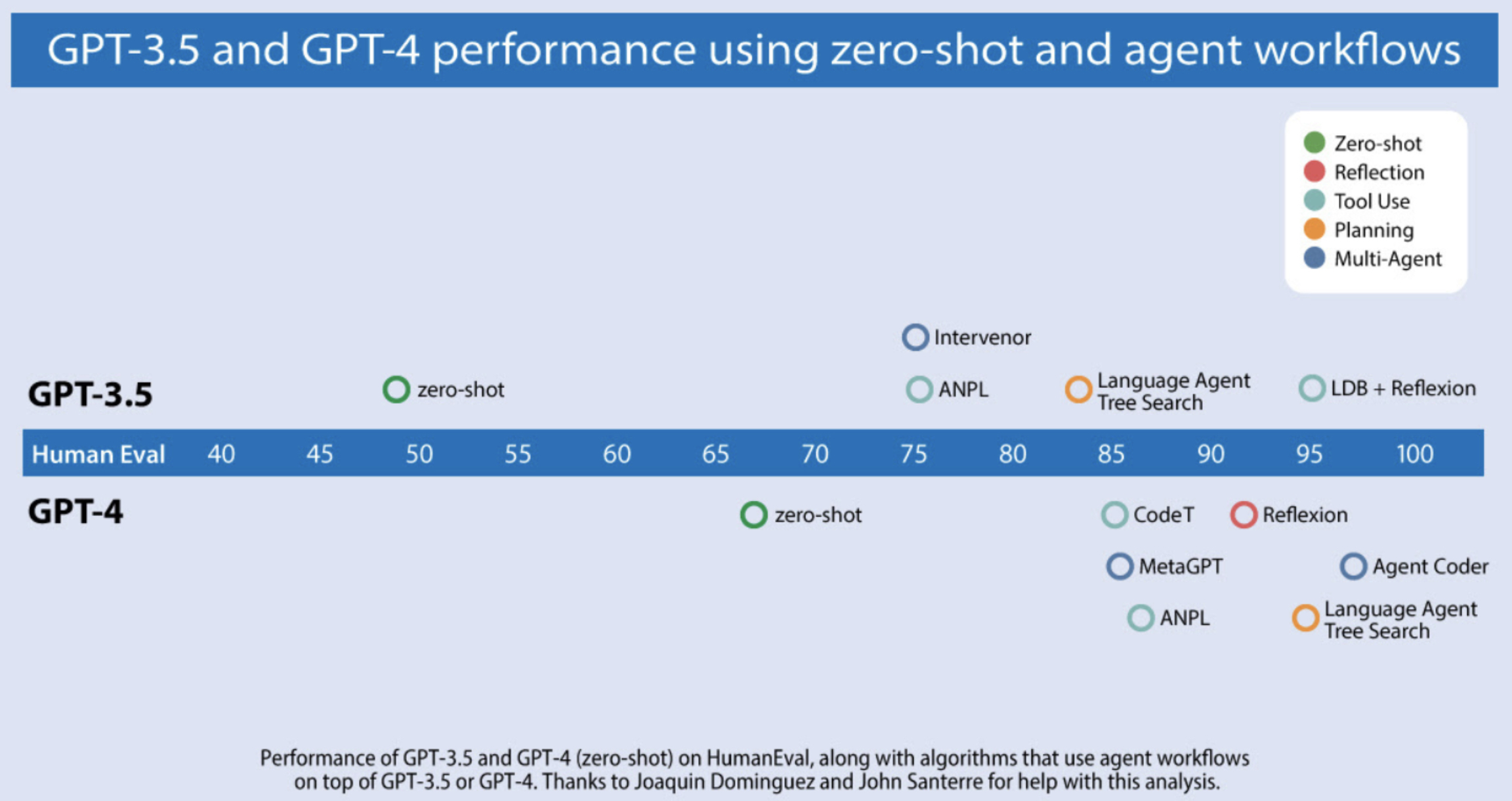

- Multi-agent Collaboration

- Implementation

- Agentic Workflow Patterns

- Single-Agent Systems vs. Multi-Agent Systems

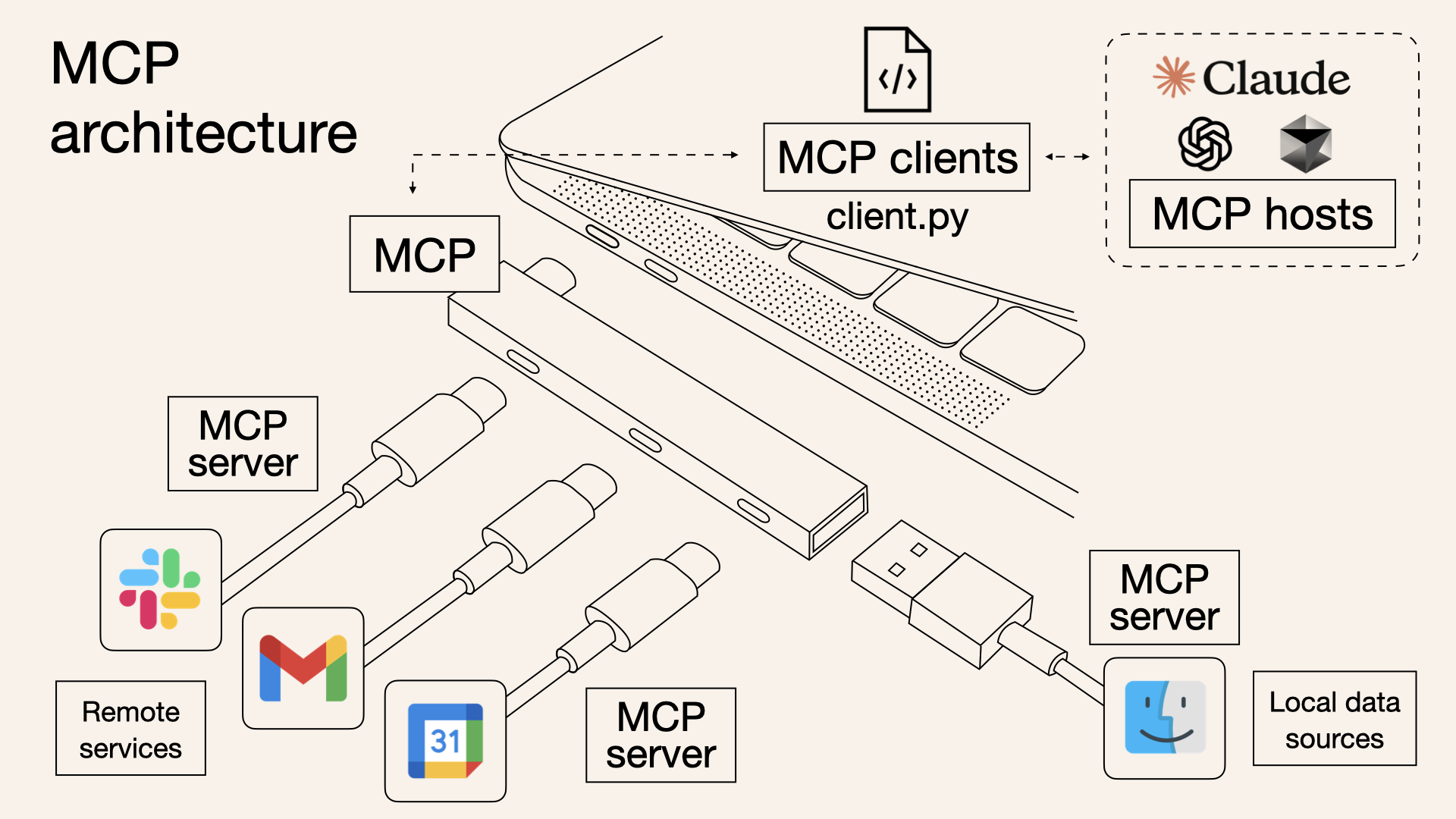

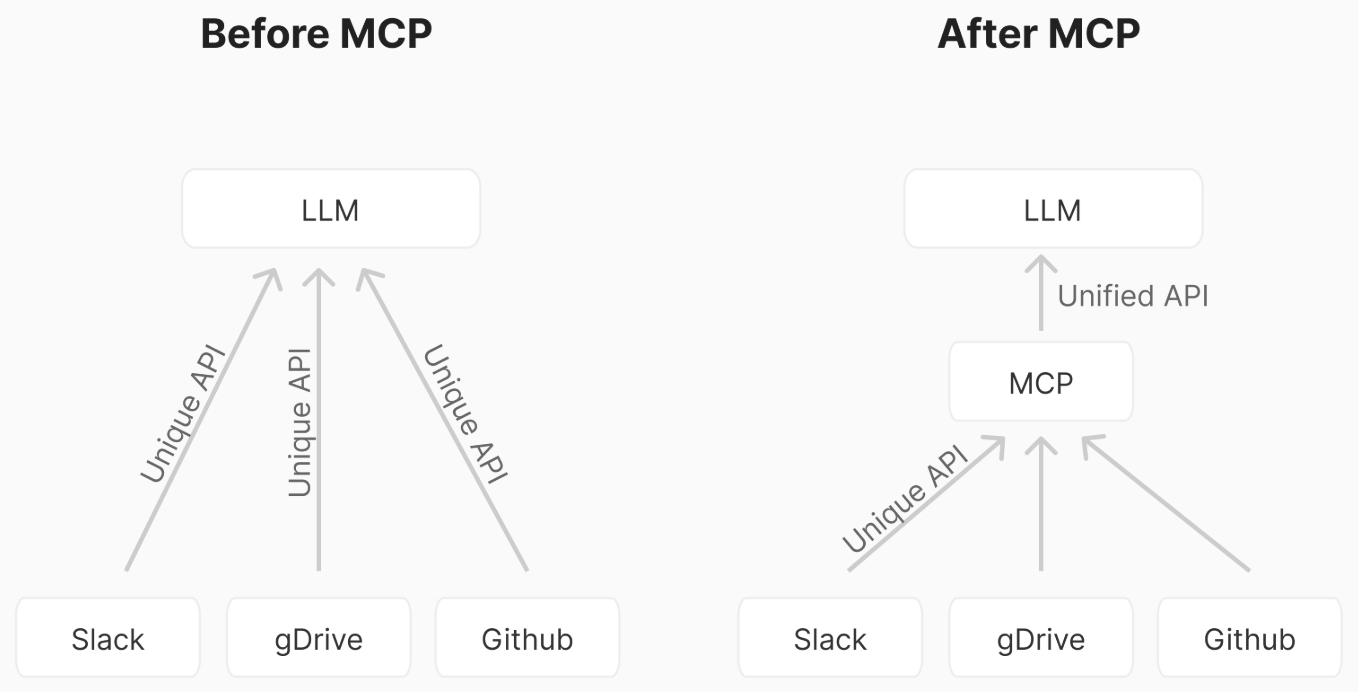

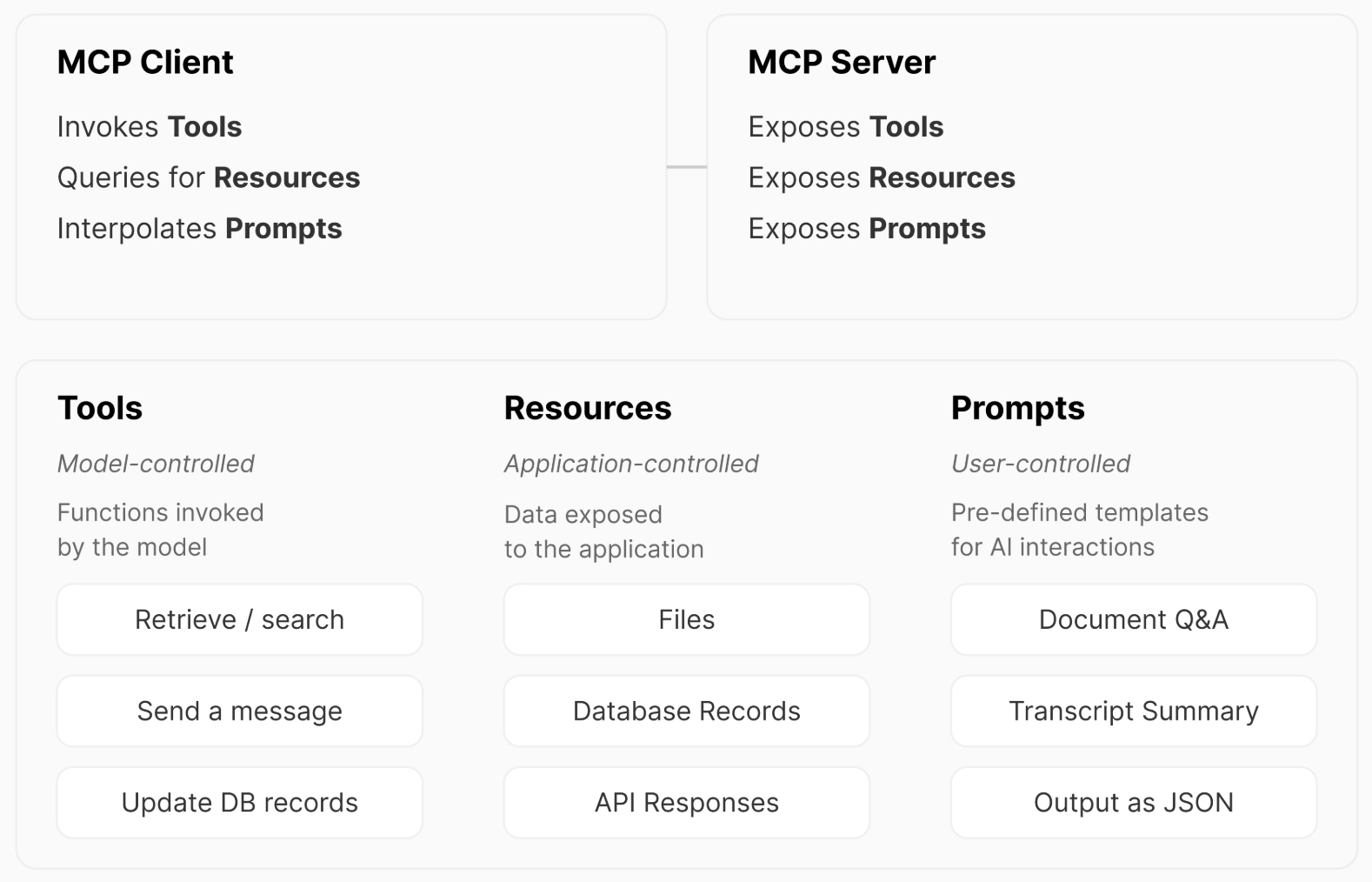

- Model Context Protocol (MCP)

- Overview

- Why MCP?

- General Architecture

- How MCP Works

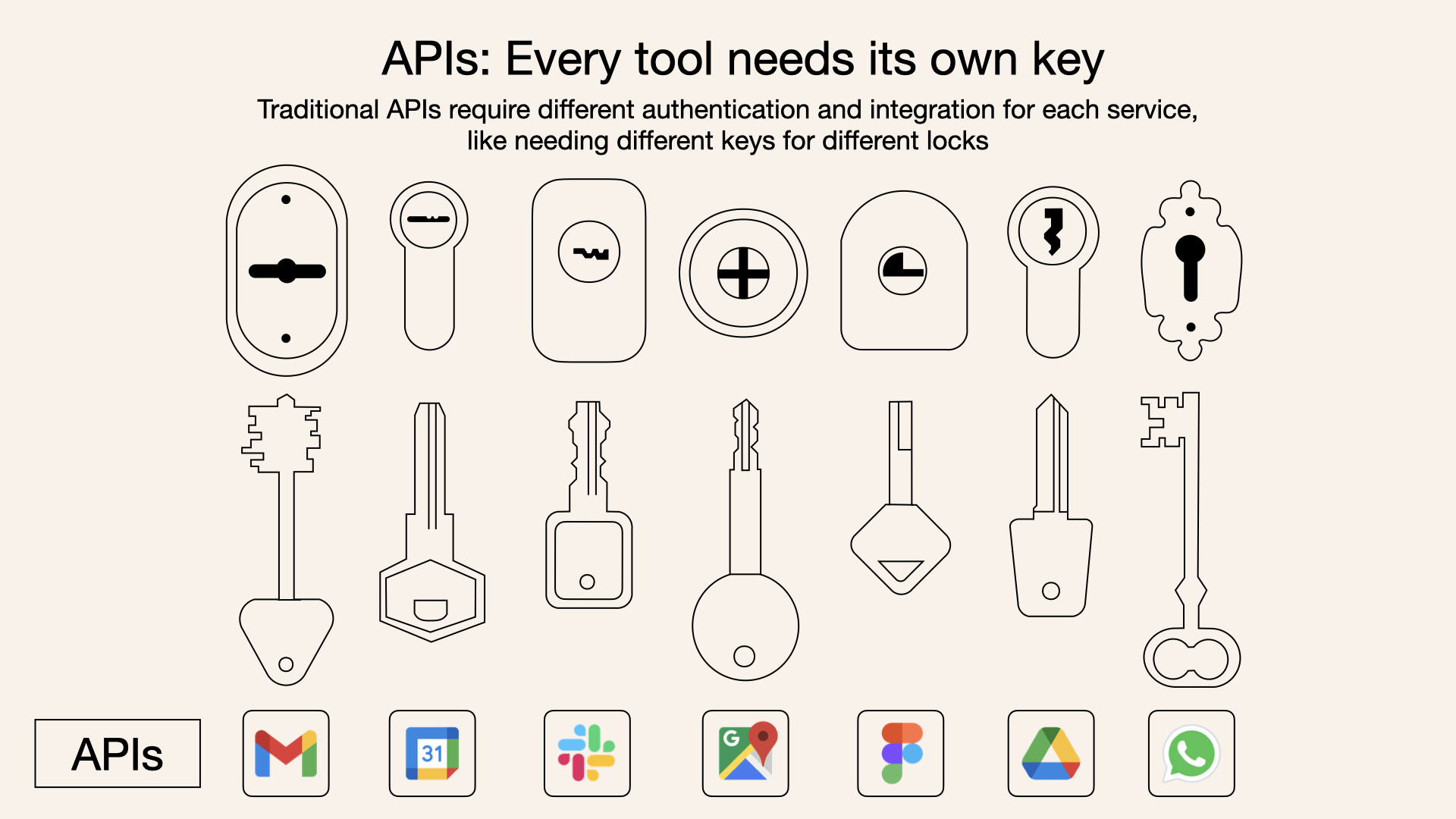

- MCP vs. API

- Security, Updates, and Authentication

- Getting Started with MCP: High-Level Steps

- Use-Cases of MCP in Real-World Development Scenarios

- Automating Feature Development from Ticket to Implementation

- Intelligent Ticket and Task Management

- Automated Communication with Relevant Stakeholders

- Smart Staging, Commit Messages, and PR Creation

- Automated Debugging and Console Log Access

- Integration with Personal Task Management Tools

- Automated Project Announcements

- MCP Servers List

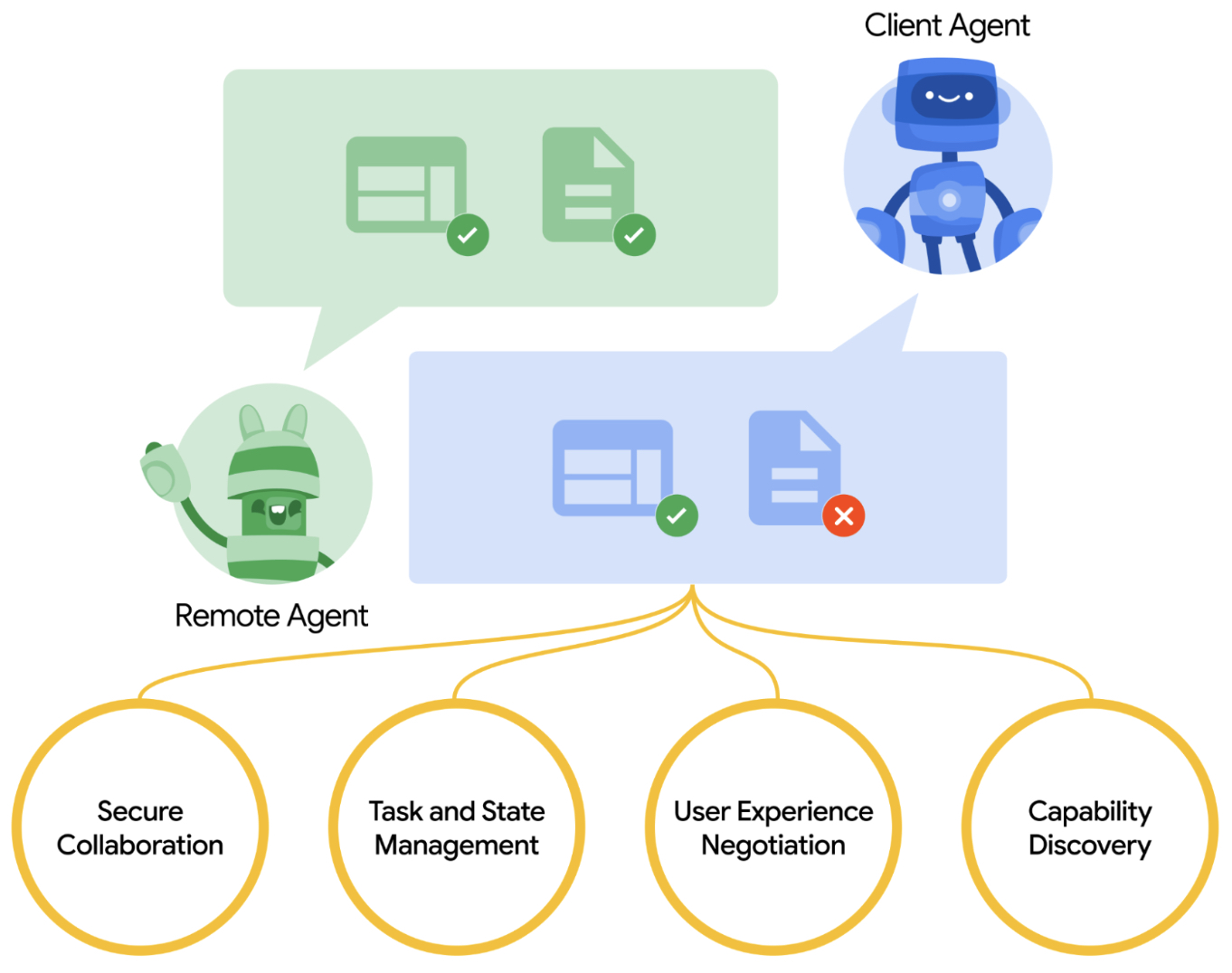

- Agent2Agent (A2A) Protocol

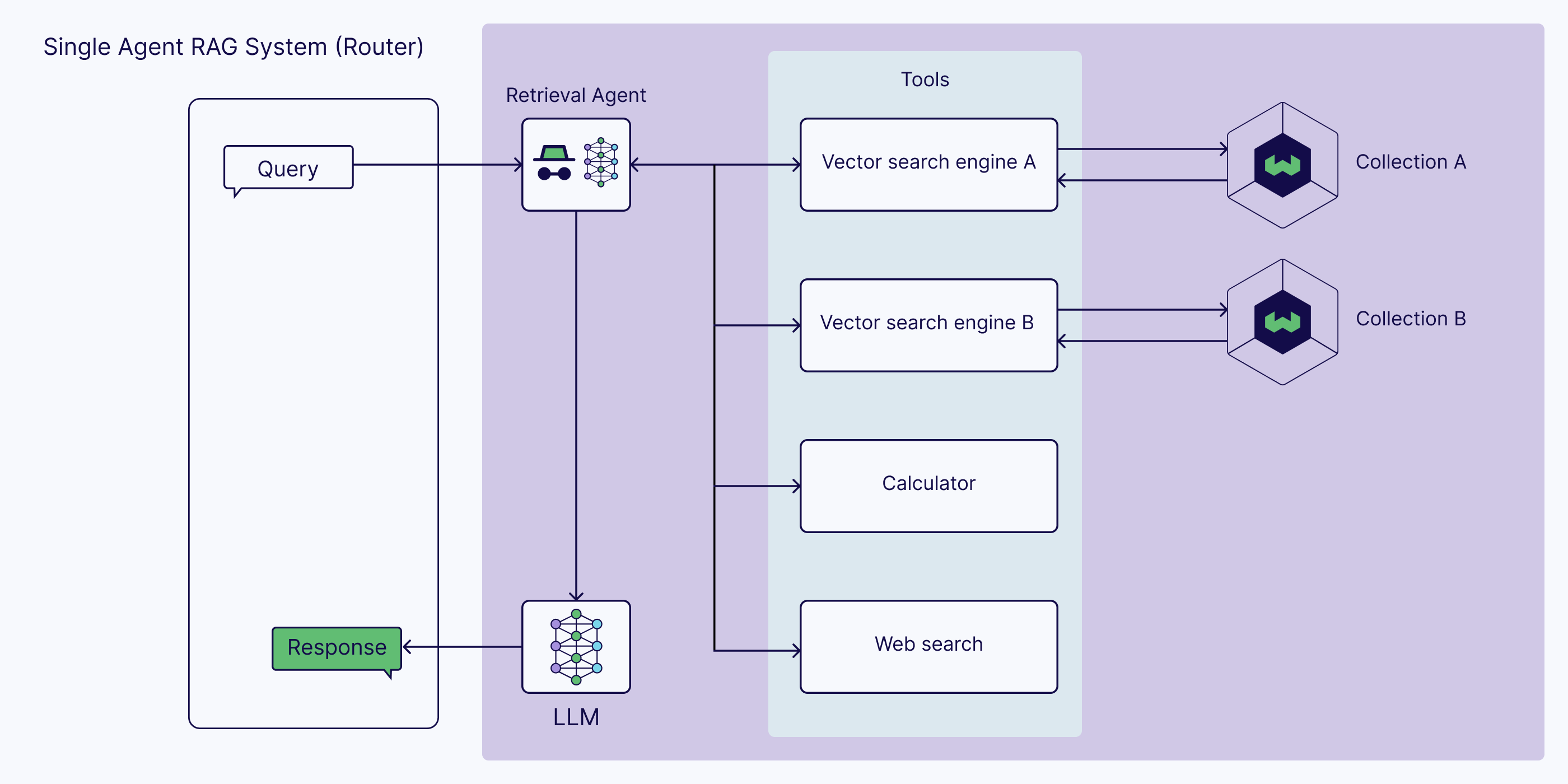

- Agentic Retrieval-Augmented Generation (RAG)

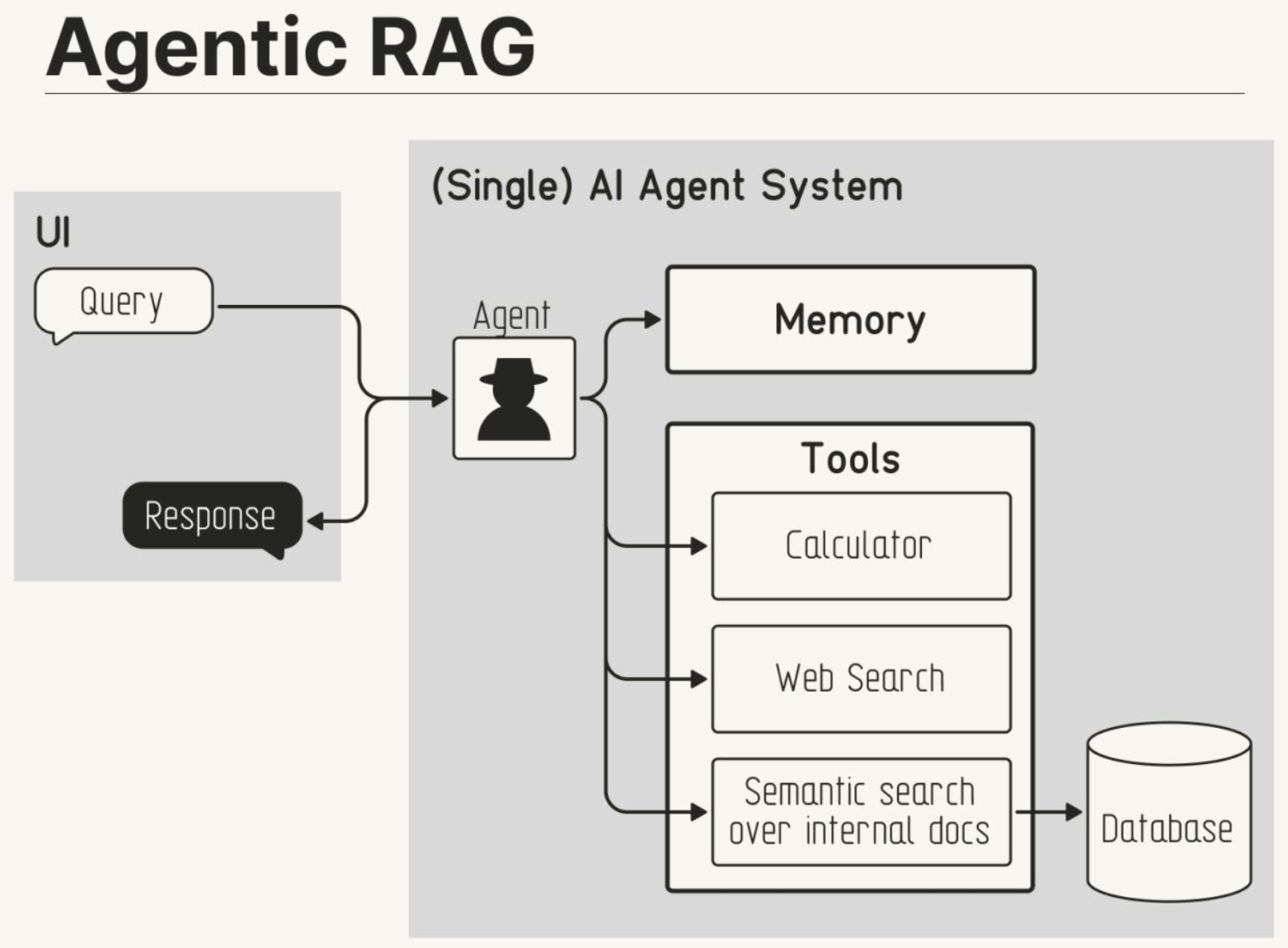

- How Agentic RAG Works

- Agentic Decision-Making in Retrieval

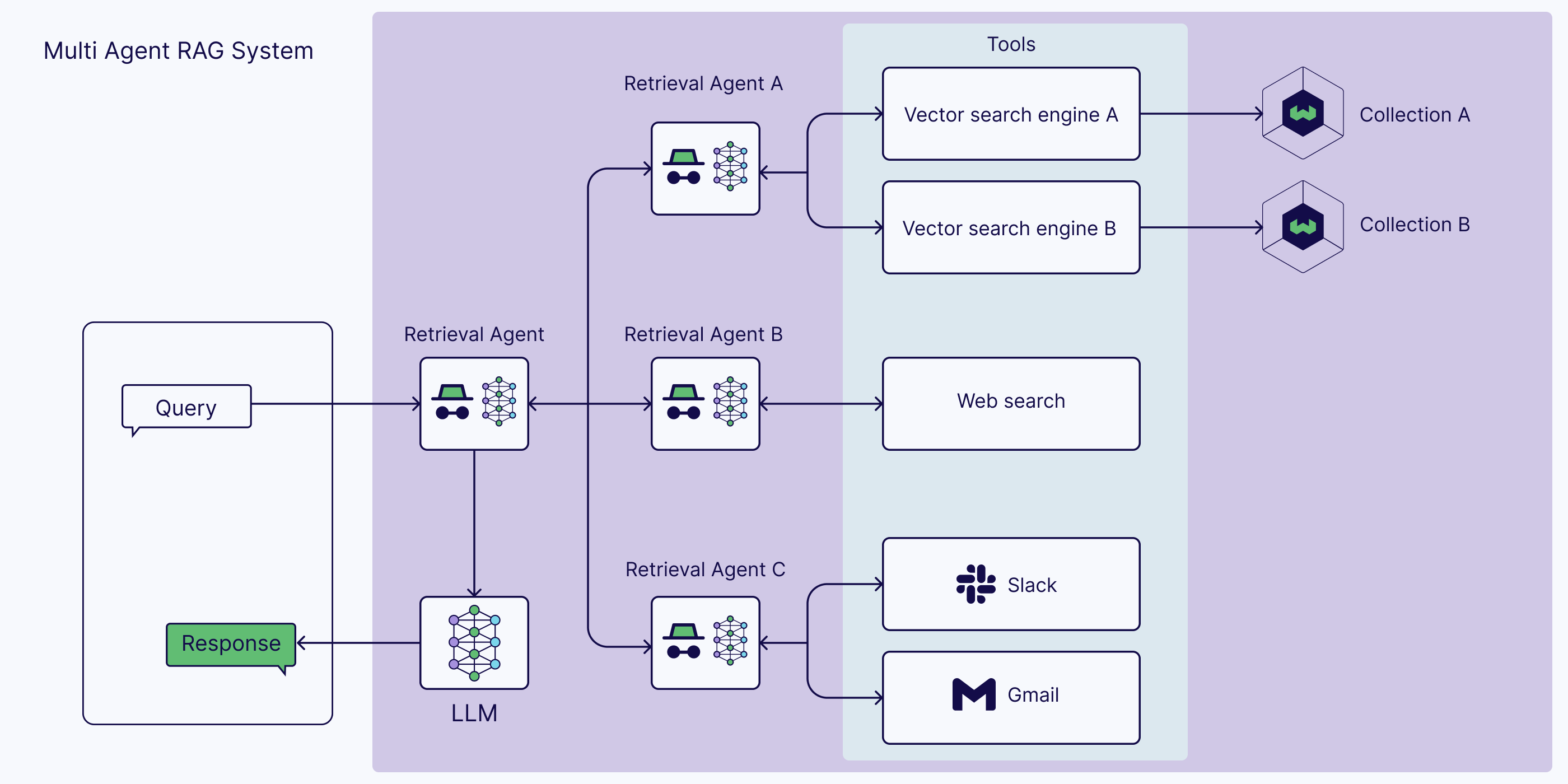

- Agentic RAG Architectures: Single-Agent vs. Multi-Agent Systems

- Beyond Retrieval: Expanding Agentic RAG’s Capabilities

- Agentic RAG vs. Vanilla RAG: Key Differences

- Implementing Agentic RAG: Key Approaches

- Enterprise-driven Adoption

- Benefits

- Limitations

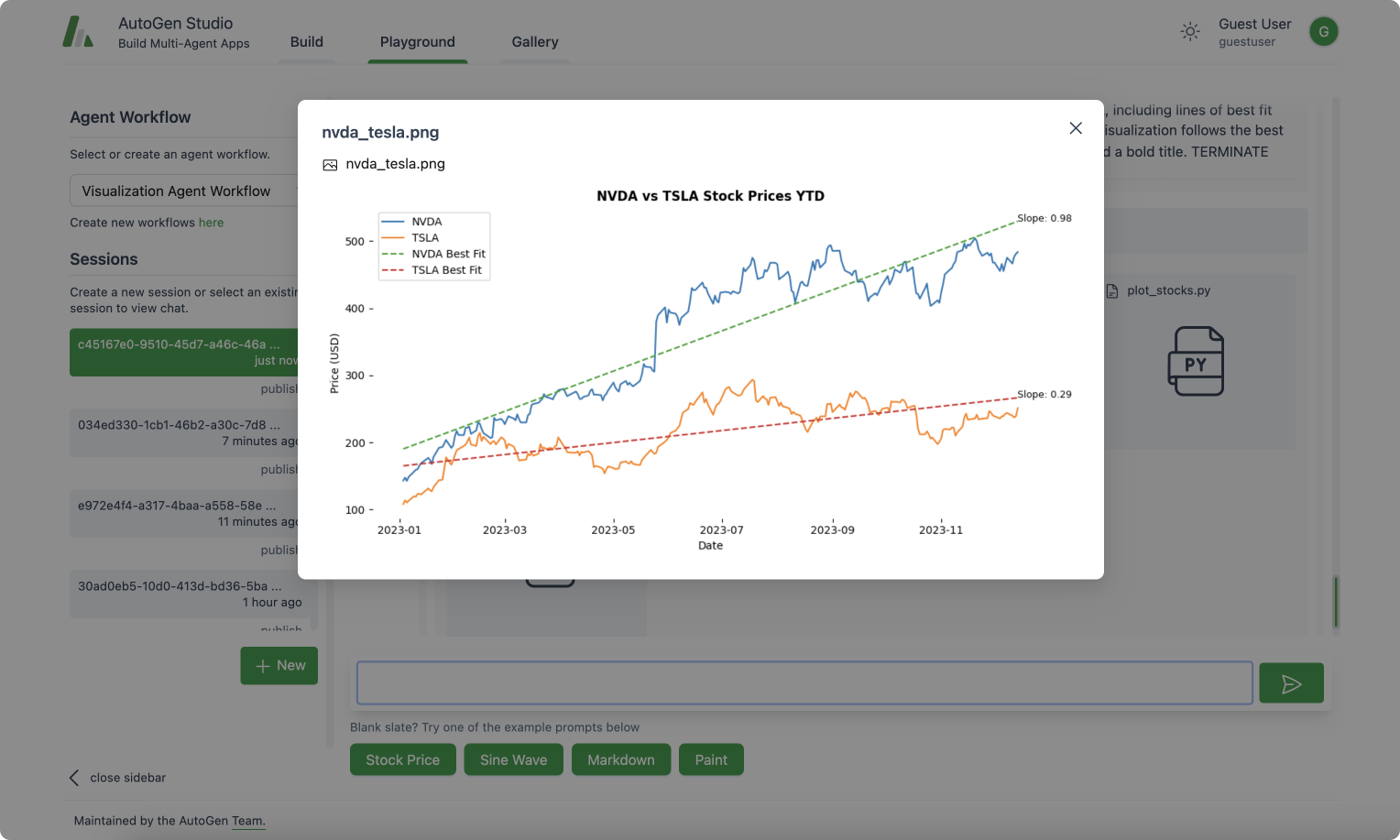

- Code

- Disadvantages of Agentic RAG

- Summary

- Reinforcement Learning for Agents

- Benchmarks

- Common Use-cases

- Case Studies

- Frameworks/Libraries

- Example Flow Chart for an LLM Agent: Handling a Customer Inquiry

- Use Cases

- Build your own LLM Agent

- Responsible AI Agents

- Related Papers

- Reflection

- Tool Calling

- Planning

- Multi-Agent Collaboration

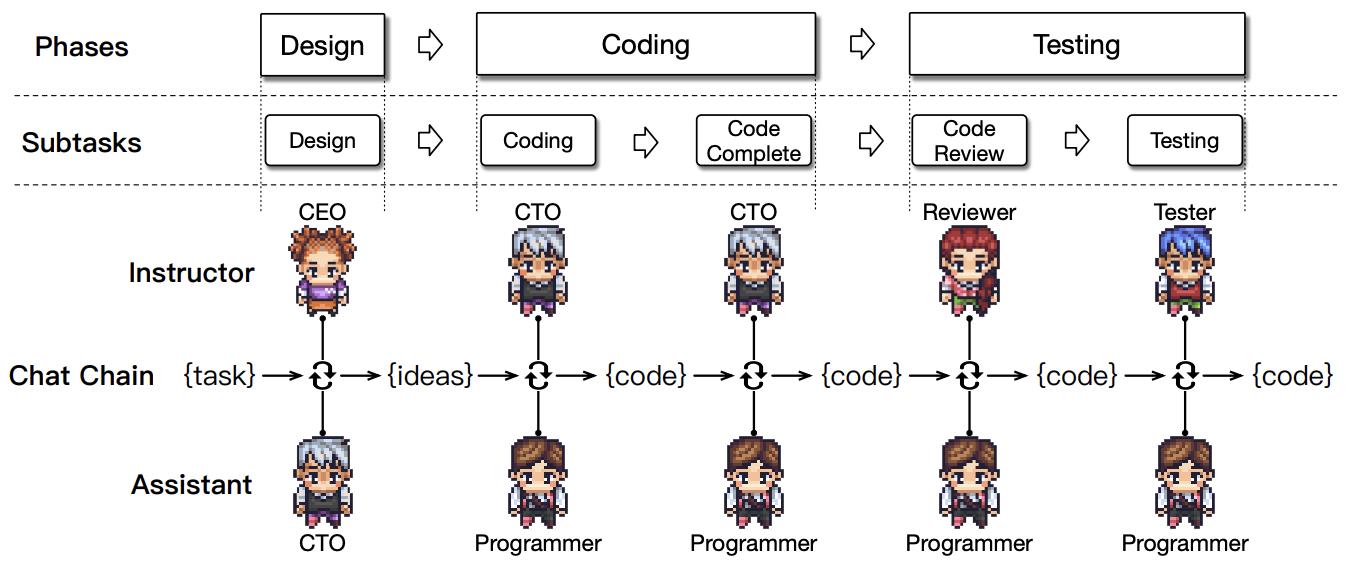

- ChatDev: Communicative Agents for Software Development

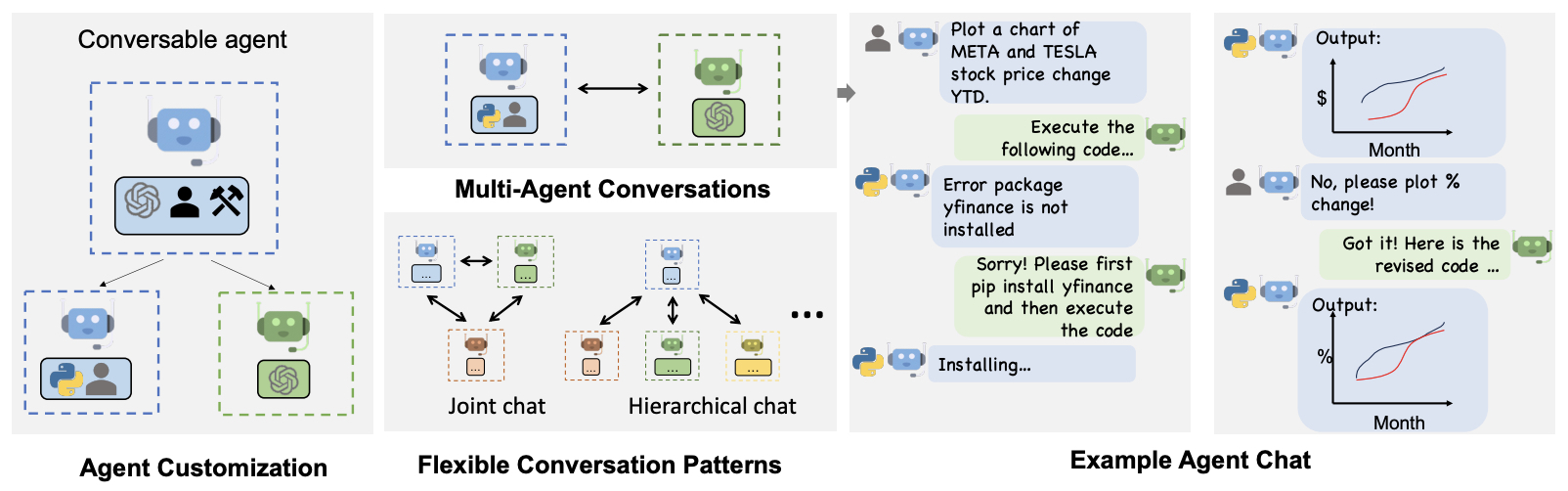

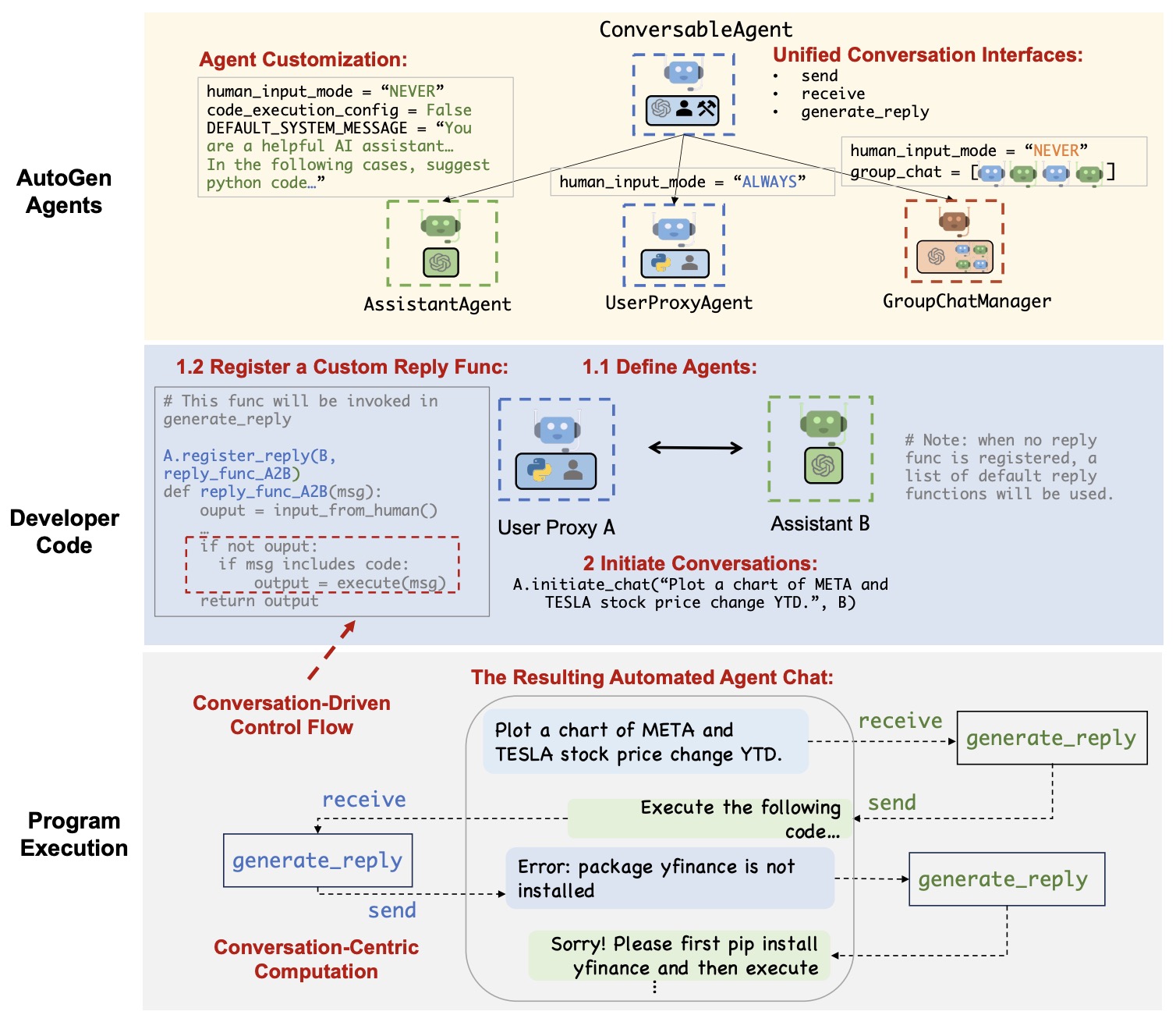

- AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation

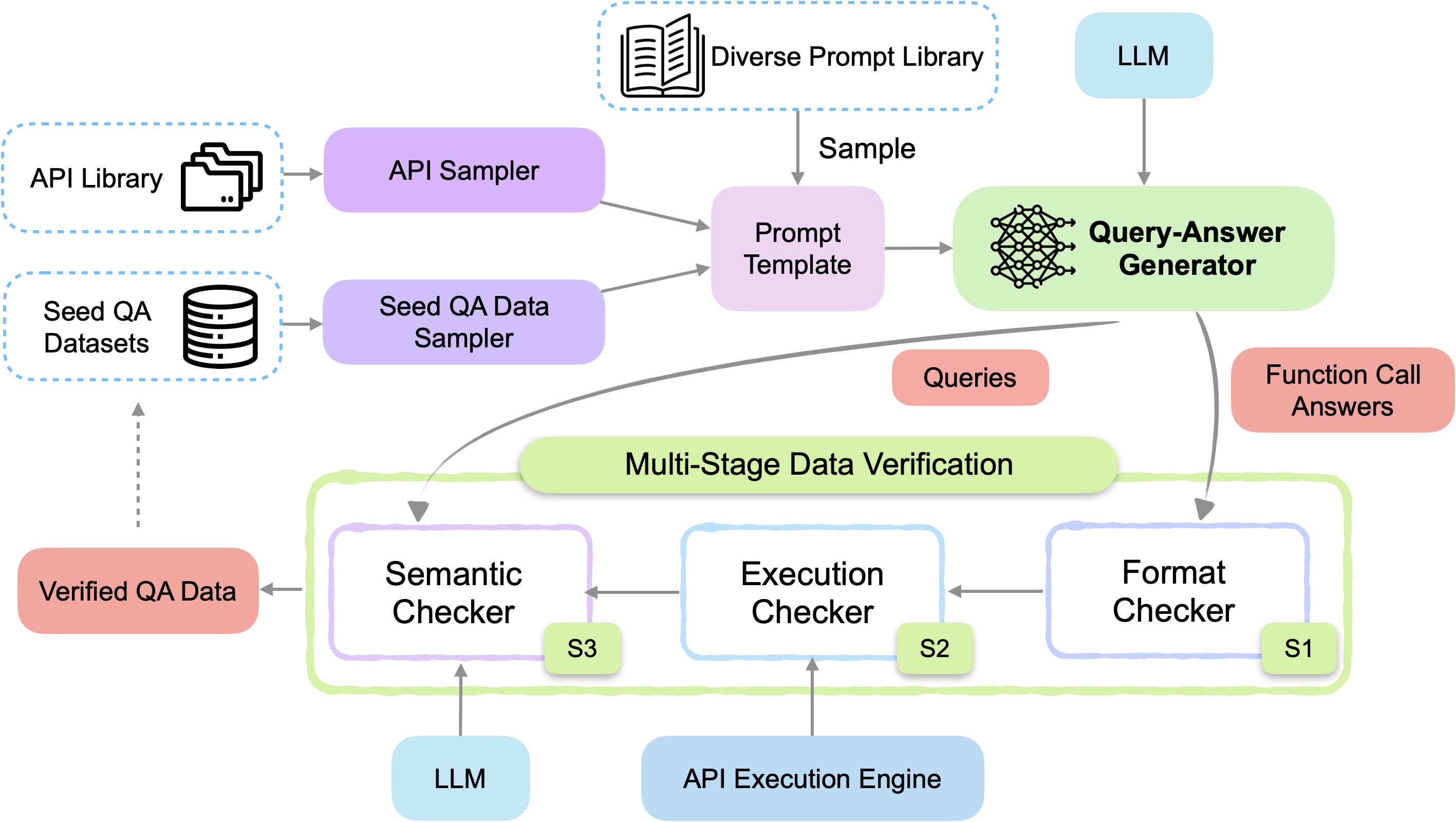

- APIGen: Automated Pipeline for Generating Verifiable and Diverse Function-Calling Datasets

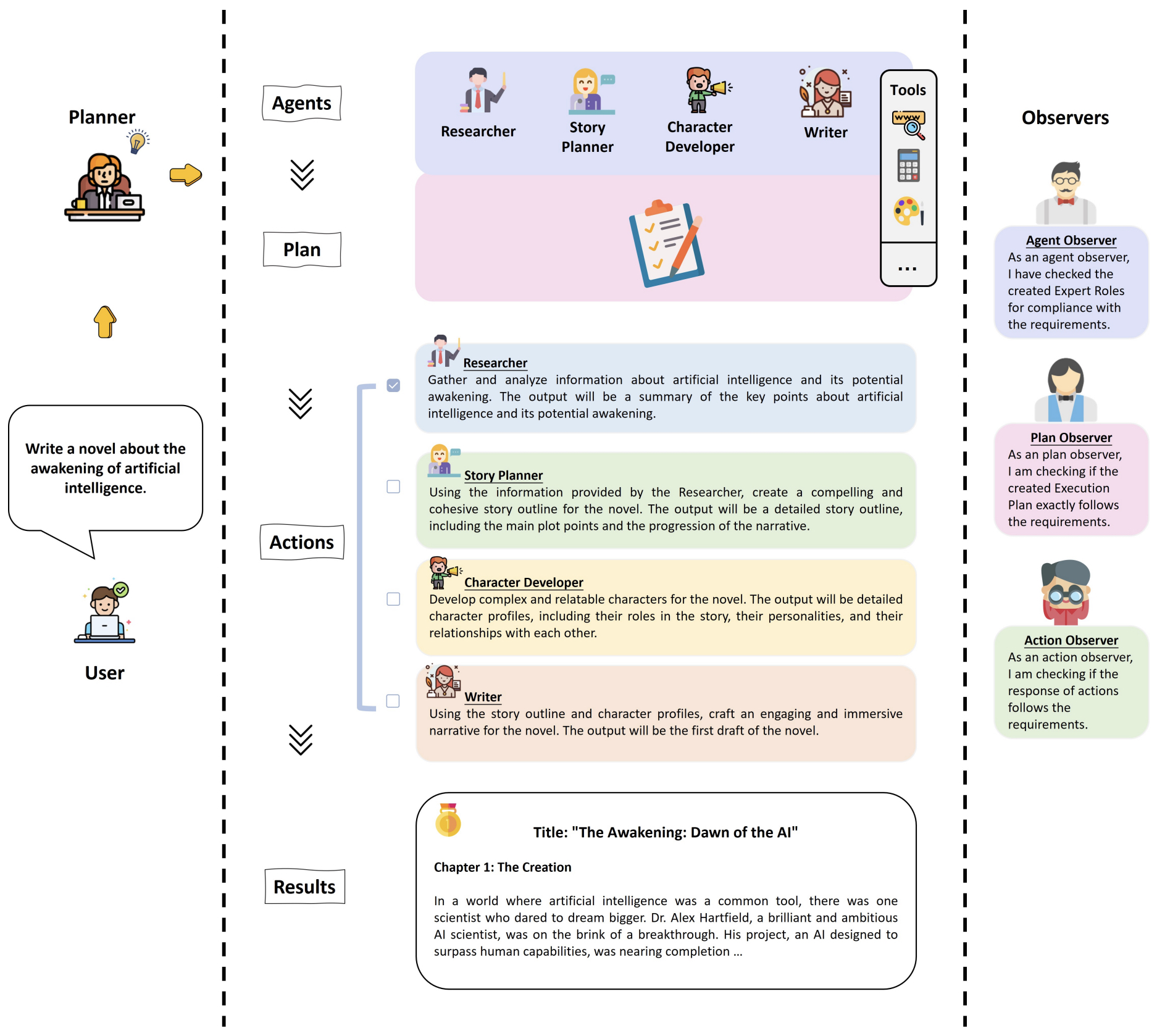

- AutoAgents: A Framework for Automatic Agent Generation

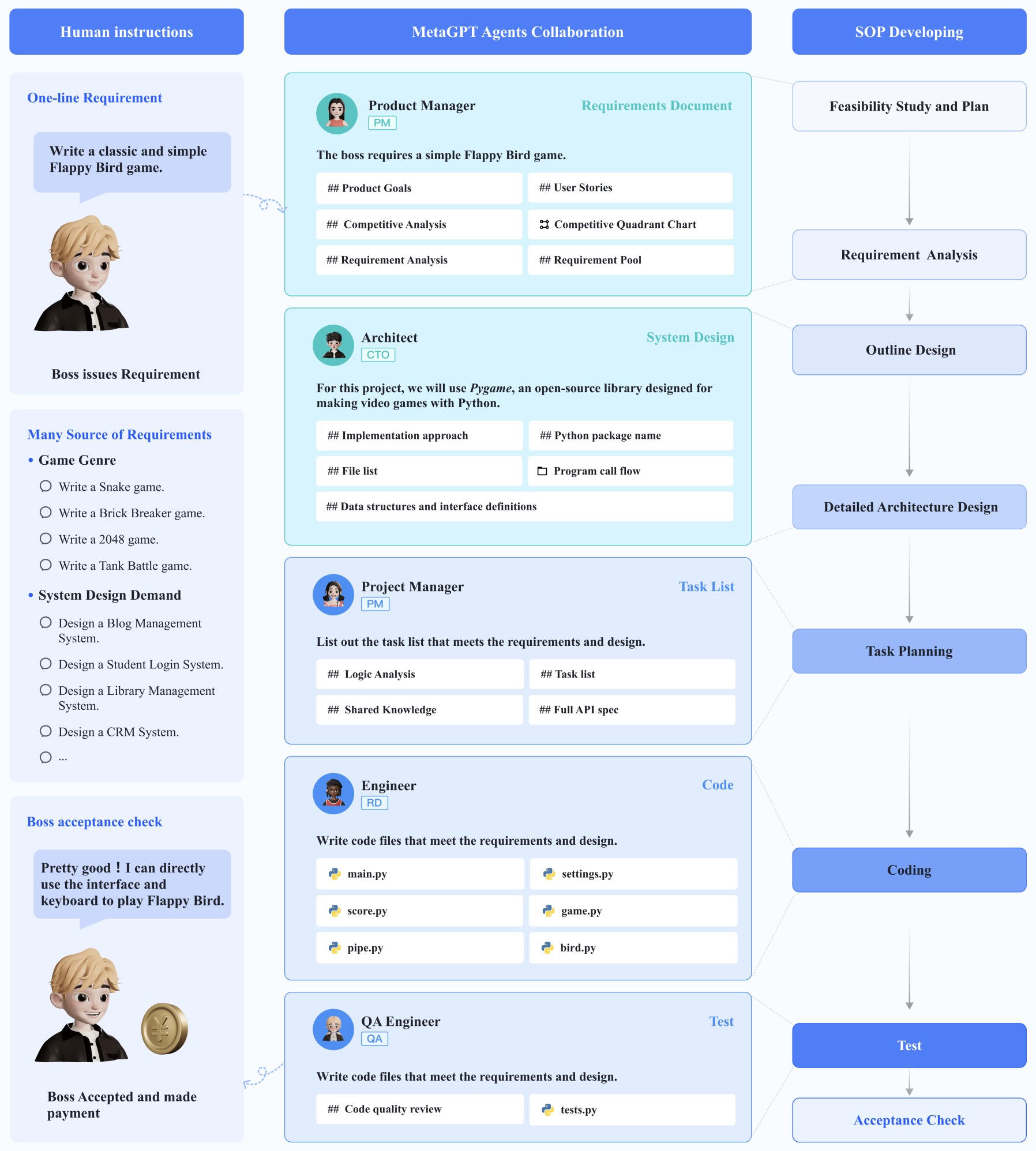

- MetaGPT: Meta Programming for Multi-Agent Collaborative Framework

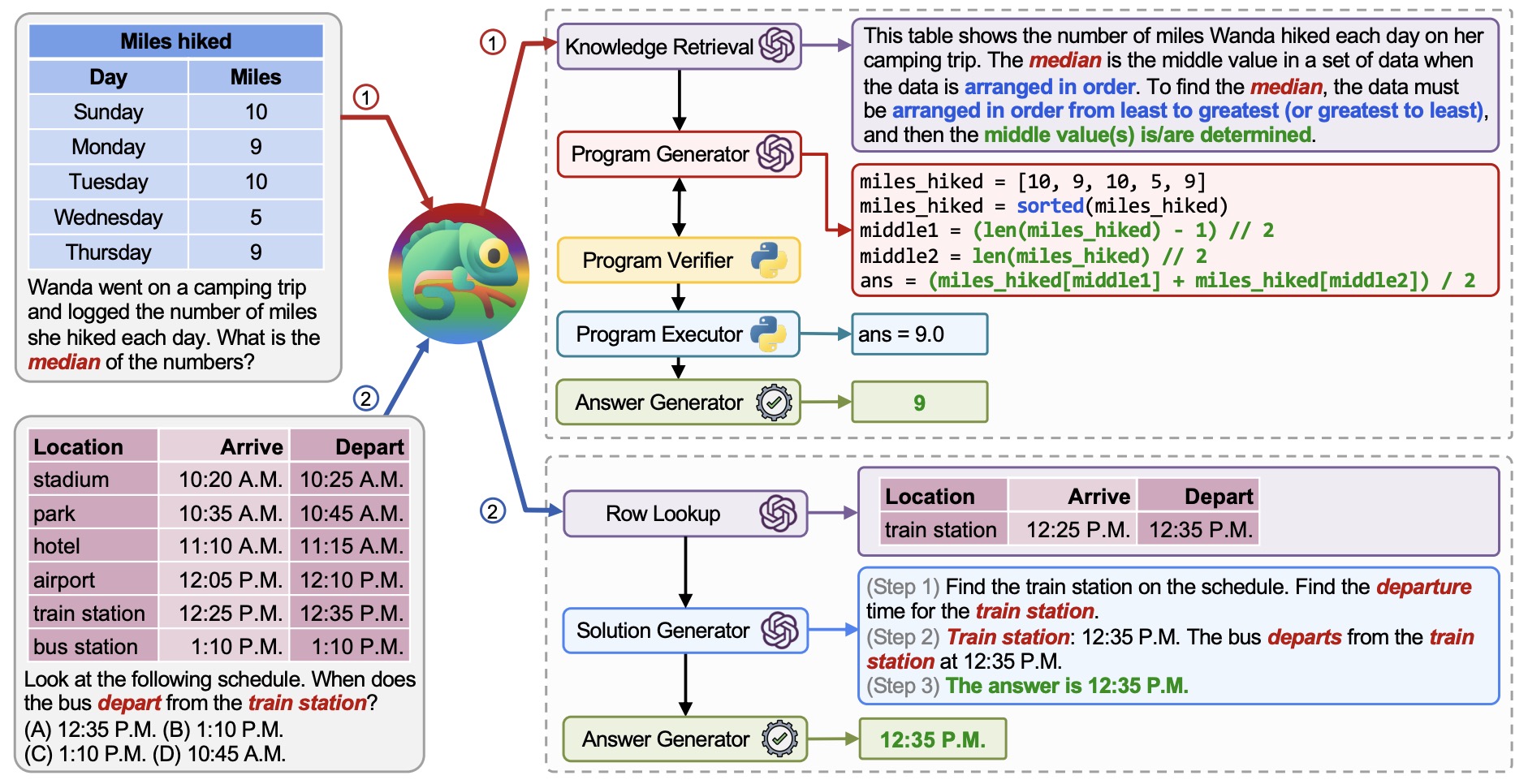

- Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models

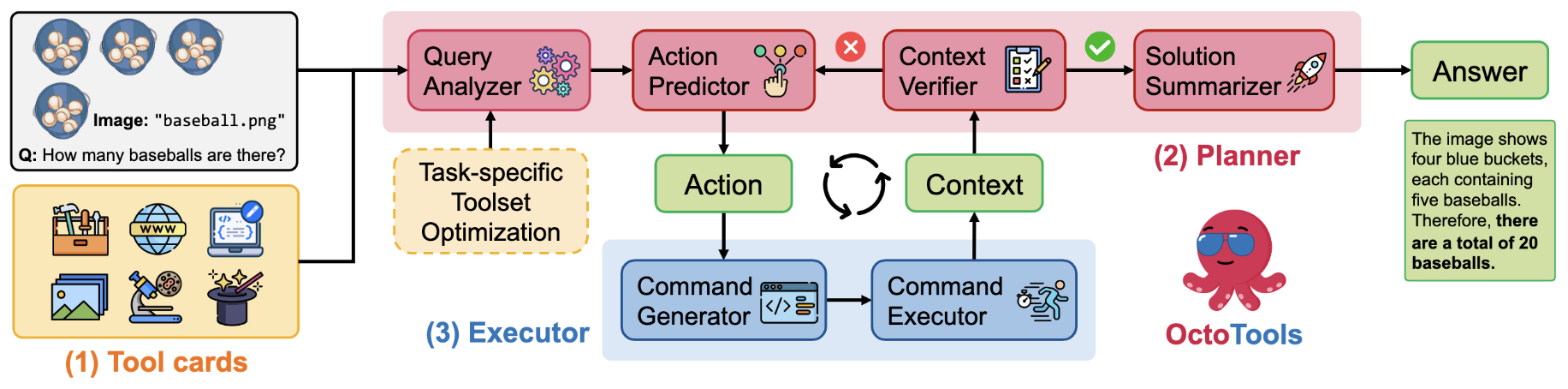

- OctoTools: An Agentic Framework with Extensible Tools for Complex Reasoning

- Further Reading

- References

- Citation

Overview

- AI agents are autonomous systems that combine the decision-making and action-oriented capabilities of autonomous frameworks with the natural language processing and comprehension strengths of large language models (LLMs). The LLM serves as the “brain” within an agent, interpreting language, generating responses, and planning tasks, while the agent framework enables the execution of these tasks within a defined environment. Together, they allow agents to engage in goal-oriented workflows, where LLMs contribute strategic insights, problem-solving, and adaptability to achieve outcomes with minimal human intervention.

- AI agents leverage LLMs as central reasoning engines, which facilitate real-time decision-making, task prioritization, and dynamic adaptation. In practice, an AI agent operates through a cycle: the LLM analyzes incoming information, formulates an actionable plan, and collaborates with a series of modular systems—such as APIs, web tools, or embedded sensors—to execute specific steps. Throughout this process, the LLM can maintain context and iterate, adjusting actions based on feedback from the agent’s environment or outcomes from previous steps. This integrated system enables AI agents to tackle complex, multi-phase tasks with increasing sophistication, driving innovation across sectors like finance, software engineering, and scientific discovery.

The “Agentic AI Moment”

- Many people experienced a pivotal “AI moment” with the release of ChatGPT—a time when the system’s capabilities exceeded their expectations. This phenomenon, often called the “ChatGPT moment,” encapsulates interactions where the AI’s performance went beyond anticipated limits, demonstrating remarkable competence, creativity, or problem-solving ability.

- Analogous to the “ChatGPT moment,” agents have had an “Agentic AI moment”—an instance where an AI system exhibits unexpected autonomy and resourcefulness. One notable example (source) involves an AI agent developed for online research. During a live demonstration, the agent encountered a rate-limiting error while accessing its primary web search tool. Instead of failing, the agent seamlessly adapted by switching to a secondary tool—a Wikipedia search feature—to complete the task effectively. This unplanned pivot showcased the agent’s ability to adjust independently to unforeseen circumstances, a hallmark of agentic planning and adaptive problem-solving that highlights the emerging potential of AI agents in complex, real-world applications.

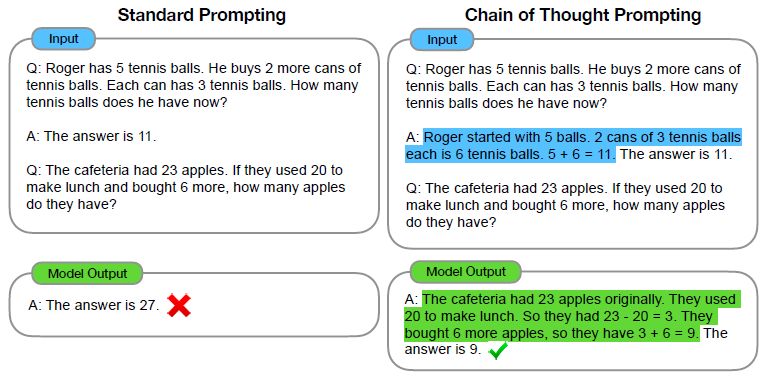

The Agentic Workflow

- Most current applications of large language models (LLMs) operate in zero-shot mode, where the model generates responses token by token without revisiting or refining its initial output. This approach is akin to asking someone to write an essay in one continuous attempt without making any corrections. While LLMs perform remarkably well under these constraints, an agentic, iterative workflow often leads to higher-quality results.

-

A typical agentic workflow involves an LLM following a structured, multi-step process. Depending on the task, some or all of the following steps may be included:

- Planning an outline for the task

- Assessing whether additional research or web searches are needed

- Drafting an initial response

- Reviewing and identifying weak or irrelevant sections

- Revising based on detected areas for improvement

- This structured, human-like refinement process enables AI agents to produce more robust and nuanced outputs compared to a single-pass approach.

Workflows vs. Agents

- The term “agent” can have multiple interpretations. Some define agents as fully autonomous systems capable of operating independently over extended periods, using various tools to complete complex tasks. Others see them as prescriptive systems that follow predefined workflows.

-

Per Anthropic, categorize both under agentic systems but distinguish between two key architectures:

- Workflows: Systems where LLMs and tools are orchestrated through predefined code paths to complete tasks.

- Agents: Systems where LLMs dynamically direct their own processes, deciding how to use tools and manage tasks autonomously.

- By leveraging these agentic approaches, AI systems can move beyond single-shot responses, refining their outputs iteratively and intelligently.

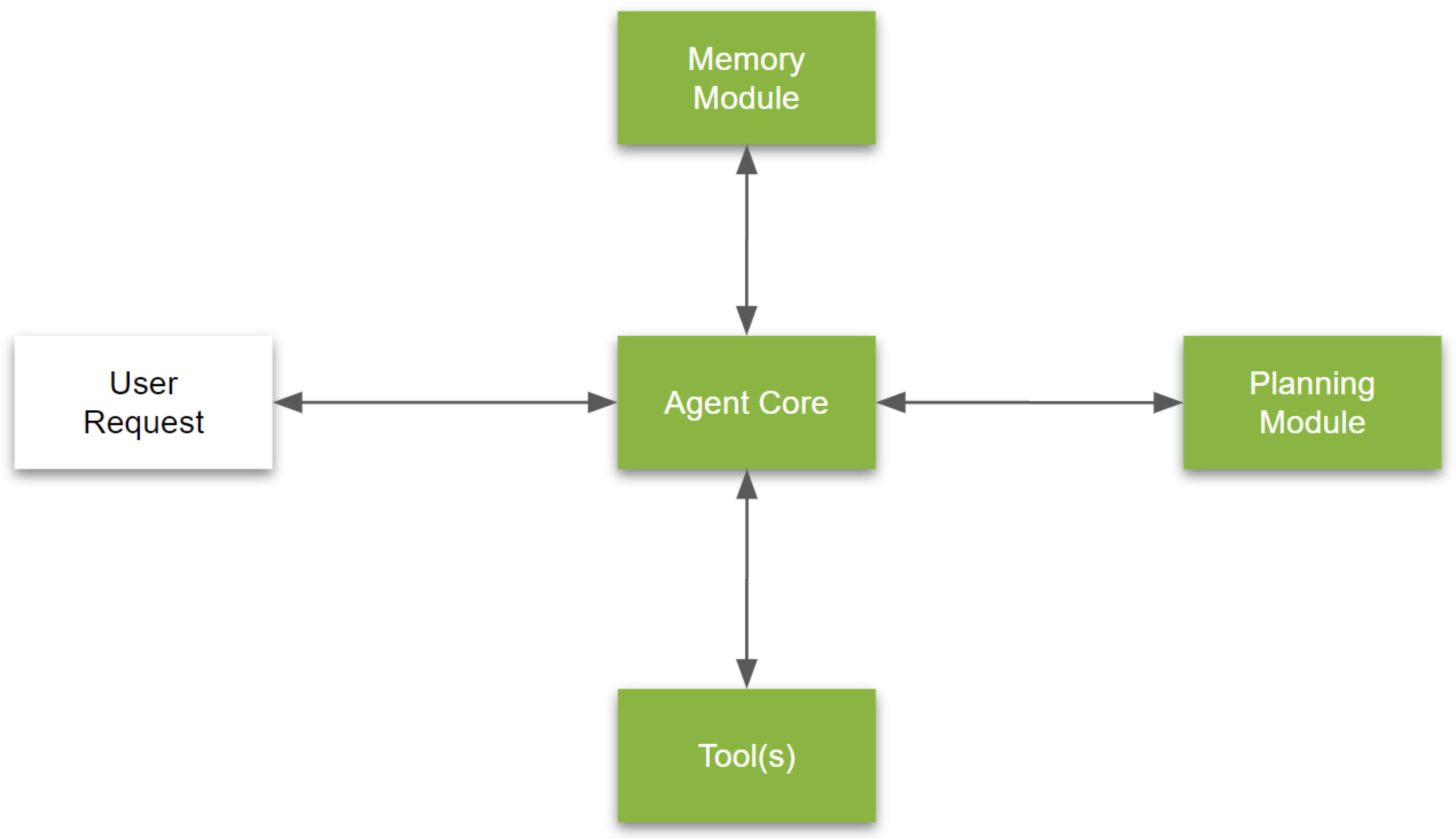

The Agent Framework

- The Agent Framework provides a structured and modular design for organizing the core components of an AI agent. This setup allows for effective, adaptive interactions by combining critical components, each with defined roles that contribute to seamless task performance.

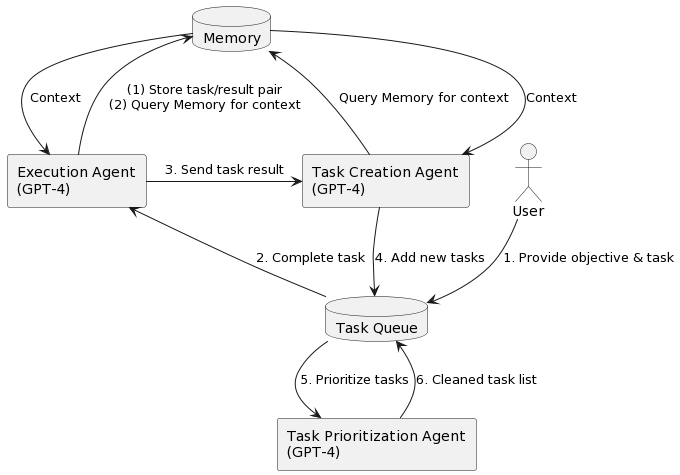

- The image above (source) illustrates the architecture of a typical end-to-end agent pipeline. Below, we explore each component in detail to understand the technical workings of an AI agent.

Agent Core (LLM)

-

At the heart of the agent, the Agent Core functions as the primary decision-making engine, where OpenAI’s GPT-4 is employed to handle high-level reasoning and dynamic task management. This component includes:

- Decision-Making Engine: Analyzes input data, memory, and goals to generate suitable responses.

- Goal Management System: Continuously updates the agent’s objectives based on task progression.

- Integration Bus: Manages the flow of information between memory, tools, and planning modules, ensuring cohesive data exchange.

-

The Agent Core uses the LLM’s capabilities to complete tasks, generate new tasks as needed, and dynamically adjust priorities based on the evolving task context.

Memory Modules

-

Memory is a fundamental part of the framework, with a vector databases (such as Pinecone, Weaviate, Chroma, etc.) providing robust storage and retrieval mechanisms for task-related data. The memory modules enhance the agent’s context-awareness and task relevance through:

- Short-term Memory (STM): Manages temporary data for immediate task requirements, stored in volatile structures like stacks or queues to support quick access and frequent clearing.

- Long-term Memory (LTM): Uses vector databases for persistent storage of historical interactions, enabling the agent to reference past conversations or data over extended periods. Semantic similarity-based retrieval is employed to enhance relevance, factoring in recency and importance for efficient access.

Tools

-

Tools empower the agent with specialized capabilities to execute tasks precisely, often leveraging the LangChain framework for structured workflows. Tools include:

- Executable Workflows: Defined within LangChain, providing structured, data-aware task handling.

- APIs: Facilitate secure access to both internal and external data sources, enriching the agent’s functional range.

- Middleware: Supports data exchange between the core and tools, handling formatting, error-checking, and ensuring security.

-

LangChain’s integration enables the agent to dynamically interact with its environment, providing flexibility and adaptability across diverse tasks.

Planning Module

- For complex problem-solving, the Planning Module enables structured approaches like task decomposition and reflection to guide the agent in optimizing solutions. The Task Management system within this module utilizes a deque data structure to autonomously generate, manage, and prioritize tasks. It adjusts priorities in real-time as tasks are completed and new tasks are generated, ensuring goal-aligned task progression.

- In summary, the LLM Agent Framework combines an LLM’s advanced language capabilities with a vector database’s efficient memory system and an agentic framework’s responsive tooling. These integrated components create a cohesive, powerful AI agent capable of adaptive, real-time decision-making and dynamic task execution across complex applications.

Agentic Design Patterns

- Agentic design patterns empower AI models to transcend static interactions, enabling dynamic decision-making, self-assessment, and iterative improvement. These patterns establish structured workflows that allow AI to actively refine its outputs, incorporate new tools, and even collaborate with other AI agents to complete complex tasks. By leveraging agentic patterns, language models evolve from simple, one-step responders to adaptable, reliable, and contextually aware systems, enhancing their application across various domains.

- A well-defined categorization of agentic design patterns is crucial for developing robust and efficient AI agents. By organizing these patterns into a clear framework, developers and researchers can better understand how to structure AI workflows, optimize performance, and ensure that agents are equipped to handle complex, dynamic tasks.

-

Below is a practical framework for classifying the most common agentic design patterns across various applications:

-

Reflection: The agent evaluates its work, identifying areas for improvement and refining its outputs based on this assessment. This process enables continuous improvement, ultimately leading to a more robust and accurate final output.

-

Tool Use: Agents are equipped with specific tools, such as web search or code execution capabilities, to gather necessary information, take actions, or process complex data in real time as part of their tasks.

-

Planning: The agent constructs and follows a comprehensive, step-by-step plan to achieve its objectives. This process may involve outlining, researching, drafting, and revising phases, as is often required in complex writing or coding tasks.

-

Multi-agent Collaboration: Multiple agents collaborate, each taking on distinct roles and contributing unique expertise to solve complex tasks by breaking them down into smaller, more manageable sub-tasks. This approach mirrors human teamwork, where roles like software engineer and QA specialist contribute to different aspects of a project.

-

- These agentic design patterns represent diverse methodologies through which AI agents can optimize task performance, refine outputs, and dynamically adapt workflows. For those exploring multi-agent systems, frameworks such as AutoGen, Crew AI, and LangGraph offer robust platforms for designing and deploying multi-agent solutions. Additionally, open-source projects such as ChatDev simulate a virtual software company operated by AI agents, provide developers with accessible tools to experiment with multi-agent systems.

Reflection

Overview

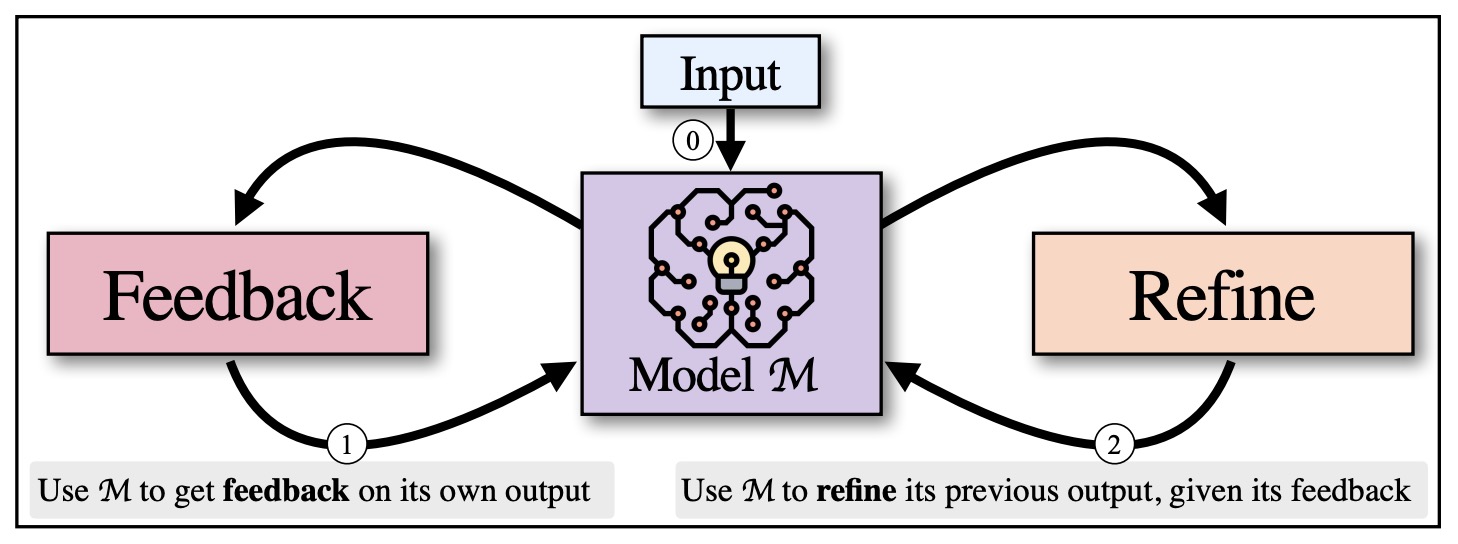

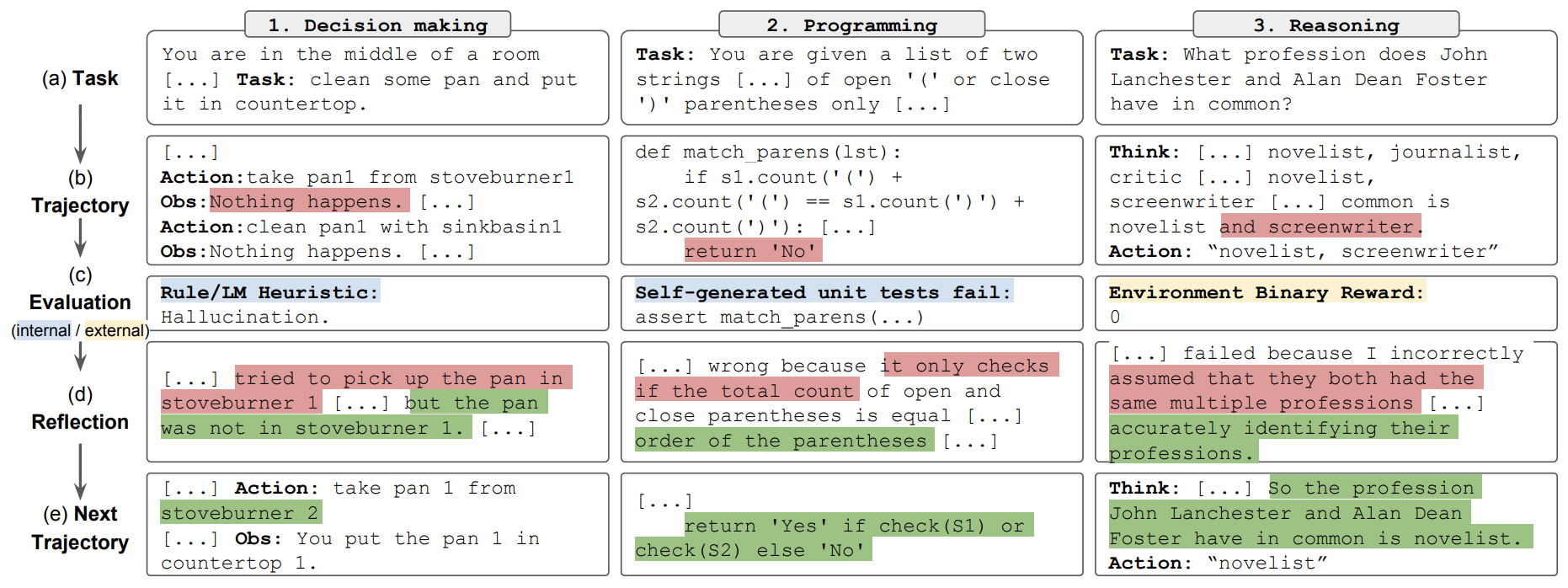

-

To boost the effectiveness of LLMs, a pivotal approach is the incorporation of a reflective mechanism within their workflows. Reflection is a method by which LLMs improve their output quality through self-evaluation and iterative refinement. By implementing this approach, an LLM can autonomously recognize gaps in its output, adjust based on feedback, and ultimately deliver responses that are more precise, efficient, and contextually aligned with user needs. This structured, iterative process transforms the typical query-response interaction into a dynamic cycle of continuous improvement.

-

Reflection represents a relatively straightforward type of agentic workflow, yet it has proven to significantly enhance LLM output quality across diverse applications. By encouraging models to reflect on their performance, refine their responses, and utilize external tools for self-assessment, this design pattern enables models to deliver accurate, efficient, and contextually relevant results. This iterative process not only strengthens an LLM’s ability to produce high-quality outputs but also imbues it with a form of adaptability, allowing it to better meet complex, evolving requirements.

-

The integration of Reflection into agentic workflows is transformative, rendering LLMs more adaptable, self-aware, and capable of handling complex tasks autonomously. As a foundational design pattern, Reflection holds substantial promise for enhancing the efficacy and reliability of LLM-based applications. This approach highlights the growing capacity of these models to function as intelligent, self-improving agents, poised to meet the demands of increasingly sophisticated tasks with minimal human intervention.

Reflection Workflow: Step-by-Step Process

Initial Output Generation

- In a typical task, such as writing code, the LLM is first prompted to generate an initial response aimed at accomplishing a specific goal (e.g., completing “task X”). This response may serve as a draft that will later be subjected to further scrutiny.

Self-Evaluation and Constructive Feedback

-

After producing an initial output, the LLM can be guided to assess its response. For instance, in the case of code generation, it may be prompted with:

“Here’s code intended for task X: [previously generated code].

Check the code carefully for correctness, style, and efficiency, and provide constructive criticism for improvement.” -

This self-critique phase enables the LLM to recognize any flaws in its work. It can identify issues related to correctness, efficiency, and stylistic quality, thus facilitating the detection of areas needing refinement.

Revision Based on Feedback

-

Once the LLM generates feedback on its own output, the agentic workflow proceeds by prompting the model to integrate this feedback into a revised response. In this stage, the context given to the model includes both the original output and the constructive criticism it produced. The LLM then generates a refined version that reflects the improvements suggested during self-reflection.

-

This cycle of criticism and rewriting can be repeated multiple times, resulting in iterative enhancements that significantly elevate the quality of the final output.

Beyond Self-Reflection: Integrating Additional Tools

-

Reflection can be further augmented by equipping the LLM with tools that enable it to evaluate its own output quantitatively. For instance:

- Code Evaluation: The model can run its code through unit tests to verify accuracy, using test cases to ensure correct results.

- Text Validation: The LLM can leverage internet searches or external databases to fact-check and verify textual content.

-

When errors or inaccuracies are detected through these tools, the LLM can reflect on the discrepancies, producing additional feedback and proposing ways to improve the output. This tool-supported reflection enables the LLM to refine its responses even further, effectively combining self-critique with external validation.

Multi-Agent Framework for Enhanced Reflection

- To optimize the Reflection process, a multi-agent framework can be utilized. In this configuration, two distinct agents are employed:

- Output Generation Agent: Primarily responsible for producing responses aimed at achieving the designated task effectively.

- Critique Agent: Tasked with critically evaluating the output of the first agent, offering constructive feedback to enhance its quality.

- Through this dialogue between agents, the LLM achieves improved results, as the two agents collaboratively identify and rectify weaknesses in the output. This cooperative approach introduces a second level of reflection, allowing the LLM to gain insights that a single-agent setup might miss.

Example of Reflection: Multi-Agent Framework for Iterative Code Improvement

- In the context of Reflection, one effective implementation involves a multi-agent interaction where two agents—a Coder Agent and a Critic Agent—collaborate to refine code through iterative feedback and revisions, emphasizing the synergy between generation and critique.

-

Initial Task and Code Generation: The process begins with a prompt given to the Coder Agent, instructing it to “write code for {task}.” The Coder Agent generates an initial version of the code, labeled here as

do_task(x). -

Critique and Error Identification: The Critic Agent then reviews the initial code. In this case, it identifies a specific issue, stating, “There’s a bug on line 5. Fix it by…” and offers a constructive suggestion for improvement. This feedback allows the Coder Agent to understand where the code falls short.

-

Code Revision: Based on the Critic Agent’s feedback, the Coder Agent revises its code, producing an updated version,

do_task_v2(x). This revised code aims to address the issues highlighted in the first critique. -

Further Testing and Feedback: The Critic Agent assesses the new version, testing it further (such as through unit tests). Here, it notes that “It failed Unit Test 3” and advises a further change, indicating that additional refinements are necessary for accuracy.

-

Final Iteration: The Coder Agent, with this additional guidance, creates yet another iteration of the code—

do_task_v3(x). This repeated process of critique and revision continues until the code meets the desired standards for functionality and efficiency.

-

This example highlights the iterative nature of Reflection within a multi-agent framework. By engaging a Coder Agent focused on output generation and a Critic Agent dedicated to providing structured feedback, the system harnesses a continuous improvement loop. This interaction enables large language models to autonomously detect errors, refine logic, and improve their responses.

-

The multi-agent setup exemplifies how Reflection can be operationalized to produce high-quality, reliable results. This structured approach not only enhances the LLM’s output but also mirrors human collaborative workflows, where constructive feedback leads to better solutions through repeated refinement.

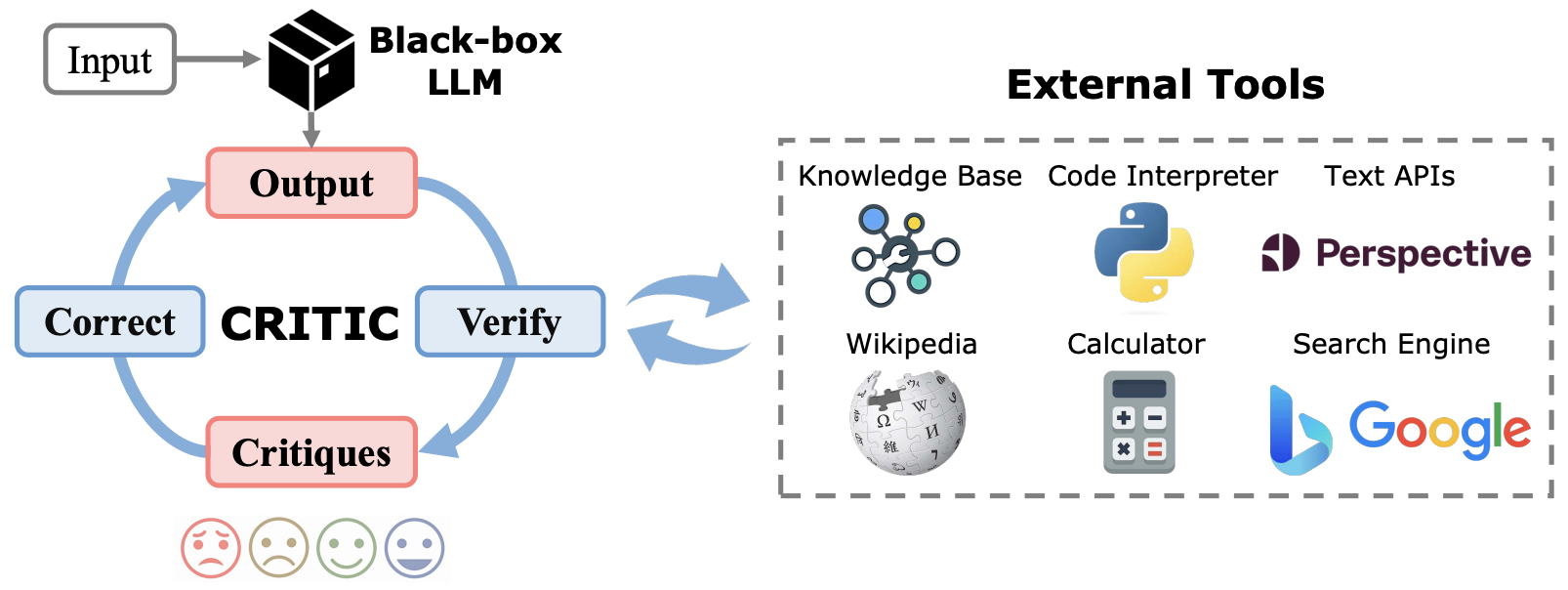

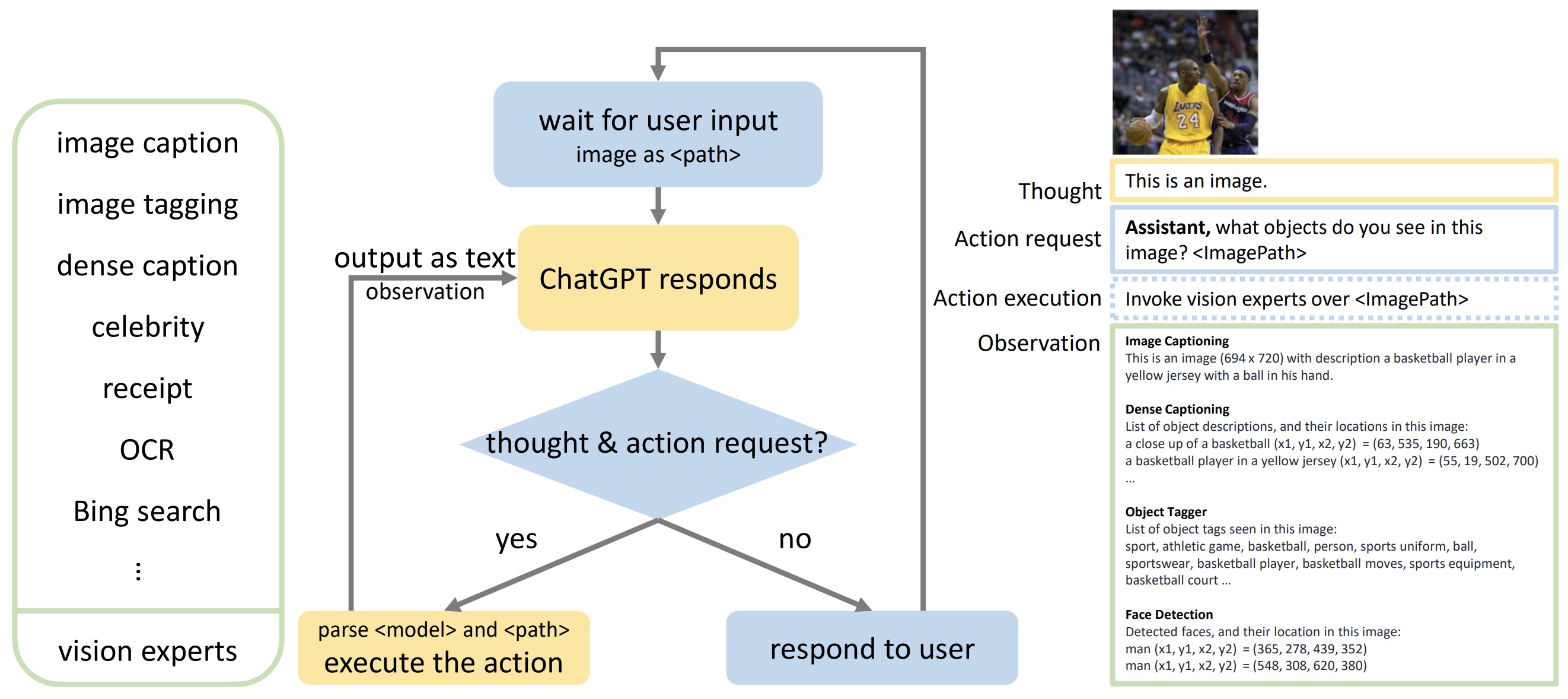

Function/Tool/API Calling

- The advent of Tool Use in LLMs represents a pivotal design pattern in agentic AI workflows, enabling LLMs to perform a diverse range of tasks beyond text generation. Tool Use refers to the capability of an LLM to utilize specific functions—such as executing code, conducting web searches, or interacting with productivity tools—within its responses, effectively expanding its utility far beyond conventional, language-based outputs. This approach allows LLMs to tackle more complex queries and execute multifaceted tasks by selectively invoking various external tools. From answering specific questions to performing calculations, the use of function calls empowers LLMs to provide highly accurate and contextually informed responses.

- A foundational example of Tool Use is seen in scenarios where users request information not available in the model’s pre-existing training data. For instance, if a user asks, “What is the best coffee maker according to reviewers?”, a model equipped with Tool Use may initiate a web search, fetching up-to-date information by generating a command string such as

{tool: web-search, query: "coffee maker reviews"}. Upon processing, the model retrieves relevant pages, synthesizes the data, and delivers an informed response. This dynamic response mechanism emerged from early realizations that traditional transformer-based language models, reliant solely on pre-trained knowledge, were inherently limited. By integrating a web search tool, developers enabled the model to access and incorporate fresh information into its output, a capability now widely adopted across various LLMs in consumer-facing applications. - Moreover, Tool Use enables LLMs to handle calculations and other tasks requiring precision that text generation alone cannot achieve. For example, when a user asks, “If I invest $100 at compound 7% interest for 12 years, what do I have at the end?”, an LLM could respond by executing a Python command like

100 * (1+0.07)**12. The LLM generates a string such as{tool: python-interpreter, code: "100 * (1+0.07)**12"}, and then the calculation tool processes this command to deliver an accurate answer. This illustrates how Tool Use facilitates complex mathematical reasoning within conversational AI systems. - The scope of Tool Use, however, extends well beyond web searches or basic calculations. As the technology has evolved, developers have implemented a wide array of functions, enabling LLMs to interface with multiple external resources. These functions may include accessing specialized databases, interacting with productivity tools like email and calendar applications, generating or interpreting images, and engaging with multiple data sources such as Search (via Google/Bing Search APIs), Wikipedia, and academic repositories like arXiv.

- Systems now prompt LLMs with detailed descriptions of available functions, specifying their capabilities and parameters. With these cues, an LLM can autonomously select the appropriate function to fulfill the user’s request. In settings where hundreds of tools are accessible, developers often employ heuristics to streamline function selection, prioritizing the tools most relevant to the current context—a strategy analogous to the subset selection techniques used in retrieval-augmented generation (RAG) systems.

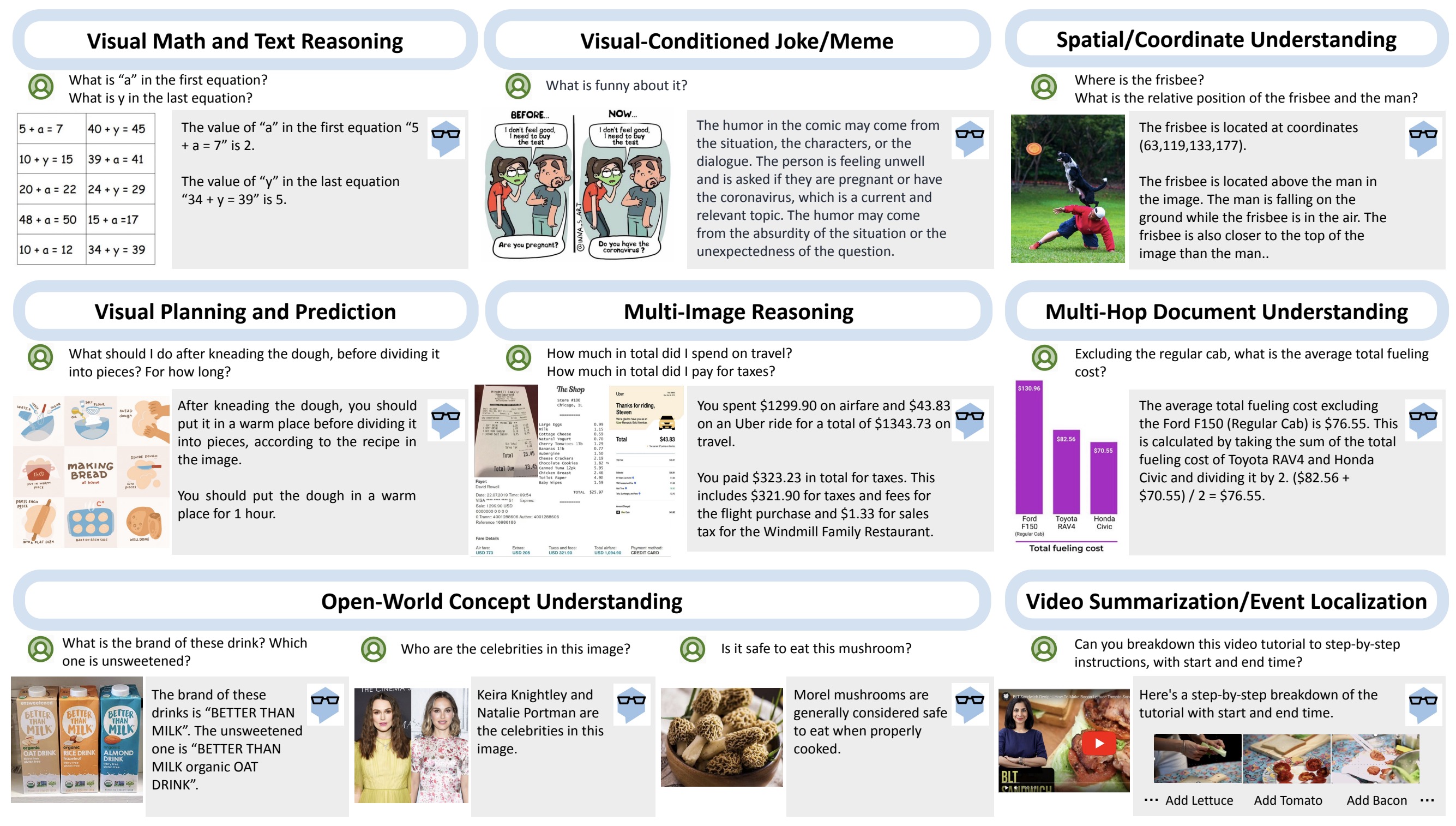

- The development of large multimodal models (LMMs) such as LLaVa, GPT-4V, and Gemini marked another milestone in Tool Use. Prior to these models, LLMs could not process or manipulate images directly, and any image-related tasks had to be offloaded to specific computer vision functions, such as object recognition or scene analysis. The introduction of GPT-4’s function-calling capabilities in 2023 further advanced Tool Use by establishing a more general-purpose function interface, laying the groundwork for a versatile, multimodal AI ecosystem where models seamlessly integrate text, image, and other data types. This new functionality has subsequently led to a proliferation of LLMs designed to exploit Tool Use, broadening the range of applications and enhancing overall adaptability.

- For instance, below is a prompt from SmolLM2 for function calling, where the model is prompted to choose relevant functions based on specific user inquiries is as follows. The prompt template guides SmolLM2 in structuring its function calls precisely and prompts it to assess the relevance and sufficiency of available parameters before executing a function.

You are an expert in composing functions. You are given a question and a set of possible functions.

Based on the question, you will need to make one or more function/tool calls to achieve the purpose.

If none of the functions can be used, point it out and refuse to answer.

If the given question lacks the parameters required by the function, also point it out.

You have access to the following tools:

<tools></tools>

The output MUST strictly adhere to the following format, and NO other text MUST be included.

The example format is as follows. Please make sure the parameter type is correct. If no function call is needed, please make the tool calls an empty list '[]'.

<tool_call>[

{"name": "func_name1", "arguments": {"argument1": "value1", "argument2": "value2"}},

(more tool calls as required)

]</tool_call>

- The evolution of Tool Use and function-calling capabilities in LLMs demonstrates the significant strides taken toward realizing general-purpose, agentic AI workflows. By enabling LLMs to autonomously utilize specialized tools across various contexts, developers have transformed these models from static text generators into dynamic, multifunctional systems capable of addressing a vast array of user needs. As the field advances, we can expect further innovations that expand the breadth and depth of Tool Use, pushing the boundaries of what LLMs can achieve in an integrated, agentic environment.

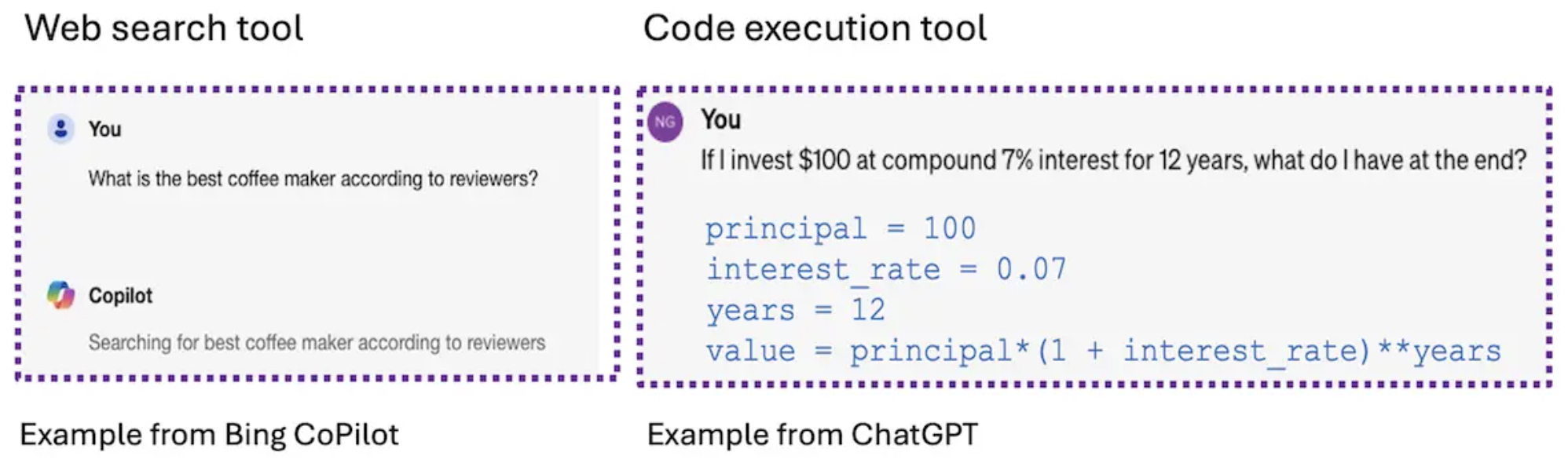

Tool Calling Examples: Web Search and Code Execution

- LLMs can leverage tools such as web search to provide current product recommendations and code execution to handle complex calculations, showcasing their adeptness at choosing and using the right tools based on user input.

- The image below (source) showcases practical examples of tool calling in LLMs, highlighting two specific tools: a web search tool and a code execution tool. In the left panel, an example from Bing Copilot illustrates how an LLM can utilize a web search tool. When a user asks, “What is the best coffee maker according to reviewers?”, the model initiates a web search to gather relevant information from current online reviews. This allows the LLM to provide an informed answer based on up-to-date data.

- The right panel demonstrates an example from ChatGPT using a code execution tool. When a user asks, “If I invest $100 at compound 7% interest for 12 years, what do I have at the end?”, the LLM responds by generating a Python command to calculate the compounded interest. The code snippet,

principal = 100; interest_rate = 0.07; years = 12; value = principal * (1 + interest_rate) ** years, is executed, providing an accurate financial calculation rather than relying solely on text-based reasoning. - These examples illustrate the model’s ability to identify and select the appropriate tool based on the user’s query, further demonstrating the flexibility and enhanced capabilities of Tool Use in agentic LLM workflows.

Function Calling Datasets

Hermes Function-Calling V1

- The Hermes Function-Calling V1 dataset is designed for training language models to perform structured function calls and return structured outputs based on natural language instructions.

- It includes function-calling conversations, json-mode samples, agentic json-mode, and structured extraction examples, showcasing various scenarios where AI agents interpret queries and execute relevant function calls.

- The Hermes Function-Calling Standard enables language models to execute API calls based on user requests, improving AI’s practical utility by allowing direct API interactions.

Glaive Function Calling V2

- The Glaive Function Calling (52K) and Glaive Function Calling v2 (113K) are datasets generated through Glaive for the task of function calling, in the following format:

SYSTEM: You are an helpful assistant who has access to the following functions to help the user, you can use the functions if needed-

{

JSON function definiton

}

USER: user message

ASSISTANT: assistant message

Function call invocations are formatted as-

ASSISTANT: <functioncall> {json function call}

Response to the function call is formatted as-

FUNCTION RESPONSE: {json function response}

- There are also samples which do not have any function invocations, multiple invocations and samples with no functions presented and invoked to keep the data balanced.

Salesforce’s xlam-function-calling-60k

- Salesforce’s xlam-function-calling-60k contains 60,000 data samples collected by APIGen, an automated data generation pipeline designed to produce verifiable high-quality datasets for function-calling applications. Each data in our dataset is verified through three hierarchical stages: format checking, actual function executions, and semantic verification, ensuring its reliability and correctness.

- Also, xLAM-1b-fc-r and xLAM-7b-fc-r.

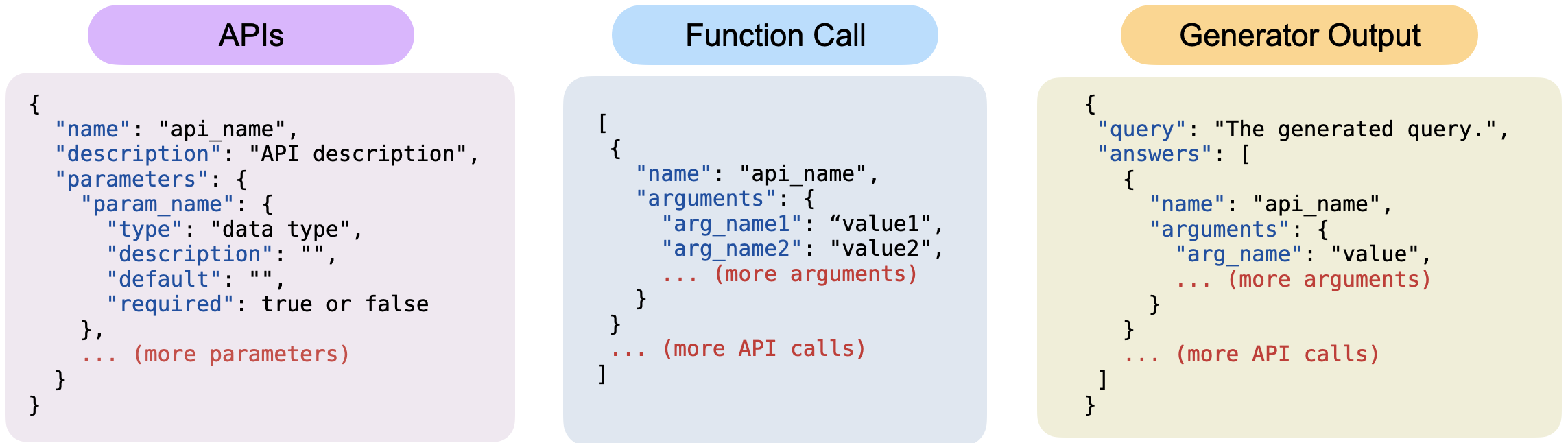

JSON Data Format for Query and Answers

- This JSON data format is used to represent a query along with the available tools and the corresponding answers. Here’s a description of the JSON format which consists of the following key-value pairs: ```

-

query (string): The query or problem statement.

-

tools (array): An array of available tools that can be used to solve the query.

-

Each tool is represented as an object with the following properties:

-

name (string): The name of the tool.

-

description (string): A brief description of what the tool does.

-

parameters (object): An object representing the parameters required by the tool.

-

Each parameter is represented as a key-value pair, where the key is the parameter name and the value is an object with the following properties:

-

type (string): The data type of the parameter (e.g., “int”, “float”, “list”).

-

description (string): A brief description of the parameter.

-

required (boolean): Indicates whether the parameter is required or optional.

-

-

-

-

-

answers (array): An array of answers corresponding to the query.

-

Each answer is represented as an object with the following properties:

-

name (string): The name of the tool used to generate the answer.

-

arguments (object): An object representing the arguments passed to the tool to generate the answer.

- Each argument is represented as a key-value pair, where the key is the parameter name and the value is the corresponding value. ```

-

-

- Note that they format the query, tools, and answers as a string, but you can easily recover each entry to the JSON object via

json.loads(...).

Example

- Here’s an example JSON data:

{

"query": "Find the sum of all the multiples of 3 and 5 between 1 and 1000. Also find the product of the first five prime numbers.",

"tools": [

{

"name": "math_toolkit.sum_of_multiples",

"description": "Find the sum of all multiples of specified numbers within a specified range.",

"parameters": {

"lower_limit": {

"type": "int",

"description": "The start of the range (inclusive).",

"required": true

},

"upper_limit": {

"type": "int",

"description": "The end of the range (inclusive).",

"required": true

},

"multiples": {

"type": "list",

"description": "The numbers to find multiples of.",

"required": true

}

}

},

{

"name": "math_toolkit.product_of_primes",

"description": "Find the product of the first n prime numbers.",

"parameters": {

"count": {

"type": "int",

"description": "The number of prime numbers to multiply together.",

"required": true

}

}

}

],

"answers": [

{

"name": "math_toolkit.sum_of_multiples",

"arguments": {

"lower_limit": 1,

"upper_limit": 1000,

"multiples": [3, 5]

}

},

{

"name": "math_toolkit.product_of_primes",

"arguments": {

"count": 5

}

}

]

}

- In this example, the query asks to find the sum of multiples of 3 and 5 between 1 and 1000, and also find the product of the first five prime numbers. The available tools are

math_toolkit.sum_of_multiplesandmath_toolkit.product_of_primes, along with their parameter descriptions. The answers array provides the specific tool and arguments used to generate each answer.

Synth-APIGen-v0.1

- A dataset of 50k samples by Argilla.

Example

- This example demonstrates the use of the

complex_to_polarfunction, which is designed to convert complex numbers into their polar coordinate representations. The input query requests conversions for two specific complex numbers,3 + 4jand1 - 2j, showcasing how the function can be called with different arguments to obtain their polar forms.

{

"func_name": "complex_to_polar",

"func_desc": "Converts a complex number to its polar coordinate representation.",

"tools": "[{\"type\":\"function\",\"function\":{\"name\":\"complex_to_polar\",\"description\":\"Converts a complex number to its polar coordinate representation.\",\"parameters\":{\"type\":\"object\",\"properties\":{\"complex_number\":{\"type\":\"object\",\"description\":\"A complex number in the form of `real + imaginary * 1j`.\"}},\"required\":[\"complex_number\"]}}}]",

"query": "I'd like to convert the complex number 3 + 4j and 1 - 2j to polar coordinates.",

"answers": "[{\"name\": \"complex_to_polar\", \"arguments\": {\"complex_number\": \"3 + 4j\"}}, {\"name\": \"complex_to_polar\", \"arguments\": {\"complex_number\": \"1 - 2j\"}}]",

"model_name": "meta-llama/Meta-Llama-3.1-70B-Instruct",

"hash_id": "f873783c04bbddd9d79f47287fa3b6705b3eaea0e5bc126fba91366f7b8b07e9",

}

],

"category": "E-commerce Platforms",

"subcategory": "Kayak",

"task": "Flight Search"

}

Evaluation

- To ensure that Tool Use capabilities meet the demands of diverse real-world scenarios, it is crucial to evaluate the function-calling performance of LLMs rigorously. This evaluation encompasses assessing model performance across both Python and non-Python programming environments, with a focus on how effectively the model can execute functions, select the appropriate tools, and discern when a function is necessary within a conversational context. An essential aspect of this evaluation is testing the model’s ability to invoke functions accurately based on user prompts and determine whether certain functions are applicable or needed.

- This structured evaluation methodology enables a holistic understanding of the model’s function-calling performance, combining both syntactic accuracy and real-world execution fidelity. By examining the model’s ability to navigate various programming contexts and detect relevance in function invocation, this approach underscores the practical reliability of LLMs in diverse applications.

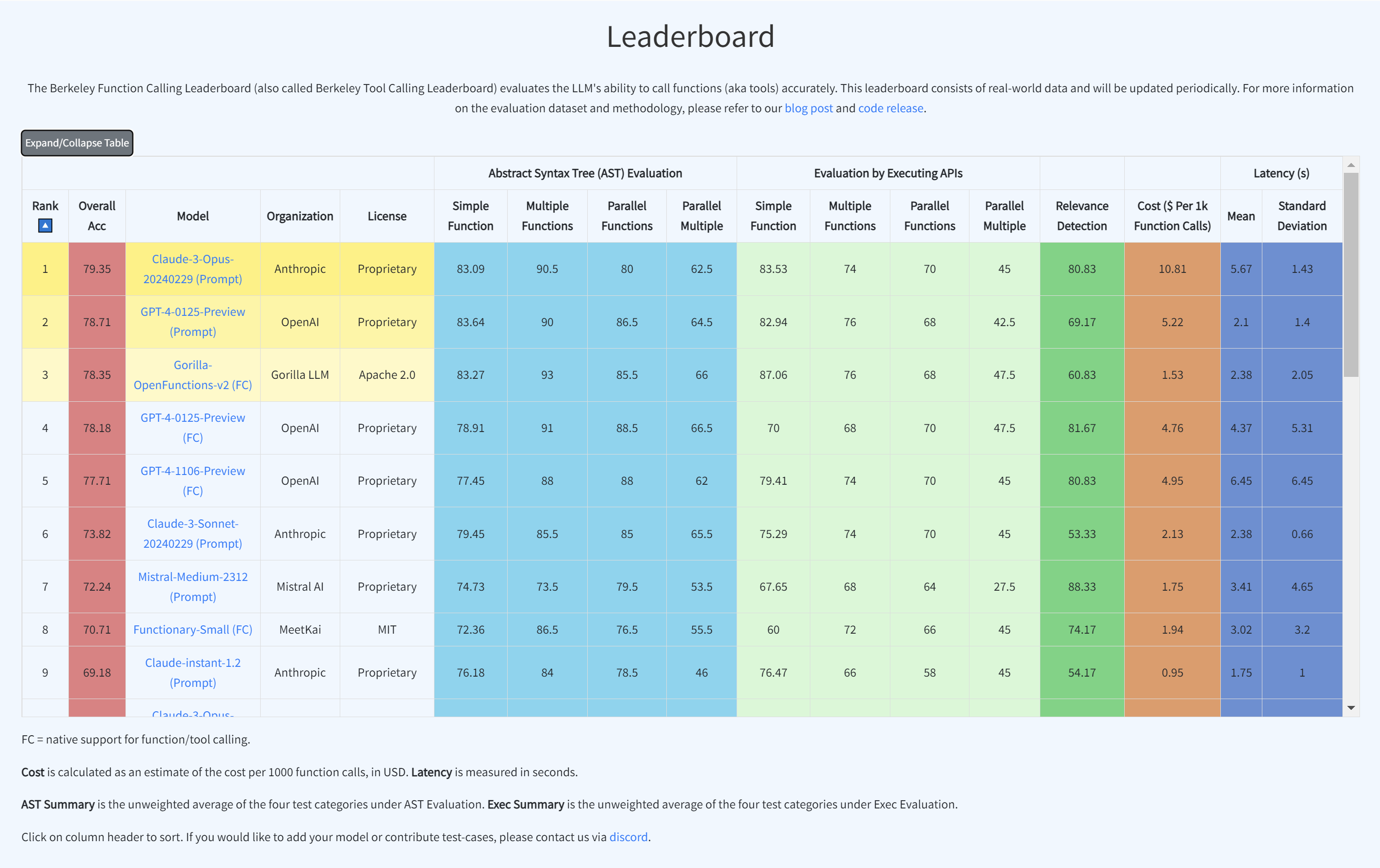

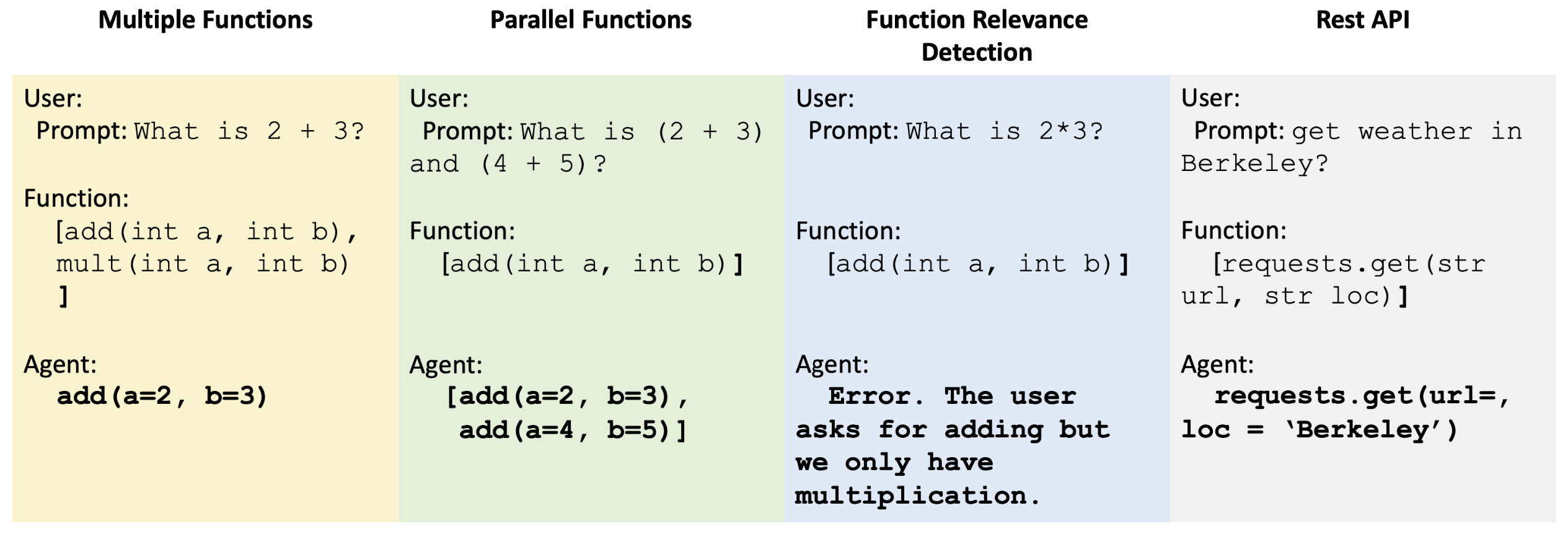

Berkeley Function-Calling Leaderboard

- Berkeley Function-Calling Leaderboard (BFCL) assesses the function-calling capabilities of various LLMs. It consists of 2,000 question-function-answer pairs across multiple programming languages (Python, Java, JavaScript, REST API, SQL).

- The evaluation covers complex use cases, including simple, multiple, and parallel function calls, requiring the selection and simultaneous execution of functions.

- BFCL tests function relevance detection to see how models handle irrelevant functions, expecting them to return an error message.

- Both proprietary and open-source models perform similarly in simple scenarios, but GPT-series models excel in more complex function-calling tasks.

- The Gorilla OpenFunctions dataset has expanded from 100 to 2,000 data points, increasing diversity and complexity in evaluations. The dataset includes functions from varied fields such as Mathematics, Sports, Finance, and more, covering 40 sub-domains.

- Evaluations are divided into Python (simple, multiple, parallel, parallel multiple functions) and Non-Python (chatting capability, function relevance, REST API, SQL, Java, JavaScript) categories.

- Python evaluations cover scenarios from single function calls to complex parallel multiple function calls.

- Non-Python evaluations test models on general-purpose chat, relevance detection, and specific API and language scenarios.

- Function relevance detection is a key focus, evaluating whether models avoid using irrelevant functions and highlighting their potential for hallucination.

- REST API testing involves real-world GET requests with parameters in URLs and headers, assessing models’ ability to generate executable API calls.

- SQL evaluation includes basic SQL queries, while Java and JavaScript testing focus on language-specific function-calling abilities.

- BFCL uses AST evaluation to check syntax and structural accuracy, and executable evaluation to verify real-world function execution.

- AST evaluation ensures function matching, parameter consistency, and type/value accuracy.

- Executable function evaluation runs generated functions to verify response accuracy and consistency, particularly for REST APIs.

- The evaluation approach requires complete matching of model outputs to expected results; partial matches are considered failures.

- Ongoing development includes continuous updates and community feedback to refine evaluation methods, especially for SQL and chat capabilities.

Python Evaluation

- Inspired by the Berkeley Function-Calling Leaderboard and APIGen, the evaluation framework can be organized by function type (simple, multiple, parallel, parallel multiple) or evaluation method (AST or execution of APIs). This categorization helps in comparing model performances on standard function-calling scenarios and assessing their accuracy and efficiency. By structuring the evaluation in this way, it provides a comprehensive view of how well the model performs across different types of function calls and under varying conditions. More on the section on Evaluation Methods.

- The Python evaluation categories, listed below, assess the model’s ability to handle single and multiple function calls, both sequentially and in parallel. These tests simulate realistic scenarios where the model must interpret user queries, select appropriate functions, and execute them accurately, mimicking real-world applications. By testing these different scenarios, the evaluation can highlight the model’s proficiency in using Python-based function calls under varying degrees of complexity and concurrency.

-

Simple Function: In this category, the evaluation involves a single, straightforward function call. The user provides a JSON function document, and the model is expected to invoke only one function call. This test examines the model’s ability to handle the most common and basic type of function call correctly.

-

Parallel Function: This evaluation scenario requires the model to make multiple function calls in parallel in response to a single user query. The model must identify how many function calls are necessary and initiate them simultaneously, regardless of the complexity or length of the user query.

-

Multiple Function: This category involves scenarios where the user input can be matched to one function call out of two to four available JSON function documentations. The model must accurately select the most appropriate function to call based on the given context.

-

Parallel Multiple Function: This is a complex evaluation combining both parallel and multiple function categories. The model is presented with multiple function documentations, and each relevant function may need to be invoked zero or more times in parallel.

- As mentioned earlier, each Python evaluation category includes both Abstract Syntax Tree (AST) and executable evaluations. A significant limitation of AST evaluation is the variety of methods available to construct function calls that achieve the same result, leading to challenges in consistency and accuracy. In these cases, executable evaluations provide a more reliable alternative by directly running the code to verify outcomes, allowing for precise and practical validation of functionality across different coding approaches.

Non-Python Evaluation

- The non-Python evaluation categories, listed below, test the model’s ability to handle diverse scenarios involving conversation, relevance detection, and the use of different programming languages and technologies. These evaluations provide insights into the model’s adaptability to various contexts beyond Python. By including these diverse categories, the evaluation aims to ensure that the model is versatile and capable of handling various use cases, making it applicable in a broad range of applications.

-

Chatting Capability: This category evaluates the model’s general conversational abilities without invoking functions. The goal is to see if the model can maintain coherent dialogue and recognize when function calls are unnecessary. This is distinct from function relevance detection, which involves determining the suitability of invoking any provided functions.

-

Function Relevance Detection: This tests whether the model can discern when none of the provided functions are relevant. The ideal outcome is that the model refrains from making any function calls, demonstrating an understanding of when it lacks the required function information or user instruction.

-

REST API: This evaluation focuses on the model’s ability to generate and execute realistic REST API calls using Python’s requests library. It tests the model’s understanding of GET requests, including path and query parameters, and its ability to generate calls that match real-world API documentation.

-

SQL: This category assesses the model’s ability to construct simple SQL queries using custom

sql.executefunctions. The evaluation is limited to basic SQL operations like SELECT, INSERT, UPDATE, DELETE, and CREATE, testing whether the model can generalize function-calling capabilities beyond Python. -

Java + JavaScript: Despite the uniformity in function-calling formats across languages, this evaluation examines how well the model adapts to language-specific types and syntax, such as Java’s HashMap. It includes examples that test the model’s handling of Java and JavaScript, emphasizing the need for language-specific adaptations.

Evaluation Methods

-

Two primary methods are used to evaluate model performance:

-

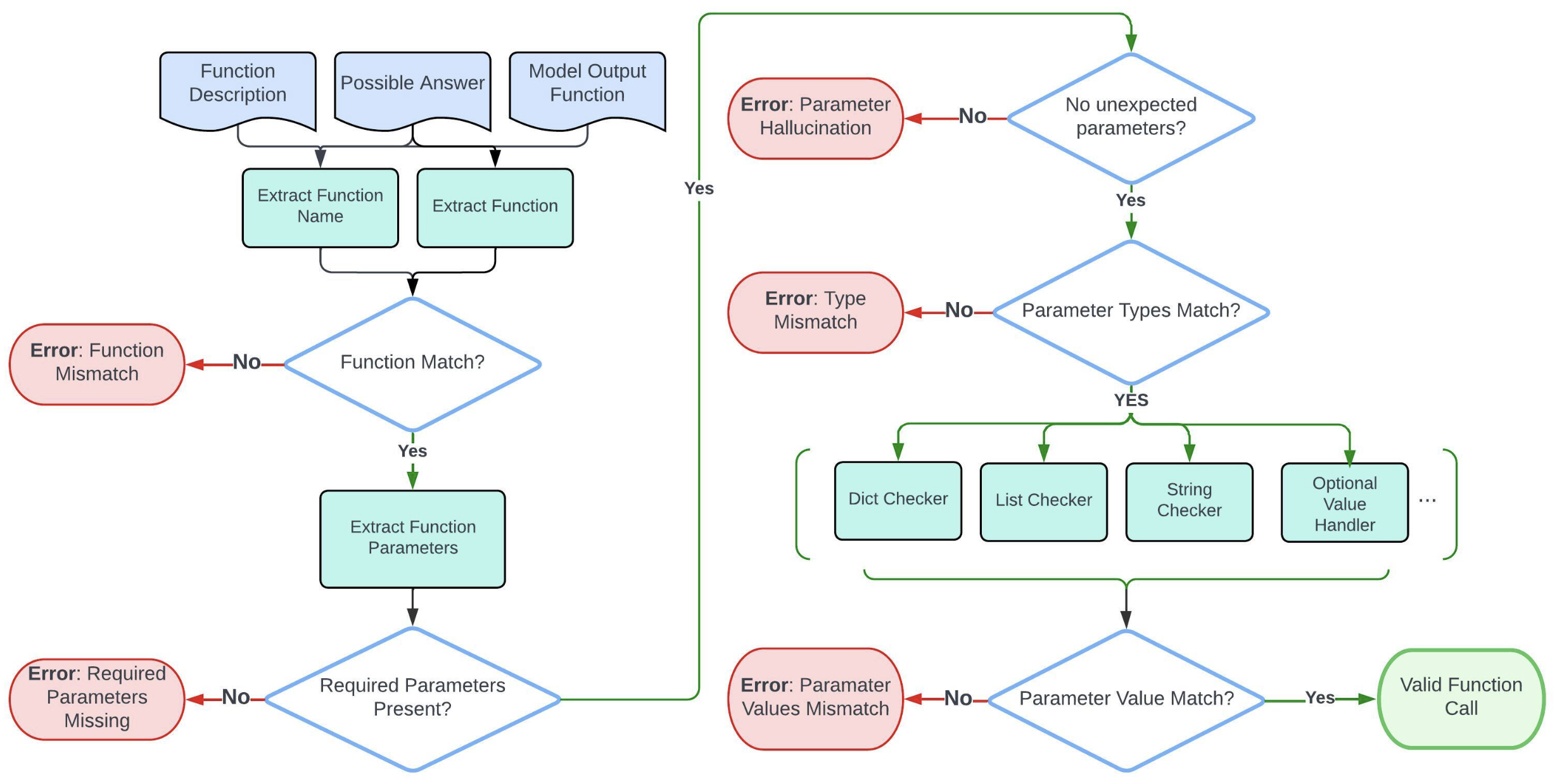

Abstract Syntax Tree (AST) Evaluation: AST evaluation involves parsing the model-generated function calls to check their structure against expected outputs. It verifies the function name, parameter presence, and type correctness. AST evaluation is ideal for cases where execution isn’t feasible due to language constraints or when the result cannot be easily executed.

- Simple Function AST Evaluation

- The AST evaluation process focuses on comparing a single model output function against its function doc and possible answers. Here is a flow chart (source) that shows the step-by-step evaluation process.

- The AST evaluation process focuses on comparing a single model output function against its function doc and possible answers. Here is a flow chart (source) that shows the step-by-step evaluation process.

- Multiple/Parallel/Parallel-Multiple Functions AST Evaluation

- The multiple, parallel, or parallel-multiple function AST evaluation process extends the idea in the simple function evaluation to support multiple model outputs and possible answers.

- The evaluation process first associates each possible answer with its function doc. Then it iterates over the model outputs and calls the simple function evaluation on each function (which takes in one model output, one possible answer, and one function doc).

- The order of model outputs relative to possible answers is not required. A model output can match with any possible answer.

- The evaluation process first associates each possible answer with its function doc. Then it iterates over the model outputs and calls the simple function evaluation on each function (which takes in one model output, one possible answer, and one function doc).

- The evaluation employs an all-or-nothing approach to evaluation. Failure to find a match across all model outputs for any given possible answer results in a failed evaluation.

- The multiple, parallel, or parallel-multiple function AST evaluation process extends the idea in the simple function evaluation to support multiple model outputs and possible answers.

- Simple Function AST Evaluation

-

Executable Function Evaluation: This metric assesses the model by executing the function calls it generates and comparing the outputs against expected results. This evaluation is crucial for testing real-world applicability, focusing on whether the function calls run successfully, produce the correct types of responses, and maintain structural consistency in their outputs.

-

-

The combination of AST and executable evaluations ensures a comprehensive assessment, providing insights into both the syntactic and functional correctness of the model’s output.

Inference Example Output with XML and JSON

-

Typical function calling datasets uses a combination of both XML and JSON elements (cf. inference output sample below), as detailed below.

-

XML Structure: Elements like

<|im_start|>,<tool_call>, and<tool_response>resemble XML-like tags, which help demarcate different parts of the communication. -

Dictionary/JSON Structure: Within the

<tool_call>and<tool_response>tags, the data for the function arguments and the stock fundamentals is formatted as Python-style dictionaries (or JSON-like key-value pairs), such as{'symbol': 'TSLA'}and{"name": "get_stock_fundamentals", "content": {'symbol': 'TSLA', 'company_name': 'Tesla, Inc.' ...}}.

-

-

This combination provides an XML-like structure for message flow and JSON for data representation, allowing for structured, nested data representation and demarcation of sections.

-

Here’s an example of the inference output from Hermes Function-Calling V1:

<|im_start|>user

Fetch the stock fundamentals data for Tesla (TSLA)<|im_end|>

<|im_start|>assistant

<tool_call>

{'arguments': {'symbol': 'TSLA'}, 'name': 'get_stock_fundamentals'}

</tool_call><|im_end|>

<|im_start|>tool

<tool_response>

{"name": "get_stock_fundamentals", "content": {'symbol': 'TSLA', 'company_name': 'Tesla, Inc.', 'sector': 'Consumer Cyclical', 'industry': 'Auto Manufacturers', 'market_cap': 611384164352, 'pe_ratio': 49.604652, 'pb_ratio': 9.762013, 'dividend_yield': None, 'eps': 4.3, 'beta': 2.427, '52_week_high': 299.29, '52_week_low': 152.37}}

</tool_response>

<|im_end|>

JSON/Structured Outputs

- Once a model is trained on a system prompt that asks for JSON-based structured outputs (below), the model should respond with only a JSON object response, based on the specific JSON schema provided.

<|im_start|>system

You are a helpful assistant that answers in JSON. Here's the JSON schema you must adhere to:\n<schema>\n{schema}\n</schema><|im_end|>

-

The schema can be made from a pydantic object using (e.g., a standalone script available is here from Hermes Function-Calling V1).

-

As an example from Hermes Function-Calling V1:

{

"id": "753d8365-0e54-43b1-9514-3f9b819fd31c",

"conversations": [

{

"from": "system",

"value": "You are a function calling AI model. You are provided with function signatures within <tools> </tools> XML tags. You may call one or more functions to assist with the user query. Don't make assumptions about what values to plug into functions.\n<tools>\n[{'type': 'function', 'function': {'name': 'search_flights', 'description': 'Searches for flights based on departure and destination cities, dates, class, and other preferences.', 'parameters': {'type': 'object', 'properties': {'departure_city': {'type': 'string', 'description': 'The city from which the flight will depart.'}, 'destination_city': {'type': 'string', 'description': 'The destination city for the flight.'}, 'departure_date': {'type': 'string', 'description': 'The departure date for the flight.', 'format': 'date'}, 'return_date': {'type': 'string', 'description': 'The return date for the flight.', 'format': 'date'}, 'class': {'type': 'string', 'description': 'The class of the flight ticket.', 'enum': ['economy', 'business', 'first']}, 'flexible_cancellation': {'type': 'boolean', 'description': 'Indicates if the search should filter for flights with flexible cancellation policies.'}}, 'required': ['departure_city', 'destination_city', 'departure_date', 'return_date', 'class']}}}]\n</tools>\nFor each function call return a json object with function name and arguments within <tool_call> </tool_call> tags with the following schema:\n<tool_call>\n{'arguments': <args-dict>, 'name': <function-name>}\n</tool_call>\n"

},

{

"from": "human",

"value": "I'm planning a kayaking trip and looking to book flights from Los Angeles to Auckland. My departure is scheduled for July 10th, 2023, and I intend to return on July 24th, 2023. I would prefer to travel in economy class and would also like the option to have flexible cancellation policies for the tickets due to the uncertain nature of outdoor activities. Could you please search for flights that meet these criteria and provide me with the available options?"

},

{

"from": "gpt",

"value": "<tool_call>\n{'arguments': {'departure_city': 'Los Angeles', 'destination_city': 'Auckland', 'departure_date': '2023-07-10', 'return_date': '2023-07-24', 'class': 'economy', 'flexible_cancellation': True}, 'name': 'search_flights'}\n</tool_call>\n"

},

{

"from": "tool",

"value": "<tool_response>\n{\"name\": \"search_flights\", \"content\": {\"flights\": [{\"flight_number\": \"AA118\", \"airline\": \"American Airlines\", \"departure_airport\": \"LAX\", \"departure_city\": \"Los Angeles\", \"departure_date\": \"2023-07-10T08:00:00Z\", \"departure_terminal\": \"4\", \"arrival_airport\": \"AKL\", \"arrival_city\": \"Auckland\", \"arrival_date\": \"2023-07-11T14:25:00Z\", \"arrival_terminal\": \"I\", \"duration\": \"17h 25m\", \"class\": \"Economy\", \"price\": \"$950\", \"flexible_cancellation\": true}, {\"flight_number\": \"UA796\", \"airline\": \"United Airlines\", \"departure_airport\": \"LAX\", \"departure_city\": \"Los Angeles\", \"departure_date\": \"2023-07-10T11:30:00Z\", \"departure_terminal\": \"7\", \"arrival_airport\": \"AKL\", \"arrival_city\": \"Auckland\", \"arrival_date\": \"2023-07-11T18:30:00Z\", \"arrival_terminal\": \"I\", \"duration\": \"16h 0m\", \"class\": \"Economy\", \"price\": \"$975\", \"flexible_cancellation\": true}, {\"flight_number\": \"NZ6\", \"airline\": \"Air New Zealand\", \"departure_airport\": \"LAX\", \"departure_city\": \"Los Angeles\", \"departure_date\": \"2023-07-10T21:00:00Z\", \"departure_terminal\": \"B\", \"arrival_airport\": \"AKL\", \"arrival_city\": \"Auckland\", \"arrival_date\": \"2023-07-12T05:20:00Z\", \"arrival_terminal\": \"I\", \"duration\": \"13h 20m\", \"class\": \"Economy\", \"price\": \"$1020\", \"flexible_cancellation\": true}], \"return_flights\": [{\"flight_number\": \"AA117\", \"airline\": \"American Airlines\", \"departure_airport\": \"AKL\", \"departure_city\": \"Auckland\", \"departure_date\": \"2023-07-24T14:10:00Z\", \"departure_terminal\": \"I\", \"arrival_airport\": \"LAX\", \"arrival_city\": \"Los Angeles\", \"arrival_date\": \"2023-07-24T06:00:00Z\", \"arrival_terminal\": \"4\", \"duration\": \"12h 50m\", \"class\": \"Economy\", \"price\": \"$950\", \"flexible_cancellation\": true}, {\"flight_number\": \"UA797\", \"airline\": \"United Airlines\", \"departure_airport\": \"AKL\", \"departure_city\": \"Auckland\", \"departure_date\": \"2023-07-24T19:15:00Z\", \"departure_terminal\": \"I\", \"arrival_airport\": \"LAX\", \"arrival_city\": \"Los Angeles\", \"arrival_date\": \"2023-07-24T10:15:00Z\", \"arrival_terminal\": \"7\", \"duration\": \"11h 0m\", \"class\": \"Economy\", \"price\": \"$975\", \"flexible_cancellation\": true}, {\"flight_number\": \"NZ5\", \"airline\": \"Air New Zealand\", \"departure_airport\": \"AKL\", \"departure_city\": \"Auckland\", \"departure_date\": \"2023-07-24T19:30:00Z\", \"departure_terminal\": \"I\", \"arrival_airport\": \"LAX\", \"arrival_city\": \"Los Angeles\", \"arrival_date\": \"2023-07-24T10:30:00Z\", \"arrival_terminal\": \"B\", \"duration\": \"12h 0m\", \"class\": \"Economy\", \"price\": \"$1020\", \"flexible_cancellation\": true}]}}\n</tool_response>\n"

},

{

"from": "gpt",

"value": "Here are the available flight options with flexible cancellation policies for your kayaking trip from Los Angeles to Auckland:\n\n**Outbound Flights (Los Angeles to Auckland):**\n1. **American Airlines AA118**\n - Departure: July 10, 2023, 8:00 AM from LAX Terminal 4\n - Arrival: July 11, 2023, 2:25 PM at AKL Terminal I\n - Duration: 17h 25m\n - Price: $950\n\n2. **United Airlines UA796**\n - Departure: July 10, 2023, 11:30 AM from LAX Terminal 7\n - Arrival: July 11, 2023, 6:30 PM at AKL Terminal I\n - Duration: 16h 0m\n - Price: $975\n\n3. **Air New Zealand NZ6**\n - Departure: July 10, 2023, 9:00 PM from LAX Terminal B\n - Arrival: July 12, 2023, 5:20 AM at AKL Terminal I\n - Duration: 13h 20m\n - Price: $1020\n\n**Return Flights (Auckland to Los Angeles):**\n1. **American Airlines AA117**\n - Departure: July 24, 2023, 2:10 PM from AKL Terminal I\n - Arrival: July 24, 2023, 6:00 AM at LAX Terminal 4\n - Duration: 12h 50m\n - Price: $950\n\n2. **United Airlines UA797**\n - Departure: July 24, 2023, 7:15 PM from AKL Terminal I\n - Arrival: July 24, 2023, 10:15 AM at LAX Terminal 7\n - Duration: 11h 0m\n - Price: $975\n\n3. **Air New Zealand NZ5**\n - Departure: July 24, 2023, 7:30 PM from AKL Terminal I\n - Arrival: July 24, 2023, 10:30 AM at LAX Terminal B\n - Duration: 12h 0m\n - Price: $1020\n\nAll the listed prices are in economy class with flexible cancellation policies. Please review the options and let me know if you would like to proceed with booking any of these flights or if you need further assistance."

}

],

"category": "E-commerce Platforms",

"subcategory": "Kayak",

"task": "Flight Search"

}

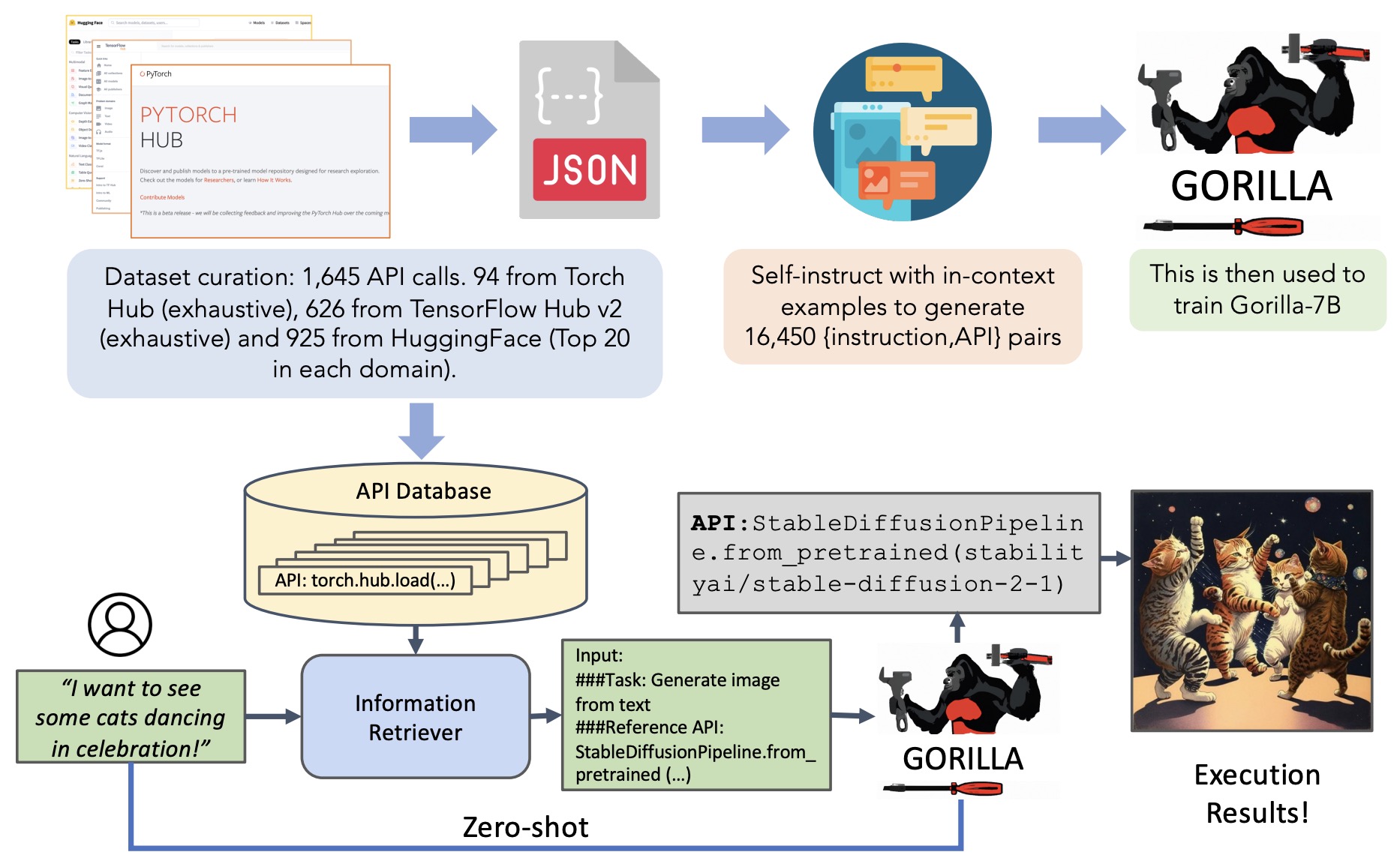

Gorilla OpenFunctions-v2 LLM

-

Overview:

- State-of-the-Art Performance: Gorilla OpenFunctions-v2 is an open-source Large Language Model (LLM) that offers advanced function-calling capabilities, comparable to GPT-4.

- Extended Chat Completion: Extends LLM chat completion with the ability to generate executable API calls from natural language instructions and relevant API contexts.

-

Key Features:

- Multi-Function Support:

- Allows selection from multiple available functions based on user instructions, offering flexibility and adaptability within a single prompt.

- Parallel Function Calling:

- Supports executing the same function multiple times with different parameter values, streamlining workflows needing simultaneous function calls.

- Combined Multi & Parallel Functionality:

- Executes both multi-function and parallel function calls in one chat completion call, handling complex API call scenarios in a single prompt for efficient, high-capability outputs.

- Expanded Data Type Support:

- Enhanced compatibility with diverse programming languages by supporting extensive data types:

- Python: Supports

string,number,boolean,list,tuple,dict, andAny. - Java: Includes support for

byte,short,int,float,double,long,boolean,char, and complex types likeArrayList,Set,HashMap, andStack. - JavaScript: Covers

String,Number,BigInt,Boolean,Array,Date,dict (object), andAny.

- Python: Supports

- Extending beyond typical JSON schema limits, this feature allows users to leverage OpenFunctions-v2 in a straightforward plug-and-play fashion without intricate data handling or reliance on string literals.

- Enhanced compatibility with diverse programming languages by supporting extensive data types:

- Function Relevance Detection:

- Minimizes irrelevant function calls by detecting whether the user’s prompt is conversational or function-oriented.

- If no function is relevant, the model raises an “Error” message with additional guidance, helping refine requests and reducing hallucinations.

- Enhanced RESTful API Capabilities:

- Specially trained to handle RESTful API calls, Gorilla OpenFunctions-v2 optimizes interactions with widely-used services, such as Slack and PayPal.

- This high-quality support for REST API execution boosts compatibility across a broad range of applications and services.

- Pioneering Open-Source Model with Seamless Integration:

- As the first open-source model to support multi-language, multi-function, and parallel function calls, Gorilla OpenFunctions-v2 stands at the forefront of function calling in LLMs.

- Integrates effortlessly into diverse applications, making it a seamless drop-in replacement that requires minimal setup.

- Broad Application Compatibility:

- Gorilla OpenFunctions-v2’s versatility supports a wide range of platforms, from social media like Instagram to delivery and utility services such as Google Calendar, Stripe, and DoorDash.

- Its adaptability makes it a top choice for developers aiming to expand functional capabilities across multiple sectors with ease.

- Multi-Function Support:

-

The figure below (source) highlights some of the key features of OpenFunctions-v2:

Example

- The example below demonstrates function calling, where the LLM interprets a natural language prompt to generate an API request. Given the user’s request for weather data at specific coordinates, the model formulates an API call with precise parameters, enabling automated data retrieval.

"User": "Can you fetch me the weather data for the coordinates

37.8651 N, 119.5383 W, including the hourly forecast for temperature,

wind speed, and precipitation for the next 10 days?"

"Function":

{

...

"parameters":

{

"type": "object",

"properties":

{

"url":

{

"type": "string",

"description": "The API endpoint for fetching weather

data from the Open-Meteo API for the given latitude

and longitude, default

https://api.open-meteo.com/v1/forecast"

}

...

}

}

}

"GPT-4 output":

{

"name": "requests.get",

"parameters": {

"params":

{

"latitude": "37.8651",

"longitude": "-119.5383",

"forecast_days": 10

},

}

}

Best Practices, Guidelines, and Limitations

- Per Anthropic, when using tools with Claude, it’s important to follow best practices, understand limitations, and optimize tool design to ensure effective interactions.

Choosing the Right Model for Tool Use

- Claude 3 Opus is best for complex tool use, as it can handle multiple tools simultaneously and detect missing arguments. It will ask for clarification when necessary.

- Claude 3 Haiku is better suited for simple tool use but defaults to using tools more frequently—even when unnecessary. It will also infer missing parameters rather than asking for clarification.

Tool Usage Limits

- Handling large toolsets

- Claude can accurately select from over 250+ tools, as long as the user query includes all required parameters.

- This limit applies regardless of tool complexity. Complex tools typically have numerous parameters or deeply nested schemas.

- Optimizing tool complexity

- Claude performs better with simpler tools.

- To improve accuracy, avoid deeply nested JSON objects and reduce the number of required inputs.

Sequential vs. Parallel Tool Execution

- Claude generally prefers sequential tool execution—using one tool at a time, analyzing the output, and then deciding on the next step.

- While parallel tool use is possible, it may lead to:

- Missing dependencies (e.g., filling in placeholder values for parameters that depend on previous outputs).

- Unnecessary tool invocations.

- Best practice: Design workflows that encourage sequential tool execution to improve accuracy.

Error Handling and Retries

- If Claude’s tool request is invalid, returning an error response will often prompt it to retry with missing parameters filled in.

- However, after 2-3 failed attempts, Claude may stop retrying and instead return an apology message.

Designing Effective Tool Interfaces

- Tools are an essential part of agentic systems, enabling Claude to interact with external services and APIs. However, tools should be carefully designed, just like prompts, to ensure clarity and usability. By thoughtfully designing your tools, you can reduce errors, improve model accuracy, and create more efficient agent workflows, per the below guidelines per Anthropic’s blog on building effective agents.

Choosing the Right Tool Format

- The way tools are structured can significantly impact Claude’s accuracy. Some formats are harder for an LLM to generate correctly than others:

- Diff vs. Full Rewrite:

- Writing a diff requires pre-determining line changes, which is error-prone.

- A full rewrite avoids this complexity.

- Markdown vs. JSON for structured output:

- JSON requires escaping newlines and quotes, increasing error risks.

- Markdown is often easier for Claude to handle accurately.

Best Practices for Tool Design

- Give the model enough tokens to “think” before committing to an output.

- Stick to familiar formats that naturally occur in publicly available text (e.g., Markdown over escaped JSON).

- Minimize formatting overhead—avoid requiring Claude to track line counts or escape large text blocks.

Improving Tool Usability

- Make tool descriptions intuitive—think like a human: Would a developer immediately understand how to use this tool?

- Refine parameter names and descriptions—write them as if documenting an API for a junior developer.

- Test extensively—run real-world inputs in a sandbox environment to uncover edge cases and refine accordingly.

- Poka-yoke (mistake-proof) your tools.

- Example: Instead of using relative file paths, require absolute paths to avoid errors when switching directories.

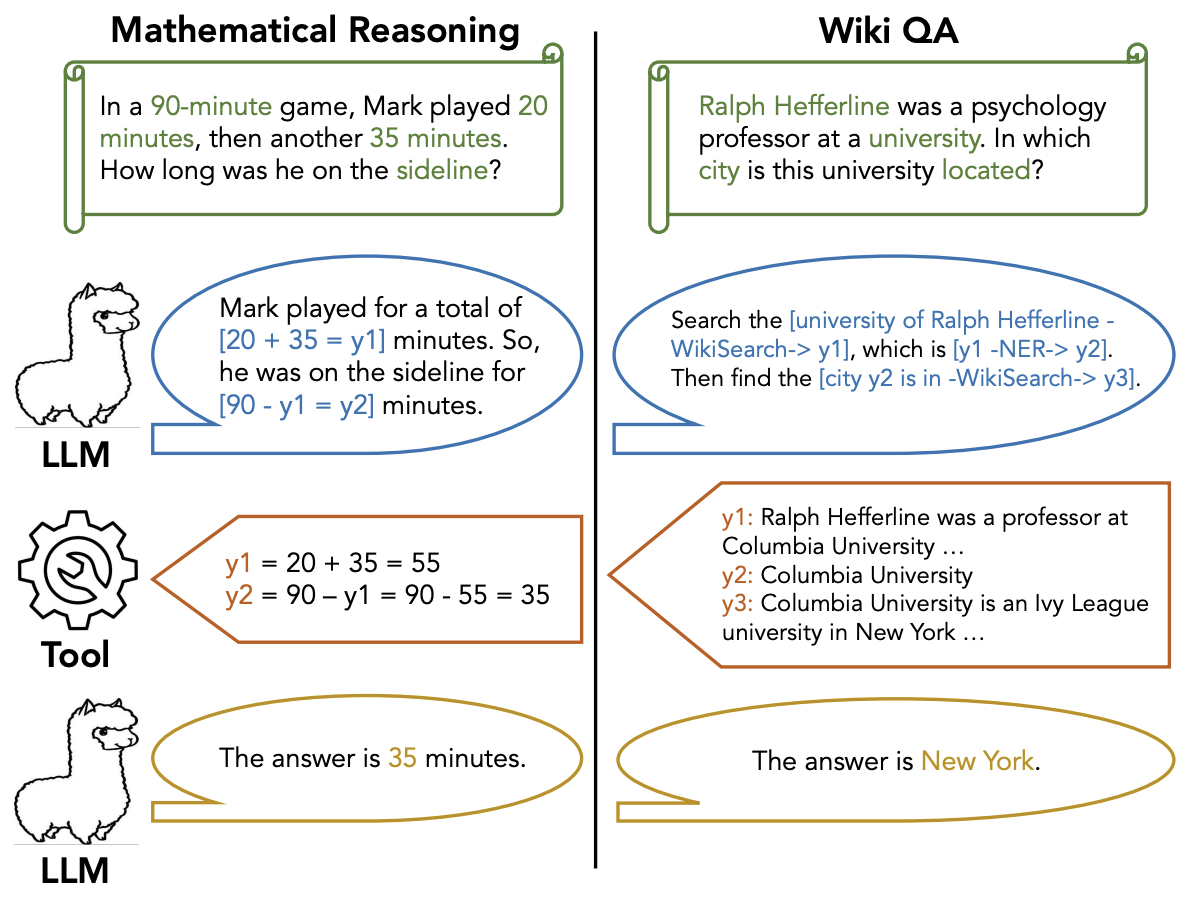

Planning

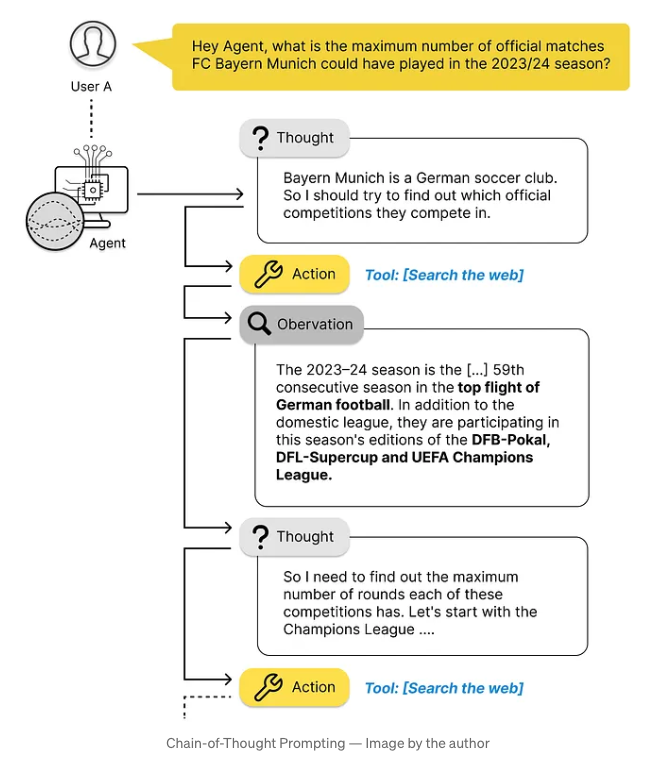

- Planning is a foundational design pattern that empowers an AI system, typically a LLM, to autonomously determine a sequence of actions or steps needed to accomplish complex tasks. Through this dynamic decision-making process, the AI breaks down broad objectives into smaller, manageable steps, executing them in a structured sequence to produce coherent, often intricate outputs. This document delves into the importance of Planning in agentic AI design, illustrating its function with examples and examining its current capabilities alongside its limitations.

- As a transformative design pattern, Planning grants LLMs the ability to autonomously devise and execute plans/strategies for completing tasks. Although current implementations can still exhibit unpredictability, Planning can empower an AI agent with enhanced creative problem-solving capability that enables it to navigate tasks in unforeseen, innovative ways.

- The power of Planning lies in its flexibility and adaptability. When effectively implemented, Planning enables an AI to respond to unforeseen conditions, make informed decisions about task progression, and select tools best suited to each step. This autonomy, however, introduces unpredictability in the agent’s behavior and outcomes.

Overview

- Planning in agentic AI refers to the AI’s ability to autonomously design a task plan, selecting the steps necessary to achieve a given goal. Unlike more deterministic processes, Planning involves a level of adaptability, allowing the AI to adjust its approach based on available tools, task requirements, and unforeseen constraints.

- For example, if an AI agent is tasked with conducting online research on a specific topic, it can independently generate a series of subtasks. These might include identifying key subtopics, gathering relevant information from reputable sources, synthesizing findings, and compiling the research into a cohesive report. Through Planning, the agent does not simply execute pre-programmed instructions but rather determines the optimal sequence of actions to meet the objective.

Example

-

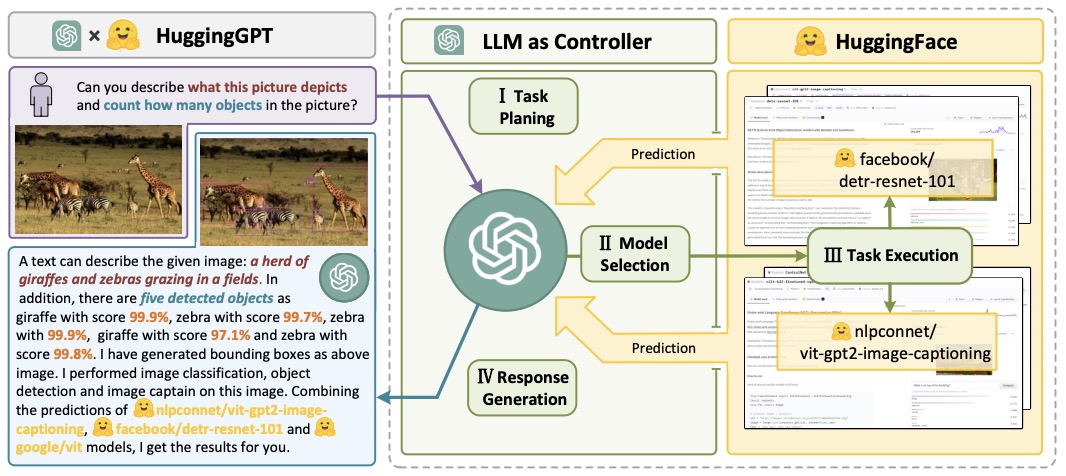

Agentic Planning becomes especially critical when tasks are multifaceted and cannot be completed in a single step. In such cases, an LLM-driven agent dynamically designs a sequence of steps to accomplish the overarching goal. An example from the HuggingGPT paper illustrates this approach: if the objective is to render a picture of a girl in the same pose as a boy in an initial image, the AI might decompose the task as follows:

- Step 1: Detect the pose in the initial picture of the boy using a pose-detection tool, producing a temporary output file (e.g.,

temp1). - Step 2: Use a pose-to-image tool to generate an image of a girl in the detected pose from

temp1, yielding the final output.

- Step 1: Detect the pose in the initial picture of the boy using a pose-detection tool, producing a temporary output file (e.g.,

- In this structured format, the AI specifies each action step, defining the tool to use, the input file, and the expected output. This process then triggers software that invokes the necessary tools in the designated sequence to complete the task successfully. The agent’s autonomous Planning ability facilitates this multi-step workflow, demonstrating its capacity to tackle intricate, non-linear tasks.

- The following figure (source) offers a visual overview of the above process:

Planning vs. Deterministic Approaches

- Planning is not required in every agentic workflow. For simpler tasks or those that follow a predefined sequence, a deterministic, step-by-step approach may suffice. For instance, if an agent is programmed to reflect on and revise its output a fixed number of times, it can execute this series of steps without needing adaptive planning.

- However, for complex or open-ended tasks where it is difficult to predefine the necessary sequence, Planning allows the AI to dynamically decide on the appropriate steps. This adaptive approach is especially valuable for tasks that may involve unexpected challenges or require the agent to select from a range of tools and methods to reach the best outcome.

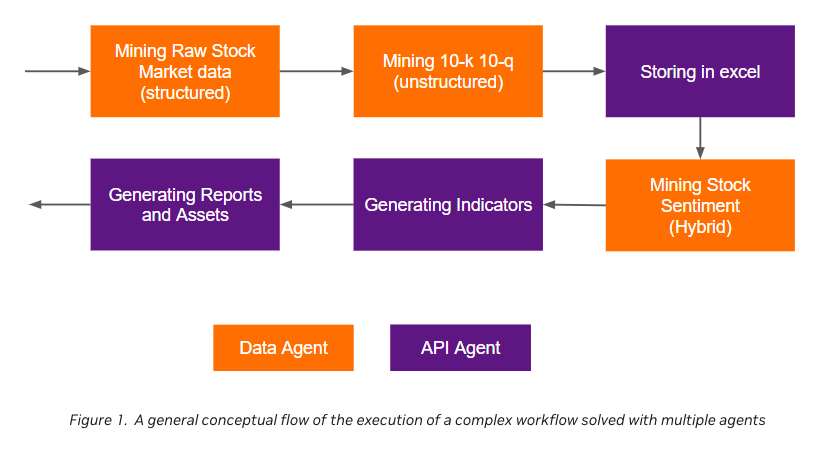

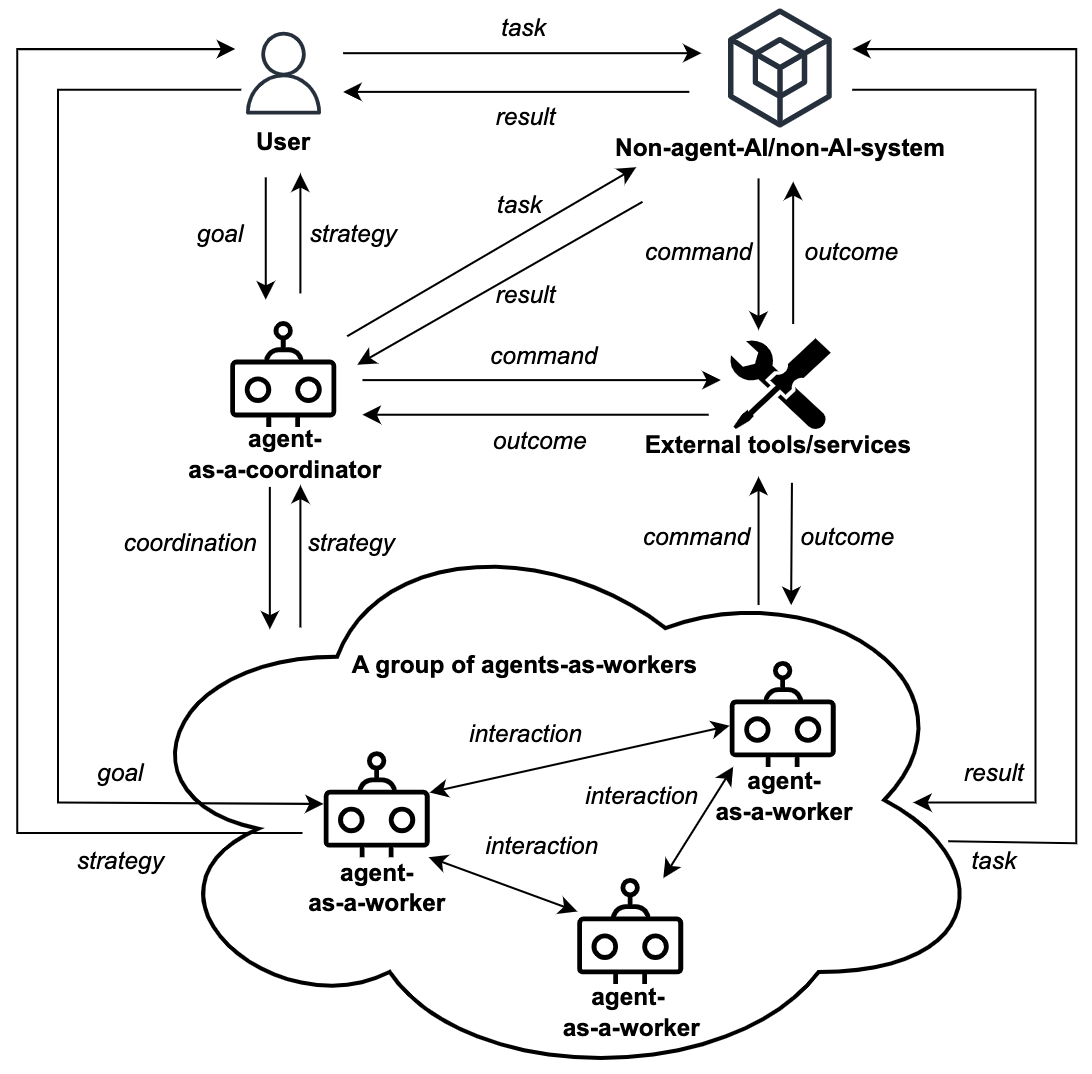

Multi-agent Collaboration

Background

- Multi-agent collaboration has emerged as a pivotal AI design pattern for executing complex tasks by breaking them down into manageable subtasks. By assigning these subtasks to specialized agents—each acting as a software engineer, product manager, designer, QA engineer, etc.—multi-agent collaboration mirrors the structure of a well-coordinated team, where each agent performs specific, designated roles. These agents, whether built by prompting a single LLM in various ways or by employing multiple LLMs, can carry out their assigned tasks with tailored capabilities. For instance, prompting an LLM to act as a “software engineer” by instructing it to “write clear, efficient code” enables it to focus solely on that aspect, thereby honing its output to the requirements of the software engineering subtask.

- This approach has strong parallels in multi-threading, where complex programs are divided across multiple processors or threads to be executed concurrently, improving efficiency and performance. The agentic model thus offers a divide-and-conquer structure that enables AI systems to manage intricate workflows by breaking them into smaller, role-based actions.

Motivation

- The adoption of multi-agent systems in AI is driven by several key factors:

-

Demonstrated Effectiveness: The multi-agent approach has consistently produced positive results across various projects. Ablation studies, such as those presented in the AutoGen paper, have confirmed that multi-agent systems often yield superior performance compared to single-agent configurations for complex tasks. The multi-agent structure allows each agent to focus narrowly on a specific subtask, which is conducive to better performance than attempting to accomplish the entire task in a monolithic approach.

-

Enhanced Task Focus and Optimization: Despite recent advancements allowing some LLMs to accept extensive input contexts (e.g., Gemini 1.5 Pro with 1 million tokens), a multi-agent system still holds distinct advantages. Each agent can be directed to focus on one isolated subtask at a time, enhancing its ability to execute that task with precision. By setting tailored expectations—such as prioritizing code clarity for a “software engineer” agent over scalability or security—developers can optimize the output of each subtask according to specific project requirements.

-

Decomposition of Complex Tasks: Beyond immediate efficiency gains, multi-agent systems offer a powerful conceptual framework for managing complex tasks by breaking them down into smaller, more manageable subtasks. This design pattern enables developers to simplify workflows while simultaneously enhancing communication and task alignment among agents. Much like a manager in a company would assign tasks to specialized employees to address different facets of a project, multi-agent systems use this human organizational structure as a blueprint for assigning AI tasks.

- This design abstraction supports developers in “hiring” agents for distinct roles and assigning tasks according to their “specializations,” with each agent independently executing its workflow, utilizing memory to track interactions, and potentially collaborating with other agents as necessary. Multi-agent workflows can involve dynamic elements like planning and tool use, enabling agents to respond adaptively and collectively in complex scenarios through interconnected calls and message passing.

Implementation

-

While managing human teams has inherent challenges, applying similar organizational strategies to multi-agent AI systems is not only manageable but also offers low-risk flexibility; any issues in an AI agent’s performance are easily rectified. Emerging frameworks such as AutoGen, CrewAI, and LangGraph provide robust platforms for developing and implementing multi-agent systems tailored to diverse applications. Additionally, open-source projects like ChatDev allow developers to experiment with multi-agent setups in a virtual “software company” environment, offering valuable insights into the collaborative potential of AI agents. Such tools represent the leading edge of multi-agent technology, providing a foundation for AI-driven task decomposition and collaboration.

-

In summary, multi-agent collaboration is a compelling and effective AI design pattern that leverages agent specialization, task decomposition, and focused prompting to enable more efficient handling of complex tasks. As multi-agent frameworks continue to advance, they are likely to become foundational in AI-driven workflows, providing developers with both the structure and flexibility to tackle increasingly sophisticated projects.

Agentic Workflow Patterns

- This section examines common patterns observed in the development of agentic systems, beginning with the fundamental component—augmented LLMs—and progressively increasing in complexity, from structured workflows to autonomous agents.

- Each of these patterns enhances the efficiency and effectiveness of agentic systems by structuring tasks in a way that optimally leverages LLM capabilities.

Prompt Chaining

- Prompt chaining involves decomposing a task into a sequence of steps, where each LLM call processes the output of the preceding step. Programmatic validation mechanisms (referred to as “gates”) may be applied at intermediate stages to ensure procedural accuracy.

- Optimal Use Cases:

- This workflow is beneficial when a task can be clearly divided into structured subtasks. It prioritizes improved accuracy over latency by simplifying each LLM call.

- Examples:

- Generating marketing copy and subsequently translating it into another language.

- Creating an outline for a document, verifying its adherence to specific criteria, and then generating the final document based on the outline.

Routing

-

Routing involves classifying an input and directing it to an appropriate specialized task. This approach facilitates the separation of concerns and enables more precise prompts. Without routing, optimizing for one input type may degrade performance for others.

- Optimal Use Cases:

- Routing is effective for tasks with distinct categories that require specialized handling. Classification can be performed by either an LLM or a traditional classification model.

- Examples:

- Categorizing customer service queries (e.g., general inquiries, refund requests, technical support) and routing them to appropriate downstream processes.

- Assigning simple queries to lightweight models (e.g., Claude 3.5 Haiku) while directing complex queries to more capable models (e.g., Claude 3.5 Sonnet) to balance efficiency and cost.

Parallelization

- Parallelization entails multiple LLM instances working on a task simultaneously, with results aggregated programmatically. It manifests in two key forms:

- Sectioning: Dividing a task into independent subtasks that can be processed in parallel.

- Voting: Running the same task multiple times to obtain diverse outputs.

- Optimal Use Cases:

- Parallelization is advantageous when tasks can be effectively subdivided to enhance speed or when multiple perspectives improve reliability.

- Examples:

- Sectioning:

- Implementing safeguards where one LLM processes user queries while another screens for inappropriate content.

- Automating model performance evaluations by assigning different evaluation criteria to separate LLM calls.

- Voting:

- Conducting code reviews for security vulnerabilities, with multiple prompts assessing different aspects of the code.

- Evaluating content for appropriateness by using multiple assessments to balance false positives and negatives.

- Sectioning:

Orchestrator-Workers

-

This workflow features a central LLM that dynamically decomposes tasks, assigns them to worker LLMs, and synthesizes their results.

- Optimal Use Cases:

- This approach is suited for complex tasks where the required subtasks are not predefined but must be determined dynamically. Unlike parallelization, which follows a fixed structure, this workflow offers greater adaptability.

- Examples:

- Software development tools that implement complex modifications across multiple files.

- Research tasks requiring information retrieval and synthesis from diverse sources.

Evaluator-Optimizer

-

In this iterative workflow, one LLM generates responses while another evaluates and refines them in a continuous loop.

- Optimal Use Cases:

- This pattern is particularly effective when clear evaluation criteria exist and iterative refinement adds measurable value. It is most beneficial when:

- Human feedback has been shown to enhance LLM-generated outputs.

- The LLM itself is capable of providing constructive feedback.

- This pattern is particularly effective when clear evaluation criteria exist and iterative refinement adds measurable value. It is most beneficial when:

- Examples:

- Literary translation, where an evaluator LLM can provide nuanced critiques that improve translation quality.

- Complex search tasks requiring iterative refinement, where an evaluator assesses whether additional searches are necessary to obtain comprehensive information.

Single-Agent Systems vs. Multi-Agent Systems

- While multi-agent systems may be suited for specific use cases—such as those involving role-based separation or access to privileged information—single-agent systems present a more elegant and pragmatic solution in many scenarios. Their capacity to maintain holistic context, reduce system complexity, and adapt fluidly to varied tasks positions them as a strong alternative to the prevailing trend of multi-agent design. Specifics below.

Preservation of Context

- While multi-agent systems offer structural advantages in dividing and distributing tasks, there are compelling reasons why single-agent systems are often the preferred choice in particular contexts. These advantages stem largely from considerations of system coherence, efficiency, and maintainability.

- First, single-agent systems mitigate the risk of context fragmentation. In multi-agent setups, information must be passed between distinct agents, each with its own prompt and action space. This process can lead to significant information loss or misinterpretation, especially when intermediate outputs are condensed or abstracted. In contrast, a single-agent system maintains a unified internal context throughout its operation, thereby preserving the continuity and depth of understanding necessary for complex reasoning or nuanced decision-making.

Simplicity and Maintainability