Primers • Reinforcement Learning for Agents

- Overview

- Background: Why SFT Fails (and RL Is Required) for Tool-Calling Agents

- Background: Teaching Agents Tool-Calling with RL

- Motivation

- Recipe

- Environment, MDP Formulation, and Action Space

- The MDP for “When / Which / How”

- State (\(s_t\))

- Structured, Factored Action Space

- Action Type 1:

ANSWER(final_text) - Action Type 2:

CALL(tool_name, args_json) - Structured Action Encoding

- Episode Dynamics

- Handling Invalid/Malformed Actions

- Integrating “When / Which / How” of Tool-Calling into the Action Space

- Annotation Sources for Reward Components (“When”, “Which”, and “How”)

- Reward Component: Call (Deciding “When” a Tool Should Be Invoked)

- Reward Component: Tool Selection (Choosing “Which” Tool)

- Reward Component: Tool-Syntax Correctness

- Reward Component: Tool-Execution Correctness

- Reward Component: Argument Quality (Deciding “How” to Call a Tool)

- Reward Component: Final Task Success

- Merged Preference-Based Rewards (For “Call”, “Which”, and “How”)

- Unified Reward Formulation

- Asymmetric Rewards in Tool-Calling RL

- RL Optimization Pipeline: Shared Flow + PPO vs. GRPO

- Curriculum Design, Evaluation Strategy, and Diagnostics for Tool-Calling RL

- Curriculum Design Overview

- Stage 0: Pure Supervised Bootstrapping (SFT)

- Stage 1: Binary Decision Curriculum (Learning When)

- Stage 2: Tool-Selection Curriculum (Learning Which)

- Stage 3: Argument-Construction Curriculum (Learning How)

- Stage 4: Multi-Step Tool Use (Pipelines)

- Stage 5: Open-Domain Free-Form Tasks

- Diagnostics and Monitoring

- Detecting Skill Collapse

- Curriculum Scheduling (Putting It All Together)

- Final Note

- Reinforcement Learning and the Emergence of Intelligent Agents

- The Role of Reinforcement Learning in Self-Improving Agents

- Environments for Reinforcement Learning in Modern Agents

- The Three Major Types of Reinforcement Learning Environments

- Reinforcement Learning for Web and Computer-Use Agents

- Background: Policy-Based and Value-Based Methods

- Background: Process-Wise Rewards vs. Outcome-Based Rewards

- Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO)

- Why These Algorithms Matter for Web & Computer-Use Agents

- Key Equations

- Agentic Reinforcement Learning via Policy Optimization

- Agent Training Pipeline

- Environment Interaction Patterns for Agent Design

- Reward Modeling

- Search-Based Reinforcement Learning, Monte Carlo Tree Search (MCTS), and Exploration Strategies in Multi-Step Agents

- Motivation: Exploration vs. Exploitation in Complex Agentic Systems

- Monte Carlo Tree Search (MCTS) in RL-Based Agents

- Neural-Guided Search: Policy Priors and Value Models

- Integration of Search with Reinforcement Learning and Fine-Tuning

- Process-Wise Reward Shaping in Search-Based RL

- Integration of Search with Reinforcement Learning and Fine-Tuning

- Exploration Strategies in Web and Computer-Use Environments

- Planning and Value Composition Across Multiple Environments

- Summary and Outlook

- Memory, World Modeling, and Long-Horizon Credit Assignment

- The Need for Memory and Temporal Reasoning

- Explicit vs. Implicit Memory Architectures

- World Modeling: Learning Predictive Environment Representations

- Temporal Credit Assignment and Advantage Estimation

- Hierarchical Reinforcement Learning (HRL)

- Memory-Augmented Reinforcement Learning (MARL)

- Long-Horizon Planning via Latent Rollouts and Model Predictive Control

- Takeaways

- Evaluation, Safety, and Interpretability in Reinforcement-Learning-Based Agents

- Why Evaluation and Safety Matter in RL-Based Agents

- Core Dimensions of Agent Evaluation

- Safety Challenges in RL Agents

- Interpretability and Traceability in Agent Behavior

- Safety-Aware RL Algorithms

- Human-in-the-Loop (HITL) Evaluation and Oversight

- Benchmarking Frameworks for Safe and Transparent Evaluation

- Toward Aligned, Interpretable, and Reliable Agentic Systems

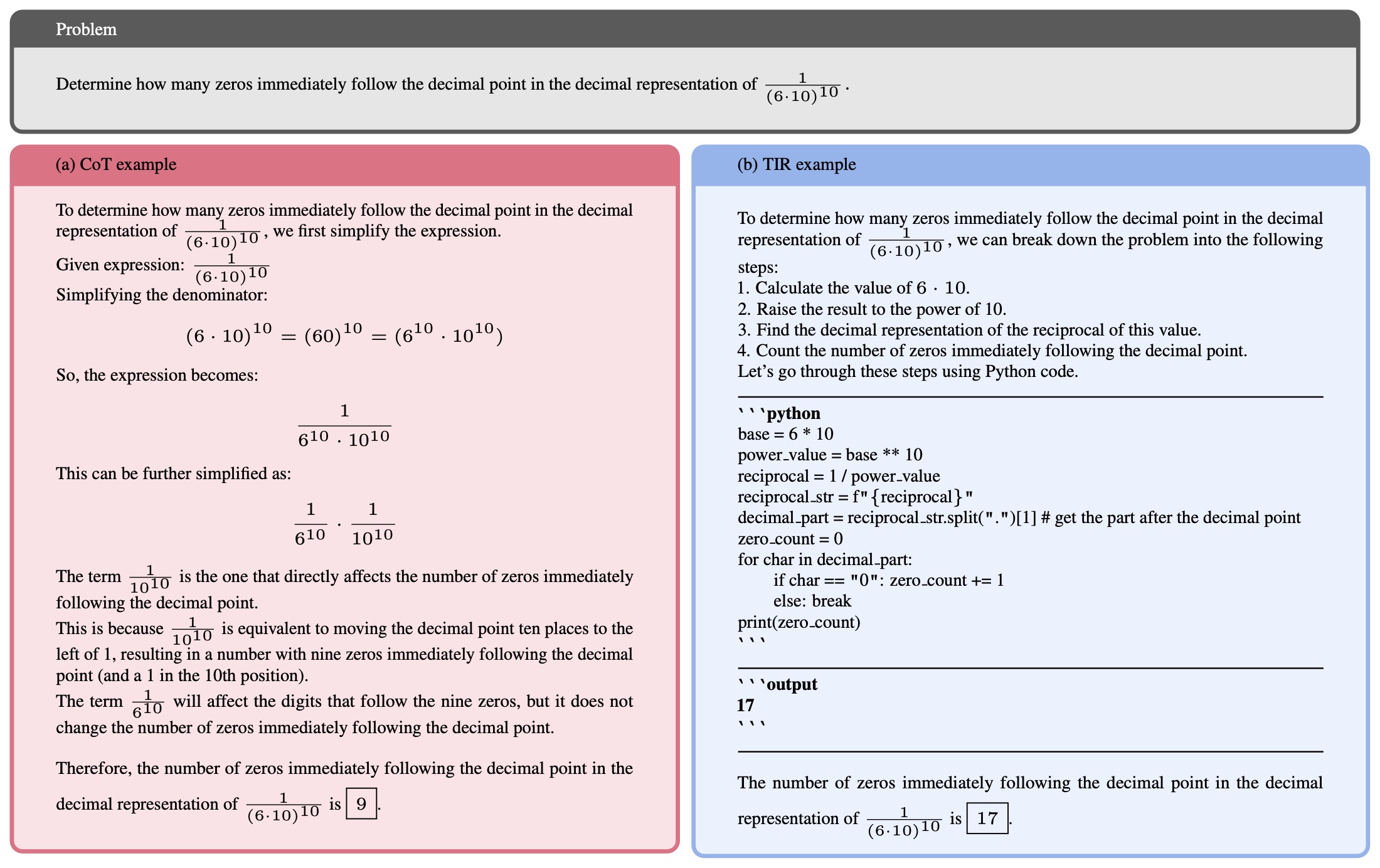

- Tool-Integrated Reasoning

- Foundations and Theoretical Advancements in TIR

- Practical Engineering for Stable Multi-Turn TIR

- Scaling Tool-Integrated RL from Base Models

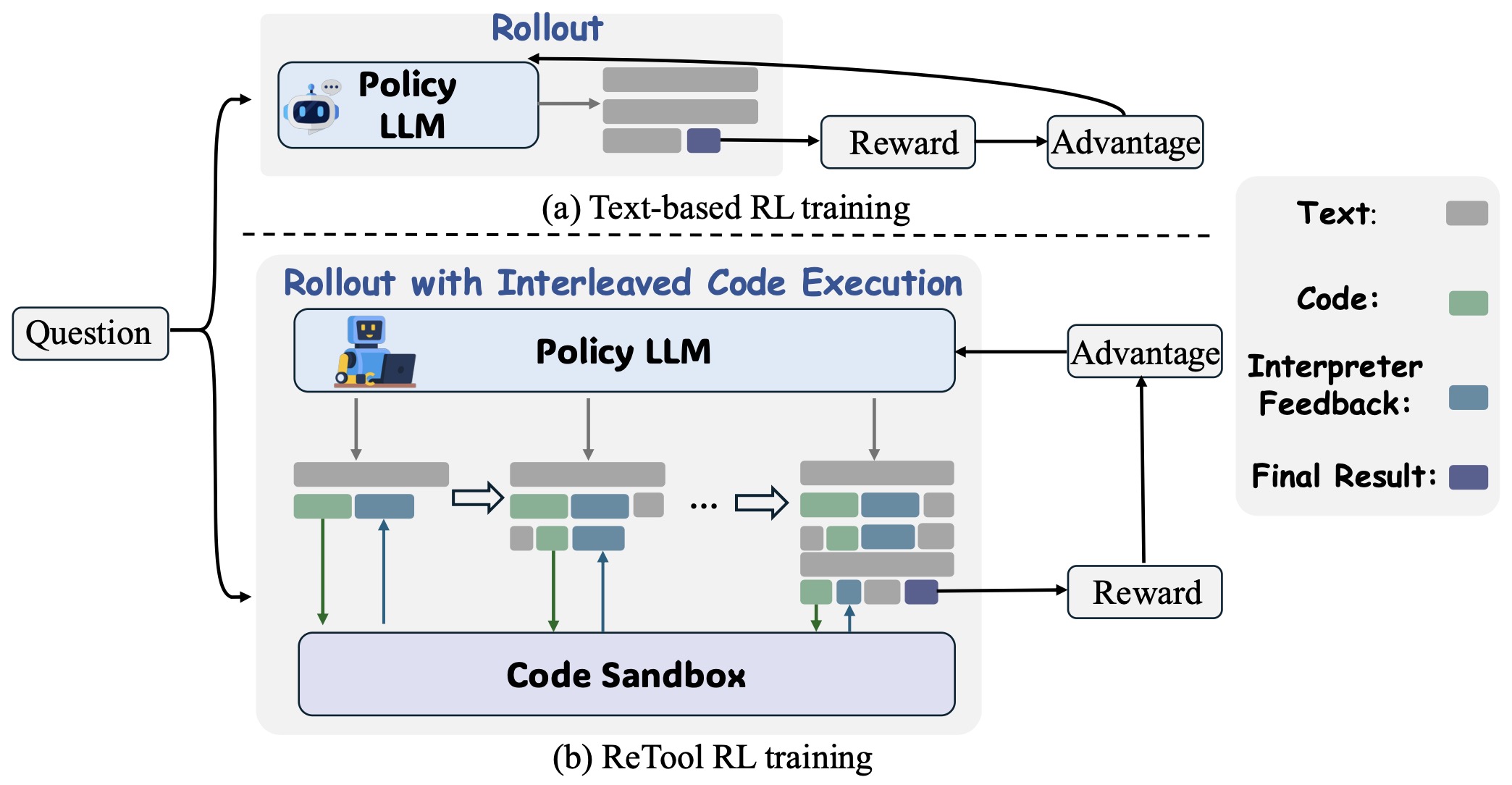

- Code-Interleaved Reinforcement for Tool Use

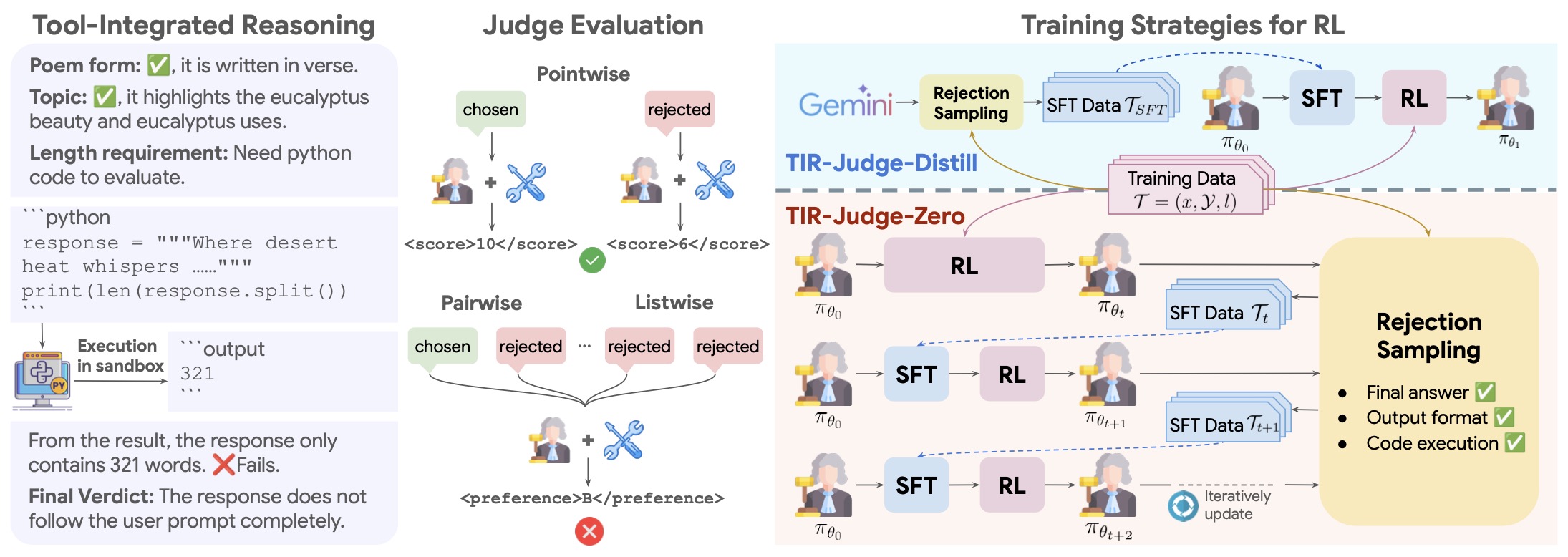

- Tool-Augmented Evaluation Agents

- Synthesizing Trends in TIR + RL Integration

- Synthesis: Beyond Individual Tool Use

- Unifying RL and TIR: Process vs. Outcome Rewards

- Synthesis and Outlook

- Citation

Overview

- Reinforcement Learning (RL) provides a formal framework for teaching artificial agents how to make decisions by interacting with an environment and learning from the outcomes of their actions. The learning process is governed by a Markov Decision Process (MDP), defined as a tuple \((S, A, P, R, \gamma)\), where \(S\) denotes the set of all possible states, \(A\) the set of available actions, \(P(s' \mid s,a)\) the transition probability function that determines how the environment changes, \(R(s,a)\) the reward function that provides feedback to the agent, and \(\gamma\) the discount factor controlling how much the agent values future rewards relative to immediate ones. The agent seeks to learn a policy \(\pi(a \mid s)\), representing the probability of choosing action \(a\) when in state \(s\), that maximizes the expected cumulative reward:

- RL is distinct from supervised learning in that the correct answers (labels) are not provided directly. Instead, the agent must explore different actions, observe the consequences, and adapt its policy based on the rewards it receives. This trial-and-error process makes RL a natural fit for agents operating in complex digital environments such as the web, desktop systems, and software tools.

Background: Why SFT Fails (and RL Is Required) for Tool-Calling Agents

-

Training language models to reliably call tools (APIs, calculators, search engines, etc.) requires more than just supervised learning. While supervised fine-tuning (SFT) can teach the model to mimic example traces, it cannot teach the policy to decide when, which, or how to call a tool in a dynamic interactive environment. Specifics below:

-

SFT lacks decision-making over tool invocation:

- Tool-calling isn’t merely generating a correct JSON snippet; it requires deciding whether a tool call is appropriate in context. SFT merely imitates demonstration actions—maximising:

- … with no dependence on outcomes or future consequences. In tool-use settings, the cost of calling a tool (latency, billing, context switching) must be factored in — SFT cannot encode this. RL, by contrast, can optimise for cumulative return

- … and thus learn when to avoid tool calls.

-

SFT cannot teach selection among tools:

- When multiple tools exist (search vs. calculator vs. map API), the model must learn a selection policy. SFT only learns to replicate the choice made in the demonstration, but it does not learn the trade-offs or consequences of selecting the wrong tool. RL provides negative reward for wrong choices, which in turn teaches discrimination among tools.

-

SFT cannot incorporate tool output feedback:

- Even if SFT teaches correct argument formatting, it does not receive feedback on execution success, tool output quality, or how the return value impacts the final answer. In RL, the reward can include syntax success, execution success, argument quality and final answer correctness — something not captured by SFT.

-

SFT is poor at multi-step workflows and stopping conditions:

- Many tool-use tasks require multiple sequential calls, conditional logic, and a decision when to stop calling tools and answer. SFT sees fixed demonstration lengths and cannot generalise to dynamic lengths or stopping decisions. RL handles this via episodic returns and learnt policies for “ANSWER” actions vs. further “CALL”.

-

SFT cannot penalize misuse, over-use or under-use of tools:

- Unnecessary tool calls (which increase cost/latency) or missing required tool calls (which degrade correctness) need explicit penalties. SFT cannot encode such cost signals because the training loss only rewards matching demonstration tokens. RL directly incorporates costs into the reward function.

-

SFT does not generalize well beyond the demonstration distribution:

- New tools, new argument schemas, unseen queries or dynamic contexts are common in tool-use systems. SFT tends to overfit to the fixed distribution of demonstration actions. RL, via exploration and returns optimization, helps the model discover new behaviours and adapt to changed context.

-

SFT cannot optimize multi-component objectives:

- Tool use requires coordination across distinct sub-skills: the decision of when to call a tool, the choice of which tool is appropriate, the construction of arguments, the formatting of JSON, the success of tool execution, the correctness of the final answer, and the minimisation of tool cost and latency.

- SFT provides a single monolithic loss that does not distinguish these components. It cannot selectively penalize errors in timing, selection, argument structure, schema fields, or step efficiency. RL, in contrast, enables fine-grained reward shaping where each component contributes its own reward term to the overall objective. This makes it possible to reward correct tool timing separately from correct tool selection, reward argument correctness separately from execution success, and reward final answers separately from intermediate steps.

-

What Is Imitation Learning and Why SFT Is Used Before RL

-

Before applying reinforcement learning to teach tool-calling behavior, modern LLM systems almost always begin with imitation learning. In the LLM context, imitation learning is implemented via Supervised Fine-Tuning (SFT) — training the model to reproduce expert-authored examples of correct tool usage.

-

This section explains (i) what imitation learning is, (ii) why SFT is a special case of it, and (iii) why imitation learning is a necessary warm-start for RL in tool-use settings.

What is imitation learning?

-

Imitation learning trains a policy by directly copying expert actions instead of learning via trial-and-error. No rewards, no exploration, no environment optimisation — just supervised mapping from states to actions.

-

Formally, given demonstration trajectories \(\tau = {(s_0, a_0), (s_1, a_1), \dots, (s_T, a_T)}\), imitation learning maximises the likelihood of expert actions:

\[\mathcal{L}_{\rm IL}(\theta) = - \sum_{t=0}^{T} \log p_\theta(a_t^{\rm expert} \mid s_t)\] -

This is close to standard supervised learning, but in robotics and RL theory it is known as behavior cloning, one of the simplest imitation-learning methods.

Why SFT is exactly imitation learning

-

When training LLMs to produce reasoning traces, tool-call JSON, or final answers using labelled examples, SFT implements the above loss directly. The model does not explore, does not observe tool outputs, and is not rewarded for correct long-term actions.

-

In the tool-calling context, SFT teaches:

- how tool calls look (syntax),

- rough patterns of when humans call tools,

- typical argument structures,

- final-answer formatting.

-

It is imitation, not policy optimisation.

Why imitation learning is essential before RL

-

Reinforcement learning over raw text is unstable. The action space is huge, syntax is fragile, and initial random exploration produces invalid tool calls. Therefore, all effective tool-use RL systems warm-start with SFT to give the model baseline competencies:

-

Basic tool syntax and schema literacy: Without SFT, the model would produce malformed JSON during RL, causing constant errors and noisy gradients.

-

A minimal “when/which/how” prior: SFT examples give the model at least a heuristic pattern of tool timing, tool choice, and argument formation.

-

Reduced exploration burden: Starting RL from scratch would require immense exploration before any correct tool call is sampled. SFT drastically reduces the search space.

-

Stability and safety in early RL training: RL at random-init leads to:

- runaway tool-call loops,

- malformed arguments,

- no successful episodes,

- degenerate policies.

-

-

SFT prevents this collapse by anchoring the initial model to sane behavior.

Why imitation learning alone is insufficient (recap)

-

SFT gives you competence, not policy mastery. After SFT, models still fail at:

- deciding when to avoid unnecessary tool calls,

- selecting among multiple tools based on trade-offs,

- tuning arguments based on execution feedback,

- multi-step planning,

- minimising tool-use cost,

- stopping when enough information is gathered.

-

Imitation learning provides the starting point, while RL provides the decision-making optimisation needed for real tool-use proficiency.

Background: Teaching Agents Tool-Calling with RL

Motivation

-

In recent years, the paradigm of tool-augmented reasoning with large language models (LLMs) has gained traction: for example, Tool Learning with Foundation Models by Qin et al. (2023) provides a systematic overview of how foundation models can select and invoke external tools (e.g., APIs) to solve complex tasks.

-

Teaching an LLM to use tools is fundamentally a three-part learning problem:

- When to call a tool: deciding whether a tool invocation is necessary, optional, or unnecessary for a given query.

- Which tool to call: selecting the correct tool among several available tools.

- How to call a tool: generating valid, correctly structured arguments that allow the tool to execute successfully.

- These three categories correspond to decision-level, selection-level, and argument-level competencies, each requiring distinct supervision and reward signals.

-

Prior work such as ReTool: Reinforcement Learning for Strategic Tool Use in LLMs by Feng et al. (2025) and ToolRL: Reward is All Tool Learning Needs by Qian et al. (2025) demonstrates that fine-grained decomposition of the tool-learning problem significantly boosts RL stability and policy quality, especially when separating the decision to call a tool from the actual mechanics of tool invocation.

-

This write-up presents a full end-to-end RL recipe where a single policy is optimized with PPO or related algorithms to simultaneously learn:

- When to call a tool,

- Which tool to choose, and

- How to construct correct arguments.

Why “when / which / how” decomposition is necessary

-

When: The timing of tool usage determines the efficiency and correctness of solutions. Over-calling leads to unnecessary cost and latency, while under-calling leads to incomplete or incorrect answers. Tool timing thus forms a binary or multi-class policy decision that must be explicitly learned.

-

Which: Even when a tool call is appropriate, the model must choose the correct tool among a library of APIs. This is a classification problem, requiring a structured action space and tool-selection reward.

-

How: Tool arguments must be valid JSON, consistent with schemas, and semantically correct. This is a structured generation problem, requiring rewards for syntax, executability, and argument quality.

-

Even within one policy, these decisions require different supervision signals, and RL benefits from isolating their reward terms so the model knows why a trajectory is good or bad.

-

Research such as ToolRL shows that decomposed reward components for these distinct competencies improve reward signal clarity, reduce credit assignment difficulty, and produce more controllable execution-time behavior.

Recipe

-

Here is a summary of the major phases to be implemented:

-

Define an environment and action space that supports:

- when-decisions (tool vs. no-tool),

- which-decisions (tool selection),

- how-decisions (argument generation).

-

Annotate or derive labels for each learning axis:

- when-labels: \(y_{\text{when}} \in {0,1}\)

- which-labels: \(y_{\text{which}} \in {1,\dots,K}\) for \(K\) tools

- how-labels: argument-schema exemplars or reference traces

-

Bootstrap the LLM via supervised fine-tuning (imitation learning) so the policy starts with a basic understanding of:

- tool timing,

- tool selection,

- valid argument formats.

-

Design a multi-component reward function including:

- a when-reward for correct tool/no-tool decisions,

- a which-reward for correct tool selection,

- a how-reward for syntax validity, executability, and argument quality,

- a final task-success reward.

-

Train using PPO (or GRPO) over trajectories with the combined reward:

- compute returns \(R_t\),

- compute advantages \(A_t\) (e.g., with GAE),

- update policy and value model with KL regularization to a supervised fallback policy.

-

Curriculum design: progress from simple supervised traces to complex multi-step workflows where the model must interleave “when”, “which”, and “how” decisions.

-

Diagnostics and evaluation: track metrics for each axis separately:

- when-accuracy,

- which-accuracy,

- argument correctness,

- executability rate,

- and final task accuracy.

-

Environment, MDP Formulation, and Action Space

- Tool-augmented LLMs must make three decisions during reasoning:

- whether a tool should be invoked (when),

- which tool is appropriate (which), and

- how to construct valid and effective arguments (how).

- This decomposition mirrors the behavioral factorization used in systems such as Toolformer by Schick et al. (2023) and the structured planning seen in ReAct by Yao et al. (2022). It also aligns with the policy design in recent RL approaches like ReTool by Feng et al. (2025) and ToolRL (2025), where tool selection is modeled as a multi-stage decision.

The MDP for “When / Which / How”

-

We model tool use as an MDP:

\[\mathcal{M} = (S, A, P, R, \gamma)\]- … with a factored action space that explicitly captures the “when/which/how” structure.

State (\(s_t\))

-

Each state encodes:

- the user’s query

- ongoing reasoning steps

- past tool calls and outputs

- system instructions

- optional episodic memory (short-term trajectories)

-

The full state is serialized into a structured text prompt fed into the LLM, much like ReAct-style reasoning traces.

Structured, Factored Action Space

-

The action space is decomposed into:

- When to call a tool

- Which tool to call (conditional on calling)

- How to construct arguments (conditional on chosen tool)

-

This yields two disjoint high-level action types:

Action Type 1: ANSWER(final_text)

- Used when the model decides no more tool calls are needed.

Action Type 2: CALL(tool_name, args_json)

-

Further factored into:

- When: deciding to call a tool rather than answer

- Which: selecting a tool from the available toolset

- How: generating a valid argument JSON for that tool

-

This factorization improves learning by ensuring that RL gradients reflect distinct sub-skills within tool usage.

Structured Action Encoding

-

To stabilize RL training, each action is formatted in strict machine-readable JSON, following the practice in ReTool and Toolformer:

- Example: CALL action

<action> { "type": "call", "when": true, "which": "weather_api", "how": { "city": "Berlin", "date": "2025-05-09" } } </action>- Example: ANSWER action

<action> { "type": "answer", "when": false, "content": "It will rain in Berlin tomorrow." } </action> -

The when flag can be made explicit or implicit; explicit inclusion helps debugging and credit assignment.

Episode Dynamics

-

An episode proceeds as follows:

- LLM receives state \(s_0\).

- LLM produces a structured action \(a_0\) containing “when/which/how”.

- Environment parses the action:

- If

ANSWER\(\rightarrow\) episode ends. - If

CALL\(\rightarrow\) execute tool, append output to context, produce next state \(s_1\).

- If

- Reward is computed for “when”, “which”, “how” correctness and final answer quality.

- Continue until ANSWER or max-step limit.

-

This multi-step structure supports multi-hop reasoning as used in ReAct and aligns with task settings in Toolformer.

Handling Invalid/Malformed Actions

-

Invalid “when/which/how” choices should not terminate the episode. Instead:

- Assign negative syntax or validity rewards

- Return an error message to the model

- Allow the agent to continue

-

This is consistent with reward-shaping strategies from Deep RL from Human Preferences by Christiano et al. (2017).

Integrating “When / Which / How” of Tool-Calling into the Action Space

-

During RL optimization:

- The policy gradient is computed over the entire structured action

- But reward is decomposed along the three decision axes

- PPO or GRPO provides stable updates (as seen in ReTool and ToolRL)

-

Thus, the policy learns simultaneously:

- When a tool is appropriate

- Which tool should be chosen

- How to construct high-quality arguments

-

This modularity also makes reward engineering substantially easier, as each component can be trained and debugged independently.

Annotation Sources for Reward Components (“When”, “Which”, and “How”)

-

This section explains how to generate supervision signals for all reward components in the RL system, reflecting the decomposition of tool-use behavior into:

- When \(\rightarrow\) deciding if and when a tool should be used

- Which \(\rightarrow\) selecting which tool to call

- How \(\rightarrow\) constructing how to call it via correctly formed arguments

-

To support this, the reward is decomposed into the following components:

- Call (when-to-call): whether a tool should be called.

- Tool-selection: whether the correct tool was chosen (which).

- Tool-syntax correctness: whether the tool call was formatted properly.

- Tool-execution correctness: whether the tool executed successfully.

- Argument quality: whether the arguments were appropriate (how).

- Final task success: whether the entire episode produced the right answer.

- Preference-based / generative evaluation: higher-level judgment (LLM-as-a-Judge).

-

Each reward dimension can be supervised using a mixture of:

- Rule-based heuristics

- Discriminative reward models trained on human data

- Generative reward models (LLM-as-a-Judge as in DeepSeek-R1 by Guo et al. (2025)).

Reward Component: Call (Deciding “When” a Tool Should Be Invoked)

- This component supports the when dimension: Is a tool call appropriate/necessary at this point in the reasoning process?

Rule-based supervision

-

Use deterministic rules and intent detectors inspired by works like Toolformer by Schick et al. (2023):

- Weather questions \(\rightarrow\) require weather API

- Math expressions \(\rightarrow\) require calculator

- “Define X / explain Y” \(\rightarrow\) no tool

- Factual queries \(\rightarrow\) search tool

- Actionable tasks (e.g., booking) \(\rightarrow\) appropriate domain tool

-

This produces binary or graded labels \(y_{\text{call}} \in {0,1}\).

Discriminative reward model

- Train a classifier \(f_{\phi}(x)\) predicting \(P(y_{\text{call}} = 1 \mid x)\) using human-labeled examples indicating if/how strongly the query requires tool use.

- This mirrors methodology from RLHF as in InstructGPT by Ouyang et al. (2022).

Generative reward model (LLM-as-a-Judge)

-

Use a judge model (e.g., DeepSeek-V3 per DeepSeek-R1):

-

Prompt: “Given this user query and available tools, should the agent call a tool at this stage? Provide yes/no and reasoning.”

-

Extract a scalar reward from the generative verdict.

-

This can capture nuanced timing requirements over multiple steps.

Reward Component: Tool Selection (Choosing “Which” Tool)

- This component supports the which dimension: Given that a tool is to be called, was the correct tool chosen?

Rule-based supervision

-

If rules map tasks to a specific tool or tool category, then:

- If the predicted tool matches the rule \(\rightarrow\) +reward

- Otherwise \(\rightarrow\) −reward

-

This is similar to mapping tool types in ReAct by Yao et al. (2022).

Discriminative reward model

- Train a classifier \(f_{\psi}(s_t, a_t)\) that judges whether the selected tool matches human expectations for that state.

Generative reward model

-

Ask a judge LLM: “Was TOOL_X the best tool choice for this request at this step?”

-

Score the answer and normalize.

Reward Component: Tool-Syntax Correctness

-

Supports the how dimension partially, focusing on format:

- JSON validity

- Required argument fields

- Correct schema shape

Rule-based

- JSON parse success

- Schema validation

-

Argument-type validation

-

Reward:

\[r_t^{\text{syntax}} = \begin{cases} +1 & \text{if JSON + schema valid} \ -1 & \text{otherwise} \end{cases}\] - This echoes structured action enforcement in ReAct.

Discriminative reward model

- Classify correct vs. incorrect tool-call formats.

Generative reward model

- Ask an LLM judge whether the formatting is correct (1–10), normalize to reward.

Reward Component: Tool-Execution Correctness

- Did the tool run without error?

Rule-based

- HTTP 200 or success flag \(\rightarrow\) +reward

- Errors / exceptions \(\rightarrow\) −reward

Discriminative reward model

- Trained to predict execution feasibility or correctness.

Generative reward model

- Judge evaluates based on logs and outputs.

Reward Component: Argument Quality (Deciding “How” to Call a Tool)

- This is the core of the how dimension: constructing appropriate arguments.

Rule-based

- For numeric or structured problems:

- For strings, use embedding similarity or fuzzy match.

Discriminative reward model

- Trained to identify argument errors (bad city name, missing date, etc.).

Generative reward model

- LLM-as-a-Judge evaluates argument plausibility/fit to the query.

Reward Component: Final Task Success

- Whether the overall trajectory produced a correct answer.

Rule-based

- Unit test pass

- Exact match

- Tolerance-based numeric match

Discriminative reward model

- Using preference modeling as in Deep RL from Human Preferences by Christiano et al. (2017), train:

Generative reward model

- Judge LLM compares model prediction with ground truth (as in DeepSeek-R1).

Merged Preference-Based Rewards (For “Call”, “Which”, and “How”)

-

You can construct pairs of trajectories differing in:

- timing of tool calls (call),

- choice of tool (which), and

- argument construction (how)

-

Let the judge or human annotator choose the better one.

-

Train a preference RM to provide combined signals.

Unified Reward Formulation

-

All reward signals—process and outcome—are merged into one scalar:

\[\boxed{ R = \underbrace{ w_{\text{call}} r^{\text{call}} }_{\text{when}} + \underbrace{ \left( w_{\text{tool}} r^{\text{tool}} \right) }_{\text{which}} + \underbrace{ \left( w_{\text{syntax}} r^{\text{syntax}} + w_{\text{exec}} r^{\text{exec}} + w_{\text{args}} r^{\text{args}} \right) }_{\text{how}} + \underbrace{ \left( w_{\text{task}} r^{\text{task}} + w_{\text{pref}} r^{\text{pref}} \right) }_{\text{outcome-level}} }\]-

where:

- The when group controls whether a tool is invoked.

- The which + how group supervises tool choice and argument construction.

- The outcome-level group ensures the final result is correct and aligns with human/judge preferences.

-

-

This single scalar reward \(R\) is what enters the RL optimizer (e.g., PPO or GRPO).

-

Weights \(w\) are tuned to balance shaping vs. final correctness.

Asymmetric Rewards in Tool-Calling RL

-

This section explains why tool-calling RL systems use asymmetric rewards (positive rewards much larger than negative rewards), how this stabilizes PPO/GRPO, and how asymmetry applies across the when / which / how components. A full worked example and a comprehensive reward table are included.

-

Asymmetric reward schedules are used in practical tool-use RL systems such as ReTool, ToolRL, DeepSeek-R1, and RLHF pipelines. They ensure that:

- Success is highly rewarded.

- Failure incurs penalties but not catastrophic ones.

- Exploration does not collapse into inert policies (e.g., “never call tools”).

- The hierarchy — deciding when to call tools, which tool to call, and how to construct correct arguments — all receive stable and interpretable feedback.

Why Asymmetry Is Required

-

Because tool-calling introduces many potential failure points (incorrect timing, wrong tool, malformed arguments, bad final answer), symmetric rewards would cause massive early negative returns. The policy would quickly learn the degenerate strategy: “Never call any tool; always respond directly.”

-

Asymmetric rewards avoid this by:

- Using large positive rewards for correct full trajectories.

- Using mild or moderate negative rewards for mistakes.

- Ensuring that exploratory attempts are only slightly penalized.

- Allowing the policy to differentiate between “bad idea but learning” vs. “excellent behavior.”

-

This encourages exploration in the factored action space and prevents PPO/GRPO from collapsing into trivial policies.

Reward Table: Positive and Negative Rewards by Category

- Below is a consolidated table representing typical asymmetric reward magnitudes for each component. These values are illustrative and are often tuned per domain.

Reward Values for “When / Which / How” and Outcome-Level Components

| Reward Component | Description | Positive Reward Range | Negative Reward Range |

|---|---|---|---|

| When (call decision) | Correctly calling a tool when needed | +0.5 to +1.5 | −0.2 (tool required but not called) |

| Correctly not calling a tool | +0.3 to +1 | −0.2 (tool called when unnecessary) | |

| Which (tool selection) | Selecting correct tool | +0.5 to +2.0 | −0.3 to −0.7 (wrong tool) |

| How: Syntax | JSON validity and schema correctness | +0.3 to +1.0 | −1.0 (malformed JSON or wrong schema) |

| How: Execution | Tool executes successfully (HTTP 200, etc.) | +0.5 to +1.0 | −1.0 to −2.0 (execution error) |

| How: Argument Quality | High-quality arguments (correct fields, values) | +0.5 to +2.0 | −0.5 to −1.5 (missing/incorrect/poor arguments) |

| Outcome: Final Task Success | Producing correct final answer using tool output | +8.0 to +15.0 | −0.3 to −1.0 (incorrect final answer) |

| Outcome: Preference/Judge Score | Judge or LLM-as-a-critic evaluation of final output | +1.0 to +5.0 | −0.1 to −1.0 |

-

This table reflects the following structural principles:

- The largest rewards are reserved for correct end-to-end solution quality.

- The largest penalties correspond only to errors that break execution (syntax, runtime failure).

- Small errors in timing, selection, or argument quality incur light penalties.

- Rewards across “when / which / how” are significantly lower than final-task success, ensuring shaping rewards guide early learning but final correctness dominates late learning.

Worked Example With Asymmetric Rewards

-

Consider the user query: “What’s the weather in Paris tomorrow?”

-

Correct behavior requires:

- Deciding a tool is required (when).

- Selecting the weather API (which).

- Providing correct arguments in JSON (how).

- Producing the correct final answer using the tool output.

-

Below are two trajectories demonstrating asymmetry.

Trajectory A: Imperfect but Reasonable Exploration

- When decision correct \(\rightarrow\) +1.0

- Which tool wrong \(\rightarrow\) −0.5

- JSON syntax valid \(\rightarrow\) +0.5

- Tool executes (but irrelevant) \(\rightarrow\) 0

- Final answer wrong \(\rightarrow\) −0.5

- Total reward:

- Even though the overall answer is wrong, the trajectory gets a small positive reward because several subcomponents were correct. This prevents the model from concluding that tool use is too risky.

Trajectory B: Full Correct Behavior

- Correct when \(\rightarrow\) +1.0

- Correct which \(\rightarrow\) +1.5

- Correct JSON arguments \(\rightarrow\) +1.0

- Successful tool execution \(\rightarrow\) +1.0

- Correct final answer \(\rightarrow\) +10.0

- Total reward:

- The tremendous difference between +14.5 and +0.5 clearly guides PPO/GRPO toward producing the full correct behavior.

How Asymmetry Stabilizes PPO/GRPO

- Advantages are computed via:

-

With asymmetric rewards:

- Failed trajectories receive slightly negative or slightly positive returns.

- Successful trajectories receive large positive returns.

- Advantage variance stays manageable.

- Exploration does not collapse into “never call tools.”

- The policy improves steadily across “when / which / how” dimensions.

-

If rewards were symmetric (e.g., +10 vs. −10), then most exploratory episodes would produce extreme negative advantages, instantly pushing the model toward refusing all tool calls. Asymmetry prevents this collapse.

Takeaways

-

Asymmetric rewards are essential for training LLM tool-calling policies because they:

- Preserve exploration.

- Deliver stable gradients for PPO/GRPO.

- Avoid trivial degenerate strategies.

- Properly balance shaping rewards (for “when / which / how”) with outcome-level rewards.

- Distinguish partial correctness from catastrophic failure.

- Encourage correct final answers without over-penalizing small mistakes.

-

The reward table and examples above provide a practical blueprint for implementing and tuning asymmetric rewards in your own RL tool-calling system.

RL Optimization Pipeline: Shared Flow + PPO vs. GRPO

- This section describes how to take the unified reward from Section 3 and plug it into a full reinforcement learning (RL) pipeline—including both Proximal Policy Optimization (PPO) by Schulman et al., 2017 and Group Relative Policy Optimization (GRPO) by Shao et al., 2024. We present first the shared components, then algorithm‐specific losses and update rules.

- A detailed discourse of preference optimization algorithms is available in the Preference Optimization primer.

Shared RL Training Flow

-

Rollout Generation:

- Use the policy \(\pi_\theta\) (based on the LLM) to interact with the tool‐calling environment defined in Section 2.

- At each step \(t\) you have state \(s_t\), select action \(a_t\) (

CALLtool orANSWER), observe next state \(s_{t+1}\), and receive scalar reward \(r_t\) (from the unified reward). - Repeat until terminal (ANSWER) or maximum steps \(T\).

- Collect trajectories \(\tau = {(s_0,a_0,r_0),\dots,(s_{T-1},a_{T-1},r_{T-1}), (s_T)}\).

-

Return and Advantage Estimation:

-

Compute discounted return:

\[R_t = \sum_{k=t}^{T} \gamma^{k-t} , r_k\] -

Estimate value baseline \(V_\psi(s_t)\) (for PPO) or compute group‐relative statistics (for GRPO).

-

Advantage (for PPO):

\[A_t = R_t - V_\psi(s_t)\]-

Use Generalized Advantage Estimation (GAE) if desired (as typically done in PPO):

\[A_t^{(\lambda)} = \sum_{l=0}^{\infty} (\gamma\lambda)^l \delta_{t+l}, \quad \delta_t = r_t + \gamma V_\psi(s_{t+1}) - V_\psi(s_t)\]

-

-

-

-

Policy Update:

- Use a surrogate objective (dependent on algorithm) to update θ (policy), and update value parameters ψ where needed.

- Optionally include a KL-penalty or clipping to ensure policy stability.

-

Repeat:

- Collect new rollouts, update, evaluate. Monitor metrics such as tool‐call decision accuracy (“when”), correct tool selection (“which”), argument correctness (“how”), and final task success.

PPO: Losses and Update Rules

Surrogate Objective

- For PPO the objective is using clipped surrogate:

- where:

- … and \(\epsilon \approx 0.1-0.3\).

Value Loss

\[L_{\rm value}(\psi) = \mathbb{E}_{s_t\sim\pi} \big[ (V_{\psi}(s_t) - R_t)^2 \big]\]KL/Entropy Penalty

- Often a term is added:

- … to keep the policy close to either the old policy or a reference SFT policy.

Full PPO Loss

\[L^{\rm total}_{\rm PPO} = -L^{\rm PPO}(\theta) + c_v,L_{\rm value}(\psi) + c_{\rm KL},L_{\rm KL}(\theta)\]- … with coefficients \(c_v, c_{\rm KL}\).

Implementation Notes

- Use mini-batches and multiple epochs per rollout.

- Shuffle trajectories, apply Adam optimizer.

- Clip gradients; log metrics for tool decisions and argument quality.

GRPO: Losses and Update Rules

Group Sampling & Relative Advantage

- In GRPO [Shao et al., 2024] you sample a group of \(G\) actions \((a_1,\dots,a_G)\) under the same state \(s\). Compute each reward \(r(s,a_j)\). Then define group mean and standard deviation: \(\mu,\sigma\). Advantage for each is:

GRPO Surrogate

\[L^{\rm GRPO}(\theta) = \frac{1}{G} \sum_{j=1}^G \mathbb{E}_{s,a_{1:G}\sim\pi_{\theta_{\rm old}}} \Big[ \min \big( r_{j}(\theta)A^{\rm GRPO}(s,a_j), \mathrm{clip}(r_{j}(\theta),1-\epsilon,1+\epsilon)A^{\rm GRPO}(s,a_j) \big) \Big]\]- … with the same ratio definition \(r_j(\theta)=\pi_\theta(a_j \mid s)/\pi_{\theta_{\rm old}}(a_j\mid s)\).

Value Loss

- GRPO typically omits a parametric value estimator—baseline derived via group statistics.

KL/Entropy Penalty

- Same form as in PPO if desired.

Full GRPO Loss

\[L^{\rm total}_{\rm GRPO} = -L^{\rm GRPO}(\theta) + c_{\rm KL} L_{\rm KL}(\theta)\]Implementation Notes

- At each state draw multiple candidate tool/answer actions, compute rewards, form group.

- This is particularly suited for LLM tool-calling contexts where you can generate multiple alternate completions.

- GRPO reduces reliance on value network.

Integrating the Unified Reward

- Given the unified reward \(R\) from the prior step, each step’s \(r_t\) is used in return and advantage estimation. The policy thus simultaneously learns “when/which/how” tool calling by maximizing return:

- Both PPO and GRPO approximate gradient ascent on \(J(\theta)\) under stability constraints.

Curriculum Design, Evaluation Strategy, and Diagnostics for Tool-Calling RL

- This section describes how to structure training so the model reliably learns when, which, and how to call tools, and how to evaluate progress during RL. Curriculum design is crucial because tool-calling is a hierarchical skill; introducing complexity too early destabilizes learning, and introducing it too late yields underfitting.

Curriculum Design Overview

-

Curriculum design gradually increases difficulty along three axes:

- When \(\rightarrow\) recognizing tool necessity vs. non-necessity

- Which \(\rightarrow\) selecting the correct tool

- How \(\rightarrow\) providing high-quality arguments

-

Each axis has its own progression. The curriculum alternates between breadth (many domains/tools) and depth (multi-step workflows).

-

This staged approach mirrors the structured curricula seen in code-generation RL (e.g., unit-tests \(\rightarrow\) multi-step tasks) in works like Self-Refine by Madaan et al. (2023).

Stage 0: Pure Supervised Bootstrapping (SFT)

-

Before RL begins, do supervised fine-tuning on a dataset that explicitly includes:

- Examples requiring a tool,

- Examples that must not use a tool,

- Examples mapping queries to correct tool types,

- Examples showing valid argument formats.

-

The SFT initializes:

- An approximately correct “when \(\rightarrow\) which \(\rightarrow\) how” policy,

- JSON formatting reliability,

- Stable tool-calling syntax.

-

This prevents “flailing” during early RL where the model might emit random tool calls.

Stage 1: Binary Decision Curriculum (Learning When)

-

Focus: detect whether a tool is required.

-

Task mix:

- 50% queries that require a specific tool (weather/math/search)

- 50% queries that must be answered without tools

-

Goal: learn the call/no-call boundary.

-

Metrics:

- Call precision

- Call recall

- False-positive rate (unnecessary calls)

- False-negative rate (missed calls)

-

Reward emphasis:

- Increase (w_{\text{call}})

- Reduce penalties for syntax/execution errors early on

Stage 2: Tool-Selection Curriculum (Learning Which)

-

Add tasks that require choosing between tools:

-

Task examples:

- Weather vs. news

- Search vs. calculator

- Translation vs. summarization (if tools exist)

Goal: learn discriminative mapping from task intent \(\rightarrow\) tool identity.

-

Curriculum trick:

- For ambiguous queries, include diverse examples so the RL agent learns to think (internal chain-of-thought) before issuing tool calls.

-

Metrics:

- Tool-selection accuracy

- Confusion matrix across tool categories

- Average number of tool attempts per query

-

Reward emphasis:

- Shift weight from (w_{\text{call}}) \(\rightarrow\) (w_{\text{which}})

- Introduce penalties for repeated incorrect tool choices

Stage 3: Argument-Construction Curriculum (Learning How)

-

Introduce tasks with argument complexity:

-

Task examples:

- Weather(city, date)

- Maps(location, radius)

- Calculation(expressions with multiple steps)

- API requiring nested JSON fields

-

Training strategy:

- Start with minimal arguments (one field)

- Add multi-argument calls

- Introduce noisy contexts (typos, ambiguity)

-

Metrics:

- Argument correctness (string similarity or numeric error)

- Schema completeness

- Tool execution success rate

-

Reward emphasis:

- Increase \(w_{\text{args}}\)

- Tighten penalty for malformed JSON or missing fields

-

Stage 4: Multi-Step Tool Use (Pipelines)

-

Introduce tasks requiring multiple sequential tool calls, e.g.:

- Search for restaurants

- Get the address

- Query weather at that address

- Produce a combined answer

-

Here the agent must plan sequences and must choose when to stop calling tools.

-

Metrics:

- Number of steps per episode

- Optimality of tool sequence

- Rate of premature or redundant tool calls

-

Reward emphasis:

- Add step penalties

- Strengthen outcome reward since multi-step tasks dominate final task success

Stage 5: Open-Domain Free-Form Tasks

-

Finally, mix in diverse real-world questions with unconstrained natural-language variety.

-

Goal: produce a robust “universal” tool-use agent.

-

Metrics:

- Overall episodic return

- Win-rate vs. evaluator models (LLM-as-a-Judge)

- Human preference win-rate

- Task success accuracy in open benchmarks

Diagnostics and Monitoring

Process-Level Metrics

-

Aligned with the when \(\rightarrow\) which \(\rightarrow\) how decomposition:

-

When:

- Call precision/recall

- Unnecessary call rate

- Missed call rate

- Call timing consistency

-

Which:

- Tool selection accuracy

- Error matrix across tools

- Repeated incorrect tool selection episodes

-

How:

- Argument correctness scores

- JSON validity rate

- Execution success rate

-

Outcome-Level Metrics

-

Final answer accuracy:

- Exact match

- Tolerance-based match

- Semantic similarity

- Pass rate vs. LLM-judge (DeepSeek-V3, GPT-4, etc.)

-

Task efficiency:

- Number of steps per solved task

- Number of tool calls per successful episode

- Reward per timestep

-

User-facing metrics:

- Latency per episode

- Number of external API calls

Detecting Skill Collapse

-

Red flags include:

- Spike in JSON errors \(\rightarrow\) syntax collapse

- Rising unnecessary tool use \(\rightarrow\) call collapse

- Tool-selection deterioration \(\rightarrow\) “which” collapse

- Rising tool execution failures \(\rightarrow\) argument collapse

- Flat final-task accuracy \(\rightarrow\) plateau due to overfitting on shaping rewards

-

Solutions:

- Adjust reward weights \(w_{\cdot}\)

- Reintroduce supervised examples

- Increase entropy regularization

- Add KL penalties to keep model close to reference

Curriculum Scheduling (Putting It All Together)

-

A typical recipe:

- Stage 0 (SFT): 30k–200k examples

- Stage 1 (When): 1–5 RL epochs

- Stage 2 (Which): 3–10 RL epochs

- Stage 3 (How): 5–20 RL epochs

- Stage 4 (Pipelines): 10–30 RL epochs

- Stage 5 (Open-domain): continuous RL/adaptation

-

Dynamic curriculum: shift task sampling probabilities based on evaluation metrics—for example, increase argument-focused tasks if argument correctness stagnates.

Final Note

-

A well-designed curriculum ensures the policy does not simply memorize tool-call structures but truly internalizes:

- when tool use is warranted,

- which tool to call,

- how to call it correctly,

- … and how to combine tools into multi-step workflows to solve real tasks.

Reinforcement Learning and the Emergence of Intelligent Agents

-

With the rise of Large Language Models (LLMs) and multimodal foundation models, RL has become a critical mechanism for developing autonomous, reasoning-capable agents. Early efforts demonstrated that LLMs could act as agents that browse the web, search for information, and perform tasks by issuing actions and interpreting observations.

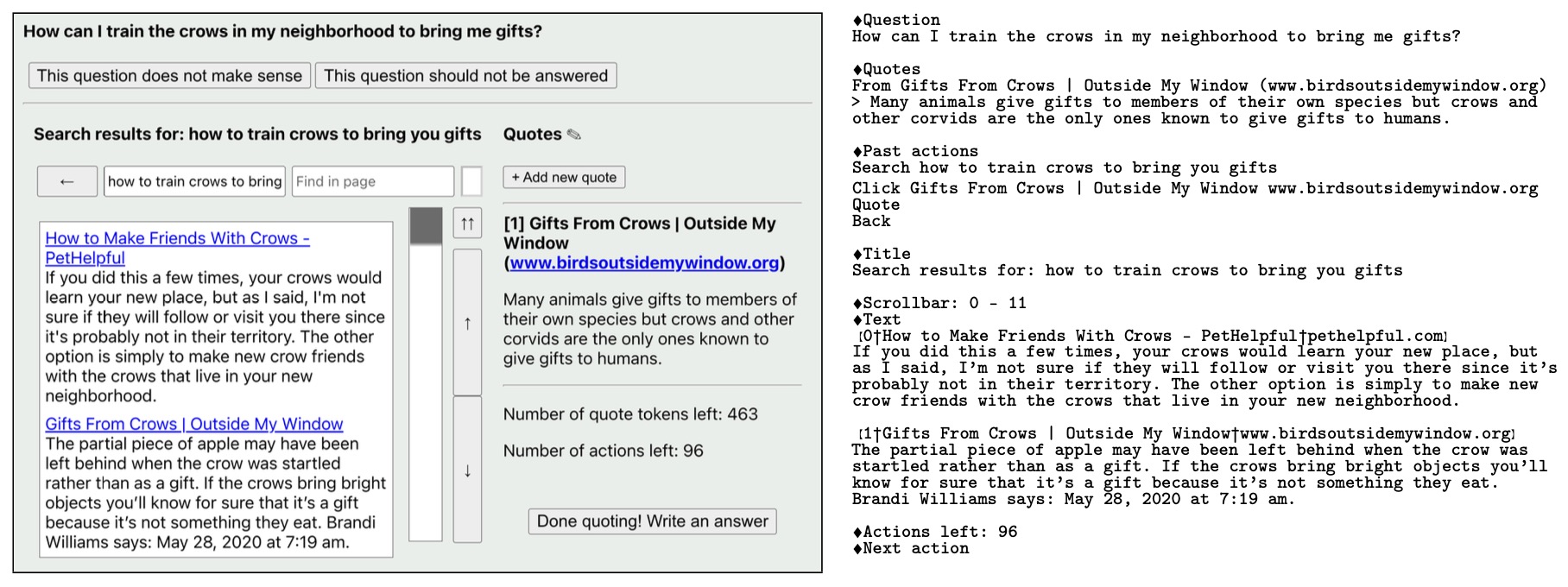

- One of the first large-scale examples was WebGPT by Nakano et al. (2022), which extended GPT-3 to operate in a simulated text-based browsing environment. The model was trained through a combination of imitation learning and reinforcement learning from human feedback (RLHF).

- WebGPT introduced a text-based web interface where the model interacts via discrete commands such as Search, Click, Quote, Scroll, and Back, using the Bing Search API as its backend. Human demonstrators first generated browsing traces that the model imitated through behavior cloning, after which it was fine-tuned via PPO against a reward model trained on human preference data. The reward model predicted human judgments of factual accuracy, coherence, and overall usefulness.

- Each browsing session ended when the model issued “End: Answer,” triggering a synthesis phase where it composed a long-form response using the collected references. The RL objective included both a terminal reward from the reward model and a per-token KL penalty to maintain policy stability. Empirically, the best 175B “best-of-64” WebGPT model achieved human-preference rates of 56% over human demonstrators and 69% over Reddit reference answers, showing the success of combining structured tool use with RLHF.

- The following figure (source) shows the text-based browsing interface used in WebGPT, where the model issues structured commands to retrieve and quote evidence during question answering.

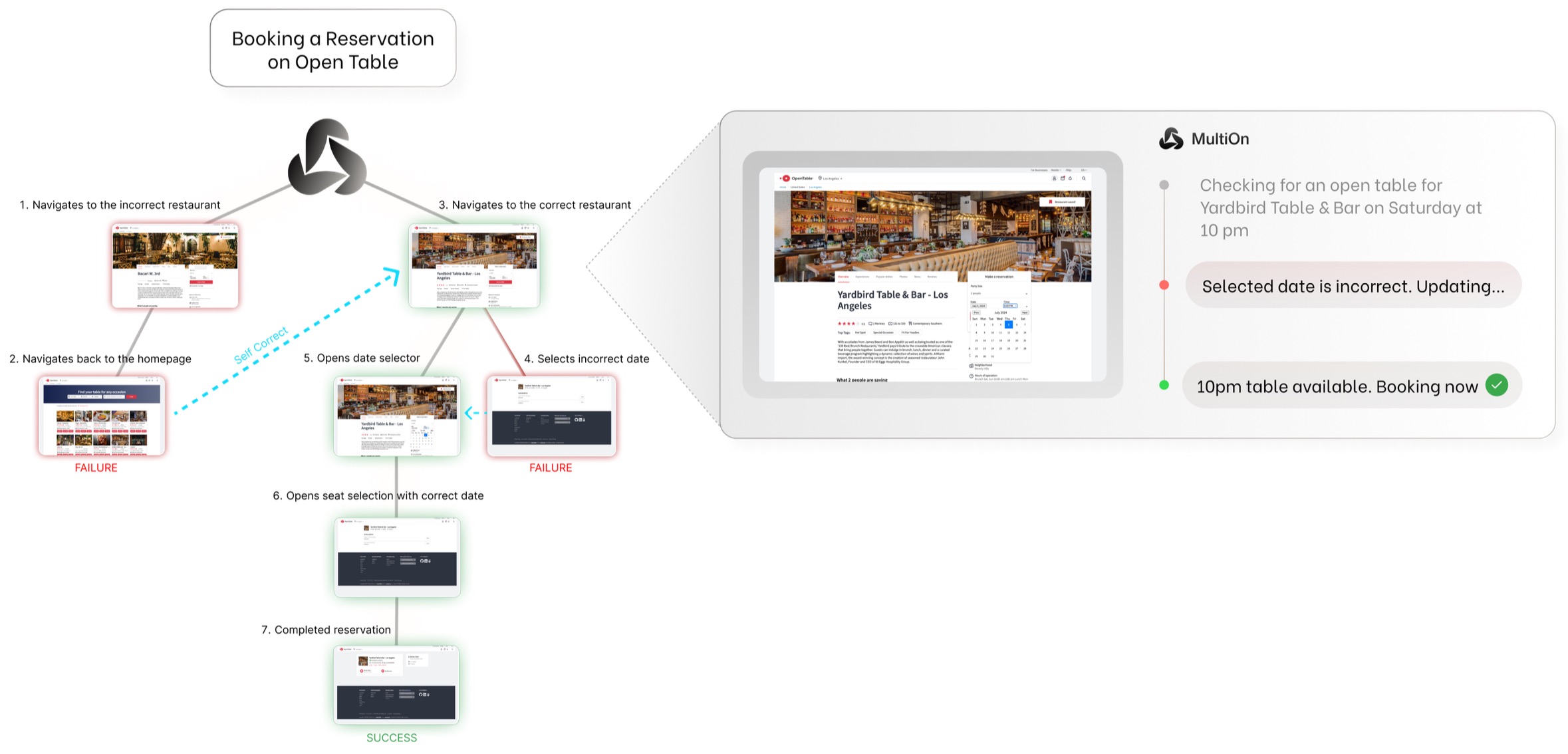

- Subsequent systems expanded these capabilities. Agent Q by Putta et al. (2024) introduced a hybrid RL pipeline that integrates Monte Carlo Tree Search (MCTS) with Direct Preference Optimization (DPO).

- Agent Q formalizes decision making as a reasoning tree, where each node represents a thought–action pair and edges correspond to plausible continuations. MCTS explores multiple reasoning branches guided by a value model estimating downstream reward. During training, preference data between trajectories is used to train a DPO objective, directly optimizing the policy toward preferred rollouts without relying on an explicit reward scalar.

- This setup enables off-policy reuse of exploratory trajectories: the model learns from both successes and failures by evaluating them through a learned preference model. Empirically, this led to substantial gains in reasoning depth and factual accuracy across multi-step question answering benchmarks, demonstrating that structured search and preference-based policy updates can yield stronger reasoning alignment than gradient-only PPO approaches.

-

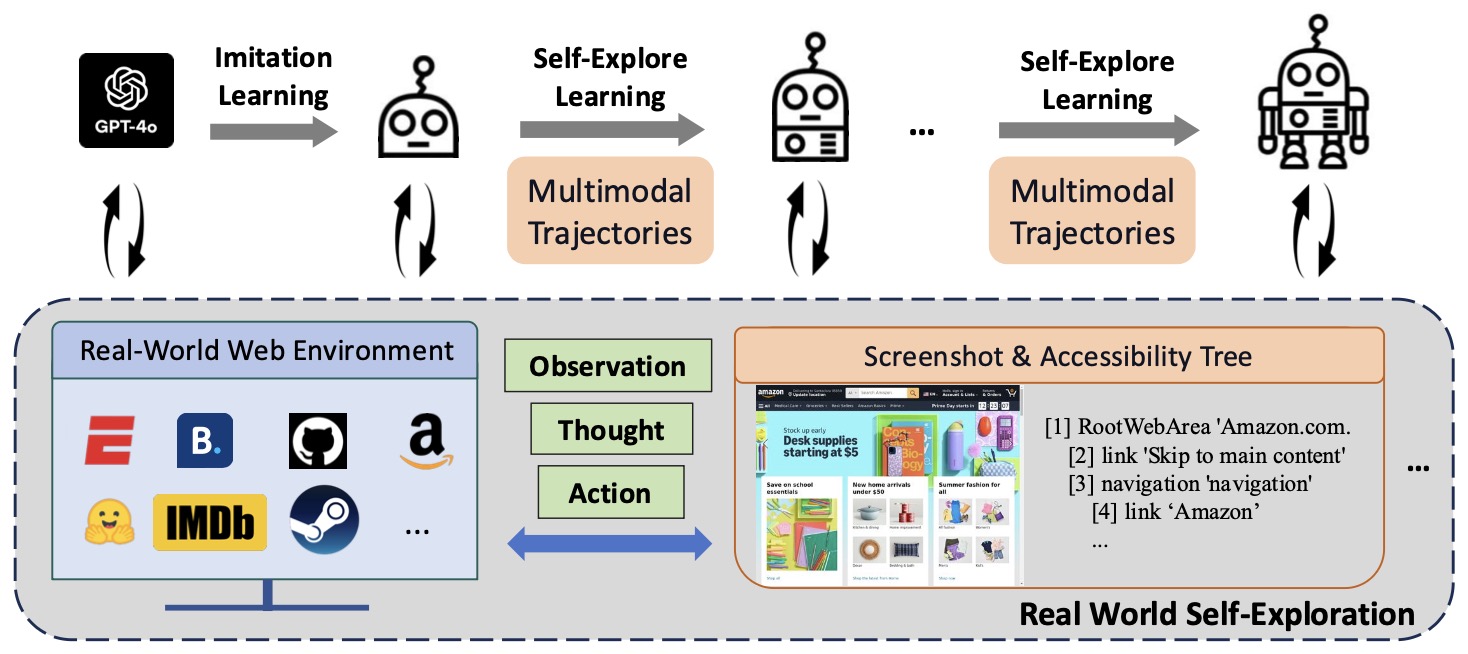

More recent advancements such as OpenWebVoyager by He et al. (2024) brought these ideas into the multimodal realm. OpenWebVoyager extends open-source multimodal models (Idefics2-8B-Instruct) to perform real-world web navigation using both textual accessibility trees and visual screenshots. The training process unfolds in two phases:

-

Imitation Learning (IL): The model first learns from expert trajectories collected with GPT-4o via the WebVoyager-4o system. Each trajectory contains sequences of thoughts and actions derived from multimodal observations (screenshot + accessibility tree). The IL objective jointly maximizes the log-likelihood of both action and reasoning token sequences:

\[J_{IL}(\theta) = E_{(q,\tau)\sim D_{IL}} \sum_t [\log \pi_\theta(a_t|q,c_t) + \log \pi_\theta(h_t|q,c_t)]\] -

Exploration–Feedback–Optimization Cycles: After imitation, the agent autonomously explores the open web, generating new trajectories. GPT-4o then acts as an automatic evaluator, labeling successful trajectories that are retained for fine-tuning. Each cycle introduces newly synthesized tasks using the Self-Instruct framework, ensuring continuous policy improvement. Iteratively, the task success rate improves from 19.9% to 25.8% on WebVoyager test sets and from 6.3% to 19.6% on cross-domain Mind2Web tasks.

- The following figure (source) shows the overall process of OpenWebVoyager, including the Imitation Learning phase and the exploration–feedback–optimization cycles.

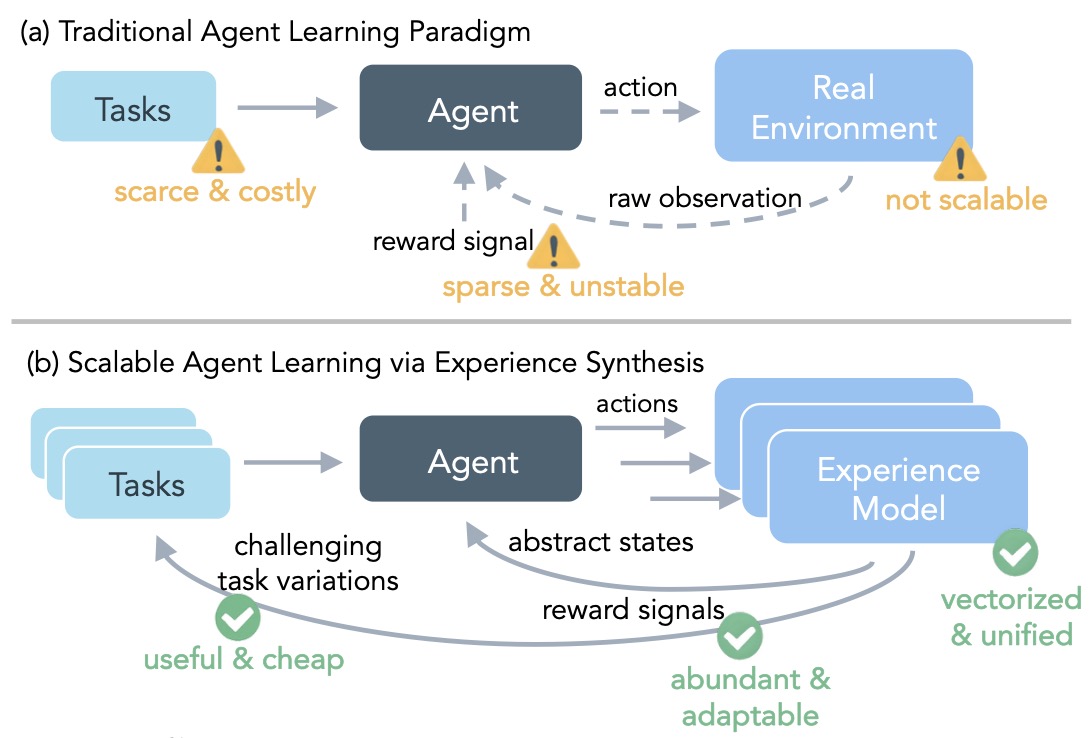

- The following figure (source) shows the model architecture of OpenWebVoyager. The system uses the most recent three screenshots and the current accessibility tree to guide multimodal reasoning, ensuring temporal grounding across page transitions.

-

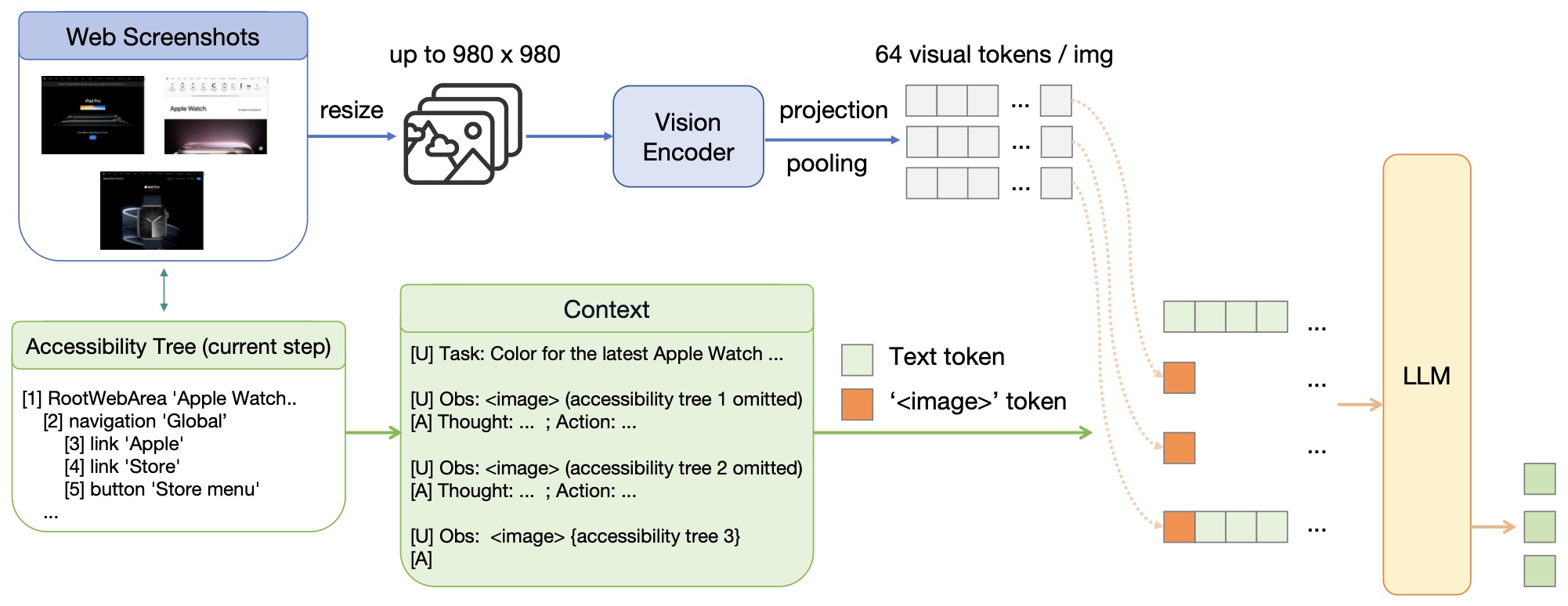

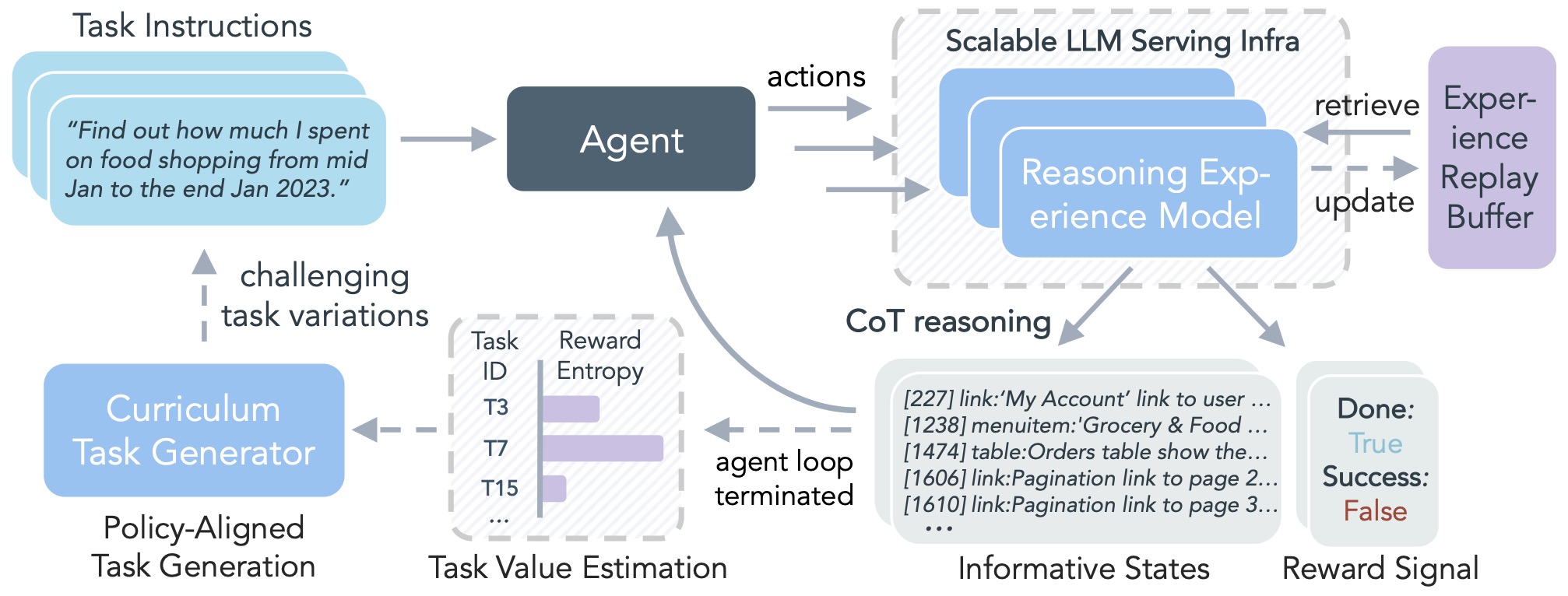

- Alongside real-environment exploration, a complementary approach is to scale policy learning with synthetic but reasoning-grounded interaction data. DreamGym, proposed in (Scaling Agent Learning via Experience Synthesis by Chen et al. (2025)), formalizes this by training a reasoning-based experience model that serves as both a generative teacher and an adaptive simulator. This model produces synthetic task curricula and consistent next-state transitions, enabling closed-loop reinforcement learning at scale.

- The framework introduces experience synthesis as a core principle—training a language-conditioned simulator capable of generating realistic interaction traces that preserve reasoning consistency and causal coherence. By jointly optimizing the policy and the experience model under trust-region constraints, DreamGym maintains stability and theoretical convergence guarantees: if the model error and reward mismatch remain bounded, improvements in the synthetic domain provably transfer to real-environment performance.

- The result is a unified infrastructure that decouples exploration (handled by the experience model) from policy optimization, dramatically reducing real-environment sample costs while preserving fidelity in reasoning tasks. Empirically, DreamGym demonstrates significant gains in multi-tool reasoning, long-horizon planning, and web navigation.

- The following figure illustrates that compared to the traditional agent learning paradigm, DreamGym provides the first scalable and effective RL framework with unified infrastructure.

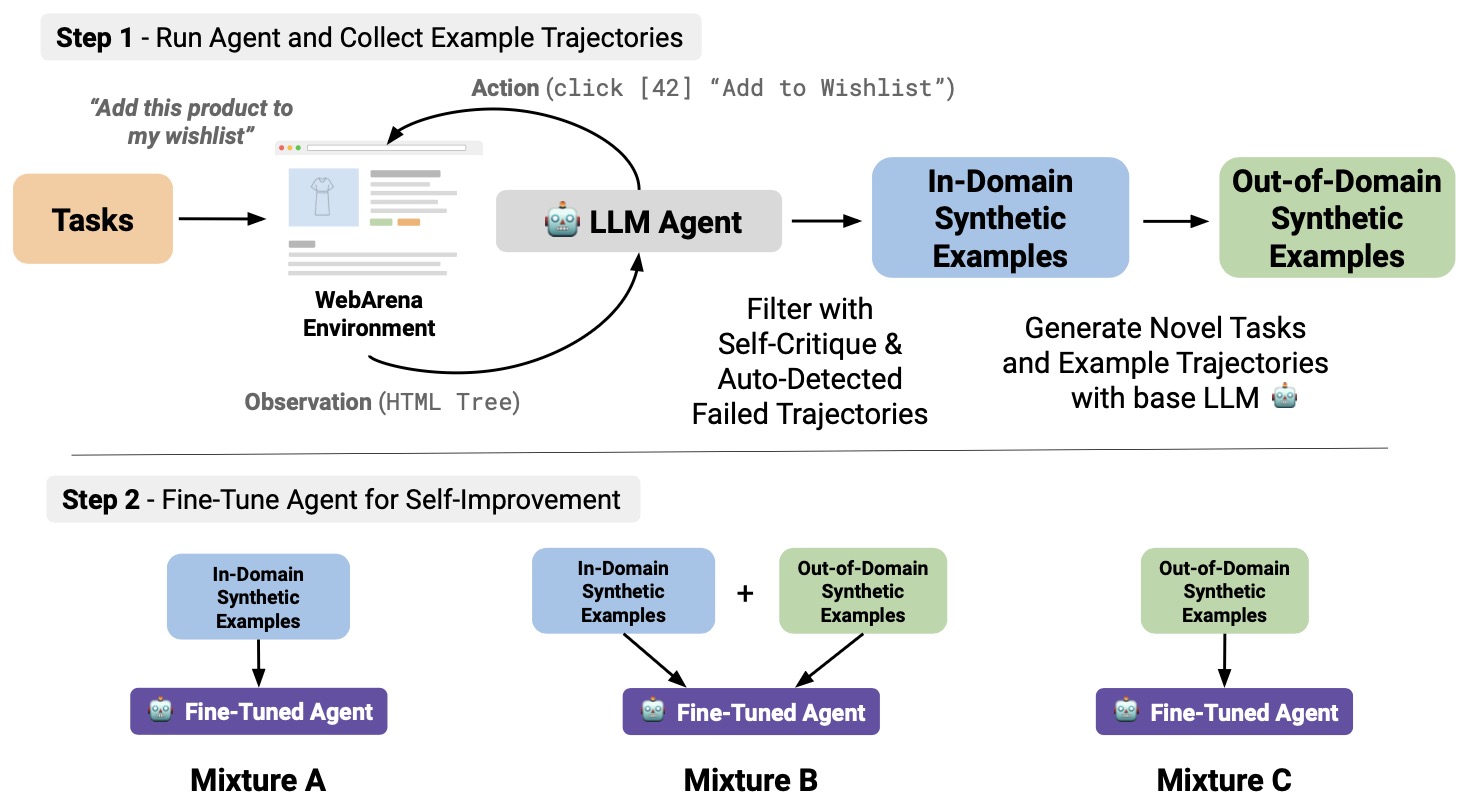

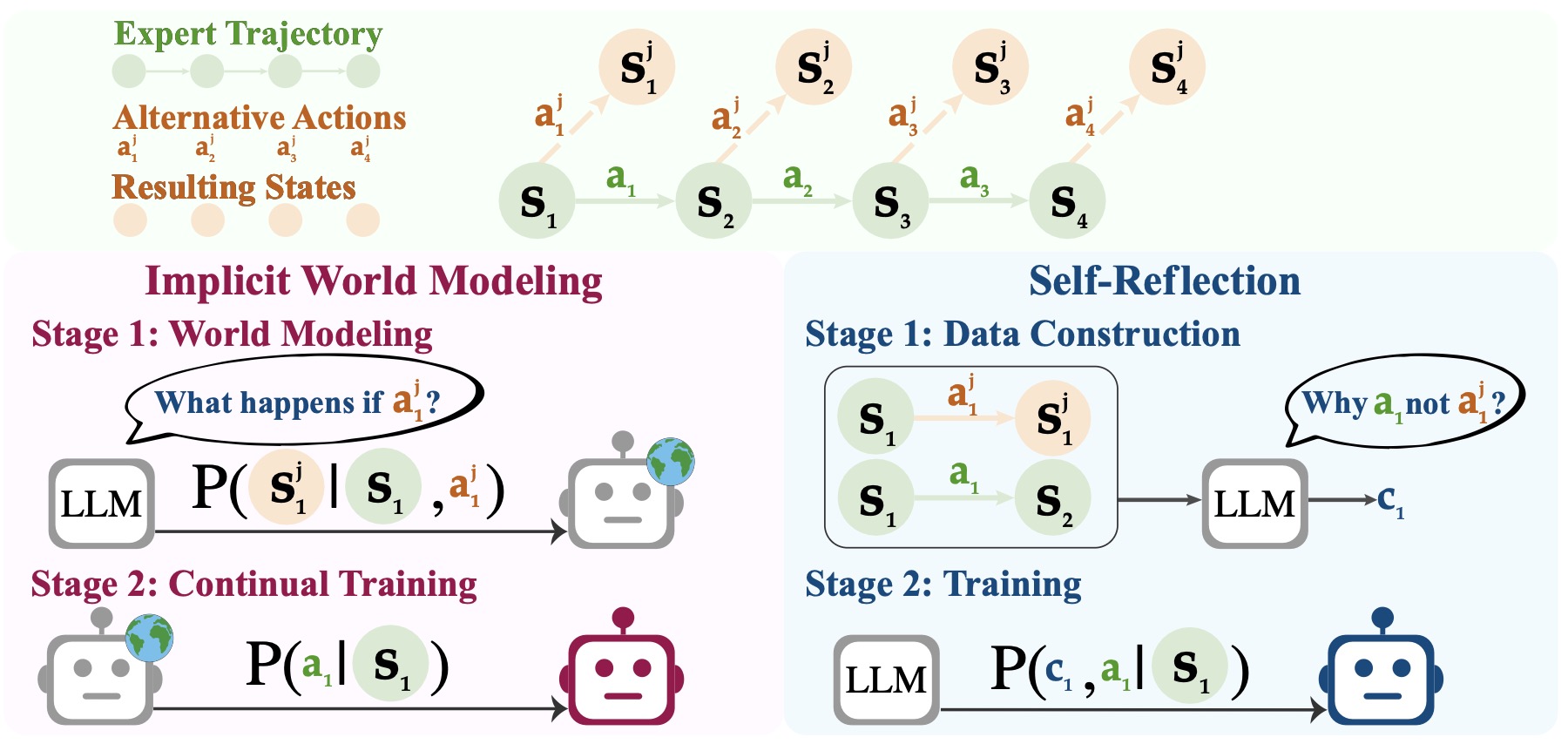

- Early Experience, proposed in (Agent Learning via Early Experience by Zhang et al. (2025)), establishes a two-stage curriculum—implicit world modeling and self-reflection over alternative actions—that uses only language-native supervision extracted from the agent’s own exploratory branches, before any reward modeling or PPO/GRPO.

- The first stage, implicit world modeling, trains the agent to predict environmental dynamics and next states, effectively learning the structure of interaction without any external reward. The second stage, self-reflection, asks the agent to introspectively compare expert and non-expert behaviors, generating rationale-based preferences that bootstrap value alignment.

- These objectives serve as pre-RL signals that warm-start the policy, leading to faster and more stable convergence once reinforcement learning begins. In empirical evaluations, the Early Experience framework significantly improves downstream success rates across both web-based and software-agent benchmarks, and integrates seamlessly with later RL fine-tuning methods like PPO or GRPO.

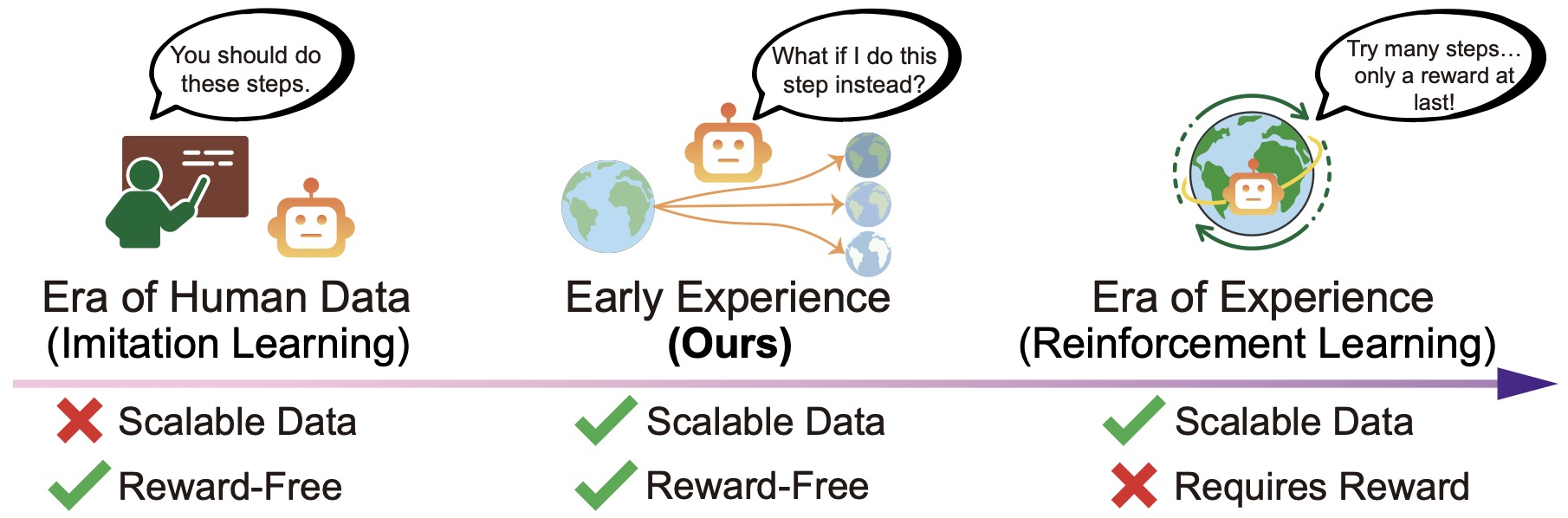

- The following figure shows the progression of training paradigms. (Left:) The Era of Human Data relies on expert demonstrations, where supervision comes from human-/expert-curated actions; it is reward-free (i.e., does not require the environment to provide verifiable reward) but not data-scalable. (Right:) The envisioned Era of Experience builds upon environments with verifiable rewards, using them as the primary supervision for reinforcement learning; however, many environments either lack such rewards (Xue et al., 2025) or require inefficient long-horizon rollouts (Xie et al., 2024a). Center: Our Early Experience paradigm enables agents to propose actions and collect the resulting future states, using them as a scalable and reward-free source of supervision

The Role of Reinforcement Learning in Self-Improving Agents

-

RL serves as the foundation of self-improving artificial agents. These agents do not depend solely on human-provided supervision; instead, they learn continuously from their own experiences.

-

A representative example of this approach is Large Language Models Can Self-improve at Web Agent Tasks by Patel et al. (2024), which introduced a looped learning process where an agent repeatedly performs tasks, evaluates its own performance, and fine-tunes itself on the best results. In their experiments, agents improved their web-navigation success rates by over 30% without any additional human data, demonstrating that RL can bootstrap the agent’s progress over time.

-

The following figure shows (source) the self-improvement loop used in Patel et al. (2024), illustrating how the agent collects trajectories, filters low-quality outputs, fine-tunes itself, and iterates for continual improvement.

-

Synthetic-experience RL closes the loop for self-improving agents by letting a reasoning experience model synthesize adaptive rollouts and curricula matched to the current policy, yielding consistent gains in both synthetic and sim-to-real settings; theory further bounds the sim-to-real gap by reward-accuracy and domain-consistency errors, rather than strict pixel/state fidelity metrics (cf. Scaling Agent Learning via Experience Synthesis by Chen et al. (2025)).

-

This iterative process typically follows these stages:

- Data Collection: The agent generates task trajectories by interacting with the environment.

- Filtering and Evaluation: The system automatically assesses each trajectory, discarding low-quality samples.

- Fine-Tuning: The agent is retrained using successful examples, effectively reinforcing good behavior.

- Re-evaluation: The improved agent is tested, and the cycle repeats.

-

This form of continual self-improvement makes RL a key enabler for developing general-purpose, autonomous web and software agents.

Environments for Reinforcement Learning in Modern Agents

-

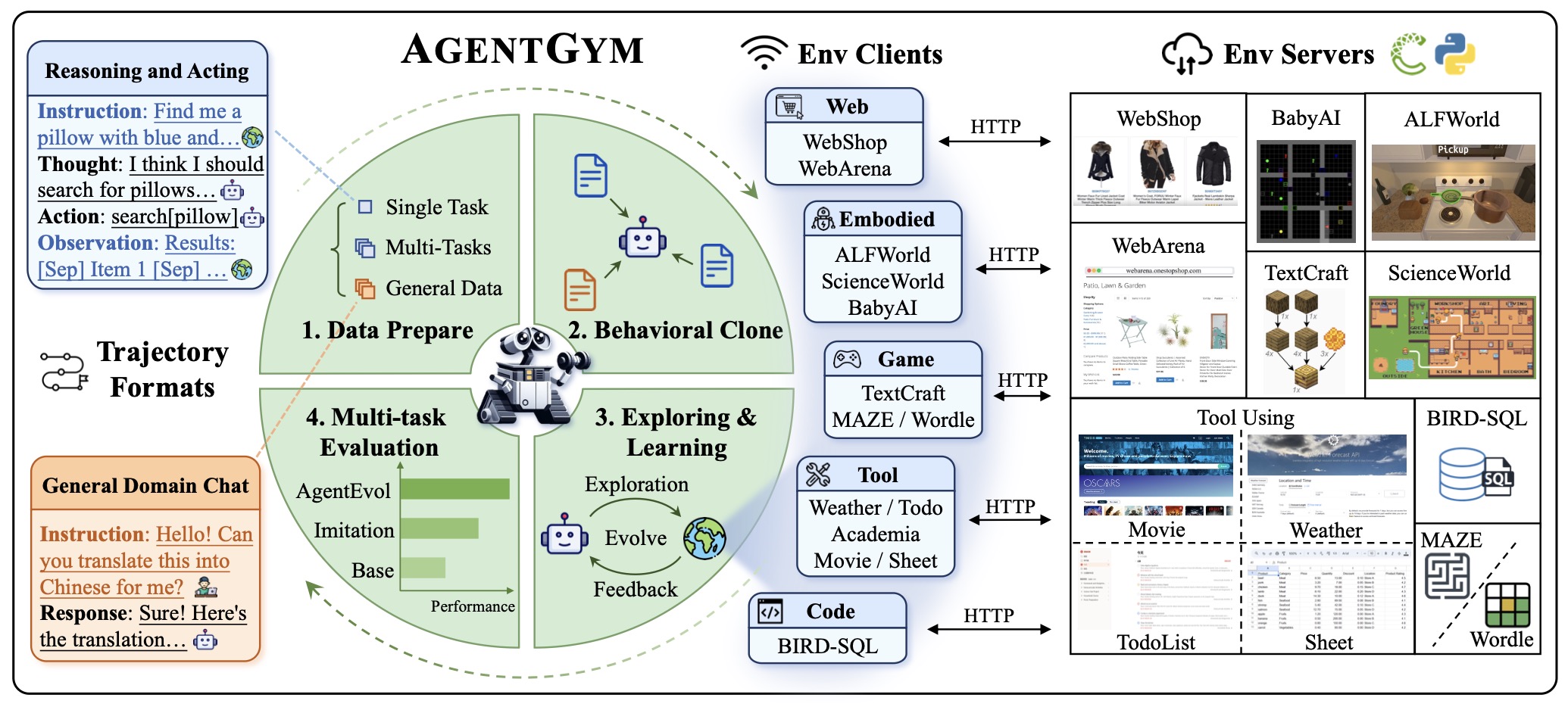

To support these learning processes, researchers have developed structured environments that simulate the complexity and variety of real-world digital interactions. One comprehensive framework is AgentGym by Xi et al. (2024), which defines a unified interface for training and evaluating LLM-based agents across 14 environment types—ranging from academic reasoning and games to embodied navigation and web interaction.

-

The following figure (source) shows the AgentGym framework, illustrating the standardized environment interface, modular design, and integration of various environment types for LLM-driven agent training.

-

In AgentGym, an agent’s experience is modeled as a trajectory consisting of repeated thought–action–observation cycles:

\[\tau = (h_1, a_1, o_1, ..., h_T, a_T) \sim \pi_\theta(\tau | e, u)\]- where \(h_t\) represents the agent’s internal reasoning (its “thought”), \(a_t\) the action it takes, \(o_t\) the resulting observation, and \(e, u\) the environment and user prompt respectively.

-

This approach bridges the symbolic reasoning capabilities of LLMs with the sequential decision-making framework of RL, forming the basis for modern interactive agents.

The Three Major Types of Reinforcement Learning Environments

- Modern RL environments for language-based and multimodal agents are generally organized into three broad categories. Each category captures a distinct interaction pattern and optimizes the agent for a different type of intelligence or capability.

Single-Turn Environments (SingleTurnEnv)

-

These environments are designed for tasks that require only a single input–output interaction, where the agent must produce one decisive response and then the environment resets. Examples include answering a question, solving a programming challenge, or completing a math problem.

-

In this setting, the reward signal directly evaluates the quality of the single output. Training methods usually combine supervised fine-tuning with RL from human or synthetic feedback (RLHF). For instance, in coding problems or reasoning benchmarks, the agent’s response can be automatically graded using execution correctness or symbolic validation. Such setups are ideal for optimizing precision and factual correctness in domains where each query is independent of the previous one.

-

SingleTurnEnv tasks are computationally efficient to train because there is no need to maintain long-term memory or context. They are commonly used to bootstrap an agent’s basic competencies before moving to more complex, multi-step environments.

Tool-Use Environments (ToolEnv)

-

Tool-use environments focus on enabling agents to perform reasoning and decision-making that involve invoking external tools—such as APIs, search engines, calculators, code interpreters, or databases—to complete a task. These environments simulate the agent’s ability to extend its cognitive boundaries by interacting with external systems.

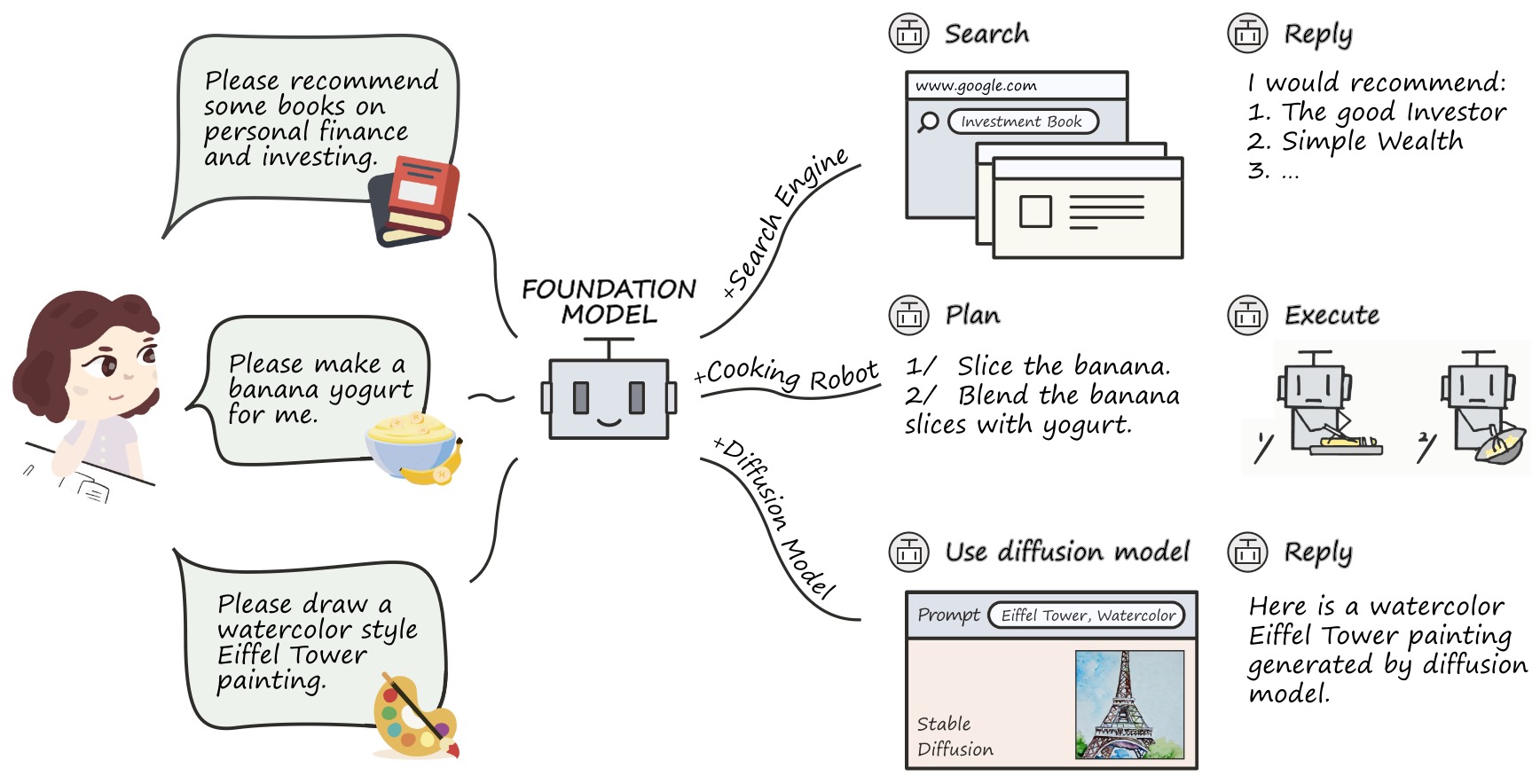

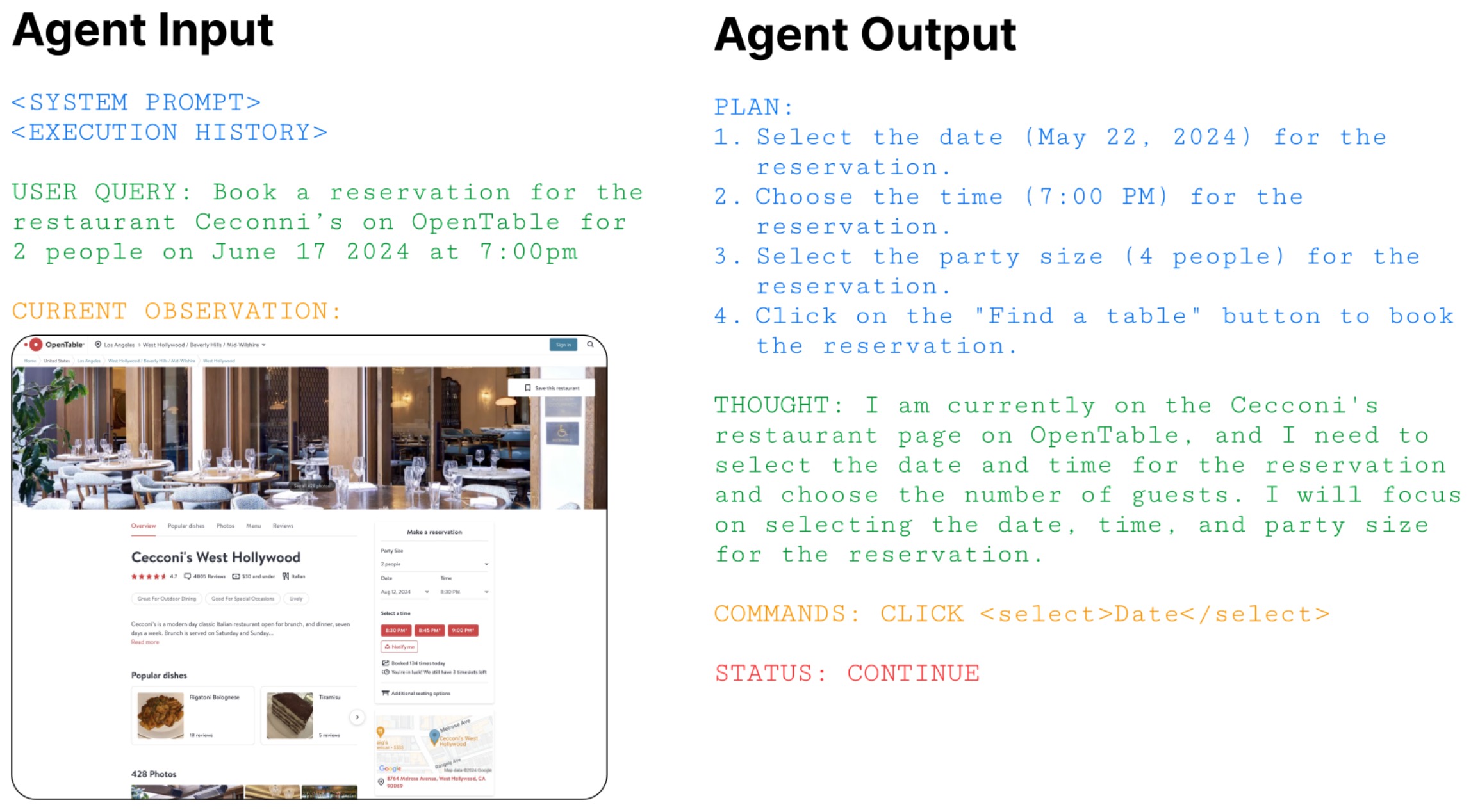

-

In Tool Learning with Foundation Models by Qin et al. (2024), the authors surveyed a wide range of approaches where foundation models learn to select, call, and integrate the outputs of external tools into their reasoning processes. This kind of training allows the model to perform symbolic computation, factual verification, and data retrieval in ways that pure text-based reasoning cannot.

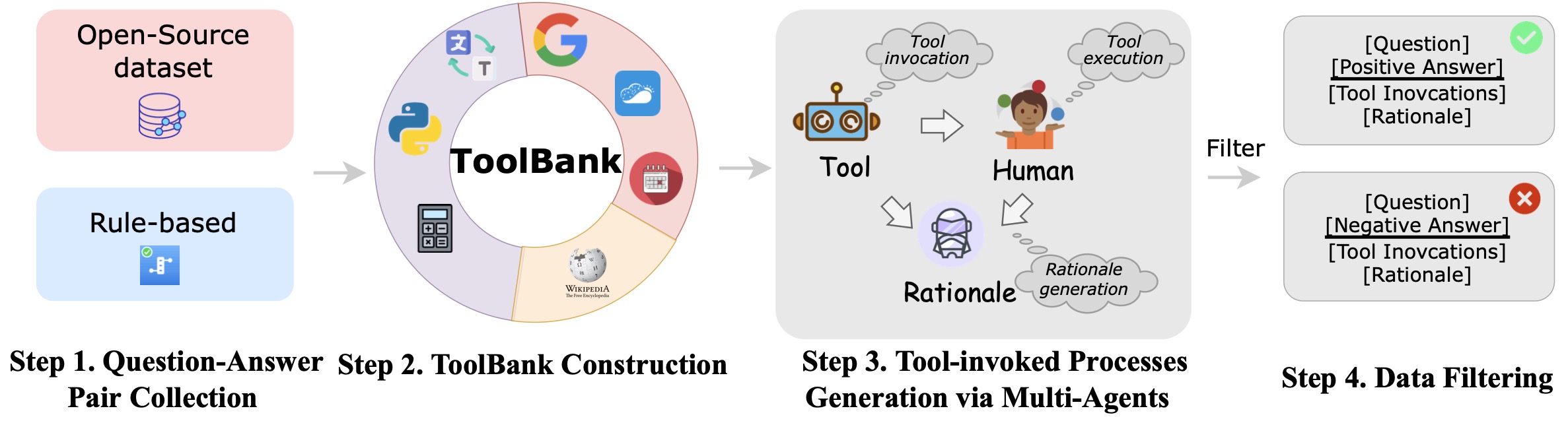

-

The following figure shows (source) the conceptual overview of tool learning with foundation models, where models dynamically decide when and how to invoke tools such as web search and other APIs to solve complex problems.

-

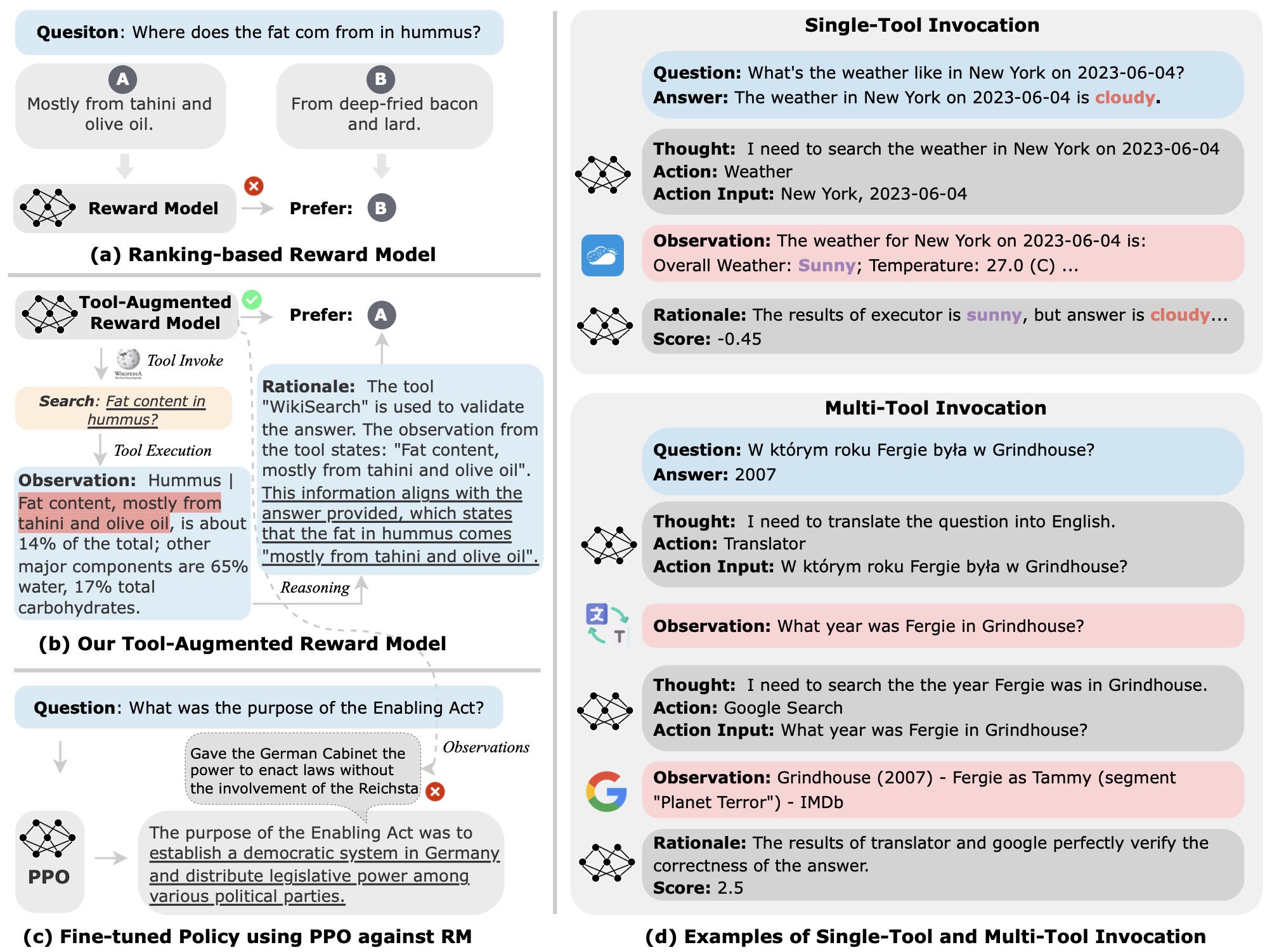

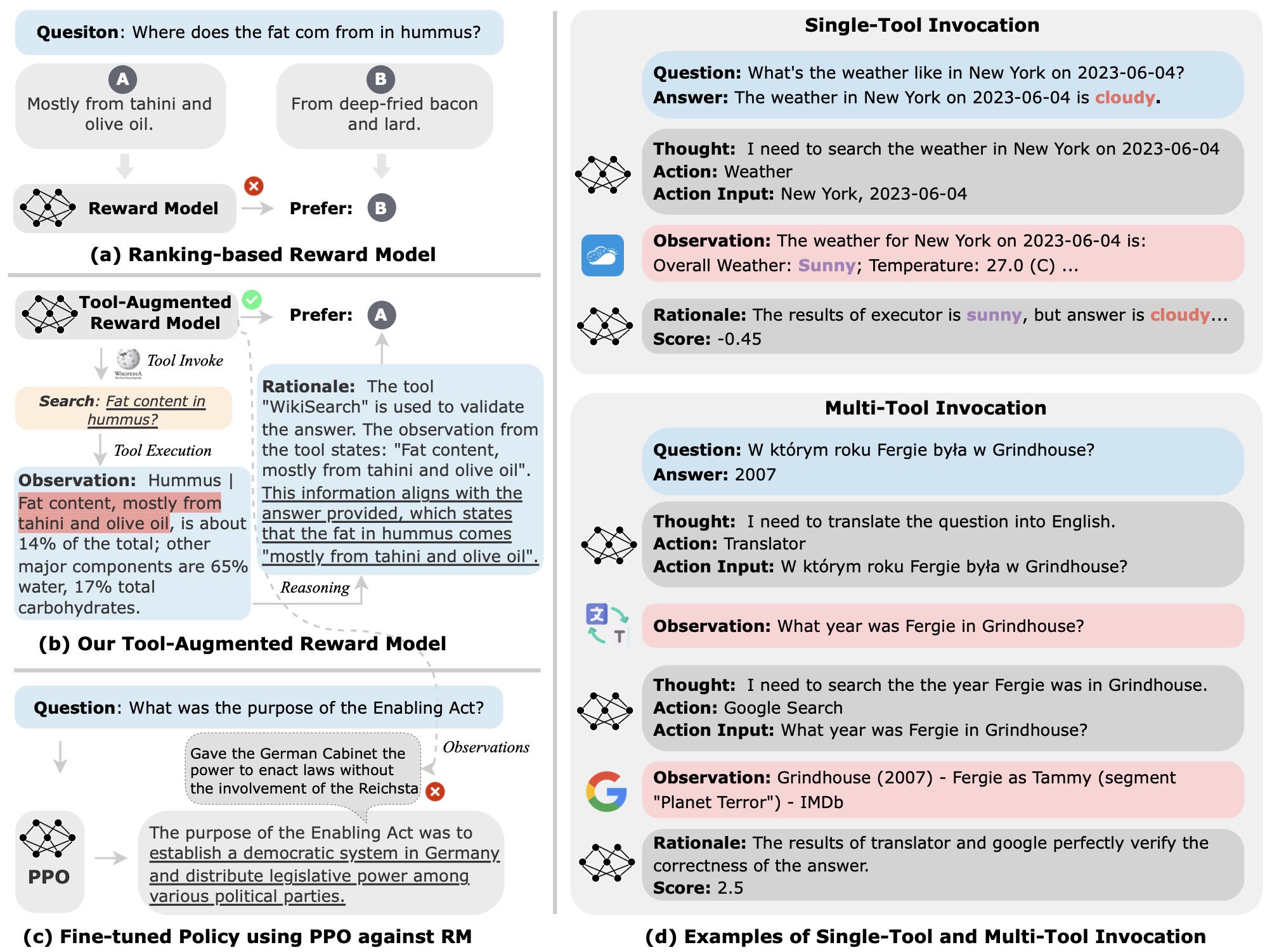

A related innovation is Tool-Augmented Reward Modeling by Li et al. (2024), which enhanced RL reward models by giving them access to external APIs such as search engines or translation systems. This modification made reward models not only more accurate but also more interpretable, as each decision could be traced through explicit tool calls.

-

The following figure (source) shows illustrates the pipeline of (a) Vanilla reward models (RMs); (b) Tool-augmented RMs, namely Themis; (c) RL via proximal policy optimization (PPO) on above RMs; (d) Examples of single or multiple tool use process in the proposed approach.

- Tool-use environments test the agent’s ability to decide when and how to use a tool, what input arguments to provide, and how to interpret the returned results. This capability is crucial for building practical software assistants and web agents that interact with real systems.

Multi-Turn, Sequential Environments (MultiTurnEnv)

-

Multi-turn environments represent the most complex and realistic category of RL settings. In these environments, an agent engages in extended, multi-step interactions where each decision depends on the evolving context and memory of previous steps. Examples include navigating a website, writing and revising code iteratively, managing files on a computer, or executing multi-phase workflows such as online booking or document editing.

-

Agents operating in these environments must reason about long-term goals, plan multiple actions in sequence, and interpret feedback dynamically. Systems such as WebArena, WebShop, Agent Q by Putta et al. (2024), and OpenWebVoyager by He et al. (2024) exemplify this paradigm. They train agents through multi-step RL using trajectory-based feedback, where each complete sequence of actions and observations contributes to the learning signal.

-

These environments are optimized for developing autonomy and adaptability. The agent must not only predict the next best action but also understand how that action contributes to the overall task objective. MultiTurnEnv scenarios are thus the closest analogs to real-world usage, making them essential for training general-purpose digital agents.

Implications

-

Agentic RL, which is the evolution of RL for agents—from single-turn tasks to tool-augmented reasoning and complex multi-turn workflows—reflects a progressive layering of capabilities. Each environment type plays a distinct role:

- Single-turn environments emphasize accuracy and efficiency, teaching agents to produce correct, concise responses.

- Tool-use environments focus on functional reasoning and integration, giving agents the ability to extend their knowledge through computation and external APIs.

- Multi-turn environments train autonomy and planning, enabling agents to navigate, adapt, and make decisions across extended sequences of interactions.

-

Together, these environments form the backbone of modern RL for LLM-based and multimodal agents. They provide a structured pathway for training models that can perceive, reason, and act—bringing us closer to general-purpose artificial intelligence capable of performing diverse tasks in real-world digital environments.

Reinforcement Learning for Web and Computer-Use Agents

- A detailed discourse on RL can be found in our Reinforcement Learning primer.

Background: Policy-Based and Value-Based Methods

-

At its core, RL employs two broad families of algorithmic approaches:

- Value-based methods, which learn a value function (e.g., \(Q(s,a)\) or \(V(s)\)) that estimates the expected return of taking action \(a\) in state \(s\) (or being in state \(s\)).

-

Policy-based (or actor-critic) methods, which directly parameterize a policy \(\pi_\theta(a \mid s)\) and optimize its parameters \(\theta\) to maximize expected return

\[J(\pi_\theta) = \mathbb{E}_{\tau\sim\pi_\theta}\left[\sum_{t=0}^T \gamma^t R(s_t,a_t)\right]\]

-

In modern agentic applications (web agents, computer-use agents), policy‐based methods tend to dominate because the action space is large, discrete (e.g., “click link”, “invoke API”, “enter code”), and policies must be expressive.

-

One widely used algorithm is Proximal Policy Optimization (PPO) Schulman et al. (2017), which introduces a clipped surrogate objective to ensure stable updates and avoid large shifts in policy space.

-

The surrogate objective can be expressed as:

\[L^{\rm CLIP}(\theta) = \mathbb{E}_{s,a\sim\pi_{\theta_{\rm old}}}\left[ \min\left( r_t(\theta) A_t, \mathrm{clip}(r_t(\theta),1-\epsilon,1+\epsilon) A_t \right) \right]\]- where \(r_t(\theta)=\frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\rm old}}(a_t \mid s_t)}\) and \(A_t\) is the advantage estimate at time \(t\).

-

This ensures that the policy update does not diverge too far from the previous one while still improving expected return.

Background: Process-Wise Rewards vs. Outcome-Based Rewards

-

When designing RL systems for digital agents, one of the most consequential design choices lies in how rewards are provided to the model.

-

Outcome-based rewards give feedback only at the end of a task—for instance, a success/failure score after the agent completes a booking or answers a question. This is common in SingleTurnEnv tasks and short workflows, where each interaction produces a single measurable outcome.

- While simple, outcome-based rewards are sparse, often forcing the agent to explore many possibilities before discovering actions that yield high return.

-

Process-wise (step-wise) rewards, in contrast, provide incremental feedback during the task. In a web-navigation scenario, for example, the agent might receive positive reward for successfully clicking the correct link, partially filling a form, or retrieving relevant information—even before the final goal is achieved.

- This approach is critical in MultiTurnEnv or ToolEnv setups where tasks span many steps. By assigning intermediate rewards, process-wise systems promote shaped learning—accelerating convergence and improving interpretability of the agent’s learning process.

-

Formally, if an episode runs for \(T\) steps, the total return under step-wise rewards is:

\[R_t = \sum_{k=t}^{T} \gamma^{k-t}r_k\]- where \(r_k\) are per-step rewards. In outcome-based schemes, \(r_k = 0\) for all \(k<T\), and \(r_T\) encodes task success. Choosing between these schemes depends on the environment’s complexity and availability of fine-grained performance metrics.

-

For web agents, hybrid strategies are often used: process-wise signals derived from browser state (e.g., correct navigation, reduced error rate) combined with final outcome rewards (task completion). This hybridization reduces the high variance of pure outcome-based rewards while preserving the integrity of long-horizon objectives.

Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO)

-

For web/computer-use agents built on LLMs or similar, one key method is RL from Human Feedback (RLHF). The standard RLHF pipeline is:

- Supervised fine-tune a base language model on prompt–response pairs.

- Collect human preference data: for each prompt, have humans rank multiple model responses (or choose preferred vs. non-preferred).

- Train a reward model \(r_\phi(x,y)\) to predict human preferences.

- Use an RL algorithm (often PPO) to optimize the policy \(\pi_\theta\) to maximise expected reward under the reward model, possibly adding KL-penalty to stay close to base model.

-

For example, the survey article Reinforcement Learning Enhanced LLMs: A Survey provides an overview of this field.

-

However, RLHF can be unstable, costly in compute, and sensitive to reward-model errors. Enter Direct Preference Optimization (DPO) Rafailov et al. (2023), which posits that one can skip the explicit reward model + RL loop and simply fine-tune the model directly to optimize human preference pairwise comparisons.

-

The DPO loss in the pairwise case (winner \(y_w\), loser \(y_l\)) is approximately:

\[\mathcal{L}_{\rm DPO} = -\mathbb{E}_{(x,y_w,y_l)}\left[ \ln \sigma\left(\beta \ln\frac{\pi_\theta(y_w|x)}{\pi_{\rm ref}(y_w|x)} - \beta \ln\frac{\pi_\theta(y_l|x)}{\pi_{\rm ref}(y_l \mid x)}\right) \right]\]- where \(\pi_{\rm ref}\) is the reference model (often the supervised fine-tuned model), and \(\beta\) is a temperature-like constant.

-

Some practical analyses (e.g., Is DPO Superior to PPO for LLM Alignment?) compare PPO vs. DPO in alignment tasks.

Why These Algorithms Matter for Web & Computer-Use Agents

-

When training agents that interact with the web or software systems (for example, clicking links, filling forms, issuing API calls), several factors make the choice of algorithm especially important:

- Action spaces are large and heterogeneous (e.g., browser UI actions, tool function calls).

- The reward signals may be sparse (e.g., task success only after many steps) or come from human annotation (in RLHF).

- Policies must remain stable and avoid drift (especially when built on pretrained LLMs).

- Computation cost is high (LLM inference, environment simulation), so sample efficiency matters.

-

Thus:

- Algorithms like PPO are well-suited because of their stability and simplicity (compared to e.g. TRPO) in high-dimensional policy spaces.

- RLHF/DPO are relevant because many web-agents and computer-agents are aligned to human goals (helpfulness, correctness, safety) rather than just raw reward.

- There is an increasing trend toward hybrid methods that combine search, planning (e.g., MCTS) plus RL fine-tuning for complex workflows.

Key Equations

Advantage estimation & value networks

-

In actor–critic variants (including PPO), we often learn a value function \(V_\psi(s)\) to reduce variance:

\[A_t = R_t - V_\psi(s_t) \quad R_t = \sum_{k=0}^{\infty} \gamma^k r_{t+k}\]-

where:

- \(A_t\): the advantage estimate at timestep \(t\), measuring how much better an action performed compared to the policy’s expected performance.

- \(R_t\): the discounted return, or the total expected future reward from time \(t\).

- \(\gamma\): the discount factor (\(0 < \gamma \le 1\)), controlling how much future rewards are valued compared to immediate ones.

- \(r_{t+k}\): the immediate reward received at step \(t+k\).

- \(V_\psi(s_t)\): the critic’s value estimate for state \(s_t\), parameterized by \(\psi\), representing the expected return from that state under the current policy.

-

-

The update for the critic aims to minimize:

\[L_{\rm value}(\psi) = \mathbb{E}_{s_t\sim\pi}\big[(V_\psi(s_t) - R_t)^2 \big]\]-

where:

- \(L_{\rm value}(\psi)\): the value loss, quantifying how far the critic’s predictions are from the actual returns.

- \(\mathbb{E}_{s_t\sim\pi}[\cdot]\): the expectation over states \(s_t\) sampled from the current policy (\pi).

- The squared term \((V_\psi(s_t) - R_t)^2\): penalizes inaccurate value predictions, guiding the critic to estimate returns more accurately.

-

KL-penalty / trust region

-

Some RLHF implementations add a penalty to keep the new policy close to the supervised model:

\[L_{\rm KL}(\theta) = \beta \cdot \mathbb{E}_{x,y\sim\pi}\left[ \log\frac{\pi_\theta(y|x)}{\pi_{\rm SFT}(y|x)} \right]\]-

where:

- \(L_{\rm KL}(\theta)\): the KL-divergence loss, which penalizes the new policy \(\pi_\theta\) if it deviates too far from the supervised fine-tuned (SFT) reference policy \(\pi_{\rm SFT}\).

- \(\beta\): a scaling coefficient controlling the strength of this regularization; larger \(\beta\) enforces tighter adherence to the reference model.

- \(\mathbb{E}_{x,y\sim\pi}[\cdot]\): the expectation over sampled input–output pairs from the current policy’s distribution.

- \(\pi_\theta(y \mid x)\): the current policy’s probability of generating output \(y\) given input \(x\).

- \(\pi_{\rm SFT}(y \mid x)\): the reference policy’s probability, often from the supervised model used before RL fine-tuning.

-

… so the total objective may combine PPO’s surrogate loss with this KL penalty (and possibly an entropy bonus) to balance exploration, stability, and fidelity to the base model.*

-

Preference Optimization (DPO)

- As shown above, DPO reframes alignment as maximising the probability that the fine-tuned model ranks preferred outputs higher than non-preferred ones, bypassing the explicit RL loop.

Sample efficiency & off-policy corrections

- For agents interacting with web or tools where running many episodes is costly, sample efficiency matters. Off-policy methods (e.g., experience replay) or offline RL variants (e.g., A Survey on Offline Reinforcement Learning by Kumar et al. (2022)) may become relevant.

Agentic Reinforcement Learning via Policy Optimization

-

In policy optimization, the agent learns from a unified reward function that draws its signal from one or more available sources—such as rule-based rewards, a scalar reward output from a learned reward model, or another model that is proficient at grading the task (such as an LLM-as-a-Judge). Each policy update seeks to maximize the expected cumulative return:

\[J(\theta) = \mathbb{E}_{\pi_\theta}\left[\sum_t \gamma^t r_t\right]\]- where \(r_t\) represents whichever reward signal is active for the current environment or training regime. In some settings, this may be a purely rule-based signal derived from measurable events (like navigation completions, form submissions, or file creations). In others, the reward may come from a trained model \(R_\phi(o_t, a_t, o_{t+1})\) that generalizes human preference data, or from an external proficient verifier (typically a larger model) such as an LLM-as-a-Judge.

-

These components are modular and optional—only one or several may be active at any time. The optimization loop remains identical regardless of source: the policy simply maximizes whichever scalar feedback \(r_t\) it receives. This flexible design allows the same framework to operate with deterministic, model-based, or semantic reward supervision, depending on task complexity, available annotations, and desired interpretability.

-

Rule-based rewards form the foundation of this framework, providing deterministic, auditable feedback grounded in explicit environment transitions and observable state changes. As demonstrated in DeepSeek-R1: Incentivizing Reasoning Capability in Large Language Models by Gao et al. (2025), rule-based rewards yield transparent and stable optimization signals that are resistant to reward hacking and reduce reliance on noisy human annotation. In the context of computer-use agents, rule-based mechanisms correspond directly to verifiable milestones in user interaction sequences—for example:

- In web navigation, detecting a URL transition, page load completion, or DOM state change (

NavigationCompleted,DOMContentLoaded). - In form interaction, observing DOM model deltas that indicate fields were populated, validation succeeded, or a “Submit” action triggered a confirmation dialog.

- In file handling/artifact generation, confirming the creation or modification of a file within the sandbox (e.g., registering successful exports such as

.csv,.pdf, or.pngoutputs following specific actions). - In application state transitions, monitoring focus changes, dialog closures, or process launches via OS accessibility APIs.

- In UI interaction success, verifying that a button, link, or menu item was activated and that the resulting accessibility tree or visual layout changed accordingly.

- These measurable indicators serve as the atomic verification layer of the reward system, ensuring that each environment step corresponds to reproducible, auditable progress signals without requiring human intervention.

- In web navigation, detecting a URL transition, page load completion, or DOM state change (

-

To generalize beyond fixed rules, a trainable reward model \(R_\phi(o_t, a_t, o_{t+1})\) can be introduced. This model is trained on human-labeled or preference-ranked trajectories, similar to the reward modeling stage in PPO-based RLHF pipelines. Once trained, \(R_\phi\) predicts scalar reward signals that approximate human preferences for unseen tasks or ambiguous states. It operates faster and more consistently than a generative LLM-as-a-Judge (which can be implemented as a Verifier Agent), while maintaining semantic fidelity to human supervision.

-

The three-tier reward hierarchy thus becomes:

- Rule-based rewards (preferred default): deterministic, event-driven, and auditable (no reward hacking).

- Learned, discriminative reward model (\(R_\phi\)): generalizes human feedback for subtle, unstructured, or context-dependent goals where rules are insufficient.

- Generative reward model (e.g., LLM-as-a-Judge): invoked only when both rule-based detectors and \(R_\phi\) cannot confidently score outcomes (e.g., for semantic reasoning, style alignment, or multimodal understanding). This is similar to how DeepSeek-R1 uses a generative reward model by feeding the ground-truth and model predictions into DeepSeek-V3 for judgment during the rejection sampling stage for reasoning data.

-