Internal • Netflix

- Keywords

- Key Stats

- Why did you interview with Netflix? / Why do you want to switch jobs? / What excites you most about potentially joining the Netflix team?

- How do you provide context for your team?

- Desired qualities in your team

- Recommendations

- Causal Inference

- Future Works / Improvement

- Cold Start

- Search

- Content Decision Making

- Media ML

- Evidence Innovation

- The Keeper Test / High Talent Density

- Leading with Context

- No decision-making approvals needed

- Dream team of stunning colleagues

- Going Global

- Things on the culture memo: You must read them carefully + your own opinions + your own examples.

- Why do you think you are a good match for this group?

- Establish Clear Roles and Responsibilities

- Align on Shared Goals

- Regular and Transparent Communication

- Balance Short-Term and Long-Term Priorities

- Use Data to Drive Decisions

- Build Trust and Empathy

- Collaborate on Roadmaps and Timelines

- Escalate and Resolve Conflicts Promptly

- Leverage Company Culture

- Continuous Learning and Retrospectives

- Conclusion

- HM

- Increase experimentation velocity via configurable, modular flows. Amazon Music personalization, North - South Carousels

- Netflix Rows

- Netflix Games

- Business Models: (i) Digital on-demand streaming service and (ii) DVD-by-mail rental service

- Netflix Title Distribution

- Memberships

- Plans and Pricing

- Netflix Culture Memo/Deck

- Netflix Originals / Original Programming / Only on Netflix

- Diverse Audience

- Netflix Ratings

- Netflix Deep-learning Toolboxes/Libraries

- Netflix’s Long Term View/Investor Relations Memo

- Netflix (Personalized) Search

- Meetings

- Netflix RecSys Talks

- Deep learning for recommender systems: A Netflix case study

- Design a recommendation system that can recommend movies, TV shows, and games. Note that games are only about 10-20 in number while there are thousands of movies and TV shows.

- ML

- 1. Intuition of ROC (Receiver Operating Characteristic) Curve:

- 2. Why Do We Need Penalization?

- 3. What Are the Corresponding Methods in Neural Networks?

- 4. Which One Do You Like Best?

- 2. Asked me to describe in detail the model I am most familiar with. I went back to GBDT and was then asked what the parameters of GBDT are and how to tune them:

- 3. Classic ML question: when the number of features is much larger than the number of data points (p ≫ n), how do you handle it:

- 1. How to serialize and deserialize the parameters of an ML model?

- 2. How to use the context information, such as the query searched by the user, etc.?

- System Design

- Music + MAB

- Data Quality

- End Data Quality

- Data Platform

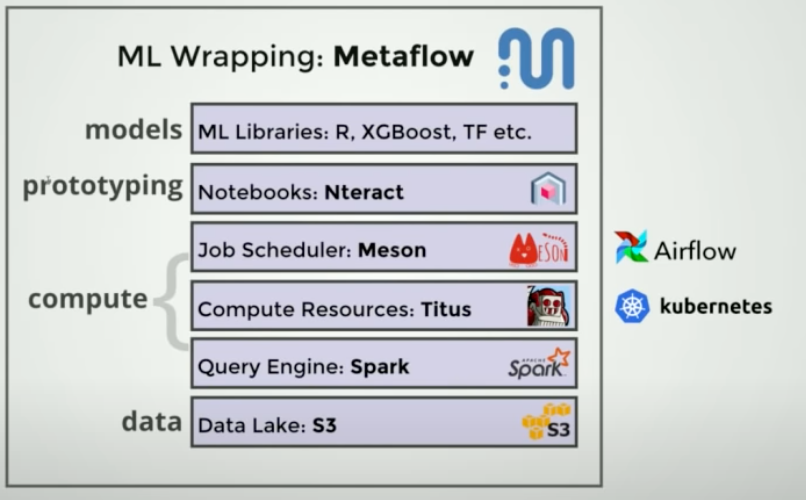

- Evan Cox/ Faisal Siddique - MetaFlow

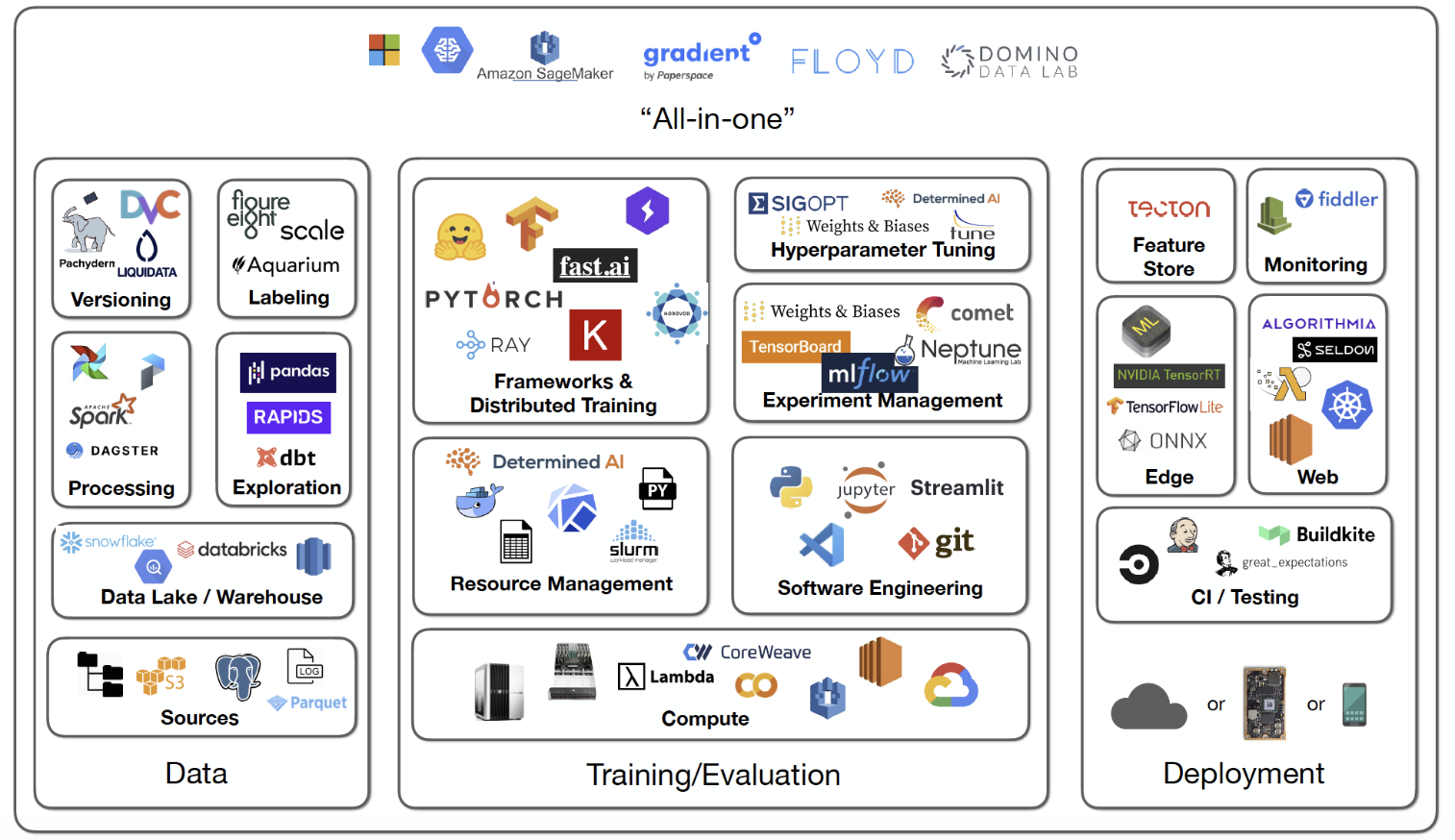

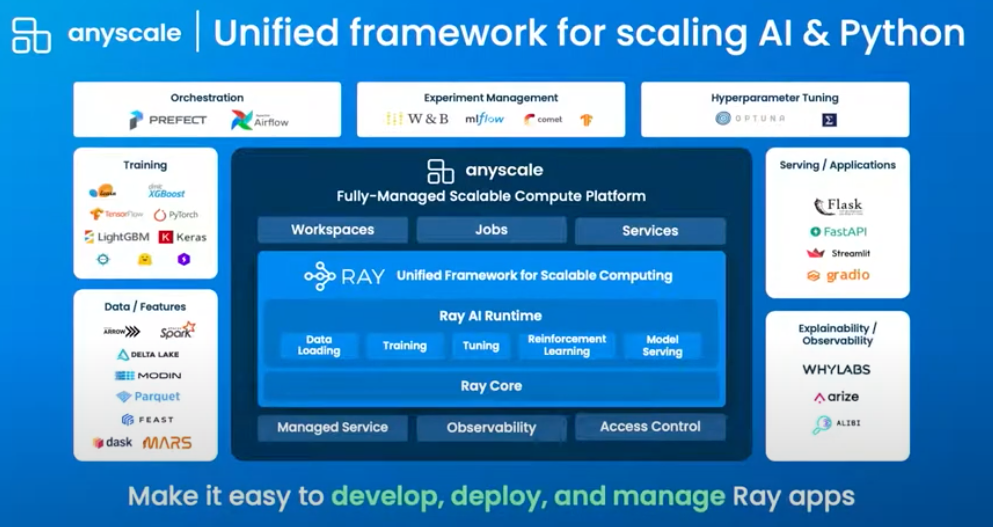

- Tools

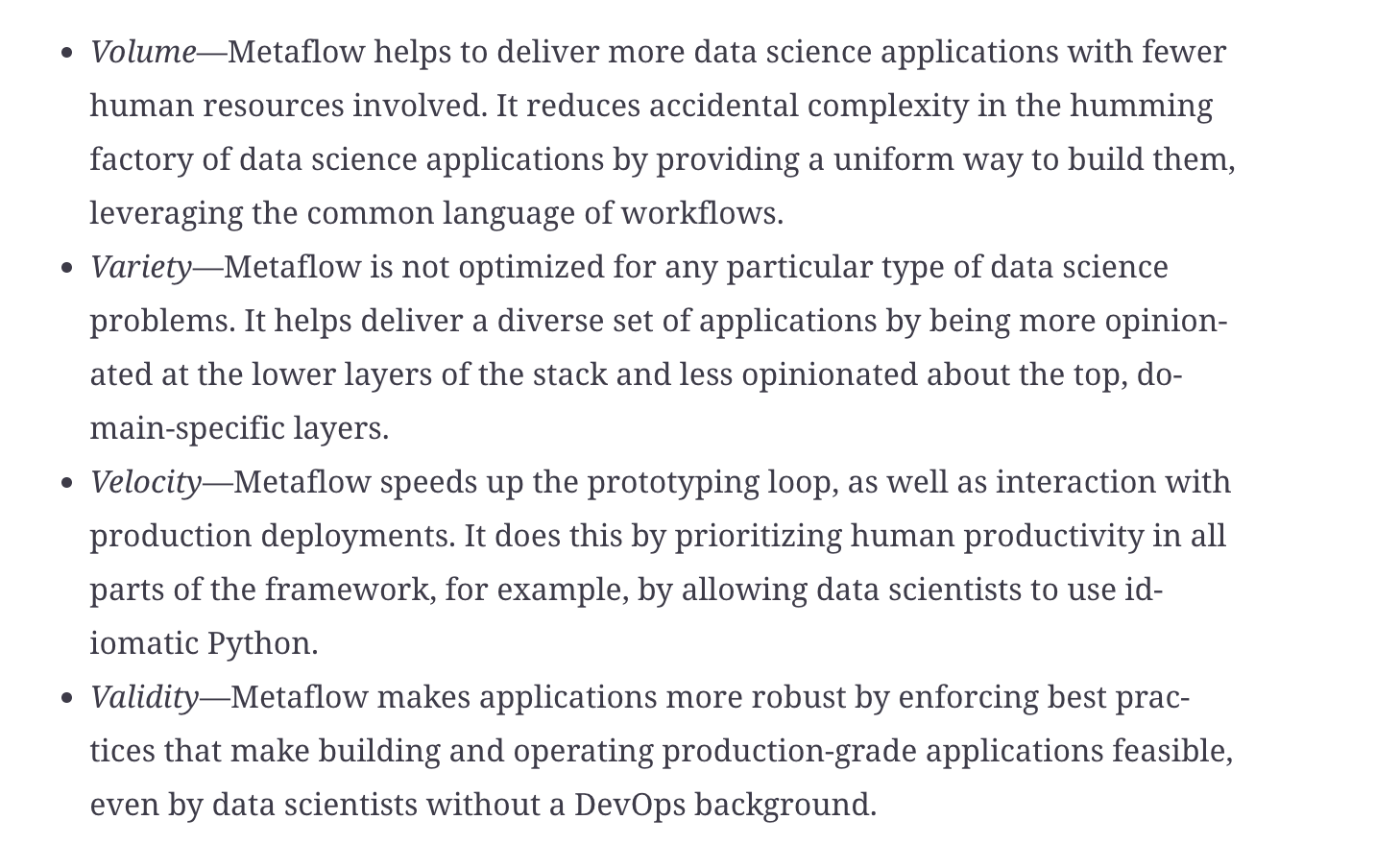

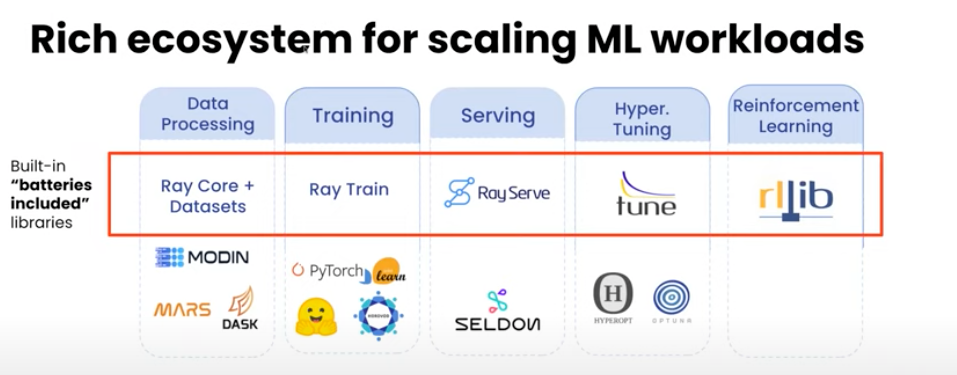

- Metaflow specs

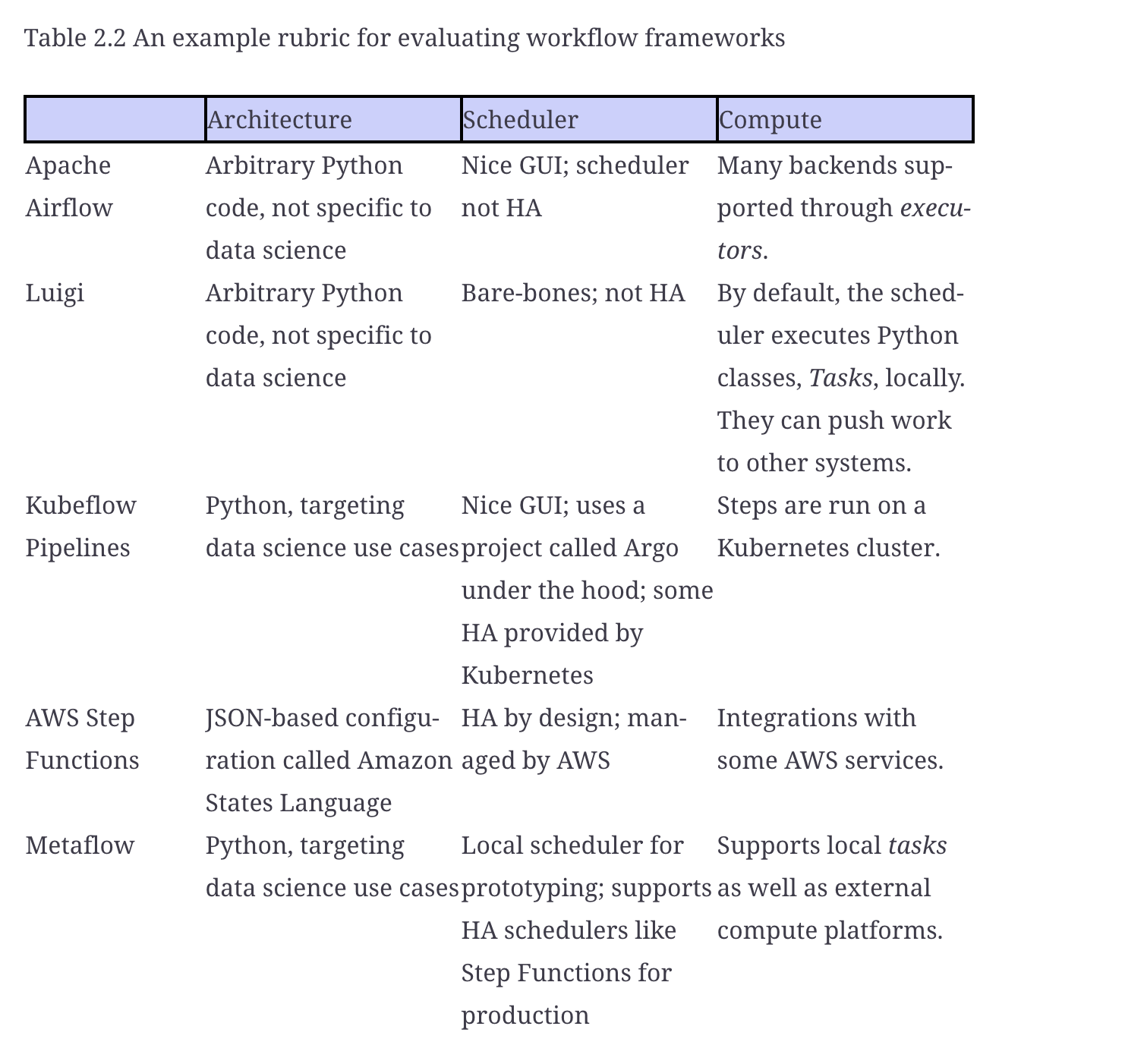

- Comparison

- Ville tutorial

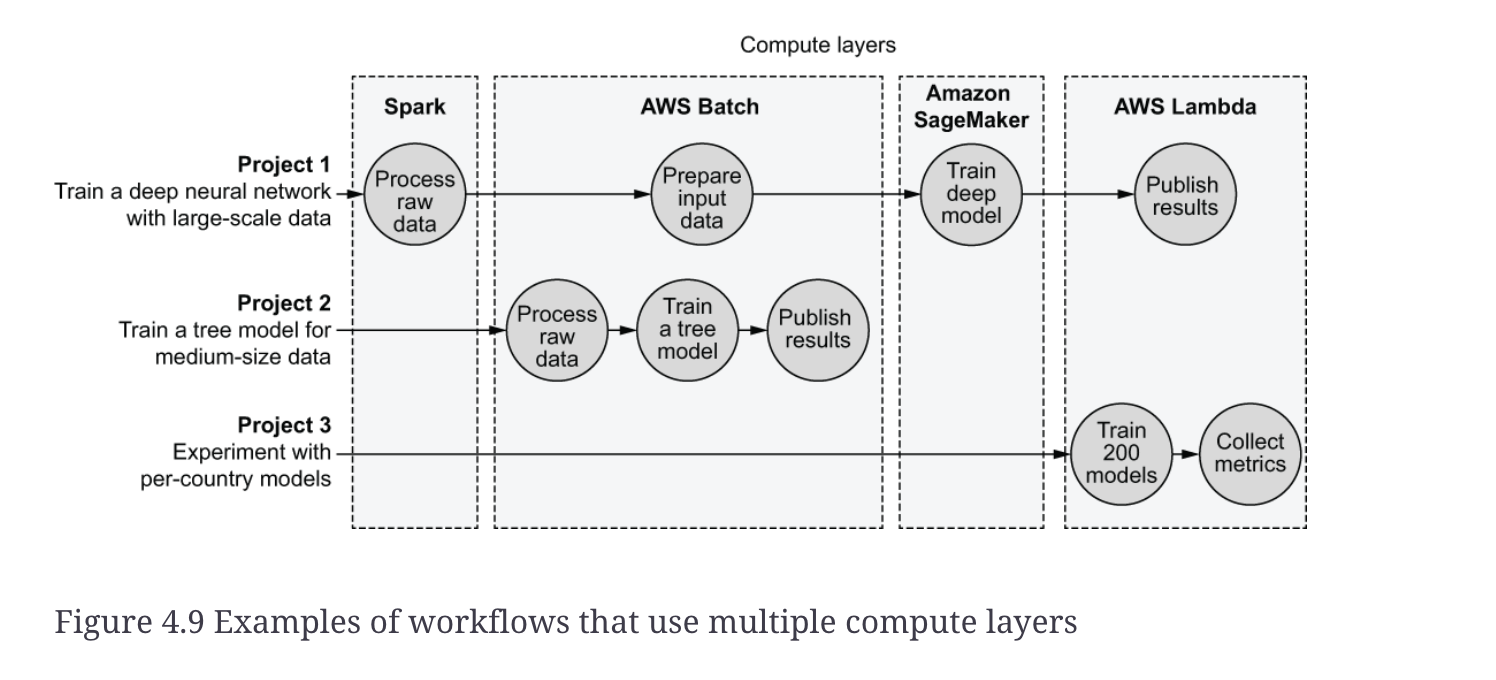

- Compute types

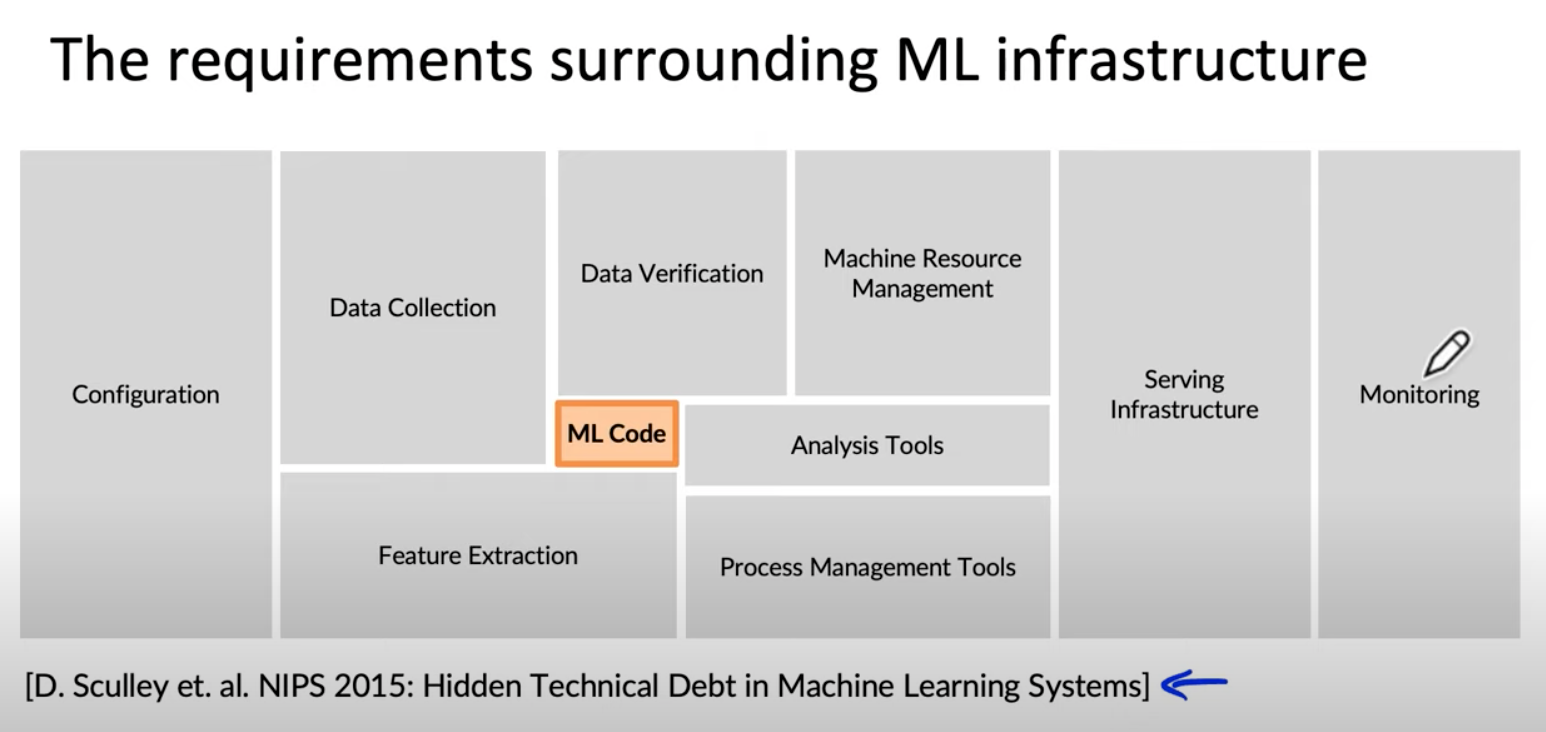

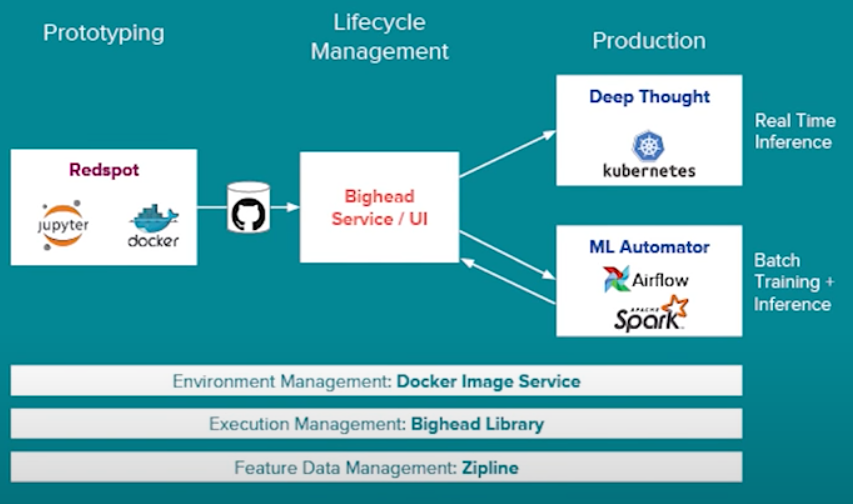

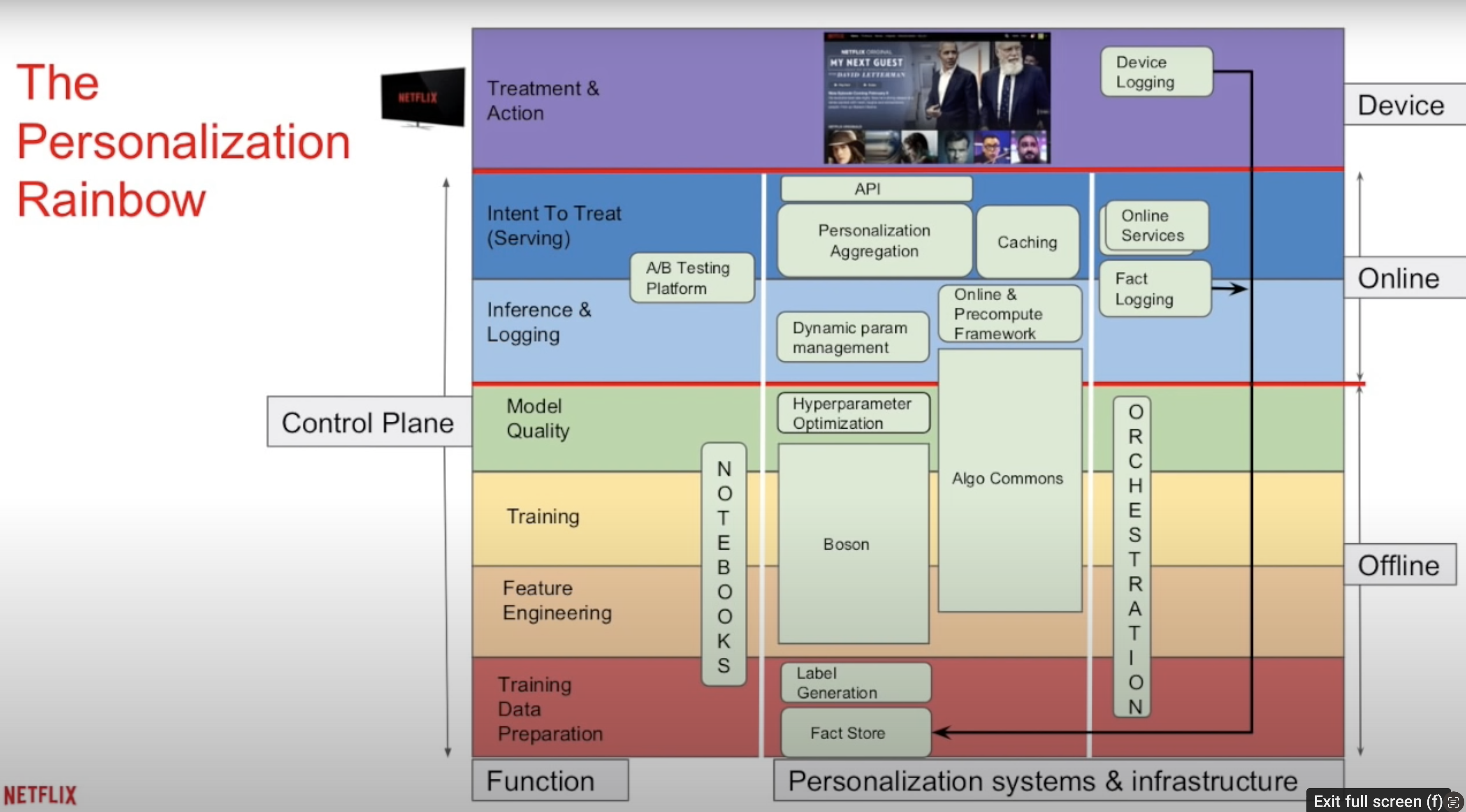

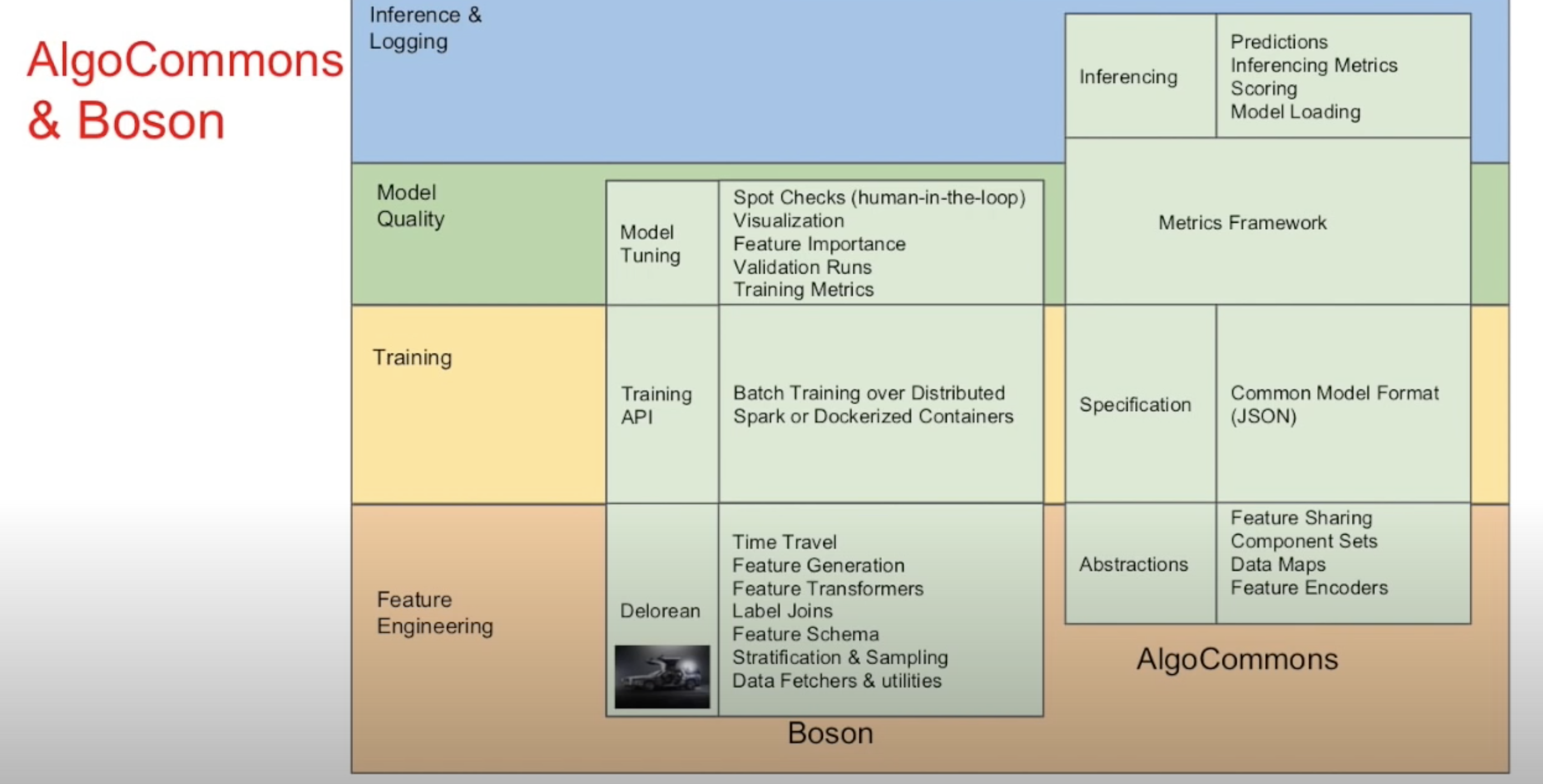

- Infrastructure

- Below are the areas of focus:

- Q’s

- Increase experimentation velocity via configurable, modular flows. Amazon Music personalization, North - South Carousels

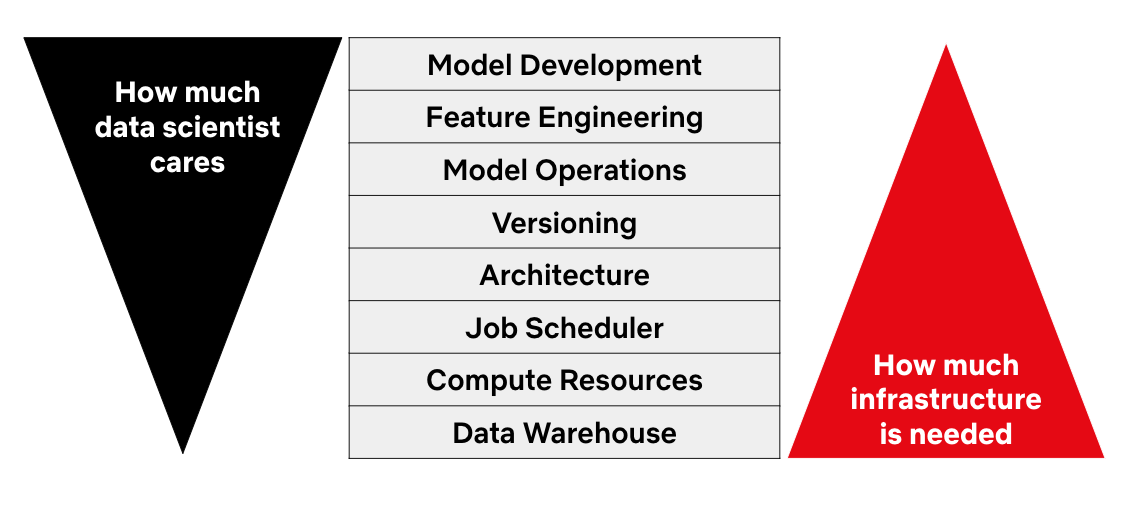

- The motivation

- Competitors

- Problems

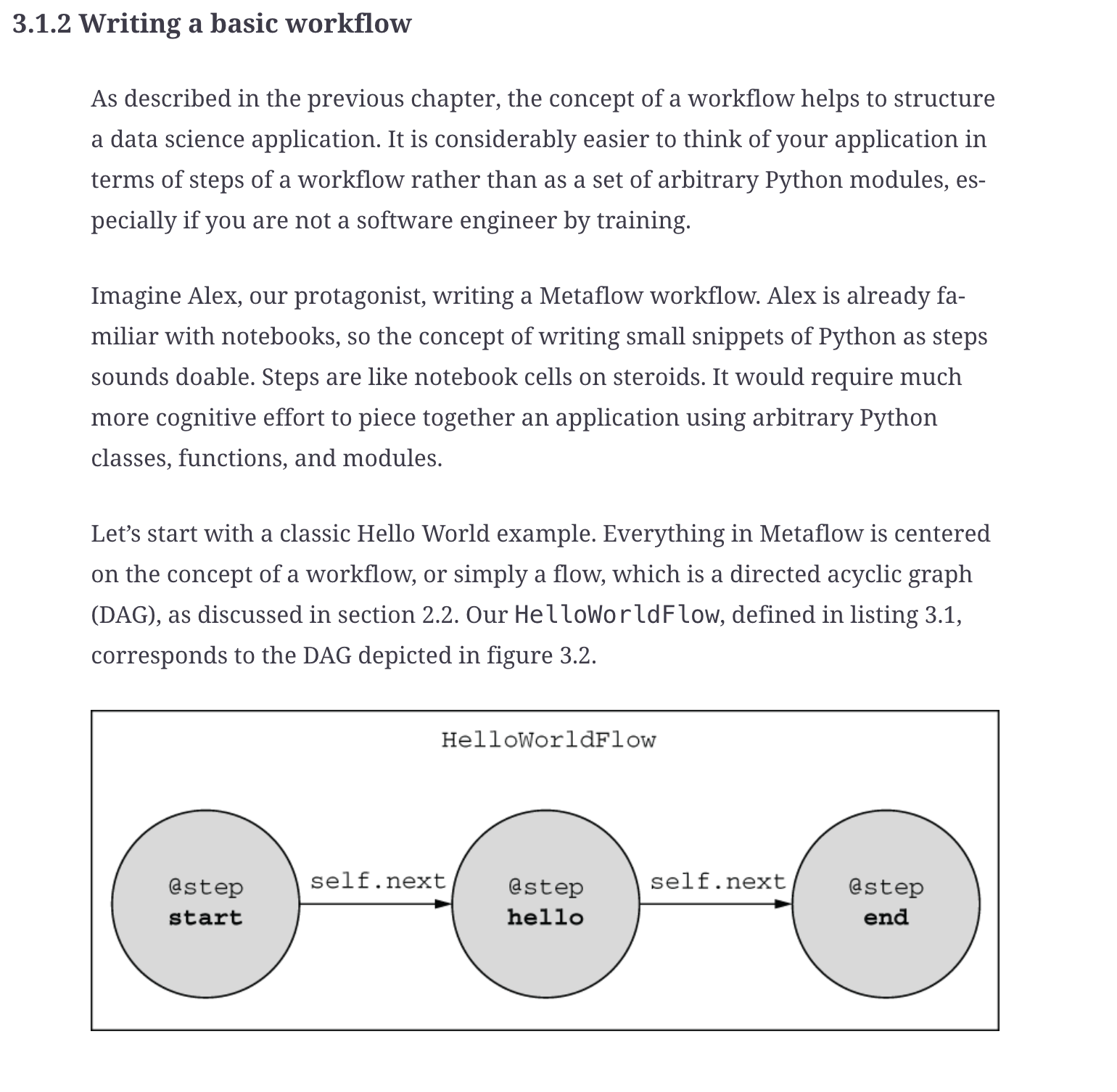

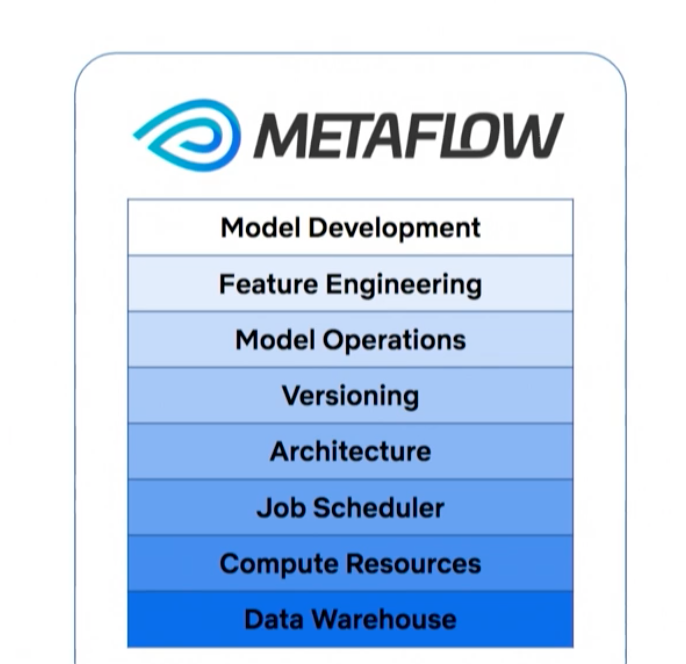

- What is Metaflow?

- Metaflow observability

- Metaflow achieves its functionality through a combination of a well-designed Python library, a set of conventions and best practices for workflow design, and integration with underlying infrastructure, particularly cloud services. Here’s a closer look at how Metaflow accomplishes its objectives:

- Sample Metaflow Workflow

- Running the Flow

- Explanation

- Integration with AWS

- Metaflow

- Outerbounds

- Metaflow job description

- Fairness among New Items in Cold Start Recommender Systems

- Data drift

- Causal Ranker

- Question bank

- your projects


- Syllabus

- Week 1-2: Introduction to Econometrics

- Week 3-4: Time-Series Analysis & Forecasting

- Week 5-6: Causal Inference - Basics

- Week 7-8: Experimental Design & A/B Testing

- Week 9-10: Advanced Causal Inference & Machine Learning Integration

- Week 11-12: Reinforcement Learning

- Week 13-14: Application to Real-World Problems

- Ongoing: Networking & Keeping Up-to-Date

- Further Reading

- References

Keywords

- Netflix is one of the world’s leading entertainment platforms, with 283 million paid memberships in over 190 countries enjoying TV series, films and games across a wide variety of genres and languages. Members can play, pause and resume watching as much as they want, anytime, anywhere, and can change their plans at any time.

- At Netflix, we want to entertain the world and constantly innovate how entertainment is imagined, created, and delivered to a global audience.

- We stream content in over 30 languages across 190 countries to more than 283 million paid subscribers. We launched a new ad-supported tier in November 2022 and are building an in-house, world-class ad tech ecosystem to offer our members more choices in how they consume content. Our new tier allows us to attract new members at a lower price point while also creating a compelling path for advertisers to reach deeply engaged audiences.

- Netflix is global: roughly 60% of Netflix’s members are outside the US, and a significant minority do not consume content in English at all.

- The engineering team’s mission is to develop advanced technology that ensures exceptional experiences for our members.

- We deliver thoughtful member viewing experiences. Our team is faced with the enormous ambitions of building highly performant, large scale, low-latency distributed systems and delivering an incredible slate of content.

- We seek to build unique value propositions that differentiate us and help us become a market leader in record time. Our roles come with expectations of delivering at a rapid clip, comfort with lofty goals (BHAGs) and big ambitions, the visibility that comes with strategic initiatives, and the responsibility and appetite to operate like a startup within an established company.

- Join us on this journey to lead exciting initiatives and the talented engineers who are realizing our ambitious vision. As a stunning colleague, you will build and lead a team of world-class engineers and researchers doing cutting-edge applied machine learning. You will cultivate a vision and strategy for the team aligned with our mission and guide innovation projects end to end, from research ideas to production A/B tests.

- As an engineering manager you will:

- Drive success in a fast-paced, flat organization with minimal process and a heavy emphasis on ownership and accountability.

- Support your team by contextualizing the larger vision, enabling prioritization and fostering high focus and executional excellence.

- Lead in alignment with our unique culture and operate as an ambassador of the Netflix culture.

- Create a dream team by hiring, retaining, and growing high performing talent.

- Netflix Live: NFL Christmas Gameday Live drew an audience of nearly 65 million US viewers. In the US, both games became the most-streamed NFL games in history, making it Netflix’s most-watched Christmas Day ever.

- Netflix Live seeks to tap into massive fandoms across comedy, reality TV, sports, and more.

- Squid Game Season 2 enthralled fans all over the world as it skyrocketed to the top of the Netflix Global Top 10 (weekly), amassing an astounding 68 million views in its debut, ranking as the week’s most-watched title and breaking into the Most Popular List (all time) in a record three days.

Key Stats

- Recommender: the primary means of driving revenue. 80% of views on Netflix were from the service’s recommendations, which drive member joy, satisfaction, and retention.

- ~30% of US internet traffic

- Netflix streams in more than 30 languages and 190 countries, because great stories can come from anywhere and be loved everywhere. Great, high-quality storytelling has universal appeal that transcends borders.

- According to Netflix, the average paid subscriber spends around two hours per day on the platform.

- As of 2023, Netflix employs approximately 13,000 full-time workers.

- Netflix is programming for well over half a billion people globally — something no other entertainment company has ever done before.

- Did you know that over 70% of all viewing on Netflix involves subtitles or dubs, and about 13% of hours viewed in the US are non-English titles? At the heart of this is building a product and technology that ensures Netflix feels immersive and meaningful, no matter what language you speak.

- Context, not control, guides the work for data scientists and algorithm engineers at Netflix. Contributors enjoy a tremendous amount of latitude to come up with experiments and new approaches, rapidly test them in production contexts, and scale the impact of their work.

Why did you interview with Netflix? / Why do you want to switch jobs? / What excites you most about potentially joining the Netflix team?

- Culture: We’re at the cusp of a revolution in technology, thanks to the transformational power of AI. Netflix’s unique culture emphasizes people over process, context not control, extraordinary candor, and a dream team of stunning colleagues. The fast pace of AI demands the nimbleness and agility to experiment, and this culture uniquely positions Netflix to succeed. Reed Hastings’s No Rules Rules was the first book I read as I built my managerial chops back in 2017 at Apple, and I adopted some of it for my own teams at Apple and Amazon; it’s been quite a ride! The amount of influence Netflix has had on my life is phenomenal.

- Technical Prowess in RecSys: I’ve built search and recommender applications for music streaming on Alexa, search for Siri, etc., and Netflix stands out for its culture of innovation, which has led to it being a frontrunner in recommendations for the past couple of decades. I’ve spent a ton of time on the Netflix blog over the past decade, especially going through articles from Justin’s team. Recommendations and Search are critical to the business: they are the primary discovery channels that drive revenue, and 80% of views on Netflix were from the service’s recommendations. RecSys is an area that carries significant impact.

- Personal Level: Lastly, on a more personal level, I am thrilled about the possibility of blending my passion for AI with my love for storytelling. I write AI primers and blogs (you could call it technical storytelling), and the prospect of contributing to a platform that reaches millions of people around the world, enhancing their entertainment experience, is truly exciting and fulfilling. Netflix has the world stage: the opportunity to delight over 283M members in over 190 countries, representing hugely diverse cultures and tastes, with its content slate. The better the recommendations, the easier it is for members to find titles they’ll love and resonate with, lifting member joy, satisfaction, and retention. That is what excites me.

How do you provide context for your team?

- I’ve been wildly fortunate to hire and work with some of the most gifted SWEs I’ve ever known.

- I think bringing in a mix of perspectives and having people play to their strengths is a good recipe.

- Recognize where the team has the deeper context needed to solve these problems. As an EM, I provide the connective tissue, giving everyone the context they need to make the best decisions at every step.

- Two of Netflix’s core values are F&R (Freedom and Responsibility) and people over process. You have the freedom to do almost anything, but the responsibility part kicks in, which leads you to think about the implications and first- and second-order effects of what you’re working on. Is this going to help other groups? Can we spend a little more time up front to make it more resilient, say, less prone to error with flaky input? The goal is to make it not only more useful for the person using it but also more considerate of the person maintaining it.

- I prize a combination of pragmatism and empathy. That combination makes for someone who can tackle almost any problem presented to them.

- Source

Desired qualities in your team

- Culture of Extraordinary Candor: I’ve adapted it as much as possible for my own teams at Apple and Amazon.

- Candid and Continuous Feedback: Open feedback can be considered an attack at face value

- Dream team: Worst morale, sourced, hired,

- Lead with empathy:

Recommendations

- Figuring out how to bring unique joy to each member.

- Personalization enables us to find an audience even for relatively niche videos that would not make sense for broadcast TV models because their audiences would be too small to support significant advertising revenue, or to occupy a broadcast or cable channel time slot. A benefit of Internet TV is that it can carry videos from a broader catalog appealing to a wide range of demographics and tastes, and including niche titles of interest only to relatively small groups of users.

- We also believe that recommender systems can democratize access to long-tail products, services, and information, because machines are far better able to learn from vastly bigger data pools than expert humans, and thus can make useful predictions in areas where humans simply cannot gain enough experience to generalize usefully at the tail.

Artwork Personalization

- To train our model, we leveraged existing logged data from a previous system that chose images in an unpersonalized manner. We will present results comparing the contextual bandit personalization algorithms using offline policy evaluation metrics, such as inverse propensity scoring and doubly robust estimators.

- We are far from done when it comes to improving artwork selection. We have several dimensions along which we continue to experiment. Can we move beyond artwork and optimize across all asset types (artwork, motion billboards, trailers, montages, etc.) and choose between the best asset types for a title on a single canvas?

- Images that have expressive facial emotion that conveys the tone of the title do particularly well.
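The offline policy evaluation mentioned above (inverse propensity scoring and doubly robust estimators) can be sketched in a few lines. This is a minimal illustration, not Netflix’s implementation: the log format, the `LoggedEvent`, `ips_estimate`, and `dr_estimate` names, the taste buckets, and the flat reward model are all hypothetical. IPS reweights each logged reward by π(a|x)/μ(a|x); the doubly robust estimator starts from a reward model’s prediction for the target policy and applies the same reweighting only to the model’s residual.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class LoggedEvent:
    context: str       # hypothetical member-taste bucket
    action: str        # artwork the logging policy actually showed
    reward: float      # 1.0 if the member played the title, else 0.0
    propensity: float  # probability the logging policy showed `action`


# A policy maps a context to a probability distribution over artworks.
Policy = Callable[[str], Dict[str, float]]
# A reward model estimates the expected reward of an artwork in a context.
RewardModel = Callable[[str, str], float]


def ips_estimate(log: List[LoggedEvent], target: Policy) -> float:
    """Inverse propensity scoring: weight each logged reward by pi(a|x) / mu(a|x)."""
    total = 0.0
    for e in log:
        weight = target(e.context).get(e.action, 0.0) / e.propensity
        total += weight * e.reward
    return total / len(log)


def dr_estimate(log: List[LoggedEvent], target: Policy, q_hat: RewardModel) -> float:
    """Doubly robust: model-based value of the target policy plus an IPS-weighted residual."""
    total = 0.0
    for e in log:
        probs = target(e.context)
        baseline = sum(p * q_hat(e.context, a) for a, p in probs.items())
        weight = probs.get(e.action, 0.0) / e.propensity
        total += baseline + weight * (e.reward - q_hat(e.context, e.action))
    return total / len(log)


if __name__ == "__main__":
    # Toy log from an unpersonalized system that picked one of two artworks at random.
    log = [
        LoggedEvent("comedy_fan", "funny_face", 1.0, 0.5),
        LoggedEvent("comedy_fan", "dramatic_scene", 0.0, 0.5),
        LoggedEvent("drama_fan", "dramatic_scene", 1.0, 0.5),
        LoggedEvent("drama_fan", "funny_face", 0.0, 0.5),
    ]

    def personalized(ctx: str) -> Dict[str, float]:
        # Target policy: show the artwork that matches the member's taste bucket.
        return {"funny_face": 1.0} if ctx == "comedy_fan" else {"dramatic_scene": 1.0}

    def flat_model(ctx: str, artwork: str) -> float:
        return 0.5  # deliberately crude reward model

    print(ips_estimate(log, personalized))             # 1.0
    print(dr_estimate(log, personalized, flat_model))  # 1.0
```

In this toy log every propensity is 0.5 by assumption; with a real logging policy, very small propensities inflate the variance of IPS, which is one motivation for the doubly robust variant.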

“No Dead Ends”

- If, on Netflix, the video Sonic the Hedgehog is in fact unavailable (as depicted, only Sonic X is available), we can still produce recommendations for similar available videos that are relevant to the query “sonic t”, and thus help avoid “dead ends” that users may otherwise experience.

- From Raveesh Bhalla’s Substack:


- “No dead ends” is a commonly stated product/design “principle” for Search and Recommendation products. The thought behind it amongst every team is that users should never run out of choice: if we keep giving them some more, including in the form of “pivots” or “guided search suggestions”, users will ultimately be more successful and satisfied.

- Unfortunately, for most products, this principle is wrong.

- As an example from earlier in my career, I was working on introducing infinite scroll to a search product. Enough past tests had shown that if we increased recall and made it easier for people to see more options, they’d act more often. Some users would find the first thing to take action on, while others would find more to take action on.

- A far more experienced Search PM warned me about the test, saying he’d run similar versions several times and they’d all failed. I didn’t believe him and went ahead.

- Turns out, he was right. Users did consider more options (i.e., they scrolled and clicked on more), but they actually acted on fewer. This is because we effectively put them in a state of decision paralysis: “should I act on this item or scroll and see more?”

- The exception to the rule would be passive consumption products (think short-form video feeds), where there is no action to take but to browse. In this world, infinite feeds will lead to greater success.


Causal Inference

- “Testing your way into a better product” by letting members make the decisions: we test every area of the product, relentlessly testing our way to a better member experience with an increasingly complex set of hypotheses informed by the insights we have gained along the way.

- For more than 20 years, Netflix has utilized A/B testing to inform product decisions, allowing our users to “vote”—via their actions—for what they prefer. The platform that enables this decision-making encompasses UIs, backend services, and libraries, and is used by product managers, engineers, data scientists, and other roles internal to Netflix.

- Netflix consistently employs a simple but powerful approach to product innovation: we ask our members, through online experiments, which of several possible experiences resonate with them.

- We use controlled A/B experiments to test nearly all proposed changes to our product, including new recommendation algorithms, user interface (UI) features, content promotion tactics, title launch and scheduling strategies, streaming algorithms, the new member signup process, and payment methods.

- Over the course of this series of tests, we have found many interesting trends among the winning images as detailed in this blog post. Images that have expressive facial emotion that conveys the tone of the title do particularly well. Our framework needs to account for the fact that winning images might be quite different in various parts of the world. Artwork featuring recognizable or polarizing characters from the title tends to do well. Selecting the best artwork has improved the Netflix product experience in material ways. We were able to help our members find and enjoy titles faster.


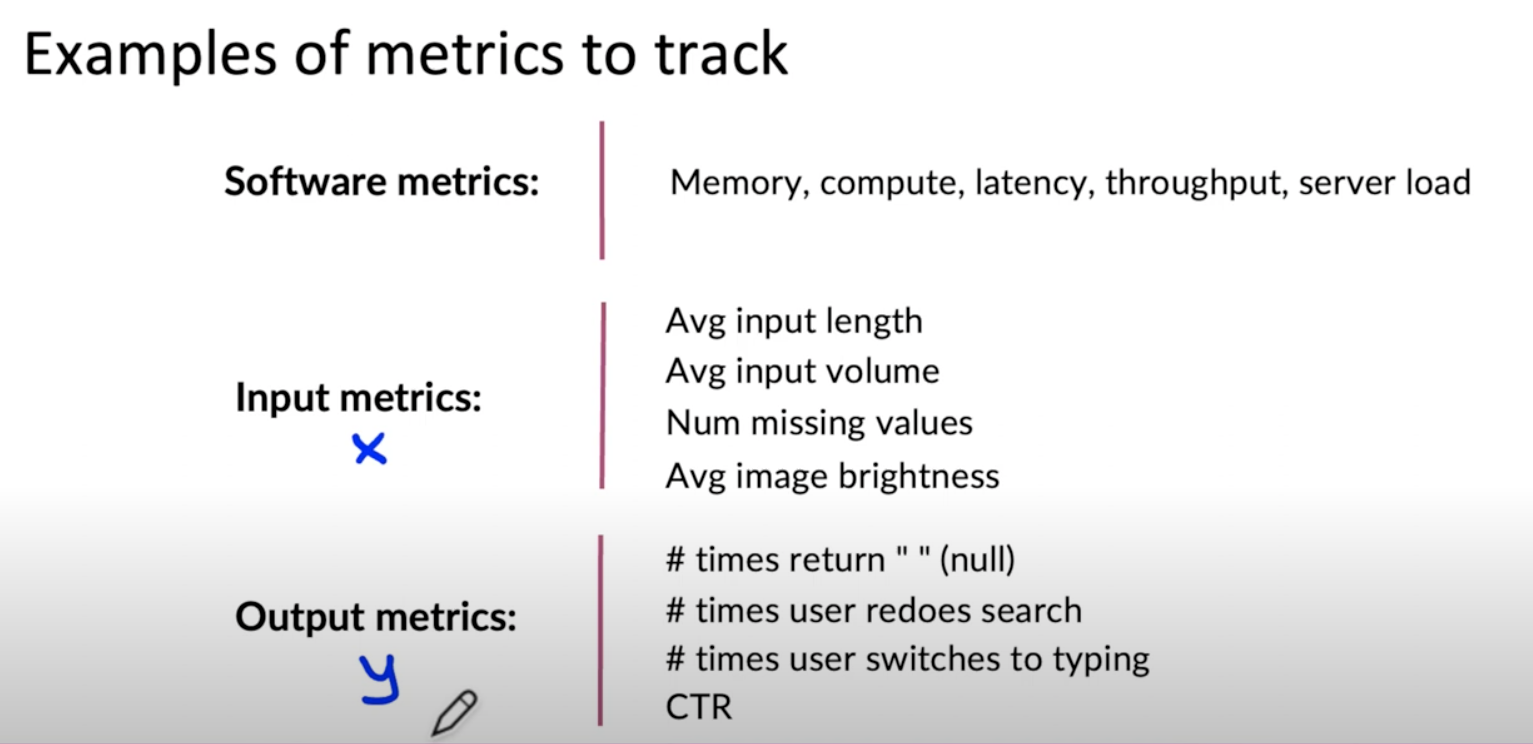

- Metrics:

- There are many other possible metrics that we could use, such as time to first play, sessions without a play, days with a play, number of abandoned plays, and more. Each of these changes, perhaps quite sensitively, with variations in algorithms, but we are unable to judge which changes are for the better. For example, reducing time to first play could be associated with presenting better choices to members; however, presenting more representative supporting evidence might cause members to skip choices that they might otherwise have played, resulting in a better eventual choice and more satisfaction, but associated with a longer time to first play.

- A related challenge with engagement metrics is to determine the proper way to balance long- and short-form content. Since we carry both movies (typically 90–120 minutes of viewing) and multiseason TV shows (sometimes 60 hour-long episodes), a single discovery event might engage a customer for one night or for several weeks of viewing. Simply counting hours of streaming gives far too much credit to multiseason shows; counting “novel plays” (distinct titles discovered) perhaps overcorrects in favor of one-session movies.
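To make the A/B testing workflow described above concrete, here is a minimal two-cell comparison using a textbook two-proportion z-test on a binary metric such as retention. This is an assumption for illustration only: the notes do not say which statistical test Netflix’s experimentation platform uses, and the function name and cell counts below are hypothetical.

```python
import math
from typing import Tuple


def two_proportion_z_test(successes_a: int, n_a: int, successes_b: int, n_b: int) -> Tuple[float, float]:
    """Compare a binary metric between control (A) and treatment (B).

    Returns (z, two_sided_p_value) under H0: both cells share one underlying rate.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_a + 1.0 / n_b))
    if se == 0.0:
        return 0.0, 1.0  # both cells all-0 or all-1: no evidence of a difference
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical cell sizes and retained-member counts.
    z, p = two_proportion_z_test(4000, 10000, 4150, 10000)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

A test like this is run per metric, and the caveats in the Metrics bullets still apply: a statistically significant move in a proxy metric does not by itself show the change is better for members.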
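The hours-versus-novel-plays tension above can be seen with a toy viewing log. The log schema and helper names are hypothetical; the point is only the arithmetic. One member bingeing a 20-episode series outweighs two members discovering a two-hour movie when counting hours, while the movie wins when counting distinct discoveries.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# One row per viewing session: (member_id, title_id, hours_streamed). Hypothetical schema.
ViewingLog = List[Tuple[str, str, float]]


def hours_by_title(log: ViewingLog) -> Dict[str, float]:
    """Credit each title with every hour streamed."""
    totals: Dict[str, float] = defaultdict(float)
    for _member, title, hours in log:
        totals[title] += hours
    return dict(totals)


def novel_plays_by_title(log: ViewingLog) -> Dict[str, int]:
    """Credit each title once per member who discovered it, however long they watched."""
    discoveries = {(member, title) for member, title, _hours in log}
    counts: Dict[str, int] = defaultdict(int)
    for _member, title in discoveries:
        counts[title] += 1
    return dict(counts)


if __name__ == "__main__":
    # One member binges 20 one-hour episodes; two members each watch a two-hour movie.
    log = [("m1", "series", 1.0)] * 20 + [("m2", "movie", 2.0), ("m3", "movie", 2.0)]
    print(hours_by_title(log))        # series 20.0 vs. movie 4.0: series looks 5x better
    print(novel_plays_by_title(log))  # series 1 vs. movie 2: movie looks 2x better
```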

Future Works / Improvement

- AR/VR for games – connecting people

- Auto-dubbing

- We are also interested in models that, when generating recommendations, take into account how the languages available for each video’s audio and subtitles match the languages each member across the world is likely to be comfortable with. For example, if a member is only comfortable (based on explicit and implicit data) with Thai and we think they would love to watch “House of Cards,” but we do not have Thai audio or subtitles for it, then perhaps we should not recommend “House of Cards” to that member; or, if we do have “House of Cards” in Thai, we should highlight this language option to the member when recommending it.

- Part of our mission is to commission original content across the world, license local content from all over the world, and bring this global content to the rest of the world. We would like to showcase the best French drama in Asia, the best Japanese anime in Europe, and so on. It would be too laborious and expensive to cross-translate every title into every other language, so we need to learn what languages each member understands and reads from the pattern of content they have watched, and how they have watched it (original audio vs. dub, with or without subtitles), so that we can suggest the proper subset of titles based on what they will enjoy.

- We have lots of research and exploration left to understand how to automatically credit viewing to the proper profile, to share viewing data when more than one person is viewing in a session, and to provide simple tools to create recommendations for the intersection of two or more individuals’ tastes instead of the union, as we do today.
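The language-matching idea above can be sketched as a post-filter on candidate recommendations. Everything here is an assumption for illustration: the `Title`/`Play` records, the rule that a play’s consumption language is its subtitle track when subtitles are on (else its audio track), and the `min_share` threshold. The notes describe learning these preferences from viewing patterns; simple counting stands in for that model here.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class Title:
    name: str
    audio: Set[str]      # audio languages offered (ISO 639-1 codes, hypothetical data)
    subtitles: Set[str]  # subtitle languages offered


@dataclass
class Play:
    audio: str                       # audio track the member chose
    subtitles: Optional[str] = None  # subtitle track, if any


def comfortable_languages(history: List[Play], min_share: float = 0.2) -> Set[str]:
    """Hypothetical heuristic: a play is 'read in' its subtitle language if subtitles
    were on, else its audio language; keep languages with >= min_share of plays."""
    counts: Dict[str, int] = {}
    for play in history:
        lang = play.subtitles or play.audio
        counts[lang] = counts.get(lang, 0) + 1
    total = max(len(history), 1)
    return {lang for lang, n in counts.items() if n / total >= min_share}


def language_aware(candidates: List[Title], comfortable: Set[str]) -> List[Tuple[str, Set[str]]]:
    """Drop titles with no usable track; keep the usable languages so the UI can highlight them."""
    kept = []
    for title in candidates:
        usable = (title.audio | title.subtitles) & comfortable
        if usable:
            kept.append((title.name, usable))
    return kept


if __name__ == "__main__":
    # A member who watches Thai audio, or Korean audio with Thai subtitles.
    history = [Play("th")] * 6 + [Play("ko", "th")] * 4
    langs = comfortable_languages(history)  # {"th"}
    candidates = [
        Title("House of Cards", audio={"en"}, subtitles={"en", "es"}),  # hypothetical tracks
        Title("Hypothetical K-drama", audio={"ko"}, subtitles={"th", "en"}),
    ]
    print(language_aware(candidates, langs))  # [('Hypothetical K-drama', {'th'})]
```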
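The union-versus-intersection distinction for shared viewing can be illustrated with two common aggregation rules from the group-recommendation literature: the maximum member score (roughly a union of tastes, where one enthusiastic viewer can carry a title) or the minimum score (“least misery”, an intersection where every viewer must be reasonably interested). The per-member scores and the `group_rank` helper are hypothetical; the notes do not specify how Netflix aggregates tastes.

```python
from typing import Dict, List

# member -> {title: predicted affinity in [0, 1]}; hypothetical model output.
Scores = Dict[str, Dict[str, float]]


def group_rank(scores: Scores, mode: str = "intersection", k: int = 3) -> List[str]:
    """Rank titles for everyone in one co-viewing session.

    mode="union": a title is as good as its biggest fan (max over members).
    mode="intersection": a title is as good as its least interested viewer
    (min over members, the "least misery" rule).
    """
    if mode not in ("union", "intersection"):
        raise ValueError(f"unknown mode: {mode!r}")
    agg = max if mode == "union" else min
    # Only titles that were scored for every member are eligible.
    shared = set.intersection(*(set(per_member) for per_member in scores.values()))

    def group_score(title: str) -> float:
        return agg(per_member[title] for per_member in scores.values())

    # Highest group score first; ties broken alphabetically for determinism.
    return sorted(shared, key=lambda t: (-group_score(t), t))[:k]


if __name__ == "__main__":
    scores = {
        "alice": {"horror_film": 0.95, "heist_series": 0.70, "kids_movie": 0.10},
        "bob": {"horror_film": 0.05, "heist_series": 0.75, "kids_movie": 0.30},
    }
    print(group_rank(scores, "union", k=1))         # ['horror_film']: Alice's pick wins
    print(group_rank(scores, "intersection", k=1))  # ['heist_series']: both like it
```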

Cold Start

- Today, our member cold start approach has evolved into a survey given during the sign-up process, during which we ask new members to select videos from an algorithmically populated set that we use as input into all of our algorithms.
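One simple way the survey selections could seed a new member’s profile is to average the embeddings of the chosen titles and rank the rest of the catalog by cosine similarity to that average. This is a hedged sketch: the embeddings, the averaging step, and the `cold_start_recommend` helper are assumptions; the notes only say the selections are used as input into all of the algorithms.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def seed_profile(selected: List[str], embeddings: Dict[str, Vector]) -> List[float]:
    """Average the embeddings of the titles picked in the sign-up survey."""
    vectors = [embeddings[title] for title in selected]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cold_start_recommend(selected: List[str], embeddings: Dict[str, Vector], k: int = 3) -> List[str]:
    """Rank unselected titles by cosine similarity to the seeded profile."""
    profile = seed_profile(selected, embeddings)
    pool = [title for title in embeddings if title not in selected]
    return sorted(pool, key=lambda t: (-cosine(profile, embeddings[t]), t))[:k]


if __name__ == "__main__":
    # Hypothetical 2-D embeddings: axis 0 ~ "thriller-ness", axis 1 ~ "romance-ness".
    embeddings = {
        "thriller_a": [1.0, 0.0],
        "thriller_b": [0.9, 0.1],
        "romcom_a": [0.0, 1.0],
        "romcom_b": [0.1, 0.9],
        "docu": [0.5, 0.5],
    }
    print(cold_start_recommend(["thriller_a"], embeddings, k=2))  # ['thriller_b', 'docu']
```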

Search

- Historically, Search and Recommendations have been treated as two separate problems; search being mainly focused on the query, recommendations on the user. Personalized Search brings them together because both the query and user information can be taken into account to effectively respond to the user’s needs. Search particularly can benefit from personalization as in many cases queries are broad enough to require different results for different users.

- Recognizing the variety of our customers’ needs, our advanced search features are designed to empower members to efficiently navigate our catalog, allowing them to find the right videos and games. This includes addressing the challenges associated with accommodating numerous languages and handling diverse input mechanisms from various devices, such as TV remotes and voice controls. We also extend our work beyond the title selection layer by looking for new ways we can present recommendations, explain them, and have members interact with our systems. Our goal is to minimize the time browsing and searching while maximizing enjoyment.

- Similar to other (video) search engines, when users search on Netflix they have a particular intent, i.e., an immediate reason, purpose or goal in mind. From qualitative and quantitative data we observe that search intents fall on a spectrum between Fetching a specific video from the catalog (“I know what I want, I need you to get it for me”) to extensively Exploring the catalog (“I don’t know what I want, let’s understand what you have”). We also observe that users express their intents using different query facets: (available and unavailable) videos to stream on Netflix, talent (e.g., actors), and collections (e.g., genres). To illustrate the difference between intents and facets: a user searching for a specific video (i.e., the query facet), may have an intent to either play that video (Fetch) or to explore content that is similar to that video (Explore). By understanding the query facet, we can optimize for both intents.

- We define a search match as a video retrieved by the search engine by keyword-matching the query with the indexed videos (or by applying techniques such as query expansion). A search recommendation, on the other hand, is a video selected by the search engine by relaxing the match constraints, i.e., a video retrieved via traditional recommender systems approaches (e.g., collaborative filtering) in the query context. We use the term search results to refer to the union of search matches and search recommendations, i.e., all videos returned in response to a user query.

- A unique characteristic of search, and specifically of Instant Search on TV, is that queries are very short. This presents a great opportunity for personalization, as rich knowledge about the user can complement the limited query context. Users’ historical preferences can help the system better predict users’ intent and, in turn, return a unique set of tailored results.

- The two typical search use cases for Netflix are fetch and explore. The fetch use case is most common, where users have a clear intent to search for a specific title (“I know what I want, I need you to get it for me”). Personalization can help users get to their results faster (less typing) if the title they are looking for has high affinity with their taste profile, which is often the case. A subset of this intent is for out-of-catalog videos, where the title the user is looking for is not available. Given that search results are the union of search matches and search recommendations, in the event of no search matches (out-of-catalog videos), this use-case degenerates to a purely recommendations-based use-case and the system should return titles which are related to the unavailable one. Also in this scenario personalization may provide additional value to the recommended results as the set of related titles can be broad and the notion of related titles can be different for different users. The explore use case (“I don’t know what I want, let’s understand what you have”) consists of broad queries, such as genres. Given the broad nature of the results, personalization can help optimize for the correct relevant titles for each user.

- One important aspect to highlight is that different search intents have different relevance personalization trade-offs. For example, query relevance is of primary importance for the fetch intent, since too much personalization could hurt the user experience if high affinity but lexically irrelevant results are ranked high in the list. On the other hand, for a genre query, titles belonging to the requested genre with high user affinity but low lexical query similarity are preferable. In other words, there is a delicate balance between relevance and personalization which is also intent specific.

- On Netflix’s search query testing framework for pre-launch and post-launch regression analysis: in the pre-launch phase, we try to predict the types of failures the search system can have by creating a variety of test queries, including exact matches, prefix matching, transliteration, and misspelling. Our query testing framework is a library that allows us to test a dataset of queries against a search engine. The focus is on handling tokens specific to different languages (word delimiters, special characters, morphemes, etc.).
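The interplay between search matches, search recommendations, and intent-specific relevance/personalization trade-offs can be sketched as a linear blend whose personalization weight depends on intent. The `Candidate` fields, the weights in `PERSONALIZATION_WEIGHT`, the affinity values, and the assumption that intent has already been classified are all hypothetical. When there are no matches (an out-of-catalog fetch), the result set degenerates to recommendations ranked by affinity, as the Search bullets describe.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Candidate:
    title: str
    lexical: float   # query-title text similarity in [0, 1]; 0.0 for pure recommendations
    affinity: float  # personalized member-title affinity in [0, 1]


# Hypothetical personalization weights: fetch leans on the query text,
# explore (e.g., genre queries) leans on the member's taste.
PERSONALIZATION_WEIGHT = {"fetch": 0.2, "explore": 0.7}


def rank_search_results(matches: List[Candidate], recs: List[Candidate], intent: str) -> List[str]:
    """Rank the union of search matches and search recommendations for one query."""
    w = PERSONALIZATION_WEIGHT[intent]
    # Union, deduplicated by title; a keyword match overrides a duplicate recommendation.
    pool: Dict[str, Candidate] = {c.title: c for c in recs}
    pool.update({c.title: c for c in matches})

    def score(c: Candidate) -> float:
        return (1.0 - w) * c.lexical + w * c.affinity

    return [c.title for c in sorted(pool.values(), key=lambda c: (-score(c), c.title))]


if __name__ == "__main__":
    matches = [Candidate("Stranger Things", 0.95, 0.40), Candidate("The Stranger", 0.90, 0.10)]
    recs = [Candidate("Dark", 0.0, 0.95)]
    print(rank_search_results(matches, recs, "fetch"))    # query text dominates
    print(rank_search_results(matches, recs, "explore"))  # taste can lift a non-match
    print(rank_search_results([], recs, "fetch"))         # out-of-catalog: recs only
```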
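A query testing library of the kind described in the last Search bullet might look like the harness below: each case pairs a query with the title that should be found and a failure category, so pre-launch and post-launch runs can be diffed by category. The `QueryCase` fields, `max_rank`, and the toy prefix-matching engine are hypothetical stand-ins, not Netflix’s framework.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A search engine under test: query -> ranked list of titles.
SearchFn = Callable[[str], List[str]]


@dataclass
class QueryCase:
    query: str
    expected_title: str
    category: str      # e.g., "exact", "prefix", "transliteration", "misspelling"
    max_rank: int = 5  # expected title must appear within the top `max_rank` results


def run_query_tests(search: SearchFn, cases: List[QueryCase]) -> Dict[str, List[str]]:
    """Return failing queries grouped by category, for pre/post-launch comparison."""
    failures: Dict[str, List[str]] = {}
    for case in cases:
        top = search(case.query)[: case.max_rank]
        if case.expected_title not in top:
            failures.setdefault(case.category, []).append(case.query)
    return failures


if __name__ == "__main__":
    catalog = ["Narcos", "Narcos: Mexico", "Squid Game"]

    def toy_search(query: str) -> List[str]:
        # Hypothetical engine: case-insensitive prefix match, no spelling or script handling.
        q = query.casefold()
        return [t for t in catalog if t.casefold().startswith(q)]

    cases = [
        QueryCase("Narcos", "Narcos", "exact"),
        QueryCase("narc", "Narcos", "prefix"),
        QueryCase("narcoss", "Narcos", "misspelling"),
        QueryCase("ojingeo geim", "Squid Game", "transliteration"),  # romanized Korean title
    ]
    print(run_query_tests(toy_search, cases))
    # {'misspelling': ['narcoss'], 'transliteration': ['ojingeo geim']}
```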

Content Decision Making

- Netflix creates content at an unprecedented scale. Across movies and series, it releases thousands of hours of content spanning hundreds of titles each year under “Netflix Originals”. But creating something great is expensive! Each project competes for budget and talented people.

- The big question? Greenlight or axe the project? Choosing wrong could mean missing out on the next “Squid Game” or “Stranger Things”. That’s a huge bummer not only for the producers but also for us viewers!

- To make well-informed decisions, Netflix uses data and machine learning to predict a project’s success before it is filmed, which helps it avoid ditching the next big hit. Content decision making (CDM) is the question of what content Netflix should bring to the service.

- Content, marketing, and studio production executives make the key decisions that aspire to maximize each series’ or film’s potential to bring joy to our subscribers as it progresses from pitch-to-play on our service.

- We identified two ways to support content decision makers: surfacing similar titles and predicting audience size (“audience sizing”), drawing from various areas such as transfer learning, embedding representations, natural language processing, and supervised learning.

- Another crucial input for content decision makers is an estimate of how large the potential audience will be (and ideally, how that audience breaks down geographically). For example, knowing that a title will likely drive a primary audience in Spain along with sizable audiences in Mexico, Brazil, and Argentina would aid in deciding how best to promote it and what localized assets (subtitles, dubbings) to create ahead of time.

- By offering multiple views into how a given title is situated within the broader content universe, these similarity maps offer a valuable tool for ideation and exploration for our creative decision makers.

- Machine learning goes beyond the obvious: it analyzes data to discover non-obvious patterns, which are then used to answer key questions [3] and inform the decision about a potential project:

- Similar Movies and Shows: What are similar movies or series to the candidate project? Is this the next Stranger Things or a forgotten B-movie?

- Regional Appeal: Predicting audience sizes across demographics and geographic locations. Will teens in Tokyo love it as much as families in France?

- This helps Netflix avoid duds and greenlight shows you’ll love.
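The two CDM inputs above, similar titles and audience sizing, can be connected in a simple sketch: find the nearest comparable titles by embedding similarity, then estimate a new title’s per-region audience as a similarity-weighted average of theirs (k-nearest-neighbor regression). The embeddings, audience figures, region codes, and helper names are hypothetical; the notes mention transfer learning, embeddings, NLP, and supervised learning without specifying this method.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similar_titles(pitch: Vector, library: Dict[str, Vector], k: int) -> List[Tuple[str, float]]:
    """Top-k existing titles by cosine similarity to the pitch embedding."""
    scored = [(title, cosine(pitch, emb)) for title, emb in library.items()]
    scored.sort(key=lambda pair: (-pair[1], pair[0]))
    return scored[:k]


def estimate_audience(
    pitch: Vector,
    library: Dict[str, Vector],
    audiences: Dict[str, Dict[str, float]],
    k: int = 2,
) -> Dict[str, float]:
    """Per-region audience as a similarity-weighted average over the k nearest comparables.

    A region missing from a comparable's audience counts as zero for that comparable.
    """
    neighbors = [(t, s) for t, s in similar_titles(pitch, library, k) if s > 0]
    total = sum(s for _, s in neighbors)
    estimate: Dict[str, float] = {}
    for title, sim in neighbors:
        for region, size in audiences[title].items():
            estimate[region] = estimate.get(region, 0.0) + size * sim / total
    return estimate


if __name__ == "__main__":
    # Hypothetical embeddings (e.g., derived from synopses) and audiences in millions.
    library = {"sci_fi_a": [1.0, 0.0], "sci_fi_b": [0.8, 0.6], "cooking_show": [0.0, 1.0]}
    audiences = {
        "sci_fi_a": {"ES": 4.0, "MX": 2.0},
        "sci_fi_b": {"ES": 2.0, "BR": 1.0},
        "cooking_show": {"US": 5.0},
    }
    pitch = [1.0, 0.1]
    print(similar_titles(pitch, library, k=2))
    print(estimate_audience(pitch, library, audiences))
```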

Media ML

- ML in Media use-cases:

- Match Cutting: Finding Cuts with Smooth Visual Transitions Using Machine Learning

- Discovering Creative Insights in Promotional Artwork

- Video Understanding

- Detecting Scene Changes in Audiovisual Content

- AVA Discovery View: Surfacing Authentic Moments

- Building In-Video Search

- Detecting Speech and Music in Audio Content

Evidence Innovation

- Gone in 90 seconds: Broadly, we know that if you don’t capture a member’s attention within 90 seconds, that member will likely lose interest and move onto another activity. Such failed sessions could at times be because we did not show the right content or because we did show the right content but did not provide sufficient evidence as to why our member should watch it. How can we make it easy for our members to evaluate if a piece of content is of interest to them quickly?

- Evidence includes everything we use to communicate to members what a movie, show, or game is and why it might be for them: critical for the discovery experience.

- Evidence is the information we present about movies, shows, and games - in the form of images, videos, and text - that help a member understand what a movie, show, or game is and why Netflix is recommending it. In success, evidence reflects a deep understanding of our titles and members’ taste.

- Leveraging available data, you will craft and communicate a strategic roadmap for the evidence product area and see to its execution leading cross-functional teams of the industry’s best data scientists, consumer insights researchers, designers, machine learning and software engineers. As a high-leverage thought leader, you will have the ability to shape our thinking on how to evolve our product, and have a massive impact on how members enjoy our service.

- Overall, putting these aspects together has helped us significantly reduce issues, increased trust with our stakeholders, and allowed us to focus on innovation.

- Excellent written communication skills and ability to present technical content to non-technical audiences.

- Ability to partner with different functions to ensure that your solutions drive real business impact. Strategic thinking and ability to incorporate larger business context into algorithm and product development.

- You combine a technical background with a strong product sense and excellent communication skills to define, explain and execute your vision. You naturally gravitate towards experimentation as a way to validate your hypotheses while staying cautiously optimistic, with healthy skepticism, when interpreting experimental results. In order to be successful in this position, you need to be able to work with world-class engineers, have the statistical acumen to collaborate with top-notch data scientists, the design sense to partner with a stellar experience design team, and the business sense to drive product goals and strategies. Demonstrated ability to build successful consumer-facing applications and algorithms and a strong feel for the entertainment business are big pluses.

What do you think of feedback?

- 4As

- At Netflix, it is tantamount to being disloyal to the company if you fail to speak up when you disagree with a colleague or have feedback that could be helpful.

- In the book “No Rules Rules” by Reed Hastings and Erin Meyer, the 4As of feedback serve as a guideline for giving and receiving constructive criticism effectively within a culture that emphasizes candor. Here’s a breakdown:

Aim to Assist

- Purpose: Feedback should always be given with the intent to help the recipient improve, not to vent frustrations or assert dominance.

- How: Consider whether your feedback will genuinely benefit the person and improve the situation. Frame it as an act of support.

Actionable

- Purpose: Feedback should be specific and include clear suggestions or examples so the recipient knows how to address the issue.

- How: Avoid vague statements like “Do better.” Instead, provide details, e.g., “You could improve this report by organizing the data into clear sections.”

Appreciate

- Purpose: When receiving feedback, focus on the value it brings, even if it stings at first. Assume positive intent and be grateful for the opportunity to improve.

- How: Instead of becoming defensive, thank the person for taking the time to share their perspective and insights.

Accept or Discard

- Purpose: The recipient of feedback has the autonomy to decide what to do with it. Not all feedback is equally relevant or accurate.

- How: Reflect on the feedback and determine if it aligns with your goals or values. You can choose to act on it or respectfully set it aside.

- These principles are part of Netflix’s culture of radical candor and are designed to foster openness and growth while minimizing the potential for feedback to feel personal or unhelpful.

- From No Rules Rules:

- Say what you really think with positive intent.

- Openly voice opinions and feedback instead of whispering behind one another’s backs, reducing backstabbing and politics, and enabling faster decision-making.

- Coined the phrase “Only say about someone what you will say to their face.”

- Frequent feedback encourages learning and enhances workplace effectiveness.

- High Performance + Selfless Candor = Extremely High Performance.

- At Netflix, failing to speak up when you disagree or have helpful feedback is seen as disloyal to the company.

- Netflix promotes both candid and frequent feedback, even if it risks being hurtful.

- Receiving bad news about your work can trigger feelings of self-doubt, frustration, and vulnerability, as the brain responds to negative feedback with fight-or-flight reactions.

- A feedback loop is one of the most effective tools for improving performance, reducing misunderstandings, fostering co-accountability, and minimizing the need for hierarchy and rules.

- To build a culture of candor, bosses must give copious feedback and also encourage employees to provide candid feedback to them.

- Encouraging honest feedback can be facilitated by including it as an agenda item in meetings.

- Netflix dedicates significant time to teaching employees the right and wrong ways to give feedback.

- 4A Feedback Guidelines:

- Giving Feedback:

- Aim to Assist: Feedback must have positive intent, clearly explaining how a specific behavior change benefits the individual or company.

- Actionable: Feedback must focus on what the recipient can do differently.

- Receiving Feedback:

- Appreciate: Show appreciation for the feedback by listening carefully, considering it with an open mind, and avoiding defensiveness or anger.

- Accept or Discard: You must listen and consider all feedback but are not required to act on it. Always respond with sincere thanks.

- Giving Feedback:

- Feedback can be provided anywhere and anytime, including in private behind closed doors.

- A culture of candor requires consideration of how feedback impacts others and adherence to the 4A guidelines.

- There is one Netflix guideline that, if practiced religiously, would force everyone to be either radically candid or radically quiet: “Only say about someone what you will say to their face.”

- Netflix established regular mechanisms so that critical feedback is given at the right time.

- Reed came up with live 360 feedback, a form of speed feedback: each pair gave one another feedback using the “Start, Stop, Continue” method. Once all the members were covered, the group discussed what they had learned during the feedback.

The Keeper Test / High Talent Density

- Netflix did not want people to see their jobs as a lifetime arrangement. A job is something you do for that magical period of time when you are the best person for the job and that job is the best position for you.

- Once you stop learning or stop excelling, that is the moment to pass that spot on to someone else who is better suited for it and to move on to a better role for you.

- They found a professional sports team to be a good metaphor for high talent density, since athletes:

- Demand/expect excellence – making sure every position is filled by the best person at any given time

- Train to win – expect to receive candid and continuous feedback about how to up their game from the coach and from one another

- Know that effort isn’t enough: if they put in a B performance despite an A for effort, they will be thanked and swapped for another player.

Leading with Context

- The benefit is that the person builds the decision-making muscle and makes better independent decisions.

- However, Leading with Context requires that you have a high talent density.

- A great example differentiating leading with control from leading with context is how you treat your teenage son going out to party on Saturday nights: monitor him every half hour until he comes home, or explain the dangers of drinking and driving and, once he understands, let him go without any process or oversight.

- A second key question to consider when choosing between leading with context and leading with control is whether the goal is error prevention or innovation. If the focus is on eliminating mistakes, control is best. If the focus is on innovation, it is best to lead with context, encouraging original thinking rather than telling employees what to do.

- A third criterion to consider is whether the system is loosely or tightly coupled. In a tightly coupled system, the various components are intricately intertwined, and making changes to one component may impact the entire system. In a loosely coupled system, there are few interdependencies between the component parts, making the entire system flexible. Leading with context works best in a loosely coupled system.

- Maintaining an unusually high level of transparency within a company can drive a more informed and engaged workforce.

- Netflix shares sensitive information with employees, including financial data and strategies, underscoring its trust in them.

- When employees are given more information, they can make better, more informed decisions that align with the company’s overarching goals.

- When employees have a broader understanding of the company’s performance and goals, they feel more empowered and invested in its success. This empowerment increases their sense of accountability.

- Sharing information is a testament to the trust Netflix places in its employees, and in turn, this openness fosters a deeper trust between the company and its workforce.

- Many employees will respond to their new freedom by spending less than they would in a system with rules. When you tell people you trust them, they will show you how trustworthy they are.

- As companies grow, bureaucracy often increases. Netflix’s approach ensures that even as it scales, it remains nimble and avoids becoming bogged down by excessive processes.

- Removing layers of approvals accelerates decision-making, allowing the company to respond quickly to challenges and opportunities. The speed and agility gained allow for better flexibility.

- Instead of imposing control, leaders provide employees with the context they need to make informed decisions that align with the company’s goals.

- While this approach can lead to occasional mistakes, Netflix believes that the benefits of faster decision-making and employee empowerment outweigh the drawbacks.

- When errors occur, they’re viewed as learning opportunities. The focus is on understanding what went wrong and how to prevent it in the future, rather than placing blame.


- With the necessary context and freedom, employees can be more innovative, agile, and proactive, leading to better outcomes for the company as a whole.

- Instead of micromanaging, leaders provide their teams with the necessary context to make informed decisions on their own.

- When you have the freedom to make decisions, it leads to ownership and greater investment in results.

- As a team or unit grows, there’s often a tendency to implement more rules. By leading with context, you can avoid this trap, ensuring that the team remains agile.

- By providing clear context, leaders set expectations for high performance and empower employees to meet these standards without being bogged down by excessive rules.

- Leaders are encouraged to be transparent about their decisions, ensuring that their teams understand the ‘why’ behind strategies and actions.

- When employees understand the broader context, they can anticipate needs and challenges, becoming proactive rather than just reactive.

- We believe that our culture is key to our success and so we want to ensure that anyone applying for a job here knows what motivates Netflix — and all employees are working from a shared understanding of what we value most.

- Our emphasis on individual autonomy has created a very successful business. This is because in our industry, the biggest threats are a lack of creativity and innovation. And we’ve found that giving people the freedom to use their judgment is the best way to succeed long term.

Loosely coupled but tightly aligned

- Reed Hastings and Erin Meyer discuss the idea of being “loosely coupled but tightly aligned” in “No Rules Rules: Netflix and the Culture of Reinvention.” This principle plays a significant role in Netflix’s organizational philosophy, emphasizing how autonomy and alignment coexist in its corporate culture.

- Here’s a breakdown of the concept as discussed in the book:

- Loosely Coupled: Netflix encourages autonomy at all levels of the organization. Teams and individuals are given significant freedom to make decisions, innovate, and act without needing layers of approval or micromanagement. This reduces bottlenecks, enables faster decision-making, and fosters creativity.

- Tightly Aligned: Despite the high level of autonomy, everyone at Netflix is expected to align around the company’s overarching goals and strategy. This ensures that while teams operate independently, their work supports the company’s shared objectives. Alignment is achieved through clear communication, transparency, and a deep understanding of the organization’s priorities.

- Why It’s Important: The balance of autonomy (looseness) with alignment (tightness) prevents chaos while avoiding the stifling effects of bureaucracy. Employees are trusted to do what they think is best while being mindful of how their actions contribute to the bigger picture.

- Hastings uses the example of a sports team to explain the concept: each player (team/department) focuses on their role but understands the game plan and works toward a common goal.

- This principle underpins Netflix’s broader cultural framework, which emphasizes freedom, responsibility, and innovation.

Manufacturing (symphonic orchestras with synchronicity + perfect coordination) vs. Creative Economy (freedom and responsibility to ensure innovation)

- Netflix believes that when you lead or manage a company, you have a clear choice: either work to control the movements of your employees through rules and process, or implement a culture of freedom and responsibility, choosing speed and flexibility and offering employees more freedom.

- It is important to differentiate the different ways of working – in a manufacturing environment, you are trying to eliminate variation and most management approaches have been designed with this in mind. So, companies operated as symphonic orchestras with synchronicity, and perfect coordination as a goal.

- If you are leading an emergency room, testing airplanes, managing coal mines, or delivering just-in-time medication to senior citizens, rules are the way to go. However, for those operating in the creative economy, where innovation, speed, and flexibility are the keys to success and where companies operate closer to the edge of chaos, the symphonic orchestra may not be the right type of musical score. It is more like jazz, and when it all comes together, the music is beautiful.

- The insights into building a cohesive organizational culture ensure smoother and more effective partnerships. Empowering your team while maintaining accountability as well as decentralizing decisions and trusting team members result in a loosely coupled but tightly aligned team which raises the bar on excellence.

- Cutting down on bureaucratic approvals ensures faster decision-making and reduces administrative burdens, leading to greater efficiency.

- With great freedom comes great responsibility. Team members are expected to be judicious and prudent with their expenses and time off.

No decision-making approvals needed

- People thrive in jobs that give them control over their own decisions. The more people are given control over their own projects, the more ownership they feel, and the more motivated they are to do their life’s best work.

- If your employees are excellent and you give them the freedom to implement the bright ideas they believe in, innovation will happen.

- Netflix believes that since it operates in a creative market, its biggest long-term threat is not making mistakes; it is a lack of innovation.

- The Netflix Innovation Cycle talks of four steps:

- “Farm for dissent” or “socialize” the idea

- For a big idea, test it out

- As the informed captain, make your bet.

- If it succeeds, celebrate. If it fails, sunshine it

- It is disloyal to Netflix when you disagree with an idea and do not express the disagreement. By withholding your opinion, you are implicitly choosing not to help the company.

- Farming for dissent is about actively seeking out different perspectives before making any major decision. Dissent can be gathered through comments on a document or by rating the idea on a scale from -10 to +10 in a spreadsheet.

- At Netflix, getting it perfect does not matter; what matters is moving quickly and learning from what you are doing.

- Netflix believes in celebrating an idea if it blooms and sunshining it if it fails. For projects or ideas that don’t succeed, they have a three-part response:

- Ask what learning came from the project – be candid about your failed bets and talk about the learning

- Don’t make a big deal about it – When a bet fails, the manager must be careful to express interest in the takeaways, but no condemnation – nobody will scream, and nobody will lose his job

- Sunshine the failures/mistakes - They believe that when you sunshine your failed bets, everyone wins – it is about learning, and taking responsibility for your actions.

- The bigger the mistake, the more you lean into the sunshine. Talk openly about it – you will be forgiven. But if you brush your mistakes under the rug and keep making mistakes, the end result will be much more serious.

Dream team of stunning colleagues

- Assemble a dream team of stunning colleagues

Going Global

- Expanding this unique corporate culture to the global stage was not without difficulties.

- As Netflix expanded globally, it faced the challenge of applying its distinctive culture across various countries and regions with different norms and practices.

- Allow local teams to make decisions tailored to their regions, ensuring that content and strategies resonate with local audiences.

- Despite regional autonomy, there’s an emphasis on maintaining a unified culture. The core values of freedom and responsibility are consistent, even if applied differently in various locations.

- Expansion brings about challenges like understanding diverse cultural norms and working practices. See these as opportunities for growth and adaptation.

- Rely on seasoned employees, familiar with its culture, to act as ambassadors when entering new regions. They would help new teams integrate and understand the company’s values.

- Maintaining the culture of candid feedback globally involves respecting and understanding cultural differences in communication while ensuring the essence of open dialogue remains.

- By applying its culture globally, Netflix aims to harness innovation and creativity from all corners of the world, making it a truly global entertainment provider.

What harsh feedback have you received?

Things on the culture memo: You must read them carefully + your own opinions + your own examples.

- Innovate in the recommender space and strive to win more of our members’ “moments of truth”. Those decision points are, say, at 7:15 pm when a member wants to relax, enjoy a shared experience with friends and family, or is bored. The member could choose Netflix, or a multitude of other options.

Why do you think you are a good match for this group?

- Effective collaboration between an Engineering Manager (EM) and a Product Manager (PM) at a large company like Netflix, or similar organizations, hinges on clear communication, aligned goals, and a shared understanding of priorities. Here are key principles and practices for fostering such collaboration:

Establish Clear Roles and Responsibilities


- Product Manager’s Role:

- Owns the product vision, roadmap, and prioritization.

- Focuses on user needs, business goals, and defining “what” to build.

- Engineering Manager’s Role:

- Responsible for the technical execution, team development, and defining “how” to build.

- Ensures scalable, reliable, and efficient technical solutions.

- By defining boundaries, they can prevent overlaps and focus on complementary strengths.

Align on Shared Goals

- Both the EM and PM must work toward shared objectives, such as:

- Delivering value to customers.

- Achieving product and business outcomes.

- Ensuring long-term scalability and technical health.

- At Netflix, with its culture of high performance and ownership, this means regularly revisiting goals and ensuring alignment between product priorities and technical feasibility.

Regular and Transparent Communication

- Weekly Syncs: Hold regular one-on-one meetings to discuss priorities, challenges, and updates.

- Real-Time Problem Solving: Stay in close contact via Slack, email, or quick in-person chats to resolve issues promptly.

- Document Collaboration: Use shared documentation tools (e.g., Confluence, Notion) to co-create and track product requirements and technical designs.

Balance Short-Term and Long-Term Priorities

- PMs often prioritize delivering features to meet immediate market needs.

- EMs need to advocate for technical investments, such as refactoring or infrastructure improvements, to avoid long-term debt.

- Collaborate to create a balance, using frameworks like RICE (Reach, Impact, Confidence, Effort) for prioritization or agreeing on time-boxing for tech debt work.
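As a rough illustration of RICE, the common formulation scores each item as (Reach × Impact × Confidence) / Effort. The sketch below assumes that formulation; the `BacklogItem` class, the scales in the comments, and the example numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    """A hypothetical backlog entry scored with RICE."""
    name: str
    reach: float       # e.g., users affected per quarter
    impact: float      # e.g., 0.25 (minimal) to 3 (massive)
    confidence: float  # 0.0 to 1.0
    effort: float      # e.g., person-months; must be > 0

    def rice_score(self) -> float:
        # RICE = (Reach * Impact * Confidence) / Effort
        if self.effort <= 0:
            raise ValueError("effort must be positive")
        return self.reach * self.impact * self.confidence / self.effort


def prioritize(items: list[BacklogItem]) -> list[BacklogItem]:
    """Return items sorted from highest to lowest RICE score."""
    return sorted(items, key=lambda item: item.rice_score(), reverse=True)


if __name__ == "__main__":
    backlog = [
        BacklogItem("New row type", reach=5000, impact=2, confidence=0.8, effort=4),
        BacklogItem("Refactor ranking service", reach=20000, impact=0.5, confidence=0.9, effort=3),
    ]
    for item in prioritize(backlog):
        print(f"{item.name}: {item.rice_score():.0f}")
```

With these made-up numbers the refactor scores 3000 and the feature 2000, which shows how a shared scoring rule lets tech-debt work compete with features on equal terms.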

Use Data to Drive Decisions

- Netflix values a data-driven culture. Both PMs and EMs should:

- Leverage A/B testing to evaluate the impact of features.

- Analyze user feedback, operational metrics, and system performance data.

- Align on KPIs (Key Performance Indicators) to measure success.
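To make "leverage A/B testing" concrete, here is a minimal sketch of a two-sided two-proportion z-test on a binary metric (e.g., whether a session led to a play). This is a generic textbook test, not Netflix's experimentation platform; the function name and the counts are hypothetical.

```python
import math


def two_proportion_ztest(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference in rates between cells A and B.

    Returns (z, p_value). Uses the pooled proportion under the null hypothesis
    that both cells share the same underlying rate.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("sample sizes must be positive")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; metric has no variance")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    z, p = two_proportion_ztest(conv_a=4_800, n_a=10_000, conv_b=5_000, n_b=10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value only says the difference is unlikely to be noise; whether it justifies shipping still depends on effect size and the agreed KPIs.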

Build Trust and Empathy

- EMs should understand user-centric perspectives brought by the PM.

- PMs should appreciate the complexities of engineering challenges.

- Foster mutual respect by listening actively and considering each other’s constraints and motivations.

Collaborate on Roadmaps and Timelines

- Joint Planning: Collaborate on quarterly or sprint roadmaps to ensure priorities and technical feasibility are aligned.

- Trade-offs: Discuss trade-offs openly. For example, if a feature has a tight deadline, negotiate scope reduction rather than overburdening the team.

- Resource Allocation: Work together to balance feature development with engineering capacity.

Escalate and Resolve Conflicts Promptly

- Disagreements are natural. Address them by:

- Seeking data and objective criteria.

- Involving stakeholders or leadership when necessary.

- Prioritizing customer and business value over personal preferences.

Leverage Company Culture

- Netflix’s “Freedom and Responsibility” culture encourages ownership and transparency. Both EMs and PMs should:

- Be direct and candid in feedback.

- Take ownership of outcomes rather than just deliverables.

- Empower their teams to contribute ideas and solutions.

Continuous Learning and Retrospectives

- Conduct regular retrospectives to assess what’s working and what isn’t in their collaboration.

- Adapt based on feedback from the team and each other.

Conclusion

- By adhering to these principles, an Engineering Manager and a Product Manager can forge a strong partnership that aligns technical execution with product strategy, driving meaningful results for both the company and its users.

HM

Expectations from your next job?

- Best Work of My Life (Netflix style: thanks to its unique culture)

- Innovate and solve challenging problems that are herculean not just technically but also non-technically (highly cross-functional, etc.)

- Bring people joy and satisfaction

What aspects of the Culture Memo do you agree with? / What aspects of the Culture Memo are your favorites and why?

- Keeper’s Test: Stunning colleagues

- People over Process

- Context not Control

- Drum up the wood quote

- FNR (x)

What aspects of the Culture Memo do you disagree with?

What was the most challenging project you have worked on?


Increase experimentation velocity via configurable, modular flows. Amazon Music personalization, North - South Carousels

- Flows: allow swapping out models easily within the config file

- Load data from S3 via DataSource

- SageMaker inference toolkit

- Reduce ideation-to-productionization time

- Repetitive manual effort due to a complex, fragmented code process

- One of the most challenging projects I’ve had to work on is creating a unified infrastructure for Amazon Music.

- S: Across the Amazon entertainment suite (Music, Prime Video, Audible, Wondery podcasts), we cross-collaborate often. We run many cross-functional, item-to-item recommendation systems that help both products.

- In this case, we wanted to collaborate with Prime Video. Taylor Swift is a big artist on our platform, and she recently made her tour into a movie; whenever a user pauses it, they should get a link back to Music to listen to that song or playlist. The same applies to many other artists, as well as to original shows that have playlists on our app.

- T: Our task was to collaborate. In the past, getting from research to production was a fairly long process that took months.

- Every single team had its own approach to going from research to prod, with its own pipelines and tooling platform for common tasks.

- Lack of standardized metrics and analysis tools: calculating position

- Lack of established component APIs: each model had its own APIs, so switching out a model required a lot of work to adapt it to the existing interface.

- Feature engineering inside the model made the model non-transferable.

- Metrics: not measuring

- Research used Python tooling, while prod used Scala/Java code (via ONNX). Prod also needed code check-ins, pipeline setup, periodic flows, and monitoring steps. Was the model in research the same as in prod, and were we measuring it the same way?

- Two different pipelines, environment variables in different files, configs scattered across DynamoDB, different clusters, and EMR jobs made it hard to test that a change wasn’t breaking anything. Onboarding took too long, with too much tooling and too many new processes.

- Bottom line was, we were not able to get from prototype to production with high velocity which was stifling our need for increased experimentation.

- A: This was our norm: we would make snowflake (unique but repetitive) fixes for each collaboration. We would have different environment variables, clusters, and components that we had to rebuild just for that project. Onboarding took a long time, with too much tooling. Beyond this, we also needed to configure regular jobs, retries, and monitoring, and to set up cost analysis and data drift checks.

- Our original methodology included creating a new pipeline for each project, so, as you can imagine, we were maintaining quite a few pipelines in quite a few environments.

- This was inefficient. I wanted to create a solution that would be less problem-specific and easier to reuse; I wanted to change the way we do things. This overhead was good neither for our customers, since it stifled experimentation, nor for our data scientists, who were stuck working on repetitive, non-creative tasks. That’s not why we hired them.

- As part of this collaboration, I wanted to fix this bottleneck, of course, together with our cross-team collaborators and team members.

- Researched a few off-the-shelf options (e.g., Airflow, Metaflow) as well as custom solutions.

- R: Our eventual goal is to have a unified platform that the entire entertainment suite at Amazon can leverage

- R:
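The "Flows" idea above (swapping models through a config file behind a shared component API) can be sketched as a small model registry. Everything here is hypothetical: the `Recommender` protocol, the registry, the model names, and the config keys are illustrations, not the actual Amazon Music implementation.

```python
import json
from typing import Callable, Protocol


class Recommender(Protocol):
    """Shared component API every model must implement (hypothetical)."""

    def recommend(self, user_id: str, k: int) -> list[str]: ...


# Registry mapping config-visible names to model factories.
_REGISTRY: dict[str, Callable[..., Recommender]] = {}


def register(name: str):
    """Decorator that registers a model factory under a config-visible name."""
    def wrap(factory):
        _REGISTRY[name] = factory
        return factory
    return wrap


@register("popularity")
class PopularityModel:
    def __init__(self, items: list[str]):
        self.items = items

    def recommend(self, user_id: str, k: int) -> list[str]:
        # Same list for everyone: a typical cold-start baseline.
        return self.items[:k]


@register("item_to_item")
class ItemToItemModel:
    def __init__(self, neighbors: dict[str, list[str]], history: dict[str, str]):
        self.neighbors = neighbors  # item -> similar items
        self.history = history      # user -> last item consumed

    def recommend(self, user_id: str, k: int) -> list[str]:
        last = self.history.get(user_id)
        return self.neighbors.get(last, [])[:k]


def build_from_config(config_text: str) -> Recommender:
    """Instantiate whichever model the JSON config names, with its params."""
    config = json.loads(config_text)
    factory = _REGISTRY[config["model"]]
    return factory(**config.get("params", {}))


if __name__ == "__main__":
    # Swapping models means editing the config, not the pipeline code.
    cfg = '{"model": "popularity", "params": {"items": ["a", "b", "c"]}}'
    print(build_from_config(cfg).recommend("u1", k=2))
```

Because every model exposes the same `recommend` signature and keeps its own feature logic behind it, the surrounding pipeline (data loading, metrics, monitoring) can stay identical across experiments.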

When did you question the status quo?

- Daily update meetings / project

- The issue is that when you have a daily meeting, it’s hard to come in with a proper agenda and make sure everyone’s time is respected. Projects usually move only nominally from one day to the next.

- Work with Program Managers to create Excel sheets categorizing tasks, along with Jira tickets, and sync up on a less frequent cadence. Every meeting should have a point and an agenda.

How do you communicate with stakeholders?

- Tailor the message to its intended audience.

Unique Culture

- Not every employee is worth keeping.


What Netflix knows about pay that the rest of us are too scared to implement:

- Netflix has a radical approach to paying people.

- They eliminated performance bonuses and replaced them with this 3-part comp plan:

- Pay top of the market on salaries.

- Offer equity for long-term incentives.

- Use the “Keeper Test” to quickly exit underperformers.

- Element 1: Top-of-Market Salary

- Netflix Co-founder Reed Hastings shares: “People are most creative when they have a big enough salary to remove some of the stress from home. But people are less creative when they don’t know whether or not they’ll get paid extra. Big salaries, not merit bonuses, are good for innovation.”

- Element 2: Equity

- Netflix lets employees choose to include equity (and how much) in their compensation package with no vesting period.

- So the employee can choose to cash out at any point. From day one they are owners. The message - think long term, like an owner.

- Element 3: The Keeper’s Test

- These two elements ONLY work when paired with Netflix’s infamous Keeper Test:

- Managers have to always ask: Which of my people, if they told me they were leaving for a similar job at a peer company, would I fight hard to keep at Netflix?

- Anyone else gets a generous severance now so they can open that role up for a star.

- Uniquely, all Netflix employees know that if they are not performing, the culture expects them to be exited from the company.

- Thus, while they pay top of market, they are also very quick to let someone go. They are acutely aware that their culture is not for everyone, and it’s very much “you didn’t work out, no hard feelings, here is your severance package.”

- Takeaways:

- Many business owners and CEOs like the IDEA of the keeper test but rarely ask themselves: how often am I proactively exiting people I wouldn’t fight to keep?

- The reality is that most companies don’t eliminate mediocre employees.

- The Keeper Test, fully lived out, is the CRITICAL element that makes all this work.

- It’s an incentive protecting against poor performance that is an ESSENTIAL complement to the elimination of bonuses.

- This ultimately saves the company and the team a tremendous amount of heartache and money.

Netflix Rows

- From 10,000 rows, there are typically up to 40 rows shown on each homepage (depending on the capabilities of the device), and up to 75 videos per row.

- Thematically coherent rows:

- Top 10 TV Shows/Movies

- Continue Watching

- Only on Netflix

- Watch It Again

- My List

- New on Netflix

- Genres: Action / Comedy / Sci-Fi / Horror / Documentaries / Dramas

- Games

- Gems for you / Top Picks for You

- Watch In One Weekend

- Feel-Good Romantic Movies

- 30 Minute Laughs

- Because You Watched/Liked

- Watch Together for Older Kids

The most strongly recommended titles start on the left of each row and go right – unless you have selected Arabic or Hebrew as your language in our systems, in which case these will go right to left.
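The left-to-right vs. right-to-left rule can be sketched as a final layout step: rank titles once, then mirror the on-screen order for RTL languages. The `RTL_LANGUAGES` set and the `layout_row` function are assumptions for illustration, not Netflix code.

```python
# Hypothetical set of UI languages rendered right to left (ISO 639-1 codes).
RTL_LANGUAGES = {"ar", "he"}


def layout_row(ranked_titles: list[str], language: str) -> list[str]:
    """Return titles in on-screen order, from the leftmost slot to the rightmost.

    ranked_titles is ordered from most to least strongly recommended.
    For LTR languages the strongest title sits on the far left; for RTL
    languages the row is mirrored so the strongest title sits on the far right.
    """
    base_language = language.split("-")[0].lower()  # "he-IL" -> "he"
    if base_language in RTL_LANGUAGES:
        return list(reversed(ranked_titles))
    return list(ranked_titles)
```

Keeping the ranking itself language-agnostic and only mirroring at layout time means the same model output serves both directions.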

- 10-40 rows per personalized load.

- Top-left: most likely to see; bottom-right: least likely to see

- Row lifecycle:

- Select candidates

- Select evidence (personalized/ad-hoc genres / based on tag combinations)

- Rank

- Filter (titles previously watched, de-dup)

- Format UI based on device

- Choose
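The row lifecycle above can be sketched as a single pass that takes already-selected candidate rows (each with its evidence) and ranks, filters, formats, and chooses. This is a simplified illustration under stated assumptions: `CandidateRow`, `build_page`, the "best title score" row ranking, the `min_titles` threshold, and the phone limits in `DEVICE_LIMITS` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical per-device limits: (max rows per page, max titles per row).
# The TV values echo the "up to 40 rows, up to 75 videos per row" note; the phone values are made up.
DEVICE_LIMITS = {"tv": (40, 75), "phone": (10, 20)}


@dataclass
class CandidateRow:
    evidence: str             # why the row exists, e.g. "Because You Watched X"
    scores: dict[str, float]  # title -> predicted relevance for this member


def build_page(candidates: list[CandidateRow], watched: set[str], device: str,
               min_titles: int = 3) -> list[tuple[str, list[str]]]:
    """Turn pre-selected candidate rows into a page of (evidence, titles) pairs."""
    max_rows, max_titles = DEVICE_LIMITS[device]
    # Rank rows by their strongest title's score (a deliberately simple proxy).
    ordered = sorted(candidates, key=lambda r: max(r.scores.values(), default=0.0),
                     reverse=True)
    page: list[tuple[str, list[str]]] = []
    shown: set[str] = set()
    for row in ordered:
        # Rank titles within the row, then filter watched titles and de-dup across rows.
        ranked = sorted(row.scores, key=row.scores.get, reverse=True)
        titles = [t for t in ranked if t not in watched and t not in shown]
        # Format for the device: cap the row length.
        titles = titles[:max_titles]
        # Choose: skip rows that filtering left too thin to be worth showing.
        if len(titles) < min_titles:
            continue
        page.append((row.evidence, titles))
        shown.update(titles)
        if len(page) == max_rows:
            break
    return page
```

The greedy de-dup means higher-ranked rows claim shared titles first; a page-level optimizer could instead trade titles between rows.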

- Row Features:

- Quality of items

- Features of items

- Quality of evidence

- User-row interactions

- Item/row metadata

- Recency

- Item-row affinity

- Row length

- Position on page

- Context

- Title

- Diversity

- Freshness

- Location (most important): (i) licensing: content is only licensed for certain regions (apart from Netflix originals); (ii) user preferences differ (e.g., in Japan, people watch more anime).

- Time: recommendations for the same user at 9 AM would include more children’s content, while evenings skew toward adult content (inferred signal: companion).

- Device: On the phone app, more binge-watching; not as much discovery. On the TV, more discovery and/or binge-watching.

- Language: Dutch speakers vs. French speakers in Belgium (even though the content is the same, since it is licensed at country resolution)

- 2D versions of ranking quality metrics:

- Example: Recall @ row-by-column
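One plausible reading of "Recall @ row-by-column" is a 2D recall: the share of a member's relevant titles (e.g., the ones they played) that appear in the top-left block of the page, within the first m rows and the first n columns. The sketch below assumes that definition; `recall_at_grid` is a hypothetical name.

```python
def recall_at_grid(page: list[list[str]], relevant: set[str], m: int, n: int) -> float:
    """Recall @ m-by-n: share of relevant titles visible in the top m rows, first n columns.

    page is a list of rows (top to bottom), each a list of titles (left to right).
    relevant is the set of titles the member actually engaged with.
    """
    if not relevant:
        return 0.0
    visible = {title for row in page[:m] for title in row[:n]}
    return len(visible & relevant) / len(relevant)


if __name__ == "__main__":
    page = [["a", "b", "c"], ["d", "e", "f"], ["g", "h", "i"]]
    print(recall_at_grid(page, {"a", "e", "i", "z"}, m=2, n=2))
```

Sweeping m and n gives a grid of values that reflects both how far down and how far right a member must look to find what they watch.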

- User Modes of Watching:

- Continuation

- Discovery

- Play from My List

- Rewatch

- Search

- Certain rows may be static/always be required:

- Examples: Continue Watching and My List

- Netflix is dipping into more types of content, like the live fight between Jake Paul and Mike Tyson, the NFL games coming later this year, and WWE’s Monday Night Raw.

- Page-Level Optimization:

- A more sophisticated approach involves a full-page scoring function that aims to optimize the entire layout (rows and videos) rather than ranking rows in isolation. The ranking function evaluates the quality of each row or page. It is driven by machine learning and incorporates:

- Content relevance: How well the videos in the row match the member’s preferences.

- Diversity: Ensuring variety in themes, genres, or other aspects across rows.

- Navigation modeling: Anticipating how users interact with the page (e.g., vertical scanning, visibility of content in the top-left corner).

- Evidence quality: How strong the contextual or behavioral evidence is for recommending a particular row.

- Page constraints: Considering device-specific limitations and avoiding duplicate content.

- This full-page optimization may use machine learning and learning-to-rank models trained on user interaction data.

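A greedy sketch of full-page construction, assuming a page score made of position-discounted row relevance (a DCG-style log discount standing in for the navigation model) plus a bonus per distinct genre for diversity. Netflix's actual page-level model is learned; the weights, `page_score`, and `greedy_page` here are hypothetical.

```python
import math


def position_discount(pos):
    # Hypothetical navigation model: rows lower on the page are less likely to be seen.
    return 1.0 / math.log2(pos + 2)


def page_score(rows, relevance, genre, diversity_weight):
    """Score a whole page: position-discounted relevance plus a bonus per distinct genre."""
    rel = sum(position_discount(i) * relevance[r] for i, r in enumerate(rows))
    distinct = len({genre[r] for r in rows})
    return rel + diversity_weight * distinct


def greedy_page(candidates, relevance, genre, max_rows, diversity_weight=0.3):
    """Greedily append the row that most increases the full-page score."""
    page, remaining = [], list(candidates)
    # Page constraint: a device-specific limit on the number of rows.
    while remaining and len(page) < max_rows:
        base = page_score(page, relevance, genre, diversity_weight)
        best = max(
            remaining,
            key=lambda r: page_score(page + [r], relevance, genre, diversity_weight) - base,
        )
        page.append(best)
        remaining.remove(best)  # each row appears at most once (no duplicates)
    return page
```

Because each row is scored by its marginal contribution to the whole page, a slightly less relevant row from a new genre can beat a second comedy row; rows are not ranked in isolation.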

Netflix Games

- Could build a multi-objective recommender system for games, with increasing play time as a key objective.

- Hades, Dead Cells, Into the Breach, Arcanium, Into the Dead 2, and TMNT are some of the best games on the service, alongside the GTA series. Hades is a massive win for Netflix.

- The games have no ads or in-app purchases.

- Much better than Apple Arcade.

- These are native mobile games (iOS and Android) that only require a Netflix subscription to access, very similar to Apple Arcade.

- A set of streamable games is available in select regions alongside the better-known downloadable games.

- Ideally, Netflix would stream these games to the TV or other devices and let players use their phone (or any Bluetooth controller) as the controller.

- Games features/characteristics:

- Category/Genre: Action, Adventure, Arcade, Card Game, Interactive Story, Puzzle, Sports, Simulation

- Maturity/Age/Parental Rating: 9+ or 12+ or 17+ (mild/realistic violence, horror/fear themes, suggestive themes, profanity, crude humor)

- Mode: Single Player, Local Multiplayer, Online Co-op

- Language(s)

- Requires Internet

- Game controller supported?

- Developer?

- Release year

- Platform (not all games are on all platforms)

- Depending on the device/surface, the available game titles change, and so do the modes (single player vs. two-player).
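Since games are a small catalog, a sketch of the multi-objective idea could apply hard constraints from the game features above (platform, maturity rating) and then rank the few eligible games by a weighted sum of objectives. Everything here is an assumption: `p_play` and `exp_minutes` stand in for model predictions, and the weights and the 120-minute cap are arbitrary.

```python
from dataclasses import dataclass


@dataclass
class Game:
    title: str
    platforms: set       # e.g. {"ios", "android", "tv"}
    min_age: int         # maturity rating, e.g. 9, 12, 17
    p_play: float        # hypothetical model output: probability the member starts the game
    exp_minutes: float   # hypothetical model output: expected play time if started


def rank_games(games, device, member_age, w_play=0.5, w_time=0.5, max_minutes=120.0):
    """Filter by hard constraints, then rank by a weighted sum of two objectives."""
    eligible = [g for g in games if device in g.platforms and member_age >= g.min_age]

    def score(g):
        # Expected play time = P(start) * minutes if started, capped and scaled to [0, 1].
        time_term = g.p_play * min(g.exp_minutes, max_minutes) / max_minutes
        return w_play * g.p_play + w_time * time_term

    return [g.title for g in sorted(eligible, key=score, reverse=True)]
```

Shifting weight toward `w_time` favors games with longer expected sessions, in line with the play-time objective.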

Feedback

- At the end of the game: “How was your experience playing [game title]?” and “What could we improve?” (options: Video quality; Audio quality; Delayed input; Gameplay quality; Other)

Netflix as a game publisher-developer hybrid

- Netflix is primarily a game publisher, not a developer, although it has taken steps toward developing its own games. Here’s the distinction and where Netflix fits:

Game Publisher

- A publisher funds, markets, and distributes games but doesn’t necessarily develop them in-house.

- Netflix’s Role as a Publisher:

- Netflix collaborates with external game developers to publish games that align with its brand and content.

- Examples: Netflix has worked with established studios to create games inspired by its popular franchises, like Stranger Things: 1984 and Stranger Things 3: The Game.

Game Developer

- A developer is responsible for creating and coding the games.

- Netflix’s Development Efforts:

- Netflix has acquired game studios, such as Night School Studio (known for Oxenfree) and Boss Fight Entertainment, signaling its intent to develop games in-house.

- These acquisitions suggest Netflix is transitioning into a developer-publisher hybrid, focusing on creating original games while still publishing games from third-party developers.

Strategy

- Netflix’s entry into gaming is part of its strategy to enhance user engagement and diversify its offerings beyond streaming movies and TV shows. Its game catalog is accessible via its app for subscribers, aligning its gaming efforts with its core subscription model rather than standalone sales.

Summary

- Currently, Netflix is primarily a game publisher but is moving toward becoming a hybrid developer-publisher as it builds in-house capabilities and develops original content in gaming.

Business Models: (i) Digital on-demand streaming service and (ii) DVD-by-mail rental service

- In 2007, the company introduced its digital on-demand streaming option alongside its DVD-by-mail rental service. The DVD-by-mail service shut down in September 2023.

Netflix Title Distribution

- As of July 2023, Netflix had 6,621 movies, series, and specials available in the US, not including over 60 video games. Of those titles, 3,657 were Netflix Originals, making up 55% of the US library.

- As of November 2024, Netflix has roughly 7,000-8,000 films and TV shows and about 100 mobile games available on its platform.

Memberships

- As of November 2024, Netflix has approximately 283M global paid memberships, and remains the largest premium video on-demand service in the world.

- Netflix accounts for 17 percent of all worldwide online video subscriptions.

Plans and Pricing

- Standard with ads: $6.99 / month

- Standard: $15.49 / month (extra member slots can be added for $7.99 each / month)

- Premium: $22.99 / month (extra member slots can be added for $7.99 each / month)

Netflix Culture Memo/Deck

Netflix Originals / Original Programming / Only on Netflix

- Stranger Things

- The Crown

- 3 Body Problem

- Emily in Paris

- Wednesday

- The company’s first true original show was award-winning House of Cards.

- Categories:

- Scripted series

- Unscripted series/Special

- Documentary film

- Kids series

- Foreign-language series

- Film

- The company actively pursues awards: Netflix’s original programming has received over 800 award nominations and 250 awards. The Crown holds 129 of those awards.

Diverse Audience

- Netflix is one of the world’s leading entertainment services, with over 200 million members in over 190 countries. Its library of TV shows and movies varies by country and changes periodically.

- Netflix is not available in:

- China

- Crimea

- North Korea

- Russia

- Syria

Netflix Ratings

- Netflix does NOT allow users to rate shows or movies using a star or numerical rating system. However, users can provide feedback through a thumbs up or thumbs down system:

- Thumbs Up: Indicates you liked the content, helping Netflix recommend similar shows or movies.

- Thumbs Down: Indicates you did not enjoy the content, so Netflix avoids recommending similar content.

- This simple system replaced the older star rating system in 2017 to streamline user feedback and enhance personalization.

- Current options (Double Thumbs Up was added in 2022): Thumbs Down/“Not for me”, Thumbs Up/“I like this”, Double Thumbs Up/“Love this!”

Netflix Deep-learning Toolboxes/Libraries

- Netflix deploys a large number of AWS EC2 instances that host its web services and applications. They collectively emit more than 1.5 million events per second during peak hours, or around 80 billion events per day. The events can be log messages, user activity records, system operational data, or any arbitrary data that Netflix’s systems need to collect for business, product, and operational analysis.

- Experimentation Platform: the service which makes it possible for every Netflix engineering team to implement their A/B tests with the support of a specialized engineering team

- ABlaze: the front end to the Experimentation Platform. It shows test allocations in real time across dimensions of interest, and its test schedule view helps test owners track down potentially conflicting tests.

- Ignite: Netflix’s internal A/B testing visualization and analysis tool, where test owners analyze metrics of interest and evaluate the results of a test.

- DeLorean is Netflix’s internal system that takes an experiment plan, travels back in time to collect all the necessary data from snapshots, and generates a dataset of features and labels for that point in the past to train machine learning models. A primary motivation is to share the same feature encoders between offline experiments and online scoring systems, so that the features generated for training never diverge from those computed online in production.

- To validate reliability, Netflix uses Chaos Monkey, which randomly terminates production instances to test resilience to failures, along with the rest of the Simian Army.

- The Hive metastore is a central repository that stores metadata about the tables, partitions, schemas, and data locations in a Hive data warehouse. It enables Hive to manage and query structured data efficiently by maintaining information about the structure and storage of the underlying datasets.

- Presto is an interactive query engine open-sourced by Facebook. Netflix adopted it because it could handle Netflix’s data scale and processing needs, had strong momentum, integrated well with the Hive metastore, and was easy to integrate with Netflix’s data warehouse on S3.

- Netflix uses a standardized schema for passing the Spark DataFrames of training features to machine learning algorithms, as well as computing predictions and metrics for trained models on the validation and test feature DataFrames.

- Meson is a general-purpose workflow orchestration and scheduling framework that Netflix built to manage ML pipelines executing workloads across heterogeneous systems. Meson offers a simple ‘for-loop’ construct that lets data scientists and researchers express parameter sweeps, running tens of thousands of Docker containers across the parameter values.

- Metaflow is an open-source machine learning infrastructure framework originally developed at Netflix. Since its inception, it has been designed to provide a human-friendly API for building data and ML (and today AI) applications and deploying them to production infrastructure frictionlessly. It covers modeling, deployment, versioning, orchestration, compute, and data.
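The DeLorean idea of point-in-time feature generation with a shared encoder can be illustrated with a small sketch. `SnapshotStore`, `encode`, and the genre-count features are hypothetical and not DeLorean's API; the point is that training rows use only snapshots taken at or before the label time, and both the offline and online paths call the same `encode()`.

```python
import bisect


class SnapshotStore:
    """Hypothetical store of timestamped member-state snapshots."""

    def __init__(self):
        self._times = {}   # member -> sorted snapshot timestamps
        self._states = {}  # member -> states aligned with self._times

    def put(self, member, ts, state):
        times = self._times.setdefault(member, [])
        states = self._states.setdefault(member, [])
        i = bisect.bisect_right(times, ts)
        times.insert(i, ts)
        states.insert(i, state)

    def as_of(self, member, ts):
        """Latest snapshot taken at or before ts, so no future data leaks in."""
        times = self._times.get(member, [])
        i = bisect.bisect_right(times, ts)
        return self._states[member][i - 1] if i else None


def encode(state, title):
    """The single feature encoder shared by offline training and online scoring."""
    genres = state["watched_genres"] if state else []
    return {
        "num_watched": len(genres),
        "same_genre_watched": sum(g == title["genre"] for g in genres),
    }


def build_training_set(store, labels):
    """'Travel back in time': encode each label's features as of its timestamp."""
    return [(encode(store.as_of(m, ts), title), y) for m, ts, title, y in labels]


def score_online(current_state, title, model):
    # Same encode() as offline, so training and serving features stay consistent.
    return model(encode(current_state, title))
```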

Netflix’s Long Term View/Investor Relations Memo

- Great, high-quality storytelling has universal appeal that transcends borders

- Netflix increasingly licenses and produces content all across the globe and Netflix members everywhere in the world can increasingly enjoy the same movies and TV series at the same time, free of legacy business models and outdated restrictions.

- With our global distribution, Netflix is well positioned to bring engaging stories from many cultures to people all across the globe.

Netflix (Personalized) Search

- Ranking entities for partial queries

- Optimizing for the minimum number of interactions needed to find something

- Different languages involve very different interaction patterns

- How to automatically detect and adapt to such patterns in newly introduced languages?
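A toy sketch of the first two search points, assuming word-prefix matching over titles and a hypothetical per-member `affinity` score for ranking; `keystrokes_to_find` approximates "number of interactions" as characters typed before the target appears in the top k results. None of these names come from Netflix's search stack.

```python
def matches(title, prefix):
    """True if the partial query matches the start of any word in the title."""
    words = title.lower().split()
    return any(" ".join(words[i:]).startswith(prefix) for i in range(len(words)))


def rank_for_prefix(prefix, titles, affinity, k):
    """Top-k titles for a partial query, ordered by a personalized affinity score."""
    p = prefix.lower()
    hits = [t for t in titles if matches(t, p)]
    return sorted(hits, key=lambda t: affinity.get(t, 0.0), reverse=True)[:k]


def keystrokes_to_find(target, titles, affinity, k):
    """Characters typed (from the title's start) before target appears in the top k."""
    query = target.lower()
    for n in range(1, len(query) + 1):
        if target in rank_for_prefix(query[:n], titles, affinity, k):
            return n
    return None  # never surfaced within the top k
```

Averaging `keystrokes_to_find` over the titles a member eventually plays gives one offline proxy for the minimum-interactions goal; stronger personalization lowers it when affinity favors the intended title.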

Meetings

- Gary Tang

- Multi-objective (MOO) recommender systems

- Reward innovation for long-term member satisfaction by predicting delayed rewards

- Raveesh Bhalla

- “Your models are only as good as your data”

- Cytation: “Find YouTube videos that cite an arXiv paper”

- Decision-making frameworks

- Erik Schmidt

- Linas Baltrunas

- ICLR 2016 paper: Session-based Recommendations with Recurrent Neural Networks

- Contextual Multi-Armed Bandit for Email Layout Recommendation

Netflix RecSys Talks

- 2021: Trends in Recommendation & Personalization at Netflix

- Oct 2024: Raising a Recommender System

Deep learning for recommender systems: A Netflix case study

Challenges in the data for building real-world recommender systems compared to the literature