Natural Language Processing • Language Models

- Language Modeling + RNN

- N-gram language models

- Neural Language Model

- Evaluating language models

- Contextual Embeddings

- ELMO

- GPT-3

- Citation

Language Modeling + RNN

- This is the task of predicting what word comes next.

- Probability distribution over next words given preceding contexts.

- Language model is a system that does that.

- It assigns probability to a piece of text.

- Gboard is a language model

- Query completion is a language model

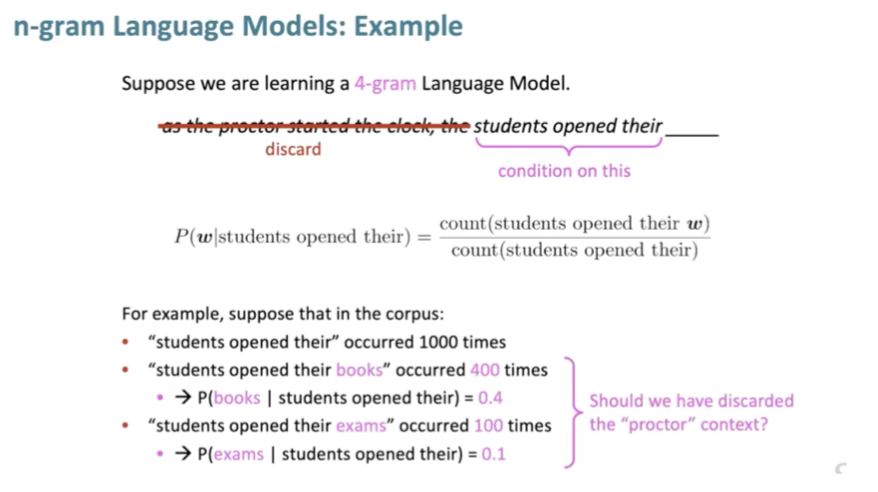

N-gram language models

- Chunk of n consecutive words (4 gram, 5 gram)

- Make a markov assumption: predict t+1, we throw away earlier words and just use n-1 words and use conditional probability

- 4 gram language model:

- Only use last 3 words

- Only use last 3 words

- Problem is if you don’t see that data within your window, you’ll get probability 0.

- Count how often word sequences occur in a corpus.

- N gram will not have context, it will be incoherent. We need to consider more than 3-4 words at a time if we want to model language well

- N grams have a sparsity problem.

Neural Language Model

- Input seq of words

- Output prob dist of the next word

- How about a window based neural model

- A fixed window neural language model (discard far away words)

- These still suck, precursor to RNN

- Single hidden layer

Evaluating language models

- Perplexity: standard evaluation metric; lower is better

Contextual Embeddings

- Word embeddings work in NLP by neural networks

- Word embeddings are context free

- Bank will have the same embedding even if river or financial place

- Solution: contextual representation on text corpus

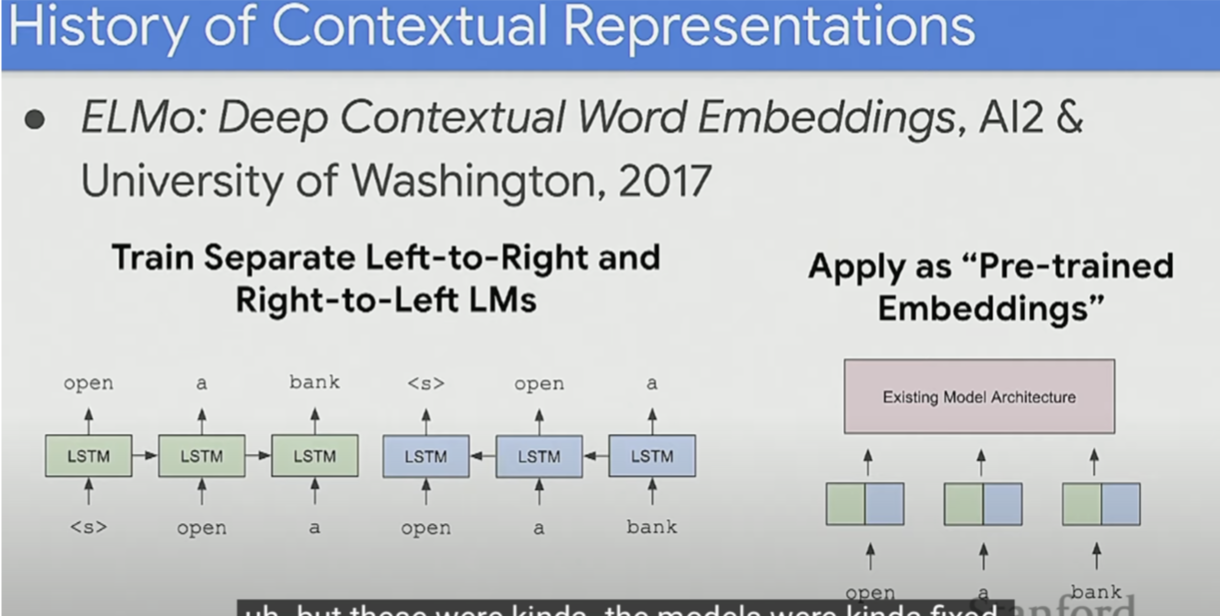

ELMO

- Contextualized word-embeddings

- Trained bidirectional, but weakly

- Instead of using a fixed embedding for each word, ELMo looks at the entire sentence before assigning each word in it an embedding. It uses a bi-directional LSTM trained on a specific task to be able to create those embeddings.

- Left to right and right to left LM and concatenate

- Models are still kind of fixed

- Embedding from Language Model (ELMo) (Peters et al., 2018) is one such method that provides deep contextual embeddings.

- ELMo produces word embeddings for each context where the word is used, thus allowing different representations for varying senses of the same word

GPT-3

- Can use for DB

- Language model

- Given context, probability of next word is what it predicts

- What new about GPT 3 is flexible “in-context” learning

- Demonstrates some level of fast adaptation to completely new tasks via in context learning

- The language model training (outer loop) is learning how to learn from the context (inner loop)

Citation

If you found our work useful, please cite it as:

@article{Chadha2021Distilled,

title = {Language Models},

author = {Jain, Vinija and Chadha, Aman},

journal = {Distilled Notes for Stanford CS224n: Natural Language Processing with Deep Learning},

year = {2021},

note = {\url{https://aman.ai}}

}